Text Mining and Classification

Karianne Bergen
kbergen@stanford.edu
Institute for Computational and Mathematical Engineering, Stanford University

Machine Learning Short Course | August 11-15, 2014

Text Classification

• Determine a characteristic of a document based on its text:
– Author identification
– Sentiment analysis (e.g. positive vs. negative review)
– Subject or topic category
– Spam filtering


Text Classification

[Figure: example scam e-mails; source: http://www.theshedonline.org.au/activities/activity/scam-email-examples]


Document Features

• How do we generate a set of input features from a text document to pass to the machine learning algorithm?
– Bag of words / term-document matrix
– N-grams


Bag-of-Words Model

• Representation of text data in terms of the frequencies of words from a dictionary
– The grammar and ordering of words are ignored
– Just keep the (unordered) list of words that appear and the number of times they appear
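As a minimal sketch of the bag-of-words idea in base R, a document can be reduced to named word counts. The helper name `bag_of_words` and its tokenization rule (lowercase, then split on anything that is not a letter or apostrophe) are illustrative assumptions, not the behaviour of the tm package used later.

```r
# Bag of words: keep which words occur and how often; discard order and grammar.
# Hypothetical helper for illustration; the tokenization rule is an assumption.
bag_of_words <- function(text) {
  # lowercase, then split on runs of characters that are not letters or apostrophes
  tokens <- strsplit(tolower(text), "[^a-z']+")[[1]]
  tokens <- tokens[tokens != ""]        # drop empty strings (e.g. from leading punctuation)
  counts <- table(tokens)               # counts per distinct word, sorted alphabetically
  setNames(as.integer(counts), names(counts))
}

bow <- bag_of_words("one fish two fish")
print(bow)   # fish = 2, one = 1, two = 1
```

Reordering the words, e.g. "two fish one fish", gives exactly the same counts, which is all the information this model keeps.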


Term-Document Matrix

• The term-document matrix is a useful representation for working with text data
– Sparse matrix that describes the frequency of words occurring in a collection of documents
– Rows represent terms/words; columns represent individual documents
– Entry $(i, j)$ gives the number of occurrences of term $i$ in document $j$


Term-Document Matrix

• Example
– Documents:
1. “one fish two fish”
2. “red fish blue fish”
3. “black fish blue fish”
4. “old fish new fish”
– Terms: “one”, “two”, “fish”, “red”, “blue”, “black”, “old”, “new”


Term-Document Matrix

            Document
Term        1   2   3   4
“one”       1   0   0   0
“two”       1   0   0   0
“fish”      2   2   2   2
“red”       0   1   0   0
“blue”      0   1   1   0
“black”     0   0   1   0
“old”       0   0   0   1
“new”       0   0   0   1
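The same matrix can be rebuilt in base R as a check. This sketch assumes splitting each document on single spaces is enough (true here, since the example documents are lowercase with no punctuation); the variable names are illustrative.

```r
docs  <- c("one fish two fish", "red fish blue fish",
           "black fish blue fish", "old fish new fish")
terms <- c("one", "two", "fish", "red", "blue", "black", "old", "new")  # the dictionary

tokens <- strsplit(docs, " ", fixed = TRUE)
# entry (i, j) = number of occurrences of term i in document j;
# factor(levels = terms) makes terms absent from a document count as 0
tdm <- sapply(tokens, function(doc) as.integer(table(factor(doc, levels = terms))))
dimnames(tdm) <- list(Term = terms, Document = as.character(seq_along(docs)))
print(tdm)
```

`t(tdm)` gives the transposed, document-term layout (one row per document), which is the orientation of tm's `DocumentTermMatrix()` used later in these notes.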


N-gram

• N-gram: a contiguous sequence of $n$ items (e.g. words or characters)
• Used for language modeling: n-gram features retain information about word ordering
• e.g. “It's kind of fun to do the impossible.” (Walt Disney)
– 3-grams: “It's kind of”, “kind of fun”, “of fun to”, “fun to do”, “to do the”, “do the impossible”
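A short base-R sketch of word n-gram extraction follows; the helper `word_ngrams` is hypothetical, and splitting on whitespace and stripping trailing punctuation are simplifying assumptions. Each n-gram is a window of n consecutive words, so a sentence of m words yields m - n + 1 of them, and nothing wraps around from the end back to the start.

```r
# Word n-grams: all runs of n consecutive words (no wrap-around).
# Hypothetical helper; whitespace tokenization is a simplifying assumption.
word_ngrams <- function(text, n) {
  words <- strsplit(text, "\\s+")[[1]]
  words <- gsub("[.,!?;:]+$", "", words)   # strip trailing punctuation from each word
  m <- length(words)
  if (m < n) return(character(0))
  vapply(seq_len(m - n + 1),
         function(i) paste(words[i:(i + n - 1)], collapse = " "),
         character(1))
}

word_ngrams("It's kind of fun to do the impossible.", 3)
# "It's kind of" "kind of fun" "of fun to" "fun to do" "to do the" "do the impossible"
```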


Text Mining: NMF

• Unsupervised learning method for dimensionality reduction
• NMF (nonnegative matrix factorization) is a type of matrix factorization
– The original matrix and the factors contain only positive or zero values
– Used for dimensionality reduction and clustering
– Non-negativity of the factors makes the results easier to interpret than those of other factorizations


Nonnegative Matrix Factorization

• NMF factors a matrix $X$ into the product of two non-negative matrices:

$$X \approx WH, \qquad W \ge 0, \quad H \ge 0$$

• $W$ is the “dictionary” matrix, whose columns are “metafeatures”; $H$ is the coefficient matrix
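One standard way to compute such a factorization is the multiplicative update rule of Lee and Seung for the squared Frobenius error $\|X - WH\|_F^2$. The base-R sketch below assumes a hypothetical helper `nmf_mu`, a random start, a fixed iteration count, and a small `eps` guarding against division by zero. It shows why the factors stay non-negative: each step multiplies the current entries by a ratio of non-negative quantities. It is not necessarily the algorithm the NMF package uses by default.

```r
# NMF by multiplicative updates (Lee-Seung rule for ||X - WH||_F^2).
# Hypothetical helper for illustration: random start, fixed number of iterations.
nmf_mu <- function(X, k, iters = 500, eps = 1e-10, seed = 1) {
  set.seed(seed)
  n_terms <- nrow(X)
  n_docs  <- ncol(X)
  W <- matrix(runif(n_terms * k), n_terms, k)   # random non-negative start
  H <- matrix(runif(k * n_docs), k, n_docs)
  for (it in seq_len(iters)) {
    # element-wise multiplication by non-negative ratios keeps W and H >= 0
    H <- H * (t(W) %*% X) / (t(W) %*% W %*% H + eps)
    W <- W * (X %*% t(H)) / (W %*% H %*% t(H) + eps)
  }
  list(W = W, H = H)
}

set.seed(42)
X <- matrix(rpois(10 * 6, lambda = 2), nrow = 10)   # toy 10-term x 6-document counts
fit <- nmf_mu(X, k = 3)
rel_err <- norm(X - fit$W %*% fit$H, "F") / norm(X, "F")
rel_err   # relative reconstruction error of the rank-3 approximation
```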


NMF for Text

• $X \in \mathbb{R}^{t \times d}$: term-document matrix ($t$ terms, $d$ documents)
• $W \in \mathbb{R}^{t \times k}$: $k$ columns (“metafeatures”), each representing a collection of terms
• $H \in \mathbb{R}^{k \times d}$: coefficients
• Each document is represented as a non-negative combination of the $k$ metafeatures


NMF for Text

• Example
– Documents:
1. “one fish two fish”
2. “red fish blue fish”
3. “old fish new fish”
4. “some are red and some are blue”
5. “some are old and some are new”
– Terms: “one”, “two”, “fish”, “red”, “blue”, “old”, “new”, “some”, “are”, “and”


NMF for Text: X (term-document matrix)

            Document
Term        1   2   3   4   5
“one”       1   0   0   0   0
“two”       1   0   0   0   0
“fish”      2   2   2   0   0
“red”       0   1   0   1   0
“blue”      0   1   0   1   0
“old”       0   0   1   0   1
“new”       0   0   1   0   1
“some”      0   0   0   2   2
“are”       0   0   0   2   2
“and”       0   0   0   1   1


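NMF for Text: W (dictionary matrix)

Metafeatures: M1 = “one” + “two”, M2 = “fish”, M3 = “red” + “blue”, M4 = “old” + “new”, M5 = “some” + “are” + 0.5·“and”

            Metafeature
Term        M1   M2   M3   M4   M5
“one”       1    0    0    0    0
“two”       1    0    0    0    0
“fish”      0    1    0    0    0
“red”       0    0    1    0    0
“blue”      0    0    1    0    0
“old”       0    0    0    1    0
“new”       0    0    0    1    0
“some”      0    0    0    0    1
“are”       0    0    0    0    1
“and”       0    0    0    0    0.5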


NMF for Text: H (coefficient matrix)

                              Document
Metafeature                   1   2   3   4   5
“one” + “two”                 1   0   0   0   0
“fish”                        2   2   2   0   0
“red” + “blue”                0   1   0   1   0
“old” + “new”                 0   0   1   0   1
“some” + “are” + 0.5·“and”    0   0   0   2   2

• e.g. “one fish two fish” → “one” “fish” “two” “fish”
= 1×“one” + 1×“two” + 2×“fish”
or, equivalently, = 1×(“one” + “two”) + 2×“fish”
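The hand-made factors above can be checked by typing the three matrices into base R and multiplying them. This sketch only verifies that $WH$ reproduces $X$ exactly for this toy example; it does not compute $W$ and $H$ from $X$, and an NMF solver may return a different factorization.

```r
terms <- c("one", "two", "fish", "red", "blue", "old", "new", "some", "are", "and")

# X: term-document matrix of the five example documents (terms x documents)
X <- matrix(0, nrow = 10, ncol = 5, dimnames = list(terms, NULL))
X[c("one", "two"), 1] <- 1
X["fish", 1:3] <- 2
X[c("red", "blue"), c(2, 4)] <- 1
X[c("old", "new"), c(3, 5)] <- 1
X[c("some", "are"), 4:5] <- 2
X["and", 4:5] <- 1

# W: dictionary matrix, one column per metafeature
W <- matrix(0, nrow = 10, ncol = 5, dimnames = list(terms, NULL))
W[c("one", "two"), 1] <- 1            # "one" + "two"
W["fish", 2] <- 1                     # "fish"
W[c("red", "blue"), 3] <- 1           # "red" + "blue"
W[c("old", "new"), 4] <- 1            # "old" + "new"
W[c("some", "are"), 5] <- 1           # "some" + "are" + 0.5 * "and"
W["and", 5] <- 0.5

# H: coefficient matrix, metafeatures x documents
H <- matrix(0, nrow = 5, ncol = 5)
H[1, 1] <- 1
H[2, 1:3] <- 2
H[3, c(2, 4)] <- 1
H[4, c(3, 5)] <- 1
H[5, 4:5] <- 2

max(abs(W %*% H - X))   # 0: the factorization is exact for this example
```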


NMF for Text

• Metafeatures in the dictionary matrix $W$ may reveal interesting patterns in the data
– Non-negativity of the metafeatures helps with interpretability
– Words grouped in the same metafeature often occur together in the same document
• e.g. “red” and “blue”, or “old” and “new”

Machine Learning Short Course | August 11-‐15 2014 18

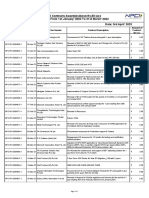

NMF for Text

• e.g. news articles from the business section
– 2500 articles, 50 authors
– 948 terms after pre-processing (stemming, stop-word removal, removal of infrequent terms)
– Apply NMF with $k = 25$
– Metafeatures in the dictionary factor $W$ roughly correspond to topics within the text
– Representation of text: 948 terms → 25 topics


NMF for Text

Ford Motor Co. Thursday announced sweeping organizational changes and a major shake-up of its senior management, replacing the head of its global automotive operations. The moves include combining Ford's four components divisions into a single organization with 75,000 employees and $14 billion in revenues, and a consolidation of the automaker's vehicle product development centers to three from five.

→ { “ford” “motor” “thursday” “announc” “chang” “major” “senior” “manag” “replac”… }


NMF for Text

Metafeature 1: cargo 0.47, air 0.47, airline 0.24, servic 0.18, kong 0.16, hong 0.16, aircraft 0.13, airport 0.13, flight 0.12

Metafeature 2: internet 0.43, comput 0.42, corp 0.30, use 0.29, system 0.20, microsoft 0.19, software 0.18, inc 0.16, technolog 0.16, industri 0.16, network 0.15, product 0.13, servic 0.13, busi 0.11

Metafeature 3: china 0.73, beij 0.31, chines 0.30, state 0.21, offici 0.20, said 0.19, trade 0.14, foreign 0.13, unite 0.11

Metafeature 4: plant 0.47, worker 0.35, uaw 0.24, strike 0.21, ford 0.19, part 0.17, local 0.15, auto 0.15, said 0.14, motor 0.13, truck 0.13, chrysler 0.13, work 0.13, automak 0.13, union 0.13, contract 0.11

NMF for Images

[Figure: NMF applied to images; an image is approximated (≈) as a sum (+) of basis images]

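# NMF in R
# install.packages("NMF")   # run once to install the NMF package
library(NMF)
# 'data': non-negative term-document matrix (terms x documents);
# divide each column by its sum so every document sums to 1
# (every document needs at least one nonzero count)
V <- scale(data, center = FALSE, scale = colSums(data))
k <- 20                  # number of metafeatures (rank of the factorization)
res <- nmf(V, k)
W <- basis(res)          # get dictionary matrix W
H <- coef(res)           # get coefficient matrix H
V.hat <- fitted(res)     # get estimate W %*% H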


Text Classification

• Naïve Bayes
– Simple algorithm based on Bayes' rule from statistics
– Uses the bag-of-words model for documents
– Has been shown to be very effective for text classification


Naïve Bayes

• NB chooses the most likely class label based on the following assumption about the data:
– Independent feature (word) model: given the class label, the presence of any word in a document is independent of the presence or absence of the other words
• This assumption makes it easy to combine the contributions of the features, since interactions between words do not need to be modeled
• Even though this assumption rarely holds, NB still works well in practice


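Naïve Bayes

• Compute $\mathrm{Prob}(Y = c \mid X)$ for each class $c$ and choose the class with the greatest probability
• Bayesian classifiers:

$$\mathrm{Prob}(Y \mid X) = \frac{\mathrm{Prob}(Y)\,\mathrm{Prob}(X \mid Y)}{\mathrm{Prob}(X)}$$

• For Naïve Bayes, $\mathrm{Prob}(X)$ is the same for every class and can be dropped, and $\mathrm{Prob}(X \mid Y)$ factors over the $d$ features:

$$\hat{Y} = \arg\max_{Y} \; \mathrm{Prob}(Y) \prod_{j=1}^{d} \mathrm{Prob}(X_j \mid Y)$$

– $\mathrm{Prob}(Y)$ and $\mathrm{Prob}(X_j \mid Y)$ are estimated from the training data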
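A from-scratch sketch of multinomial Naïve Bayes in base R, assuming word-count features: $\mathrm{Prob}(Y)$ is the class frequency in the training set, $\mathrm{Prob}(X_j \mid Y)$ is the add-one (Laplace) smoothed share of term $j$ among all word occurrences in class $Y$, and the product is computed as a sum of logarithms to avoid numerical underflow. The helper names and toy counts are hypothetical. This is a different event model from e1071's `naiveBayes()` used later in these notes, which models numeric predictors with per-class Gaussian densities.

```r
# Multinomial Naive Bayes on a document-term count matrix (rows = documents).
# Hypothetical helpers for illustration.
train_nb <- function(counts, labels, alpha = 1) {
  classes <- levels(labels)
  # log Prob(Y): fraction of training documents in each class
  log_prior <- log(as.vector(table(labels)) / length(labels))
  # log Prob(X_j | Y): smoothed share of class-c word occurrences that are term j
  log_lik <- t(sapply(classes, function(cl) {
    tc <- colSums(counts[labels == cl, , drop = FALSE]) + alpha
    log(tc / sum(tc))
  }))
  list(classes = classes, log_prior = setNames(log_prior, classes), log_lik = log_lik)
}

predict_nb <- function(model, counts) {
  # log Prob(Y) + sum_j x_j * log Prob(X_j | Y); Prob(X) is dropped
  scores <- counts %*% t(model$log_lik)
  scores <- sweep(scores, 2, model$log_prior, "+")
  factor(model$classes[max.col(scores)], levels = model$classes)
}

counts <- rbind(c(thee = 2, thou = 1, road = 0, wood = 0),
                c(thee = 1, thou = 2, road = 0, wood = 1),
                c(thee = 0, thou = 0, road = 2, wood = 1),
                c(thee = 0, thou = 1, road = 1, wood = 2))
labels <- factor(c("shakespeare", "shakespeare", "frost", "frost"))
model <- train_nb(counts, labels)
new_docs <- rbind(c(thee = 1, thou = 1, road = 0, wood = 0),
                  c(thee = 0, thou = 0, road = 1, wood = 1))
predict_nb(model, new_docs)   # shakespeare, then frost
```

Working in log space matters in practice: multiplying hundreds of small per-word probabilities underflows to zero in floating point, whereas adding their logarithms does not.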


Naïve Bayes

• Advantages:
– Does not require a large training set to obtain good performance, especially in text applications
– Independence assumption leads to faster computations
– Is not sensitive to irrelevant features
• Disadvantages:
– Independence of features assumption
– Good classifier, but poor probability estimates


Author identification

• Collection of poems: William Shakespeare or Robert Frost?

Two roads diverged in a yellow wood,
And sorry I could not travel both
And be one traveler, long I stood
And looked down one as far as I could
To where it bent in the undergrowth;
Then took the other, as just as fair,
And having perhaps the better claim…

Shall I compare thee to a summer's day?
Thou art more lovely and more temperate.
Rough winds do shake the darling buds of May,
And summer's lease hath all too short a date.
Sometime too hot the eye of heaven shines,
And often is his gold complexion dimmed;
And every fair from fair sometime declines,
By chance, or nature's changing course, untrimmed;…


Author identification

install.packages("tm")          # text mining
install.packages("SnowballC")   # stemmer used by stemDocument()
library(tm)                     # loads library
# shakespeare: each file in the directory becomes one document
s.dir <- "shakespeare"
s.docs <- Corpus(DirSource(directory = s.dir, encoding = "UTF-8"))
# frost
f.dir <- "frost"
f.docs <- Corpus(DirSource(directory = f.dir, encoding = "UTF-8"))


cleanCorpus <- function(corpus) {
  # apply stemming
  corpus <- tm_map(corpus, stemDocument, lazy = TRUE)
  # remove punctuation
  corpus.tmp <- tm_map(corpus, removePunctuation)
  # collapse repeated white space into a single blank
  corpus.tmp <- tm_map(corpus.tmp, stripWhitespace)
  # remove English stop words (the list is lowercase; matching is case-sensitive)
  corpus.tmp <- tm_map(corpus.tmp, removeWords, stopwords("en"))
  return(corpus.tmp)
}


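d.docs <- c(s.docs, f.docs)       # combine data sets
d.cldocs <- cleanCorpus(d.docs)   # preprocessing
# forms document-term matrix (rows = documents, columns = terms)
d.tdm <- DocumentTermMatrix(d.cldocs)
# removes infrequent terms: keeps terms that occur in more than 3% of documents
d.tdm <- removeSparseTerms(d.tdm, 0.97)
# per-author matrices used to explore the data below (assumed definitions)
s.tdm <- DocumentTermMatrix(cleanCorpus(s.docs))
f.tdm <- DocumentTermMatrix(cleanCorpus(f.docs))
> dim(d.tdm)   # [ #docs, #numterms ]
[1] 264 518
> inspect(d.tdm)   # inspect entries in document-term matrix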


# exploring the data
# terms appearing at least 55 times in shakespeare's poems
> findFreqTerms(s.tdm, 55)
[1] "and" "but" "doth" "eye" "for" "heart" "love"
"mine" "sweet" "that" "the" "thee" "thi" "thou"
"time" "yet"
# terms appearing at least 55 times in frost's poems
> findFreqTerms(f.tdm, 55)
[1] "and" "back" "but" "come" "know" "like" "look"
"make" "one" "say" "see" "that" "the" "they" "way"
"what" "with" "you"
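Conceptually, `findFreqTerms(x, lowfreq)` returns the terms whose total count over all documents is at least `lowfreq`. A base-R sketch of that rule on a dense document-term matrix follows; the helper `freq_terms` and the toy counts are hypothetical.

```r
# Frequent terms: total count of each term summed over all documents,
# compared with a lower bound. Hypothetical helper; rows = documents.
freq_terms <- function(dtm, lowfreq) {
  totals <- colSums(dtm)
  sort(names(totals)[totals >= lowfreq])
}

dtm <- rbind(c(thee = 3, love = 1, road = 0),
             c(thee = 2, love = 4, road = 1))
freq_terms(dtm, 5)   # "love" "thee"
```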


# exploring the data
# identify associations between terms - shakespeare
# (terms whose correlation with "winter" is at least 0.2)
> findAssocs(s.tdm, "winter", 0.2)
       winter
summer   0.50
age      0.40
youth    0.34
like     0.24
old      0.23
beauti   0.21
seen     0.21
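tm's `findAssocs()` lists the terms whose correlation with the given term is at least `corlimit`. Assuming this is the Pearson correlation between term-count columns across documents, the idea can be sketched in base R on hypothetical toy counts; the helper `term_assocs` is illustrative, not tm's implementation.

```r
# Term associations: correlate one term's counts with every other term's counts
# across documents. Hypothetical helper; rows = documents, columns = terms.
term_assocs <- function(dtm, term, corlimit) {
  # constant columns have no defined correlation; cor() warns and returns NA
  r <- suppressWarnings(cor(dtm[, term], dtm))[1, ]
  r <- r[names(r) != term & !is.na(r) & r >= corlimit]
  round(sort(r, decreasing = TRUE), 2)
}

dtm <- rbind(c(winter = 2, summer = 1, road = 0, snow = 2),
             c(winter = 0, summer = 0, road = 1, snow = 0),
             c(winter = 1, summer = 1, road = 0, snow = 0),
             c(winter = 0, summer = 0, road = 2, snow = 1))
term_assocs(dtm, "winter", 0.2)   # summer 0.90, snow 0.64
```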


# exploring the data
# identify associations between terms - frost
# (terms whose correlation with "winter" is at least 0.5)
> findAssocs(f.tdm, "winter", 0.5)
         winter
climb      0.66
town       0.62
toward     0.57
side       0.55
black      0.53
mountain   0.52


# assign class labels to each document,
# based on the document author (Shakespeare documents come first in d.docs)
class.names <- c('shakespeare', 'frost')
d.class <- c(rep(class.names[1], nrow(s.tdm)),
             rep(class.names[2], nrow(f.tdm)))
d.class <- as.factor(d.class)
> levels(d.class)   # factor levels are sorted alphabetically
[1] "frost"       "shakespeare"


# separate data into training and test sets
set.seed(123)        # set random seed
train_frac <- 0.6    # fraction of data for training
train_idx <- sample.int(nrow(d.tdm), size = ceiling(nrow(d.tdm) * train_frac),
                        replace = FALSE)
train_idx <- sort(train_idx)
test_idx <- setdiff(1:nrow(d.tdm), train_idx)
d.tdm.train <- d.tdm[train_idx, ]
d.tdm.test <- d.tdm[test_idx, ]
d.class.train <- d.class[train_idx]
d.class.test <- d.class[test_idx]


# separate data into training and test sets

> d.tdm.train

<<DocumentTermMatrix (documents: 159, terms: 518)>>

Non-/sparse entries : 6167/76195

Sparsity : 93%

Maximal term length : 9

Weighting : term frequency (tf)

> d.tdm.test

<<DocumentTermMatrix (documents: 105, terms: 518)>>

Non-/sparse entries : 4578/49812

Sparsity : 92%

Maximal term length : 9

Weighting : term frequency (tf)


# CART
install.packages("rpart")   # install CART package
library(rpart)              # load library
# one row per training document, one column per term, plus the class label
d.frame.train <- data.frame(as.matrix(d.tdm.train))
d.frame.train$class <- as.factor(d.class.train)
# classification tree predicting the author from all term counts
treefit <- rpart(class ~ ., data = d.frame.train)
> summary(treefit)
Variables actually used in tree construction:
[1] doth eyes green grow let thee which


Decision Tree result

plot(treefit, uniform = TRUE)
text(treefit, use.n = TRUE)

[Figure: plot of the fitted classification tree]


# CART

Node number 1: 159 observations, complexity param=0.3947368

predicted class=shakespeare expected loss=0.4779874 P(node) =1

class counts: 76 83

probabilities: 0.478 0.522

left son=2 (120 obs) right son=3 (39 obs)

Primary splits:

thee < 0.0007022472 to the left, improve=21.14, (0 missing)

thi < 0.01323529 to the left, improve=21.14, (0 missing)

thou < 0.003511236 to the left, improve=19.58, (0 missing)

doth < 0.0007022472 to the left, improve=16.21, (0 missing)

love < 0.01906318 to the left, improve=14.89, (0 missing)

Surrogate splits:

thou < 0.003511236 to the left, agree=0.906, (0 split)

thi < 0.0007022472 to the left, agree=0.899, (0 split)

art < 0.005088523 to the left, agree=0.836, (0 split)

thine < 0.0007022472 to the left, agree=0.824,(0 split)

hast < 0.009433962 to the left, agree=0.805, (0 split)


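# CART
# build the test data frame the same way as the training one
d.frame.test <- data.frame(as.matrix(d.tdm.test))
# for a classification tree, predict() returns class probabilities by default
predclass <- predict(treefit, d.frame.test)
colNames <- colnames(predclass)
# pick the most probable class for each test document
d.class.pred <- factor(colNames[max.col(predclass)], levels = levels(d.class.test))
tree.table <- table(d.class.pred, d.class.test, dnn = c('predicted', 'actual'))
> tree.table
             actual
predicted     frost shakespeare
  frost          55          12
  shakespeare     1          37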


# CART
errorRate <- function(table) {
  # "frost" (first factor level) is treated as the positive class
  TP <- table[1, 1]   # true positives
  TN <- table[2, 2]   # true negatives
  FP <- table[1, 2]   # false positives
  FN <- table[2, 1]   # false negatives
  error_rate <- (FP + FN) / (TP + TN + FP + FN)
  return(error_rate)
}
> errorRate(tree.table)
[1] 0.1238095
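The same error rate can be written as one minus the fraction of test documents on the diagonal of the confusion matrix, a form that also works with more than two classes. A small sketch (the helper name `error_rate` is hypothetical) checks it against the CART counts above:

```r
# Error rate = share of test documents off the diagonal of the confusion matrix.
# Hypothetical helper; works for any number of classes.
error_rate <- function(conf) 1 - sum(diag(conf)) / sum(conf)

# CART counts from the table above (rows = predicted, columns = actual)
tree_counts <- matrix(c(55, 1, 12, 37), nrow = 2,
                      dimnames = list(predicted = c("frost", "shakespeare"),
                                      actual = c("frost", "shakespeare")))
error_rate(tree_counts)   # (12 + 1) / 105 = 0.1238095
```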


COME unto these yellow sands,
And then take hands:
Court'sied when you have, and kiss'd,--
The wild waves whist,--
Foot it featly here and there;
And, sweet sprites, the burthen bear.
Hark, hark!
Bow, wow,
The watch-dogs bark:
Bow, wow.
Hark, hark! I hear
The strain of strutting chanticleer
Cry, Cock-a-diddle-dow!

True Author: Shakespeare
Predicted: Frost

How countlessly they congregate
O'er our tumultuous snow,
Which flows in shapes as tall as trees
When wintry winds do blow!–
As if with keenness for our fate,
Our faltering few steps on
To white rest, and a place of rest
Invisible at dawn,--
And yet with neither love nor hate,
Those stars like some snow-white
Minerva's snow-white marble eyes
Without the gift of sight.

True Author: Frost
Predicted: Shakespeare

# KNN
library(class)
# convert the sparse document-term matrices to dense numeric matrices
knn_res <- knn(as.matrix(d.tdm.train), as.matrix(d.tdm.test),
               d.class.train, k = 5, prob = TRUE)
knn.table <- table(knn_res, d.class.test,
                   dnn = c('predicted', 'actual'))
> knn.table
             actual
predicted     frost shakespeare
  frost          56          33
  shakespeare     0          16
> errorRate(knn.table)
[1] 0.3142857
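To make the k-NN step concrete, here is a hand-rolled sketch in base R: Euclidean distance to every training document, then a majority vote among the k closest. The helper `knn_predict` and the toy data are hypothetical. `class::knn()` also uses Euclidean distance but breaks voting ties at random, whereas this sketch gives ties to the first class level.

```r
# k-nearest neighbours by hand: Euclidean distance + majority vote.
# Hypothetical helper; rows of 'train' and 'test' are documents, columns are terms.
knn_predict <- function(train, test, labels, k = 5) {
  labels <- factor(labels)
  pred <- apply(test, 1, function(x) {
    d2 <- colSums((t(train) - x)^2)       # squared distance to each training row
    nearest <- order(d2)[seq_len(k)]      # indices of the k closest documents
    votes <- table(labels[nearest])       # neighbour counts per class
    names(votes)[which.max(votes)]        # ties go to the first class level
  })
  factor(pred, levels = levels(labels))
}

train  <- rbind(c(2, 0), c(3, 1), c(0, 2), c(1, 3))   # toy 2-term counts
labels <- c("shakespeare", "shakespeare", "frost", "frost")
test   <- rbind(c(2.5, 0.5), c(0.5, 2.5))
knn_predict(train, test, labels, k = 3)   # shakespeare, then frost
```

Because raw counts are used, long documents sit far from short ones regardless of vocabulary; normalizing each row (e.g. to unit length) is a common design choice before computing distances.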


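# naive bayes
library(e1071)   # provides naiveBayes(); install.packages("e1071") if needed
# note: e1071 models numeric (count) predictors with per-class Gaussian
# densities; laplace smoothing only affects categorical predictors
nb_classifier <- naiveBayes(as.matrix(d.tdm.train), d.class.train, laplace = 1)
# type = "raw" returns class probabilities instead of class labels
res <- predict(nb_classifier, as.matrix(d.tdm.test),
               type = "raw", threshold = 0.5)
> res
               frost  shakespeare
  [1,] 2.265614e-244 1.000000e+00
  [2,] 2.285289e-165 1.000000e+00
  [3,]  5.696532e-67 1.000000e+00
…
[104,]  1.000000e+00 0.000000e+00
[105,]  1.000000e+00 0.000000e+00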


# naive bayes

> nb_classifier$apriori # breakdown of training data

d.class.train

frost shakespeare

77 82


# naive bayes: convert class probabilities to predicted labels and tabulate
d.class.pred.nb <- factor(colnames(res)[max.col(res)], levels = levels(d.class.test))
res.table <- table(d.class.pred.nb, d.class.test, dnn = c('predicted', 'actual'))
> errorRate(res.table)
[1] 0.1619048


NMF for Text

• 'cargo' 'air' 'airlin' 'servic' 'kong' 'hong' 'aircraft' 'airport' 'flight' (0.4711 0.4696 0.2349 0.1772 0.1648 0.1583 0.1328 0.1271 0.1245)

• 'internet' 'comput' 'corp' 'use' 'system' 'microsoft' 'softwar' 'inc' 'technolog' 'industri' 'network' 'product' 'servic' 'busi' (0.4285 0.4165 0.2990 0.2885 0.1958 0.1883 0.1776 0.1630 0.1618 0.1565 0.1519 0.1347 0.1320 0.1146)

• 'china' 'beij' 'chines' 'state' 'offici' 'said' 'trade' 'foreign' 'unite' (0.7297 0.3059 0.3034 0.2089 0.2038 0.1884 0.1400 0.1337 0.1147)

• 'plant' 'worker' 'uaw' 'strike' 'ford' 'part' 'local' 'auto' 'said' 'motor' 'truck' 'chrysler' 'work' 'automak' 'union' 'contract' 'agreement' 'three' 'mich' (0.4729 0.3485 0.2438 0.2141 0.1877 0.1692 0.1498 0.1452 0.1382 0.1310 0.1305 0.1291 0.1281 0.1264 0.1261 0.1130 0.1044 0.1040 0.1023)


# CART

Node number 1: 159 observations, complexity param=0.3947368

predicted class=shakespeare expected loss=0.4779874 P(node) =1

class counts: 76 83

probabilities: 0.478 0.522

left son=2 (120 obs) right son=3 (39 obs)

Primary splits:

thee < 0.5 to the left, improve=21.14719, (0 missing)

thi < 0.5 to the left, improve=20.35459, (0 missing)

thou < 0.5 to the left, improve=19.57953, (0 missing)

doth < 0.5 to the left, improve=16.20745, (0 missing)

tree < 0.5 to the right, improve=13.91526, (0 missing)

Surrogate splits:

thou < 0.5 to the left, agree=0.906, adj=0.615, (0 split)

thi < 0.5 to the left, agree=0.899, adj=0.590, (0 split)

art < 0.5 to the left, agree=0.830, adj=0.308, (0 split)

thine < 0.5 to the left, agree=0.824, adj=0.282, (0 split)

hast < 0.5 to the left, agree=0.805, adj=0.205, (0 split)


Sample R code

> Auto=read.table("Auto.data", header=TRUE, na.strings="?")
> Auto=na.omit(Auto)
> fix(Auto)
> dim(Auto)
[1] 392 9
> names(Auto)
[1] "mpg" "cylinders" "displacement" "horsepower"
[5] "weight" "acceleration" "year" "origin"
[9] "name"
