
Statistics Lecture on Basic Terms - Parametric Test - ANOVA - MANOVA

Uploaded by SANA


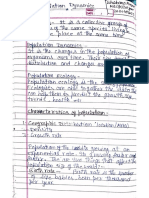

What is Statistics?

Statistics is neither really a science nor a branch of Mathematics. It is perhaps best considered as a meta-science (or meta-language) for dealing with data collection, analysis, and interpretation. As such its scope is enormous, and it provides much guiding insight in many branches of science and business.

* Statistics is the science of collecting, organizing, analyzing, interpreting, and presenting data. (Old Definition)

* A statistic is a single measure (number) used to summarize a sample data set, for example the average height of students in this class.

* A statistician is an expert with at least a master's degree in mathematics or statistics, or a trained professional in a related field.

Statistics is a tool for creating new understanding from a set of numbers.

Statistics is the science of making informed decisions.

A Taxonomy of Statistics


Statistical Inference: Statistical inference means drawing conclusions about the population on the basis of information available in a sample which has been drawn from the population by a random sampling technique or procedure. There are two branches of statistical inference, namely ESTIMATION and TESTING OF HYPOTHESIS.

In ESTIMATION, we try to find an estimate of a population characteristic, while in TESTING OF HYPOTHESIS, we try to test a statement about a population characteristic. Here, our main concern is with "TESTING OF HYPOTHESIS".


Some Basic Definitions:

Population: Any collection of individuals under study is said to be a population (universe). The individuals, often called the members or units of the population, may be physical objects or measurements expressed numerically or otherwise.

Sample: A part or small section selected from the population is called a sample, and the process of such selection is called sampling.

(The fundamental object of sampling is to get as much information as possible about the whole universe by examining only a part of it. An attempt is thus made through sampling to give the maximum information about the parent universe with the minimum effort.)

Parameters: Statistical measurements of the population, such as the mean and variance, are called parameters.

Statistic: A statistic is a statistical measure computed from sample observations alone. The theoretical distribution of a statistic is called its sampling distribution. The standard deviation of the sampling distribution of a statistic is called its standard error.
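As a rough illustration of the last definition, the sketch below simulates a sampling distribution and compares its standard deviation with the textbook formula σ/√n for the standard error of the mean. The population of heights, the sample size and the function name `simulate_standard_error` are assumptions made for this example; they do not come from the lecture.

```python
import random
import statistics


def simulate_standard_error(population, sample_size, n_samples, seed=0):
    """Draw repeated random samples (with replacement) and return the
    standard deviation of their means, i.e. an empirical standard error."""
    rng = random.Random(seed)
    means = [
        statistics.fmean(rng.choices(population, k=sample_size))
        for _ in range(n_samples)
    ]
    return statistics.stdev(means)


# Hypothetical population: 10,000 heights in cm, roughly normal.
pop_rng = random.Random(42)
population = [pop_rng.gauss(170, 10) for _ in range(10_000)]

sigma = statistics.pstdev(population)   # a parameter of the population
n = 25                                  # size of each sample
theoretical_se = sigma / n ** 0.5       # sigma / sqrt(n)
empirical_se = simulate_standard_error(population, n, n_samples=5_000)

print(f"theoretical SE: {theoretical_se:.3f}")
print(f"empirical SE:   {empirical_se:.3f}")
```

The two printed values should be close to each other, which is the sense in which the standard error describes the spread of a statistic from sample to sample.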


Hypothesis: A hypothesis is a statement given by an individual. Usually it is required to make decisions about populations on the basis of sample information. Such decisions are called statistical decisions. In attempting to reach decisions it is often necessary to make assumptions about the population involved. Such assumptions, which are not necessarily true, are called statistical hypotheses.

Parametric Hypothesis: A statistical hypothesis which refers only to the values of unknown parameters of a population is usually called a parametric hypothesis.

Null Hypothesis and Alternative Hypothesis: A hypothesis which is tested for possible rejection under the assumption that it is true is called a null hypothesis and is denoted by H0. A hypothesis which differs from the given null hypothesis H0 and is accepted when H0 is rejected is called an alternative hypothesis and is denoted by H1. (The hypothesis against which we test the null hypothesis is the alternative hypothesis.)

Simple and Composite Hypothesis: A parametric hypothesis which describes a distribution completely is called a simple hypothesis; otherwise it is called a composite hypothesis. For example, in the case of the normal distribution N(μ, σ²), μ = 5, σ = 3 is a simple hypothesis, whereas μ = 5 is a composite hypothesis, as nothing has been said about σ.

Similarly, μ < 5, σ = 3 is a composite hypothesis.

Let H0: μ = 5 be the null hypothesis; then

H1: μ ≠ 5 is a two-sided composite alternative hypothesis.

H1: μ < 5 is a one-sided (left) composite alternative hypothesis.

H1: μ > 5 is a one-sided (right) composite alternative hypothesis.

Test: A test is a rule through which we test the null hypothesis against the given alternative hypothesis.

Tests of Significance: Procedures which enable us to decide, on the basis of sample information, whether to accept or reject a hypothesis, or to determine whether observed sampling results differ significantly from expected results, are called tests of significance, rules of decision or tests of hypothesis.

Level of Significance: The probability level below which we reject the hypothesis is called the level of significance. The levels of significance usually employed in testing of hypotheses are 5% and 1%.
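To make the two-sided and one-sided alternatives concrete, here is a minimal sketch of a z-test for H0: μ = 5 with known σ = 3 (the values used in the example above) at the 5% and 1% levels. The sample mean, the sample size and the function names `z_statistic` and `reject_h0` are hypothetical choices for illustration only.

```python
from statistics import NormalDist


def z_statistic(sample_mean, mu0, sigma, n):
    """Standardized distance of the sample mean from the hypothesized mean."""
    return (sample_mean - mu0) / (sigma / n ** 0.5)


def reject_h0(z, alpha, alternative):
    """Decide whether z falls in the rejection region for the given
    level of significance and form of the alternative hypothesis."""
    std_normal = NormalDist()
    if alternative == "two-sided":          # H1: mu != mu0
        return abs(z) > std_normal.inv_cdf(1 - alpha / 2)
    if alternative == "left":               # H1: mu < mu0
        return z < std_normal.inv_cdf(alpha)
    if alternative == "right":              # H1: mu > mu0
        return z > std_normal.inv_cdf(1 - alpha)
    raise ValueError("alternative must be 'two-sided', 'left' or 'right'")


# Hypothetical sample: mean 6.2 from n = 36 observations.
z = z_statistic(sample_mean=6.2, mu0=5, sigma=3, n=36)

for alpha in (0.05, 0.01):
    for alternative in ("two-sided", "left", "right"):
        decision = "reject H0" if reject_h0(z, alpha, alternative) else "do not reject H0"
        print(f"alpha={alpha}, H1 {alternative}: {decision}")
```

With these made-up numbers the decision depends on both the level of significance and the direction of the alternative, which is why both must be fixed before looking at the data.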


P-Value: The p-value is the level of marginal significance within a statistical hypothesis test, representing the probability of the occurrence of a given event. The p-value is used as an alternative to rejection points to provide the smallest level of significance at which the null hypothesis would be rejected.
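The idea of the p-value as the smallest level of significance at which the null hypothesis would be rejected can be sketched for a z-test as follows. The observed value z = 2.4 and the function name `p_value` are assumptions for illustration; the normal distribution comes from Python's standard `statistics` module.

```python
from statistics import NormalDist


def p_value(z, alternative="two-sided"):
    """Probability, under H0, of a z statistic at least as extreme as
    the one observed, for the chosen form of the alternative."""
    cdf = NormalDist().cdf
    if alternative == "two-sided":
        return 2 * (1 - cdf(abs(z)))
    if alternative == "left":
        return cdf(z)
    if alternative == "right":
        return 1 - cdf(z)
    raise ValueError("alternative must be 'two-sided', 'left' or 'right'")


z_observed = 2.4                      # hypothetical test statistic
p = p_value(z_observed, "two-sided")

# H0 is rejected at every level of significance alpha with alpha >= p.
for alpha in (0.05, 0.01):
    print(f"alpha={alpha}: p={p:.4f}, reject H0: {p <= alpha}")
```

Comparing p with the chosen level of significance gives the same decision as comparing the statistic with the rejection point, without needing a separate table lookup for each level.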


Power Function of a Test: The power function of a test of the hypothesis H0: θ = θ0 against the alternative H1: θ > θ0, θ < θ0 or θ ≠ θ0 is a function of the parameter under consideration, which gives the probability that the test statistic will fall in the critical region when θ is the true value of the parameter.

Deduction: P(θ) = P(rejecting H0 when H1 is true)

= P(X belongs to Wc | H1) = 1 − P(accepting H0 | H1)

= 1 − β(θ)

The value of the power function at a particular value of the parameter is called the power of the test.
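As a sketch of the deduction above, the power function can be written in closed form for one simple case: a right-sided z-test of H0: θ = θ0 against H1: θ > θ0 with known σ. That choice of test, the numbers used and the function name `power_right_sided_z` are assumptions for illustration and are not taken from the lecture.

```python
from statistics import NormalDist


def power_right_sided_z(theta, theta0, sigma, n, alpha=0.05):
    """Power P(theta) = 1 - beta(theta) of the right-sided z-test:
    the probability that the statistic falls in the critical region
    when theta is the true value of the parameter."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha)          # rejection point
    shift = (theta - theta0) * n ** 0.5 / sigma     # true standardized shift
    return 1 - std_normal.cdf(z_crit - shift)


# Hypothetical setting: theta0 = 5, sigma = 3, n = 36.
for theta in (5.0, 5.5, 6.0, 6.5):
    power = power_right_sided_z(theta, theta0=5, sigma=3, n=36)
    beta = 1 - power                                # probability of accepting H0
    print(f"theta={theta}: power={power:.3f}, beta={beta:.3f}")
```

At θ = θ0 the power equals the level of significance, and it rises towards 1 as the true parameter moves further into the alternative.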

Best Critical Region: In testing the hypothesis H0: θ = θ0 against the given alternative H1: θ = θ1, the critical region is best if the type II error is minimum, or equivalently the power is maximum, when compared to every other possible critical region of size α. A test defined by this critical region is called the most powerful test.

PARAMETRIC and NON-PARAMETRIC TESTS

In the literal meaning of the terms, a parametric statistical test is one that makes assumptions

about the parameters (defining properties) of the population distribution(s) from which one’s data

are drawn, while a non-parametric test is one that makes no such assumptions.


PARAMETRIC TESTS: Most of the statistical tests we perform are based on a set of assumptions. When these assumptions are violated the results of the analysis can be misleading or completely erroneous.

Typical assumptions are:

* Normality: Data have a normal distribution (or are at least symmetric)
* Homogeneity of variances: Data from multiple groups have the same variance
* Linearity: Data have a linear relationship
* Independence: Data are independent

We explore in detail what it means for data to be normally distributed in Normal Distribution, but in general it means that the graph of the data has the shape of a bell curve. Such data are symmetric around their mean and have excess kurtosis equal to zero. In Testing for Normality and Symmetry we provide tests to determine whether data meet this assumption.

Some tests (e.g. ANOVA) require that the groups of data being studied have the same variance. In Homogeneity of Variances we provide some tests for determining whether groups of data have the same variance.
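The normality and equal-variance assumptions can be given a quick descriptive check before any formal test is run. The sketch below computes sample skewness, excess kurtosis and a largest-to-smallest variance ratio using only the standard library. These are rough diagnostics rather than the formal tests mentioned above, and the data and function names are hypothetical.

```python
import random
import statistics


def skewness(data):
    """Third standardized moment; near 0 for symmetric data."""
    mean = statistics.fmean(data)
    sd = statistics.pstdev(data)
    return statistics.fmean([((x - mean) / sd) ** 3 for x in data])


def excess_kurtosis(data):
    """Fourth standardized moment minus 3; near 0 for normal data."""
    mean = statistics.fmean(data)
    sd = statistics.pstdev(data)
    return statistics.fmean([((x - mean) / sd) ** 4 for x in data]) - 3.0


def variance_ratio(groups):
    """Largest sample variance divided by the smallest (1 means equal)."""
    variances = [statistics.variance(g) for g in groups]
    return max(variances) / min(variances)


# Hypothetical data: one large normal sample and three small groups.
rng = random.Random(1)
sample = [rng.gauss(50, 8) for _ in range(20_000)]
groups = [[4.1, 5.0, 5.6, 4.7], [6.2, 5.9, 7.1, 6.6], [5.0, 5.4, 4.4, 5.8]]

print(f"skewness:        {skewness(sample):.3f}")
print(f"excess kurtosis: {excess_kurtosis(sample):.3f}")
print(f"variance ratio:  {variance_ratio(groups):.2f}")
```

Values of skewness and excess kurtosis far from zero, or a variance ratio far above 1, suggest that the corresponding assumption deserves a formal test before a parametric method is applied.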


Some tests (e.g. Regression) require that there be a linear relationship between the dependent and independent variables. Generally, linearity can be tested graphically using scatter diagrams or via other techniques explored in Correlation, Regression and Multiple Regression.

Introduction:

* The t-test is a basic test that is limited to two groups. For multiple groups, you would have to compare each pair of groups. For example, with three groups there would be three tests (AB, AC, BC), whilst with seven groups there would be a need for 21 tests.
* The basic principle is to test the null hypothesis that the means of the two groups are equal.

The t-test assumes:

* A normal distribution (parametric data)
* Underlying variances are equal (if not, use Welch's test)

It is used when there is random assignment and only two sets of measurements to compare.

There are two main types of t-test:

* Independent-measures t-test: when samples are not matched.
* Matched-pair t-test: when samples appear in pairs (e.g. before and after).

A single-sample t-test compares a sample against a known figure, for example when measures of a manufactured item are compared against the required standard.
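A minimal sketch of the independent-measures case follows: it computes the pooled-variance t statistic (equal variances assumed) and Welch's version (equal variances not assumed), and also confirms the pairwise counts quoted above. The two samples and the function names are made up for illustration. The critical value 2.306 is the standard two-sided 5% point of the t distribution with 8 degrees of freedom, taken from a t table.

```python
import math
import statistics


def pooled_t(a, b):
    """Student's t for two independent samples, assuming equal variances."""
    na, nb = len(a), len(b)
    va, vb = statistics.variance(a), statistics.variance(b)
    pooled_var = ((na - 1) * va + (nb - 1) * vb) / (na + nb - 2)
    se = math.sqrt(pooled_var * (1 / na + 1 / nb))
    return (statistics.fmean(a) - statistics.fmean(b)) / se, na + nb - 2


def welch_t(a, b):
    """Welch's t for two independent samples, without assuming equal
    variances; degrees of freedom from the Welch-Satterthwaite formula."""
    na, nb = len(a), len(b)
    qa, qb = statistics.variance(a) / na, statistics.variance(b) / nb
    t = (statistics.fmean(a) - statistics.fmean(b)) / math.sqrt(qa + qb)
    df = (qa + qb) ** 2 / (qa ** 2 / (na - 1) + qb ** 2 / (nb - 1))
    return t, df


# Number of pairwise comparisons needed for 3 and 7 groups.
print(math.comb(3, 2), math.comb(7, 2))

# Hypothetical measurements for two unmatched groups.
group_a = [5.1, 4.9, 5.6, 5.8, 6.0]
group_b = [4.2, 4.8, 4.5, 5.0, 4.4]

t_pooled, df_pooled = pooled_t(group_a, group_b)
t_welch, df_welch = welch_t(group_a, group_b)
T_CRIT_DF8 = 2.306   # two-sided 5% critical value for df = 8 (t table)

print(f"pooled: t={t_pooled:.3f}, df={df_pooled}, reject H0: {abs(t_pooled) > T_CRIT_DF8}")
print(f"Welch:  t={t_welch:.3f}, df={df_welch:.2f}")
```

With equal group sizes the two statistics coincide and only the degrees of freedom differ. With unequal sizes and unequal variances the two versions can lead to different conclusions.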


APPLICATIONS:

* To compare the mean of a sample with the population mean. (Simple t-test)
* To compare the mean of one sample with the mean of an independent sample. (Independent sample t-test)
* To compare the values (readings) of one sample on two occasions. (Paired sample t-test)
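The first and third applications can be sketched with the same formula, because a paired t-test is a one-sample t-test on the within-pair differences. The readings, the required standard of 10.0 and the function names below are hypothetical.

```python
import math
import statistics


def one_sample_t(sample, mu0):
    """t statistic and degrees of freedom for H0: population mean = mu0."""
    n = len(sample)
    se = statistics.stdev(sample) / math.sqrt(n)
    return (statistics.fmean(sample) - mu0) / se, n - 1


def paired_t(before, after):
    """Paired t-test: a one-sample t-test on the differences after - before,
    with H0: mean difference = 0."""
    diffs = [a - b for a, b in zip(after, before)]
    return one_sample_t(diffs, 0.0)


# Hypothetical item measurements compared with a required standard of 10.0.
items = [10.2, 9.8, 10.1, 10.4, 9.9, 10.3]
t_single, df_single = one_sample_t(items, mu0=10.0)

# Hypothetical readings of the same five subjects on two occasions.
before = [72, 75, 78, 80, 69]
after = [70, 72, 77, 76, 68]
t_paired, df_paired = paired_t(before, after)

print(f"one-sample: t={t_single:.3f}, df={df_single}")
print(f"paired:     t={t_paired:.3f}, df={df_paired}")
```

Each statistic is then compared with the t table value for its degrees of freedom at the chosen level of significance.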

[Figure: 5% region of rejection of the null hypothesis (non-directional test)]

Hypothesis Testing with Chi-Square:

Chi-square follows five steps:

1. Making assumptions (random sampling)
2. Stating the research and null hypotheses
3. Selecting the sampling distribution and specifying the test statistic
4. Computing the test statistic
5. Making a decision and interpreting the results

The Assumptions:

* The chi-square test requires no assumptions about the shape of the population distribution from which the sample was drawn.
* However, like all inferential techniques, it assumes random sampling.

H1: The two variables are related in the population. Gender and fear of walking alone at night are statistically dependent.

H0: There is no association between the two variables. Gender and fear of walking alone at night are statistically independent.

Afraid    Men      Women    Total
No        83.3%    57.2%    71.1%
Yes       16.7%    42.8%    28.9%
Total     100%     100%     100%

The Concept of Expected Frequencies:

Expected frequencies (fe): the cell frequencies that would be expected in a bivariate table if the two variables were statistically independent.
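The computation of expected frequencies and of the chi-square statistic can be sketched as follows, using the usual formulas fe = (row total × column total) / N and χ² = Σ (fo − fe)² / fe. The lecture's table gives only percentages, so the observed counts below are invented for illustration and should not be read as the original data. The critical value 3.841 is the standard 5% point of the chi-square distribution with 1 degree of freedom.

```python
def expected_frequencies(observed):
    """Cell counts expected if the row and column variables were independent."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    n = sum(row_totals)
    return [[r * c / n for c in col_totals] for r in row_totals]


def chi_square(observed):
    """Chi-square statistic and degrees of freedom for a two-way table."""
    expected = expected_frequencies(observed)
    stat = sum(
        (fo - fe) ** 2 / fe
        for obs_row, exp_row in zip(observed, expected)
        for fo, fe in zip(obs_row, exp_row)
    )
    df = (len(observed) - 1) * (len(observed[0]) - 1)
    return stat, df


# Hypothetical counts: rows = afraid (No, Yes), columns = (Men, Women).
observed = [[50, 40],
            [10, 30]]

expected = expected_frequencies(observed)
stat, df = chi_square(observed)
CHI2_CRIT_DF1 = 3.841   # 5% critical value for df = 1 (chi-square table)

print(f"expected: {[[round(fe, 2) for fe in row] for row in expected]}")
print(f"chi-square={stat:.3f}, df={df}, reject H0: {stat > CHI2_CRIT_DF1}")
```

A statistic larger than the critical value leads to rejecting the hypothesis of independence at the 5% level.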


A p-value is used in hypothesis testing to help you decide whether to reject the null hypothesis. The p-value measures the evidence against the null hypothesis: the smaller the p-value, the stronger the evidence that you should reject the null hypothesis.

P-values are expressed as decimals, although they may be easier to understand if you convert them to percentages. For example, a p-value of 0.0254 is 2.54%. This means that, if the null hypothesis were true, there would be only a 2.54% chance of obtaining results at least as extreme as yours by chance alone. That's pretty tiny. On the other hand, a large p-value of 0.9 (90%) means that results like yours would be quite ordinary under the null hypothesis, so there is no reason to attribute them to anything in your experiment. Therefore, the smaller the p-value, the more statistically significant your results.

Critical Region and Acceptance Region: The region of all possible sample outcomes (corresponding to a statistic t) is called the sample space. The part of the sample space which amounts to rejection of the null hypothesis H0 is called the critical region or region of rejection.

If X = (x1, x2, ..., xn) is the random vector observed and Wc is the critical region (which corresponds to the rejection of the hypothesis according to a prescribed test procedure) of the sample space W, then Wa = W − Wc of the sample space is called the acceptance region.

Two Types of Errors in Testing of a Hypothesis: While testing a hypothesis H0, the following four situations may arise:

(a) The test statistic may fall in the critical region even if H0 is true; then we shall be led to reject H0 when it is true.

(b) The test statistic may fall in the acceptance region when H0 is true; then we shall be led to accept H0 when it is true.
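Situation (a), rejecting H0 when it is true (commonly called a Type I error), can be explored by simulation. The sketch below repeatedly draws samples from a population in which H0 really holds and counts how often a two-sided z-test at level α lands in the critical region. All numbers and the function name `type_one_error_rate` are assumptions for illustration.

```python
import random
from statistics import NormalDist, fmean


def type_one_error_rate(mu0, sigma, n, alpha, trials, seed=0):
    """Fraction of simulated samples, drawn with H0 true, whose z statistic
    falls in the two-sided critical region of size alpha."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    rejections = 0
    for _ in range(trials):
        sample = [rng.gauss(mu0, sigma) for _ in range(n)]
        z = (fmean(sample) - mu0) / (sigma / n ** 0.5)
        if abs(z) > z_crit:        # statistic falls in the critical region
            rejections += 1
    return rejections / trials


# Hypothetical setting: H0: mu = 5 is true, sigma = 3, samples of size 20.
rate = type_one_error_rate(mu0=5, sigma=3, n=20, alpha=0.05, trials=4_000)
print(f"observed rejection rate under H0: {rate:.3f}")
```

The observed rate should be close to the chosen α, which is the sense in which the size of the critical region controls how often a true null hypothesis is rejected.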
