White Paper - FR - Validation AUM

Validation Action Unit Module

By Amogh Gudi and Paul Ivan, VicarVision

A white paper by Noldus Information Technology

introduction

This document presents a validation of the FaceReader 8 Action Unit Module by evaluating its performance on an annotated dataset. It reports the agreement between the FaceReader classifications and the coded dataset, as well as an evaluation of the performance of the individual AU classifiers.

dataset

The dataset used for this validation is the Amsterdam Dynamic Facial Expression Set (ADFES) [2], which consists of 22 models (10 female, 12 male) performing nine different emotional expressions (anger, disgust, fear, joy, sadness, surprise, contempt, pride, and embarrassment). As no FACS [1] coding was available for this dataset, we had a selection of the database (213 images) annotated manually by two certified FACS coders.


2 — FaceReader - Validation Action Unit Module

agreement

To assess the reliability of the annotated dataset, we can calculate the agreement between the two FACS coders using the Agreement Index, as described by Paul Ekman et al. in the FACS Manual [1].

formula

This index can be computed for every annotated image according to the following formula:

Agreement Index = (number of AUs that both coders agree upon) * 2 / (total number of AUs scored by the two coders)

example

If an image was coded as 1+2+5+6+12 by one coder and as 5+6+12 by the other, the agreement index would be: 3 * 2 / 8 = 0.75. Note that the intensity of the action unit (AU) classification is ignored in the calculation of the agreement index; the focus is on whether the AU is active or not.
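The Agreement Index for a single image can be sketched in a few lines of Python. This is a minimal illustration, not FaceReader code: the function name `agreement_index` is hypothetical, each coding is represented as a set of AU numbers (intensity is ignored), and returning 1.0 when neither coder scored any AU is a convention chosen for this sketch.

```python
def agreement_index(coder_a, coder_b):
    """Agreement Index for one image.

    Computes 2 * (AUs scored by both coders) / (total AUs scored by the
    two coders). Only AU presence is compared; intensity is ignored.
    """
    a, b = set(coder_a), set(coder_b)
    total_scored = len(a) + len(b)
    if total_scored == 0:
        # Assumption of this sketch: two empty codings count as full agreement.
        return 1.0
    return 2 * len(a & b) / total_scored


# The example from the text: 1+2+5+6+12 versus 5+6+12.
example = agreement_index({1, 2, 5, 6, 12}, {5, 6, 12})
print(example)  # 0.75
```

The same function applies to a FaceReader classification compared against a human coding, since both reduce to a set of active AUs per image.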

agreement

The agreement between the two human FACS coders on the selection of images from the ADFES dataset was 0.83. A minimum agreement score of 0.80 is usually regarded as reliable [1], so this minimum is met for the annotation of this database. The agreement between the FaceReader AU classifications and the human-coded dataset was 0.78. To pass the official FACS certification test [6], an agreement score of 0.70 or above should be obtained. This is achieved for a number of AUs; the next section describes the performance of the individual AUs.


action unit performance

Apart from the agreement between FaceReader (FR) and the database, it is also interesting to examine the accuracy of every individual action unit. Therefore, the table below shows the performance of the 17 AUs that FR is capable of classifying and that were also present in the dataset. The difficulty in determining the accuracy of AU classifiers is that the obtained performance depends heavily on the chosen evaluation metric. Taking this into account, the table shows the AU performance on four common evaluation metrics, i.e. Recall, Precision, F1, and Accuracy.

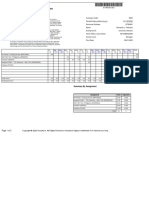

Table. ADFES (AU), 213 annotated images.

AU       Present  Recall  Precision  F1     Accuracy
1        100      0.920   0.902      0.911  0.915
2        72       0.986   0.835      0.904  0.930
4        84       0.940   0.782      0.854  0.873
5        67       0.866   0.753      0.806  0.869
6        53       0.849   0.900      0.874  0.939
7        58       0.397   0.639      0.489  0.775
9        22       0.955   1.000      0.977  0.995
10       16       0.750   0.480      0.585  0.920
12       58       0.914   0.815      0.862  0.920
14       54       0.741   0.784      0.762  0.883
15       26       0.885   0.920      0.902  0.977
17       65       0.754   0.803      0.778  0.869
20       21       1.000   0.525      0.689  0.911
23       15       0.733   0.478      0.579  0.925
24       26       0.808   0.636      0.712  0.920
25       86       0.977   0.824      0.894  0.906
26       28       0.929   0.605      0.732  0.911
Average  50.1     0.847   0.746      0.783  0.908


evaluation metrics

A brief description of the terms used in the table:

▪ AU – the action unit number.

▪ Present – the number of times an AU was coded in the dataset.

▪ Recall – the ratio of annotated AUs that were detected by FaceReader. A recall of 0.92 for AU1 indicates, for example, that 92% of the annotated images with AU1 are classified as such by FaceReader [5].

▪ Precision – a ratio denoting how often FaceReader is correct when classifying an AU as present. For example, in the case of AU1 the FaceReader classification is correct 90% of the time [5].

▪ F1 – there is a trade-off between the recall and precision measures, and a good classifier ought to have a decent score on both. The F1 measure summarizes this trade-off in a single value and is computed using the formula: 2 * ((precision * recall) / (precision + recall)) [4].

▪ Accuracy – the percentage of correct classifications. It is computed by dividing the number of correctly classified images (both positive and negative) by the total number of images [3].
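The four metrics defined above can be computed from the counts of a per-AU confusion matrix, as in the following minimal Python sketch. The function name `au_metrics` is hypothetical. The AU1 counts used in the usage example (92 true positives, 10 false positives, 8 false negatives, 103 true negatives) are not reported in this document; they are back-calculated here from the AU1 row of the ADFES table and the total of 213 images, and should be read as an inference.

```python
def au_metrics(tp, fp, fn, tn):
    """Recall, precision, F1 and accuracy for one AU classifier.

    tp/fp/fn/tn are the counts of true positives, false positives,
    false negatives and true negatives over all annotated images.
    No guard against empty denominators is included in this sketch.
    """
    recall = tp / (tp + fn)          # share of annotated AUs that were detected
    precision = tp / (tp + fp)       # share of detections that were correct
    f1 = 2 * (precision * recall) / (precision + recall)
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return {"recall": recall, "precision": precision, "f1": f1, "accuracy": accuracy}


# Assumed counts for AU1, inferred from the table (Present = 100, 213 images).
au1 = au_metrics(tp=92, fp=10, fn=8, tn=103)
for name, value in au1.items():
    print(f"{name}: {value:.3f}")
```

With these inferred counts the sketch reproduces the AU1 row of the table to three decimals (0.920, 0.902, 0.911, 0.915).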

action unit evaluation

To evaluate and compare the quality of the individual AU classifiers from the data in the table above, we can distinguish between the AU classifiers on the basis of the F1 measure. The F1 measure is a suitable metric for this purpose because it combines the important recall and precision measures, and displays the largest differences between the AU classifiers. Based on the F1 measure, the best classifiers, those that might already be good enough to pass the FACS test, are AUs 1, 2, 4, 5, 6, 9, 12, 14, 15, 17, 24, 25 and 26 (F1: 0.70 - 0.98); the classifiers that performed reasonably well are AUs 10, 20, and 23 (F1: 0.57 - 0.69); and the only AU that did not perform well is AU 7 (F1: 0.49). FaceReader can also classify AUs 18, 27 and 43, but these were not sufficiently present in the dataset to evaluate their performance.
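The grouping described above can be reproduced from the F1 column of the ADFES table with a short Python sketch. The band names and the function `f1_band` are hypothetical. The 0.70 threshold is the FACS pass mark cited in the text. The 0.57 floor is an assumption: it is simply the lower end of the quoted "reasonably well" range, not a cut-off stated by the authors.

```python
# F1 scores per AU, copied from the ADFES table.
F1_BY_AU = {
    1: 0.911, 2: 0.904, 4: 0.854, 5: 0.806, 6: 0.874, 7: 0.489,
    9: 0.977, 10: 0.585, 12: 0.862, 14: 0.762, 15: 0.902, 17: 0.778,
    20: 0.689, 23: 0.579, 24: 0.712, 25: 0.894, 26: 0.732,
}


def f1_band(f1, pass_mark=0.70, reasonable_floor=0.57):
    """Assign an F1 score to one of three bands (thresholds partly assumed)."""
    if f1 >= pass_mark:
        return "best"
    if f1 >= reasonable_floor:
        return "reasonable"
    return "weak"


bands = {"best": [], "reasonable": [], "weak": []}
for au, f1 in sorted(F1_BY_AU.items()):
    bands[f1_band(f1)].append(au)

for band, aus in bands.items():
    print(band, aus)
```

Under these thresholds the three bands contain the same AUs as listed in the text.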


baby facereader

For validation of Baby FaceReader the Baby FACS Manual Dataset was used, which consists of 67 annotated images. The table below shows the performance of the analysis of Action Units in Baby FaceReader.

Table. Baby FACS Manual (AU), 67 annotated images.

AU       Present  Recall  Precision  F1     Accuracy
1        10       0.438   0.700      0.538  0.821
2        11       0.333   0.182      0.235  0.806
3+4      9        0.375   1.000      0.545  0.776
5        10       0.583   0.700      0.636  0.881
6        30       0.636   0.933      0.757  0.731
7        20       0.581   0.900      0.706  0.776
9        8        0.333   0.375      0.353  0.836
12       11       0.306   1.000      0.468  0.627
15       8        0.750   0.375      0.500  0.910
17       13       0.556   0.769      0.645  0.836
20       11       0.583   0.636      0.609  0.866
25       46       0.929   0.848      0.886  0.851
26       21       0.600   0.857      0.706  0.776
27       12       0.846   0.917      0.880  0.955
43       11       0.579   1.000      0.733  0.881
Average  15.4     0.562   0.746      0.613  0.822


references

1 Ekman, P.; Friesen, W. V.; Hager, J. C. (2002). Facial action coding system: The manual on CD-ROM. Instructor’s Guide. Salt Lake City: Network Information Research Co.

2 Van der Schalk, J.; Hawk, S. T.; Fischer, A. H.; Doosje, B. J. (2011). Moving faces, looking places: The Amsterdam Dynamic Facial Expressions Set (ADFES). Emotion, 11, 907-920. DOI: 10.1037/a0023853

3 http://en.wikipedia.org/wiki/Accuracy

4 http://en.wikipedia.org/wiki/F1_Score

5 http://en.wikipedia.org/wiki/Precision_and_recall

6 http://face-and-emotion.com/dataface/facs/fft.jsp

7 Oster, H. (2016). Baby FACS: Facial Action Coding System for infants and young children. Unpublished monograph and coding manual. New York University.


international headquarters

Noldus Information Technology bv

Wageningen, The Netherlands

Phone: +31-317-473300

Fax: +31-317-424496

E-mail: info@noldus.nl

north american headquarters

Noldus Information Technology Inc.

Leesburg, VA, USA

Phone: +1-703-771-0440

Toll-free: 1-800-355-9541

Fax: +1-703-771-0441

E-mail: info@noldus.com

representation

We are also represented by a

worldwide network of distributors

and regional offices. Visit our website

for contact information.

www.noldus.com

Due to our policy of continuous product improvement, information in this document is subject to change without notice. The Observer is a registered trademark of Noldus Information Technology bv. FaceReader is a trademark of VicarVision bv. © 2019 Noldus Information Technology bv. All rights reserved.
