Pros and Cons of Autonomous Weapons Systems

Amitai Etzioni, PhD

Oren Etzioni, PhD

Autonomous weapons systems and military robots are progressing from science fiction movies to designers’ drawing boards, to engineering laboratories, and to the battlefield. These machines have prompted a debate among military planners, roboticists, and ethicists about the development and deployment of weapons that can perform increasingly advanced functions, including targeting and application of force, with little or no human oversight.

Some military experts hold that autonomous weapons systems not only confer significant strategic and tactical advantages in the battleground but also that they are preferable on moral grounds to the use of human combatants. In contrast, critics hold that these weapons should be curbed, if not banned altogether, for a variety of moral and legal reasons. This article first reviews arguments by those who favor autonomous weapons systems and then by those who oppose them. Next, it discusses challenges to limiting and defining autonomous weapons. Finally, it closes with a policy recommendation.

Arguments in Support of Autonomous Weapons Systems

Support for autonomous weapons systems falls into two general categories. Some members of the defense community advocate autonomous weapons because of military advantages. Other supporters emphasize moral justifications for using them.

Military advantages. Those who call for further development and deployment of autonomous weapons systems generally point to several military advantages. First, autonomous weapons systems act as a force multiplier. That is, fewer warfighters are needed for a given mission, and the efficacy of each warfighter is greater. Next, advocates credit autonomous weapons systems with expanding the battlefield, allowing combat to reach into areas that were previously inaccessible. Finally, autonomous weapons systems can reduce casualties by removing human warfighters from dangerous missions.1

The Department of Defense’s Unmanned Systems Roadmap: 2007-2032 provides additional reasons for pursuing autonomous weapons systems. These include that robots are better suited than humans for “‘dull, dirty, or dangerous’ missions.”2 An example of a dull mission is long-duration sorties. An example of a dirty mission is one that exposes humans to potentially harmful radiological material. An example of a dangerous mission is explosive ordnance disposal. Maj. Jeffrey S. Thurnher, U.S. Army, adds, “[lethal autonomous robots] have the unique potential to operate at a tempo faster than humans can possibly achieve and to lethally strike even when communications links have been severed.”3

In addition, the long-term savings that could be achieved through fielding an army of military robots have been highlighted. In a 2013 article published in The Fiscal Times, David Francis cites Department of Defense figures showing that “each soldier in Afghanistan costs the Pentagon roughly $850,000 per year.”4 Some estimate the cost per year to be even higher. Conversely, according to Francis, “the TALON robot—a small rover that can be outfitted with weapons, costs $230,000.”5

72 May-June 2017 MILITARY REVIEW

AUTONOMOUS WEAPONS SYSTEMS

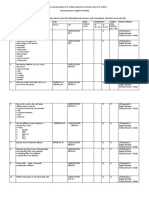

As autonomous weapons systems move from concept to reality, military planners, roboticists, and ethicists debate the advantages, disadvantages, and morality of their use in current and future operating environments. (Image by Peggy Frierson)

According to Defense News, Gen. Robert Cone, former commander of the U.S. Army Training and Doctrine Command, suggested at the 2014 Army Aviation Symposium that by relying more on “support robots,” the Army eventually could reduce the size of a brigade from four thousand to three thousand soldiers without a concomitant reduction in effectiveness.6

Air Force Maj. Jason S. DeSon, writing in the Air Force Law Review, notes the potential advantages of autonomous aerial weapons systems.7 According to DeSon, the physical strain of high-G maneuvers and the intense mental concentration and situational awareness required of fighter pilots make them very prone to fatigue and exhaustion; robot pilots, on the other hand, would not be subject to these physiological and mental constraints. Moreover, fully autonomous planes could be programmed to take genuinely random and unpredictable action that could confuse an opponent. More striking still, Air Force Capt. Michael Byrnes predicts that a single unmanned aerial vehicle with machine-controlled maneuvering and accuracy could, “with a few hundred rounds of ammunition and sufficient fuel reserves,” take out an entire fleet of aircraft, presumably one with human pilots.8

In 2012, a report by the Defense Science Board, in support of the Office of the Under Secretary of Defense for Acquisition, Technology and Logistics, identified “six key areas in which advances in autonomy would have significant benefit to [an] unmanned system: perception, planning, learning, human-robot interaction, natural language understanding, and multiagent coordination.”9 Perception, or perceptual processing, refers to sensors and sensing. Sensors include hardware, and sensing includes software.10

Next, according to the Defense Science Board, planning refers to “computing a sequence or partial order of actions that … [achieve] a desired state.”11 The process relies on effective processes and “algorithms needed to make decisions about action (provide autonomy) in situations in which humans are not in the environment (e.g., space, the ocean).”12 Then, learning refers to how machines can collect and process large amounts of data into knowledge. The report asserts that research has shown machines process data into knowledge more effectively than people do.13 It gives the example of machine learning for autonomous navigation in land vehicles and robots.14

Human-robot interaction refers to “how people work or play with robots.”15 Robots are quite different from other computers or tools because they are “physically situated agents,” and human users interact with them in distinct ways.16 Research on interaction needs to span a number of domains well beyond engineering, including psychology, cognitive science, and communications, among others.

“Natural language processing concerns … systems that can communicate with people using ordinary human languages.”17 Moreover, “natural language is the most normal and intuitive way for humans to instruct autonomous systems; it allows them to provide diverse, high-level goals and strategies rather than detailed teleoperation.”18 Hence, further development of the ability of autonomous weapons systems to respond to commands in a natural language is necessary.

Finally, the Defense Science Board uses the term multiagent coordination for circumstances in which a task is distributed among “multiple robots, software agents, or humans.”19 Tasks could be centrally planned or coordinated through interactions of the agents. This sort of coordination goes beyond mere cooperation because “it assumes that the agents have a cognitive understanding of each other’s capabilities, can monitor progress towards the goal, and engage in more human-like teamwork.”20

Moral justifications. Several military experts and roboticists have argued that autonomous weapons systems should not only be regarded as morally acceptable but also that they would in fact be ethically preferable to human fighters. For example, roboticist Ronald C. Arkin believes autonomous robots in the future will be able to act more “humanely” on the battlefield for a number of reasons, including that they do not need to be programmed with a self-preservation instinct, potentially eliminating the need for a “shoot-first, ask questions later” attitude.21 The judgments of autonomous weapons systems will not be clouded by emotions such as fear or hysteria, and the systems will be able to process much more incoming sensory information than humans without discarding or distorting it to fit preconceived notions. Finally, per Arkin, in teams comprised of human and robot soldiers, the robots could be more relied upon to report ethical infractions they observed than would a team of humans who might close ranks.22

Lt. Col. Douglas A. Pryer, U.S. Army, asserts there might be ethical advantages to removing humans from high-stress combat zones in favor of robots. He points to neuroscience research that suggests the neural circuits responsible for conscious self-control can shut down when overloaded with stress, leading to sexual assaults and other crimes that soldiers would otherwise be less likely to commit. However, Pryer sets aside the question of whether or not waging war via robots is ethical in the abstract. Instead, he suggests that because it sparks so much moral outrage among the populations from whom the United States most needs support, robot warfare has serious strategic disadvantages, and it fuels the cycle of perpetual warfare.23

Arguments Opposed to Autonomous Weapons Systems

While some support autonomous weapons systems with moral arguments, others base their opposition on moral grounds. Still others assert that moral arguments against autonomous weapons systems are misguided.

Opposition on moral grounds. In July 2015, an open letter calling for a ban on autonomous weapons was released at an international joint conference on artificial intelligence. The letter warns, “Artificial Intelligence (AI) technology has reached a point where the deployment of such systems is—practically if not legally—feasible within years, not decades, and the stakes are high: autonomous weapons have been described as the third revolution in warfare, after gunpowder and nuclear arms.”24 The letter also notes that AI has the potential to benefit humanity, but that if a military AI arms race ensues, AI’s reputation could be tarnished, and a public backlash might curtail future benefits of AI. The letter has an impressive list of signatories, including Elon Musk (inventor and founder of Tesla), Steve Wozniak (cofounder of Apple), physicist Stephen Hawking (University of Cambridge), and Noam Chomsky (Massachusetts Institute of Technology), among others. Over three thousand AI and robotics researchers have also signed the letter. The open letter simply calls for “a ban on offensive autonomous weapons beyond meaningful human control.”25

We note in passing that it is often unclear whether a weapon is offensive or defensive. Thus, many assume that an effective missile defense shield is strictly defensive, but it can be extremely destabilizing if it allows one nation to launch a nuclear strike against another without fear of retaliation.

In April 2013, the United Nations (UN) special rapporteur on extrajudicial, summary, or arbitrary executions presented a report to the UN Human Rights Council. The report recommended that member states should declare and implement moratoria on the testing, production, transfer, and deployment of lethal autonomous robotics (LARs) until an internationally agreed upon framework for LARs has been established.26

That same year, a group of engineers, AI and robotics experts, and other scientists and researchers from thirty-seven countries issued the “Scientists’ Call to Ban Autonomous Lethal Robots.” The statement notes the lack of scientific evidence that robots could, in the future, have “the functionality required for accurate target identification, situational awareness, or decisions regarding the proportional use of force.”27 Hence, they may cause a high level of collateral damage. The statement ends by insisting that “decisions about the application of violent force must not be delegated to machines.”28

Indeed, the delegation of life-or-death decision making to nonhuman agents is a recurring concern of those who oppose autonomous weapons systems. The most obvious manifestation of this concern relates to systems that are capable of choosing their own targets. Thus, highly regarded computer scientist Noel Sharkey has called for a ban on “lethal autonomous targeting” because it violates the Principle of Distinction, considered one of the most important rules of armed conflict; autonomous weapons systems will find it very hard to determine who is a civilian and who is a combatant, which is difficult even for humans.29 Allowing AI to make decisions about targeting will most likely result in civilian casualties and unacceptable collateral damage.

Another major concern is the problem of accountability when autonomous weapons systems are deployed. Ethicist Robert Sparrow highlights this ethical issue by noting that a fundamental condition of international humanitarian law, or jus in bello, requires that some person must be held responsible for civilian deaths. Any weapon or other means of war that makes it impossible to identify responsibility for the casualties it causes does not meet the requirements of jus in bello, and, therefore, should not be employed in war.30

This issue arises because AI-equipped machines make decisions on their own, so it is difficult to determine whether a flawed decision is due to flaws in the program or in the autonomous deliberations of the AI-equipped (so-called smart) machines. The nature of this problem was highlighted when a driverless car violated the speed limits by moving too slowly on a highway, and it was unclear to whom the ticket should be issued.31 In situations where a human being makes the decision to use force against a target, there is a clear chain of accountability, stretching from whoever actually “pulled the trigger” to the commander who gave the order. In the case of autonomous weapons systems, no such clarity exists. It is unclear who or what are to be blamed or held liable.

Amitai Etzioni is a professor of international relations at The George Washington University. He served as a senior advisor at the Carter White House and taught at Columbia University, Harvard Business School, and the University of California at Berkeley. A study by Richard Posner ranked him among the top one hundred American intellectuals. His most recent book is Foreign Policy: Thinking Outside the Box (2016).

Oren Etzioni is chief executive officer of the Allen Institute for Artificial Intelligence. He received a PhD from Carnegie Mellon University and a BA from Harvard University. He has been a professor at the University of Washington’s computer science department since 1991. He was the founder or cofounder of several companies, including Farecast (later sold to Microsoft) and Decide (later sold to eBay), and the author of over one hundred technical papers that have garnered over twenty-five thousand citations.


What Sharkey, Sparrow, and the signatories of the open letter propose could be labeled “upstream regulation,” that is, a proposal for setting limits on the development of autonomous weapons systems technology and drawing red lines that future technological developments should not be allowed to cross. This kind of upstream approach tries to foresee the direction of technological development and preempt the dangers such developments would pose. Others prefer “downstream regulation,” which takes a wait-and-see approach by developing regulations as new advances occur. Legal scholars Kenneth Anderson and Matthew Waxman, who advocate this approach, argue that regulation will have to emerge along with the technology because they believe that morality will coevolve with technological development.32

Thus, arguments about the irreplaceability of human conscience and moral judgment may have to be revisited.33 In addition, they suggest that as humans become more accustomed to machines performing functions with life-or-death implications or consequences (such as driving cars or performing surgeries), humans will most likely become more comfortable with AI technology’s incorporation into weaponry. Thus, Anderson and Waxman propose what might be considered a communitarian solution by suggesting that the United States should work on developing norms and principles (rather than binding legal rules) guiding and constraining research and development, and eventual deployment, of autonomous weapons systems. Those norms could help establish expectations about legally or ethically appropriate conduct. Anderson and Waxman write,

To be successful, the United States government would have to resist two extreme instincts. It would have to resist its own instincts to hunker down behind secrecy and avoid discussing and defending even guiding principles. It would also have to refuse to cede the moral high ground to critics of autonomous lethal systems, opponents demanding some grand international treaty or multilateral regime to regulate or even prohibit them.34

Counterarguments. In response, some argue against any attempt to apply to robots the language of morality that applies to human agents. Military ethicist George Lucas Jr. points out, for example, that robots cannot feel anger or a desire to “get even” by seeking retaliation for harm done to their compatriots.35 Lucas holds that the debate thus far has been obfuscated by the confusion of machine autonomy with moral autonomy. The Roomba vacuum cleaner and Patriot missile “are both ‘autonomous’ in that they perform their assigned missions, including encountering and responding to obstacles, problems, and unforeseen circumstances with minimal human oversight,” but not in the sense that they can change or abort their mission if they have “moral objections.”36 Lucas thus holds that the primary concern of engineers and designers developing autonomous weapons systems should not be ethics but rather safety and reliability, which means taking due care to address the possible risks of malfunctions, mistakes, or misuse that autonomous weapons systems will present. We note, though, that safety is of course a moral value as well.

Lt. Col. Shane R. Reeves and Maj. William J. Johnson, judge advocates in the U.S. Army, note that there are battlefields absent of civilians, such as underwater and space, where autonomous weapons could reduce the possibility of suffering and death by eliminating the need for combatants.37 We note that this valid observation does not militate against a ban in other, in effect most, battlefields.

Michael N. Schmitt of the Naval War College makes a distinction between weapons that are illegal per se and the unlawful use of otherwise legal weapons. For example, a rifle is not prohibited under international law, but using it to shoot civilians would constitute an unlawful use. On the other hand, some weapons (e.g., biological weapons) are unlawful per se, even when used only against combatants. Thus, Schmitt grants that some autonomous weapons systems might contravene international law, but “it is categorically not the case that all such systems will do so.”38 Thus, even an autonomous system that is incapable of distinguishing between civilians and combatants should not necessarily be unlawful per se, as autonomous weapons systems could be used in situations where no civilians were present, such as against tank formations in the desert or against warships. Such a system could be used unlawfully, though, if it were employed in contexts where civilians were present. We assert that some limitations on such weapons should be called for.


In their review of the debate, legal scholars Gregory Noone and Diana Noone conclude that everyone is in agreement that any autonomous weapons system would have to comply with the Law of Armed Conflict (LOAC), and thus be able to distinguish between combatants and noncombatants. They write, “No academic or practitioner is stating anything to the contrary; therefore, this part of any argument from either side must be ignored as a red herring. Simply put, no one would agree to any weapon that ignores LOAC obligations.”39

Soldiers from 2nd Battalion, 27th Infantry Regiment, 3rd Brigade Combat Team, 25th Infantry Division, move forward toward simulated opposing forces with a multipurpose unmanned tactical transport 22 July 2016 during the Pacific Manned-Unmanned Initiative at Marine Corps Training Area Bellows, Hawaii. (Photo by Staff Sgt. Christopher Hubenthal, U.S. Air Force)

Limits on Autonomous Weapons Systems and Definitions of Autonomy

The international community has agreed to limits on mines and chemical and biological weapons, but an agreement on limiting autonomous weapons systems would meet numerous challenges. One challenge is the lack of consensus on how to define the autonomy of weapons systems, even among members of the Department of Defense. A standard definition that accounts for levels of autonomy could help guide an incremental approach to proposing limits.

Limits on autonomous weapons systems. We take it for granted that no nation would agree to forswear the use of autonomous weapons systems unless its adversaries would do the same. At first blush, it may seem that it is not beyond the realm of possibility to obtain an international agreement to ban autonomous weapons systems or at least some kinds of them.

Many bans exist in one category or another of weapons, and they have been quite well honored and enforced. These include the Convention on the Prohibition of the Use, Stockpiling, Production and Transfer of Anti-Personnel Mines and on their Destruction (known as the Ottawa Treaty, which became international law in 1999); the Chemical Weapons Convention (ratified in 1997); and the Convention on the Prohibition of the Development, Production and Stockpiling of Bacteriological (Biological) and Toxin Weapons and on their Destruction (known as the Biological Weapons Convention, adopted in 1975). The record of the Treaty on the Nonproliferation of Nuclear Weapons (adopted in 1970) is more complicated, but it is credited with having stopped several nations from developing nuclear arms and causing at least one to give them up.

Some advocates of a ban on autonomous weapons systems seek to ban not merely production and deployment but also research, development, and testing of these machines. This may well be impossible as autonomous weapons systems can be developed and tested in small workshops and do not leave a trail. Nor could one rely on satellites for inspection data for the same reasons. We hence assume that if such a ban were possible, it would mainly focus on deployment and mass production.

The U.S. Army Robotic and Autonomous Systems Strategy, published March 2017 by U.S. Army Training and Doctrine Command, describes how the Army intends to integrate new technologies into future organizations to help ensure overmatch against increasingly capable enemies. Five capability objectives are to increase situational awareness, lighten soldiers’ workloads, sustain the force, facilitate movement and maneuver, and protect the force. To view the strategy, visit https://www.tradoc.army.mil/FrontPageContent/Docs/RAS_Strategy.pdf.

Even so, such a ban would face considerable difficulties. While it is possible to determine what is a chemical weapon and what is not (despite some disagreements at the margin, for example, about law enforcement use of irritant chemical weapons), and to clearly define nuclear arms or land mines, autonomous weapons systems come with very different levels of autonomy.40 A ban on all autonomous weapons would require forgoing many modern weapons already mass produced and deployed.

Definitions of autonomy. Different definitions have been attached to the word “autonomy” in different Department of Defense documents, and the resulting concepts suggest rather different views on the future of robotic warfare. One definition, used by the Defense Science Board, views autonomy merely as high-end automation: “a capability (or a set of capabilities) that enables a particular action of a system to be automatic or, within programmed boundaries, ‘self-governing.’”41 According to this definition, already existing capabilities, such as autopilot used in aircraft, could qualify as autonomous.

Another definition, used in the Unmanned Systems Integrated Roadmap FY2011–2036, suggests a qualitatively different view of autonomy: “an autonomous system is able to make a decision based on a set of rules and/or limitations. It is able to determine what information is important in making a decision.”42 In this view, autonomous systems are less predictable than merely automated ones, as the AI not only is performing a specified action but also is making decisions and thus potentially taking an action that a human did not order. A human is still responsible for programming the behavior of the autonomous system, and the actions the system takes would have to be consistent with the laws and strategies provided by humans. However, no individual action would be completely predictable or preprogrammed.

It is easy to find still other definitions of autonomy. The International Committee of the Red Cross defines autonomous weapons as those able to “independently select and attack targets, i.e., with autonomy in the ‘critical functions’ of acquiring, tracking, selecting and attacking targets.”43

A 2012 Human Rights Watch report by Bonnie Docherty, Losing Humanity: The Case against Killer Robots, defines three categories of autonomy. Based on the kind of human involvement, the categories are human-in-the-loop, human-on-the-loop, and human-out-of-the-loop weapons.44

“Human-in-the-loop weapons [are] robots that can select targets and deliver force only with a human command.”45 Numerous examples of the first type already are in use. For example, Israel’s Iron Dome system detects incoming rockets, predicts their trajectory, and then sends this information to a human soldier who decides whether to launch an interceptor rocket.46

“Human-on-the-loop weapons [are] robots that can select targets and deliver force under the oversight of a human operator who can override the robots’ actions.”47 An example mentioned by Docherty includes the SGR-A1 built by Samsung, a sentry robot used along the Korean Demilitarized Zone. It uses a low-light camera and pattern-recognition software to detect intruders and then issues a verbal warning. If the intruder does not surrender, the robot has a machine gun that can be fired remotely by a soldier the robot has alerted, or by the robot itself if it is in fully automatic mode.48

The United States also deploys human-on-the-loop weapons systems. For example, the MK 15 Phalanx Close-In Weapons System has been used on Navy ships since the 1980s, and it is capable of detecting, evaluating, tracking, engaging, and using force against antiship missiles and high-speed aircraft threats without any human commands.49 The Center for a New American Security published a white paper that estimated as of 2015 at least thirty countries have deployed or are developing human-supervised systems.50

“Human-out-of-the-loop weapons [are] robots capable of selecting targets and delivering force without any human input or interaction.”51 This kind of autonomous weapons system is the source of much concern about “killing machines.” Military strategist Thomas K. Adams warned that, in the future, humans would be reduced to making only initial policy decisions about war, and they would have mere symbolic authority over automated systems.52 In the Human Rights Watch report, Docherty warns, “By eliminating human involvement in the decision to use lethal force in armed conflict, fully autonomous weapons would undermine other, nonlegal protections for civilians.”53 For example, a repressive dictator could deploy emotionless robots to kill and instill fear among a population without having to worry about soldiers who might empathize with their victims (who might be neighbors, acquaintances, or even family members) and then turn against the dictator.

For the purposes of this paper, we take autonomy to mean a machine has the ability to make decisions based on information gathered by the machine and to act on the basis of its own deliberations, beyond the instructions and parameters its producers, programmers, and users provided to the machine.

A Way to Initiate an International Agreement Limiting Autonomous Weapons

We find it hard to imagine nations agreeing to return to a world in which weapons had no measure of autonomy. On the contrary, development in AI leads one to expect that more and more machines and instruments of all kinds will become more autonomous. Bombers and fighter aircraft having no human pilot seem inevitable. Although it is true that any level of autonomy entails, by definition, some loss of human control, this genie has left the bottle and we see no way to put it back again.

Where to begin. The most promising way to proceed is to determine whether one can obtain an international agreement to ban fully autonomous weapons with missions that cannot be aborted and that cannot be recalled once they are launched. If they malfunction and target civilian centers, there is no way to stop them. Like unexploded landmines placed without marks, these weapons will continue to kill even after the sides settle their differences and sue for peace.

One may argue that gaining such an agreement should not be arduous because no rational policy maker will favor such a weapon. Indeed, the Pentagon has directed that “autonomous and semi-autonomous weapon systems shall be designed to allow commanders and operators to exercise appropriate levels of human judgment over the use of force.”54

Why to begin. However, one should note that human-out-of-the-loop arms are very effective in reinforcing a red line. Declarations by representatives of one nation that if another nation engages in a certain kind of hostile behavior, swift and severe retaliation will follow, are open to misinterpretation by the other side, even if backed up with deployment of troops or other military assets.

Leaders, drawing on considerable historical experience, may bet that they will be able to cross the red line and be spared because of one reason or another. Hence, arms without a human in the loop make for much more credible red lines. (This is a form of the “precommitment strategy” discussed by Thomas Schelling in Arms and Influence, in which one party limits its own options by obligating itself to retaliate, thus making its deterrence more credible.)55

We suggest that nations might be willing to forgo this advantage of fully autonomous arms in order to gain the assurance that once hostilities ceased, they could avoid becoming entangled in new rounds of fighting because some bombers were still running loose and attacking the other side, or because some bombers might malfunction and attack civilian centers. Finally, if a ban on fully autonomous weapons were agreed upon and means of verification were developed, one could aspire to move toward limiting weapons with a high but not full measure of autonomy.

The authors are indebted to David Kroeker Maus for substantial research on this article.

Notes

1. Gary E. Marchant et al., “International Governance of Autonomous Military Robots,” Columbia Science and Technology Law Review 12 (June 2011): 272–76, accessed 27 March 2017, http://stlr.org/download/volumes/volume12/marchant.pdf.

2. James R. Clapper Jr. et al., Unmanned Systems Roadmap: 2007-2032 (Washington, DC: Department of Defense [DOD], 2007), 19, accessed 28 March 2017, http://www.globalsecurity.org/intell/library/reports/2007/dod-unmanned-systems-roadmap_2007-2032.pdf.

3. Jeffrey S. Thurnher, “Legal Implications of Fully Autonomous Targeting,” Joint Force Quarterly 67 (4th Quarter, October 2012): 83, accessed 8 March 2017, http://ndupress.ndu.edu/Portals/68/Documents/jfq/jfq-67/JFQ-67_77-84_Thurnher.pdf.

4. David Francis, “How a New Army of Robots Can Cut the Defense Budget,” Fiscal Times, 2 April 2013, accessed 8 March 2017, http://www.thefiscaltimes.com/Articles/2013/04/02/How-a-New-Army-of-Robots-Can-Cut-the-Defense-Budget. Francis attributes the $850,000 cost estimate to an unnamed DOD source, presumed from 2012 or 2013.

5. Ibid.

6. Quoted in Evan Ackerman, “U.S. Army Considers Replacing Thousands of Soldiers with Robots,” IEEE Spectrum, 22 January 2014, accessed 28 March 2016, http://spectrum.ieee.org/automaton/robotics/military-robots/army-considers-replacing-thousands-of-soldiers-with-robots.

7. Jason S. DeSon, “Automating the Right Stuff? The Hidden Ramifications of Ensuring Autonomous Aerial Weapon Systems Comply with International Humanitarian Law,” Air Force Law Review 72 (2015): 85–122, accessed 27 March 2017, http://www.afjag.af.mil/Portals/77/documents/AFD-150721-006.pdf.

8. Michael Byrnes, “Nightfall: Machine Autonomy in Air-to-Air Combat,” Air & Space Power Journal 23, no. 3 (May-June 2014): 54, accessed 8 March 2017, http://www.au.af.mil/au/afri/aspj/digital/pdf/articles/2014-May-Jun/F-Byrnes.pdf?source=GovD.

9. Defense Science Board, Task Force Report: The Role of Autonomy in DoD Systems (Washington, DC: Office of the Under Secretary of Defense for Acquisition, Technology and Logistics, July 2012), 31.

10. Ibid., 33.

11. Ibid., 38–39.

12. Ibid., 39.

13. Ibid., 41.

14. Ibid., 42.

15. Ibid., 44.

16. Ibid.

17. Ibid., 49.

18. Ibid.

19. Ibid., 50.

20. Ibid.

21. Ronald C. Arkin, “The Case for Ethical Autonomy in Unmanned Systems,” Journal of Military Ethics 9, no. 4 (2010): 332–41.

22. Ibid.

23. Douglas A. Pryer, “The Rise of the Machines: Why Increasingly ‘Perfect’ Weapons Help Perpetuate Our Wars and Endanger Our Nation,” Military Review 93, no. 2 (2013): 14–24.

24. “Autonomous Weapons: An Open Letter from AI [Artificial Intelligence] & Robotics Researchers,” Future of Life Institute website, 28 July 2015, accessed 8 March 2017, http://futureoflife.org/open-letter-autonomous-weapons/.

25. Ibid.

26. Report of the Special Rapporteur on Extrajudicial, Summary, or Arbitrary Executions, Christof Heyns, September 2013, United Nations Human Rights Council, 23rd Session, Agenda Item 3, United Nations Document A/HRC/23/47.

27. International Committee for Robot Arms Control (ICRAC), “Scientists’ Call to Ban Autonomous Lethal Robots,” ICRAC website, October 2013, accessed 24 March 2017, icrac.net.

28. Ibid.

29. Noel Sharkey, “Saying ‘No!’ to Lethal Autonomous Targeting,” Journal of Military Ethics 9, no. 4 (2010): 369–83, accessed 28 March 2017, doi:10.1080/15027570.2010.537903. For more on this subject, see Peter Asaro, “On Banning Autonomous Weapon Systems: Human Rights, Automation, and the Dehumanization of Lethal Decision-making,” International Review of the Red Cross 94, no. 886 (2012): 687–709.

30. Robert Sparrow, “Killer Robots,” Journal of Applied Philosophy 24, no. 1 (2007): 62–77.

31. For more discussion on this topic, see Amitai Etzioni and Oren Etzioni, “Keeping AI Legal,” Vanderbilt Journal of Entertainment & Technology Law 19, no. 1 (Fall 2016): 133–46, accessed 8 March 2017, http://www.jetlaw.org/wp-content/uploads/2016/12/Etzioni_Final.pdf.

32. Kenneth Anderson and Matthew C. Waxman, “Law and Ethics for Autonomous Weapon Systems: Why a Ban Won’t Work and How the Laws of War Can,” Stanford University, Hoover Institution Press, Jean Perkins Task Force on National Security and Law Essay Series, 9 April 2013.

33. Ibid.

34. Anderson and Waxman, “Law and Ethics for Robot Soldiers,” Policy Review 176 (December 2012): 46.

80 May-June 2017 MILITARY REVIEW

35. George Lucas Jr., “Engineering, Ethics & Industry: the Moral Challenges of Lethal Autonomy,” in Killing by Remote Control: The Ethics of an Unmanned Military, ed. Bradley Jay Strawser (New York: Oxford, 2013).

36. Ibid., 218.

37. Shane Reeves and William Johnson, “Autonomous Weapons: Are You Sure these Are Killer Robots? Can We Talk About It?,” in Department of the Army Pamphlet 27-50-491, The Army Lawyer (Charlottesville, VA: Judge Advocate General’s Legal Center and School, April 2014), 25–31.

38. Michael N. Schmitt, “Autonomous Weapon Systems and International Humanitarian Law: a Reply to the Critics,” Harvard National Security Journal, 5 February 2013, accessed 28 March 2017, http://harvardnsj.org/2013/02/autonomous-weapon-systems-and-international-humanitarian-law-a-reply-to-the-critics/.

39. Gregory P. Noone and Diana C. Noone, “The Debate over Autonomous Weapons Systems,” Case Western Reserve Journal of International Law 47, no. 1 (Spring 2015): 29, accessed 27 March 2017, http://scholarlycommons.law.case.edu/jil/vol47/iss1/6/.

40. Neil Davison, ed., ‘Non-lethal’ Weapons (Houndmills, England: Palgrave Macmillan, 2009).

41. DOD Defense Science Board, Task Force Report: The Role of Autonomy in DOD Systems, 1.

42. DOD, Unmanned Systems Integrated Roadmap FY2011-2036 (Washington, DC: Government Publishing Office [GPO], 2011), 43.

43. International Committee of the Red Cross (ICRC), Expert Meeting 26–28 March 2014 report, “Autonomous Weapon Systems: Technical, Military, Legal and Humanitarian Aspects” (Geneva: ICRC, November 2014), 5.

44. Bonnie Docherty, Losing Humanity: The Case against Killer Robots (Cambridge, MA: Human Rights Watch, 19 November 2012), 2, accessed 10 March 2017, https://www.hrw.org/report/2012/11/19/losing-humanity/case-against-killer-robots.

45. Ibid.

46. Paul Marks, “Iron Dome Rocket Smasher Set to Change Gaza Conflict,” New Scientist Daily News online, 20 November 2012, accessed 24 March 2017, https://www.newscientist.com/article/dn22518-iron-dome-rocket-smasher-set-to-change-gaza-conflict/.

47. Docherty, Losing Humanity, 2.

48. Ibid.; Patrick Lin, George Bekey, and Keith Abney, Autonomous Military Robotics: Risk, Ethics, and Design (Arlington, VA: Department of the Navy, Office of Naval Research, 20 December 2008), accessed 24 March 2017, http://digitalcommons.calpoly.edu/cgi/viewcontent.cgi?article=1001&context=phil_fac.

49. “MK 15—Phalanx Close-In Weapons System (CIWS)” Navy Fact Sheet, 25 January 2017, accessed 10 March 2017, http://www.navy.mil/navydata/fact_print.asp?cid=2100&tid=487&ct=2&page=1.

50. Paul Scharre and Michael Horowitz, “An Introduction to Autonomy in Weapons Systems” (working paper, Center for a New American Security, February 2015), 18, accessed 24 March 2017, http://www.cnas.org/.

51. Docherty, Losing Humanity, 2.

52. Thomas K. Adams, “Future Warfare and the Decline of Human Decisionmaking,” Parameters 31, no. 4 (Winter 2001–2002): 57–71.

53. Docherty, Losing Humanity, 4.

54. DOD Directive 3000.09, Autonomy in Weapon Systems (Washington, DC: U.S. GPO, 21 November 2012), 2, accessed 10 March 2017, http://www.dtic.mil/whs/directives/corres/pdf/300009p.pdf.

55. Thomas C. Schelling, Arms and Influence (New Haven: Yale University, 1966).

Office of the Secretary of Defense Logistics Program fellows visit the bridge of the USNS Gilliland 28 October 2015 during a tour of the Military Sealift Command (MSC) ship. Shown from left to right are Stanley McMillian, Lt. Col. Ed Hogan (kneeling), Bryan Jerkatis, Donald Gillespie, Art Clark (MSC Surge Sealift Program Readiness Officer), USNS Gilliland’s Master Keith Finnerty, and Renee Hubbard. (Photo courtesy of U.S. Navy)

The Office of the Secretary of Defense Logistics Fellows Program

The Office of the Secretary of Defense (OSD) Logistics Fellows Program is open to field-grade officer (O4 to O5) and Department of Defense (DOD) civilian logisticians (GS-13 to GS-14). According to the Office of the Assistant Secretary of Defense for Logistics and Materiel Readiness, which administers the program, the one-year fellowship is a developmental assignment that fosters learning, growth, and experiential opportunities. Fellows participate in policy formulation and department-wide oversight responsibilities. They tour public- and private-sector logistics organizations to learn how they conduct operations and to observe industry best practices. They gain insight into legislative processes through visits to Congress, and they attend national-level forums and engage in collaborative efforts with industry partners. Fellows can observe and interact with appointed and career senior executives and flag officers, including one-on-one meetings with senior logistics leaders in the military departments, joint staff, OSD, and other agencies. They can gain a deeper understanding of the OSD perspective and how it affects the DOD enterprise. More information about the program and how to apply can be found at http://www.acq.osd.mil/log/lmr/fellows_program.html.

