Facial Recognition Using Histogram of Gradients and SVM

Facial Recognition Using Histogram of Gradients and SVM

Uploaded by

akinlabi aderibigbeCopyright:

Available Formats

You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5834)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1093)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (852)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (590)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (903)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (541)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (350)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (824)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (122)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (405)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- Apa Assignment 1 LeadershipDocument12 pagesApa Assignment 1 LeadershipDinah RosalNo ratings yet

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- 9+ Final Year Project Proposal Examples - PDF - ExamplesDocument11 pages9+ Final Year Project Proposal Examples - PDF - Examplesakinlabi aderibigbeNo ratings yet

- The Absorbent MindDocument1 pageThe Absorbent Mindkarl symondsNo ratings yet

- The Extension Delivery System PDFDocument10 pagesThe Extension Delivery System PDFkristine_abas75% (4)

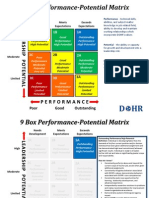

- 9 BoxDocument10 pages9 BoxclaudiuoctNo ratings yet

- Facial Emotion Detection Using Haar-Cascade Classifier and Convolutional Neural Networks.Document6 pagesFacial Emotion Detection Using Haar-Cascade Classifier and Convolutional Neural Networks.akinlabi aderibigbeNo ratings yet

- Comprehensive Final Year Project Proposal ExampleDocument2 pagesComprehensive Final Year Project Proposal Exampleakinlabi aderibigbeNo ratings yet

- Face Recognition For Motorcycle EngineDocument12 pagesFace Recognition For Motorcycle Engineakinlabi aderibigbeNo ratings yet

- Broadcast Industry - Trends To Watch Out For in 2021 - Business Insider IndiaDocument15 pagesBroadcast Industry - Trends To Watch Out For in 2021 - Business Insider Indiaakinlabi aderibigbeNo ratings yet

- A Cost Effective Method For Automobile Security Based On Detection and Recognition of Human FaceDocument7 pagesA Cost Effective Method For Automobile Security Based On Detection and Recognition of Human Faceakinlabi aderibigbeNo ratings yet

- Arduino Interfacing With LCD Without Potentiometer - InstructablesDocument6 pagesArduino Interfacing With LCD Without Potentiometer - Instructablesakinlabi aderibigbeNo ratings yet

- Broadcast Technology Trends For 2020 - Mebucom InternationalDocument3 pagesBroadcast Technology Trends For 2020 - Mebucom Internationalakinlabi aderibigbeNo ratings yet

- Automatic Access To An Automobile Via BiometricsDocument10 pagesAutomatic Access To An Automobile Via Biometricsakinlabi aderibigbeNo ratings yet

- Antitheft System For Motor VehiclesDocument4 pagesAntitheft System For Motor Vehiclesakinlabi aderibigbeNo ratings yet

- Automated Vehicle Security System Using ALPR and Face DetectionDocument9 pagesAutomated Vehicle Security System Using ALPR and Face Detectionakinlabi aderibigbeNo ratings yet

- Anti-Theft Security System Using Face Recognition Thesis Report by Chong Guan YuDocument76 pagesAnti-Theft Security System Using Face Recognition Thesis Report by Chong Guan Yuakinlabi aderibigbeNo ratings yet

- A New Era in Broadcast TV - 2020 Media Trends To Set The Stage For ATSC 3.0 - Broadcasting+CableDocument11 pagesA New Era in Broadcast TV - 2020 Media Trends To Set The Stage For ATSC 3.0 - Broadcasting+Cableakinlabi aderibigbeNo ratings yet

- A Face Detection and Recognition System For Intelligent VehiclesDocument9 pagesA Face Detection and Recognition System For Intelligent Vehiclesakinlabi aderibigbeNo ratings yet

- 2021 - A New Era in Broadcast TV - The Media SchoolsDocument9 pages2021 - A New Era in Broadcast TV - The Media Schoolsakinlabi aderibigbeNo ratings yet

- Covenant University Medical Centre: Fee-For-Service R EportDocument2 pagesCovenant University Medical Centre: Fee-For-Service R Eportakinlabi aderibigbeNo ratings yet

- 9.3 Issues and Trends in The Television Industry - Understanding Media and CultureDocument12 pages9.3 Issues and Trends in The Television Industry - Understanding Media and Cultureakinlabi aderibigbeNo ratings yet

- Contact and Lexical Borrowing 8: Philip DurkinDocument11 pagesContact and Lexical Borrowing 8: Philip DurkinPatriciaSfagauNo ratings yet

- Ids, Credit Card, Debit Cards and DocumentsDocument4 pagesIds, Credit Card, Debit Cards and DocumentsShawn Lesnar R. AbayanNo ratings yet

- Before ReadingDocument280 pagesBefore ReadingAnuhas WichithraNo ratings yet

- SAMPLE Chapter 3 Marketing ResearchDocument12 pagesSAMPLE Chapter 3 Marketing ResearchTrixie JordanNo ratings yet

- Rote LearningDocument4 pagesRote LearningAnanga Bhaskar BiswalNo ratings yet

- Behaviour Support Guidelines ChildrenDocument44 pagesBehaviour Support Guidelines Childrenmsanusi100% (1)

- Studuco 3Document6 pagesStuduco 3Shayne VelardeNo ratings yet

- Allais, Lucy - Kant's Transcendental Idealism and Contemporary Anti-RealismDocument25 pagesAllais, Lucy - Kant's Transcendental Idealism and Contemporary Anti-Realismhuobrist100% (1)

- Models of CommunicationDocument27 pagesModels of CommunicationCarl ArriesgadoNo ratings yet

- Essay Test MGT103Document3 pagesEssay Test MGT103http140304No ratings yet

- Program Implementation Review (Pir) : Year-EndDocument14 pagesProgram Implementation Review (Pir) : Year-EndJofit DayocNo ratings yet

- Final Assessment Ads514 July 2022Document3 pagesFinal Assessment Ads514 July 2022Adilah SyamimiNo ratings yet

- 31Document15 pages31Amit TamboliNo ratings yet

- UTS Output Module 1Document9 pagesUTS Output Module 1Blethy April PalaoNo ratings yet

- Teach) AppForm PerfExcFrameworkDocument6 pagesTeach) AppForm PerfExcFrameworknickjonatNo ratings yet

- CV of Kaniz Fatema MouDocument2 pagesCV of Kaniz Fatema MouOnik Sunjy Dul IslamNo ratings yet

- Resume GabbarDocument2 pagesResume GabbarsrajparsuramNo ratings yet

- Manpower Handling TrainingDocument20 pagesManpower Handling TrainingbuddeyNo ratings yet

- Chapter 6. Formal Approaches To SlaDocument17 pagesChapter 6. Formal Approaches To SlaEric John VegafriaNo ratings yet

- PPG Week A - The Concepts of Politics and GovernanceDocument7 pagesPPG Week A - The Concepts of Politics and GovernanceMarilyn DizonNo ratings yet

- Adult Needs and Strengths Assessment: ANSA ManualDocument39 pagesAdult Needs and Strengths Assessment: ANSA ManualAimee BethNo ratings yet

- The Sapir-Whorf HypothesisDocument2 pagesThe Sapir-Whorf HypothesisqwertyuiopNo ratings yet

- Craap Test: Using The To Evaluate WebsitesDocument2 pagesCraap Test: Using The To Evaluate WebsitesNuch NuchanNo ratings yet

- LearningDocument20 pagesLearningHibo JirdeNo ratings yet

- Acr Conduct OrientationDocument2 pagesAcr Conduct OrientationRechel SegarinoNo ratings yet

- Student Perception On LeadershipDocument42 pagesStudent Perception On LeadershipPhan ThảoNo ratings yet

Facial Recognition Using Histogram of Gradients and SVM

Facial Recognition Using Histogram of Gradients and SVM

Uploaded by

akinlabi aderibigbeOriginal Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Facial Recognition Using Histogram of Gradients and SVM

Facial Recognition Using Histogram of Gradients and SVM

Uploaded by

akinlabi aderibigbeCopyright:

Available Formats


Conference Paper · January 2017

DOI: 10.1109/ICCCSP.2017.7944082


All content following this page was uploaded by Josephine Julina J. K. on 08 November 2017.


IEEE International Conference on Computer, Communication, and Signal Processing (ICCCSP-2017)

Facial Recognition using Histogram of Gradients and Support Vector Machines

J. Kulandai Josephine Julina
Department of Information Technology
SSN College of Engineering
Chennai, India
josephinejulinajk@ssn.edu.in

T. Sree Sharmila
Department of Information Technology
SSN College of Engineering
Chennai, India
sreesharmilat@ssn.edu.in

Abstract- Face recognition is widely used in computer vision and in many other biometric applications where security is a major concern. The most common problems in recognizing a face arise from pose variations, different illumination conditions, and so on. The main focus of this paper is to recognize whether a given face input corresponds to a registered person in the database. Face recognition is performed using the Histogram of Oriented Gradients (HOG) technique on the AT & T database, with a real-time subject included to evaluate the performance of the algorithm. The feature vectors generated by the HOG descriptor are used to train Support Vector Machines (SVM), and the results are verified against a given test input. The proposed method checks whether a test image in a different pose and under different lighting conditions is matched correctly with the trained images of the facial database. The results of the proposed approach show minimal false positives and improved detection accuracy.

Keywords- AT & T facial database; Face recognition; Feature extraction; Histogram of Oriented Gradients; Support Vector Machine

I. INTRODUCTION

The face is an important part of a person's identity. Humans are adept at recognizing people at a distance and at predicting the identity of a person under substantial occlusion and in different lighting conditions. An automated system, however, requires many iterations and careful tuning of threshold parameters to recognize a person and to reject a wrong input appropriately.

The locations of the facial components, namely the eyes, nose, and mouth, are the prime landmarks for marking the presence of a face in an image. This process becomes difficult when a person exhibits different expressions and pose variations. Commonly encountered challenges in facial detection and recognition include variations in lighting conditions, occlusion, spectacles, facial hair, and so on. Techniques such as template matching methods [19], geometry-based approaches [1], and appearance-based models [3] are adopted to overcome such challenges.

Face detection is the first step and identifies the presence of faces in an image. Face recognition or verification is then carried out, which checks whether a given test input containing a face matches the faces already stored in the database or face gallery. Details about the face and its different components can be obtained from features chosen to be robust to noise and to changes in orientation. Most facial recognition problems deal with feature extraction and machine learning techniques. Facial recognition is performed in many image and vision applications where security cannot be compromised. Many applications deal with mug shot images, which are used to recognize the faces of criminals matched in the database.

The remainder of the paper is organized as follows: Section II reviews related work on face recognition and feature extraction. Section III describes the architecture of the proposed method for detecting whether an image corresponds to a matched face in the gallery. Section IV presents the qualitative results obtained from testing with images of the AT & T facial database [16]. The system is able to detect 90% of faces over 41 test images, with few false positives. Section V provides the conclusion and future work, followed by references.

II. RELATED WORK

The well-known datasets available for detecting and recognizing faces and facial expressions [18] include CVL [21], CMU Pose, Illumination & Expression (CMU-PIE) [25], FERET [8], the extended M2VTS dataset [17], Cohn-Kanade (CK) [24], the JAFFE dataset [20], and so on. This paper uses the AT & T facial database [16] and real-time images to recognize faces based on feature extraction using HOG [10].

Faces are most commonly detected using the Viola-Jones algorithm [9]. The facial features being extracted should be invariant to pose variations. Such invariant features can be obtained with the Active Shape Model (ASM) [4] and the Active Appearance Model (AAM) [12]. Features of an image include edge details, texture, color information, corners, and interest points of regions that constitute what is known as the Region of Interest (ROI). The feature space can be reduced by identifying dominant and unique features using the Principal Component Analysis (PCA) [5] technique or by means of a distance metric such as the Euclidean distance [2]. Some of the well-known facial recognition algorithms use Gabor filters [30], LBP (Local Binary Pattern) [30], and Linear Discriminant Analysis (LDA) [30]. The raw pixel information does not discriminate

978-1-5090-3716-2/17/$31.00 ©2017 IEEE


unique patterns in images that are prone to pose variations. Hence, it is essential to extract information from larger blob areas, depending on the application of interest. Most feature extraction methods depend on the type of data. Many features are available, namely SURF (Speeded-Up Robust Features) [26], FREAK (Fast Retina Keypoint) [27], BRISK (Binary Robust Invariant Scalable Keypoints) [28], MSER (Maximally Stable Extremal Regions) [29], SIFT (Scale-Invariant Feature Transform) [30], and HOG (Histogram of Oriented Gradients) [23].

The HOG descriptor can overcome the problem of varying illumination because it is largely invariant to lighting conditions. It captures the magnitude of edge information and works well even under variations in pose and illumination. HOG performs well in such challenging situations because it represents the directionality of edge information, which makes it significant for studying the pattern and structure of the object of interest. The HOG feature descriptor can be used along with other feature descriptors [10]. SURF features are mainly used in facial recognition applications. The FAST/SURF and Harris/FREAK [6] combinations reduce illumination distortion to a great extent. The combination of HOG and SVM [7] yields improved detection accuracy. Fig. 1 shows the general marking of facial landmarks such as the eyes, mouth, and nose using Harris corner points.

Fig. 1. Face representation

A machine learning approach is adopted when a system is trained to take decisions based on the data available to it. It makes the machine perform tasks in the way humans perceive the environment and act on it. It relies on the quantity of data for learning. The results depend on the quality of the data, which is more important than the algorithm itself. Changes in the data change the behavior of the model. Machine learning helps in making predictions and typically falls into two categories, supervised and unsupervised learning [11]. The former knows both the input and the output, while the latter works based on input data alone. Supervised learning performs classification if the output is discrete and regression if the output is continuous. Unsupervised learning deals with clustering, as the pattern is hidden in the data. Some of the algorithms include Support Vector Machine (SVM) [11], Bayesian learning [14], neural networks [13], Hidden Markov Model (HMM) [7], K-Means [14], and so on. Choosing an appropriate algorithm matters little if the data is not given due importance. It is also essential to avoid over-fitting and under-fitting while finding the optimal decision boundary [11].

III. SYSTEM ARCHITECTURE

The system is designed to handle pose and illumination problems in the recognition of faces. This paper focuses on finding an exact match of a face among the 40 subjects in the AT & T facial database, in addition to a user-defined subject, based on HOG features and matching. Fig. 2 shows the overall system architecture, which covers the entire workflow: setting up the database, feature extraction using HOG, facial landmark detection, building the classifier model, and feature matching.

Fig. 2. System architecture

A. Database creation

The AT & T database is used to recognize faces. It contains 40 subjects with 10 images each. All images in


the database have the same aspect ratio, with differences in pose and expression under constant illumination. Fig. 3 shows sample images from the AT & T facial database. A new subject is added with 10 different poses taken in varying lighting conditions and at different times. The database is split into a training set and a testing set. Fig. 4 shows the images present in the testing set.

Fig. 3. Sample images from AT & T facial database

Fig. 4. Test input

B. Pre-processing techniques

Facial alignment and normalization are essential for achieving higher recognition rates, as feature vectors work best when they lie in the same feature space. The set of 10 newly added, self-defined images requires pre-processing to match the images of the database. All faces of the newly added subject are cropped using the Viola-Jones algorithm [9]. It uses Haar features and an Adaboost [31] classifier to detect faces in an integral image [9]. All images in the new image set are resized to the common size of 112 × 92 pixels. Fig. 5 shows the set of newly added images in the training set.

Fig. 5. Many appearances of same subject in different illumination and poses

C. Feature extraction and classification

Both the training and testing phases require the same type of feature extraction, which in this case is done using HOG features.

Algorithm: Feature-based facial recognition

Input: Images of persons $S = \{1, 2, \ldots, 41\}$, each with images $S_i = \{1, 2, \ldots, 10\}$ where $i = \{1, 2, \ldots, 41\}$, forming a total of 410 images

Output: Matched subject in the database based on training features $F$ and labels, generated by means of HOG features and Harris feature matching of different facial components

Training set: $T = I_1, I_2, \ldots, I_9$ from 41 subjects, forming a total of 369 images

Testing set: $Te = I_{10}$ from each of the 41 subjects, forming a total of 41 images. These images used to test the system are not included in the training set.

1. Create the database by accessing files from the original AT & T database, in which the original images in .pgm format are converted to .jpg format to match the included real-time images
2. Include 10 new user images by detecting faces using the Viola-Jones face detector
3. Partition the database into training and testing sets
4. Extract facial features for all images in the training dataset T
5. Train the model on the dataset T using the HOG feature descriptor
6. Choose a test image from the test dataset Te
7. Extract the feature vector F using the HOG feature descriptor
8. Predict the class of the test input using the SVM classifier, based on the matching data available in the training features and labels
9. Perform feature matching to display the correspondence between the test image and the trained images.

The model built in the training phase is used to predict the class of the subject using the SVM classifier. SVM is most frequently used as a binary classifier; however, it can also be used to classify multi-class data.

The labels and features are used to train a model, which the SVM classifier uses to find an exact match of the given test data among the relevant subjects available in the database. SVM is trained to correct even possible misclassification [7] when an unknown test input is given for recognition.
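The HOG feature-extraction step (steps 4 and 7 of the algorithm above) can be illustrated with a minimal sketch in plain Python. The paper does not state its HOG parameters, so the values used here are assumptions: 8 × 8-pixel cells, 2 × 2-cell blocks moved one cell at a time, and 9 unsigned orientation bins. Under these assumed settings a 112 × 92 image gives 13 × 10 blocks of 36 values each, that is 4680 values, which agrees with the feature dimensionality reported in Section IV. The function name `hog_descriptor` and the synthetic test image are hypothetical, and the sketch simplifies a full HOG implementation (hard bin assignment, no interpolation); it is not the authors' code, and the SVM classification step is not shown.

```python
import math

def hog_descriptor(image, cell=8, block=2, bins=9):
    """Simplified HOG for a grayscale image given as a list of pixel rows.

    Assumed (not from the paper): 8x8-pixel cells, 2x2-cell blocks with a
    one-cell stride, 9 unsigned orientation bins, hard bin assignment.
    """
    rows, cols = len(image), len(image[0])
    n_cy, n_cx = rows // cell, cols // cell      # whole cells per axis
    bin_width = 180.0 / bins
    # One orientation histogram per cell.
    hist = [[[0.0] * bins for _ in range(n_cx)] for _ in range(n_cy)]
    for y in range(n_cy * cell):
        for x in range(n_cx * cell):
            # Central differences, clamped at the image border.
            gx = image[y][min(x + 1, cols - 1)] - image[y][max(x - 1, 0)]
            gy = image[min(y + 1, rows - 1)][x] - image[max(y - 1, 0)][x]
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0  # unsigned
            b = int(angle // bin_width) % bins
            hist[y // cell][x // cell][b] += magnitude
    # Concatenate the L2-normalised histograms of overlapping blocks.
    descriptor = []
    for by in range(n_cy - block + 1):
        for bx in range(n_cx - block + 1):
            v = [h for dy in range(block) for dx in range(block)
                 for h in hist[by + dy][bx + dx]]
            norm = math.sqrt(sum(t * t for t in v) + 1e-6)
            descriptor.extend(t / norm for t in v)
    return descriptor

# Synthetic 112x92 (rows x columns) image standing in for a face image.
image = [[(x * y) % 256 for x in range(92)] for y in range(112)]
desc = hog_descriptor(image)
print(len(desc))  # 4680 = 13 * 10 blocks * 36 values

# Doubling every pixel value (a uniform contrast change) barely moves
# the descriptor, because each block is normalised.
brighter = [[2 * p for p in row] for row in image]
max_diff = max(abs(a - b) for a, b in zip(desc, hog_descriptor(brighter)))
print(max_diff < 1e-3)
```

The second check shows only one narrow sense in which the descriptor tolerates lighting changes: block normalisation cancels a uniform scaling of pixel values. It says nothing about non-uniform illumination or pose.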


IV. RESULTS AND DISCUSSION

The training set contains 369 images and the testing set contains 41 images. HOG features are extracted from all images in the training set, giving a total of 369 feature vectors, each with a dimensionality of 4680. The large dimension of the feature vector accommodates the magnitude of all possible edge information in different orientations. Fig. 6 shows the HOG visualization of a new subject.

Fig. 6. HOG visualization

The test set contains 41 images, and the classification result is given below:

• Matched correctly (37): s1 – s7, s9, s11 – s18, s20 – s39, s41
• Not matched or wrong predictions: s8, s10, s19, s40

Fig. 7 shows the classifier result retrieving all matched subjects from the database against the given test input.

Fig. 7. Classifier output showing matched results from the database

The conventional method of evaluating a classification model in supervised learning is to formulate a confusion matrix [22] over the test data. Fig. 8 shows the classification results in the form of a confusion matrix.

Fig. 8. Confusion Matrix

$$\text{Accuracy} = \frac{\sum \text{TruePositive} + \sum \text{TrueNegative}}{\sum \text{TotalPopulation}} \tag{1}$$

This gives an overall accuracy of 90.2439%, computed using (1), which corresponds to the 37 correctly matched images out of the 41 test images.

V. CONCLUSION AND FUTURE WORK

This paper focused on feature extraction using HOG and achieved high accuracy in recognizing faces, primarily in the AT & T facial database. Additional cross-validation testing can evaluate the system so that the rejection of false detections is improved, ensuring better classification. As the number of subjects increases, generating feature vectors using HOG becomes time-consuming. Future work involves the recognition of faces in 3D images using deep learning, where a convolutional neural network [13] is used for training on a dataset, as it captures high-level features that provide a good representation.

REFERENCES

[1] Ferdinando Silvestro Samaria, "Face Recognition Using Hidden Markov Models" in Thesis submitted in Trinity College
[2] Henry A. Rowley, Shumeet Baluja, Takeo Kanade, "Neural Network-Based Face Detection" in IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 20, no. 1, pp. 23-38, 1998
[3] Khan RA, Meyer A, Konik H, Bouakaz S., "Framework for reliable, real-time facial expression recognition for low resolution images" in Pattern Recognit Lett., pp. 1159–1168, 2013
[4] Leonardo A. Cament, Francisco J. Galdames, Kevin W. Bowyer, Claudio A. Perez, "Face recognition under pose variation with Gabor features enhanced by Active Shape and Statistical Models" in Pattern Recognition, vol. 48, pp. 3371-3384, 2015
[5] M. Turk, A. Pentland, "Eigenfaces for Recognition" in Journal of Cognitive Neuroscience, vol. 3, no. 1, pp. 71-86, Win. 1991
[6] Madbouly, M. Wafy, Mostafa-Sami M. Mostafa, "Performance Assessment of Feature Detector-Descriptor Combination" in Int. J. of Computer Science, vol. 12, no. 5, pp. 87-94, 42248


[7] Martin Schels and Friedhelm Schwenker, "A Multiple Classifier System Approach for Facial Expressions in Image Sequences Utilizing GMM Supervectors" in International Conference on Pattern Recognition
[8] P.J. Phillips, H. Moon, S.A. Rizvi, P.J. Rauss, "The FERET Evaluation Methodology for Face-Recognition Algorithms" in IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 22, no. 10, pp. 1090-1104, October 2000
[9] Paul Viola, Michael J. Jones, "Robust Real-Time Face Detection" in Int. J. of Computer Vision, vol. 57, no. 2, pp. 137-154, 2004
[10] Pierluigi Carcagni, Marco Del Coco, Marco Leo, Cosimo Distante, "Facial expression recognition and histograms of oriented gradients: a comprehensive survey" in Springer Open Journal, pp. 1-25, 2015
[11] R. Chellappa, C.L. Wilson, S. Sirohey, "Human and Machine Recognition of Faces: A Survey" in Proceedings of the IEEE, vol. 83, no. 5, pp. 705-740, May 1995
[12] Shan Du, Rabab Ward, "Face recognition under pose variations" in Journal of Franklin Institute, pp. 596-613, 2006
[13] Sun Y, Wang X, Tang X., "Deep learning face representation from predicting 10,000 classes" in IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 1898-1898, 2014
[14] Y. Bengio, "Learning deep architectures for AI" in Foundations and trends in machine learning, vol. 2, no. 1, pp. 1-127, 2009
[15] Zhao, W., Chellappa, R., Phillips, P.J., Rosenfeld, "Face recognition: A literature survey" in ACM Computing Survey, vol. 34, no. 4, pp. 399-485, 2003
[16] http://www.cl.cam.ac.uk/research/dtg/attarchive/facedatabase.html
[17] http://www.ee.surrey.ac.uk/CVSSP/xm2vtsdb/
[18] http://www.face-rec.org/databases/
[19] http://www.i-programmer.info/babbages-bag/1091-face-recognition.html
[20] http://www.kasrl.org/jaffe.html
[21] http://www.lrv.fri.uni-lj.si/database.html
[22] https://en.wikipedia.org/wiki/Sensitivity_and_specificity
[23] https://in.mathworks.com/help/vision/feature-detection-and-extraction.html
[24] http://www.pitt.edu/~emotion/downloads.html
[25] http://www.ri.cmu.edu/research_project_detail.html?project_id=418&menu_id=261
[26] https://www.cse.unr.edu/~bebis/CS491Y/Papers/Bay06.pdf
[27] https://infoscience.epfl.ch/record/175537/files/2069.pdf
[28] https://e-collection.library.ethz.ch/eserv.php?pid=eth:7684&dsID=eth-7684-01.pdf
[29] http://in.mathworks.com/help/vision/ref/detectmserfeatures.html
[30] http://www.cs.ubc.ca/~lowe/papers/ijcv04.pdf
[31] Dwarakesh T P, S Ananda Subramaniam, T Sree Sharmila, "Vacant Seat Detection System using Adaboost and Camshift" in International Journal of Electrical and Computing Engineering, vol. 1, no. 3, pp. 41518, April 2015

978-1-5090-3716-2/17/$31.00 ©2017 IEEE

View publication stats

You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5834)
