Professional Documents

Culture Documents

Source Code For Logistic Regression and Dijkstra's Algorithm

Source Code For Logistic Regression and Dijkstra's Algorithm

Uploaded by

Archita GogoiOriginal Description:

Original Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Source Code For Logistic Regression and Dijkstra's Algorithm

Source Code For Logistic Regression and Dijkstra's Algorithm

Uploaded by

Archita GogoiCopyright:

Available Formats

In [57]: # importing pandas library and data file in csv format

In [58]: import pandas as pd

In [59]: df = pd.read_csv("DATA1.csv",skiprows=1)

df

Out[59]: Building Monday Tuesday Wednesday Thursday Friday Saturday Sunday

0 A 91.0 95.0 97.0 99.0 90.0 95.0 54.0

1 B 234.0 281.0 293.0 280.0 269.0 165.0 34.0

2 C 156.0 149.0 174.0 167.0 146.0 61.0 8.0

3 D 81.0 120.0 110.0 91.0 95.0 68.0 8.0

4 E 252.0 231.0 287.0 259.0 273.0 226.0 6.0

5 F 97.0 117.0 134.0 117.0 118.0 74.0 1.0

6 G 21.0 21.0 21.0 21.0 21.0 21.0 0.0

7 H 7.0 8.0 7.0 7.0 7.0 8.0 4.0

8 I 62.0 56.0 48.0 42.0 42.0 44.0 0.0

9 J 2.0 0.0 0.0 2.0 2.0 0.0 0.0

10 K 23.0 38.0 30.0 27.0 25.0 15.0 0.0

11 L 4.0 3.0 0.0 0.0 0.0 0.0 0.0

12 M 14.0 24.0 19.0 29.0 23.0 14.0 18.0

13 NaN NaN NaN NaN NaN NaN NaN NaN

14 waste collected or not collected : collected (... NaN NaN NaN NaN NaN NaN NaN

15 A 1.0 1.0 1.0 1.0 1.0 1.0 0.0

16 B 1.0 1.0 1.0 1.0 1.0 1.0 0.0

17 C 1.0 1.0 1.0 1.0 1.0 1.0 0.0

18 D 1.0 1.0 1.0 1.0 1.0 1.0 0.0

19 E 1.0 1.0 1.0 1.0 1.0 1.0 0.0

20 F 0.0 0.0 1.0 1.0 1.0 1.0 0.0

21 G 0.0 0.0 0.0 0.0 0.0 0.0 0.0

22 H 0.0 0.0 0.0 0.0 0.0 0.0 0.0

23 I 1.0 0.0 0.0 0.0 0.0 0.0 0.0

24 J 0.0 0.0 0.0 0.0 0.0 0.0 0.0

25 K 0.0 0.0 0.0 0.0 0.0 0.0 0.0

26 L 0.0 0.0 0.0 0.0 0.0 0.0 0.0

27 M 0.0 0.0 0.0 0.0 0.0 0.0 0.0

In [60]: # rearranging the data

In [61]: class_schedule = df.iloc[0:13]

class_schedule.set_index('Building',inplace=True)

class_schedule

Out[61]: Monday Tuesday Wednesday Thursday Friday Saturday Sunday

Building

A 91.0 95.0 97.0 99.0 90.0 95.0 54.0

B 234.0 281.0 293.0 280.0 269.0 165.0 34.0

C 156.0 149.0 174.0 167.0 146.0 61.0 8.0

D 81.0 120.0 110.0 91.0 95.0 68.0 8.0

E 252.0 231.0 287.0 259.0 273.0 226.0 6.0

F 97.0 117.0 134.0 117.0 118.0 74.0 1.0

G 21.0 21.0 21.0 21.0 21.0 21.0 0.0

H 7.0 8.0 7.0 7.0 7.0 8.0 4.0

I 62.0 56.0 48.0 42.0 42.0 44.0 0.0

J 2.0 0.0 0.0 2.0 2.0 0.0 0.0

K 23.0 38.0 30.0 27.0 25.0 15.0 0.0

L 4.0 3.0 0.0 0.0 0.0 0.0 0.0

M 14.0 24.0 19.0 29.0 23.0 14.0 18.0

In [62]: waste_info = df.iloc[15:28]

waste_info.set_index("Building",inplace=True)

waste_info

Out[62]: Monday Tuesday Wednesday Thursday Friday Saturday Sunday

Building

A 1.0 1.0 1.0 1.0 1.0 1.0 0.0

B 1.0 1.0 1.0 1.0 1.0 1.0 0.0

C 1.0 1.0 1.0 1.0 1.0 1.0 0.0

D 1.0 1.0 1.0 1.0 1.0 1.0 0.0

E 1.0 1.0 1.0 1.0 1.0 1.0 0.0

F 0.0 0.0 1.0 1.0 1.0 1.0 0.0

G 0.0 0.0 0.0 0.0 0.0 0.0 0.0

H 0.0 0.0 0.0 0.0 0.0 0.0 0.0

I 1.0 0.0 0.0 0.0 0.0 0.0 0.0

J 0.0 0.0 0.0 0.0 0.0 0.0 0.0

K 0.0 0.0 0.0 0.0 0.0 0.0 0.0

L 0.0 0.0 0.0 0.0 0.0 0.0 0.0

M 0.0 0.0 0.0 0.0 0.0 0.0 0.0

In [63]: # creating a new dataframe with new set of columns

In [64]: days = list(class_schedule.columns)

buildings = list(class_schedule.index)

print(days,buildings)

['Monday', 'Tuesday', 'Wednesday', 'Thursday', 'Friday', 'Saturday', 'Sunday'] ['A', 'B', 'C', 'D', 'E', 'F', 'G', 'H', 'I', 'J', 'K', 'L', 'M']

In [65]: new_cols = days + buildings + ["classes","waste_collected"]

new_cols

Out[65]: ['Monday',

'Tuesday',

'Wednesday',

'Thursday',

'Friday',

'Saturday',

'Sunday',

'A',

'B',

'C',

'D',

'E',

'F',

'G',

'H',

'I',

'J',

'K',

'L',

'M',

'classes',

'waste_collected']

In [66]: new_df = pd.DataFrame(columns=new_cols)

new_df

Out[66]: Monday Tuesday Wednesday Thursday Friday Saturday Sunday A B C ... F G H I J K L M classes waste_collected

0 rows × 22 columns

In [67]: # 'one hot encoding' for assigning numeric values to string or character inputs

In [68]: for day in days:

for building in buildings:

row=[0]*22

dI = new_cols.index(day)

row[dI] = 1

bI = new_cols.index(building)

row[bI] = 1

row[-2] = class_schedule[day][building]

row[-1] = waste_info[day][building]

series_row = pd.Series(row, index=new_df.columns )

new_df = new_df.append(series_row,ignore_index=True)

new_df

Out[68]: Monday Tuesday Wednesday Thursday Friday Saturday Sunday A B C ... F G H I J K L M classes waste_collected

0 1.0 0.0 0.0 0.0 0.0 0.0 0.0 1.0 0.0 0.0 ... 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 91.0 1.0

1 1.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 1.0 0.0 ... 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 234.0 1.0

2 1.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 1.0 ... 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 156.0 1.0

3 1.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 81.0 1.0

4 1.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 252.0 1.0

... ... ... ... ... ... ... ... ... ... ... ... ... ... ... ... ... ... ... ... ... ...

86 0.0 0.0 0.0 0.0 0.0 0.0 1.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 1.0 0.0 0.0 0.0 0.0 0.0 0.0

87 0.0 0.0 0.0 0.0 0.0 0.0 1.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0 1.0 0.0 0.0 0.0 0.0 0.0

88 0.0 0.0 0.0 0.0 0.0 0.0 1.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0 0.0 1.0 0.0 0.0 0.0 0.0

89 0.0 0.0 0.0 0.0 0.0 0.0 1.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0 0.0 0.0 1.0 0.0 0.0 0.0

90 0.0 0.0 0.0 0.0 0.0 0.0 1.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0 0.0 0.0 0.0 1.0 18.0 0.0

91 rows × 22 columns

In [69]: # performing Logistic Regression with ScikitLearn

In [70]: from sklearn.linear_model import LogisticRegressionCV

In [71]: # taking 'waste_collected' as 'y' i.e the dependent variable (to be predicted) and all other columns as the independent variable 'x.'

In [72]: y = new_df['waste_collected']

X = new_df.drop(['waste_collected'],axis=1)

In [73]: my_model = LogisticRegressionCV(cv=5, random_state=0).fit(X, y)

result = my_model.predict_proba(X)

C:\Users\tapin\anaconda3\lib\site-packages\sklearn\linear_model\_logistic.py:762: ConvergenceWarning: lbfgs failed to converge (status=1):

STOP: TOTAL NO. of ITERATIONS REACHED LIMIT.

Increase the number of iterations (max_iter) or scale the data as shown in:

https://scikit-learn.org/stable/modules/preprocessing.html

Please also refer to the documentation for alternative solver options:

https://scikit-learn.org/stable/modules/linear_model.html#logistic-regression

n_iter_i = _check_optimize_result(

C:\Users\tapin\anaconda3\lib\site-packages\sklearn\linear_model\_logistic.py:762: ConvergenceWarning: lbfgs failed to converge (status=1):

STOP: TOTAL NO. of ITERATIONS REACHED LIMIT.

Increase the number of iterations (max_iter) or scale the data as shown in:

https://scikit-learn.org/stable/modules/preprocessing.html

Please also refer to the documentation for alternative solver options:

https://scikit-learn.org/stable/modules/linear_model.html#logistic-regression

n_iter_i = _check_optimize_result(

C:\Users\tapin\anaconda3\lib\site-packages\sklearn\linear_model\_logistic.py:762: ConvergenceWarning: lbfgs failed to converge (status=1):

STOP: TOTAL NO. of ITERATIONS REACHED LIMIT.

Increase the number of iterations (max_iter) or scale the data as shown in:

https://scikit-learn.org/stable/modules/preprocessing.html

Please also refer to the documentation for alternative solver options:

https://scikit-learn.org/stable/modules/linear_model.html#logistic-regression

n_iter_i = _check_optimize_result(

C:\Users\tapin\anaconda3\lib\site-packages\sklearn\linear_model\_logistic.py:762: ConvergenceWarning: lbfgs failed to converge (status=1):

STOP: TOTAL NO. of ITERATIONS REACHED LIMIT.

Increase the number of iterations (max_iter) or scale the data as shown in:

https://scikit-learn.org/stable/modules/preprocessing.html

Please also refer to the documentation for alternative solver options:

https://scikit-learn.org/stable/modules/linear_model.html#logistic-regression

n_iter_i = _check_optimize_result(

C:\Users\tapin\anaconda3\lib\site-packages\sklearn\linear_model\_logistic.py:762: ConvergenceWarning: lbfgs failed to converge (status=1):

STOP: TOTAL NO. of ITERATIONS REACHED LIMIT.

Increase the number of iterations (max_iter) or scale the data as shown in:

https://scikit-learn.org/stable/modules/preprocessing.html

Please also refer to the documentation for alternative solver options:

https://scikit-learn.org/stable/modules/linear_model.html#logistic-regression

n_iter_i = _check_optimize_result(

C:\Users\tapin\anaconda3\lib\site-packages\sklearn\linear_model\_logistic.py:762: ConvergenceWarning: lbfgs failed to converge (status=1):

STOP: TOTAL NO. of ITERATIONS REACHED LIMIT.

Increase the number of iterations (max_iter) or scale the data as shown in:

https://scikit-learn.org/stable/modules/preprocessing.html

Please also refer to the documentation for alternative solver options:

https://scikit-learn.org/stable/modules/linear_model.html#logistic-regression

n_iter_i = _check_optimize_result(

C:\Users\tapin\anaconda3\lib\site-packages\sklearn\linear_model\_logistic.py:762: ConvergenceWarning: lbfgs failed to converge (status=1):

STOP: TOTAL NO. of ITERATIONS REACHED LIMIT.

Increase the number of iterations (max_iter) or scale the data as shown in:

https://scikit-learn.org/stable/modules/preprocessing.html

Please also refer to the documentation for alternative solver options:

https://scikit-learn.org/stable/modules/linear_model.html#logistic-regression

n_iter_i = _check_optimize_result(

C:\Users\tapin\anaconda3\lib\site-packages\sklearn\linear_model\_logistic.py:762: ConvergenceWarning: lbfgs failed to converge (status=1):

STOP: TOTAL NO. of ITERATIONS REACHED LIMIT.

Increase the number of iterations (max_iter) or scale the data as shown in:

https://scikit-learn.org/stable/modules/preprocessing.html

Please also refer to the documentation for alternative solver options:

https://scikit-learn.org/stable/modules/linear_model.html#logistic-regression

n_iter_i = _check_optimize_result(

C:\Users\tapin\anaconda3\lib\site-packages\sklearn\linear_model\_logistic.py:762: ConvergenceWarning: lbfgs failed to converge (status=1):

STOP: TOTAL NO. of ITERATIONS REACHED LIMIT.

Increase the number of iterations (max_iter) or scale the data as shown in:

https://scikit-learn.org/stable/modules/preprocessing.html

Please also refer to the documentation for alternative solver options:

https://scikit-learn.org/stable/modules/linear_model.html#logistic-regression

n_iter_i = _check_optimize_result(

C:\Users\tapin\anaconda3\lib\site-packages\sklearn\linear_model\_logistic.py:762: ConvergenceWarning: lbfgs failed to converge (status=1):

STOP: TOTAL NO. of ITERATIONS REACHED LIMIT.

Increase the number of iterations (max_iter) or scale the data as shown in:

https://scikit-learn.org/stable/modules/preprocessing.html

Please also refer to the documentation for alternative solver options:

https://scikit-learn.org/stable/modules/linear_model.html#logistic-regression

n_iter_i = _check_optimize_result(

C:\Users\tapin\anaconda3\lib\site-packages\sklearn\linear_model\_logistic.py:762: ConvergenceWarning: lbfgs failed to converge (status=1):

STOP: TOTAL NO. of ITERATIONS REACHED LIMIT.

Increase the number of iterations (max_iter) or scale the data as shown in:

https://scikit-learn.org/stable/modules/preprocessing.html

Please also refer to the documentation for alternative solver options:

https://scikit-learn.org/stable/modules/linear_model.html#logistic-regression

n_iter_i = _check_optimize_result(

C:\Users\tapin\anaconda3\lib\site-packages\sklearn\linear_model\_logistic.py:762: ConvergenceWarning: lbfgs failed to converge (status=1):

STOP: TOTAL NO. of ITERATIONS REACHED LIMIT.

Increase the number of iterations (max_iter) or scale the data as shown in:

https://scikit-learn.org/stable/modules/preprocessing.html

Please also refer to the documentation for alternative solver options:

https://scikit-learn.org/stable/modules/linear_model.html#logistic-regression

n_iter_i = _check_optimize_result(

C:\Users\tapin\anaconda3\lib\site-packages\sklearn\linear_model\_logistic.py:762: ConvergenceWarning: lbfgs failed to converge (status=1):

STOP: TOTAL NO. of ITERATIONS REACHED LIMIT.

Increase the number of iterations (max_iter) or scale the data as shown in:

https://scikit-learn.org/stable/modules/preprocessing.html

Please also refer to the documentation for alternative solver options:

https://scikit-learn.org/stable/modules/linear_model.html#logistic-regression

n_iter_i = _check_optimize_result(

C:\Users\tapin\anaconda3\lib\site-packages\sklearn\linear_model\_logistic.py:762: ConvergenceWarning: lbfgs failed to converge (status=1):

STOP: TOTAL NO. of ITERATIONS REACHED LIMIT.

Increase the number of iterations (max_iter) or scale the data as shown in:

https://scikit-learn.org/stable/modules/preprocessing.html

Please also refer to the documentation for alternative solver options:

https://scikit-learn.org/stable/modules/linear_model.html#logistic-regression

n_iter_i = _check_optimize_result(

C:\Users\tapin\anaconda3\lib\site-packages\sklearn\linear_model\_logistic.py:762: ConvergenceWarning: lbfgs failed to converge (status=1):

STOP: TOTAL NO. of ITERATIONS REACHED LIMIT.

Increase the number of iterations (max_iter) or scale the data as shown in:

https://scikit-learn.org/stable/modules/preprocessing.html

Please also refer to the documentation for alternative solver options:

https://scikit-learn.org/stable/modules/linear_model.html#logistic-regression

n_iter_i = _check_optimize_result(

C:\Users\tapin\anaconda3\lib\site-packages\sklearn\linear_model\_logistic.py:762: ConvergenceWarning: lbfgs failed to converge (status=1):

STOP: TOTAL NO. of ITERATIONS REACHED LIMIT.

Increase the number of iterations (max_iter) or scale the data as shown in:

https://scikit-learn.org/stable/modules/preprocessing.html

Please also refer to the documentation for alternative solver options:

https://scikit-learn.org/stable/modules/linear_model.html#logistic-regression

n_iter_i = _check_optimize_result(

In [74]: # no. of classes into which the output is classified and their probabilities

In [75]: print(my_model.classes_)

result

[0. 1.]

Out[75]: array([[1.57815565e-01, 8.42184435e-01],

[3.18800532e-06, 9.99996812e-01],

[1.26868415e-03, 9.98731316e-01],

[2.86993656e-01, 7.13006344e-01],

[7.99583728e-07, 9.99999200e-01],

[1.06798461e-01, 8.93201539e-01],

[9.76032728e-01, 2.39672721e-02],

[9.91688138e-01, 8.31186239e-03],

[6.35804123e-01, 3.64195877e-01],

[9.94323294e-01, 5.67670566e-03],

[9.72179252e-01, 2.78207480e-02],

[9.93386831e-01, 6.61316874e-03],

[9.85862147e-01, 1.41378533e-02],

[1.21987791e-01, 8.78012209e-01],

[8.69018382e-08, 9.99999913e-01],

[2.18780436e-03, 9.97812196e-01],

[1.98820681e-02, 9.80117932e-01],

[4.04561838e-06, 9.99995954e-01],

[2.52800024e-02, 9.74719998e-01],

[9.76221656e-01, 2.37783441e-02],

[9.91102201e-01, 8.89779874e-03],

[7.36182182e-01, 2.63817818e-01],

[9.95166967e-01, 4.83303258e-03],

[9.17555227e-01, 8.24447728e-02],

[9.93922003e-01, 6.07799708e-03],

[9.70245840e-01, 2.97541595e-02],

[1.05820160e-01, 8.94179840e-01],

[3.43349987e-08, 9.99999966e-01],

[3.19024161e-04, 9.99680976e-01],

[4.16282878e-02, 9.58371712e-01],

[5.44212012e-08, 9.99999946e-01],

[6.93066679e-03, 9.93069333e-01],

[9.76063558e-01, 2.39364425e-02],

[9.91699001e-01, 8.30099938e-03],

[8.36714156e-01, 1.63285844e-01],

[9.95134206e-01, 4.86579448e-03],

[9.53351615e-01, 4.66483851e-02],

[9.95134222e-01, 4.86577785e-03],

[9.79404772e-01, 2.05952283e-02],

[9.20581516e-02, 9.07941848e-01],

[9.31166202e-08, 9.99999907e-01],

[5.45561274e-04, 9.99454439e-01],

[1.57379628e-01, 8.42620372e-01],

[4.67183667e-07, 9.99999533e-01],

[2.50898522e-02, 9.74910148e-01],

[9.76041201e-01, 2.39587990e-02],

[9.91691123e-01, 8.30887680e-03],

[8.90312425e-01, 1.09687575e-01],

[9.94325339e-01, 5.67466119e-03],

[9.62559588e-01, 3.74404117e-02],

[9.95129589e-01, 4.87041134e-03],

[9.56592342e-01, 4.34076584e-02],

[1.68295711e-01, 8.31704289e-01],

[2.16696040e-07, 9.99999783e-01],

[2.73109190e-03, 9.97268908e-01],

[1.20734381e-01, 8.79265619e-01],

[1.59317110e-07, 9.99999841e-01],

[2.32695744e-02, 9.76730426e-01],

[9.76032702e-01, 2.39672984e-02],

[9.91688128e-01, 8.31187163e-03],

[8.90276933e-01, 1.09723067e-01],

[9.94323288e-01, 5.67671198e-03],

[9.67708269e-01, 3.22917311e-02],

[9.95127827e-01, 4.87217291e-03],

[9.72166606e-01, 2.78333943e-02],

[1.20231749e-01, 8.79768251e-01],

[6.33252930e-04, 9.99366747e-01],

[6.50420307e-01, 3.49579693e-01],

[5.20045116e-01, 4.79954884e-01],

[5.84290789e-06, 9.99994157e-01],

[4.09646391e-01, 5.90353609e-01],

[9.75835668e-01, 2.41643318e-02],

[9.90955528e-01, 9.04447231e-03],

[8.73420462e-01, 1.26579538e-01],

[9.95086966e-01, 4.91303367e-03],

[9.84631053e-01, 1.53689471e-02],

[9.95086983e-01, 4.91301687e-03],

[9.85744725e-01, 1.42552755e-02],

[7.63255733e-01, 2.36744267e-01],

[9.37645265e-01, 6.23547353e-02],

[9.91018466e-01, 8.98153396e-03],

[9.90992328e-01, 9.00767225e-03],

[9.92317771e-01, 7.68222921e-03],

[9.94798656e-01, 5.20134415e-03],

[9.95145057e-01, 4.85494283e-03],

[9.93406604e-01, 6.59339645e-03],

[9.95144870e-01, 4.85512957e-03],

[9.95141809e-01, 4.85819143e-03],

[9.95147901e-01, 4.85209900e-03],

[9.95141825e-01, 4.85817482e-03],

[9.80927963e-01, 1.90720373e-02]])

In [76]: # printing the probability output in the tabular form

In [77]: waste_not_collected = pd.DataFrame(columns = ['Day','A', 'B', 'C', 'D', 'E', 'F', 'G', 'H', 'I', 'J', 'K', 'L', 'M'])

waste_collected = pd.DataFrame(columns = ['Day','A', 'B', 'C', 'D', 'E', 'F', 'G', 'H', 'I', 'J', 'K', 'L', 'M'])

i=0

for day in days:

row_nc = []

row_c = []

row_nc.append(day)

row_c.append(day)

for building in buildings:

i+=1

row_nc.append(round(result[i-1][0],3))

row_c.append(round(result[i-1][1],3))

series_nc = pd.Series(row_nc, index=waste_not_collected.columns )

waste_not_collected = waste_not_collected.append(series_nc,ignore_index=True)

series_c = pd.Series(row_c, index=waste_collected.columns )

waste_collected = waste_collected.append(series_c,ignore_index=True)

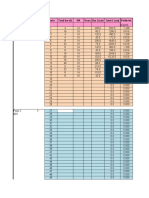

In [78]: waste_not_collected

Out[78]: Day A B C D E F G H I J K L M

0 Monday 0.158 0.000 0.001 0.287 0.000 0.107 0.976 0.992 0.636 0.994 0.972 0.993 0.986

1 Tuesday 0.122 0.000 0.002 0.020 0.000 0.025 0.976 0.991 0.736 0.995 0.918 0.994 0.970

2 Wednesday 0.106 0.000 0.000 0.042 0.000 0.007 0.976 0.992 0.837 0.995 0.953 0.995 0.979

3 Thursday 0.092 0.000 0.001 0.157 0.000 0.025 0.976 0.992 0.890 0.994 0.963 0.995 0.957

4 Friday 0.168 0.000 0.003 0.121 0.000 0.023 0.976 0.992 0.890 0.994 0.968 0.995 0.972

5 Saturday 0.120 0.001 0.650 0.520 0.000 0.410 0.976 0.991 0.873 0.995 0.985 0.995 0.986

6 Sunday 0.763 0.938 0.991 0.991 0.992 0.995 0.995 0.993 0.995 0.995 0.995 0.995 0.981

In [79]: waste_collected

Out[79]: Day A B C D E F G H I J K L M

0 Monday 0.842 1.000 0.999 0.713 1.000 0.893 0.024 0.008 0.364 0.006 0.028 0.007 0.014

1 Tuesday 0.878 1.000 0.998 0.980 1.000 0.975 0.024 0.009 0.264 0.005 0.082 0.006 0.030

2 Wednesday 0.894 1.000 1.000 0.958 1.000 0.993 0.024 0.008 0.163 0.005 0.047 0.005 0.021

3 Thursday 0.908 1.000 0.999 0.843 1.000 0.975 0.024 0.008 0.110 0.006 0.037 0.005 0.043

4 Friday 0.832 1.000 0.997 0.879 1.000 0.977 0.024 0.008 0.110 0.006 0.032 0.005 0.028

5 Saturday 0.880 0.999 0.350 0.480 1.000 0.590 0.024 0.009 0.127 0.005 0.015 0.005 0.014

6 Sunday 0.237 0.062 0.009 0.009 0.008 0.005 0.005 0.007 0.005 0.005 0.005 0.005 0.019

In [80]: # converting the output table into csv format and downloading

In [81]: waste_not_collected.to_csv("probability (waste not collected).csv",index=False)

In [82]: waste_collected.to_csv("probability (waste collected).csv",index=False)

In [83]: # predicted output

In [84]: pred_result = my_model.predict(X)

pred_result

Out[84]: array([1., 1., 1., 1., 1., 1., 0., 0., 0., 0., 0., 0., 0., 1., 1., 1., 1.,

1., 1., 0., 0., 0., 0., 0., 0., 0., 1., 1., 1., 1., 1., 1., 0., 0.,

0., 0., 0., 0., 0., 1., 1., 1., 1., 1., 1., 0., 0., 0., 0., 0., 0.,

0., 1., 1., 1., 1., 1., 1., 0., 0., 0., 0., 0., 0., 0., 1., 1., 0.,

0., 1., 1., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0.,

0., 0., 0., 0., 0., 0.])

In [85]: actual_output = pd.DataFrame(columns = ['Day','A', 'B', 'C', 'D', 'E', 'F', 'G', 'H', 'I', 'J', 'K', 'L', 'M'])

i=0

for day in days:

row=[]

row.append(day)

for building in buildings:

i+=1

row.append(pred_result[i-1])

series_ao = pd.Series(row, index=actual_output.columns )

actual_output = actual_output.append(series_ao,ignore_index=True)

actual_output

Out[85]: Day A B C D E F G H I J K L M

0 Monday 1.0 1.0 1.0 1.0 1.0 1.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

1 Tuesday 1.0 1.0 1.0 1.0 1.0 1.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

2 Wednesday 1.0 1.0 1.0 1.0 1.0 1.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

3 Thursday 1.0 1.0 1.0 1.0 1.0 1.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

4 Friday 1.0 1.0 1.0 1.0 1.0 1.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

5 Saturday 1.0 1.0 0.0 0.0 1.0 1.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

6 Sunday 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

In [86]: actual_output.to_csv("predictd result.csv",index=False)

In [87]: # classification report of the model which measures the quality of predictions

In [88]: from sklearn.metrics import classification_report

report=classification_report(y,pred_result)

print(report)

precision recall f1-score support

0.0 0.95 0.96 0.96 56

1.0 0.94 0.91 0.93 35

accuracy 0.95 91

macro avg 0.94 0.94 0.94 91

weighted avg 0.94 0.95 0.94 91

In [89]: # plotting the Receiver Operating Curve (ROC Curve)

In [90]: from sklearn.metrics import roc_auc_score

from sklearn.metrics import roc_curve,auc

required_curve=roc_auc_score(y,pred_result)

fpr,tpr,thresholds=roc_curve(y,pred_result,pos_label=1)

In [91]: import matplotlib.pyplot as plt

plt.figure()

plt.plot(fpr,tpr,color='darkorange',lw=1,label='ROC curve(area = %0.2f)'% required_curve)

plt.plot([0, 1], [0, 1], color='navy', lw=1, linestyle='--')

plt.xlim([0.0, 1.0])

plt.ylim([0.0, 1.0])

plt.xlabel('False Positive Rate')

plt.ylabel('True Positive Rate')

plt.title('Receiver Operating Characteristic Curve')

plt.legend(loc="lower right")

plt.show()

In [92]: actual_output=actual_output.set_index(['Day'])

actual_output

Out[92]: A B C D E F G H I J K L M

Day

Monday 1.0 1.0 1.0 1.0 1.0 1.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

Tuesday 1.0 1.0 1.0 1.0 1.0 1.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

Wednesday 1.0 1.0 1.0 1.0 1.0 1.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

Thursday 1.0 1.0 1.0 1.0 1.0 1.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

Friday 1.0 1.0 1.0 1.0 1.0 1.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

Saturday 1.0 1.0 0.0 0.0 1.0 1.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

Sunday 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

In [93]: actual_output=actual_output.transpose()

actual_output

Out[93]: Day Monday Tuesday Wednesday Thursday Friday Saturday Sunday

A 1.0 1.0 1.0 1.0 1.0 1.0 0.0

B 1.0 1.0 1.0 1.0 1.0 1.0 0.0

C 1.0 1.0 1.0 1.0 1.0 0.0 0.0

D 1.0 1.0 1.0 1.0 1.0 0.0 0.0

E 1.0 1.0 1.0 1.0 1.0 1.0 0.0

F 1.0 1.0 1.0 1.0 1.0 1.0 0.0

G 0.0 0.0 0.0 0.0 0.0 0.0 0.0

H 0.0 0.0 0.0 0.0 0.0 0.0 0.0

I 0.0 0.0 0.0 0.0 0.0 0.0 0.0

J 0.0 0.0 0.0 0.0 0.0 0.0 0.0

K 0.0 0.0 0.0 0.0 0.0 0.0 0.0

L 0.0 0.0 0.0 0.0 0.0 0.0 0.0

M 0.0 0.0 0.0 0.0 0.0 0.0 0.0

In [94]: actual_output.insert(0,'Buildings',['A','B','C','D','E','F','G','H','I','J','K','L','M'],True)

actual_output

Out[94]: Day Buildings Monday Tuesday Wednesday Thursday Friday Saturday Sunday

A A 1.0 1.0 1.0 1.0 1.0 1.0 0.0

B B 1.0 1.0 1.0 1.0 1.0 1.0 0.0

C C 1.0 1.0 1.0 1.0 1.0 0.0 0.0

D D 1.0 1.0 1.0 1.0 1.0 0.0 0.0

E E 1.0 1.0 1.0 1.0 1.0 1.0 0.0

F F 1.0 1.0 1.0 1.0 1.0 1.0 0.0

G G 0.0 0.0 0.0 0.0 0.0 0.0 0.0

H H 0.0 0.0 0.0 0.0 0.0 0.0 0.0

I I 0.0 0.0 0.0 0.0 0.0 0.0 0.0

J J 0.0 0.0 0.0 0.0 0.0 0.0 0.0

K K 0.0 0.0 0.0 0.0 0.0 0.0 0.0

L L 0.0 0.0 0.0 0.0 0.0 0.0 0.0

M M 0.0 0.0 0.0 0.0 0.0 0.0 0.0

In [95]: actual_output=actual_output.set_index(['Buildings'])

actual_output

Out[95]: Day Monday Tuesday Wednesday Thursday Friday Saturday Sunday

Buildings

A 1.0 1.0 1.0 1.0 1.0 1.0 0.0

B 1.0 1.0 1.0 1.0 1.0 1.0 0.0

C 1.0 1.0 1.0 1.0 1.0 0.0 0.0

D 1.0 1.0 1.0 1.0 1.0 0.0 0.0

E 1.0 1.0 1.0 1.0 1.0 1.0 0.0

F 1.0 1.0 1.0 1.0 1.0 1.0 0.0

G 0.0 0.0 0.0 0.0 0.0 0.0 0.0

H 0.0 0.0 0.0 0.0 0.0 0.0 0.0

I 0.0 0.0 0.0 0.0 0.0 0.0 0.0

J 0.0 0.0 0.0 0.0 0.0 0.0 0.0

K 0.0 0.0 0.0 0.0 0.0 0.0 0.0

L 0.0 0.0 0.0 0.0 0.0 0.0 0.0

M 0.0 0.0 0.0 0.0 0.0 0.0 0.0

In [96]: Index_label1 = actual_output.query('Monday==1').index.tolist()

print('Monday =',Index_label1)

Index_label2 = actual_output.query('Tuesday==1').index.tolist()

print('Tuesday =',Index_label2)

Index_label3 = actual_output.query('Wednesday==1').index.tolist()

print('Wednesday =',Index_label3)

Index_label4 = actual_output.query('Thursday==1').index.tolist()

print('Thursday =',Index_label4)

Index_label5 = actual_output.query('Friday==1').index.tolist()

print('Friday =',Index_label5)

Index_label6 = actual_output.query('Saturday==1').index.tolist()

print('Saturday =',Index_label6)

Index_label7 = actual_output.query('Sunday==1').index.tolist()

print('Sunday =',Index_label7)

Monday = ['A', 'B', 'C', 'D', 'E', 'F']

Tuesday = ['A', 'B', 'C', 'D', 'E', 'F']

Wednesday = ['A', 'B', 'C', 'D', 'E', 'F']

Thursday = ['A', 'B', 'C', 'D', 'E', 'F']

Friday = ['A', 'B', 'C', 'D', 'E', 'F']

Saturday = ['A', 'B', 'E', 'F']

Sunday = []

In [97]: buildings_to_clean=actual_output['Monday'].values.sum()

print(buildings_to_clean)

6.0

In [98]: import math

from random import *

import random

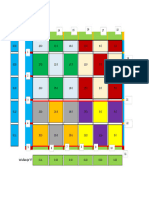

In [168… # generating nodes randomly

# since there are 13 buildings or nodes

num = 13

# radius of the circle (range of the wifi module)

circle_r = 50

# centre of the circle (x, y)

circle_x = 0

circle_y = 0

xy_list=[]

for i in range(num):

t = 2 * math.pi * random.random()

r = circle_r * math.sqrt(random.random())

x = r * math.cos(t) + circle_x

y = r * math.sin(t) + circle_y

xy_list.append([x,y])

circle_r = circle_r

# coordinate of the generated point is now the

# new centre of the circle

circle_x = x

circle_y = y

# connection between the nodes have been arbitrarily fixed

# however, the edges (distances) between them varies randomly.

xi=[xy_list[0][0], xy_list[1][0], xy_list[2][0], xy_list[3][0], xy_list[4][0], xy_list[5][0], xy_list[6][0],

xy_list[7][0], xy_list[8][0], xy_list[9][0], xy_list[10][0], xy_list[11][0], xy_list[12][0], xy_list[0][0],

xy_list[2][0], xy_list[4][0], xy_list[1][0], xy_list[3][0], xy_list[6][0], xy_list[9][0], xy_list[12][0],

xy_list[8][0], xy_list[5][0], xy_list[11][0], xy_list[7][0]]

yi=[xy_list[0][1], xy_list[1][1], xy_list[2][1], xy_list[3][1], xy_list[4][1], xy_list[5][1], xy_list[6][1],

xy_list[7][1], xy_list[8][1], xy_list[9][1], xy_list[10][1], xy_list[11][1], xy_list[12][1], xy_list[0][1],

xy_list[2][1], xy_list[4][1], xy_list[1][1], xy_list[3][1], xy_list[6][1], xy_list[9][1], xy_list[12][1],

xy_list[8][1], xy_list[5][1], xy_list[11][1], xy_list[7][1]]

n=['A','B','C','D','E','F','G','H','I','J','K','L','M']

fig, ax = plt.subplots()

ax.scatter(xi, yi,color='orange')

ax.plot(xi,yi,linestyle='dashed')

ax.set_title('Random Generated Graph')

for i, txt in enumerate(n):

ax.annotate(txt, (xi[i], yi[i]))

In [169… data={'Points':['A','B','C','D','E','F','G','H','I','J','K','L','M'],

'Coordinates':xy_list}

df=pd.DataFrame(data)

df

Out[169… Points Coordinates

0 A [-22.811352766369502, -42.9051483798065]

1 B [-16.548585957869044, -64.99301949290735]

2 C [-2.7135371843524982, -111.84658944434184]

3 D [14.504731245459316, -71.85514523206425]

4 E [52.616349152583524, -88.24676662203245]

5 F [83.45028968379334, -89.17275941357737]

6 G [112.04231553326235, -69.67377414401894]

7 H [138.77444345907816, -87.58117115205548]

8 I [104.70398680301169, -109.18049652906625]

9 J [65.50935819552903, -115.87765861437762]

10 K [19.718736052297082, -108.9197063224358]

11 L [47.22360087625079, -83.45634507275099]

12 M [73.86999598965316, -61.226726940341635]

In [170… # find the distance between the connected nodes

AB=math.sqrt( ((xy_list[0][0]-xy_list[1][0])**2)+((xy_list[0][1]-xy_list[1][1])**2) )

BC=math.sqrt( ((xy_list[1][0]-xy_list[2][0])**2)+((xy_list[1][1]-xy_list[2][1])**2) )

CD=math.sqrt( ((xy_list[2][0]-xy_list[3][0])**2)+((xy_list[2][1]-xy_list[3][1])**2) )

DE=math.sqrt( ((xy_list[3][0]-xy_list[4][0])**2)+((xy_list[3][1]-xy_list[4][1])**2) )

EF=math.sqrt( ((xy_list[4][0]-xy_list[5][0])**2)+((xy_list[4][1]-xy_list[5][1])**2) )

FG=math.sqrt( ((xy_list[5][0]-xy_list[6][0])**2)+((xy_list[5][1]-xy_list[6][1])**2) )

GH=math.sqrt( ((xy_list[6][0]-xy_list[7][0])**2)+((xy_list[6][1]-xy_list[7][1])**2) )

HI=math.sqrt( ((xy_list[7][0]-xy_list[8][0])**2)+((xy_list[7][1]-xy_list[8][1])**2) )

IJ=math.sqrt( ((xy_list[8][0]-xy_list[9][0])**2)+((xy_list[8][1]-xy_list[9][1])**2) )

JK=math.sqrt( ((xy_list[9][0]-xy_list[10][0])**2)+((xy_list[9][1]-xy_list[10][1])**2) )

KL=math.sqrt( ((xy_list[10][0]-xy_list[11][0])**2)+((xy_list[10][1]-xy_list[11][1])**2) )

LM=math.sqrt( ((xy_list[11][0]-xy_list[12][0])**2)+((xy_list[11][1]-xy_list[12][1])**2) )

MA=math.sqrt( ((xy_list[12][0]-xy_list[0][0])**2)+((xy_list[12][1]-xy_list[0][1])**2) )

AC=math.sqrt( ((xy_list[0][0]-xy_list[2][0])**2)+((xy_list[0][1]-xy_list[2][1])**2) )

CE=math.sqrt( ((xy_list[2][0]-xy_list[4][0])**2)+((xy_list[2][1]-xy_list[4][1])**2) )

EB=math.sqrt( ((xy_list[4][0]-xy_list[1][0])**2)+((xy_list[4][1]-xy_list[1][1])**2) )

BD=math.sqrt( ((xy_list[1][0]-xy_list[3][0])**2)+((xy_list[1][1]-xy_list[3][1])**2) )

DG=math.sqrt( ((xy_list[3][0]-xy_list[6][0])**2)+((xy_list[3][1]-xy_list[6][1])**2) )

GJ=math.sqrt( ((xy_list[6][0]-xy_list[9][0])**2)+((xy_list[6][1]-xy_list[9][1])**2) )

JM=math.sqrt( ((xy_list[9][0]-xy_list[12][0])**2)+((xy_list[9][1]-xy_list[12][1])**2) )

MI=math.sqrt( ((xy_list[12][0]-xy_list[8][0])**2)+((xy_list[12][1]-xy_list[8][1])**2) )

IF=math.sqrt( ((xy_list[8][0]-xy_list[5][0])**2)+((xy_list[8][1]-xy_list[5][1])**2) )

FL=math.sqrt( ((xy_list[5][0]-xy_list[11][0])**2)+((xy_list[5][1]-xy_list[11][1])**2) )

LH=math.sqrt( ((xy_list[11][0]-xy_list[7][0])**2)+((xy_list[11][1]-xy_list[7][1])**2) )

Nodes=['AB','BC','CD','DE','EF','FG','GH','HI','IJ','JK','KL','LM','MA','AC','CE','EB','BD','DG','GJ','JM','MI','IF','FL','LH']

Distance=[AB,BC,CD,DE,EF,FG,GH,HI,IJ,JK,KL,LM,MA,AC,CE,EB,BD,DG,GH,JM,MI,IF,FL,LH]

table=pd.DataFrame()

table['Nodes']=Nodes

table['Distance']=Distance

table

Out[170… Nodes Distance

0 AB 22.958578

1 BC 48.853512

2 CD 43.540606

3 DE 41.487115

4 EF 30.847842

5 FG 34.608010

6 GH 32.175791

7 HI 40.340140

8 IJ 39.762682

9 JK 46.316241

10 KL 37.482000

11 LM 34.701388

12 MA 98.402050

13 AC 71.811172

14 CE 60.152705

15 EB 72.969343

16 BD 31.802473

17 DG 97.561974

18 GJ 32.175791

19 JM 55.286749

20 MI 57.011394

21 IF 29.189539

22 FL 36.674928

23 LH 91.643718

In [173… import sys

from heapq import heappop, heappush

# A class to store a graph edge

class Edge:

def __init__(self, source, dest, weight):

self.source = source

self.dest = dest

self.weight = weight

# A class to store a heap node

class Node:

def __init__(self, vertex, weight):

self.vertex = vertex

self.weight = weight

# Override the `__lt__()` function to make `Node` class work with a min-heap

def __lt__(self, other):

return self.weight < other.weight

# A class to represent a graph object

class Graph:

def __init__(self, edges, N):

# allocate memory for the adjacency list

self.adj = [[] for _ in range(N)]

# add edges to the undirected graph

for edge in edges:

self.adj[edge.source].append(edge)

def get_route(prev, i, route):

if i >= 0:

# changing the value of integer i to corresponding character

node = chr(ord(root) + i)

get_route(prev, prev[i], route)

route.append(node)

# Run Dijkstra’s algorithm on a given graph

def findShortestPaths(graph, source, N):

# create a min-heap and push source node having distance 0

pq = []

heappush(pq, Node(source, 0))

# set initial distance from the source to `v` as INFINITY

dist = [sys.maxsize] * N

# distance from the source to itself is zero

dist[source] = 0

# list to track vertices for which minimum cost is already found

done = [False] * N

done[source] = True

# stores predecessor of a vertex (to a print path)

prev = [-1] * N

route = []

# run till min-heap is empty

while pq:

node = heappop(pq) # Remove and return the best vertex

u = node.vertex # get the vertex number

# do for each neighbor `v` of `u`

for edge in graph.adj[u]:

v = edge.dest

weight = edge.weight

# Relaxation step

if not done[v] and (dist[u] + weight) < dist[v]:

dist[v] = dist[u] + weight

prev[v] = u

heappush(pq, Node(v, dist[v]))

# mark vertex `u` as done so it will not get picked up again

done[u] = True

for i in range(1, N):

if i != source and dist[i] != sys.maxsize:

get_route(prev, i, route)

# changing the value of integer i to corresponding character

node = chr(ord(root) + i)

# Changed source to root and i to node

print(f"Path ({root} —> {node}): Minimum Distance = {dist[i]} Route = {route}")

route.clear()

if __name__ == '__main__':

# initialize edges as per the above diagram

# `(u, v, w)` triplet represent undirected edge from

# vertex `u` to vertex `v` having weight `w`

edges = [Edge(0,1,22.958578), Edge(0,2,71.811172), Edge(0,12,98.402050), Edge(1,2,48.853512), Edge(1,4,72.969343),

Edge(1,3,31.802473), Edge(2,3,43.5406062), Edge(2,4,60.152705), Edge(3,4,41.487115), Edge(3,6,97.561974),

Edge(4,5,30.847842), Edge(5,6,34.608010), Edge(5,8,29.189539), Edge(5,11,36.674928), Edge(6,7,32.175791),

Edge(6,9,32.175791), Edge(7,8,40.340140), Edge(7,11,91.643718), Edge(8,9,39.762682), Edge(8,12,57.011394),

Edge(9,10,46.316241), Edge(9,12,55.286749), Edge(10,11,37.482000), Edge(11,12,34.701388)]

# total number of nodes in the graph

N = 25

# construct graph

graph = Graph(edges, N)

source = 0

# a new variable 'root' as char data type

root = 'A'

findShortestPaths(graph, source, N)

Path (A —> B): Minimum Distance = 22.958578 Route = ['A', 'B']

Path (A —> C): Minimum Distance = 71.811172 Route = ['A', 'C']

Path (A —> D): Minimum Distance = 54.761050999999995 Route = ['A', 'B', 'D']

Path (A —> E): Minimum Distance = 95.927921 Route = ['A', 'B', 'E']

Path (A —> F): Minimum Distance = 126.775763 Route = ['A', 'B', 'E', 'F']

Path (A —> G): Minimum Distance = 152.323025 Route = ['A', 'B', 'D', 'G']

Path (A —> H): Minimum Distance = 184.498816 Route = ['A', 'B', 'D', 'G', 'H']

Path (A —> I): Minimum Distance = 155.965302 Route = ['A', 'B', 'E', 'F', 'I']

Path (A —> J): Minimum Distance = 184.498816 Route = ['A', 'B', 'D', 'G', 'J']

Path (A —> K): Minimum Distance = 230.815057 Route = ['A', 'B', 'D', 'G', 'J', 'K']

Path (A —> L): Minimum Distance = 163.450691 Route = ['A', 'B', 'E', 'F', 'L']

Path (A —> M): Minimum Distance = 98.40205 Route = ['A', 'M']

In [ ]:

You might also like

- Case Study Example KodakDocument19 pagesCase Study Example KodakAngelo Bagolboc0% (1)

- Hyundai Sonata Workshop Manual (V6-3.3L (2006) ) - OptimizedDocument16,706 pagesHyundai Sonata Workshop Manual (V6-3.3L (2006) ) - Optimizedmnbvqwert80% (5)

- PLATE BEARING TEST (Acc. DIN 18134) - Part - 1 (Field Data)Document2 pagesPLATE BEARING TEST (Acc. DIN 18134) - Part - 1 (Field Data)EMANUELINo ratings yet

- Subject Code Subject Name Year X Semester X Mid Sem Exam Results 20Xx Laqs (15 Marks Each)Document10 pagesSubject Code Subject Name Year X Semester X Mid Sem Exam Results 20Xx Laqs (15 Marks Each)Aida WaniNo ratings yet

- 2.dag Out (Berth-Ps)Document4 pages2.dag Out (Berth-Ps)dongdong910409No ratings yet

- 2.PTK in (PS-SPTT)Document4 pages2.PTK in (PS-SPTT)dongdong910409No ratings yet

- Grade SHEET CalculatorDocument4 pagesGrade SHEET Calculatorgmurali_179568No ratings yet

- Traveler Delivery Program For T-Flange RingsDocument3 pagesTraveler Delivery Program For T-Flange RingsMohamed NaeimNo ratings yet

- Progress MeetingDocument11 pagesProgress MeetingSampath KumarNo ratings yet

- Example 10.1: A B A BDocument1 pageExample 10.1: A B A BEmerson Chen FuNo ratings yet

- Data 39 BusDocument16 pagesData 39 BusRajesh GangwarNo ratings yet

- Item Analysis & Mastery Level Spreadsheet - XLSX Version 1Document8 pagesItem Analysis & Mastery Level Spreadsheet - XLSX Version 1Diona A. PalayenNo ratings yet

- Pg068 - T11 Conductor ResistanceDocument1 pagePg068 - T11 Conductor ResistanceDolyNo ratings yet

- Img 20220927 Wa0006Document4 pagesImg 20220927 Wa0006Khaled MohamedNo ratings yet

- 1-1.dalian In2 (Pilot Station To Berth)Document4 pages1-1.dalian In2 (Pilot Station To Berth)dongdong910409No ratings yet

- Time Sheet SampleDocument2 pagesTime Sheet SampleTácito De LuccaNo ratings yet

- Experiments 4 and 5 - Post-Lab Test and QuestionsDocument2 pagesExperiments 4 and 5 - Post-Lab Test and QuestionsrosaNo ratings yet

- Data Untuk Routing Waduk: Hasil PerhitunganDocument2 pagesData Untuk Routing Waduk: Hasil PerhitunganSiti RauhunNo ratings yet

- Solution To HW 4 Problem 1Document2 pagesSolution To HW 4 Problem 1ALWAYNE BUCKNORNo ratings yet

- FINAL Exam (Open) Attempt Sheet: Professional Training and ConsultationDocument1 pageFINAL Exam (Open) Attempt Sheet: Professional Training and ConsultationMurugananthamParamasivamNo ratings yet

- Anggraini Lenry Rahman (1910247973) - TUGAS 1 - Sistem Pengambilan KeputusanDocument8 pagesAnggraini Lenry Rahman (1910247973) - TUGAS 1 - Sistem Pengambilan Keputusananggraini.lenryrahmaNo ratings yet

- Cash Flow Diagram ExampleDocument3 pagesCash Flow Diagram ExampleFarid DarwishNo ratings yet

- Unit Identifier: Recorder1 - Sona2: Same As Unit GraphicDocument1 pageUnit Identifier: Recorder1 - Sona2: Same As Unit GraphicfaizNo ratings yet

- Unit Identifier: Recorder1 - Sona2: Same As Unit GraphicDocument1 pageUnit Identifier: Recorder1 - Sona2: Same As Unit GraphicfaizNo ratings yet

- Priority End Date Work Categor y CR / PTR Number Complex Ity Current Status Create DateDocument6 pagesPriority End Date Work Categor y CR / PTR Number Complex Ity Current Status Create Datemeenakshi singhNo ratings yet

- Particle Size Distribution Bs 1377-2-1990Document12 pagesParticle Size Distribution Bs 1377-2-1990Nzunzu BenNo ratings yet

- Division of Northern Samar Curriculum Implementation Division (Cid)Document8 pagesDivision of Northern Samar Curriculum Implementation Division (Cid)gener r. rodelasNo ratings yet

- Unit Identifier: Recorder1 - Sona2: Same As Unit GraphicDocument1 pageUnit Identifier: Recorder1 - Sona2: Same As Unit GraphicfaizNo ratings yet

- Unit Identifier: Recorder1 - Sona2: Same As Unit GraphicDocument1 pageUnit Identifier: Recorder1 - Sona2: Same As Unit GraphicfaizNo ratings yet

- B-Flenzen2018 Vlakkelas Flens enDocument6 pagesB-Flenzen2018 Vlakkelas Flens enRoberta RamosNo ratings yet

- Design 001Document3 pagesDesign 001Carlos Alvarez L.No ratings yet

- Plan de Encofrado y VaciadoDocument1 pagePlan de Encofrado y Vaciadodaniel mirallesNo ratings yet

- Fish To Xy To FmeaDocument25 pagesFish To Xy To FmeaSudhagarNo ratings yet

- Member Name Member # Steel N Total Bar (#) HA Doux Bar L (CM) Total L (CM) Poids/mLDocument269 pagesMember Name Member # Steel N Total Bar (#) HA Doux Bar L (CM) Total L (CM) Poids/mLYoumna ShatilaNo ratings yet

- Stress Sheet Mod For MemoDocument108 pagesStress Sheet Mod For MemoUmar UmarNo ratings yet

- Solution Manual For Introduction To Geotechnical Engineering 2Nd Edition Das Sivakugan 1305257324 9781305257320 Full Chapter PDFDocument36 pagesSolution Manual For Introduction To Geotechnical Engineering 2Nd Edition Das Sivakugan 1305257324 9781305257320 Full Chapter PDFkaren.boles184100% (16)

- Solution Manual For Introduction To Geotechnical Engineering 2nd Edition Das Sivakugan 1305257324 9781305257320Document27 pagesSolution Manual For Introduction To Geotechnical Engineering 2nd Edition Das Sivakugan 1305257324 9781305257320juliaNo ratings yet

- SpssDocument5 pagesSpssIrwanNo ratings yet

- Επιφάνεια Βαφής Και Βάρος Δοκών Μεταλλικής ΚατασκευήςDocument27 pagesΕπιφάνεια Βαφής Και Βάρος Δοκών Μεταλλικής ΚατασκευήςTheodoros AtheridisNo ratings yet

- Dynamic, Absolute and Kinematic Viscosity Fluid Density: - 3 2 - 1 2 - 6 2 2 - 4 2 1) o o o oDocument1 pageDynamic, Absolute and Kinematic Viscosity Fluid Density: - 3 2 - 1 2 - 6 2 2 - 4 2 1) o o o osrNo ratings yet

- Calculate The 3 Control Limits For X-Bar and R Charts Based On The First 12 Samples ReflectingDocument6 pagesCalculate The 3 Control Limits For X-Bar and R Charts Based On The First 12 Samples ReflectingRamzi SaeedNo ratings yet

- Unit Identifier: Recorder1 - Sona2: Same As Unit GraphicDocument1 pageUnit Identifier: Recorder1 - Sona2: Same As Unit GraphicfaizNo ratings yet

- Drawing1 ModelDocument1 pageDrawing1 Modeldanom firmansyahNo ratings yet

- Spun 450 Load Settlement CurveDocument2 pagesSpun 450 Load Settlement Curveyin hoe ongNo ratings yet

- Para JhosssDocument15 pagesPara JhosssINSTITUTO GEOTECNICO PERUNo ratings yet

- Unit Identifier: Recorder1 - Sona2: Same As Unit GraphicDocument1 pageUnit Identifier: Recorder1 - Sona2: Same As Unit GraphicfaizNo ratings yet

- Unit Identifier: Recorder1 - Sona2: Same As Unit GraphicDocument1 pageUnit Identifier: Recorder1 - Sona2: Same As Unit GraphicfaizNo ratings yet

- Unit Hidrograf Satuan Adalah SBBDocument16 pagesUnit Hidrograf Satuan Adalah SBBAlan WijayaNo ratings yet

- Secao Transversal - CaracteristicasDocument4 pagesSecao Transversal - CaracteristicasRafael BentesNo ratings yet

- Iwanda R1Document12 pagesIwanda R1tabnadya11No ratings yet

- GEO SIR Template RevisedDocument3 pagesGEO SIR Template RevisedSaif MshahNo ratings yet

- Tabla de FrecuenciasDocument14 pagesTabla de FrecuenciasAiner ShuanNo ratings yet

- AbsenteismoDocument2 pagesAbsenteismoMarcelo LimaNo ratings yet

- Spun 500 Load Settlement CurveDocument2 pagesSpun 500 Load Settlement Curveyin hoe ongNo ratings yet

- 00549Levantamento de EstruturaDocument55 pages00549Levantamento de EstruturaElson QueirozNo ratings yet

- SsssDocument2 pagesSsssDarwin MachacaNo ratings yet

- Design of Mat Foundation Mat Foundation Design (ACI 318-11) - MetricDocument27 pagesDesign of Mat Foundation Mat Foundation Design (ACI 318-11) - MetricsalmanNo ratings yet

- UPS CalculatorDocument1 pageUPS CalculatordesignselvaNo ratings yet

- Sample Size Calculator - Discrete DataDocument93 pagesSample Size Calculator - Discrete DataKelvinNo ratings yet

- Unit Identifier: Recorder1 - Sona2: Same As Unit GraphicDocument1 pageUnit Identifier: Recorder1 - Sona2: Same As Unit GraphicfaizNo ratings yet

- Reference Material 1Document97 pagesReference Material 1Archita GogoiNo ratings yet

- Conflict - Answer KeyDocument88 pagesConflict - Answer KeyArchita GogoiNo ratings yet

- Es 202 Tutorial Sheet: Isotactic Structures)Document3 pagesEs 202 Tutorial Sheet: Isotactic Structures)Archita GogoiNo ratings yet

- Role of Technology in The Era of Covid-19 Pandemic: by Reshmi JyotiDocument12 pagesRole of Technology in The Era of Covid-19 Pandemic: by Reshmi JyotiArchita GogoiNo ratings yet

- B.tech Project Power Point Presentation 2021Document40 pagesB.tech Project Power Point Presentation 2021Archita GogoiNo ratings yet

- Poster of Team JalshanDocument1 pagePoster of Team JalshanArchita GogoiNo ratings yet

- Wooden Adjustable Measuring SpoonDocument3 pagesWooden Adjustable Measuring SpoonArchita GogoiNo ratings yet

- HRM - Unit 1 CombinedDocument149 pagesHRM - Unit 1 CombinedArchita GogoiNo ratings yet

- PowerDocument2 pagesPowerMiguel Angel RodriguezNo ratings yet

- TL-SG1005D (UN) 8.0&TL-SG1008D (UN) 8.0 - DatasheetDocument4 pagesTL-SG1005D (UN) 8.0&TL-SG1008D (UN) 8.0 - Datasheetg4277No ratings yet

- To The Learners: Consuelo M. Delima Elizabeth M. RuralDocument9 pagesTo The Learners: Consuelo M. Delima Elizabeth M. RuralMyla MillapreNo ratings yet

- Properties and Applicable Usage of PosmacDocument3 pagesProperties and Applicable Usage of PosmacLucio PereiraNo ratings yet

- Dr. Andri Dwi Setiawan, Dr. Armand Omar Moeis, M.SC: Group 9Document21 pagesDr. Andri Dwi Setiawan, Dr. Armand Omar Moeis, M.SC: Group 9jasmine pradnyaNo ratings yet

- CAT1000P: Program To Create A GEOPAK Part Program With The Help of CAD DataDocument39 pagesCAT1000P: Program To Create A GEOPAK Part Program With The Help of CAD DataYnomata RusamellNo ratings yet

- 8.2 J-Ring TestDocument15 pages8.2 J-Ring TestbilalNo ratings yet

- Manual de Operación Charrua Soft CWSDocument16 pagesManual de Operación Charrua Soft CWSOmar Stalin Lucio RonNo ratings yet

- Pneumatic Actuators PDFDocument16 pagesPneumatic Actuators PDFkotrex_tre3No ratings yet

- Questionnaire - Influence of TechnologyDocument2 pagesQuestionnaire - Influence of TechnologyRi ElNo ratings yet

- Steam TurbineDocument5 pagesSteam Turbinerashm006ranjanNo ratings yet

- Marking Dt0 SMD TransistorDocument8 pagesMarking Dt0 SMD TransistorWee Chuan PoonNo ratings yet

- Ligaduras Griegas - WikipediaDocument10 pagesLigaduras Griegas - WikipediaCarlos Arturo MedinaNo ratings yet

- Correcting Work Standards and Work Practices On Tractor Assembly LineDocument47 pagesCorrecting Work Standards and Work Practices On Tractor Assembly LineRianca SharmaNo ratings yet

- Airis 2Document253 pagesAiris 2Ma DamasNo ratings yet

- 3TNV80FT ZmyaDocument26 pages3TNV80FT Zmyapecasplh02No ratings yet

- Exercise 1 (Openmp-I)Document10 pagesExercise 1 (Openmp-I)Dhruv SakhujaNo ratings yet

- Indexing and History of IndexingDocument2 pagesIndexing and History of IndexingArjun VaradrajNo ratings yet

- 401VPN X2 ManualDocument74 pages401VPN X2 ManualDaniel AbdallaNo ratings yet

- DS025106H Multi CableDocument1 pageDS025106H Multi CabletqhunghnNo ratings yet

- 4 CH DMX Dimmer: InstructablesDocument7 pages4 CH DMX Dimmer: InstructablesCorrado BrianteNo ratings yet

- DX100 Field Maintenance Guide: Downloaded From Manuals Search EngineDocument24 pagesDX100 Field Maintenance Guide: Downloaded From Manuals Search Engine조장현No ratings yet

- Learning Log: Ask SMART Questions About Real Life Data SourcesDocument2 pagesLearning Log: Ask SMART Questions About Real Life Data SourcesDamilola Babalola0% (1)

- Transaction HeaderDocument3 pagesTransaction HeaderWaleed AljackNo ratings yet

- Abandoned Cart - Flows - KlaviyoDocument1 pageAbandoned Cart - Flows - KlaviyoAdeps SmithsNo ratings yet

- CHAP 3.2 Protection Against Malicious SoftwareDocument22 pagesCHAP 3.2 Protection Against Malicious SoftwareFirdausNo ratings yet

- Research Method in Technology (It542) Assignment 2 Section IiDocument14 pagesResearch Method in Technology (It542) Assignment 2 Section Iimelesse bisemaNo ratings yet

- C80 Brochure PDFDocument9 pagesC80 Brochure PDFFaisal HillalNo ratings yet