Second International Conference on Smart Systems and Inventive Technology (ICSSIT 2019)

IEEE Xplore Part Number: CFP19P17-ART; ISBN:978-1-7281-2119-2

Face Recognition with Convolutional Neural Network and Transfer Learning

R. Meena Prakash, N. Thenmoezhi, M. Gayathri

Department of Electronics and Communication Engineering, AAA College of Engineering and Technology, Amathur, India

Abstract— Face recognition finds its major applications in biometric security, health care and marketing. Among the many biometrics such as fingerprint, iris, voice, hand geometry and signature, face recognition has attracted the most research attention because of its high recognition rate, uniqueness and large number of features. Some of the challenges in the face recognition task include differences in illumination, pose, expression and background. Deep learning methods are now widely employed and give promising results for image recognition and classification problems. Convolutional Neural Networks automatically learn features at multiple levels of abstraction through back propagation with convolution layers, pooling layers and fully connected layers. In this work, an automated face recognition method using a Convolutional Neural Network (CNN) with a transfer learning approach is proposed. The CNN, with weights learned by the pre-trained VGG-16 model on the large ImageNet database, is trained on the images from the face database. The extracted features are fed as input to the fully connected layer and softmax activation for classification. Two publicly available databases of face images, Yale and AT&T, are used to test the performance of the proposed method. Experimental results verify that the method gives better recognition results compared to other methods.

Keywords— Face recognition, Convolutional Neural Network, transfer learning, classification

I. INTRODUCTION

Face recognition is an active research area with an extensive range of applications such as identity management and security. Face recognition systems are currently employed in numerous applications. In healthcare technology, emotion recognition facilitates automated diagnosis of diseases such as Down syndrome. It is also employed by advertising media to understand customers' facial expressions. Face recognition poses certain challenges due to conditions such as poor illumination, differences in pose and background, and occlusion. The design of face recognition systems focuses on improving speed and accuracy. The face recognition task in general consists of the following steps: pre-processing of the input face images, followed by extraction of features and then classification. The pre-processing step is used to remove noise and background illumination effects and to normalize the images. From the pre-processed face images, features are extracted, for which different methods are adopted, including Histogram of Oriented Gradients (HOG), Principal Component Analysis (PCA), Local Binary Pattern (LBP), Scale Invariant Feature Transform (SIFT), Linear Discriminant Analysis (LDA), deep learning and others [13,14,15]. Classification is done using classifiers such as Support Vector Machine (SVM), Neural Networks, Radial Basis Function (RBF) network and Convolutional Neural Network (CNN). Deep learning features give better classification results than hand-crafted features.

Nowadays CNN is used for major classification problems, in which the features are automatically learnt from low level to high level over successive layers of the network. Transfer learning is the process of transferring knowledge gained in solving one problem to solve another related problem. Transfer learning is advantageous when there is a lack of sufficient training data, and it also reduces computational complexity. It offers good classification accuracy on smaller databases. In the proposed work, the VGG16 architecture, which is pre-trained on the large ImageNet database with more than 1 million images belonging to 1000 different categories, is used to train on the input face images. Then, classification is done using a Fully Connected Layer and Softmax activation. The contribution of the article is the implementation of an automated face recognition method using CNN with transfer learning. The rest of the paper is organized as follows. A review of the literature is given in Section II. The proposed method is explained in Section III. Experiments and results are discussed in Section IV, with a conclusion in Section V.

II. LITERATURE SURVEY

An age-invariant face recognition method using a discriminative model with deep feature training is proposed [1]. In this work, AlexNet is used as the transfer learning CNN model to learn high-level deep features. These features are then encoded using a code book into a code word with higher dimension for image representation. The encoding framework ensures similar code words for face images of the same person photographed at different times. A linear regression based classifier is used for face recognition and the method is tested on three publicly available datasets including FGNET. A face recognition system which incorporates the convolutional neural network, auto encoder and denoising is proposed, called Deep Stacked Denoising Sparse Autoencoders (DS-DSA) [2]. Multiclass Support Vector Machine (SVM) and Softmax classifiers are used for the classification process. The method is tested on four publicly available datasets including ORL, Yale and Pubfig. Cross-modality face recognition using a deep local descriptor learning framework is proposed in which both the compact local information and discriminant features are learnt directly from raw facial patches [3]. Deep local descriptors are extracted with a Convolutional Neural Network (CNN). The method is tested on six extensively used face recognition datasets of different modes.

978-1-7281-2119-2/19/$31.00 ©2019 IEEE 861


Deep learning with a transfer learning CNN architecture is proposed which consists of three modules: base convolution module, transfer module and linear modules [4]. The method is tested on the SCFace, ChokePoint, FSV and TIP datasets. A component-based face recognition system is proposed in which knowledge is gathered from whole face images and transfer learning is used to classify the face components using a CNN architecture [5]. The method is tested on face images from the Computer Vision Research Projects Faces94 database. A face recognition method consisting of the steps of face enhancement, extraction of features and finally classification into one of the known faces is proposed, in which Scale Invariant Feature Transform (SIFT) descriptors are calculated from patches of the detected face regions [6]. Multiclass SVM is used as the classifier and the method is tested on popular face databases. An automated face recognition method based on a CNN architecture with a pre-trained VGG model for transfer learning is proposed [7]. Cosine similarity and linear SVM are used for classification and the method is tested on two public datasets.

A livestock identification method using CNN is proposed in which a pre-trained CNN model is trained using an artificially augmented dataset [8]. Face recognition using a recurrent regression neural network (RRNN) framework is proposed and applied to both video frames and still images [9]. For still images, sequential poses are predicted from one static image, and for face recognition in videos, one entire video sequence is used as input. A shallow CNN with a cascade classifier is proposed for face recognition [10]. The method is tested on publicly available datasets: the Wild dataset and the AT&T database. A face recognition method is proposed which employs an architecture consisting of the stages of image enhancement with noise removal, histogram equalization, face region detection, segmentation of faces, feature extraction and finally classification [11]. A face benchmark dataset with ID photos of 100 celebrities is also constructed and used for training and testing.

A CNN architecture with two different loss functions based on Compact Discriminative loss is proposed for face recognition, and three CNNs (CNN-M, LeNet and ResNet-50) are employed to test the method [12]. The implementation of a facial recognition framework in smart glasses is proposed which employs CNN with transfer learning [16]. A survey on face recognition methods, face emotion recognition and gender classification techniques compares the performance of different methods such as CNN, transfer learning and the traditional methods of HOG and Random Decision Forests [17].

Based on the literature survey, it is inferred that CNN gives promising results for classification problems and can be used for the face recognition application. To reduce the computational complexity, transfer learning can be employed, in which knowledge gained by pre-trained models on a large image database is transferred to learn on a new database of relatively smaller size. In this article, the VGG16 architecture is used for transfer learning and is trained on the face images from the database. The extracted features are given to the Fully Connected Layer for classification, followed by face recognition in the testing phase.

III. PROPOSED METHOD

A. Convolutional Neural Network (CNN) and Transfer Learning

Deep learning is an area of machine learning which learns representations from data, with emphasis on learning successive layers of increasingly meaningful representations. The number of layers which contribute to the model is called the depth of the model. A neural network basically comprises three kinds of layers: an input layer, multiple hidden layers and an output layer. Convolutional Neural Networks are neural networks that employ the convolution operation in place of matrix multiplication in the convolutional layers. The convolution operation is given in equation (1):

$$ s(t) = (x * w)(t) \qquad (1) $$

In equation (1), $x$ is the input, $w$ is the filter kernel and $s$ is the output, called the feature map.

A CNN layer consists of three stages. In the first stage, multiple convolutions are performed in parallel using filters to produce a set of linear activations. In the second stage, a non-linear activation is applied, for example the rectified linear activation function, which outputs the input directly if it is positive and zero otherwise. In the third stage, a pooling operation is used to reduce the size of the feature map. Pooling functions include max pooling, which replaces each rectangular window with its maximum value, average pooling, which replaces it with the average of all the values in the window, weighted average pooling and others. At the end of the CNN, the output of the last pooling layer is given as input to the fully connected (FC) layer. Every node in an FC layer is connected to all nodes in the preceding layer. The FC layer performs classification into the different classes.

CNNs are trained through a process called back propagation, which consists of four stages: forward pass, loss function, backward pass and weight update. The filter weights are initialized randomly. During the forward pass, the training images are passed through the network. The error is calculated using a loss function which compares the network output with the desired output. Then the backward pass and weight update stages take place based on this error. The back propagation process is repeated for several iterations until convergence occurs.

Transfer learning is a machine learning method where the knowledge gained from a particular task is transferred to improve the process of learning in another related task. CNN architectures such as VGG, ResNet and AlexNet are already trained on the large ImageNet image database, consisting of more than 1 million labelled high-resolution images belonging to 1000 classes. In this way, the knowledge already gained from one task is transferred to learn a new task. Transfer learning is especially useful where there is a lack of sufficient training data. It shows good classification performance, and the computational complexity is greatly reduced as the process need not start from scratch.
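The three stages of a convolutional layer described in this subsection (convolution, rectified linear activation, pooling) can be sketched in plain Python. This is only an illustrative sketch, not the authors' implementation: the function names `conv2d`, `relu` and `max_pool`, the toy 5x5 image and the 2x2 kernel are assumptions made for the example, and, as in most deep learning libraries, the kernel is applied without flipping.

```python
def conv2d(image, kernel):
    """Stage 1: valid 2-D convolution (no padding, stride 1, kernel not flipped)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Stage 2: keep positive values, replace everything else with zero."""
    return [[max(0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Stage 3: keep the maximum of each non-overlapping size x size window."""
    rows = range(0, len(feature_map) - size + 1, size)
    cols = range(0, len(feature_map[0]) - size + 1, size)
    return [
        [
            max(feature_map[i + a][j + b]
                for a in range(size) for b in range(size))
            for j in cols
        ]
        for i in rows
    ]


# Hypothetical toy input: a 5x5 "image" and a 2x2 filter kernel.
image = [
    [1, 2, 0, 1, 3],
    [0, 1, 3, 2, 1],
    [2, 0, 1, 0, 2],
    [1, 3, 2, 1, 0],
    [0, 1, 0, 2, 1],
]
kernel = [[1, -1], [-1, 1]]

linear = conv2d(image, kernel)   # 4x4 map of linear activations
activated = relu(linear)         # negatives clipped to zero
pooled = max_pool(activated)     # 2x2 map after 2x2 max pooling
print(pooled)                    # [[4, 3], [4, 3]]
```

Each stage shrinks or filters the data: the 5x5 input becomes a 4x4 feature map after convolution, keeps its size through ReLU, and is reduced to 2x2 by pooling.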

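The four stages of back propagation named in Section III-A (forward pass, loss function, backward pass, weight update) can be illustrated on a single fully connected softmax layer. The sketch below is a toy example under stated assumptions, not the training code used in the paper: the data, the learning rate of 0.5, the 200 epochs and the helper names `softmax`, `train` and `predict` are all hypothetical.

```python
import math
import random


def softmax(scores):
    """Turn raw class scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def train(samples, labels, num_classes, lr=0.5, epochs=200, seed=0):
    """Gradient-descent training of one fully connected softmax layer."""
    rng = random.Random(seed)
    dim = len(samples[0])
    # Weights are initialized randomly, one row of weights per class.
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(dim)]
               for _ in range(num_classes)]
    losses = []
    for _ in range(epochs):
        epoch_loss = 0.0
        for x, y in zip(samples, labels):
            # 1. Forward pass: class scores, then softmax probabilities.
            probs = softmax([sum(w * v for w, v in zip(row, x))
                             for row in weights])
            # 2. Loss function: cross-entropy against the desired class.
            epoch_loss += -math.log(probs[y])
            # 3. Backward pass: gradient of the loss for each class score.
            for c in range(num_classes):
                error = probs[c] - (1.0 if c == y else 0.0)
                # 4. Weight update: step against the gradient.
                for k in range(dim):
                    weights[c][k] -= lr * error * x[k]
        losses.append(epoch_loss / len(samples))
    return weights, losses


def predict(weights, x):
    """Return the index of the class with the highest score."""
    scores = [sum(w * v for w, v in zip(row, x)) for row in weights]
    return scores.index(max(scores))


# Hypothetical toy data: two features plus a constant bias input of 1.0.
samples = [[0.0, 0.0, 1.0], [0.2, 0.1, 1.0], [1.0, 1.0, 1.0], [0.9, 0.8, 1.0]]
labels = [0, 0, 1, 1]
weights, losses = train(samples, labels, num_classes=2)
print(losses[0] > losses[-1], [predict(weights, x) for x in samples])
```

In a real CNN the same loop runs over all layers, with the backward pass propagating the error through the pooling, activation and convolution stages as well.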

B. CNN Architecture

In the proposed method, the VGG16 CNN architecture is used for transfer learning and is trained on the input images from the faces dataset. The input images to the CNN are of size 224x224x3. The input images are passed through a stack of 13 convolutional layers with filter size 3x3 and depths of 64, 128, 256 and 512. Following the convolutional layers, three fully connected (FC) layers are used. The first two FC layers have 4096 units each and the last FC layer performs classification into 1000 classes. All the hidden layers use the rectified linear (ReLU) non-linearity. A softmax layer is used as the last layer of the CNN and applies the softmax activation function. Including the input and output layers, there are 41 layers in total in the architecture. The architecture of the VGG16 CNN model is shown in Figure 1.
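The layer sizes quoted above can be checked with a short calculation. The sketch below walks through the standard VGG16 layout (depths of 64, 128, 256 and 512, with a 2x2 max pooling layer after each of the five convolution blocks) and counts convolutional layers, the final feature map shape and the number of trainable parameters. The names `CONV_BLOCKS`, `FC_UNITS` and `vgg16_summary` are hypothetical, and the assumption that padding preserves the spatial size comes from the standard VGG16 definition rather than from this paper.

```python
# Channel depths of the 13 convolutional layers, grouped into the five
# blocks that are each followed by a 2x2 max pooling layer.
CONV_BLOCKS = [[64, 64], [128, 128], [256, 256, 256],
               [512, 512, 512], [512, 512, 512]]
FC_UNITS = [4096, 4096, 1000]


def vgg16_summary(input_size=224, input_channels=3):
    """Return (number of conv layers, final feature map shape, parameters)."""
    size, channels, params, conv_layers = input_size, input_channels, 0, 0
    for block in CONV_BLOCKS:
        for depth in block:
            # 3x3 filters with padding keep the spatial size unchanged.
            params += 3 * 3 * channels * depth + depth  # weights + biases
            channels = depth
            conv_layers += 1
        size //= 2  # each max pooling layer halves width and height
    features = size * size * channels
    for units in FC_UNITS:
        params += features * units + units  # weights + biases
        features = units
    return conv_layers, (size, size, channels), params


conv_layers, final_shape, total_params = vgg16_summary()
print(conv_layers, final_shape, total_params)
# 13 (7, 7, 512) 138357544
```

Most of the roughly 138 million parameters sit in the first fully connected layer, which is one reason reusing pre-trained weights is attractive when only a few hundred face images are available.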

VGG16 was already trained on the large ImageNet database, and its weights were learnt there. These weights are used to initialize the model, which is then trained on the training set of input face images. The extracted features are fed as input to the FC layer followed by softmax activation and classified into the different faces.
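A minimal Keras sketch of this transfer learning setup is given below, using the settings reported in Section IV (10 epochs, batch size 2, learning rate 0.00001, gradient descent). It is a sketch under assumptions, not the authors' code: the helper names `build_model` and `train`, the single dense softmax head, the choice of plain SGD as the "gradient descent" optimizer and leaving all VGG16 layers trainable are guesses, since the paper does not give these details.

```python
# Settings reported in Section IV; the optimizer choice (plain SGD) is an
# assumption based on the phrase "gradient descent optimization algorithm".
HYPERPARAMS = {"epochs": 10, "batch_size": 2, "learning_rate": 1e-5}
INPUT_SHAPE = (224, 224, 3)


def build_model(num_classes, weights="imagenet"):
    """VGG16 convolutional base plus a new FC + softmax classification head."""
    # Imported lazily so the constants above can be used without TensorFlow.
    from tensorflow import keras

    # Pre-trained ImageNet weights initialize the convolutional layers.
    base = keras.applications.VGG16(
        weights=weights, include_top=False, input_shape=INPUT_SHAPE)
    model = keras.Sequential([
        base,
        keras.layers.Flatten(),
        # Hypothetical head: one dense layer with one unit per person.
        keras.layers.Dense(num_classes, activation="softmax"),
    ])
    model.compile(
        optimizer=keras.optimizers.SGD(
            learning_rate=HYPERPARAMS["learning_rate"]),
        loss="categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model


def train(model, train_images, train_labels):
    """Fine-tune on face images; labels are expected to be one-hot encoded."""
    return model.fit(
        train_images, train_labels,
        epochs=HYPERPARAMS["epochs"],
        batch_size=HYPERPARAMS["batch_size"],
    )
```

Under these assumptions one would call `build_model(num_classes=40)` for the AT&T database and `build_model(num_classes=15)` for Yale. The grayscale face images would first have to be resized to 224x224 and expanded to three channels; the paper does not describe that pre-processing, so it is left out here.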

IV. EXPERIM ENTS AND RESULTS

A. Description of database

3 x ( Convolution Layer with ReLU)

The performance of the method is tested on two popular

publicly available face databases – Yale and AT&T. The

Yale face database contains 165 gray scale images , where

Max Pooling Layer there are faces of 15 individual persons with different

emotions. There are 11 images per subject with different

facial expressions. The size of each image is 480x640. In the

3 x ( Convolution Layer with ReLU) AT&T database, there are 10 different face images of 40

individual persons. The images have different facial

expressions and were taken at different times. The images are

of size 92x112. The dataset is formed with images for

Max Pooling Layer training and images for testing. The description of the dataset

is given in Table 1.

T ABLE 1

DESCRIPT ION OF DAT ASET

3 x ( Convolution Layer with ReLU)

S .No Dataset Total Training Testing No. of

Images Images Images classes

1 Yale 165 90 75 15

Max Pooling Layer 2 AT & T 400 240 160 40

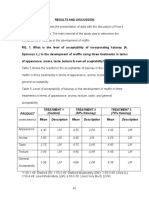

B. Experimental Results

2x (Fully Connected Layer+Drop out+ReLU) The sample images from AT&T and yale datasets are

given in Figures 2 and 3 respectively. The proposed method is

implemented with Keras using python. The number of epochs

is set as 10; batch size is selected as 2. The learning rate of the

Fully Connected Layer + Softmax + network is set at 0.00001. The gradient descent optimization

Output Layer algorithm is used. The results obtained are given in Table 2 and

are compared against results obtained with face recognition

using Principal Component Analysis. The screen capture of the

Size of output vector =1000 results obtained using training phase is given in Figure 4.

The experimental results for PCA and the proposed method

using CNN with transfer learning in terms of classification

Fig. 1 VGG16 Architecture

accuracy are given in Table 2. It is inferred from the results

that the proposed method of face recognition using CNN with

978-1-7281-2119-2/19/$31.00 ©2019 IEEE 863

Second International Conference on Smart Systems and Inventive Technology (ICSSIT 2019)

IEEE Xplore Part Number: CFP19P17-ART; ISBN:978-1-7281-2119-2

transfer learning achieves better classification accuracy face images and 96.5% for Yale database face images. The

compared to the method using Principal Component Analysis. experimental results show that face recognition using CNN

It achieves 100% accuracy for AT&T database face images with transfer learning gives better classification accuracy in

and 98.7% accuracy for face images from Yale dataset. comparison with other methods.

REFERENCES

[1] Shakeel, M. S., & Lam, K. (2019). Deep-feature encoding-based

discriminative model for age-invariant face recognition. Pattern

Recognition, 93, 442-457.

[2] Görgel, P., & Simsek, A. (2019). Face recognition via Deep Stacked

Denoising Sparse Autoencoders (DSDSA). Applied Mathematics and

Computation, 355, 325-342.

Fig. 2 Sample Images from AT & T database [3] Peng, C., Wang, N., Li, J., & Gao, X. (2019). DLFace: Deep local

descriptor for cross-modality face recognition. Pattern Recognition, 90,

161-171.

[4] Banerjee, S., & Das, S. (2018). Mutual variation of information on

transfer-CNN for face recognition with degraded probe

samples. Neurocomputing, 310, 299-315.

[5] Kute, R. S., Vyas, V., & Anuse, A. (2019). Component -based face

Fig. 3 Sample Images from Yale database recognition under transfer learning for forensic applications. Information

Sciences, 476, 176-191.

[6] Umer, S., Dhara, B. C., & Chanda, B. (2019). Face recognition using

fusion of feature learning techniques. Measurement, 146, 43-54.

[7] Elmahmudi, A., & Ugail, H. (2019). Deep face recognition using

imperfect facial data. Future Generation Computer Systems, 99, 213-

225.

[8] Hansen, M. F., Smith, M. L., Smith, L. N., Salter, M. G., Baxter, E. M.,

Farish, M., & Grieve, B. (2018). T owards on-farm pig face recognition

using convolutional neural networks. Computers in Industry, 98, 145-

152.

[9] Li, Y., Zheng, W., Cui, Z., & Zhang, T . (2018). Face recognition based

on recurrent regression neural network. Neurocomputing, 297, 50-58.

[10] Leng, B., Liu, Y., Yu, K., Xu, S., Yuan, Z., & Qin, J. (2016). Cascade

shallow CNN structure for face verification and

Figure 4 Screen capture of results obtained using training phase identification. Neurocomputing, 215, 232-240.

[11] Cui, D., Zhang, G., Hu, K., Han, W., & Huang, G. (2019). Face

recognition using total loss function on face database with ID

T ABLE 2 photos. Optics & Laser Technology, 110, 227-233.

CLASSIFICATION RESULTS IN T ERMS OF ACCURACY

[12] Zhang, M. M., Shang, K., & Wu, H. (2019). Deep compact

discriminative representation for unconstrained face recognition. Signal

S .No Method Dataset Accuracy (%) Processing: Image Communication, 75, 118-127.

1 PCA Yale 82.0 [13] A Survey on Face Recognition T echniques for Undersampled Data.

AT & T 96.5 (2017). International Journal of Science and Research (IJSR), 6(1),

2 Proposed M ethod Yale 98.7 1582-1585.

using CNN AT & T 100.0 [14] Nielsen, M. A. (2015). Neural Networks and Deep Learning.

Determination Press.

[15] Xu, Y., Ma, H., Cao, L., Cao, H., Zhai, Y., Piuri, V., & Scotti, F. (2018).

Robust Face Recognition Based on Convolutional Neural

V. CONCLUSION Network. DEStech Transactions on Computer Science and

An automated face recognition method has been proposed Engineering, (Icmsie)

in this paper. The pre-trained CNN model VGG16 which was [16] Khan, S., Javed, M. H., Ahmed, E., Shah, S. A. A., & Ali, S. U. (2019).

Facial Recognition using Convolutional Neural Networks and

trained on huge database of ImageNet is used to initialize the Implementation on Smart Glasses. 2019 International Conference on

weights and the model is trained on the input face images Information Science and Communication Technology (ICISCT).

dataset. The features are extracted during the training face and [17] Mane, S., & Shah, G. (2018). Facial Recognition, Expression

fed to the fully connected layer with softmax activation Recognition, and Gender Identification. Data Management, Analytics

function for classification. The method is tested on two and Innovation Advances in Intelligent Systems and Compu ting, 275–

publicly available face datasets of Yale and AT&T. Face 290.

recognition accuracy of 100% is achieved for AT&T database


- A Study of Alienation and Emotional Stability Among Orphan and Non-Orphan AdolescentsDocument10 pagesA Study of Alienation and Emotional Stability Among Orphan and Non-Orphan AdolescentsDr. Manoranjan TripathyNo ratings yet

- Evaluation Metrics and EvaluationDocument9 pagesEvaluation Metrics and EvaluationWa HidzNo ratings yet

- Journal Article Writing and Publication Your Guide To Mastering Clinical Health Care Reporting Standards 1st Edition Sharon GutmanDocument70 pagesJournal Article Writing and Publication Your Guide To Mastering Clinical Health Care Reporting Standards 1st Edition Sharon Gutmanlawrence.carrion499100% (9)

- Results and DiscussionDocument13 pagesResults and DiscussionAiko BacdayanNo ratings yet

- Fisher Albrecht Yen Klingerman Mcnamee 1974Document98 pagesFisher Albrecht Yen Klingerman Mcnamee 1974Eric NolascoNo ratings yet

- Pengaruh Current CR DER Dan ROA Terhadap Harga SahDocument14 pagesPengaruh Current CR DER Dan ROA Terhadap Harga SahVanessa AlexandraNo ratings yet

- Econ275 (Stanford) PDFDocument4 pagesEcon275 (Stanford) PDFInvestNo ratings yet

- Estimation of Cronbach's Alpha For Sparse Datasets: Mike LopezDocument6 pagesEstimation of Cronbach's Alpha For Sparse Datasets: Mike LopezFarida Binti YusofNo ratings yet

- Term Paper StatDocument20 pagesTerm Paper StatMaisha Tanzim RahmanNo ratings yet

- Jurnal Bing 4Document5 pagesJurnal Bing 4fira annisaNo ratings yet

- Building Multiple Regression ModelsDocument72 pagesBuilding Multiple Regression ModelsWahaaj RanaNo ratings yet

- Rocket Propellant DataDocument12 pagesRocket Propellant DatatsrforfunNo ratings yet

- Woldemariam FujawDocument83 pagesWoldemariam FujawMehari TemesgenNo ratings yet

- Interpolation 12786416547886 Phpapp01Document17 pagesInterpolation 12786416547886 Phpapp01Pedro AntunesNo ratings yet

- Amos 4.0Document2 pagesAmos 4.0Mahatma Fransiskus NababanNo ratings yet

- Hockey Hypothesis Test PaperDocument2 pagesHockey Hypothesis Test Paperapi-345354701No ratings yet

- Topic Linear RegressionDocument9 pagesTopic Linear RegressionZuera OpeqNo ratings yet