Spectral Graph Clustering

Signal Processing for Big Data

Sergio Barbarossa

19/10/20 Signal Processing for Big Data 1

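Clustering: Graph partitioning

Given a graph whose nodes are split into two complementary subsets $S$ and $S^c$, let us associate different labels with nodes belonging to different subsets:

$$s_i = \begin{cases} +1, & \text{if } i \in S, \\ -1, & \text{if } i \in S^c. \end{cases}$$

Note that

$$\frac{1}{2}(1 - s_i s_j) = \begin{cases} 0, & \text{if } i \text{ and } j \text{ belong to the same set}, \\ 1, & \text{if } i \text{ and } j \text{ belong to different sets}. \end{cases}$$

Definition: the cut size is

$$R = \frac{1}{4} \sum_{i} \sum_{j} A_{ij} (1 - s_i s_j),$$

where $A$ is the adjacency matrix. For an undirected graph, each edge crossing the cut appears twice in the double sum, hence the factor $1/4$.

Problem: split the graph into two subsets in such a way that the cut size is minimum.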
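As a small illustration of the definition, the sketch below evaluates the cut size directly from the double sum. The toy graph (two triangles joined by one edge) and the function name `cut_size` are assumptions made for this example only; they do not come from the slides.

```python
import numpy as np

def cut_size(A, s):
    """Cut size R = 1/4 * sum_ij A_ij (1 - s_i s_j) for labels s_i in {+1, -1}."""
    A = np.asarray(A, dtype=float)
    s = np.asarray(s, dtype=float)
    return 0.25 * np.sum(A * (1.0 - np.outer(s, s)))

# Hypothetical toy graph: triangles (0,1,2) and (3,4,5) joined by the edge 2-3.
edges = [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (2, 3)]
A = np.zeros((6, 6))
for i, j in edges:
    A[i, j] = A[j, i] = 1.0

s_good = np.array([1, 1, 1, -1, -1, -1])   # S = {0, 1, 2}: only edge 2-3 is cut
s_bad = np.array([1, 1, -1, -1, -1, -1])   # S = {0, 1}: edges 0-2 and 1-2 are cut

R_good = cut_size(A, s_good)
R_bad = cut_size(A, s_bad)
print(R_good, R_bad)
```

On an unweighted graph the value returned is simply the number of edges with endpoints in different subsets.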


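The cut size can be rewritten as

$$R = \frac{1}{4} s^T L s,$$

where $L = D - A$ is the graph Laplacian and $D$ is the diagonal matrix of node degrees. The identity follows from $s_i^2 = 1$, which gives $\sum_{i} \sum_{j} A_{ij} = \sum_i D_{ii} s_i^2 = s^T D s$.

Constraints:

- number of nodes per cluster
- bounded norm

Problem formulation:

$$s = \arg\min_{s} \; s^T L s \quad \text{subject to} \quad s_i \in \{-1, 1\}, \quad \sum_{i=1}^{N} s_i = n_1 - n_2,$$

where $n_1 = |S|$ and $n_2 = |S^c|$.

This is a combinatorial problem.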
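To make the combinatorial nature concrete, here is a minimal sketch that solves the problem by exhaustive search, using the quadratic form $\frac{1}{4} s^T L s$ as the cost. The helper names and the toy graph are hypothetical; the search visits all $\binom{N}{n_1}$ subsets, so it is only feasible for very small graphs.

```python
import itertools
import numpy as np

def laplacian(A):
    """Combinatorial Laplacian L = D - A of an undirected graph."""
    A = np.asarray(A, dtype=float)
    return np.diag(A.sum(axis=1)) - A

def brute_force_bisection(A, n1):
    """Try every subset S with |S| = n1 and return (best R, best labels, #candidates)."""
    N = A.shape[0]
    L = laplacian(A)
    best_R, best_s, n_candidates = np.inf, None, 0
    for S in itertools.combinations(range(N), n1):
        s = -np.ones(N)
        s[list(S)] = 1.0
        R = 0.25 * s @ L @ s          # cut size as a quadratic form
        n_candidates += 1
        if R < best_R:                # strict test: keep the first minimizer found
            best_R, best_s = R, s
    return best_R, best_s, n_candidates

# Hypothetical toy graph: triangles (0,1,2) and (3,4,5) joined by the edge 2-3.
edges = [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (2, 3)]
A = np.zeros((6, 6))
for i, j in edges:
    A[i, j] = A[j, i] = 1.0

best_R, best_s, n_candidates = brute_force_bisection(A, n1=3)
print(best_R, best_s, n_candidates)
```

Even for this six-node graph the loop examines 20 candidate splits, and the count grows exponentially with $N$ for balanced splits, which is what motivates a relaxation.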


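Relaxed problem:

$$s = \arg\min_{s} \; s^T L s \quad \text{subject to} \quad \sum_{i=1}^{N} s_i^2 = N, \quad \sum_{i=1}^{N} s_i = n_1 - n_2.$$

Lagrangian:

$$\mathcal{L}(s; \lambda, \mu) = \sum_{k=1}^{N} \sum_{j=1}^{N} L_{jk} s_j s_k + \lambda \left( N - \sum_{j=1}^{N} s_j^2 \right) + 2\mu \left( n_1 - n_2 - \sum_{j=1}^{N} s_j \right)$$

Setting the gradient to zero, we get

$$L s = \lambda s + \mu \mathbf{1}.$$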


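Multiplying $L s = \lambda s + \mu \mathbf{1}$ from the left side by $\mathbf{1}^T$, and using $\mathbf{1}^T L = \mathbf{0}^T$, we get

$$\mu = \lambda \, \frac{n_2 - n_1}{N}.$$

Introducing the vector

$$x := s + \frac{\mu}{\lambda} \mathbf{1} = s + \frac{n_2 - n_1}{N} \mathbf{1},$$

we get

$$L x = \lambda x,$$

so $x$ is an eigenvector of $L$.

The cut size can be rewritten as

$$R = \frac{n_1 n_2}{N} \lambda.$$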
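The last expression can be checked in a few lines. The following is a worked sketch, assuming only the stationarity condition $L s = \lambda s + \mu \mathbf{1}$, the constraints $s^T s = N$ and $\mathbf{1}^T s = n_1 - n_2$, the value $\mu = \lambda (n_2 - n_1)/N$, and $N = n_1 + n_2$:

```latex
\begin{aligned}
R &= \tfrac{1}{4}\, s^T L s
   = \tfrac{1}{4}\, s^T \left( \lambda s + \mu \mathbf{1} \right)
   = \tfrac{1}{4} \left( \lambda N + \mu (n_1 - n_2) \right) \\
  &= \tfrac{\lambda}{4} \left( N - \frac{(n_1 - n_2)^2}{N} \right)
   = \frac{\lambda}{4N} \left( (n_1 + n_2)^2 - (n_1 - n_2)^2 \right)
   = \frac{n_1 n_2}{N}\, \lambda .
\end{aligned}
```

Since $n_1$, $n_2$ and $N$ are fixed, minimizing $R$ in the relaxed problem amounts to choosing the smallest admissible eigenvalue $\lambda$.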


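Since $\mathbf{1}^T x = (n_1 - n_2) + (n_2 - n_1) = 0$, $x$ is orthogonal to the constant eigenvector associated with $\lambda = 0$. Because $R$ is proportional to $\lambda$, the minimizing $x$ is then the eigenvector associated with the second smallest eigenvalue of $L$: $u_2$.

The (real) solution is then

$$s_R = x + \frac{n_1 - n_2}{N} \mathbf{1}.$$

The closest binary solution is obtained by maximizing the scalar product $s^T s_R$.

The optimum is achieved by assigning $s_i = +1$ to the $n_1$ vertices with the largest $x_i + (n_1 - n_2)/N$ and $s_i = -1$ to the other vertices.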
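The rounding rule above can be sketched as follows. This is an illustrative implementation under two assumptions that are not stated on the slides: the function name `spectral_bisection` is hypothetical, and both signs of $u_2$ are tried because the sign of an eigenvector is arbitrary. Adding the constant $(n_1 - n_2)/N$ does not change the ordering of the entries, so the code sorts $u_2$ directly.

```python
import numpy as np

def spectral_bisection(A, n1):
    """Label +1 the n1 vertices with the largest entries of u2 (both signs tried)."""
    A = np.asarray(A, dtype=float)
    N = A.shape[0]
    L = np.diag(A.sum(axis=1)) - A
    eigvals, eigvecs = np.linalg.eigh(L)   # eigenvalues returned in ascending order
    u2 = eigvecs[:, 1]                     # eigenvector of the second smallest eigenvalue
    best_R, best_s = np.inf, None
    for sign in (1.0, -1.0):
        order = np.argsort(-sign * u2)     # vertex indices by decreasing sign * u2
        s = -np.ones(N)
        s[order[:n1]] = 1.0
        R = 0.25 * s @ L @ s               # cut size of the rounded solution
        if R < best_R:
            best_R, best_s = R, s
    return best_R, best_s, eigvals[1]

# Hypothetical toy graph: triangles (0,1,2) and (3,4,5) joined by the edge 2-3.
edges = [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (2, 3)]
A = np.zeros((6, 6))
for i, j in edges:
    A[i, j] = A[j, i] = 1.0

best_R, best_s, lam2 = spectral_bisection(A, n1=3)
bound = 3 * 3 * lam2 / 6                   # relaxed optimum n1 * n2 * lambda_2 / N
print(best_R, best_s, bound)
```

The quantity `bound` is the optimum of the relaxed problem, so it can never exceed the cut size of any binary split with the same $n_1$ and $n_2$; the gap between the two indicates how much is lost by rounding.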


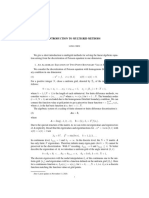

Example (figure): $u_2$


Clustering: Graph partitioning, extension to K clusters

$$\mathrm{cut}(A_1, \ldots, A_K) := \frac{1}{2} \sum_{i=1}^{K} W(A_i, \bar{A}_i)$$

with

$$W(A, B) := \sum_{i \in A} \sum_{j \in B} a_{ij},$$

where $\bar{A}_i$ denotes the complement of $A_i$.

Minimizing the cut does not prevent solutions where the partition simply separates one individual vertex from the rest of the graph.

To avoid this undesired solution, it is useful to normalize the cut by the cluster sizes, which gives the RatioCut:

$$\mathrm{RatioCut}(A_1, \ldots, A_K) := \frac{1}{2} \sum_{i=1}^{K} \frac{W(A_i, \bar{A}_i)}{|A_i|} = \sum_{i=1}^{K} \frac{\mathrm{cut}(A_i, \bar{A}_i)}{|A_i|}$$

Unfortunately, minimizing RatioCut is NP-hard!
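A short sketch can show why the size normalization matters. It implements the first form of each definition above, with the factor $1/2$; the function names and the toy graph (two triangles, one bridge, one pendant vertex) are assumptions made for the example.

```python
import numpy as np

def W(A, rows, cols):
    """W(A, B): sum of the weights a_ij with i in A and j in B."""
    return A[np.ix_(rows, cols)].sum()

def cut_and_ratio_cut(A, clusters):
    """Return (cut, RatioCut) of a partition given as a list of vertex lists."""
    N = A.shape[0]
    cut, ratio = 0.0, 0.0
    for Ai in clusters:
        complement = [v for v in range(N) if v not in Ai]
        w = W(A, list(Ai), complement)
        cut += 0.5 * w
        ratio += 0.5 * w / len(Ai)
    return cut, ratio

# Hypothetical graph: triangles (0,1,2) and (3,4,5), bridge 2-3, pendant vertex 6 on node 0.
edges = [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (2, 3), (0, 6)]
A = np.zeros((7, 7))
for i, j in edges:
    A[i, j] = A[j, i] = 1.0

cut_single, rc_single = cut_and_ratio_cut(A, [[6], [0, 1, 2, 3, 4, 5]])
cut_bal, rc_bal = cut_and_ratio_cut(A, [[0, 1, 2, 6], [3, 4, 5]])
print(cut_single, rc_single)
print(cut_bal, rc_bal)
```

Both partitions remove a single edge, so the plain cut cannot tell them apart, while RatioCut is smaller for the split that keeps the two groups of comparable size.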


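Clustering: Relaxed minimum RatioCut (K=2)

Problem:

$$\min_{A \subset V} \mathrm{RatioCut}(A, \bar{A})$$

Introducing

$$x_i = \begin{cases} \sqrt{|\bar{A}|/|A|}, & \text{if } i \in A \\ -\sqrt{|A|/|\bar{A}|}, & \text{if } i \in \bar{A} \end{cases} \tag{1}$$

such that

$$x^T L x = \frac{1}{2} \sum_{i,j=1}^{n} a_{ij} (x_i - x_j)^2 = \ldots = |V| \cdot \mathrm{RatioCut}(A, \bar{A})$$

$$\sum_{i=1}^{n} x_i = \mathbf{1}^T x = 0, \qquad \sum_{i=1}^{n} x_i^2 = \|x\|^2 = n,$$

the partition problem can be formulated as

$$\min_{A \subset V} x^T L x, \quad \text{subject to } x \perp \mathbf{1}, \; \|x\|^2 = n \text{ and (1)}$$

This problem is still NP-hard.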
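The properties of the vector in (1) are easy to verify numerically. In the sketch below the helper name and the toy graph are hypothetical, and the quadratic form is compared with $|V| \, W(A, \bar{A}) \, (1/|A| + 1/|\bar{A}|)$, written directly in terms of $W$ so that the check does not depend on how the factor $1/2$ in the cut is counted.

```python
import numpy as np

def ratio_cut_indicator(N, A_set):
    """Vector x of definition (1) for the subset A_set of {0, ..., N-1}."""
    A_set = sorted(A_set)
    nA, nB = len(A_set), N - len(A_set)
    x = np.full(N, -np.sqrt(nA / nB))   # entries for vertices in the complement
    x[A_set] = np.sqrt(nB / nA)         # entries for vertices in A
    return x

# Hypothetical toy graph: triangles (0,1,2) and (3,4,5) joined by the edge 2-3.
edges = [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (2, 3)]
N = 6
A = np.zeros((N, N))
for i, j in edges:
    A[i, j] = A[j, i] = 1.0
L = np.diag(A.sum(axis=1)) - A

A_set = [0, 1]
B_set = [v for v in range(N) if v not in A_set]
x = ratio_cut_indicator(N, A_set)

quad = x @ L @ x                                    # x^T L x
w = A[np.ix_(A_set, B_set)].sum()                   # W(A, complement of A)
expected = N * w * (1.0 / len(A_set) + 1.0 / len(B_set))
print(x.sum(), x @ x, quad, expected)
```

The first two printed values confirm the constraints $x \perp \mathbf{1}$ and $\|x\|^2 = n$, which are the only ones kept once the discreteness condition is dropped.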


The problem can be relaxed by removing the discreteness condition (1) on $x$.

The solution of the relaxed problem is given by the eigenvector associated with the second smallest eigenvalue of $L$.

Generalization to the K-cluster case

Introducing the matrix $H \in \mathbb{R}^{n \times k}$ whose coefficients are

$$h_{i,j} = \begin{cases} \dfrac{1}{\sqrt{|A_j|}}, & \text{if } v_i \in A_j \\ 0, & \text{otherwise} \end{cases} \qquad i = 1, \ldots, n; \; j = 1, \ldots, k$$

we can write

$$\mathrm{RatioCut}(A_1, \ldots, A_k) = \sum_{i=1}^{k} h_i^T L h_i = \mathrm{Tr}(H^T L H),$$

where $h_i$ denotes the $i$-th column of $H$.

The relaxed problem becomes

$$\min_{H \in \mathbb{R}^{n \times k}} \mathrm{Tr}(H^T L H), \quad \text{subject to } H^T H = I$$

The solution is given by the $k$ eigenvectors associated with the $k$ smallest eigenvalues of $L$.
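As a sanity check on the relaxation, the sketch below builds $H$ for a given partition, verifies $H^T H = I$, and compares $\mathrm{Tr}(H^T L H)$ with the relaxed optimum, that is, the sum of the $k$ smallest eigenvalues of $L$. The function name and the toy graph (three triangles in a chain) are assumptions for the example.

```python
import numpy as np

def indicator_matrix(N, clusters):
    """Matrix H with h_ij = 1/sqrt(|A_j|) if vertex i belongs to A_j, else 0."""
    H = np.zeros((N, len(clusters)))
    for j, Aj in enumerate(clusters):
        H[list(Aj), j] = 1.0 / np.sqrt(len(Aj))
    return H

# Hypothetical graph: triangles (0,1,2), (3,4,5), (6,7,8) chained by edges 2-3 and 5-6.
edges = [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5),
         (6, 7), (6, 8), (7, 8), (2, 3), (5, 6)]
N, k = 9, 3
A = np.zeros((N, N))
for i, j in edges:
    A[i, j] = A[j, i] = 1.0
L = np.diag(A.sum(axis=1)) - A

H = indicator_matrix(N, [[0, 1, 2], [3, 4, 5], [6, 7, 8]])
trace_H = np.trace(H.T @ L @ H)            # objective for the discrete partition

eigvals, eigvecs = np.linalg.eigh(L)       # ascending eigenvalues
U = eigvecs[:, :k]                         # relaxed minimizer: first k eigenvectors
relaxed = np.trace(U.T @ L @ U)            # equals the sum of the k smallest eigenvalues
print(trace_H, relaxed)
```

Because every indicator matrix satisfies $H^T H = I$, the relaxed value is a lower bound on the objective of any actual partition; the columns of `U` are in general not of the indicator form, which is why a further rounding step is needed.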


Clustering algorithm [1]

[1] Ulrike von Luxburg, “A tutorial on spectral clustering”
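The algorithm box from the slide is not reproduced here. As a rough sketch, the unnormalized spectral clustering recipe described in [1] computes the Laplacian, takes the first $k$ eigenvectors as columns of a matrix $U$, and runs k-means on the rows of $U$. Whether the slide showed this variant or a normalized one is not known; the small `kmeans` helper with a farthest-point initialization, the function names, and the weighted toy graph are all assumptions of this example.

```python
import numpy as np

def kmeans(X, k, n_iter=100):
    """Plain Lloyd iterations with a deterministic farthest-point initialization."""
    centers = [X[0]]
    for _ in range(1, k):
        # squared distance of every point to its closest already-chosen center
        d = np.min([np.sum((X - c) ** 2, axis=1) for c in centers], axis=0)
        centers.append(X[np.argmax(d)])
    centers = np.array(centers, dtype=float)
    for _ in range(n_iter):
        dist = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dist.argmin(axis=1)
        new_centers = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels

def spectral_clustering(A, k):
    """Unnormalized spectral clustering: k-means on the rows of the first k eigenvectors."""
    A = np.asarray(A, dtype=float)
    L = np.diag(A.sum(axis=1)) - A
    _, eigvecs = np.linalg.eigh(L)     # ascending eigenvalues
    U = eigvecs[:, :k]                 # row i is the spectral embedding of vertex i
    return kmeans(U, k)

# Hypothetical weighted graph: three triangles joined by weak bridges of weight 0.05.
strong = [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (6, 7), (6, 8), (7, 8)]
weak = [(2, 3), (5, 6)]
A = np.zeros((9, 9))
for i, j in strong:
    A[i, j] = A[j, i] = 1.0
for i, j in weak:
    A[i, j] = A[j, i] = 0.05

labels = spectral_clustering(A, k=3)
print(labels)
```

The numeric label assigned to each group is arbitrary; only the grouping of the vertices is meaningful.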


Selection of number of clusters

The number of clusters can be estimated by selecting the index $i$ such that the eigenvalue gap $g_i = \lambda_{i+1} - \lambda_i$ is sufficiently larger than the previous gaps.
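A minimal sketch of this heuristic is given below. The slide's criterion ("sufficiently larger than the previous gaps") is replaced here by a simpler stand-in, namely picking the largest gap among the first `max_k` eigenvalues; that rule, the function name, and the toy graph are assumptions of the example rather than the method used for the numerical example.

```python
import numpy as np

def estimate_num_clusters(A, max_k=None):
    """Return (k, gaps), with k the 1-based index i of the largest gap lambda_{i+1} - lambda_i."""
    A = np.asarray(A, dtype=float)
    L = np.diag(A.sum(axis=1)) - A
    eigvals = np.linalg.eigvalsh(L)        # ascending eigenvalues lambda_1 <= lambda_2 <= ...
    if max_k is not None:
        eigvals = eigvals[:max_k + 1]      # keep enough eigenvalues to form max_k gaps
    gaps = np.diff(eigvals)                # gaps[i - 1] = lambda_{i+1} - lambda_i
    k = int(np.argmax(gaps)) + 1
    return k, gaps

# Hypothetical weighted graph: three triangles joined by weak bridges of weight 0.05.
strong = [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (6, 7), (6, 8), (7, 8)]
weak = [(2, 3), (5, 6)]
A = np.zeros((9, 9))
for i, j in strong:
    A[i, j] = A[j, i] = 1.0
for i, j in weak:
    A[i, j] = A[j, i] = 0.05

k_est, gaps = estimate_num_clusters(A, max_k=6)
print(k_est, gaps)
```

With well-separated groups the first $k$ eigenvalues stay close to zero and the jump to $\lambda_{k+1}$ dominates the other gaps; on noisier graphs the gaps are less clear-cut and the estimate becomes correspondingly less reliable.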

Numerical example

(the graph has been obtained from the data by building the 10-nearest neighbor graph) [1]


References

1. Ulrike von Luxburg, “A tutorial on spectral clustering”
