1.4 Understanding Instrument Specifications: 1.4.1 Definition of Accuracy Terms

Uploaded by Arshad Amin

The document discusses instrument specifications, defining terms related to accuracy such as sensitivity, resolution, repeatability, reproducibility, absolute accuracy, and relative accuracy. It notes that while instrument accuracy is very important, other specifications to consider include noise, deratings, and speed. Precision refers to freedom from uncertainty in a measurement.

Copyright:

© All Rights Reserved

Available Formats

Download as PDF, TXT or read online from Scribd

You might also like

- Platelet Count Slide MethodDocument17 pagesPlatelet Count Slide MethodPrecious Yvanne PanagaNo ratings yet

- DBT Visual Review Flash CardDocument14 pagesDBT Visual Review Flash CardBeyza Gül89% (9)

- 1 - Participate in Workplace CommunicaitonDocument38 pages1 - Participate in Workplace CommunicaitonSahlee Rose Falcunit GacaNo ratings yet

- Play Therapy (Report Outline)Document4 pagesPlay Therapy (Report Outline)Jan Michael SuarezNo ratings yet

- Accuracy and RepeatabilityDocument61 pagesAccuracy and Repeatabilityjustin cardinalNo ratings yet

- 1.4 System Accuracy PDFDocument8 pages1.4 System Accuracy PDFRavi Chandra PenumartiNo ratings yet

- Measurements - Specifications - Indicators - BiomarkersDocument33 pagesMeasurements - Specifications - Indicators - Biomarkersdilek_uçarNo ratings yet

- Module 4 Full NotesDocument33 pagesModule 4 Full NotesAskar BashaNo ratings yet

- Basic Principles of Engineering MetrologyDocument25 pagesBasic Principles of Engineering MetrologyRay DebashishNo ratings yet

- Unit 1: Generalized Measurement SystemDocument51 pagesUnit 1: Generalized Measurement Systemanadinath sharmaNo ratings yet

- MET307 M4 Ktunotes.inDocument34 pagesMET307 M4 Ktunotes.inSURYA R SURESHNo ratings yet

- Lecture 2 - Characteristics of MSDocument33 pagesLecture 2 - Characteristics of MSSceva AquilaNo ratings yet

- PhysicsloveDocument6 pagesPhysicsloveJean Tañala BagueNo ratings yet

- Combine PDFDocument204 pagesCombine PDFsridevi73No ratings yet

- Generalized CharacteristicsDocument13 pagesGeneralized CharacteristicspvpriyaNo ratings yet

- Metrology Terminology Accuracy Precision ResolutionDocument2 pagesMetrology Terminology Accuracy Precision ResolutionQuality controllerNo ratings yet

- Metrology Uniit IDocument44 pagesMetrology Uniit Irramesh2k8712No ratings yet

- A Closer Look at Precision of Measuring and Test EquipmentDocument3 pagesA Closer Look at Precision of Measuring and Test EquipmentGonzalo VargasNo ratings yet

- Metrology - Book - Hari PDFDocument212 pagesMetrology - Book - Hari PDFKallol ChakrabortyNo ratings yet

- Basic Engineering Measurement AGE 2310Document30 pagesBasic Engineering Measurement AGE 2310ahmed gamalNo ratings yet

- How Accurate Is Accurate Parts 1-2-3Document10 pagesHow Accurate Is Accurate Parts 1-2-3wlmostiaNo ratings yet

- Terminology Used in Instrument Accuracy: Rick Williams Rawson & Co., IncDocument6 pagesTerminology Used in Instrument Accuracy: Rick Williams Rawson & Co., Incjulpian tulusNo ratings yet

- Where: - Absolute Error - Expected Value - Measured ValueDocument2 pagesWhere: - Absolute Error - Expected Value - Measured ValueJaymilyn Rodas SantosNo ratings yet

- Accuracy and PrecisionDocument4 pagesAccuracy and PrecisionashokNo ratings yet

- Metrology-and-Measurements-Notes MCQDocument10 pagesMetrology-and-Measurements-Notes MCQUjjwal kecNo ratings yet

- Measurement and Metrology NotesDocument18 pagesMeasurement and Metrology NotesAnything You Could ImagineNo ratings yet

- Electronic Measurements and Measuring InstrumentsDocument45 pagesElectronic Measurements and Measuring InstrumentsNandan PatelNo ratings yet

- CompactDocument6 pagesCompactzyrusgaming11No ratings yet

- Accuracy, PrecisionDocument4 pagesAccuracy, PrecisionfatimasulaimanishereNo ratings yet

- Humidity Eguide B211616ENDocument22 pagesHumidity Eguide B211616ENleonardo sarrazolaNo ratings yet

- Lecture 2Document4 pagesLecture 2Sherif SaidNo ratings yet

- Mechanical Measurement Unit-1 Two MarkDocument3 pagesMechanical Measurement Unit-1 Two MarkmanikandanNo ratings yet

- Ele312 Mod 1bDocument3 pagesEle312 Mod 1bOGUNNIYI FAVOURNo ratings yet

- Module-I Concept of MeasurementDocument27 pagesModule-I Concept of MeasurementSumanth ChintuNo ratings yet

- Kmu Kym437 Hafta 5 6Document25 pagesKmu Kym437 Hafta 5 6Berkay TatlıNo ratings yet

- 4 Errors and SamplingDocument7 pages4 Errors and SamplingJonathan MusongNo ratings yet

- The Fundamentals of Pressure CalibrationDocument20 pagesThe Fundamentals of Pressure CalibrationMaria Isabel LadinoNo ratings yet

- 6 Static Characteristics of Measuring InstrumentsDocument5 pages6 Static Characteristics of Measuring InstrumentsAmit Kr GodaraNo ratings yet

- TD DC Low Frequency MetrologyDocument4 pagesTD DC Low Frequency Metrologyfrancisco monsivaisNo ratings yet

- CC Quality Control TransesDocument6 pagesCC Quality Control TransesJohanna Rose Cobacha SalvediaNo ratings yet

- MPE 352 Instrumentation Course NotesDocument63 pagesMPE 352 Instrumentation Course NotesKing EdwinNo ratings yet

- Short and Long QuestionsDocument14 pagesShort and Long Questionsdream breakerNo ratings yet

- M Systems: Rinciples of Easurement YstemsDocument10 pagesM Systems: Rinciples of Easurement YstemsAnkit KumarNo ratings yet

- I. Measurement System Analysis Part 1 II. Measurement System Analysis Part 2Document9 pagesI. Measurement System Analysis Part 1 II. Measurement System Analysis Part 2ShobinNo ratings yet

- Instrumentation Chapter 2 and 3Document6 pagesInstrumentation Chapter 2 and 3Гарпия Сова100% (1)

- Instruments and Measurement Systems - 1Document34 pagesInstruments and Measurement Systems - 1mubanga20000804No ratings yet

- MSA Presentation by M Negi 31.01.09Document76 pagesMSA Presentation by M Negi 31.01.09Mahendra100% (2)

- General Physics Module 1 & 2Document2 pagesGeneral Physics Module 1 & 2Claire VillaminNo ratings yet

- Alsadik. Error. Precision. Exactitud. Confiabilidad. p.7-11.2019Document5 pagesAlsadik. Error. Precision. Exactitud. Confiabilidad. p.7-11.2019Julio BringasNo ratings yet

- Accuracy and PrecisionDocument3 pagesAccuracy and PrecisionRaghuveerprasad IppiliNo ratings yet

- Metrology and MeasurementsDocument14 pagesMetrology and MeasurementsCrazy VibesNo ratings yet

- Teacher Resource Bank: GCE Physics A Other Guidance: Glossary of TermsDocument4 pagesTeacher Resource Bank: GCE Physics A Other Guidance: Glossary of TermsSammuel JacksonNo ratings yet

- Measurement (KWiki - Ch1 - Error Analysis)Document14 pagesMeasurement (KWiki - Ch1 - Error Analysis)madivala nagarajaNo ratings yet

- Physics Lesson 2Document1 pagePhysics Lesson 2shenNo ratings yet

- Measurement System Analysis - 2Document3 pagesMeasurement System Analysis - 2Gustavo Balarezo InumaNo ratings yet

- Measurement Errors UNIT IDocument11 pagesMeasurement Errors UNIT ISwati DixitNo ratings yet

- Terminology Used in Instrument AccuracyDocument6 pagesTerminology Used in Instrument Accuracyinfo6043No ratings yet

- Accuracy and PrecisionDocument4 pagesAccuracy and PrecisionbismashakilsNo ratings yet

- Chapter Two Static XtcsDocument26 pagesChapter Two Static XtcsAgirma girmaNo ratings yet

- SPR 1202 Engineering Metrology: Technical TermsDocument23 pagesSPR 1202 Engineering Metrology: Technical TermsBHOOMINo ratings yet

- SEM 3characteristics of Transducers or SensorsDocument10 pagesSEM 3characteristics of Transducers or Sensorsasnaph9No ratings yet

- Ego Resilience ScaleDocument2 pagesEgo Resilience ScalejyoNo ratings yet

- SHS Hope IvDocument4 pagesSHS Hope IvMacapagal Andrea Nicole DayritNo ratings yet

- Biopsychosocial Model in Depression Revisited: Mauro Garcia-Toro, Iratxe AguirreDocument9 pagesBiopsychosocial Model in Depression Revisited: Mauro Garcia-Toro, Iratxe AguirreayannaxemNo ratings yet

- The Effect of Weekly Training Load Across A Competitive Microcycle On Contextual Variables in Professional SoccerDocument10 pagesThe Effect of Weekly Training Load Across A Competitive Microcycle On Contextual Variables in Professional SoccerAmin CherbitiNo ratings yet

- MorbiliDocument30 pagesMorbiliMjNo ratings yet

- CPR Salbutamol+Ipratropium Neb (BRODIX PLUS) 35'sDocument2 pagesCPR Salbutamol+Ipratropium Neb (BRODIX PLUS) 35'sRacquel SolivenNo ratings yet

- Research in Primary Dental Care 1 PDFDocument4 pagesResearch in Primary Dental Care 1 PDFMARIA NAENo ratings yet

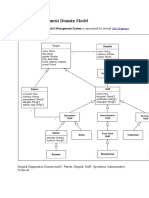

- Hospital Management Domain ModelDocument4 pagesHospital Management Domain Modelvinod kapateNo ratings yet

- ICN Framework of Disaster NursingDocument18 pagesICN Framework of Disaster NursingKRIZIA ANE A. SULONG100% (1)

- Lesson 4 Pe 1Document11 pagesLesson 4 Pe 1Maya RosarioNo ratings yet

- Editted Letter For Practice Clerks and InternsDocument1 pageEditted Letter For Practice Clerks and InternsPaul Rizel LedesmaNo ratings yet

- Handbook HealthDocument79 pagesHandbook HealthVadhan PrasannaNo ratings yet

- Msds FCC CatalystDocument8 pagesMsds FCC CatalystciclointermedioNo ratings yet

- Physical Exercise and Executive Functions in Preadolescent Children, Adolescents and Young Adults - A Meta-AnalysisDocument9 pagesPhysical Exercise and Executive Functions in Preadolescent Children, Adolescents and Young Adults - A Meta-AnalysisPedro J. Conesa CerveraNo ratings yet

- 6 - Breath SoundsDocument31 pages6 - Breath SoundsggNo ratings yet

- Midterm Quiz#1Document6 pagesMidterm Quiz#1AIRINI MAY L. GUEVARRANo ratings yet

- ResumDocument11 pagesResummmmmder7No ratings yet

- Management Is Nothing More Than Motivating Other People GHEORGHE MDocument6 pagesManagement Is Nothing More Than Motivating Other People GHEORGHE MCristina PaliuNo ratings yet

- Septic Shock: Current Management and New Therapeutic FrontiersDocument77 pagesSeptic Shock: Current Management and New Therapeutic Frontiersamal.fathullahNo ratings yet

- Chapter 1 The Human OrganismDocument18 pagesChapter 1 The Human OrganismEuniece AnicocheNo ratings yet

- PCX - Report For Reduction of Heavy Metals in BoreholeDocument32 pagesPCX - Report For Reduction of Heavy Metals in BoreholeUtibe Tibz IkpembeNo ratings yet

- Module 5 Notebook Individual Service PlanDocument44 pagesModule 5 Notebook Individual Service PlanAung Tun100% (1)

- Antibiotic Prophylaxis OrthoDocument4 pagesAntibiotic Prophylaxis OrthoDonNo ratings yet

- 1 s2.0 S2212443823000371 MainDocument11 pages1 s2.0 S2212443823000371 MainMirza GlusacNo ratings yet

- $1-5 Donations Benefits - Medical Study ZoneDocument63 pages$1-5 Donations Benefits - Medical Study ZoneVishal KumarNo ratings yet

- Hospital List-MD INDIA HealthcareDocument288 pagesHospital List-MD INDIA HealthcarePankaj67% (12)

1.

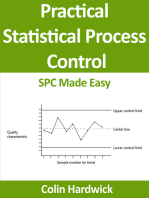

4 Understanding Instrument Specifications

Knowing how to interpret instrument specifications properly is an important aspect of making good low-level measurements. Although instrument accuracy is probably the most important of these specifications, there are several other factors to consider when reviewing specifications, including noise, deratings, and speed.

1.4.1 Definition of Accuracy Terms

This section defines a number of terms related to instrument accuracy. Some of these terms are further discussed in subsequent paragraphs. Table 1-1 summarizes conversion factors for various specifications associated with instruments.

SENSITIVITY - the smallest change in the signal that can be detected.

RESOLUTION - the smallest portion of the signal that can be observed.

REPEATABILITY - the closeness of agreement between successive measurements carried out under the same conditions.

REPRODUCIBILITY - the closeness of agreement between measurements of the same quantity carried out with a stated change in conditions.

ABSOLUTE ACCURACY - the closeness of agreement between the result of a measurement and its true value or accepted standard value. Accuracy is often separated into gain and offset terms.

RELATIVE ACCURACY - the extent to which a measurement accurately reflects the relationship between an unknown and a reference value.

ERROR - the deviation (difference or ratio) of a measurement from its true value. Note that true values are by their nature indeterminate.

RANDOM ERROR - error that varies unpredictably from one measurement to the next. The mean of a large number of measurements influenced by random error matches the true value.

SYSTEMATIC ERROR - error that stays constant or varies predictably across measurements. The mean of a large number of measurements influenced by systematic error deviates from the true value.

UNCERTAINTY - an estimate of the possible error in a measurement, i.e., the estimated possible deviation from its actual value. This is the opposite of accuracy.
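The ABSOLUTE ACCURACY entry above notes that accuracy is often separated into gain and offset terms. The following is a minimal sketch of how such a two-term specification might be evaluated, assuming the common form ±(percentage of reading + fixed offset). The function names and the numeric values (0.05% of reading, 0.002 V offset, a 1.5 V reading) are hypothetical and are not taken from any instrument data sheet.

```python
def accuracy_limit(reading, gain_pct, offset):
    """Worst-case error bound for a spec of the assumed form
    +/-(gain_pct % of reading + offset), in the units of the reading."""
    return abs(reading) * gain_pct / 100.0 + offset


def within_spec(reading, reference, gain_pct, offset):
    """True if the reading agrees with the accepted reference value
    to within the bound computed by accuracy_limit."""
    return abs(reading - reference) <= accuracy_limit(reading, gain_pct, offset)


# Hypothetical example values (assumptions for illustration only).
limit = accuracy_limit(reading=1.5, gain_pct=0.05, offset=0.002)
ok = within_spec(reading=1.5, reference=1.501, gain_pct=0.05, offset=0.002)
bad = within_spec(reading=1.5, reference=1.510, gain_pct=0.05, offset=0.002)
```

The gain term scales with the size of the reading, while the offset term is a fixed floor, so the offset dominates the bound for small readings.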

“Precision” is a more qualitative term than many of those defined here. It refers to the freedom from uncertainty in the measurement. It’s often applied in the context of repeatability or reproducibility, but it shouldn’t be used in place of “accuracy.”
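The distinction between precision and accuracy, and between random and systematic error as defined earlier, can be illustrated with a small simulation. This is only a sketch under stated assumptions: random error is modeled as zero-mean Gaussian noise, systematic error as a constant offset, and all names and values (a 1.0 true value, a 0.2 offset, a 0.05 noise level) are hypothetical.

```python
import random
import statistics


def simulate(true_value, systematic_offset, noise_sd, n, seed=0):
    """Generate n readings with an assumed constant systematic offset
    and zero-mean Gaussian random error of standard deviation noise_sd."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    return [true_value + systematic_offset + rng.gauss(0.0, noise_sd)
            for _ in range(n)]


def summarize(readings, true_value):
    """Return the mean, the bias (mean minus true value), and the spread
    (sample standard deviation, a rough stand-in for repeatability)."""
    mean = statistics.mean(readings)
    return {
        "mean": mean,
        "bias": mean - true_value,
        "spread": statistics.stdev(readings),
    }


TRUE_VALUE = 1.0  # hypothetical; real true values are indeterminate

# Random error only: the mean approaches the true value.
random_only = summarize(simulate(TRUE_VALUE, 0.0, 0.05, 10_000), TRUE_VALUE)

# Same random error plus a systematic offset: the spread is unchanged,
# but the mean deviates from the true value by roughly the offset.
with_offset = summarize(simulate(TRUE_VALUE, 0.2, 0.05, 10_000), TRUE_VALUE)
```

Both runs show a similar spread, so they are equally repeatable, yet only the first has a mean close to the true value. Averaging more readings reduces the effect of random error but does nothing to remove the offset.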

1.4.2 Accuracy

One of the most important considerations in any measurement situation is reading accuracy. For any given test setup, a number of factors can affect accuracy. The most important factor is the accuracy of the instrument itself, which may be specified in several ways, including a percentage of full scale,
