ME 781 Statistical Machine Learning and Data Mining - Outline

This document outlines a course on machine learning that covers topics such as regression, classification, clustering, dimensionality reduction, deep learning, and big data. It introduces fundamental machine learning concepts like supervised vs unsupervised learning, and also covers algorithms like logistic regression, decision trees, support vector machines, neural networks, and time series analysis. Mathematical preliminaries like probability, statistics, and linear algebra are reviewed. Model evaluation, feature selection, and methods for addressing problems like overfitting are also discussed.

Original Description:

Copyright

© © All Rights Reserved

Available Formats

PDF, TXT or read online from Scribd

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentThis document outlines a course on machine learning that covers topics such as regression, classification, clustering, dimensionality reduction, deep learning, and big data. It introduces fundamental machine learning concepts like supervised vs unsupervised learning, and also covers algorithms like logistic regression, decision trees, support vector machines, neural networks, and time series analysis. Mathematical preliminaries like probability, statistics, and linear algebra are reviewed. Model evaluation, feature selection, and methods for addressing problems like overfitting are also discussed.

Copyright:

© All Rights Reserved

Available Formats

Download as PDF, TXT or read online from Scribd

Download as pdf or txt

0 ratings0% found this document useful (0 votes)

97 views2 pagesME 781 Statistical Machine Learning and Data Mining-Outline

ME 781 Statistical Machine Learning and Data Mining-Outline

Uploaded by

Scion Of VirikvasThis document outlines a course on machine learning that covers topics such as regression, classification, clustering, dimensionality reduction, deep learning, and big data. It introduces fundamental machine learning concepts like supervised vs unsupervised learning, and also covers algorithms like logistic regression, decision trees, support vector machines, neural networks, and time series analysis. Mathematical preliminaries like probability, statistics, and linear algebra are reviewed. Model evaluation, feature selection, and methods for addressing problems like overfitting are also discussed.

Copyright:

© All Rights Reserved

Available Formats

Download as PDF, TXT or read online from Scribd

Download as pdf or txt

You are on page 1of 2

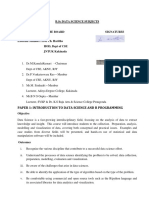

1. Introduction to Machine Learning

1.1. Types of Learning Algorithms - Supervised, Unsupervised, Reinforcement Learning

1.2. Modeling Basics - Dependent and Independent Variables

1.3. Data Type and Data Scale

2. Mathematical preliminaries

2.1. Set theory

2.2. Elementary Probability and Statistics

2.3. Linear algebra for machine learning

2.4. Dissimilarity and similarity measures

3. Regression - I

3.1. Simple Linear Regression - Estimating the Coefficients

3.2. Assessing the Accuracy of the Coefficient Estimates

3.3. Concept of t-statistic and p-value

4. Regression – II

4.1. Multiple Linear Regression

4.2. Hypothesis testing in multiple linear regression (F-statistic)

4.3. Incorporating Qualitative Variables in Modeling

4.4. Non-linear regression

4.5. Potential Problems of Linear Regression

5. Regression – III

5.1. k-Nearest Neighbors regression

5.2. Linear Regression vs KNN

5.3. Model optimization - Hyperparameter tuning

6. Assessing Model Accuracy

6.1. Loss functions

6.2. Resampling Methodologies

6.2.1. Cross-Validation (The Wrong and Right Way)

6.2.2. Bootstrap

6.3. Capacity, Overfitting, and Underfitting

6.4. The Bias-Variance Trade-Off

7. Feature selection and regularization

7.1. Subset selection

7.2. Shrinkage Methods (Ridge and Lasso Regression)

7.3. High dimensional data and the curse of dimensionality

7.4. Dimensionality Reduction (PCA)

8. Non-linear models

8.1. Polynomial regression

8.2. Step functions

8.3. Splines and local regression

8.4. Generalized additive models

9. Classification – I

9.1. Simple Logistic Regression - Basic assumptions, evaluating model performance, performing predictions

9.2. Multiple Logistic Regression

9.3. Evaluating the performance of a classifier – ROC Curve, [Precision, Recall], Confusion Matrix [TP, FP, TN, FN]

9.4. Multi-class classification using Logistic Regression

9.5. Linear Discriminant Analysis

9.6. Bayes Classifier

10. Classification – II

10.1. Decision Trees – I

10.2. Classification Trees - Fitting a tree, Performing predictions

10.3. Pruning Trees

10.4. Regression Trees

10.5. Random Forests

11. Classification - III

11.1. Support Vector Theory (Maximal Margin Classifier, SVC, SVM)

11.2. SVM using different kernels - linear, polynomial, radial

11.3. Multi-class SVM

11.4. SVM and Logistic Regression

12. Unsupervised learning

12.1. Association Rules

12.2. k-Means clustering

12.3. Hierarchical clustering

12.4. Self-Organizing Maps

12.5. PCA and Independent Component Analysis

12.6.

13. Universal Approximator

13.1. Projection Pursuit Regression

13.2. Neural Networks

13.3. Forward and Backpropagation Algorithms

13.4. Introduction to Deep Learning (CNN and RNN)

14. Time series analysis

14.1. Time series forecasting

14.2. Introduction to autoregressive and moving average models

14.3. Change-point detection

15. Big Data

15.1. Introduction to big data

15.2. Big data technologies

Reference books:

1. Gareth James, Daniela Witten, Trevor Hastie, Robert Tibshirani: An Introduction to Statistical Learning

2. Trevor Hastie, Robert Tibshirani, Jerome Friedman: The Elements of Statistical Learning: Data Mining, Inference, and Prediction

3. Ian Goodfellow, Yoshua Bengio, Aaron Courville: Deep Learning

4. Kevin P. Murphy: Machine Learning: A Probabilistic Perspective

5. Thomas A. Runkler: Data Analytics: Models and Algorithms for Intelligent Data Analysis
