Sai Kumar Aindla: Education

Uploaded by Harold

This document is a resume for Sai Kumar Aindla. It summarizes his education, work experience, skills, and projects. For education, it lists that he has an MS in Computer Science from UB and a BE in IT from CBIT in India. For work experience, it details internships at ViacomCBS and a research assistant role. It also lists skills in languages, cloud platforms, data warehousing, and blockchain development. It provides brief descriptions of three professional projects and three academic projects involving distributed systems, blockchain, and image classification.

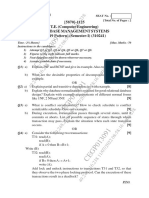

SAI KUMAR AINDLA

saindla@buffalo.edu | +1 (414)231-0622 | [LinkedIn] | [GitHub]

EDUCATION

University at Buffalo (UB) | NY, USA | Aug ‘19 – Dec ‘20

MS in Computer Science | GPA – 3.7/4 | Analysis & Design of Algorithms | Machine Learning | Distributed Systems | Database Systems | Blockchain Application Development | Data Mining | Seminar on Edge Computing

Chaitanya Bharathi Institute of Technology (CBIT) | Hyderabad, India | Sep ‘14 – Apr ‘18

BE in Information Technology (Computer Science and Electronics) | GPA – 8.5/10

WORK EXPERIENCE

ViacomCBS | Data Engineer Intern | Fort Lauderdale, Florida, USA | June ‘20 – Aug ‘20

o Designed and presented a use case for reducing the difficulty of data discovery and data governance by introducing Google Data Catalog and its features to teams and stakeholders who work with petabytes of data across the organization.

o Deployed data ingestion jobs that load incoming CBS Sports data from multiple ViacomCBS sources into HBase using Spark and Kafka, with Avro as the schema and storage format.

o Improved discovery of Tableau workbook metadata by 15% through detailed analysis of metadata ingestion and data pipelines using the Data Catalog REST API.

o Exceeded expectations by extending an existing production pipeline to integrate with the Data Catalog work I initiated.

Data Intensive Distributed Computing Lab, SUNY Buffalo | Research Assistant | Buffalo, USA | Sep ‘19 – Jan ‘20

o Worked as a front-end developer using React JS on the research project “OneDataShare”, a cloud-hosted service for data scheduling and optimization.

Core Compete Pvt Ltd | Data Engineer | Hyderabad, India | Jul ‘18 – Aug ‘19

o Programmed the data migration from SQL Server and AWS Redshift to the Snowflake data warehouse. Built a pipeline to improve migration and validation performance using AWS RDS, S3, and EC2 Windows and Linux instances.

o Developed complex Hive queries to validate the migration of historical and incremental data between Hadoop HDFS and the Hive data warehouse, cutting validation time from 3 hours to 28 minutes per table.

o Improved anomaly detection by 5% on customer transaction data using Spark, with cumulative analysis based on business rules, then built dashboards for higher-level business analysis.

SKILLS

Languages - Java, Python, C, SQL, Solidity

Familiar Cloud Platforms - AWS, Google Cloud Platform

Data Warehouse & Database - Google BigQuery, Snowflake on AWS, AWS Redshift, Hive, Postgres, MySQL, SQL Server

Web Development - HTML, CSS, JavaScript, jQuery, React JS, Express, REST API

Distributed Programming - Network Socket programming to implement distributed systems algorithms using Java in Android Studio

Software Products - Kafka, Android Studio, Airflow, Spark, Hadoop, TensorFlow, Talend, JIRA, Trello, Tableau, MS Power BI, GitHub

Blockchain Development - Solidity, Truffle Ganache, Remix IDE, MetaMask Ethereum

PROFESSIONAL PROJECTS

Data Governance and Discovery using Google Cloud Data Catalog, [ViacomCBS], [presentation]

o Designed business metadata to identify and make sense of the YouTube analytics data in Google BigQuery using Python API calls.

o Phases included identifying the data to catalog, exploring the existing technical metadata, and creating schematized tags to describe previously unknown data. Created an Airflow DAG to automatically populate the tags of data assets.

o Customized a CI/CD pipeline leveraging the Viacom cloud service and Docker images to drive the Airflow schedulers that automatically populate the tags.

Data lake on AWS using Snowflake, [Core Compete Pvt Ltd]

o Developed an end-to-end ETL pipeline, orchestrated with Airflow, to extract useful information from 100 GB of raw data.

o Functionality involved data staging and transformation using Python and Spark on AWS EMR as well as AWS Glue, storing the transformed data in S3, migrating it from S3 to the fact and dimension tables in Snowflake, and reporting in MS Power BI.

o Transformations covered data cleaning, data integration, and data validation.

ACADEMIC PROJECTS

Total FIFO ordering in Group Messenger (Distributed Android Programming), [code]

o Implemented a decentralized algorithm to multicast messages in Total-FIFO order among 5 Android emulators in the presence of one random instance failure during execution. Followed the ISIS algorithm to achieve total ordering.

o Used network socket programming in Java for communication and an SQLite database to store and retrieve the messages on each instance.

o The implementation handled multiple concurrent sends and receives with at most one failure.

Soccer Club Balloting – Blockchain Web Application, [code]

o A balloting application that helps a soccer club boost fan engagement, built on blockchain technology to prevent voter fraud.

o Implemented as a web-based application with the backend developed in Solidity and the front end using an Express server, JavaScript, jQuery, HTML and Bootstrap. Devised balloting using Ethereum through the MetaMask cryptocurrency wallet and Truffle Ganache.

o The application records each vote on its chain of nodes, and a vote cannot be changed once it is cast.

o Contributed this project to the open-source 2020 GitHub Archive Program.

Image classification using Neural Networks, [code]

o Classified a dataset of 70k sample images by training and testing Neural Networks, Multi-layer Neural Networks and Convolutional Neural Networks. Compared the accuracies of the three classification approaches.

o Achieved the best accuracy, 90%, with the CNN model, compared with the Neural Network with one hidden layer and the MNN.

CERTIFICATIONS

AWS CERTIFIED DEVELOPER – ASSOCIATE | SAS CERTIFIED BASE PROGRAMMER FOR SAS 9
