Cheatsheet Supervised Learning

Uploaded by masda ssasa

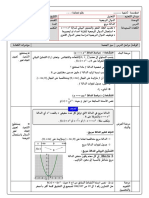

- CS 229 Machine Learning https://stanford.edu/~shervine/l/ar/

Supervised Learning Cheatsheet

Afshine Amidi and Shervine Amidi

14 Rabi' al-Thani 1441 AH

Arabic translation by Fares Al-Qunaieer. Reviewed by Zaid Alyafeai.

Introduction to Supervised Learning

Given a set of data points $\{x^{(1)}, ..., x^{(m)}\}$ associated with a set of outcomes $\{y^{(1)}, ..., y^{(m)}\}$, we want to build a classifier that learns how to predict $y$ from $x$.

Type of prediction – The different types of predictive models are summarized in the table below:

| | Regression | Classification |
|---|---|---|
| Outcome | Continuous | Class |
| Examples | Linear regression | Logistic regression, SVM, Naive Bayes |

Notations and general concepts

Hypothesis – The hypothesis, denoted $h_\theta$, is the model that we choose. For a given input $x^{(i)}$, the output predicted by the model is $h_\theta(x^{(i)})$.

Loss function – A loss function is a function $L : (z,y) \in \mathbb{R} \times Y \longmapsto L(z,y) \in \mathbb{R}$ that takes as inputs the predicted value $z$ and the true value $y$ and returns how different they are. The most common loss functions are summarized in the table below:

| Least squared error | Logistic loss | Hinge loss | Cross-entropy |
|---|---|---|---|
| $\frac{1}{2}(y - z)^2$ | $\log(1 + \exp(-yz))$ | $\max(0, 1 - yz)$ | $-\left[y \log(z) + (1 - y) \log(1 - z)\right]$ |
| Linear regression | Logistic regression | SVM | Neural network |

Cost function – The cost function $J$ is commonly used to assess the performance of a model. It is defined with the loss function $L$ as follows:

$$J(\theta) = \sum_{i=1}^{m} L(h_\theta(x^{(i)}), y^{(i)})$$
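As a minimal sketch of the loss table and the cost function above, the losses can be written as plain Python functions. The label conventions ($y \in \{-1, +1\}$ for the logistic and hinge losses, $y \in \{0, 1\}$ for cross-entropy) and the toy hypothesis `h` are assumptions made for this illustration, not part of the cheatsheet.

```python
import math

def least_squared_error(z, y):
    """1/2 (y - z)^2, typically paired with linear regression."""
    return 0.5 * (y - z) ** 2

def logistic_loss(z, y):
    """log(1 + exp(-y z)); assumes labels y in {-1, +1}."""
    return math.log(1.0 + math.exp(-y * z))

def hinge_loss(z, y):
    """max(0, 1 - y z); assumes labels y in {-1, +1}."""
    return max(0.0, 1.0 - y * z)

def cross_entropy(z, y):
    """-[y log z + (1 - y) log(1 - z)]; assumes y in {0, 1} and 0 < z < 1."""
    return -(y * math.log(z) + (1 - y) * math.log(1 - z))

def cost(loss, h, xs, ys):
    """J = sum over the training set of L(h(x_i), y_i)."""
    return sum(loss(h(x), y) for x, y in zip(xs, ys))

# Hypothetical toy hypothesis and data, for illustration only.
h = lambda x: 2.0 * x
xs = [1.0, 2.0, 3.0]
ys = [2.0, 5.0, 6.0]
total = cost(least_squared_error, h, xs, ys)  # only the second point contributes
```

Passing the loss as an argument keeps `cost` identical for every row of the table; only the pairing of loss and hypothesis changes.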

Type of model – The different types of models are summarized in the table below:

| | Discriminative model | Generative model |
|---|---|---|
| Goal | Directly estimate $P(y \mid x)$ | Estimate $P(x \mid y)$, then deduce $P(y \mid x)$ |
| What is learned | Decision boundary | Probability distributions of the data |
| Illustration | (figure omitted) | (figure omitted) |
| Examples | Regressions, SVMs | GDA, Naive Bayes |

Gradient descent – By noting $\alpha \in \mathbb{R}$ the learning rate, the update rule of gradient descent is expressed with the learning rate and the cost function $J$ as follows:

$$\theta \longleftarrow \theta - \alpha \nabla J(\theta)$$
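The update rule $\theta \longleftarrow \theta - \alpha \nabla J(\theta)$ can be sketched in a few lines. The example below assumes a least-squares cost on a tiny hypothetical data set generated from $y = 1 + 2x$; the data, the step count and the value of `alpha` are illustrative choices, not values from the cheatsheet.

```python
def gradient_descent(grad, theta0, alpha, steps):
    """Repeat theta <- theta - alpha * grad(theta) for a fixed number of steps."""
    theta = list(theta0)
    for _ in range(steps):
        g = grad(theta)
        theta = [t - alpha * gi for t, gi in zip(theta, g)]
    return theta

# Hypothetical data generated from y = 1 + 2x.
X = [0.0, 1.0, 2.0, 3.0]
Y = [1.0, 3.0, 5.0, 7.0]

def grad_J(theta):
    """Gradient of J(theta) = sum_i 1/2 (theta_0 + theta_1 x_i - y_i)^2."""
    residuals = [theta[0] + theta[1] * x - y for x, y in zip(X, Y)]
    return [sum(residuals), sum(r * x for r, x in zip(residuals, X))]

theta = gradient_descent(grad_J, [0.0, 0.0], alpha=0.05, steps=2000)
# theta approaches [1.0, 2.0], the intercept and slope used to build the data
```

The learning rate has to be small enough for the iteration to be stable; with this data a value around 0.05 converges, while a much larger one would diverge.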

Stanford University, Fall 2018


Remark: in stochastic gradient descent (SGD), the parameters are updated based on each training example individually, whereas in batch gradient descent they are updated using a batch of training examples.

Likelihood – The likelihood of a model $L(\theta)$, where $\theta$ denotes the parameters, is used to find the optimal parameters $\theta$ by maximizing the likelihood. In practice, the log-likelihood $\ell(\theta) = \log(L(\theta))$ is used, since it is easier to optimize. We have:

$$\theta^{opt} = \underset{\theta}{\arg\max}\; L(\theta)$$

Newton's algorithm – Newton's algorithm is a numerical method that finds $\theta$ such that $\ell'(\theta) = 0$. Its update rule is:

$$\theta \leftarrow \theta - \frac{\ell'(\theta)}{\ell''(\theta)}$$

Remark: the more general multidimensional version, known as the Newton-Raphson method, has the following update rule:

$$\theta \leftarrow \theta - \left(\nabla_\theta^2 \ell(\theta)\right)^{-1} \nabla_\theta \ell(\theta)$$

Classification and logistic regression

Sigmoid function – The sigmoid function $g$, also known as the logistic function, is defined as follows:

$$\forall z \in \mathbb{R}, \quad g(z) = \frac{1}{1 + e^{-z}} \in \; ]0,1[$$

Logistic regression – We assume here that $y|x; \theta \sim \text{Bernoulli}(\phi)$. We have:

$$\phi = p(y = 1|x; \theta) = \frac{1}{1 + \exp(-\theta^T x)} = g(\theta^T x)$$

Remark: there is no closed-form solution for logistic regression.

Softmax regression – Softmax regression, also called multiclass logistic regression, generalizes logistic regression to more than two outcome classes. By convention, we set $\theta_K = 0$, which makes the Bernoulli parameter $\phi_i$ of each class $i$ equal to:

$$\phi_i = \frac{\exp(\theta_i^T x)}{\displaystyle\sum_{j=1}^{K} \exp(\theta_j^T x)}$$
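Since logistic regression has no closed-form solution, the sigmoid, the Bernoulli log-likelihood and the Newton-Raphson update above can be combined into a small fitting routine. This is only a sketch for a two-parameter model (intercept `t0` and slope `t1`, both names chosen here), with the 2x2 Hessian inverted by hand; the toy data is hypothetical and deliberately not linearly separable so that a finite maximizer exists.

```python
import math

def sigmoid(z):
    """g(z) = 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

def log_likelihood_gradient(t0, t1, xs, ys):
    """Gradient of l(theta) = sum_i y_i log p_i + (1 - y_i) log(1 - p_i)."""
    p = [sigmoid(t0 + t1 * x) for x in xs]
    g0 = sum(y - pi for y, pi in zip(ys, p))
    g1 = sum((y - pi) * x for y, pi, x in zip(ys, p, xs))
    return g0, g1, p

def newton_logistic(xs, ys, iters=25):
    """Newton-Raphson: theta <- theta - H^{-1} grad, for theta = (t0, t1)."""
    t0, t1 = 0.0, 0.0
    for _ in range(iters):
        g0, g1, p = log_likelihood_gradient(t0, t1, xs, ys)
        w = [pi * (1.0 - pi) for pi in p]
        # Hessian of the log-likelihood (negative definite)
        h00 = -sum(w)
        h01 = -sum(wi * x for wi, x in zip(w, xs))
        h11 = -sum(wi * x * x for wi, x in zip(w, xs))
        det = h00 * h11 - h01 * h01
        # Solve H d = grad with the explicit 2x2 inverse
        d0 = (h11 * g0 - h01 * g1) / det
        d1 = (-h01 * g0 + h00 * g1) / det
        t0, t1 = t0 - d0, t1 - d1
    return t0, t1

# Hypothetical 1-D data with overlapping classes.
xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
ys = [0, 0, 1, 0, 1, 1]
t0, t1 = newton_logistic(xs, ys)
boundary = -t0 / t1  # point where the predicted probability equals 0.5
```

Because the toy data is symmetric around $x = 2.5$, the fitted decision boundary lands there, which gives a simple sanity check on the update.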


Linear regression

We assume here that $y|x; \theta \sim \mathcal{N}(\mu, \sigma^2)$.

Normal equations – By noting $X$ the design matrix, the value of $\theta$ that minimizes the cost function has the following closed-form solution:

$$\theta = (X^T X)^{-1} X^T y$$

LMS algorithm – By noting $\alpha$ the learning rate, the update rule of the Least Mean Squares (LMS) algorithm for a training set of $m$ data points, also known as the Widrow-Hoff learning rule, is as follows:

$$\forall j, \quad \theta_j \leftarrow \theta_j + \alpha \sum_{i=1}^{m} \left[y^{(i)} - h_\theta(x^{(i)})\right] x_j^{(i)}$$

Remark: this update rule is a particular case of gradient descent.

LWR – Locally Weighted Regression, also known as LWR, is a variant of linear regression that weights each training example in its cost function by $w^{(i)}(x)$, which is defined with a parameter $\tau \in \mathbb{R}$ as follows:

$$w^{(i)}(x) = \exp\left(-\frac{(x^{(i)} - x)^2}{2\tau^2}\right)$$

Generalized Linear Models (GLM)

Exponential family – A class of distributions is said to be in the exponential family if it can be written in terms of a canonical parameter $\eta$, a sufficient statistic $T(y)$ and a log-partition function $a(\eta)$ as follows:

$$p(y; \eta) = b(y) \exp(\eta T(y) - a(\eta))$$

Remark: we will often have $T(y) = y$. Also, $\exp(-a(\eta))$ can be seen as a normalization parameter that makes sure the probabilities sum to one.

The most common exponential distributions are summarized in the table below:

| Distribution | $\eta$ | $T(y)$ | $a(\eta)$ | $b(y)$ |
|---|---|---|---|---|
| Bernoulli | $\log\left(\frac{\phi}{1-\phi}\right)$ | $y$ | $\log(1 + \exp(\eta))$ | $1$ |
| Gaussian | $\mu$ | $y$ | $\frac{\eta^2}{2}$ | $\frac{1}{\sqrt{2\pi}} \exp\left(-\frac{y^2}{2}\right)$ |
| Poisson | $\log(\lambda)$ | $y$ | $e^{\eta}$ | $\frac{1}{y!}$ |
| Geometric | $\log(1 - \phi)$ | $y$ | $\log\left(\frac{e^{\eta}}{1 - e^{\eta}}\right)$ | $1$ |
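The rows of the table can be checked numerically by plugging them into $p(y; \eta) = b(y)\exp(\eta T(y) - a(\eta))$. The sketch below does this for the Bernoulli and Poisson rows; the helper name `exp_family_pmf` and the parameter values `phi = 0.3` and `lam = 2.5` are arbitrary choices made for the illustration.

```python
import math

def exp_family_pmf(y, eta, T, a, b):
    """p(y; eta) = b(y) * exp(eta * T(y) - a(eta))."""
    return b(y) * math.exp(eta * T(y) - a(eta))

identity = lambda y: y  # T(y) = y for every row of the table

# Bernoulli row: eta = log(phi / (1 - phi)), a(eta) = log(1 + e^eta), b(y) = 1
phi = 0.3
eta_bern = math.log(phi / (1.0 - phi))
bern = [
    exp_family_pmf(y, eta_bern, identity,
                   lambda e: math.log(1.0 + math.exp(e)),
                   lambda y: 1.0)
    for y in (0, 1)
]

# Poisson row: eta = log(lambda), a(eta) = e^eta, b(y) = 1 / y!
lam = 2.5
eta_pois = math.log(lam)
pois = [
    exp_family_pmf(y, eta_pois, identity,
                   lambda e: math.exp(e),
                   lambda y: 1.0 / math.factorial(y))
    for y in range(60)
]
```

Here `bern` recovers the familiar probabilities $1 - \phi$ and $\phi$, and `pois` matches $e^{-\lambda}\lambda^y / y!$, with the truncated sum over `range(60)` already very close to one.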


Assumptions of GLMs – Generalized Linear Models (GLM) aim at predicting a random variable $y$ as a function of $x \in \mathbb{R}^{n+1}$ and rely on the following three assumptions:

$$(1) \quad y|x; \theta \sim \text{ExpFamily}(\eta) \qquad (2) \quad h_\theta(x) = E[y|x; \theta] \qquad (3) \quad \eta = \theta^T x$$

Remark: ordinary least squares and logistic regression are special cases of generalized linear models.
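To see why ordinary least squares and logistic regression fall out of the three assumptions, one can use a standard property of exponential families that the cheatsheet does not state: when $T(y) = y$, the mean is the derivative of the log-partition function, $E[y] = a'(\eta)$. The sketch below approximates that derivative with a central difference; the function names, the step `h` and the example vectors are assumptions made for the illustration.

```python
import math

def mean_from_log_partition(a, eta, h=1e-6):
    """Approximate E[y] = a'(eta) by a central difference (assumes T(y) = y)."""
    return (a(eta + h) - a(eta - h)) / (2.0 * h)

def glm_hypothesis(a, theta, x):
    """h_theta(x) = E[y | x; theta] with eta = theta^T x (assumptions 2 and 3)."""
    eta = sum(t * xi for t, xi in zip(theta, x))
    return mean_from_log_partition(a, eta)

# Log-partition functions of the Bernoulli and unit-variance Gaussian families
a_bernoulli = lambda eta: math.log(1.0 + math.exp(eta))
a_gaussian = lambda eta: eta ** 2 / 2.0

theta = [0.5, -1.0, 2.0]   # hypothetical parameters
x = [1.0, 0.2, 0.3]        # x_0 = 1 plays the role of the intercept term
eta = sum(t * xi for t, xi in zip(theta, x))

h_logistic = glm_hypothesis(a_bernoulli, theta, x)  # close to sigmoid(eta)
h_linear = glm_hypothesis(a_gaussian, theta, x)     # close to eta itself
```

With the Bernoulli log-partition function the hypothesis is the sigmoid of $\theta^T x$, and with the Gaussian one it is $\theta^T x$ itself, which are the logistic and linear regression hypotheses.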


Support Vector Machines

The goal of support vector machines (SVM) is to find the line that maximizes the minimum distance to it.

Optimal margin classifier – The optimal margin classifier $h$ is defined as follows:

$$h(x) = \text{sign}(w^T x - b)$$

where $(w, b) \in \mathbb{R}^n \times \mathbb{R}$ is the solution of the following optimization problem:

$$\min \frac{1}{2} \lVert w \rVert^2 \quad \text{such that} \quad y^{(i)}(w^T x^{(i)} - b) \geqslant 1$$

Lagrangian – We define the Lagrangian $\mathcal{L}(w, b)$ as follows:

$$\mathcal{L}(w, b) = f(w) + \sum_{i=1}^{l} \beta_i h_i(w)$$

Remark: the coefficients $\beta_i$ are called the Lagrange multipliers.

Remark: when the cost function is computed through a kernel $K(x, z) = \phi(x)^T \phi(z)$, we say that we use the "kernel trick", because we do not actually need to know the explicit mapping $\phi$, which is often very complicated. Only the values $K(x, z)$ are needed.
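The optimal margin classifier defined above can be illustrated without a solver by checking candidate pairs $(w, b)$ against the constraints and comparing their objective values. The toy points and the two candidates below are hypothetical and were picked by hand; this is a sketch of the definitions, not a training algorithm.

```python
def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

def predict(w, b, x):
    """h(x) = sign(w^T x - b), returning +1 or -1."""
    return 1 if dot(w, x) - b >= 0 else -1

def satisfies_margin(w, b, X, y, tol=1e-12):
    """Check y_i (w^T x_i - b) >= 1 for every training example."""
    return all(yi * (dot(w, xi) - b) >= 1.0 - tol for xi, yi in zip(X, y))

def objective(w):
    """1/2 ||w||^2, the quantity the optimal margin classifier minimizes."""
    return 0.5 * dot(w, w)

# Hypothetical linearly separable data
X = [(2.0, 2.0), (3.0, 3.0), (0.0, 0.0), (-1.0, 0.0)]
y = [1, 1, -1, -1]

candidate_a = ((0.5, 0.5), 1.0)
candidate_b = ((1.0, 1.0), 2.0)

feasible_a = satisfies_margin(*candidate_a, X, y)
feasible_b = satisfies_margin(*candidate_b, X, y)
# Both are feasible; candidate_a has the smaller objective, hence the wider margin.
better = min((candidate_a, candidate_b), key=lambda c: objective(c[0]))
```

Among feasible candidates, a smaller $\frac{1}{2}\lVert w \rVert^2$ means a larger geometric margin $1 / \lVert w \rVert$, which is why the optimization problem minimizes it.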

Remark: the decision boundary of the optimal margin classifier is the line defined by the equation $w^T x - b = 0$.

Hinge loss – The hinge loss is used in the setting of SVMs and is defined as follows:

$$L(z, y) = [1 - yz]_+ = \max(0, 1 - yz)$$

Kernel – Given a feature mapping $\phi$, we define the kernel $K$ as follows:

$$K(x, z) = \phi(x)^T \phi(z)$$

In practice, the kernel $K$ defined by $K(x, z) = \exp\left(-\frac{\lVert x - z \rVert^2}{2\sigma^2}\right)$ is called the Gaussian kernel and is commonly used.

Generative Learning

A generative model first tries to learn how the data is generated by estimating $P(x|y)$, which can then be used to estimate $P(y|x)$ with Bayes' rule.

Gaussian Discriminant Analysis

Setting – Gaussian Discriminant Analysis assumes that $y$, $x|y = 0$ and $x|y = 1$ are such that:

$$y \sim \text{Bernoulli}(\phi)$$

$$x|y = 0 \sim \mathcal{N}(\mu_0, \Sigma) \quad \text{and} \quad x|y = 1 \sim \mathcal{N}(\mu_1, \Sigma)$$

Estimation – The following table sums up the estimates obtained when maximizing the likelihood:

| $\widehat{\phi}$ | $\widehat{\mu_j} \quad (j = 0, 1)$ | $\widehat{\Sigma}$ |
|---|---|---|
| $\displaystyle\frac{1}{m} \sum_{i=1}^{m} 1_{\{y^{(i)} = 1\}}$ | $\displaystyle\frac{\sum_{i=1}^{m} 1_{\{y^{(i)} = j\}} x^{(i)}}{\sum_{i=1}^{m} 1_{\{y^{(i)} = j\}}}$ | $\displaystyle\frac{1}{m} \sum_{i=1}^{m} (x^{(i)} - \mu_{y^{(i)}})(x^{(i)} - \mu_{y^{(i)}})^T$ |

Naive Bayes

Assumption – The Naive Bayes model assumes that the features of each data point are all independent:

$$P(x|y) = P(x_1, x_2, ...|y) = P(x_1|y) P(x_2|y) ... = \prod_{i=1}^{n} P(x_i|y)$$


Solutions – Maximizing the Naive Bayes log-likelihood gives the following solutions, with $k \in \{0, 1\}$ and $l \in [\![1, L]\!]$:

$$P(y = k) = \frac{1}{m} \times \#\{j \,|\, y^{(j)} = k\} \quad \text{and} \quad P(x_i = l \,|\, y = k) = \frac{\#\{j \,|\, y^{(j)} = k \text{ and } x_i^{(j)} = l\}}{\#\{j \,|\, y^{(j)} = k\}}$$

Remark: Naive Bayes is widely used for text classification and spam detection.
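The counting formulas above translate almost directly into code. The sketch below estimates the class priors and the per-feature conditionals from a tiny hypothetical data set with binary features, then applies Bayes' rule under the independence assumption. No smoothing is applied, so unseen feature values get probability zero; that is a simplification of this example.

```python
from collections import Counter

def fit_naive_bayes(X, y):
    """Return P(y = k) and P(x_i = l | y = k) estimated by counting."""
    m = len(y)
    class_counts = Counter(y)
    prior = {k: c / m for k, c in class_counts.items()}
    joint = Counter(
        (i, value, label)
        for features, label in zip(X, y)
        for i, value in enumerate(features)
    )
    cond = {key: c / class_counts[key[2]] for key, c in joint.items()}
    return prior, cond

def posterior(prior, cond, x):
    """P(y = k | x) proportional to P(y = k) * prod_i P(x_i | y = k)."""
    scores = {}
    for k, p in prior.items():
        for i, value in enumerate(x):
            p *= cond.get((i, value, k), 0.0)
        scores[k] = p
    total = sum(scores.values())
    if total == 0.0:
        raise ValueError("x has zero probability under every class")
    return {k: s / total for k, s in scores.items()}

# Hypothetical training set: two binary features, balanced classes
X = [(1, 1), (1, 0), (0, 1), (0, 0), (1, 1), (0, 0)]
y = [1, 1, 1, 0, 0, 0]

prior, cond = fit_naive_bayes(X, y)
post = posterior(prior, cond, (1, 1))
```

For the query `(1, 1)`, class 1 scores $\frac{1}{2} \cdot \frac{2}{3} \cdot \frac{2}{3}$ and class 0 scores $\frac{1}{2} \cdot \frac{1}{3} \cdot \frac{1}{3}$, so the normalized posterior of class 1 is 0.8.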

Tree-based and ensemble methods

These methods can be used for both regression and classification problems.

CART – Classification and Regression Trees (CART), commonly known as decision trees, can be represented as binary trees. One advantage of this method is that it is easy to interpret.

Random forest – A tree-based technique that uses a large number of decision trees built from randomly selected sets of features. Unlike a simple decision tree, the model is not easy to interpret, but its high performance has made it a popular algorithm.

Remark: random forests are a type of ensemble method.

Boosting – The idea of boosting methods is to combine several weak learners to form a stronger one. The main ones are summarized in the table below:

| Adaptive boosting | Gradient boosting |
|---|---|
| Errors are emphasized to improve the result at the next boosting step. Known as "Adaboost". | Weak learners are trained on the remaining errors. |

Other non-parametric approaches

k-nearest neighbors – The k-nearest neighbors algorithm, commonly known as k-NN, is a non-parametric approach in which the response of a data point is determined by its $k$ neighboring points in the training set. It can be used for both classification and regression.

Remark: the higher the parameter $k$, the higher the bias; the lower the parameter $k$, the higher the variance.

Learning Theory

Union bound – Let $A_1, ..., A_k$ be $k$ events. We have:

$$P(A_1 \cup ... \cup A_k) \leqslant P(A_1) + ... + P(A_k)$$

Hoeffding inequality – Let $Z_1, ..., Z_m$ be $m$ independent and identically distributed (iid) variables drawn from a Bernoulli distribution of parameter $\phi$. Let $\widehat{\phi}$ be their sample mean and $\gamma > 0$ be fixed. We have:

$$P(|\phi - \widehat{\phi}| > \gamma) \leqslant 2 \exp(-2\gamma^2 m)$$

Remark: this inequality is also known as the Chernoff bound.

Training error – For a given classifier $h$, we define the training error $\widehat{\epsilon}(h)$, also known as the empirical risk or empirical error, as follows:

$$\widehat{\epsilon}(h) = \frac{1}{m} \sum_{i=1}^{m} 1_{\{h(x^{(i)}) \neq y^{(i)}\}}$$

Probably Approximately Correct (PAC) – PAC is a framework under which numerous results in learning theory were proved. It relies on the following assumptions:

• the training and testing sets follow the same distribution

• the training examples are drawn independently
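The training error and the Hoeffding inequality stated above are easy to check numerically. The sketch below computes the empirical error of a hypothetical threshold classifier and compares the Hoeffding bound with a simulated deviation frequency; the seed, the number of trials and all data values are arbitrary choices made for this illustration.

```python
import math
import random

def training_error(h, xs, ys):
    """Fraction of training examples that h misclassifies."""
    return sum(h(x) != y for x, y in zip(xs, ys)) / len(ys)

def hoeffding_bound(gamma, m):
    """Upper bound 2 exp(-2 gamma^2 m) on P(|phi - phi_hat| > gamma)."""
    return 2.0 * math.exp(-2.0 * gamma ** 2 * m)

def deviation_frequency(phi, gamma, m, trials, seed=0):
    """Simulated frequency of |phi_hat - phi| > gamma over repeated samples."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        phi_hat = sum(rng.random() < phi for _ in range(m)) / m
        hits += abs(phi_hat - phi) > gamma
    return hits / trials

# Hypothetical 1-D classifier and labels
h = lambda x: int(x > 0.5)
err = training_error(h, [0.1, 0.4, 0.6, 0.9], [0, 0, 1, 0])

bound = hoeffding_bound(gamma=0.1, m=100)
observed = deviation_frequency(phi=0.5, gamma=0.1, m=100, trials=2000)
```

The bound holds for any $\phi$, so it is usually loose: the simulated frequency for this setting sits well below it.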


Shattering – Given a set $S = \{x^{(1)}, ..., x^{(d)}\}$ and a set of classifiers $\mathcal{H}$, we say that $\mathcal{H}$ shatters $S$ if for any set of labels $\{y^{(1)}, ..., y^{(d)}\}$ we have:

$$\exists h \in \mathcal{H}, \quad \forall i \in [\![1, d]\!], \quad h(x^{(i)}) = y^{(i)}$$
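For a finite list of classifiers, the definition above can be checked by brute force: enumerate every labeling of $S$ and look for a classifier that realizes it. The sketch below uses a small, hypothetical family of one-dimensional threshold classifiers; the family and the point sets are assumptions made for the example.

```python
from itertools import product

def shatters(hypotheses, S):
    """True if every 0/1 labeling of S is produced by some hypothesis."""
    realized = {tuple(h(x) for x in S) for h in hypotheses}
    return all(labels in realized for labels in product((0, 1), repeat=len(S)))

# Hypothetical class: thresholds h_t(x) = 1 if x >= t else 0
thresholds = [lambda x, t=t: int(x >= t) for t in (-1.0, 0.5, 1.5, 3.0)]

one_point = shatters(thresholds, [1.0])        # both labels are reachable
two_points = shatters(thresholds, [1.0, 2.0])  # labeling (1, 0) is never produced
```

A threshold classifier can never label a smaller point 1 and a larger point 0, which is why this family shatters a single point but no pair of points.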

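Upper bound theorem – Let $\mathcal{H}$ be a finite hypothesis class such that $|\mathcal{H}| = k$, and let $\delta$ and the sample size $m$ be fixed. Then, with probability at least $1 - \delta$, we have:

$$\epsilon(\widehat{h}) \leqslant \left(\min_{h \in \mathcal{H}} \epsilon(h)\right) + 2\sqrt{\frac{1}{2m} \log\left(\frac{2k}{\delta}\right)}$$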
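The second term of the bound above depends only on $k$, $m$ and $\delta$, so it can be evaluated directly, and the inequality can be inverted to estimate how many samples keep that term below a target. The function names and the numeric values below are illustrative assumptions.

```python
import math

def generalization_gap(k, m, delta):
    """2 * sqrt((1 / (2m)) * log(2k / delta)), the extra term in the bound."""
    return 2.0 * math.sqrt(math.log(2.0 * k / delta) / (2.0 * m))

def samples_needed(k, delta, gap):
    """Smallest m for which generalization_gap(k, m, delta) <= gap."""
    return math.ceil(2.0 * math.log(2.0 * k / delta) / gap ** 2)

k, delta = 1000, 0.05
m = samples_needed(k, delta, gap=0.05)
gap_at_m = generalization_gap(k, m, delta)
```

Solving $2\sqrt{\log(2k/\delta) / (2m)} \leqslant \gamma$ for $m$ gives $m \geqslant 2\log(2k/\delta)/\gamma^2$, so the required sample size grows only logarithmically with the number of hypotheses $k$.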

VC dimension – The Vapnik-Chervonenkis (VC) dimension of a given infinite hypothesis class $\mathcal{H}$, denoted $\text{VC}(\mathcal{H})$, is the size of the largest set that is shattered by $\mathcal{H}$.

Remark: the VC dimension of $\mathcal{H} = \{\text{set of linear classifiers in 2 dimensions}\}$ is 3.

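Theorem (Vapnik) – Let $\mathcal{H}$ be given, with $\text{VC}(\mathcal{H}) = d$ and $m$ the number of training examples. With probability at least $1 - \delta$, we have:

$$\epsilon(\widehat{h}) \leqslant \left(\min_{h \in \mathcal{H}} \epsilon(h)\right) + O\left(\sqrt{\frac{d}{m} \log\left(\frac{m}{d}\right) + \frac{1}{m} \log\left(\frac{1}{\delta}\right)}\right)$$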
