Big Data & Hadoop

Uploaded by Nowshin Jerin

Big data refers to datasets too large to be processed using traditional techniques. It includes data from sources such as social media, stock exchanges, power grids, and search engines. Hadoop is an open-source framework for storing and processing big data across clusters of commodity servers. It offers benefits such as cost savings, faster processing, and insights that help businesses. Key components of Hadoop include HDFS for storage, MapReduce for processing, and tools such as Hive, Pig, Flume, Sqoop, Zookeeper, Kafka, and HBase.


What is Big Data?

Big data is a collection of datasets so large that they cannot be processed using traditional computing techniques. It is not a single technique or tool; rather, it has become a complete subject that involves various tools, techniques, and frameworks.

What Comes Under Big Data?

Big data involves the data produced by different devices and applications. Given below are some of the fields that come under the umbrella of big data.

Black Box Data − A black box is a component of helicopters, airplanes, jets, etc. It captures the voices of the flight crew, recordings from microphones and earphones, and performance information about the aircraft.

Social Media Data − Social media such as Facebook and Twitter hold information and views posted by millions of people across the globe.

Stock Exchange Data − Stock exchange data holds information about the ‘buy’ and ‘sell’ decisions that customers make on the shares of different companies.

Power Grid Data − Power grid data holds information about the power consumed by a particular node with respect to a base station.

Transport Data − Transport data includes the model, capacity, distance, and availability of a vehicle.

Search Engine Data − Search engines retrieve large amounts of data from many different databases.

Benefits of Big Data

Using the information kept in social networks like Facebook, marketing agencies are learning about the response to their campaigns, promotions, and other advertising media.

Using information from social media, such as the preferences and product perception of their consumers, product companies and retail organizations are planning their production.

Using data on patients' previous medical history, hospitals are providing better and quicker service.

Hadoop:

Hadoop is an open-source, Java-based framework used for storing and processing big data. The data is stored on inexpensive commodity servers that run as clusters. Its distributed file system enables concurrent processing and fault tolerance. Developed by Doug Cutting and Michael J. Cafarella, Hadoop uses the MapReduce programming model to process the data stored on its nodes in parallel, which speeds up retrieval and analysis.

From a business point of view, too, there are direct and indirect benefits. By using open-source technology on inexpensive servers that are mostly in the cloud (and sometimes on-premises), organizations achieve significant cost savings.

Additionally, the ability to collect massive amounts of data, and the insights derived from crunching it, result in better real-world business decisions, such as focusing on the right consumer segment, weeding out or fixing erroneous processes, optimizing floor operations, providing relevant search results, and performing predictive analytics.

How Hadoop Improves on Traditional Databases

Hadoop solves two key challenges with traditional databases:

1. Capacity: Hadoop stores large volumes of data.

Using a distributed file system called HDFS (Hadoop Distributed File System), Hadoop splits the data into chunks and saves them across clusters of commodity servers. As these commodity servers are built with simple hardware configurations, they are economical and easily scalable as the data grows.
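The chunking described above can be sketched as follows. This is a simplified model, not HDFS code: it assumes a fixed block size (the HDFS default is 128 MB in Hadoop 2 and later, shrunk here to a few bytes so the example is readable), a replication factor of 3 (the HDFS default), and a naive round-robin placement policy in place of HDFS's rack-aware one. The node names and helper functions are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Block:
    """One fixed-size chunk of a file plus the nodes holding its replicas."""
    index: int
    data: bytes
    replicas: list[str] = field(default_factory=list)


def split_into_blocks(data: bytes, block_size: int) -> list[Block]:
    """Cut a file's bytes into consecutive chunks; the last one may be shorter."""
    return [
        Block(index=i, data=data[offset:offset + block_size])
        for i, offset in enumerate(range(0, len(data), block_size))
    ]


def place_replicas(blocks: list[Block], nodes: list[str], replication: int) -> None:
    """Assign each block to `replication` distinct nodes, round-robin.

    Real HDFS uses a rack-aware placement policy; round-robin is a simplification.
    """
    if replication > len(nodes):
        raise ValueError("replication factor exceeds the number of nodes")
    for block in blocks:
        start = block.index % len(nodes)
        block.replicas = [nodes[(start + k) % len(nodes)] for k in range(replication)]


if __name__ == "__main__":
    file_bytes = b"big data is split into blocks across commodity servers"
    nodes = ["node-1", "node-2", "node-3", "node-4"]  # hypothetical DataNodes
    blocks = split_into_blocks(file_bytes, block_size=16)
    place_replicas(blocks, nodes, replication=3)
    for b in blocks:
        print(b.index, b.data, b.replicas)
```

Because each block lives on several servers, losing one server does not lose data, and adding servers adds both capacity and more places to put new blocks.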

2. Speed: Hadoop stores and retrieves data faster.

Hadoop uses the MapReduce functional programming model to perform parallel processing across data sets. When a query is submitted, instead of the data being handled sequentially, the work is split into tasks that run concurrently across distributed servers. Finally, the output of all tasks is collated and sent back to the application, which can drastically improve processing speed.
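The split, run-concurrently, collate flow above is the classic MapReduce pattern. The sketch below imitates it in plain Python for a word count: each input split is mapped concurrently (threads stand in for distributed servers), the intermediate pairs are shuffled by key, and a reduce step collates them. It illustrates the model only; real Hadoop jobs are written against the Hadoop MapReduce API and run on a cluster, and the function names here are hypothetical.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def map_phase(split: str) -> list[tuple[str, int]]:
    """Emit a (word, 1) pair for every word in one input split."""
    return [(word.lower(), 1) for word in split.split()]


def shuffle(mapped: list[list[tuple[str, int]]]) -> dict[str, list[int]]:
    """Group the intermediate values from all mappers by key."""
    groups: dict[str, list[int]] = defaultdict(list)
    for pairs in mapped:
        for key, value in pairs:
            groups[key].append(value)
    return groups


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Collapse all values for one key into a single result."""
    return key, sum(values)


def word_count(splits: list[str], workers: int = 4) -> dict[str, int]:
    # Map tasks run concurrently, one per split, like tasks on separate servers.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        mapped = list(pool.map(map_phase, splits))
    # Shuffle, then reduce each key independently (reducers could also run in parallel).
    return dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())


if __name__ == "__main__":
    splits = ["Hadoop stores big data", "Hadoop processes big data in parallel"]
    print(word_count(splits))
```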

The Hadoop Ecosystem: Supplementary Components

The following are a few supplementary components that are extensively used in the Hadoop ecosystem.

Hive: Data Warehousing

Hive is a data warehousing system that helps to query large datasets in HDFS. Before Hive, developers faced the challenge of writing complex MapReduce jobs to query Hadoop data. Hive uses HQL (Hive Query Language), whose syntax resembles SQL. Since most developers come from a SQL background, Hive is easy to get on board with.

Another advantage of Hive is that a JDBC/ODBC driver acts as an interface between the application and HDFS. Originally developed by the Facebook team, Hive is now an open-source technology.
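To see what Hive saves developers from, consider a simple aggregate query. The HQL in the comment is illustrative, and the `employees` table and its columns are hypothetical. The Python below is a rough, hand-written equivalent of the map and reduce logic such a query implies; it is not the plan Hive actually generates, only a sketch of the work that the short query replaces.

```python
# Illustrative HQL (hypothetical table and columns):
#   SELECT dept, COUNT(*) AS headcount, AVG(salary) AS avg_salary
#   FROM employees
#   GROUP BY dept;
from collections import defaultdict

Row = tuple[str, str, float]  # (name, dept, salary)


def mapper(row: Row) -> tuple[str, float]:
    """Emit the GROUP BY key and the value to aggregate."""
    _name, dept, salary = row
    return dept, salary


def reducer(dept: str, salaries: list[float]) -> tuple[str, int, float]:
    """Compute COUNT(*) and AVG(salary) for one department."""
    return dept, len(salaries), sum(salaries) / len(salaries)


def group_by_dept(rows: list[Row]) -> list[tuple[str, int, float]]:
    grouped: dict[str, list[float]] = defaultdict(list)
    for dept, salary in map(mapper, rows):
        grouped[dept].append(salary)
    # Sort keys so output order is deterministic, as a reducer's sorted input would be.
    return [reducer(dept, grouped[dept]) for dept in sorted(grouped)]


if __name__ == "__main__":
    employees = [
        ("asha", "eng", 120.0),
        ("li", "eng", 100.0),
        ("omar", "sales", 80.0),
    ]
    for dept, headcount, avg_salary in group_by_dept(employees):
        print(dept, headcount, avg_salary)
```

With Hive, the analyst writes only the three-line query; the grouping and aggregation plumbing is generated for them.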

Pig: Reduce MapReduce Functions

Pig, initially developed by Yahoo!, is similar to Hive in that it eliminates the need to write MapReduce functions to query HDFS. Like HQL, the language it uses, called “Pig Latin”, is closer to SQL than hand-written MapReduce code. Pig Latin is a high-level data flow language layered on top of MapReduce.

Pig also has a runtime environment that interfaces with HDFS. Scripts in languages such as Java or Python can also be embedded inside Pig.
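Pig Latin scripts describe a data flow as a sequence of named steps, each transforming the previous relation. The script in the comment is a hypothetical example (the `logs` input and its fields are invented), and the Python below mirrors each step with an ordinary function so the flow is easy to follow. Pig itself would compile such a script into MapReduce jobs; this sketch only shows the shape of the pipeline.

```python
# Hypothetical Pig Latin script that this sketch mirrors step by step:
#   logs    = LOAD 'logs' AS (user:chararray, bytes:int);
#   big     = FILTER logs BY bytes > 1000;
#   grouped = GROUP big BY user;
#   totals  = FOREACH grouped GENERATE group, SUM(big.bytes);
from collections import defaultdict
from collections.abc import Iterable, Iterator

Record = tuple[str, int]  # (user, bytes)


def load(lines: Iterable[str]) -> Iterator[Record]:
    """LOAD: parse 'user,bytes' lines into records."""
    for line in lines:
        user, size = line.split(",")
        yield user.strip(), int(size)


def filter_big(records: Iterable[Record], threshold: int = 1000) -> Iterator[Record]:
    """FILTER ... BY bytes > threshold."""
    return (r for r in records if r[1] > threshold)


def group_by_user(records: Iterable[Record]) -> dict[str, list[int]]:
    """GROUP ... BY user."""
    groups: dict[str, list[int]] = defaultdict(list)
    for user, size in records:
        groups[user].append(size)
    return groups


def total_per_user(groups: dict[str, list[int]]) -> dict[str, int]:
    """FOREACH grouped GENERATE group, SUM(bytes)."""
    return {user: sum(sizes) for user, sizes in groups.items()}


if __name__ == "__main__":
    raw = ["alice,1500", "bob,200", "alice,3000", "carol,1200"]
    print(total_per_user(group_by_user(filter_big(load(raw)))))
```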

Flume: Big Data Ingestion

Flume is a big data ingestion tool that acts as a courier service between multiple data sources and HDFS. It collects, aggregates, and sends huge amounts of streaming data (e.g., log files and events) generated by applications such as social media sites, IoT apps, and e-commerce portals into HDFS.

Flume is feature-rich. It:

Has a distributed architecture.

Ensures reliable data transfer.

Is fault-tolerant.

Has the flexibility to collect data in batches or in real time.

Can be scaled horizontally to handle more traffic, as needed.

Data sources communicate with Flume agents. Every agent has a source, a channel, and a sink: the source collects data from the sender, the channel temporarily stores the data, and the sink transfers the data to the destination, which is a Hadoop server.
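The source, channel, and sink pipeline inside a Flume agent can be modelled in a few lines. This is a toy simulation, not Flume's API (real agents are configured through a properties file rather than written as code): a bounded `queue.Queue` plays the channel, and a plain list stands in for the HDFS destination. The class and method names are hypothetical.

```python
import queue


class ToyFlumeAgent:
    """Hypothetical model of one Flume agent: source -> channel -> sink."""

    def __init__(self, channel_capacity: int = 100) -> None:
        # The channel buffers events between the source and the sink.
        self.channel: queue.Queue = queue.Queue(maxsize=channel_capacity)
        self.destination: list[str] = []  # stand-in for files in HDFS

    def source(self, events: list[str]) -> int:
        """Accept events from a sender and put them on the channel.

        Stops accepting once the channel is full and returns how many events
        were taken; a real sender would back off and retry the rest.
        """
        accepted = 0
        for event in events:
            try:
                self.channel.put_nowait(event)
            except queue.Full:
                break
            accepted += 1
        return accepted

    def sink(self, batch_size: int = 10) -> int:
        """Drain up to batch_size events from the channel to the destination."""
        moved = 0
        while moved < batch_size:
            try:
                event = self.channel.get_nowait()
            except queue.Empty:
                break
            self.destination.append(event)
            moved += 1
        return moved


if __name__ == "__main__":
    agent = ToyFlumeAgent(channel_capacity=5)
    print(agent.source([f"log line {i}" for i in range(8)]))
    print(agent.sink(batch_size=3), agent.destination)
```

Because the channel decouples the two ends, a burst of incoming events is buffered while the sink writes to the destination at its own pace.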

Sqoop: Data Ingestion for Relational Databases

Sqoop (“SQL to Hadoop”) is another data ingestion tool like Flume. While Flume works on unstructured or semi-structured data, Sqoop is used to import data from relational databases into Hadoop and to export data back to them. As most enterprise data is stored in relational databases, Sqoop is used to bring that data into Hadoop for analysts to examine.

Database admins and developers can use a simple command-line interface to import and export data. Sqoop converts these commands into MapReduce jobs, which run on the Hadoop cluster under YARN and read from or write to HDFS. Like Flume, Sqoop is fault-tolerant and performs operations concurrently.
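Sqoop's concurrent imports work by dividing a table into ranges of a split column (by default the primary key, or the column named with `--split-by`) and handing each range to a separate map task; four mappers is the default. The sketch below imitates that idea with Python's built-in `sqlite3` module as a stand-in relational database. The `customers` table, its data, and the helper names are hypothetical, and the evenly sized integer ranges are a simplification of Sqoop's own splitting logic.

```python
import math
import sqlite3


def compute_splits(lo: int, hi: int, num_mappers: int) -> list[tuple[int, int]]:
    """Divide the inclusive key range [lo, hi] into at most num_mappers contiguous ranges."""
    size = math.ceil((hi - lo + 1) / num_mappers)
    return [(start, min(start + size - 1, hi)) for start in range(lo, hi + 1, size)]


def import_table(conn: sqlite3.Connection, num_mappers: int = 4) -> list[tuple[int, str]]:
    # Like Sqoop's boundary query: find MIN and MAX of the split column.
    lo, hi = conn.execute("SELECT MIN(id), MAX(id) FROM customers").fetchone()
    if lo is None:  # empty table: nothing to import
        return []
    rows: list[tuple[int, str]] = []
    for start, end in compute_splits(lo, hi, num_mappers):
        # In Sqoop each range would be read by a separate map task, in parallel.
        rows.extend(conn.execute(
            "SELECT id, name FROM customers WHERE id BETWEEN ? AND ? ORDER BY id",
            (start, end),
        ).fetchall())
    return rows


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany(
        "INSERT INTO customers VALUES (?, ?)",
        [(i, f"customer-{i}") for i in range(1, 11)],
    )
    print(compute_splits(1, 10, 4))
    print(len(import_table(conn)))
```

Splitting on an evenly distributed key keeps the mappers' workloads balanced; a skewed split column would leave some mappers with far more rows than others.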

Zookeeper: Coordination of Distributed Applications

Zookeeper is a service that coordinates distributed applications. In the Hadoop framework, it acts as an admin tool with a centralized registry that holds information about the cluster of distributed servers it manages. Some of its key functions are:

Maintaining configuration information (shared state of configuration data)

Naming service (assigning a name to each server)

Synchronization service (handling deadlocks, race conditions, and data inconsistency)

Leader election (electing a leader among the servers through consensus)

The cluster of servers that the Zookeeper service runs on is called an “ensemble.” The ensemble elects a leader from the group, and the rest act as followers. All write operations from clients must be routed through the leader, whereas read operations can go directly to any server.

Zookeeper provides high reliability and resilience through fail-safe synchronization, atomicity, and serialization of messages.
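The leader and follower arrangement of an ensemble relies on majority quorums: an ensemble of 2f + 1 servers keeps working as long as a majority is up, so it tolerates f failures. The toy model below illustrates that rule. It is not the ZooKeeper client API or its ZAB protocol; the election rule (prefer the live server with the most recent transaction id, breaking ties by server id) follows ZooKeeper's general approach but is heavily simplified, and all class and method names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Server:
    sid: int               # server id
    alive: bool = True
    zxid: int = 0          # id of the last transaction this server applied
    data: dict[str, str] = field(default_factory=dict)


class ToyEnsemble:
    """Hypothetical model of a ZooKeeper-style ensemble with majority quorums."""

    def __init__(self, size: int) -> None:
        self.servers = [Server(sid=i) for i in range(1, size + 1)]

    def _live(self) -> list[Server]:
        return [s for s in self.servers if s.alive]

    def has_quorum(self) -> bool:
        """True when a strict majority of the ensemble is up."""
        return len(self._live()) > len(self.servers) // 2

    def elect_leader(self) -> Optional[Server]:
        """Pick the live server with the newest zxid, ties broken by highest sid."""
        if not self.has_quorum():
            return None
        return max(self._live(), key=lambda s: (s.zxid, s.sid))

    def write(self, key: str, value: str) -> bool:
        """Route a write through the leader; refuse it when there is no quorum.

        The leader is re-elected on every call purely for simplicity.
        """
        leader = self.elect_leader()
        if leader is None:
            return False
        zxid = leader.zxid + 1
        for s in self._live():  # leader and live followers apply the proposal
            s.data[key] = value
            s.zxid = zxid
        return True

    def read(self, sid: int, key: str) -> Optional[str]:
        """Reads can be served by any live server from its local copy."""
        server = self.servers[sid - 1]
        return server.data.get(key) if server.alive else None


if __name__ == "__main__":
    ens = ToyEnsemble(5)              # tolerates 2 failures
    print(ens.write("/config/db", "host-a"))
    ens.servers[4].alive = False      # sid 5 goes down
    ens.servers[3].alive = False      # sid 4 goes down
    print(ens.elect_leader().sid, ens.read(1, "/config/db"))
    ens.servers[2].alive = False      # only 2 of 5 live: no quorum
    print(ens.write("/config/db", "host-b"))
```

Preferring the server with the newest transaction id means a server that missed recent writes while it was down cannot become leader and roll the ensemble back.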

Kafka: Faster Data Transfers

Kafka is a distributed publish-subscribe messaging system that is often used with Hadoop for faster data transfers. A Kafka cluster consists of a group of servers that act as an intermediary between producers and consumers. In the context of big data, a producer could be a sensor gathering temperature data to relay back to the server, and the consumers could be the Hadoop servers. Producers publish messages to a topic, and consumers pull messages by subscribing to that topic.

A single topic can be further split into partitions. All messages with the same key arrive at the same partition. A consumer can read from one or more partitions.

By grouping messages under keys and assigning each consumer to specific partitions, many consumers can read the same topic at the same time. The topic is thus parallelized, which increases the throughput of the system. Kafka is widely adopted for its speed, scalability, and robust replication.
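Key-based partitioning is what makes this parallelism work: the partition is chosen from a hash of the message key, so every message with the same key lands in the same partition (and keeps its order there), while different partitions can be read by different consumers. The sketch below illustrates the idea in plain Python. Kafka's default partitioner hashes keys with murmur2; `zlib.crc32` is swapped in here only because it is deterministic and in the standard library, and the round-robin assignment of partitions to consumers is a simplification of Kafka's assignors. The function names, keys, and consumer names are hypothetical.

```python
import zlib


def partition_for(key: str, num_partitions: int) -> int:
    """Map a message key to a partition; the same key always yields the same partition."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def publish(messages: list[tuple[str, str]], num_partitions: int) -> list[list[str]]:
    """Append each (key, value) message to the log of its key's partition."""
    logs: list[list[str]] = [[] for _ in range(num_partitions)]
    for key, value in messages:
        logs[partition_for(key, num_partitions)].append(value)
    return logs


def assign_partitions(num_partitions: int, consumers: list[str]) -> dict[str, list[int]]:
    """Spread partitions over the consumers in a group, round-robin.

    Each partition goes to exactly one consumer, so consumers read in parallel
    without receiving the same message twice.
    """
    assignment: dict[str, list[int]] = {c: [] for c in consumers}
    for p in range(num_partitions):
        assignment[consumers[p % len(consumers)]].append(p)
    return assignment


if __name__ == "__main__":
    readings = [("sensor-a", "21.5C"), ("sensor-b", "19.0C"), ("sensor-a", "21.7C")]
    logs = publish(readings, num_partitions=3)
    for consumer, parts in assign_partitions(3, ["hadoop-1", "hadoop-2"]).items():
        print(consumer, [logs[p] for p in parts])
```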

HBase: Non-Relational Database

HBase is a column-oriented, non-relational database that sits on top of HDFS. One of the challenges with HDFS is that it supports only batch processing. So even simple interactive queries still have to be processed in batches, leading to high latency.

HBase solves this challenge by allowing single rows to be queried across huge tables with low latency. It achieves this by storing rows sorted by row key and indexing them, so a lookup can go directly to the relevant data instead of scanning the whole table. HBase is modelled on Google's Bigtable, which stores its data in the Google File System (GFS).

HBase is scalable, supports failover when a node goes down, and handles unstructured as well as semi-structured data. Hence, it is well suited to querying big data stores for analytical purposes.
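HBase keeps the rows of a table sorted by row key, which is what lets it serve both fast single-row reads and key-range scans without touching the whole table. The sketch below uses Python's `bisect` module on a sorted list of row keys to show a point lookup and a range scan. It illustrates a sorted key-value layout, not HBase's client API (which offers `Get` and `Scan` operations in Java); the class, the table contents, and the `info:name` column keys (written to imitate HBase's `family:qualifier` naming) are hypothetical.

```python
import bisect
from typing import Optional


class SortedTable:
    """Hypothetical row store kept sorted by row key, in the spirit of HBase/Bigtable."""

    def __init__(self) -> None:
        self._keys: list[str] = []               # row keys, always sorted
        self._values: list[dict[str, str]] = []  # columns for the key at the same index

    def _find(self, row_key: str) -> int:
        """Binary search for the position where row_key is or would be."""
        return bisect.bisect_left(self._keys, row_key)

    def put(self, row_key: str, columns: dict[str, str]) -> None:
        """Insert a new row or merge columns into an existing one, keeping key order."""
        i = self._find(row_key)
        if i < len(self._keys) and self._keys[i] == row_key:
            self._values[i].update(columns)
        else:
            self._keys.insert(i, row_key)
            self._values.insert(i, dict(columns))

    def get(self, row_key: str) -> Optional[dict[str, str]]:
        """Point lookup in O(log n) comparisons instead of a full scan."""
        i = self._find(row_key)
        if i < len(self._keys) and self._keys[i] == row_key:
            return self._values[i]
        return None

    def scan(self, start: str, stop: str) -> list[tuple[str, dict[str, str]]]:
        """Return rows with start <= key < stop, in key order."""
        lo, hi = self._find(start), self._find(stop)
        return list(zip(self._keys[lo:hi], self._values[lo:hi]))


if __name__ == "__main__":
    t = SortedTable()
    t.put("user#0042", {"info:name": "Ravi"})
    t.put("user#0007", {"info:name": "Mei"})
    t.put("user#0100", {"info:name": "Ana"})
    print(t.get("user#0042"))
    print(t.scan("user#0000", "user#0050"))
```

Because neighbouring keys are stored together, choosing row keys that group related rows (for example, a shared prefix) turns common queries into short range scans.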

You might also like

- CDISC SDTM ConversionDocument11 pagesCDISC SDTM ConversionNeha TyagiNo ratings yet

- Big Data & HadoopDocument7 pagesBig Data & HadoopNowshin JerinNo ratings yet

- Big Data OverviewDocument39 pagesBig Data Overviewnoor khanNo ratings yet

- Haddob Lab ReportDocument12 pagesHaddob Lab ReportMagneto Eric Apollyon ThornNo ratings yet

- Apache HadoopDocument11 pagesApache HadoopImaad UkayeNo ratings yet

- Big Data Analytics Unit-3Document15 pagesBig Data Analytics Unit-34241 DAYANA SRI VARSHANo ratings yet

- CC-KML051-Unit VDocument17 pagesCC-KML051-Unit VFdjsNo ratings yet

- Big Data and Hadoop - SuzanneDocument5 pagesBig Data and Hadoop - SuzanneTripti SagarNo ratings yet

- BDA Unit 3Document6 pagesBDA Unit 3SpNo ratings yet

- Chapter - 2 HadoopDocument32 pagesChapter - 2 HadoopRahul PawarNo ratings yet

- Big Data Introduction & EcosystemsDocument4 pagesBig Data Introduction & EcosystemsHarish ChNo ratings yet

- BDA NotesDocument25 pagesBDA Notesmrudula.sbNo ratings yet

- HADOOP and PYTHON For BEGINNERS - 2 BOOKS in 1 - Learn Coding Fast! HADOOP and PYTHON Crash Course, A QuickStart Guide, Tutorial Book by Program Examples, in Easy Steps!Document89 pagesHADOOP and PYTHON For BEGINNERS - 2 BOOKS in 1 - Learn Coding Fast! HADOOP and PYTHON Crash Course, A QuickStart Guide, Tutorial Book by Program Examples, in Easy Steps!Antony George SahayarajNo ratings yet

- MapreduceDocument15 pagesMapreducemanasaNo ratings yet

- What Is The Hadoop EcosystemDocument5 pagesWhat Is The Hadoop EcosystemZahra MeaNo ratings yet

- Unit 5 - Introduction To HadoopDocument50 pagesUnit 5 - Introduction To HadoopShree ShakNo ratings yet

- SDL Module-No SQL Module Assignment No. 2: Q1 What Is Hadoop and Need For It? Discuss It's ArchitectureDocument6 pagesSDL Module-No SQL Module Assignment No. 2: Q1 What Is Hadoop and Need For It? Discuss It's ArchitectureasdfasdfNo ratings yet

- Hadoop Features 2Document3 pagesHadoop Features 2sharan kommiNo ratings yet

- HADOOPDocument55 pagesHADOOPVivek GargNo ratings yet

- Big Data - Unit 2 Hadoop FrameworkDocument19 pagesBig Data - Unit 2 Hadoop FrameworkAditya DeshpandeNo ratings yet

- BigData Unit 2Document15 pagesBigData Unit 2Sreedhar ArikatlaNo ratings yet

- Hadoop Ecosystem PDFDocument6 pagesHadoop Ecosystem PDFKittuNo ratings yet

- HadoopDocument25 pagesHadoopRAJNISH KUMAR ROYNo ratings yet

- Unit 5 - Introduction To HadoopDocument50 pagesUnit 5 - Introduction To HadoopShree ShakNo ratings yet

- Testing Big Data: Camelia RadDocument31 pagesTesting Big Data: Camelia RadCamelia Valentina StanciuNo ratings yet

- BDA Lab Assignment 3 PDFDocument17 pagesBDA Lab Assignment 3 PDFparth shahNo ratings yet

- SubtitleDocument2 pagesSubtitleTai NguyenNo ratings yet

- Alternatives To HIVE SQL in Hadoop File StructureDocument5 pagesAlternatives To HIVE SQL in Hadoop File StructureInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- 02 Unit-II Hadoop Architecture and HDFSDocument18 pages02 Unit-II Hadoop Architecture and HDFSKumarAdabalaNo ratings yet

- Big Data Ana Unit - II Part - II (Hadoop Architecture)Document47 pagesBig Data Ana Unit - II Part - II (Hadoop Architecture)Mokshada YadavNo ratings yet

- Unit 2Document56 pagesUnit 2Ramstage TestingNo ratings yet

- Getting Started With HDP SandboxDocument107 pagesGetting Started With HDP Sandboxrisdianto sigmaNo ratings yet

- Research Paper On Apache HadoopDocument6 pagesResearch Paper On Apache Hadoopsoezsevkg100% (1)

- Cloud - UNIT VDocument18 pagesCloud - UNIT VShikha SharmaNo ratings yet

- Bda Lab ManualDocument40 pagesBda Lab Manualvishalatdwork5730% (1)

- h13999 Hadoop Ecs Data Services WPDocument9 pagesh13999 Hadoop Ecs Data Services WPVijay ReddyNo ratings yet

- Hadoop EcosystemDocument16 pagesHadoop Ecosystempoojan thakkarNo ratings yet

- 2 HadoopDocument20 pages2 HadoopYASH PRAJAPATINo ratings yet

- Lect - 11 - BIG DATADocument42 pagesLect - 11 - BIG DATARasika MalodeNo ratings yet

- Bda Summer 2022 SolutionDocument30 pagesBda Summer 2022 SolutionVivekNo ratings yet

- Hadoop EcosystemDocument55 pagesHadoop EcosystemnehalNo ratings yet

- HadoopDocument7 pagesHadoopAnonymous mFO6slhI0No ratings yet

- .Analysis and Processing of Massive Data Based On Hadoop Platform A Perusal of Big Data Classification and Hadoop TechnologyDocument3 pages.Analysis and Processing of Massive Data Based On Hadoop Platform A Perusal of Big Data Classification and Hadoop TechnologyPrecious PearlNo ratings yet

- Big Data Technology StackDocument12 pagesBig Data Technology StackKhalid ImranNo ratings yet

- HadoopDocument6 pagesHadoopVikas SinhaNo ratings yet

- Hadoop EcosystemDocument56 pagesHadoop EcosystemRUGAL NEEMA MBA 2021-23 (Delhi)No ratings yet

- Techniques of Handling Big Data All SessionsDocument56 pagesTechniques of Handling Big Data All SessionsVaboy100% (1)

- Hadoop Overview Training MaterialDocument44 pagesHadoop Overview Training MaterialsrinidkNo ratings yet

- Bigdata and Hadoop NotesDocument5 pagesBigdata and Hadoop NotesNandini MalviyaNo ratings yet

- Bda Unit 2Document21 pagesBda Unit 2245120737162No ratings yet

- Technical SeminarDocument32 pagesTechnical SeminarSda SdasdNo ratings yet

- Big Data Hadoop Training 8214944.ppsxDocument52 pagesBig Data Hadoop Training 8214944.ppsxhoukoumatNo ratings yet

- Bda QBDocument18 pagesBda QBtanupandav333No ratings yet

- Chapter 2 Hadoop Eco SystemDocument34 pagesChapter 2 Hadoop Eco Systemlamisaldhamri237No ratings yet

- Unit 3Document15 pagesUnit 3xcgfxgvxNo ratings yet

- Parallel ProjectDocument32 pagesParallel Projecthafsabashir820No ratings yet

- Hadoop Ecosystem PDFDocument55 pagesHadoop Ecosystem PDFRishabh GuptaNo ratings yet

- Hadoop Ecosystem PDFDocument55 pagesHadoop Ecosystem PDFRishabh GuptaNo ratings yet

- Research Paper On HadoopDocument5 pagesResearch Paper On Hadoopwlyxiqrhf100% (1)

- Zavrsni Rad - Konacna VerzijaDocument5 pagesZavrsni Rad - Konacna VerzijaNaida GolaćNo ratings yet

- Oracle Engineered Systems Price List: Prices in USA (Dollar)Document13 pagesOracle Engineered Systems Price List: Prices in USA (Dollar)René VegaNo ratings yet

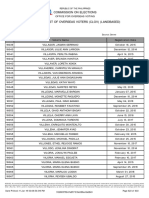

- Commission On Elections Certified List of Overseas Voters (Clov) (Landbased)Document1 pageCommission On Elections Certified List of Overseas Voters (Clov) (Landbased)chadvillelaNo ratings yet

- Fadli, 2011Document45 pagesFadli, 2011Putri ZainidarNo ratings yet

- User Manual On Registration of Apartment Owner Association For State-Wise Rollout of E-District MMP in West BengalDocument29 pagesUser Manual On Registration of Apartment Owner Association For State-Wise Rollout of E-District MMP in West BengalBasudev GanguliNo ratings yet

- CSC Project OutlineDocument19 pagesCSC Project OutlinesyazaNo ratings yet

- (Database Management System (DBMS) PDFDocument136 pages(Database Management System (DBMS) PDFJimmyconnors ChettipallyNo ratings yet

- Worksheet Binary Search TreeDocument4 pagesWorksheet Binary Search TreeSonal CoolNo ratings yet

- Oracle Ucertify 1z0-060 Exam Question V2019-Aug-20 by Haley 144q Vce PDFDocument19 pagesOracle Ucertify 1z0-060 Exam Question V2019-Aug-20 by Haley 144q Vce PDFBelu IonNo ratings yet

- Manual Overview SQLDocument24 pagesManual Overview SQLnumpaquesNo ratings yet

- Big Data Analytics With Applications in Insider Threat DetectionDocument579 pagesBig Data Analytics With Applications in Insider Threat Detectionstas.berdar97No ratings yet

- Unit-Vi Hive Hadoop & Big DataDocument24 pagesUnit-Vi Hive Hadoop & Big DataAbhay Dabhade100% (1)

- F.3 Computer Literacy Database: Select From Where AND ANDDocument18 pagesF.3 Computer Literacy Database: Select From Where AND ANDNigerian NegusNo ratings yet

- DBMS - Lab CAT2 Code SWE1001 SLOT L47+48 DATE 16/10/19Document2 pagesDBMS - Lab CAT2 Code SWE1001 SLOT L47+48 DATE 16/10/19msroshi madhuNo ratings yet

- SEO Test QuestionsDocument2 pagesSEO Test QuestionsRose ]SwindellNo ratings yet

- Dbms Lab ManualDocument44 pagesDbms Lab ManualBilal ShaikhNo ratings yet

- ΨΥΧΙΚΗ ΥΓΕΙΑ ΚΑΙ ΔΙΑΠΡΟΣΩΠΙΚΕΣ ΣΧΕΣΕΙΣ 6-8 ΔΑΣΚΑΛΟΥDocument42 pagesΨΥΧΙΚΗ ΥΓΕΙΑ ΚΑΙ ΔΙΑΠΡΟΣΩΠΙΚΕΣ ΣΧΕΣΕΙΣ 6-8 ΔΑΣΚΑΛΟΥpanamitsisNo ratings yet

- Safely Cleaning Up Log Files in VRealize Operations 6.x (2145578)Document2 pagesSafely Cleaning Up Log Files in VRealize Operations 6.x (2145578)பாரதி ராஜாNo ratings yet

- SMA Session 1Document24 pagesSMA Session 1Siddhartha PatraNo ratings yet

- Parallel DatabasesDocument11 pagesParallel DatabasesMadara UchihaNo ratings yet

- Oracle Lifetime Support:: Coverage For Oracle Technology ProductsDocument17 pagesOracle Lifetime Support:: Coverage For Oracle Technology ProductsM Amiruddin HasanNo ratings yet

- Assignment of RDBMS: Submitted To Submitted by Mrs. Jaspreet Kaur Rosy Walia Batch-5 Reg No.-7450070126 Group-1Document26 pagesAssignment of RDBMS: Submitted To Submitted by Mrs. Jaspreet Kaur Rosy Walia Batch-5 Reg No.-7450070126 Group-1Simar PreetNo ratings yet

- Database Management System Sixth Semester (C.B.S.)Document4 pagesDatabase Management System Sixth Semester (C.B.S.)suhasvkaleNo ratings yet

- Case Study - SQL Server Business IntelligenceDocument9 pagesCase Study - SQL Server Business IntelligenceSushil Mishra100% (1)

- Week 7 SolutionDocument10 pagesWeek 7 SolutionbalainsaiNo ratings yet

- Storage Devices: Mohit Kumar Pandey Mohit NeheteDocument31 pagesStorage Devices: Mohit Kumar Pandey Mohit NeheteMohit pandeyNo ratings yet

- Data Mining Lab ReportDocument6 pagesData Mining Lab ReportRedowan Mahmud RatulNo ratings yet

- Discoverer Calculated Functions - How To GuideDocument11 pagesDiscoverer Calculated Functions - How To GuidenthackNo ratings yet

- Final 1Document51 pagesFinal 1jansky37No ratings yet