Christopher.Kaspar@artech.com

Vedanth Kunchala Data Integration Engineer

Summary

Certified professional data engineer with more than 7 years of overall IT experience across clients in different domains and a broad range of technologies.

Experienced in building highly scalable, large-scale applications with Cloud, Big Data, DevOps and Spring Boot technologies. Also an expert in working in Agile and Waterfall environments.

Experience working on multi-cloud, migration and scalable application projects.

Big Data:

Experience working with various Hadoop distributions such as Cloudera, Hortonworks and MapR.

Expert in ingesting batch data for incremental loads from various RDBMS sources using Apache Sqoop.

Developed scalable applications for real-time ingestions into various databases using Apache Kafka.

Developed Pig Latin scripts and MapReduce jobs for large-scale data transformations and loads.

Experience in using optimized data formats like ORC, Parquet and Avro.

Experience in building optimized ETL data pipelines using Apache Hive and Spark.

Implemented various optimization techniques in Hive scripts for data crunching and transformations.

Experience in building ETL scripts in Impala for faster access from the reporting layer.

Built Spark data pipelines with various optimization techniques using Python and Scala.

Experience in loading transactional and delta loads into NoSQL databases like HBase.

Developed various automation flows using Apache Oozie, Azkaban, and Airflow.

Experience in working with NoSQL Databases like HBase, Cassandra and MongoDB.

Experience with integration tools such as Talend and NiFi for ingesting batch and streaming data.

Cloud:

Experience working with various cloud platforms such as AWS, Azure and GCP.

Developed various ETL applications using Databricks Spark and notebooks.

Implemented streaming applications to consume data from Event Hub and Pub/Sub.

Developed various scalable big data applications in Azure HDInsight for ETL services.

Developed scalable applications using AWS services such as Redshift and DynamoDB.

Built pipelines in Snowflake for extensive data aggregations.

Working knowledge of GCP tools such as BigQuery, Pub/Sub, Cloud SQL and Cloud Functions.

Experience in visualizing reporting data using tools such as Power BI and Google Analytics.

DevOps:

Experience in building continuous integration and deployments using Jenkins, Drone, Travis CI.

Expert in building containerized apps using tools such as Docker, Kubernetes and Terraform.

Developed reusable application libraries using Docker containers.

Experience in building metrics dashboards and alerts using Grafana and Kibana.

Expert in Java and Scala build tools such as Maven (POM-based builds) and SBT for application development.

Experience in working with tools such as GitHub, GitLab and SVN for code repositories.

Expert in writing YAML scripts for automation.

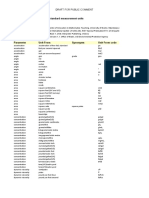

Technical Skills:

Big Data: Hadoop, Sqoop, Flume, Hive, Spark, Pig, Kafka, Talend, HBase, Impala

ETL Tools: Informatica, Talend, Microsoft SSIS, Confidential DataStage

Artech Information Systems

Database: Oracle, MongoDB, SQL Server 2016, Teradata, Netezza, MS Access

Reporting: Microsoft Power BI, Tableau, QlikView, SSRS, Business Objects (Crystal)

Business Intelligence: MDM, Change Data Capture (CDC), Metadata, Data Cleansing, OLAP, OLTP, SCD, SOA, REST, Web Services

Tools: Ambari, SQL Developer, TOAD, Erwin, Visio, TortoiseSVN

Operating Systems: Windows Server, UNIX (Red Hat, Linux, Solaris, AIX)

Web Technologies: J2EE, JMS, Web Services

Languages: UNIX shell scripting, Scala, SQL, PL/SQL, T-SQL

Scripting: HTML, JavaScript, CSS, XML, Shell Script, Perl, Ajax

Cloud: Azure, AWS, GCP

Version control: Git, SVN, CVS, GitLab

Tools: FileZilla, PuTTY, PL/SQL Developer, JUnit

IDE: Eclipse, Microsoft Visual Studio 2008/2012, Flex Builder

Experience:

Client: FedEx Services, Memphis, TN    Jan 2020 – Present

Sr. Data Engineer

Responsibilities

Developed Sqoop jobs to import data from RDBMS sources into HDFS and export it back.

Implemented Hive partitioning and bucketing on State to support bucket-based Hive joins in downstream processing.

Developed custom UDFs in Java, used where needed to reduce code in Hive queries.

Worked on an ETL framework in Spark with Python and Scala for data transformations.

Implemented various optimization techniques to improve Spark application performance.

Worked on Spark Streaming for real-time computations on JSON messages consumed from Kafka.

Developed APIs for quick real-time lookups on top of HBase tables holding transactional data.

Built tables with evolving schemas using Avro and columnar tables using Parquet.

Built Oozie actions, workflows and coordinators for job automation.

Developed shell (Bash) and Python scripts for various operational tasks.

Pushed application logs and data-stream logs to a Grafana server for monitoring and alerting.

Developed Jenkins and Drone pipelines for continuous integration and deployment.

Built NiFi pipelines and integrations for data ingestion and export.

Built custom endpoints and libraries in NiFi for ingesting data from legacy systems.

Implemented integrations with cloud environments such as AWS, Azure and GCP for file exchange with external vendors.

Implemented secure transfer routes for external clients, using microservices to integrate external storage locations such as AWS S3 and Google Cloud Storage (GCS) buckets.

Built SFTP integrations using VMware solutions for onboarding external vendors.

Developed an automated file transfer mechanism in Python to move files from MFT and SFTP to HDFS.

Environment: Apache Hadoop 2.0, Cloudera, HDFS, MapReduce, Hive, Impala, HBase, Sqoop, Kafka, Spark,

Linux, MySQL, NiFi, Oozie, SFTP

Client: USAA, Plano, TX Oct 2018 - Dec 2019

Sr. Data Engineer

Responsibilities

Developed Hive ETL logic for cleansing and transforming data coming from RDBMS sources.

Implemented complex data types in Hive and used multiple data formats such as ORC and Parquet.

Worked on different parts of data lake implementation and maintenance for ETL processing.

Developed Spark Streaming applications using Scala and Python for processing data from Kafka.

Implemented various optimization techniques in Spark Streaming applications written in Python.

Imported batch data with Sqoop from MySQL into HDFS at regular intervals.

Extracted data from various APIs and performed data cleansing and processing using Java and Scala.

Converted Hive queries into Spark SQL to run them in the Spark environment for optimized performance.

Developed data migration pipelines from the on-premises HDFS cluster to Azure HDInsight.

Developed complex queries and ETL processes in Jupyter notebooks using Databricks Spark.

Developed microservice modules to collect application statistics for visualization.

Used Docker and Kubernetes to containerize and deploy applications.

Implemented NiFi pipelines to export data from HDFS to cloud locations such as AWS and Azure.

Consumed data from Azure Event Hub for real-time ingestion into various applications.

Designed solutions using Azure tools such as Azure Data Factory, Azure Data Lake, Azure SQL, Azure SQL Data Warehouse and Azure Functions.

Implemented data lake migration from on-premises clusters to Azure for highly scalable solutions.

Implemented various Airflow automations for building integrations between clusters.

Environment: Hive, Sqoop, Linux, Cloudera CDH 5, Scala, Kafka, HBase, Avro, Spark, ZooKeeper, MySQL, Azure, Databricks, Python, Airflow.

Client: Discover, Chicago, IL    Jan 2016 – Sep 2018

Sr. Data Engineer

Responsibilities

Built and developed ETL pipelines using Spark-based applications.

Worked on migrating RDBMS data into data lake applications.

Built optimized Hive and Spark jobs for data cleansing and transformations.

Developed optimized Spark applications in Scala so that jobs complete on time.

Worked on various optimization techniques in Hive for data transformations and loading.

Expert in handling schema evolution with formats such as Avro.

Built APIs on top of HBase data so external teams could perform quick lookups.

Built Impala scripts for quick data retrieval, exposed through Tableau.

Developed various Oozie actions for automation.

Developed a monitoring platform for our jobs in Kibana and Grafana.

Developed real-time log aggregations in Kibana for analyzing data.

Developed NiFi pipelines for extracting data from external sources.

Developed Jenkins pipelines for data pipeline deployments.

Worked on building different modules in scalable Spring Boot applications.

Developed Docker containers for automating runtime environments for various applications.

Expert in building ingestion pipelines for reading real-time data from Kafka.

Worked on a PoC to set up Talend environments and custom libraries for different pipelines.

Developed various Python and shell scripts for operational tasks.

Worked in an Agile environment with various teams and projects in a fast-paced setting.

Environment: Hadoop, Sqoop, Pig, HBase, Hive, Flume, Java 6, Eclipse, Apache Tomcat 7.0, Oracle, Java, J2EE, Talend, NiFi, Scala, Python.

Client: Jarus Technologies Pvt Ltd, Hyderabad, TG    June 2012 – July 2014

Software Engineer

Responsibilities

Developed MapReduce jobs in Java for data cleaning and preprocessing.

Worked on a Hadoop cluster and big data analytics tools including Pig, HBase and Sqoop.

Responsible for building scalable distributed data solutions using big data tools.

Implemented a nine-node CDH3 Hadoop cluster on Red Hat Linux and worked on its automation.

Created Hive and HBase tables to store data in various formats coming from different portfolios.

Loaded and transformed large sets of structured and unstructured data using Hive and Pig.

Automated various Hadoop jobs using Oozie and crontab for daily runs.

Set up the QA environment and updated configurations for running Pig and Sqoop scripts.

Developed Java applications for migrating data from NFS mounts to HDFS locations.

Developed SSIS automations for various SQL operations on SQL Server.

Worked as an L3 support engineer for various large-scale applications.

Set up automated testing for various data applications.

Worked on Teradata and Oracle data model development and design.

Worked in a Waterfall model for application design, development and deployment.

Environment: Hadoop, Sqoop, Pig, HBase, Hive, Flume, Java 6, Eclipse, Apache Tomcat 7.0, Oracle, Java, J2EE.

Education

Master's at Eastern Illinois University, 2015

Bachelor of Technology at JNTUH, 2012
