7043 Rows × 21 Columns: Import As Import As Import As Import As Import As

7043 Rows × 21 Columns: Import As Import As Import As Import As Import As

Copyright:

Available Formats

You might also like

- CQE Study Questions, Answers and SolutionsDocument108 pagesCQE Study Questions, Answers and Solutionsrick.pepper394896% (23)

- Bill Miller Investment Strategy and Theory of Efficient Market.Document12 pagesBill Miller Investment Strategy and Theory of Efficient Market.Xun YangNo ratings yet

- MinitabDocument26 pagesMinitabgpuonline75% (8)

- Untitled 14Document1 pageUntitled 14Sujeet RajputNo ratings yet

- Retail Info Nov'20Document358 pagesRetail Info Nov'20Shahriar SabbirNo ratings yet

- Statistics Xi Annual Examination 2023 All GroupsDocument6 pagesStatistics Xi Annual Examination 2023 All GroupsSaadat KhokharNo ratings yet

- Bank LoanDocument441 pagesBank LoanDhansiri HouseNo ratings yet

- Cross CheckDocument2,913 pagesCross CheckSomdeb KarmakarNo ratings yet

- HR AnalyticsDocument5 pagesHR AnalyticsJohnNo ratings yet

- Sic Temporal Portal 80 (Gas Natural) (WC1061940) (20200809 - 2000)Document1 pageSic Temporal Portal 80 (Gas Natural) (WC1061940) (20200809 - 2000)kevinmmp3No ratings yet

- Book 2Document28 pagesBook 2BALRAJ RANDHAWANo ratings yet

- Loan Sanction TestDocument22 pagesLoan Sanction TestAnand Anubhav DataAnalyst Batch48No ratings yet

- Mã Nhân Viên Họ Tên Mã Thẻ Mã Phòng Ban Tên Phòng Bangiới Tính Phiên Bản Vân Tay 10.0 Phiên Bản Vận Tay 9.0 Số Vein Chất Lượng Khuôn Mặt Plamprint QtyDocument6 pagesMã Nhân Viên Họ Tên Mã Thẻ Mã Phòng Ban Tên Phòng Bangiới Tính Phiên Bản Vân Tay 10.0 Phiên Bản Vận Tay 9.0 Số Vein Chất Lượng Khuôn Mặt Plamprint QtyPhương Nguyễn Dương ThếNo ratings yet

- Report Team Bu Widi April 2022Document43 pagesReport Team Bu Widi April 2022Ridho RinumpokoNo ratings yet

- Cleaning DataDocument18 pagesCleaning DataVicky VickyNo ratings yet

- Presentasi Puskesmas PokaDocument12 pagesPresentasi Puskesmas PokaBotchanNo ratings yet

- Item Analysis TemplateDocument12 pagesItem Analysis TemplateRey Salomon VistalNo ratings yet

- 1 Host CommunitiesDocument1 page1 Host Communitiesteamz omanyiNo ratings yet

- Apznzaauzxxdj2l Zfencthb3sxrgtwx86kdf0gihdqyts5nz5gjtie 8sjzp0f3zpmbkk3 Oxbt2dqfznq w0gsbzdptuw4qqk8zfzjo9nd8nz9xnowdj8oobvnlfon4vmsr6isvfuif1fmqpibg0lmstq o2y4zatql8ju4ravmimjr6gqsttcii9rxvprdzcr5ne1weycey0gy2wDocument5 pagesApznzaauzxxdj2l Zfencthb3sxrgtwx86kdf0gihdqyts5nz5gjtie 8sjzp0f3zpmbkk3 Oxbt2dqfznq w0gsbzdptuw4qqk8zfzjo9nd8nz9xnowdj8oobvnlfon4vmsr6isvfuif1fmqpibg0lmstq o2y4zatql8ju4ravmimjr6gqsttcii9rxvprdzcr5ne1weycey0gy2wakshat.wadhwaNo ratings yet

- Optus Delivery NoteDocument1 pageOptus Delivery NoteAna ModyNo ratings yet

- Analysis & Interpretation: Q 1 Do You Take Any Financial Services From Bank?Document10 pagesAnalysis & Interpretation: Q 1 Do You Take Any Financial Services From Bank?Aayushi ShahNo ratings yet

- Questions Strongly Agree Agree Undecided Disagree: Chart TitleDocument3 pagesQuestions Strongly Agree Agree Undecided Disagree: Chart TitleGiezel RevisNo ratings yet

- Male Female Info GraphicDocument11 pagesMale Female Info GraphicLeonardo CorreiaNo ratings yet

- Data 2016Document2 pagesData 2016Johan NicholasNo ratings yet

- Jee Mains Answer KeysDocument35 pagesJee Mains Answer KeysSarjit GurjarNo ratings yet

- All Lectures BasicsDocument348 pagesAll Lectures BasicsKarim HassaanNo ratings yet

- All ReportDocument31 pagesAll ReportAzizki WanieNo ratings yet

- RL 3.2 - Rawat DaruratDocument3 pagesRL 3.2 - Rawat DaruratatynorengNo ratings yet

- 14 Registered Candidates-1Document1 page14 Registered Candidates-1Kalicharan PandeyNo ratings yet

- CML For NetDocument23 pagesCML For Netsarath6872No ratings yet

- Business Analytics (Case 3.3)Document213 pagesBusiness Analytics (Case 3.3)savisuren312No ratings yet

- HR AnalyticsDocument24 pagesHR AnalyticsJohnNo ratings yet

- GTUTeachingScheme Sem-4 MBA PDFDocument4 pagesGTUTeachingScheme Sem-4 MBA PDFHansa PrajapatiNo ratings yet

- Market Res Testing SteelscrDocument23 pagesMarket Res Testing SteelscrÅdnAn MehmOodNo ratings yet

- Vlookup File For PracticeDocument12 pagesVlookup File For PracticeRushikesh KharatNo ratings yet

- Template For 'Skill Development System Performance'Document20 pagesTemplate For 'Skill Development System Performance'DhruvilJakasaniyaNo ratings yet

- JB Al Khuwair In-Store CSAT Survey Consolidator Ver 3Document2,278 pagesJB Al Khuwair In-Store CSAT Survey Consolidator Ver 3Maria roxanne HernandezNo ratings yet

- 25 - December - 2022 Truck Sheet Match (Responses)Document13 pages25 - December - 2022 Truck Sheet Match (Responses)ds wwNo ratings yet

- Object Parameter Sub - Topic (Short) : Reporttimerimlba4Document6 pagesObject Parameter Sub - Topic (Short) : Reporttimerimlba4Mardianto ChandraNo ratings yet

- FINALDocument5 pagesFINALSrishti GaurNo ratings yet

- Table D. Key Employment Indicators by Sex With Measures of Precision, Philippines February2022f, January2023p and February2023pDocument5 pagesTable D. Key Employment Indicators by Sex With Measures of Precision, Philippines February2022f, January2023p and February2023pGellie Rose PorthreeyesNo ratings yet

- Atharva: Bill No-45Document2 pagesAtharva: Bill No-45jaiswalpd165No ratings yet

- Adobe Scan 15-Feb-2024Document3 pagesAdobe Scan 15-Feb-2024Ganta AravindNo ratings yet

- Aits Slip PWDocument1 pageAits Slip PWbikrampaul021No ratings yet

- DT. Penduduk Beutong Edit 2021Document3 pagesDT. Penduduk Beutong Edit 2021intan fazlianiNo ratings yet

- 265 Prasad Seeds P LTD Medchal (265)Document1 page265 Prasad Seeds P LTD Medchal (265)krishna makkaNo ratings yet

- Quotation Format 5Document2 pagesQuotation Format 5alpesh patelNo ratings yet

- Conteos PresentacionDocument13 pagesConteos PresentacionKevin Daniel Guerrero TolentinoNo ratings yet

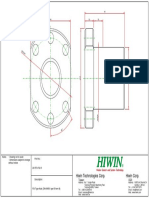

- Hiwin Corp. Hiwin Technologies Corp.: Usa: TaiwanDocument1 pageHiwin Corp. Hiwin Technologies Corp.: Usa: TaiwanRadovan KnezevicNo ratings yet

- Carril 2calculosDocument8 pagesCarril 2calculosjose geovanny garcia cajinaNo ratings yet

- Benchmarking HR Practices Across Media Related CompaniesDocument48 pagesBenchmarking HR Practices Across Media Related CompanieslonikasinghNo ratings yet

- Pone 0181807 s004Document124 pagesPone 0181807 s004kierra2No ratings yet

- CDW ReportDocument4 pagesCDW ReportMike Zoren BalicocoNo ratings yet

- Shortlisting Criteria SAP - 2023Document1 pageShortlisting Criteria SAP - 2023ULTIMATE SUPER HERO SERIES USHSNo ratings yet

- Sex Disaggregated Data of Magsingal National High Schoolschool Personnel SY 2019-2020Document15 pagesSex Disaggregated Data of Magsingal National High Schoolschool Personnel SY 2019-2020L.a.MarquezNo ratings yet

- Statistics: 1. A. Ukuran Tendensi SentralDocument16 pagesStatistics: 1. A. Ukuran Tendensi SentralEco BodoNo ratings yet

- Rsran046 - Sho Adjacencies-CellpairDocument10 pagesRsran046 - Sho Adjacencies-CellpairOnnyNo ratings yet

- Institute Wise Activities From 2023-01-01 To 2023-12-31Document1 pageInstitute Wise Activities From 2023-01-01 To 2023-12-31Fozia LiaqatNo ratings yet

- December 2021 90% Confidence Interval) : F P P P P P P P P P P P PDocument10 pagesDecember 2021 90% Confidence Interval) : F P P P P P P P P P P P PRealie BorbeNo ratings yet

- Shital powerBIsheet1Document16 pagesShital powerBIsheet1Shital MashitakarNo ratings yet

- Flipkart Labels 03 Apr 2024-12-54 CroppedDocument4 pagesFlipkart Labels 03 Apr 2024-12-54 Croppedchamundaxerox88No ratings yet

- Post Graduate Session 2 OperationsDocument129 pagesPost Graduate Session 2 Operationssujithgec1No ratings yet

- 2012 HCI MA H2 P2 Prelim SolnDocument7 pages2012 HCI MA H2 P2 Prelim Solnkll93No ratings yet

- Solution Econometric Chapter 10 Regression Panel DataDocument3 pagesSolution Econometric Chapter 10 Regression Panel Datadumuk473No ratings yet

- Analisis Deret Waktu SarimaDocument11 pagesAnalisis Deret Waktu SarimaAjeng Jasmine FirdausyNo ratings yet

- 05 Quantitative Manual Chapter 4Document31 pages05 Quantitative Manual Chapter 4Ivy Gultian VillavicencioNo ratings yet

- S1-Introduction To Quantitative Research 12-9-2017Document25 pagesS1-Introduction To Quantitative Research 12-9-2017YaraNo ratings yet

- Prep - Data Sets - For Students - BADocument33 pagesPrep - Data Sets - For Students - BAMyThinkPotNo ratings yet

- Statisticshomework f14Document2 pagesStatisticshomework f14Pooja JainNo ratings yet

- Burnout Syndrome Among Dental Students: A Short Version of The "Burnout Clinical Subtype Questionnaire " Adapted For Students (BCSQ-12-SS)Document11 pagesBurnout Syndrome Among Dental Students: A Short Version of The "Burnout Clinical Subtype Questionnaire " Adapted For Students (BCSQ-12-SS)abhishekbmcNo ratings yet

- 2.measures of Variation by Shakil-1107Document18 pages2.measures of Variation by Shakil-1107MD AL-AMINNo ratings yet

- Pengaruh Etika Kerja Terhadap Kualitas Pelayanan Melalui Profesionalisme Kerja Pada Cv. Sentosa Deli Mandiri MedanDocument13 pagesPengaruh Etika Kerja Terhadap Kualitas Pelayanan Melalui Profesionalisme Kerja Pada Cv. Sentosa Deli Mandiri MedanGamma AnggaraNo ratings yet

- Correlation and Linear RegressionDocument25 pagesCorrelation and Linear RegressionMudita ChawlaNo ratings yet

- Measures of Central TendencyDocument8 pagesMeasures of Central TendencyNor HidayahNo ratings yet

- Standard Deviation - Wikipedia, The Free EncyclopediaDocument12 pagesStandard Deviation - Wikipedia, The Free EncyclopediaManoj BorahNo ratings yet

- BBM 350 Managerial StatisticsDocument5 pagesBBM 350 Managerial StatisticsphelixrabiloNo ratings yet

- Null Hypothesis Significance TestingDocument9 pagesNull Hypothesis Significance Testingkash1080No ratings yet

- Chapter 2 - P2 PDFDocument41 pagesChapter 2 - P2 PDFDr-Rabia Almamalook100% (1)

- Lazy Learning (Or Learning From Your Neighbors)Document3 pagesLazy Learning (Or Learning From Your Neighbors)Diyar T AlzuhairiNo ratings yet

- EC221: Principles of Econometrics Introducing Lent: DR M. SchafgansDocument518 pagesEC221: Principles of Econometrics Introducing Lent: DR M. SchafgansRemo MuharemiNo ratings yet

- Ho MultivariateDocument4 pagesHo MultivariateChirag SharmaNo ratings yet

- 15XD25Document3 pages15XD2520PW02 - AKASH ANo ratings yet

- Stat4006 2022-23 PS4Document3 pagesStat4006 2022-23 PS4resulmamiyev1No ratings yet

- Forecasting: by Alok Kumar SinghDocument30 pagesForecasting: by Alok Kumar Singhchandel08No ratings yet

- Introduction To Boosting - 2Document79 pagesIntroduction To Boosting - 2Waqar SaleemNo ratings yet

- Egranger Version 1 CodeDocument7 pagesEgranger Version 1 CodeAftab KhakwaniNo ratings yet

- Linear Regression AssignmentDocument1 pageLinear Regression AssignmentJohnry DayupayNo ratings yet

- Weighted Average and Exponential SmoothingDocument3 pagesWeighted Average and Exponential SmoothingKumuthaa IlangovanNo ratings yet

- Stat-112 1-10Document108 pagesStat-112 1-10Mikaela BasconesNo ratings yet

7043 Rows × 21 Columns: Import As Import As Import As Import As Import As

7043 Rows × 21 Columns: Import As Import As Import As Import As Import As

Original Description:

Original Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

7043 Rows × 21 Columns: Import As Import As Import As Import As Import As

7043 Rows × 21 Columns: Import As Import As Import As Import As Import As

Copyright:

Available Formats

In [1]: print("hello world")

hello world

In [2]: import numpy as np

In [3]: import pandas as pd

In [4]: import seaborn as sns

In [5]: import matplotlib.pyplot as plt

In [6]: import matplotlib.ticker as mtick

In [7]: telecom_cust_churn = pd.read_csv('F:/PRADEEP/CASE STUDY PYTHON/Churn.csv')

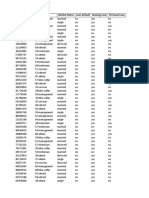

In [8]: telecom_cust_churn

Out[8]:
      customerID  gender  SeniorCitizen  Partner  Dependents  tenure  PhoneService  MultipleLines     InternetService
0     7590-VHVEG  Female  0              Yes      No          1       No            No phone service  ...
1     5575-GNVDE  Male    0              No       No          34      Yes           No                ...
2     3668-QPYBK  Male    0              No       No          2       Yes           No                ...
3     7795-CFOCW  Male    0              No       No          45      No            No phone service  ...
4     9237-HQITU  Female  0              No       No          2       Yes           No                Fiber...
...   ...         ...     ...            ...      ...         ...     ...           ...               ...
7038  6840-RESVB  Male    0              Yes      Yes         24      Yes           Yes               ...
7039  2234-XADUH  Female  0              Yes      Yes         72      Yes           Yes               Fiber...
7040  4801-JZAZL  Female  0              Yes      Yes         11      No            No phone service  ...
7041  8361-LTMKD  Male    1              Yes      No          4       Yes           Yes               Fiber...
7042  3186-AJIEK  Male    0              No       No          66      Yes           No                Fiber...

7043 rows × 21 columns

In [39]: telecom_cust_churn.head()

Out[39]:
   customerID  gender  SeniorCitizen  Partner  Dependents  tenure  PhoneService  MultipleLines     InternetService
0  7590-VHVEG  Female  0              Yes      No          1       No            No phone service  D...
1  5575-GNVDE  Male    0              No       No          34      Yes           No                D...
2  3668-QPYBK  Male    0              No       No          2       Yes           No                D...
3  7795-CFOCW  Male    0              No       No          45      No            No phone service  D...
4  9237-HQITU  Female  0              No       No          2       Yes           No                Fiber opt...

5 rows × 21 columns

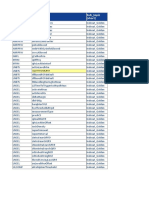

In [40]: telecom_cust_churn.columns.values

Out[40]: array(['customerID', 'gender', 'SeniorCitizen', 'Partner', 'Dependents',

'tenure', 'PhoneService', 'MultipleLines', 'InternetService',

'OnlineSecurity', 'OnlineBackup', 'DeviceProtection',

'TechSupport', 'StreamingTV', 'StreamingMovies', 'Contract',

'PaperlessBilling', 'PaymentMethod', 'MonthlyCharges',

'TotalCharges', 'Churn'], dtype=object)

In [16]: # Checking the data types of all the columns

telecom_cust_churn.dtypes

Out[16]: customerID object

gender object

SeniorCitizen int64

Partner object

Dependents object

tenure int64

PhoneService object

MultipleLines object

InternetService object

OnlineSecurity object

OnlineBackup object

DeviceProtection object

TechSupport object

StreamingTV object

StreamingMovies object

Contract object

PaperlessBilling object

PaymentMethod object

MonthlyCharges float64

TotalCharges object

Churn object

dtype: object

In [19]: # Converting Total Charges to a numerical data type.

telecom_cust_churn.TotalCharges = pd.to_numeric(telecom_cust_churn.TotalCharges, errors='coerce')  # unparseable entries become NaN

telecom_cust_churn.isnull().sum()

Out[19]: customerID 0

gender 0

SeniorCitizen 0

Partner 0

Dependents 0

tenure 0

PhoneService 0

MultipleLines 0

InternetService 0

OnlineSecurity 0

OnlineBackup 0

DeviceProtection 0

TechSupport 0

StreamingTV 0

StreamingMovies 0

Contract 0

PaperlessBilling 0

PaymentMethod 0

MonthlyCharges 0

TotalCharges 11

Churn 0

dtype: int64
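The 11 missing values in `TotalCharges` appear only after the numeric conversion, which suggests that those entries could not be parsed as numbers. A minimal sketch of that behaviour, assuming the offending entries are blank strings (the three values below are hypothetical, not taken from the data set):

```python
import pandas as pd

# Hypothetical raw values: two parseable numbers and one blank string,
# standing in for TotalCharges entries that cannot be converted.
raw = pd.Series(["29.85", " ", "1889.5"])

# errors="coerce" replaces anything unparseable with NaN instead of raising.
converted = pd.to_numeric(raw, errors="coerce")
n_missing = int(converted.isnull().sum())

print(converted)
print("missing:", n_missing)
```

Counting the nulls per column right after the conversion, as the cell above does, is a quick way to see how many rows were affected before deciding whether to drop or impute them.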

In [20]: #Removing missing values

telecom_cust_churn.dropna(inplace = True)

#Remove customer IDs from the data set

df2 = telecom_cust_churn.iloc[:, 1:].copy()  # copy so the edits below do not act on a slice

#Converting the target variable into a binary numeric variable

df2['Churn'] = df2['Churn'].map({'Yes': 1, 'No': 0})

#Let's convert all the categorical variables into dummy variables

df_dummies = pd.get_dummies(df2)

df_dummies.head()

Out[20]:
   SeniorCitizen  tenure  MonthlyCharges  TotalCharges  Churn  gender_Female  gender_Male  Partner_No  ...
0  0              1       29.85           29.85         0      1              0            0           ...
1  0              34      56.95           1889.50       0      0              1            1           ...
2  0              2       53.85           108.15        1      0              1            1           ...
3  0              45      42.30           1840.75       0      0              1            1           ...
4  0              2       70.70           151.65        1      1              0            1           ...

5 rows × 46 columns
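A minimal sketch of what `pd.get_dummies` does to a categorical column, on a hypothetical three-row frame. The row values are made up for illustration, and `dtype=int` is passed so the indicator columns show as 0/1 regardless of the pandas version in use:

```python
import pandas as pd

# Hypothetical toy frame: one numeric column, one categorical column,
# and an already-binary target.
toy = pd.DataFrame({
    "tenure": [1, 34, 2],
    "Contract": ["Month-to-month", "One year", "Month-to-month"],
    "Churn": [0, 0, 1],
})

# Numeric columns pass through unchanged; each category of an object
# column becomes its own 0/1 indicator column named <column>_<category>.
dummies = pd.get_dummies(toy, dtype=int)
dummy_columns = dummies.columns.tolist()

print(dummy_columns)
print(dummies)
```

This is why the 20 columns left after dropping `customerID` expand to 46: every distinct value of every categorical column gets its own indicator.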

In [21]: #Get Correlation of "Churn" with other variables:

plt.figure(figsize=(15,8))

df_dummies.corr()['Churn'].sort_values(ascending = False).plot(kind='bar')

Out[21]: <AxesSubplot:>

In [ ]: # Observation

Month to month contracts, absence of online security and tech support seem to be posit

Interestingly, services such as Online security, streaming TV, online backup, tech sup

We will explore the patterns for the above correlations below before we delve into mod

In [22]: # Relation Between Monthly and Total Charges

telecom_cust_churn[['MonthlyCharges', 'TotalCharges']].plot.scatter(x='MonthlyCharges', y='TotalCharges')

Out[22]: <AxesSubplot:xlabel='MonthlyCharges', ylabel='TotalCharges'>

In [23]: sns.boxplot(x = telecom_cust_churn.Churn, y = telecom_cust_churn.tenure)

Out[23]: <AxesSubplot:xlabel='Churn', ylabel='tenure'>

In [24]: #1 Logistic Regression

# We will use the data frame where we had created dummy variables

y = df_dummies['Churn'].values

X = df_dummies.drop(columns = ['Churn'])

# Scaling all the variables to a range of 0 to 1

from sklearn.preprocessing import MinMaxScaler

features = X.columns.values

scaler = MinMaxScaler(feature_range = (0,1))

scaler.fit(X)

X = pd.DataFrame(scaler.transform(X))

X.columns = features
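For reference, scaling to the range 0 to 1 maps each value x of a column to (x - min) / (max - min). The sketch below reimplements that formula with NumPy on the five `tenure` values shown in the `head()` output; the helper name `min_max_scale` is hypothetical, and returning zeros for a constant column is a choice made here for the illustration:

```python
import numpy as np

def min_max_scale(column):
    """Rescale a 1-D sequence linearly so its minimum maps to 0 and its maximum to 1."""
    column = np.asarray(column, dtype=float)
    lo, hi = column.min(), column.max()
    if hi == lo:
        # A constant column has no spread to rescale; return zeros.
        return np.zeros_like(column)
    return (column - lo) / (hi - lo)

tenure = [1, 34, 2, 45, 2]  # first five tenure values from the data set
scaled = min_max_scale(tenure)
print(scaled)
```

Because the minimum and maximum are taken from whatever data the scaler is fitted on, fitting on the full data set before splitting (as the cell above does) lets the test rows influence the scaling; fitting on the training rows only is a common alternative.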

In [25]: # Create Train & Test Data

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=

In [26]: # Running logistic regression model

from sklearn.linear_model import LogisticRegression

model = LogisticRegression()

result = model.fit(X_train, y_train)

In [27]: from sklearn import metrics

prediction_test = model.predict(X_test)

# Print the prediction accuracy

print (metrics.accuracy_score(y_test, prediction_test))

0.8075829383886256

In [28]: # To get the weights of all the variables

weights = pd.Series(model.coef_[0],

index=X.columns.values)

print (weights.sort_values(ascending = False)[:10].plot(kind='bar'))

AxesSubplot(0.125,0.125;0.775x0.755)

In [30]: print(weights.sort_values(ascending = False)[-10:].plot(kind='bar'))

AxesSubplot(0.125,0.125;0.775x0.755)

In [ ]: # Observations

We can see that some variables have a negative relation to our predicted variable (Chu

1) As we saw in our EDA, having a two-year contract reduces chances of churn. Two-year c

2) Having DSL internet service also reduces the probability of churn

3) Lastly, total charges, monthly contracts, fibre optic internet services and seniori

Any hypothesis on the above would be really helpful!
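One way to read the sign and size of logistic regression weights is to exponentiate them into odds ratios: a weight w multiplies the odds of the positive class by exp(w) for a one-unit increase in the feature, holding the others fixed. With features scaled to 0 to 1, one unit is the move from a feature's minimum to its maximum. A small sketch with hypothetical weights (the feature names and numbers are placeholders, not the values fitted in this notebook):

```python
import numpy as np
import pandas as pd

# Hypothetical coefficients for illustration only.
weights = pd.Series({"feature_a": 0.7, "feature_b": 0.0, "feature_c": -1.2})

# exp(w) > 1 raises the odds of churn, exp(w) < 1 lowers them,
# and exp(0) = 1 leaves them unchanged.
odds_ratios = np.exp(weights).sort_values(ascending=False)
print(odds_ratios)
```

Applied to the fitted model, the same transformation would be `np.exp(model.coef_[0])`, indexed by `X.columns.values` as in the weights cell above.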

In [31]: #2 Random Forest

from sklearn.ensemble import RandomForestClassifier

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=

# max_features="auto" meant "sqrt" for classifiers and was removed in scikit-learn 1.3
model_rf = RandomForestClassifier(n_estimators=1000, oob_score=True, n_jobs=-1,
                                  random_state=50, max_features="sqrt",
                                  max_leaf_nodes=30)

model_rf.fit(X_train, y_train)

# Make predictions

prediction_test = model_rf.predict(X_test)

print (metrics.accuracy_score(y_test, prediction_test))

0.8088130774697939

In [32]: importances = model_rf.feature_importances_

weights = pd.Series(importances,

index=X.columns.values)

weights.sort_values()[-10:].plot(kind = 'barh')

Out[32]: <AxesSubplot:>
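The forest above is built with `oob_score = True`, but the out-of-bag estimate is never read back. A minimal sketch of how it could be inspected, using synthetic data from `make_classification` rather than the churn data (the sample sizes and the reduced `n_estimators` are assumptions chosen to keep the example fast):

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Synthetic stand-in for the churn features and labels.
X_demo, y_demo = make_classification(n_samples=300, n_features=8, random_state=0)

forest = RandomForestClassifier(n_estimators=100, oob_score=True,
                                max_leaf_nodes=30, random_state=50)
forest.fit(X_demo, y_demo)

# Accuracy estimated on the rows each tree did not see during bootstrapping.
oob = forest.oob_score_
# Impurity-based importances, one per feature, normalised to sum to 1.
importances = forest.feature_importances_

print("OOB accuracy:", oob)
print("importances:", importances)
```

The out-of-bag score gives a second accuracy estimate without touching the test split, which can be compared against the test accuracy printed above.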

In [ ]: # Observations:

1) From random forest algorithm, monthly contract, tenure and total charges are the mo

2) The results from random forest are very similar to that of the logistic regression

In [33]: #3 Support Vector Machine (SVM)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=

In [35]: from sklearn.svm import SVC

model_svm = SVC(kernel='linear')  # separate variable instead of an attribute on the logistic regression model

model_svm.fit(X_train,y_train)

preds = model_svm.predict(X_test)

metrics.accuracy_score(y_test, preds)

Out[35]: 0.820184790334044

In [36]: # Create the Confusion matrix

from sklearn.metrics import classification_report, confusion_matrix

print(confusion_matrix(y_test,preds))

[[953 89]

[164 201]]
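Accuracy alone hides how the errors are split between the two classes. The sketch below derives accuracy, precision, recall and F1 for the churn class from the confusion matrix printed above, using scikit-learn's layout (rows are true labels, columns are predicted labels, in the order 0 then 1). The majority-class baseline is an addition for comparison, not something computed in the notebook:

```python
# Confusion matrix as printed above: [[TN, FP], [FN, TP]]
cm = [[953, 89], [164, 201]]
tn, fp = cm[0]
fn, tp = cm[1]
total = tn + fp + fn + tp

accuracy = (tp + tn) / total
precision = tp / (tp + fp)   # of the customers flagged as churners, the share that churned
recall = tp / (tp + fn)      # of the customers that churned, the share that was flagged
f1 = 2 * precision * recall / (precision + recall)

# Accuracy of always predicting "no churn" on this test split.
majority_baseline = (tn + fp) / total

print(f"accuracy={accuracy:.4f} precision={precision:.4f} "
      f"recall={recall:.4f} f1={f1:.4f} baseline={majority_baseline:.4f}")
```

The accuracy reproduces the 0.8202 reported by `accuracy_score`, while precision is roughly 0.69 and recall roughly 0.55, so the model misses a little under half of the customers who actually churned.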

In [ ]: # Observation

With SVM, I was able to increase the accuracy to about 82%.
