Professional Documents

Culture Documents

Ode and Atasets: Rating Easy? AI? Sys? Thy? Morning?

Ode and Atasets: Rating Easy? AI? Sys? Thy? Morning?

Uploaded by

Jiahong He0 ratings0% found this document useful (0 votes)

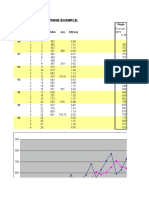

8 views6 pagesThe document contains two sections. The first section is a dataset with 20 rows of ratings for courses rated on ease, use of AI, systems focus, theory focus, and morning class. The second section is a bibliography with 18 citations related to machine learning topics such as domain adaptation, fairness, and imitation learning.

Original Description:

Original Title

19 Back Matter

Copyright

© © All Rights Reserved

Available Formats

PDF, TXT or read online from Scribd

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentThe document contains two sections. The first section is a dataset with 20 rows of ratings for courses rated on ease, use of AI, systems focus, theory focus, and morning class. The second section is a bibliography with 18 citations related to machine learning topics such as domain adaptation, fairness, and imitation learning.

Copyright:

© All Rights Reserved

Available Formats

Download as PDF, TXT or read online from Scribd

Download as pdf or txt

0 ratings0% found this document useful (0 votes)

8 views6 pagesOde and Atasets: Rating Easy? AI? Sys? Thy? Morning?

Ode and Atasets: Rating Easy? AI? Sys? Thy? Morning?

Uploaded by

Jiahong HeThe document contains two sections. The first section is a dataset with 20 rows of ratings for courses rated on ease, use of AI, systems focus, theory focus, and morning class. The second section is a bibliography with 18 citations related to machine learning topics such as domain adaptation, fairness, and imitation learning.

Copyright:

© All Rights Reserved

Available Formats

Download as PDF, TXT or read online from Scribd

Download as pdf or txt

You are on page 1of 6

CODE AND DATASETS

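| Rating | Easy? | AI? | Sys? | Thy? | Morning? |
|--------|-------|-----|------|------|----------|
| +2 | y | y | n | y | n |
| +2 | y | y | n | y | n |
| +2 | n | y | n | n | n |
| +2 | n | n | n | y | n |
| +2 | n | y | y | n | y |
| +1 | y | y | n | n | n |
| +1 | y | y | n | y | n |
| +1 | n | y | n | y | n |
| 0 | n | n | n | n | y |
| 0 | y | n | n | y | y |
| 0 | n | y | n | y | n |
| 0 | y | y | y | y | y |
| -1 | y | y | y | n | y |
| -1 | n | n | y | y | n |
| -1 | n | n | y | n | y |
| -1 | y | n | y | n | y |
| -2 | n | n | y | y | n |
| -2 | n | y | y | n | y |
| -2 | y | n | y | n | n |
| -2 | y | n | y | n | y |

Table 1: Course rating data set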
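As a minimal sketch of how Table 1 might be loaded for experiments, the snippet below transcribes the rows into Python and compares the mean rating of courses with and without a given property. The names `FEATURES`, `load_rows`, and `mean_rating_by_feature`, and the choice to encode y/n as booleans, are illustrative assumptions and do not come from the text.

```python
from collections import defaultdict

# Column names are shorthand for the Table 1 headers (an assumption of this sketch).
FEATURES = ["easy", "ai", "sys", "thy", "morning"]

# Rows transcribed from Table 1: rating, then y/n for each feature in order.
RAW = """
+2 y y n y n
+2 y y n y n
+2 n y n n n
+2 n n n y n
+2 n y y n y
+1 y y n n n
+1 y y n y n
+1 n y n y n
0 n n n n y
0 y n n y y
0 n y n y n
0 y y y y y
-1 y y y n y
-1 n n y y n
-1 n n y n y
-1 y n y n y
-2 n n y y n
-2 n y y n y
-2 y n y n n
-2 y n y n y
"""


def load_rows(raw=RAW):
    """Parse the raw text into dicts with an int rating and boolean features."""
    rows = []
    for line in raw.strip().splitlines():
        rating, *answers = line.split()
        row = {"rating": int(rating)}
        row.update({name: ans == "y" for name, ans in zip(FEATURES, answers)})
        rows.append(row)
    return rows


def mean_rating_by_feature(rows, feature):
    """Return the mean rating for feature == True and feature == False."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[feature]].append(row["rating"])
    return {value: sum(rs) / len(rs) for value, rs in groups.items()}


if __name__ == "__main__":
    rows = load_rows()
    for name in FEATURES:
        print(name, mean_rating_by_feature(rows, name))
```

Comparing group means like this is only a quick way to see which column separates the ratings most strongly; it is not a learning algorithm in itself.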

BIBLIOGRAPHY

Shai Ben-David, John Blitzer, Koby Crammer, and Fernando Pereira. Analysis of representations for domain adaptation. Advances in Neural Information Processing Systems, 19:137, 2007.

Steffen Bickel, Michael Bruckner, and Tobias Scheffer. Discriminative learning for differing training and test distributions. In Proceedings of the International Conference on Machine Learning (ICML), 2007.

Sergey Brin. Near neighbor search in large metric spaces. In Conference on Very Large Databases (VLDB), 1995.

Hal Daumé III. Frustratingly easy domain adaptation. In Conference of the Association for Computational Linguistics (ACL), Prague, Czech Republic, 2007.

Sorelle A Friedler, Carlos Scheidegger, and Suresh Venkatasubramanian. On the (im)possibility of fairness. arXiv preprint arXiv:1609.07236, 2016.

Moritz Hardt, Eric Price, and Nathan Srebro. Equality of opportunity in supervised learning. In Advances in Neural Information Processing Systems, pages 3315–3323, 2016.

Matti Kääriäinen. Lower bounds for reductions. Talk at the Atomic Learning Workshop (TTI-C), March 2006.

Tom M. Mitchell. Machine Learning. McGraw Hill, 1997.

J. Ross Quinlan. Induction of decision trees. Machine Learning, 1(1):81–106, 1986.

Frank Rosenblatt. The perceptron: A probabilistic model for information storage and organization in the brain. Psychological Review, 65:386–408, 1958. Reprinted in Neurocomputing (MIT Press, 1998).

Stéphane Ross, Geoff J. Gordon, and J. Andrew Bagnell. A reduction of imitation learning and structured prediction to no-regret online learning. In Proceedings of the Workshop on Artificial Intelligence and Statistics (AIStats), 2011.

224 a course in machine learning

INDEX

K-nearest neighbors, 58
d_A-distance, 82
p-norms, 104
0/1 loss, 100
80% rule, 80

absolute loss, 14
activation function, 130
activations, 41
AdaBoost, 166
adaptation, 74
algorithm, 99
all pairs, 92
all versus all, 92
approximation error, 71
architecture selection, 139
area under the curve, 64, 96
argmax problem, 199
AUC, 64, 95, 96
AVA, 92
averaged perceptron, 52

back-propagation, 134, 137
bag of words, 56
bagging, 165
base learner, 164
batch, 173
Bayes error rate, 20
Bayes optimal classifier, 19
Bayes optimal error rate, 20
Bayes rule, 117
Bernoulli distribution, 121
bias, 42
bias/variance trade-off, 72
binary features, 30
bipartite ranking problems, 95
boosting, 155, 164
bootstrap resampling, 165
bootstrapping, 67, 69

categorical features, 30
chain rule, 117, 120
chord, 102
circuit complexity, 138
clustering, 35, 178
clustering quality, 178
complexity, 34
compounding error, 215
concave, 102
concavity, 193
concept, 157
confidence intervals, 68
constrained optimization problem, 112
contour, 104
convergence rate, 107
convex, 99, 101
covariate shift, 74
cross validation, 65, 68
cubic feature map, 144
curvature, 107

data covariance matrix, 184
data generating distribution, 15
decision boundary, 34
decision stump, 168
decision tree, 8, 10
decision trees, 57
density estimation, 76
development data, 26
dimensionality reduction, 178
discrepancy, 82
discrete distribution, 121
disparate impact, 80
distance, 31
domain adaptation, 74
dominates, 63
dot product, 45
dual problem, 151
dual variables, 151

early stopping, 53, 132
embedding, 178
ensemble, 164
error driven, 43
error rate, 100
estimation error, 71
Euclidean distance, 31
evidence, 127
example normalization, 59, 60
examples, 9
expectation maximization, 186, 189
expected loss, 16
expert, 212
exponential loss, 102, 169

feasible region, 113
feature augmentation, 78
feature combinations, 54
feature mapping, 54
feature normalization, 59
feature scale, 33
feature space, 31
feature values, 11, 29
feature vector, 29, 31
features, 11, 29
forward-propagation, 137
fractional assignments, 191
furthest-first heuristic, 180

Gaussian distribution, 121
Gaussian kernel, 147
Gaussian Mixture Models, 191
generalize, 9, 17
generative story, 123
geometric view, 29
global minimum, 106
GMM, 191


gradient, 105
gradient ascent, 105
gradient descent, 105

Hamming loss, 202
hard-margin SVM, 113
hash kernel, 177
held-out data, 26
hidden units, 129
hidden variables, 186
hinge loss, 102, 203
histogram, 12
horizon, 213
hyperbolic tangent, 130
hypercube, 38
hyperparameter, 26, 44, 101
hyperplane, 41
hyperspheres, 38
hypothesis, 71, 157
hypothesis class, 71, 160
hypothesis testing, 67

i.i.d. assumption, 117
identically distributed, 24
ILP, 195, 207
imbalanced data, 85
imitation learning, 212
importance sampling, 75
importance weight, 86
independent, 24
independently, 117
independently and identically distributed, 117
indicator function, 100
induce, 16
induced distribution, 88
induction, 9
inductive bias, 20, 31, 33, 103, 121
integer linear program, 207
integer linear programming, 195
iteration, 36

jack-knifing, 69
Jensen’s inequality, 193
joint, 124

K-nearest neighbors, 32
Karush-Kuhn-Tucker conditions, 152
kernel, 141, 145
kernel trick, 146
kernels, 54
KKT conditions, 152

label, 11
Lagrange multipliers, 119
Lagrange variable, 119
Lagrangian, 119
lattice, 200
layer-wise, 139
learning by demonstration, 212
leave-one-out cross validation, 65
level-set, 104
license, 2
likelihood, 127
linear classifier, 169
linear classifiers, 169
linear decision boundary, 41
linear regression, 110
linearly separable, 48
link function, 130
log likelihood, 118
log posterior, 127
log probability, 118
log-likelihood ratio, 122
logarithmic transformation, 61
logistic loss, 102
logistic regression, 126
LOO cross validation, 65
loss function, 14
loss-augmented inference, 205
loss-augmented search, 205

margin, 49, 112
margin of a data set, 49
marginal likelihood, 127
marginalization, 117
Markov features, 198
maximum a posteriori, 127
maximum depth, 26
maximum likelihood estimation, 118
mean, 59
Mercer’s condition, 146
model, 99
modeling, 25
multi-layer network, 129

naive Bayes assumption, 120
nearest neighbor, 29, 31
neural network, 169
neural networks, 54, 129
neurons, 41
noise, 21
non-convex, 135
non-linear, 129
Normal distribution, 121
normalize, 46, 59
null hypothesis, 67

objective function, 100
one versus all, 90
one versus rest, 90
online, 42
optimization problem, 100
oracle, 212, 220
oracle experiment, 28
output unit, 129
OVA, 90
overfitting, 23
oversample, 88

p-value, 67
PAC, 156, 166
paired t-test, 67
parametric test, 67
parity, 21
parity function, 138
patch representation, 56
PCA, 184
perceptron, 41, 42, 58
perpendicular, 45
pixel representation, 55
policy, 212
polynomial kernels, 146
positive semi-definite, 146
posterior, 127
precision, 62
precision/recall curves, 63
predict, 9
preference function, 94
primal variables, 151
principal components analysis, 184
prior, 127
probabilistic modeling, 116
Probably Approximately Correct, 156
programming by example, 212
projected gradient, 151
projection, 46
psd, 146

radial basis function, 139
random forests, 169
random variable, 117
RBF kernel, 147


RBF network, 139
recall, 62
receiver operating characteristic, 64
reconstruction error, 184
reductions, 88
redundant features, 56
regularized objective, 101
regularizer, 100, 103
reinforcement learning, 212
representer theorem, 143, 145
ROC curve, 64

sample complexity, 157, 158, 160
sample mean, 59
sample selection bias, 74
sample variance, 59
semi-supervised adaptation, 75
sensitivity, 64
separating hyperplane, 99
sequential decision making, 212
SGD, 173
shallow decision tree, 21, 168
shape representation, 56
sigmoid, 130
sigmoid function, 126
sigmoid network, 139
sign, 130
single-layer network, 129
singular, 110
slack, 148
slack parameters, 113
smoothed analysis, 180
soft assignments, 190
soft-margin SVM, 113
span, 143
sparse, 104
specificity, 64
squared loss, 14, 102
statistical inference, 116
statistically significant, 67
steepest ascent, 105
stochastic gradient descent, 173
stochastic optimization, 172
strong law of large numbers, 24
strong learner, 166
strong learning algorithm, 166
strongly convex, 107
structural risk minimization, 99
structured hinge loss, 203
structured prediction, 195
sub-sampling, 87
subderivative, 108
subgradient, 108
subgradient descent, 109
sum-to-one, 117
support vector machine, 112
support vectors, 153
surrogate loss, 102
symmetric modes, 135

t-test, 67
test data, 25
test error, 25
test set, 9
text categorization, 56
the curse of dimensionality, 37
threshold, 42
Tikhonov regularization, 99
time horizon, 213
total variation distance, 82
train/test mismatch, 74
training data, 9, 16, 24
training error, 16
trajectory, 213
trellis, 200
truncated gradients, 175
two-layer network, 129

unary features, 198
unbiased, 47
underfitting, 23
unit hypercube, 39
unit vector, 46
unsupervised adaptation, 75
unsupervised learning, 35

validation data, 26
Vapnik-Chervonenkis dimension, 162
variance, 59, 165
variational distance, 82
VC dimension, 162
vector, 31
visualize, 178
vote, 32
voted perceptron, 52
voting, 52

weak learner, 166
weak learning algorithm, 166
weights, 41

zero/one loss, 14
