
9 Read and Write in Hadoop

Uploaded by kotha yaswanth

There are three main components of Hadoop - HDFS for storage, MapReduce for processing, and YARN for resource management. HDFS uses a namenode to store metadata and datanodes to store data blocks. For read operations, a client contacts the namenode to get block locations then reads data directly from datanodes. For write operations, a client creates a file and writes data to datanodes while the namenode tracks block allocation and metadata.


9. There are three components of Hadoop.

Hadoop HDFS - Hadoop Distributed File System (HDFS) is the storage unit of Hadoop.

Hadoop MapReduce - Hadoop MapReduce is the processing unit of Hadoop.

Hadoop YARN - Hadoop YARN is the resource management unit of Hadoop.

Client Read and Write Operations in Hadoop

1. HDFS Client: The HDFS client interacts with the NameNode and the DataNodes on the user's behalf to fulfill user requests.

2. NameNode: The NameNode is the master node. It stores metadata such as the blocks that make up each file and the locations of those blocks. This metadata is used for file read and write operations.

3. DataNode: DataNodes are the slave nodes in HDFS. They store the actual data (data blocks).

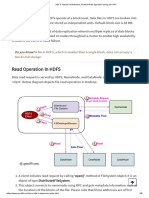
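The division of labour between the NameNode and the DataNodes can be illustrated with a small toy model. This is only a sketch in plain Python, not the Hadoop API: the class names `ToyNameNode` and `ToyDataNode`, the block ids, and the path `/demo.txt` are all made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ToyDataNode:
    """Hypothetical worker: stores the actual block contents, keyed by block id."""
    name: str
    blocks: dict = field(default_factory=dict)


@dataclass
class ToyNameNode:
    """Hypothetical master: stores only metadata, never the file contents."""
    file_blocks: dict = field(default_factory=dict)      # path -> [block ids]
    block_locations: dict = field(default_factory=dict)  # block id -> [DataNode names]

    def get_block_locations(self, path):
        # For each block of the file, list the DataNodes that hold a copy.
        return [(b, self.block_locations[b]) for b in self.file_blocks[path]]


# The DataNodes hold the bytes; the NameNode only records where they live.
dn1 = ToyDataNode("dn1", {"blk_1": b"hello ", "blk_2": b"world"})
dn2 = ToyDataNode("dn2", {"blk_1": b"hello "})
nn = ToyNameNode(
    file_blocks={"/demo.txt": ["blk_1", "blk_2"]},
    block_locations={"blk_1": ["dn1", "dn2"], "blk_2": ["dn1"]},
)

locations = nn.get_block_locations("/demo.txt")
print(locations)
```

A client would use the returned list to decide which DataNode to contact for each block; the NameNode itself never serves file data in this model.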

Read Operation In Hadoop

A data read request is served by HDFS, the NameNode, and the DataNodes. Let’s call the reader the ‘client’. The diagram below depicts the file read operation in Hadoop.

1. The client initiates a read request by calling the ‘open()’ method of a FileSystem object; this is an object of type DistributedFileSystem.

2. This object connects to the NameNode using RPC and gets metadata such as the locations of the blocks of the file. Note that these addresses cover only the first few blocks of the file.

3. In response to this metadata request, the addresses of the DataNodes holding a copy of each block are returned.

4. Once the addresses of the DataNodes are received, an object of type FSDataInputStream is returned to the client. FSDataInputStream contains a DFSInputStream, which takes care of the interactions with the DataNodes and the NameNode. In step 4 of the diagram, the client invokes the ‘read()’ method, which causes DFSInputStream to establish a connection with the first DataNode holding the first block of the file.

5. Data is read as a stream: the client invokes the ‘read()’ method repeatedly. This continues until the end of the block is reached.

6. Once the end of a block is reached, DFSInputStream closes the connection and moves on to locate the next DataNode for the next block.

7. Once the client has finished reading, it calls the ‘close()’ method.
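The read steps above can be sketched as a toy simulation. This is plain Python under stated assumptions, not the Hadoop client: `ToyNameNode`, `ToyInputStream`, and `open_file` are hypothetical names, the DataNodes are plain dictionaries, and the stream always reads from the first DataNode listed for a block.

```python
class ToyNameNode:
    """Hypothetical metadata service (steps 2-3)."""

    def __init__(self, file_blocks, block_locations):
        self.file_blocks = file_blocks          # path -> [block ids]
        self.block_locations = block_locations  # block id -> [DataNode names]

    def get_block_locations(self, path):
        return [(b, self.block_locations[b]) for b in self.file_blocks[path]]


class ToyInputStream:
    """Plays the role the text assigns to DFSInputStream (steps 4-7)."""

    def __init__(self, located_blocks, datanodes):
        self.located_blocks = located_blocks
        self.datanodes = datanodes
        self.block_index = 0
        self.offset = 0
        self.closed = False

    def read(self, size):
        while self.block_index < len(self.located_blocks):
            block_id, holders = self.located_blocks[self.block_index]
            data = self.datanodes[holders[0]][block_id]  # first listed holder
            if self.offset < len(data):
                chunk = data[self.offset:self.offset + size]
                self.offset += len(chunk)
                return chunk
            # End of block: move on to the DataNode for the next block.
            self.block_index += 1
            self.offset = 0
        return b""  # end of file

    def close(self):
        self.closed = True


def open_file(namenode, datanodes, path):
    # Step 1: open() asks the NameNode for locations and returns a stream.
    return ToyInputStream(namenode.get_block_locations(path), datanodes)


datanodes = {
    "dn1": {"blk_1": b"hello ", "blk_2": b"world"},
    "dn2": {"blk_1": b"hello "},
}
namenode = ToyNameNode(
    {"/demo.txt": ["blk_1", "blk_2"]},
    {"blk_1": ["dn2", "dn1"], "blk_2": ["dn1"]},
)

stream = open_file(namenode, datanodes, "/demo.txt")
content = b""
while True:
    chunk = stream.read(4)  # repeated read() calls
    if not chunk:
        break
    content += chunk
stream.close()
print(content)
```

The sketch leaves out failure handling and fetching locations in batches; it only shows that block data flows from the DataNodes to the client while the NameNode supplies locations.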

Write Operation In Hadoop

This section explains how data is written to files in HDFS.

1. The client initiates a write operation by calling the ‘create()’ method of the DistributedFileSystem object, which creates a new file (step 1 in the diagram).

2. The DistributedFileSystem object connects to the NameNode using an RPC call and initiates the new file creation. However, this file create operation does not associate any blocks with the file. It is the responsibility of the NameNode to verify that the file being created does not already exist and that the client has the correct permissions to create a new file. If the file already exists or the client does not have sufficient permission to create a new file, an IOException is thrown to the client. Otherwise, the operation succeeds and the NameNode creates a new record for the file.

3. Once the new record in the NameNode is created, an object of type FSDataOutputStream is returned to the client. The client uses it to write data into HDFS by invoking the data write method (step 3 in the diagram).

4. FSDataOutputStream contains a DFSOutputStream object, which looks after communication with the DataNodes and the NameNode. While the client continues writing data, DFSOutputStream continues creating packets from this data. These packets are enqueued into a queue called the DataQueue.

5. One more component, the DataStreamer, consumes this DataQueue. The DataStreamer also asks the NameNode to allocate new blocks, thereby picking suitable DataNodes to be used for replication.

6. Now the process of replication starts by creating a pipeline of DataNodes. In our case, we have chosen a replication level of 3, so there are 3 DataNodes in the pipeline.

7. The DataStreamer pours packets into the first DataNode in the pipeline.

8. Every DataNode in the pipeline stores each packet it receives and forwards it to the next DataNode in the pipeline.

9. Another queue, the ‘Ack Queue’, is maintained by DFSOutputStream to store packets that are waiting for acknowledgment from the DataNodes.

10. Once acknowledgment for a packet in the queue is received from all DataNodes in the pipeline, the packet is removed from the ‘Ack Queue’. In the event of any DataNode failure, packets from this queue are used to reinitiate the operation.

11. After the client is done writing data, it calls the ‘close()’ method (step 9 in the diagram). The call to close() flushes the remaining data packets to the pipeline and then waits for acknowledgment.

12. Once the final acknowledgment is received, the NameNode is contacted to tell it that the file write operation is complete.
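The write path above can be sketched as a toy simulation of the two queues and the replication pipeline. This is plain Python, not the Hadoop client, and it makes several simplifying assumptions: `ToyNameNode` and `write_file` are hypothetical names, permission checks are omitted, the whole file goes into a single block, the NameNode simply picks the first three DataNodes, and every DataNode acknowledges every packet (no failures).

```python
from collections import deque


class ToyNameNode:
    """Hypothetical metadata service for the write path."""

    def __init__(self, datanode_names, replication=3):
        self.datanode_names = datanode_names
        self.replication = replication
        self.files = {}      # path -> [block ids]
        self.next_block = 0

    def create(self, path):
        # Step 2: reject an existing file; no block is associated yet.
        if path in self.files:
            raise IOError(f"{path} already exists")
        self.files[path] = []

    def allocate_block(self, path):
        # Step 5: allocate a block and choose the DataNodes for its replicas.
        self.next_block += 1
        block_id = f"blk_{self.next_block}"
        self.files[path].append(block_id)
        return block_id, self.datanode_names[:self.replication]


def write_file(namenode, datanodes, path, data, packet_size=4):
    namenode.create(path)
    # Step 4: split the data into packets and enqueue them in the data queue.
    data_queue = deque(
        data[i:i + packet_size] for i in range(0, len(data), packet_size)
    )
    ack_queue = deque()
    block_id, pipeline = namenode.allocate_block(path)
    while data_queue:
        packet = data_queue.popleft()
        ack_queue.append(packet)  # step 9: waits here until acknowledged
        acks = 0
        for name in pipeline:     # steps 7-8: each node stores, then forwards
            datanodes[name].setdefault(block_id, bytearray()).extend(packet)
            acks += 1
        if acks == len(pipeline):
            ack_queue.popleft()   # step 10: acknowledged by every DataNode
    return block_id, pipeline, len(ack_queue)


datanodes = {"dn1": {}, "dn2": {}, "dn3": {}, "dn4": {}}
namenode = ToyNameNode(list(datanodes))
block_id, pipeline, pending = write_file(
    namenode, datanodes, "/demo.txt", b"hello world"
)
print(pipeline, pending, bytes(datanodes["dn3"][block_id]))
```

With a replication level of 3 and four DataNodes available, three of them end up with a full copy of the block and the fourth is untouched. A packet that did not collect all acknowledgments would stay in `ack_queue`, which is the state a real client would use to retry after a DataNode failure.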
