
Service Quality: A Measure of Information Systems Effectiveness
Author(s): Leyland F. Pitt, Richard T. Watson, C. Bruce Kavan
Source: MIS Quarterly, Vol. 19, No. 2 (Jun., 1995), pp. 173-187
Published by: Management Information Systems Research Center, University of Minnesota
Stable URL: http://www.jstor.org/stable/249687


IS Service Quality-Measurement

Service Quality: A Measure of Information Systems Effectiveness

By: Leyland F. Pitt, Henley Management College, Greenlands, Henley on Thames, RG9 3AU, Oxfordshire, United Kingdom, leylandp@henleymc.ac.uk

Richard T. Watson, Department of Management, University of Georgia, Athens, GA 30602-6256, U.S.A., rwatson@uga.cc.uga.edu

C. Bruce Kavan, Department of Management, Marketing, and Logistics, University of North Florida, 4567 St. John's Bluff Road, Jacksonville, FL 32216, U.S.A., bkavan@unflvm.cis.unf.edu

Abstract

The IS function now includes a significant service component. However, commonly used measures of IS effectiveness focus on the products, rather than the services, of the IS function. Thus, there is the danger that IS researchers will mismeasure IS effectiveness if they do not include in their assessment package a measure of IS service quality. SERVQUAL, an instrument developed in the marketing area, is offered as a possible measure of IS service quality. SERVQUAL measures the service dimensions of tangibles, reliability, responsiveness, assurance, and empathy. The suitability of SERVQUAL was assessed in three different types of organizations in three countries. After examination of content validity, reliability, convergent validity, nomological validity, and discriminant validity, the study concludes that SERVQUAL is an appropriate instrument for researchers seeking a measure of IS service quality.

Keywords: IS management, service quality, measurement

ISRL Categories: A104, E10206.03, GA03, GB02, GB07

Introduction

The role of the IS department within the organization has broadened considerably over the last decade. Once primarily a developer and operator of information systems, the IS department now has a much broader role. The introduction of personal computers has resulted in more users of information technology interacting with the IS department more often. Users expect the IS department to assist them with a myriad of tasks, such as hardware and software selection, installation, problem resolution, connection to LANs, systems development, and software education. Facilities such as the information center and IS help desk reflect this enhanced responsibility. IS departments now provide a wider range of services to their users.¹ They have expanded their roles from product developers and operations managers to become service providers.

¹ We prefer "client" to the more common "user" because it draws more attention to the service-mission aspect of IS departments. However, because "user" is so ingrained in the IS community, we have stuck with it.

IS departments have always had a service role because they assist users in converting data into information. Users frequently seek reports that sort, summarize, and present data in a form that is meaningful for decision making. The conversion of data to information has the typical characteristics of a service: it is often a customized, personal interaction with a user. However, service rarely appears in the vocabulary of the traditional systems development life cycle. IS textbooks (e.g., Alter, 1992; Laudon and Laudon, 1991) describe the final phase of systems development as maintenance. They state

MIS Quarterly/June 1995 173


that the key deliverables include operations manuals, usage statistics, enhancement requests, and bug-fix requests. The notion that IS departments are service providers is not well established in the IS literature. A recent review identifies six measures of IS success (DeLone and McLean, 1992). In recognition of the expanded role of the IS department, we have augmented this list to include service quality as a measure of IS success. This paper discusses the appropriateness of SERVQUAL for assessing IS service quality. The instrument was originally developed by marketing academics to assess service quality in general.

Measuring IS Effectiveness

Organizations typically have many stakeholders with multiple and conflicting objectives of varying time horizons (Cameron and Whetten, 1983; Hannan and Freeman, 1977; Quinn and Rohrbaugh, 1983). There is rarely a single, common objective for all stakeholders. Thus, measuring the success of most organizational endeavors is problematic, and IS is not immune to this problem. IS effectiveness is a multidimensional construct, and there is no single, overarching measure of IS success (DeLone and McLean, 1992). Consequently, multiple measures are required, and DeLone and McLean identify six categories into which these measures can be grouped: system quality, information quality, use, user satisfaction, individual impact, and organizational impact. The categories are linked to define an IS success model (see Figure 1).

The basis of the DeLone and McLean categorization, Shannon and Weaver's (1949) theory of communication, is product oriented. For example, system quality describes measures of the information processing system. Most measures in this category tap engineering-oriented performance characteristics (DeLone and McLean, 1992). Information quality represents measures of information systems output. Typical measures in this area include the accuracy, precision, currency, timeliness, and reliability of the information provided. In an earlier study, also based on the theory of communication, these categories are labeled as production and product, respectively (Mason, 1978). Since system quality and information quality precede the other measures of IS success, existing measures seem strongly product focused. This is not surprising, given that many of the studies providing the empirical basis for the categorization are based on data collected in the early 1980s, when IS was still in the mainframe era.

As the introduction pointed out, the IS department is not just a provider of products. It is also a service provider; indeed, this may be its major function. This recognition has been apparent for some years: the quality of the IS department's service, as perceived by its users, is a key indicator of IS success (Moad, 1989; Rockart, 1982). The principal reason IS departments measure user satisfaction is to improve the quality of the service they provide (Conrath and Mignen, 1990). A significantly broader perspective claims that quality and service are key measures of white-collar productivity (Deming, 1981-82). For Deming, quality is a superordinate goal.

Product and system suppliers are in the service business, albeit less so than service firms. Virtually all tangible products have intangible attributes, and all services possess some properties of tangibility. A perspective in the marketing literature (Berry and Parasuraman, 1991, p. 9; Shostack, 1977) argues that a rigid goods-services classification is inappropriate. Shostack (1977) asserts that when a customer buys a tangible product (e.g., a car), he or she also buys a service (e.g., transportation). In many cases, a product is only a means of accessing a service. Personal computer users do not just want a machine; rather, they seek a system that satisfies their personal computing needs. Thus, the IS department's ability to supply installation assistance, product knowledge, software training and support, and online help affects the relationship between IS and users.

Goods and services are not neatly compartmentalized. They exist along a spectrum of tangibility, ranging from relatively pure products to relatively pure services, with hybrids near the center. Current IS success measures, such as system quality and information quality, focus on the tangible end of the spectrum. We argue that service quality, the other end of the spectrum, needs to be considered as an additional measure of IS success. DeLone and McLean's model needs to be augmented to reflect the IS department's service role. As the revised model shows (see Figure 1), service quality affects both use and user satisfaction.

Figure 1. Augmented IS Success Model (Adapted from DeLone and McLean, 1992)

There are two possible units of analysis for IS service quality: the IS department and a particular information system. Where users predominantly interact with one system (e.g., sales personnel taking telephone orders), a user's impression of service quality is based almost exclusively on that system, and the unit of analysis is the information system. Where users have multiple interactions with the IS department (e.g., a personnel manager using a human resources management system, electronic mail, and a variety of personal computer products), the unit of analysis can be a particular system or the IS department. For the user of multiple systems, however, this distinction may be irrelevant. For example, a user who finds it difficult to get a personal computer repaired is concerned not with poor service for a designated system but with poor service in general from the IS department. So while system quality and information quality may be closely associated with a particular software product, this is not always the case with service quality. Irrespective of whether a user interacts with one or multiple information systems, the quality of service can influence use and user satisfaction.

In summary, multiple instruments are required to assess IS effectiveness. The current selection ignores service quality, an increasingly important facet of the IS function. If IS researchers disregard service quality, they may obtain an inaccurate reading of overall IS effectiveness. We propose that service quality should be included in the researcher's armory of measures of IS effectiveness.

Measuring Service Quality

Service quality is the most researched area of services marketing (Fisk, et al., 1993). The concept was investigated in an extensive series of focus group interviews conducted by Parasuraman, et al. (1985). They conclude that service quality is founded on a comparison between what the customer feels should be offered and what is provided. Other marketing researchers (Grönroos, 1982; Sasser, et al., 1978) also support the notion that service quality is the discrepancy between customers' perceptions and expectations. There is support for this argument in the IS literature. Conrath and Mignen (1990) report that the second most important component of user satisfaction, after general quality of service, is the match between users' expectations and


actual IS service. Rushinek and Rushinek (1986) conclude that fulfilled user expectations have a strong effect on overall satisfaction. Users reveal what they want through their expectations, and what they think they are getting through their perceptions.

Parasuraman and his colleagues (Parasuraman, et al., 1985; 1988; 1991; Zeithaml, et al., 1990) suggest that service quality can be assessed by measuring customers' expectations and perceptions of performance levels for a range of service attributes. The difference between expectations and perceptions of actual performance can then be calculated for each attribute and averaged across attributes, which yields a measure of the gap between expectations and perceptions.

The prime determinants of expected service quality, as suggested by Zeithaml, et al. (1990), are word-of-mouth communications, personal needs, past experiences, and communications by the service provider to the user. Users talk to each other and exchange stories about their relationship with the IS department, and these conversations help shape users' expectations of IS service. Users' personal needs also influence their expectations: a manager's sense of urgency may differ depending on whether he or she has a PC failure the day before an annual presentation or simply wants a new piece of software installed. Of course, prior experience is a key molder of expectations. Users may adjust or raise their expectations based on previous service encounters. For instance, users who find that the help desk frequently solves their problems are likely to expect answers to future problems.

Figure 2. Determinants of Users' Expectations (word-of-mouth communications, personal needs, past experiences, IS communications, and vendor communications shape the user's expected service; the gap is the difference between expected service and perceived service)

The three factors just discussed all relate to expectations that originate with the user. Another important source of expectations is the IS department itself, as the service provider, whose communications influence expectations. In particular, IS can be a very powerful shaper of expectations during systems development. Users rely on IS to convert their needs into a system. In the process, IS creates an expectation as to what the finished system will do and how it will appear. Because many systems fail to meet users' expectations, it would seem that IS too frequently misinterprets users' requirements or gives users a false impression of the outcome. For example, Laudon and Laudon (1991) report studies that show IS failure rates of 35 percent and 75 percent.

In the IS sphere at least, users are subject to another source of communication not appearing in Zeithaml, et al.'s (1990) model: vendors often influence users' expectations. Users may read vendors' advertisements, attend presentations, or even receive sales calls. Vendors, in trying to sell their products, often raise expectations by parading the positive features of their wares and downplaying issues such as systems conversion, compatibility, or integration with existing systems. IS has no control over vendors' communications


and must recognize that they are an ever-present force shaping expectations. This is not necessarily bad: vendors' communications can be a positive force for change when they make users aware of what they should expect from IS. The forces influencing users' expectations are shown in Figure 2. The difference between expected service and perceived service is depicted as a gap: the discrepancy between what users expect and what they think they are getting. If IS is to address this disparity, it needs some way of assessing users' expectations and perceptions and of measuring the gap.

Parasuraman, et al. (1988) operationalized their conceptual model of service quality by following Churchill's (1979) framework for developing measures of marketing constructs. They started by making extensive use of focus groups, which identified 10 potentially overlapping dimensions of service quality. These dimensions were used to generate 97 items. Each item was then turned into two statements: one to measure expectations and one to measure perceptions. A sample of service users was asked to rate each item on a seven-point scale anchored on strongly disagree (1) and strongly agree (7). This initial questionnaire was used to assess the service-quality perceptions of customers who had recently used the services of five different types of service organizations (essentially following Lovelock's (1983) classification). The 97-item instrument was then purified and condensed, first by focusing on the questions that clearly discriminated between respondents having different perceptions, and second by focusing on the dimensionality of the scale and establishing the reliability of its components.

Parasuraman, et al.'s work resulted in a 45-item instrument, SERVQUAL, for assessing customer expectations and perceptions of service quality in service and retailing organizations. The instrument has three parts (see the Appendix). The first part consists of 22 questions for measuring expectations, framed in terms of the performance of an excellent provider of the service being studied. The second part consists of 22 questions for measuring perceptions, framed in terms of the performance of the actual service provider.

The final part is a single question to assess overall service quality. Underlying the 22 items are five dimensions that the authors claim customers use when evaluating service quality, regardless of the type of service. These dimensions are:

* Tangibles: Physical facilities, equipment, and appearance of personnel.
* Reliability: Ability to perform the promised service dependably and accurately.
* Responsiveness: Willingness to help customers and provide prompt service.
* Assurance: Knowledge and courtesy of employees and their ability to inspire trust and confidence.
* Empathy: Caring, individualized attention the service provider gives its customers.

Service quality for each dimension is captured by a difference score G (representing perceived quality for that dimension), where G = P - E, and P and E are the average ratings of the dimension's corresponding perception and expectation statements, respectively.

Because service quality is a significant topic in marketing, SERVQUAL has been subject to considerable debate (e.g., Brown, et al., 1993; Parasuraman, et al., 1993) regarding its dimensionality and the wording of items (Fisk, et al., 1993). Nevertheless, after examining seven studies, Fisk, et al. conclude that researchers generally agree that the instrument is a good predictor of overall service quality. Parasuraman, et al. (1991) argue that SERVQUAL's items measure the core criteria of service quality, which they assert transcend specific functions, companies, and industries. They suggest that context-specific items may be used to supplement the measurement of the core criteria. In this case, we found that one item required slight rewording to measure IS service quality. The first question was originally asked in terms of "up-to-date equipment." We changed the wording to "up-to-date hardware and software" because equipment could be perceived as referring only to hardware.
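The difference-score calculation can be sketched in Python for a single respondent. The dictionary layout, the function names, and the respondent's ratings are hypothetical; the item-to-dimension grouping follows the item numbering used in the factor-analysis tables (four, five, four, four, and five items). The second function averages the item-level differences across all 22 attributes, which is one reading of the "averaged across attributes" step described earlier.

```python
from statistics import mean

# Item groupings assumed from the factor-analysis tables: G1-G4 tangibles,
# G5-G9 reliability, G10-G13 responsiveness, G14-G17 assurance, G18-G22 empathy.
DIMENSIONS = {
    "tangibles": range(1, 5),
    "reliability": range(5, 10),
    "responsiveness": range(10, 14),
    "assurance": range(14, 18),
    "empathy": range(18, 23),
}


def gap_scores(expectations: dict[int, int], perceptions: dict[int, int]) -> dict[str, float]:
    """Difference score G = P - E for each dimension of one respondent.

    P and E are the mean perception and expectation ratings (1-7) over the
    dimension's items; a negative G means service falls short of expectations.
    """
    return {
        dim: mean(perceptions[i] for i in items) - mean(expectations[i] for i in items)
        for dim, items in DIMENSIONS.items()
    }


def overall_gap(expectations: dict[int, int], perceptions: dict[int, int]) -> float:
    """Item-level differences P - E averaged across all 22 attributes."""
    return mean(perceptions[i] - expectations[i] for i in range(1, 23))


# Hypothetical respondent: expects 6 on every item; perceives 7 on the
# reliability items (G5-G9) and 5 on everything else.
E = {i: 6 for i in range(1, 23)}
P = {i: 7 if 5 <= i <= 9 else 5 for i in range(1, 23)}
print(gap_scores(E, P))
print(overall_gap(E, P))
```

Dimension scores for a whole sample would then be averaged over respondents; that step is omitted here to keep the sketch focused on G = P - E.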


Assessment of SERVQUAL's Validity

Before SERVQUAL can be used as a measure of IS effectiveness, its validity in an IS setting must be assessed. Data for the validity assessment were collected in three organizations: a large South African financial institution (n=237), a large British accounting information management consulting firm (n=181), and a U.S. information services business (n=267). In each case, the standard SERVQUAL questionnaire was administered, with minor appropriate changes, to internal computer users (see the Appendix). Respondents were also asked to give an overall rating of the IS department's service quality on a seven-point scale (1 = very poor, 7 = excellent).

In the financial institution, the respondents were users of online production systems. Potential respondents were identified from a security profile list of all people having access to production systems. A total of 494 questionnaires were distributed to personnel in the head office and six branches of the financial institution. The response rate was 48 percent (237 usable questionnaires). In the accounting and consulting firm, respondents were internal users of the information systems department throughout the organization. The questionnaires were dispatched to 500 users by means of the internal mail system, and 181 usable responses were received by the cutoff date, a response rate of 36.2 percent. In the information services business, respondents were internal users of the information systems department. The questionnaires were dispatched by means of the internal mail system, and 267 usable responses were received by the cutoff date, a response rate of 68 percent.

Parasuraman, et al.'s (1988) construct validity appraisal of SERVQUAL guided the assessment of SERVQUAL's validity for measuring IS service quality. This section discusses content validity, reliability, convergent validity, and nomological and discriminant validity.

Content validity

Content validity refers to the extent to which an instrument covers the range of meanings included in the concept (Babbie, 1992, p. 133). Parasuraman, et al. (1988) used focus groups to determine the dimensions of service quality and then used a two-stage process to refine the instrument. Their thoroughness suggests that SERVQUAL does measure the concept of service quality. We could not discern any unique features of IS making the dimensions underlying SERVQUAL (tangibles, reliability, responsiveness, assurance, and empathy) inappropriate for measuring IS service quality or

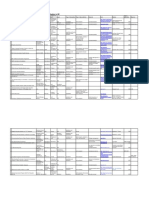

Table 1. Reliability of SERVQUAL by Dimension

                      Number     Reliability Coefficients                    Average Reliability
                      of Items   Information   Consulting   Financial        Coefficient Across Four
                                 Service       Firm         Institution      Service Companies
                                 (n=267)       (n=181)      (n=237)          (Parasuraman, et al., 1988)
Tangibles             4          0.65          0.73         0.62             0.61
Reliability           5          0.94          0.86         0.87             0.79
Responsiveness        4          0.66          0.96         0.82             0.72
Assurance             4          0.83          0.79         0.91             0.84
Empathy               5          0.82          0.90         0.75             0.75
Reliability of
linear combination               0.90          0.94         0.96             0.89


excluding some meaning of service quality in the IS domain.

Reliability

The reliability of each of the dimensions was assessed using Cronbach's (1951) alpha (see Table 1). Reliabilities vary from 0.62 to 0.96, and the reliability of the linear combination (Nunnally, 1978) is 0.90 or above in each case. These values compare very favorably with the average reliabilities of SERVQUAL for four service companies reported by Parasuraman, et al. (1988). The tangibles dimension is the main concern, because two of its three reliability coefficients are below the 0.70 level required for commercial applications (Carman, 1990).

Convergent validity

To assess convergent validity, the overall service quality index, computed from the five dimensions, was correlated with the response to a single question on the IS department's overall quality (see Table 2). The high correlation between the two measures indicates convergent validity.

Table 2. Correlation of Service Quality Index With Single-Item Overall Measure

                          Financial     Consulting   Information
                          Institution   Firm         Service
Correlation coefficient   0.60          0.82         0.60
p value                   <0.0001       <0.0001      <0.0001

Nomological and discriminant validity

Nomological validity refers to an observed relationship between measures purported to assess different but conceptually related constructs. If two constructs (C1 and C2) are conceptually related, evidence that purported measures of each (M1 and M2) are related is usually accepted as empirical support for the conceptual relationship. Nomological validity is indicated if items expected to load together in a factor analysis actually do so (Carman, 1990). Discriminant validity is evident if the items underlying each dimension load as different factors; the dimensions are then measuring different concepts. Thus, in the ideal case, exact reproduction of the five-factor model would indicate both nomological and discriminant validity.

The data from the three studies were analyzed independently, using the method suggested by Johnson and Wichern (1982) to determine the number of factors to extract. Essentially, principal components and maximum likelihood methods with varimax rotation were tried and compared for a range of models.

Financial Institution

The analysis suggested that a seven-factor model, explaining 68 percent of the variance, is appropriate (see Table 3). The seven-factor model splits the suggested factor of tangibles into two parts. One part (G1)² deals with the state of hardware and software, and the other (G2-G4) concerns physical appearances. This is not surprising in an MIS environment, because hardware and software are clearly distinct from physical appearance. The suggested empathy factor also splits into two parts. One part (G18-G19) concerns personal attention, and the other (G20-G22) focuses on more broadly based customer needs. This was also observed by Parasuraman, et al. (1991). Reliability, responsiveness, and assurance load close to expectations.

Consulting Firm

Analysis indicated that five factors should be extracted, and these explain 66 percent of the variance (see Table 4). There is an acceptable correspondence between expected and actual loadings for tangibles, reliability, assurance, and empathy. In each case, all but one of the items in the group load together on the factor (e.g., for reliability, items 5-8 load together but not item 9). Responsiveness is problematic because only two of its items load together.

Information Services Business

Analysis indicated that three factors, explaining 71 percent of the variance, should be extracted

² G1 refers to P1 - E1, where P1 is perception question 1 and E1 is expectation question 1.


Table 3. Exploratory Factor Analysis: Financial Institution (Principal Components Method With Varimax Rotation; Loadings > .55*)

[Loadings of items G1-G22 on factors F1-F7, grouped by dimension: tangibles G1-G4, reliability G5-G9, responsiveness G10-G13, assurance G14-G17, empathy G18-G22.]

* The cutoff point for loadings is .01 significance, which is determined by calculating 2.58/√n, where n is the number of items in the questionnaire.

Table 4. Exploratory Factor Analysis: Consulting Firm (Principal Components Method With Varimax Rotation; Loadings > .55)

[Loadings of items G1-G22 on factors F1-F5, grouped by dimension: tangibles G1-G4, reliability G5-G9, responsiveness G10-G13, assurance G14-G17, empathy G18-G22.]


Table 5. Exploratory Factor Analysis: Information Services Business (Principal Components Method With Varimax Rotation; Loadings > .55)

[Loadings of items G1-G22 on factors F1-F3, grouped by dimension: tangibles G1-G4, reliability G5-G9, responsiveness G10-G13, assurance G14-G17, empathy G18-G22.]

(see Table 5). The factor loadings are reasonably consistent with the suggested model. Reliability and assurance load as expected. Tangibles, responsiveness, and empathy each miss by one item. In summary, SERVQUAL passes the content validity, reliability, and convergent validity examinations. There are some uncertainties with nomological and discriminant validity, but not enough to discontinue consideration of SERVQUAL. It is a suitable measure of IS service quality.
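The exploratory factor analyses behind Tables 3-5 used principal components (and maximum likelihood) extraction with varimax rotation, plus a loading cutoff of 2.58/√n. The NumPy sketch below covers only the principal-components route and is not the authors' code: the function names are hypothetical, the varimax routine is the commonly used SVD-based iteration, and the data are random placeholders rather than survey responses.

```python
import numpy as np


def pca_loadings(data: np.ndarray, n_factors: int) -> np.ndarray:
    """Unrotated principal-component loadings of the item correlation matrix."""
    corr = np.corrcoef(data, rowvar=False)            # items are columns
    eigvals, eigvecs = np.linalg.eigh(corr)           # eigenvalues in ascending order
    top = np.argsort(eigvals)[::-1][:n_factors]       # indices of the largest ones
    return eigvecs[:, top] * np.sqrt(eigvals[top])    # scale vectors into loadings


def variance_explained(data: np.ndarray, n_factors: int) -> float:
    """Share of total standardized variance captured by the first n_factors components."""
    eigvals = np.linalg.eigvalsh(np.corrcoef(data, rowvar=False))
    return float(np.sort(eigvals)[::-1][:n_factors].sum() / eigvals.size)


def varimax(loadings: np.ndarray, max_iter: int = 100, tol: float = 1e-6) -> np.ndarray:
    """Orthogonal varimax rotation using the standard SVD-based iteration."""
    p, k = loadings.shape
    rotation = np.eye(k)
    criterion = 0.0
    for _ in range(max_iter):
        rotated = loadings @ rotation
        # Varimax target matrix (proportional to the criterion's gradient).
        target = rotated**3 - rotated @ np.diag((rotated**2).sum(axis=0)) / p
        u, s, vt = np.linalg.svd(loadings.T @ target)
        rotation = u @ vt                              # nearest orthogonal matrix
        new_criterion = s.sum()
        if criterion > 0 and new_criterion < criterion * (1 + tol):
            break
        criterion = new_criterion
    return loadings @ rotation


def loading_cutoff(n: int) -> float:
    """Cutoff from the Table 3 note: 2.58 / sqrt(n), with n the number of items."""
    return 2.58 / np.sqrt(n)


# Hypothetical data only: 200 respondents x 22 random G-scores.
rng = np.random.default_rng(0)
g_scores = rng.normal(size=(200, 22))
rotated = varimax(pca_loadings(g_scores, 5))
salient = np.abs(rotated) > loading_cutoff(22)        # loadings above about 0.55
print(f"{variance_explained(g_scores, 5):.2f} of variance; {salient.sum()} salient loadings")
```

With n = 22 items, 2.58/√22 is approximately 0.55, which matches the cutoff printed in the table headings. Because varimax is an orthogonal rotation, each item's communality (its row sum of squared loadings) is unchanged by it.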

Comparison of the factor analyses

An examination of the three factor analyses indicates support for nomological validity. Of the 15 dimension loadings examined (five dimensions in each of three organizations), there are three exact fits (all variables of a dimension loading together), 10 near fits (all but one variable of a dimension loading together), and two poor fits (two or more variables of a dimension not loading together). There are some problems with discriminant validity because some factors do not appear to be different from one another. This is particularly noticeable in the case of the information services business, where responsiveness, assurance, and empathy load as one factor. It may be that some of these concepts are too close together for respondents to differentiate. Concepts like responsiveness and empathy are similar; in his Thesaurus, Roget twice puts them together in the classifications of sensation and feeling (Roget, 1977).
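The exact/near/poor rule used in this comparison can be written as a small function. The function name and the (items, items loading together) encoding are hypothetical. The counts below come from the text's descriptions of the consulting firm and information services business results; the financial institution is left out because the text does not give per-dimension counts for it.

```python
from collections import Counter


def classify_fit(n_items: int, n_together: int) -> str:
    """Classify how well one SERVQUAL dimension reproduces in a factor analysis.

    n_items:    number of items (G-scores) in the dimension
    n_together: size of the largest group of those items loading on one factor
    """
    if not 0 < n_together <= n_items:
        raise ValueError("n_together must be between 1 and n_items")
    misses = n_items - n_together
    if misses == 0:
        return "exact"  # all variables of the dimension load together
    if misses == 1:
        return "near"   # all but one variable load together
    return "poor"       # two or more variables do not load with the rest


# (items in dimension, items loading together), as described in the text.
consulting = {
    "tangibles": (4, 3), "reliability": (5, 4), "responsiveness": (4, 2),
    "assurance": (4, 3), "empathy": (5, 4),
}
information_services = {
    "tangibles": (4, 3), "reliability": (5, 5), "responsiveness": (4, 3),
    "assurance": (4, 4), "empathy": (5, 4),
}
for name, dims in [("consulting", consulting), ("information services", information_services)]:
    print(name, dict(Counter(classify_fit(n, k) for n, k in dims.values())))
```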

Limitations

Potential users of SERVQUAL should be cautious. The reliability of the tangibles construct is low. Although this is also a problem with the original instrument, it cannot be ignored. The whole issue of tangibles in an IS environment probably needs further investigation. It may be appropriate to split tangibles into two dimensions: appearance and hardware/software. Because hardware and software can have a significant impact, a measure of IS service quality possibly needs further questions to tap these dimensions. SERVQUAL does not always clearly discriminate among the dimensions of service quality. Researchers who use SERVQUAL to discriminate the impact of service changes should be wary of using it to distinguish among the closely aligned concepts of responsiveness, assurance,


and empathy. These concepts are not semantically distant, and there appear to be situations where users perceive them very similarly.

IS Research and Service Quality

Service quality can be both an independent and a dependent variable in IS research. The augmented IS success model (see Figure 1) indicates that IS service quality is an antecedent of use and user satisfaction. Because IS now has an important service component, IS researchers may be interested in identifying which managerial actions raise the service level. In this situation, service quality becomes a dependent variable. Furthermore, service quality may be part of a causal model. Consider a study where a service policy intervention (e.g., a departmental help desk) is designed to increase personal computer use. Figure 1 suggests that service quality would be a mediating variable in this situation.

IS Practice and Service Quality

Any instrument advocated as a measure of IS success should first be validated before application. This study provides evidence that practitioners can, with considerable confidence, use SERVQUAL as a measure of IS success. Our research subsequent to this study (e.g., Pitt and Watson, 1994) has focused on the application of SERVQUAL as a diagnostic tool. We have completed longitudinal studies in two organizations. In each case, service quality was initially measured, then IS management took steps to improve service, and service quality was remeasured 12 months later. In both cases, significant improvements in service quality were detected. These organizations have embarked on a program of continuous incremental improvement of IS service quality and use SERVQUAL to measure their progress. Our experience shows that IS practitioners accept service quality as a useful, valid measure of IS effectiveness, and they find that SERVQUAL provides valuable information for redirecting IS staff toward providing higher-quality service to users. Because SERVQUAL is a general measure of service quality, it is well-suited to benchmarking (Camp, 1989), which establishes goals based on best practice. Thus, IS managers can potentially use SERVQUAL to benchmark their service performance against service industry leaders such as airlines, banks, and hotels.

Future Research

We see three possible directions for future research on service quality. First, Q-method could be used to examine service quality from a different perspective. Second, the relationship between the customer service life cycle and service quality could be examined. Third, the relative importance of the determinants of expected service could be investigated.

Q-method

SERVQUAL, as with most questionnaires of this kind, does not require respondents to express a preference for one service characteristic over another (e.g., reliability over assurance). They can rate items comprising both dimensions at the high end of the scale. However, organizations frequently have to make a choice when allocating scarce resources. For example, managers need to know whether they should put more effort into reliability or empathy. This issue can be addressed by asking respondents to allocate relative dimension importance on a constant sum scale (e.g., 100 points). Zeithaml, et al. (1990) recommend this approach, which results in a "weighted gap score" by dimension and in total. Another approach to identifying preferences is Q-method (Brown, 1980; Stephenson, 1953), which can identify a preference structure and indicate patterning within groups of users. We intend to use Q-method to gain insights into users' preference structure for the dimensions of service and to discover whether there is a single uniform profile of service expectations or whether there are classes of users with different expectations. We propose to use the questions from SERVQUAL and recast them for Q-method. Subjects will be asked to sort the items twice: first, on the basis of an ideal service provider (their expectations); second, on the basis of the actual service provider (their perceptions). This is very similar to a technique described by Stephenson (1953), the father of Q-method, in his study of student achievement and motivation.
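To make the constant-sum "weighted gap score" concrete, the sketch below shows one plausible way to compute it. It assumes each dimension's gap has already been averaged as perception minus expectation, and that respondents' 100-point importance allocations have been averaged per dimension; each gap is then multiplied by its share of the 100 points and the products are summed. This weighting is our reading of the constant-sum approach rather than a procedure given in this paper, and the function name and figures are hypothetical.

```python
from math import isclose

DIMENSIONS = ("tangibles", "reliability", "responsiveness", "assurance", "empathy")


def weighted_gap_score(
    gaps: dict[str, float], points: dict[str, float]
) -> tuple[dict[str, float], float]:
    """Weight each dimension's gap (perception minus expectation) by importance.

    gaps[d]   -- average P - E gap for dimension d (negative = shortfall)
    points[d] -- share of a 100-point constant sum allocated to dimension d
    Returns the weighted gap per dimension and their total.
    """
    if not isclose(sum(points[d] for d in DIMENSIONS), 100):
        raise ValueError("constant-sum allocation must total 100 points")
    weighted = {d: gaps[d] * points[d] / 100 for d in DIMENSIONS}
    return weighted, sum(weighted.values())


# Hypothetical figures for illustration only.
gaps = {"tangibles": -0.5, "reliability": -1.5, "responsiveness": -1.0,
        "assurance": -0.8, "empathy": -1.2}
points = {"tangibles": 10, "reliability": 30, "responsiveness": 25,
          "assurance": 20, "empathy": 15}
by_dimension, total = weighted_gap_score(gaps, points)
print(by_dimension, round(total, 2))
```

In this made-up case, reliability carries the largest weighted shortfall even though empathy has a similar raw gap, which is the kind of resource-allocation signal the constant-sum scale is meant to surface.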

Customer service life cycle

The customer service life cycle, a variation on the customer resource life cycle (Ives and Learmonth, 1984; Ives and Mason, 1990), breaks down the service relationship with a customer into four major phases: requirements, acquisitions, stewardship, and retirement. It is highly likely that users' expectations differ among these phases. For example, empathy might be the major need during requirements, and reliability during stewardship. Thus, examining service quality by customer service life cycle phase is an opportunity for future research.

Determinants of expected service

The model shown in Figure 2 indicates that there are five determinants of expected service. The relative influence of each of these variables can be measured (see Boulding, et al., 1993; Zeithaml, et al., 1993). Again, the marketing literature provides a suitable starting point, because discovering what influences customers is a major theme of marketing research.

Conclusion

The traditional goal of an IS organization is to build, maintain, and operate information delivery systems. Users expect efficient and effective delivery systems. However, for the user, the goal is not the delivery system, but rather the information it can provide. For example, a user may want sales reported by geographic region. A computer-based information system is one alternative for satisfying that need; the same report could be produced manually. For the user, the information is paramount and the delivery mechanism secondary. In addition to developing and operating computer-based information systems, an IS department performs other functions such as responding to questions about software products, providing training, and giving equipment advice. In each of these cases, the user's goal is to acquire information. Thus, the IS department delivers information through both highly structured information systems and customized personal interactions. Clearly, providing information is a fundamental service of an IS department, and it should be concerned with the quality of service it delivers. Thus, we believe, the effectiveness of an IS unit can be partially assessed by its capability to provide quality service to its users. The major contribution of this study is to demonstrate that SERVQUAL, an extensively applied marketing instrument for measuring service quality, is applicable in the IS arena. The paper's other contributions are highlighting the service component of the IS department, augmenting the IS success model, presenting a logical model for users' expectations, and giving some directions for future research.

Acknowledgements

We gratefully acknowledge the insightful comments of the editor, associate editor, and anonymous reviewers of MISQ. We thank Neil Lilford of The Old Mutual, South Africa, for his assistance.

References

Alter, S.L. Information Systems: A Management Perspective, Addison-Wesley, Reading, MA, 1992.
Babbie, E. The Practice of Social Research (6th ed.), Wadsworth, Belmont, CA, 1992.
Berry, L.L. and Parasuraman, A. Marketing Services: Competing Through Quality, Free Press, New York, NY, 1991.
Boulding, W., Kalra, A., Staelin, R., and Zeithaml, V.A. "A Dynamic Model of Service Quality: From Expectations to Behavioral Intentions," Journal of Marketing Research (30:1), February 1993, pp. 7-27.
Brown, T.J., Churchill, G.A.J., and Peter, J.P. "Improving the Measurement of Service Quality," Journal of Retailing (69:1), Spring 1993, pp. 127-139.


Cameron, K.S. and Whetten, D.A. Organizational Effectiveness: A Comparison of Multiple Models, Academic Press, New York, NY, 1983.
Camp, R.C. Benchmarking: The Search for Industry Best Practices that Lead to Superior Performance, Quality Press, Milwaukee, WI, 1989.
Carman, J.M. "Consumer Perceptions of Service Quality: An Assessment of SERVQUAL Dimensions," Journal of Retailing (66:1), Spring 1990, pp. 33-53.
Churchill, G.A. "A Paradigm for Developing Better Measures of Marketing Constructs," Journal of Marketing Research (16), February 1979, pp. 64-73.
Conrath, D.W. and Mignen, O.P. "What is Being Done to Measure User Satisfaction with EDP/MIS," Information & Management (19:1), August 1990, pp. 7-19.
Cronbach, L.J. "Coefficient Alpha and the Internal Structure of Tests," Psychometrika (16:3), September 1951, pp. 297-333.
DeLone, W.H. and McLean, E.R. "Information Systems Success: The Quest for the Dependent Variable," Information Systems Research (3:1), March 1992, pp. 60-95.
Deming, W.E. "Improvement of Quality and Productivity Through Action by Management," National Productivity Review, Winter 1981-82, pp. 12-22.
Fisk, R.P., Brown, S.W., and Bitner, M.J. "Tracking the Evolution of the Services Marketing Literature," Journal of Retailing (69:1), Spring 1993, pp. 61-103.
Gronroos, C. Strategic Management and Marketing in the Service Sector, Swedish School of Economics and Business Administration, Helsingfors, Finland, 1982.
Hannan, M.T. and Freeman, J. "Obstacles to Comparative Studies," in New Perspectives on Organizational Effectiveness, P.S. Goodman and J.M. Pennings (eds.), Jossey-Bass, San Francisco, CA, 1977, pp. 106-131.
Ives, B. and Learmonth, G.P. "The Information System as a Competitive Weapon," Communications of the ACM (27:12), December 1984, pp. 1193-1201.
Ives, B. and Mason, R. "Can Information Technology Revitalize Your Customer Service?" Academy of Management Executive (4:4), November 1990, pp. 52-69.

Johnson, R.A. and Wichern, D.W. Applied Multivariate Statistical Analysis, Prentice-Hall, Englewood Cliffs, NJ, 1982.
Laudon, K.C. and Laudon, J.P. Management Information Systems: A Contemporary Perspective (2nd ed.), Macmillan, New York, NY, 1991.
Lovelock, C.H. "Classifying Services to Gain Strategic Marketing Insights," Journal of Marketing (47), Summer 1983, pp. 9-20.
Mason, R.O. "Measuring Information Output: A Communication Systems Approach," Information & Management (1:5), 1978, pp. 219-234.
Moad, J. "Asking Users to Judge IS," Datamation (35:21), November 1, 1989, pp. 93-100.
Nunnally, J.C. Psychometric Theory (2nd ed.), McGraw-Hill, New York, NY, 1978.
Parasuraman, A., Zeithaml, V.A., and Berry, L.L. "A Conceptual Model of Service Quality and Its Implications for Future Research," Journal of Marketing (49), Fall 1985, pp. 41-50.
Parasuraman, A., Zeithaml, V.A., and Berry, L.L. "SERVQUAL: A Multiple-item Scale for Measuring Consumer Perceptions of Service Quality," Journal of Retailing (64:1), Spring 1988, pp. 12-40.
Parasuraman, A., Berry, L.L., and Zeithaml, V.A. "Refinement and Reassessment of the SERVQUAL Scale," Journal of Retailing (67:4), Winter 1991, pp. 420-450.
Parasuraman, A., Berry, L.L., and Zeithaml, V.A. "More on Improving the Measurement of Service Quality," Journal of Retailing (69:1), Spring 1993, pp. 140-147.
Pitt, L.F. and Watson, R.T. "Longitudinal Measurement of Service Quality in Information Systems: A Case Study," Proceedings of the Fifteenth International Conference on Information Systems, Vancouver, B.C., 1994.
Quinn, R.E. and Rohrbaugh, J. "A Spatial Model of Effectiveness Criteria: Towards a Competing Values Approach to Organizational Analysis," Management Science (29:3), March 1983, pp. 363-377.
Rockart, J.F. "The Changing Role of the Information Systems Executive: A Critical Success Factors Perspective," Sloan Management Review (24:1), Fall 1982, pp. 3-13.
Roget, P.M. Roget's International Thesaurus (4th ed.), revised by Chapman, R.L., Thomas Y. Crowell, New York, NY, 1977.


Rushinek, A. and Rushinek, S.F. "What Makes Users Happy?" Communications of the ACM (29:7), July 1986, pp. 594-598.
Sasser, W.E., Olsen, R.P., and Wyckoff, D.D. Management of Service Operations: Text and Cases, Allyn and Bacon, Boston, MA, 1978.
Shannon, C.E. and Weaver, W. The Mathematical Theory of Communication, University of Illinois Press, Urbana, IL, 1949.
Shostack, G.L. "Breaking Free from Product Marketing," Journal of Marketing (41:2), April 1977, pp. 73-80.
Stephenson, W. The Study of Behavior: Q-technique and Its Methodology, University of Chicago Press, Chicago, IL, 1953.
Zeithaml, V., Parasuraman, A., and Berry, L.L. Delivering Quality Service: Balancing Customer Perceptions and Expectations, Free Press, New York, NY, 1990.
Zeithaml, V.A., Berry, L.L., and Parasuraman, A. "The Nature and Determinants of Customer Expectations of Service," Journal of the Academy of Marketing Science (21:1), Winter 1993, pp. 1-12.

About the Authors

Leyland F. Pitt is a professor of management studies at Henley Management College and Brunel University, Henley-on-Thames, UK, where he teaches marketing. He holds an MCom in marketing from Rhodes University, and M.B.A. and Ph.D. degrees in marketing from the University of Pretoria. He is also an adjunct faculty member of the Bodo Graduate School, Norway, and the University of Oporto, Portugal. He has taught marketing in graduate and executive programs in the U.S., Australia, Singapore, South Africa, France, Malta, and Greece. His publications have been accepted by such journals as The Journal of Small Business Management, Industrial Marketing Management, Journal of Business Ethics, and Omega. His current research interests are in the areas of services marketing, market orientation, and international marketing.

Richard T. Watson is an associate professor and graduate coordinator in the Department of Management at the University of Georgia. He has a Ph.D. in MIS from the University of Minnesota. His publications include articles in MIS Quarterly, Communications of the ACM, Journal of Business Ethics, Omega, and Communication Research. His research focuses on national culture and its impact on group support systems, management of IS, and national information infrastructure. He has taught in more than 10 countries.

C. Bruce Kavan is an assistant professor of MIS and interim director of the Barnett Institute at the University of North Florida. Prior to completing his doctorate at the University of Georgia in 1991, he held several executive positions with Dun & Bradstreet, including vice president of Information Service for their Receivable Management Services division. Dr. Kavan is an extremely active consultant in the strategic use of technology, system architecture, and client/server technologies. His main areas of research interest are inter-organizational systems, the delivery of IT services, and technology adoption/diffusion. His work has appeared in such publications as Information Strategy: The Executive's Journal, Auerback's Handbook of IS Management, and Journal of Services Marketing.


Appendix

Service Quality Expectations

Directions: This survey deals with your opinion of the Information Systems Department (IS). Based on your experiences as a user, please think about the kind of IS unit that would deliver excellent quality of service. Think about the kind of IS unit with which you would be pleased to do business. Please show the extent to which you think such a unit would possess the feature described by each statement. If you strongly agree that these units should possess a feature, circle 7. If you strongly disagree that these units should possess a feature, circle 1. If your feeling is less strong, circle one of the numbers in the middle. There are no right or wrong answers; all we are interested in is a number that truly reflects your expectations about IS. Please respond to ALL the statements.

Scale for each statement: Strongly disagree 1 2 3 4 5 6 7 Strongly agree

E1 They will have up-to-date hardware and software
E2 Their physical facilities will be visually appealing
E3 Their employees will be well dressed and neat in appearance
E4 The appearance of the physical facilities of these IS units will be in keeping with the kind of services provided
E5 When these IS units promise to do something by a certain time, they will do so
E6 When users have a problem, these IS units will show a sincere interest in solving it
E7 These IS units will be dependable
E8 They will provide their services at the times they promise to do so
E9 They will insist on error-free records
E10 They will tell users exactly when services will be performed
E11 Employees will give prompt service to users
E12 Employees will always be willing to help users
E13 Employees will never be too busy to respond to users' requests
E14 The behavior of employees will instill confidence in users
E15 Users will feel safe in their transactions with these IS units' employees
E16 Employees will be consistently courteous with users
E17 Employees will have the knowledge to do their job well
E18 These IS units will give users individual attention
E19 These IS units will have operating hours convenient to all their users
E20 These IS units will have employees who give users personal attention
E21 These IS units will have the users' best interests at heart
E22 The employees of these IS units will understand the specific needs of their users


Service Quality Perceptions

Directions: The following set of statements relates to your feelings about ABC corporation's IS unit. For each statement, please show the extent to which you believe ABC corporation's IS has the feature described by the statement. Once again, circling a 7 means that you strongly agree that ABC corporation's IS has that feature, and circling 1 means that you strongly disagree. You may circle any of the numbers in the middle that show how strong your feelings are. There are no right or wrong answers; all we are interested in is a number that best shows your perceptions about ABC corporation's IS unit. Please respond to ALL the statements.

Scale for each statement: Strongly disagree 1 2 3 4 5 6 7 Strongly agree

P1 IS has up-to-date hardware and software
P2 IS's physical facilities are visually appealing
P3 IS's employees are well dressed and neat in appearance
P4 The appearance of the physical facilities of IS is in keeping with the kind of services provided
P5 When IS promises to do something by a certain time, it does so
P6 When users have a problem, IS shows a sincere interest in solving it
P7 IS is dependable
P8 IS provides its services at the times it promises to do so
P9 IS insists on error-free records
P10 IS tells users exactly when services will be performed
P11 IS employees give prompt service to users
P12 IS employees are always willing to help users
P13 IS employees are never too busy to respond to users' requests
P14 The behavior of IS employees instills confidence in users
P15 Users will feel safe in their transactions with IS's employees
P16 IS employees are consistently courteous with users
P17 IS employees have the knowledge to do their job well
P18 IS gives users individual attention
P19 IS has operating hours convenient to all its users
P20 IS has employees who give users personal attention
P21 IS has the users' best interests at heart
P22 Employees of IS understand the specific needs of its users

Now please complete the following:

1. Overall, how would you rate the quality of service provided by IS? Please indicate your assessment by circling one of the points on the scale below:

Poor 1 2 3 4 5 6 7 Excellent
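SERVQUAL responses of this kind are commonly scored by item-level subtraction: each perception rating minus the matching expectation rating, averaged within each dimension. The sketch below assumes the standard SERVQUAL assignment of the 22 items to the five dimensions (tangibles 1-4, reliability 5-9, responsiveness 10-13, assurance 14-17, empathy 18-22), which this appendix does not itself state; the function name and the sample respondent are hypothetical. Negative values indicate that perceived service falls short of expectations.

```python
from statistics import mean

# Assumed item-to-dimension assignment: the standard SERVQUAL grouping
# (Parasuraman et al., 1988, 1991), applied to items E1-E22 / P1-P22.
ITEM_DIMENSIONS = {
    "tangibles": range(1, 5),         # items 1-4
    "reliability": range(5, 10),      # items 5-9
    "responsiveness": range(10, 14),  # items 10-13
    "assurance": range(14, 18),       # items 14-17
    "empathy": range(18, 23),         # items 18-22
}


def dimension_gaps(expectations: dict[int, int], perceptions: dict[int, int]) -> dict[str, float]:
    """Average perception-minus-expectation gap per dimension for one respondent.

    expectations[i] is the 1-7 rating circled for item Ei, perceptions[i] for Pi.
    """
    # Reject anything outside the 7-point scale before scoring.
    for i in range(1, 23):
        for rating in (expectations[i], perceptions[i]):
            if not 1 <= rating <= 7:
                raise ValueError(f"item {i}: rating {rating} is outside 1-7")
    return {
        dim: mean(perceptions[i] - expectations[i] for i in items)
        for dim, items in ITEM_DIMENSIONS.items()
    }


# Hypothetical respondent: expects 7 everywhere, perceives 6 on tangibles, 5 elsewhere.
e = {i: 7 for i in range(1, 23)}
p = {i: (6 if i <= 4 else 5) for i in range(1, 23)}
print(dimension_gaps(e, p))
```

Averaging these per-respondent gaps across a user group gives dimension-level scores that could feed a weighted gap calculation or be tracked across repeated administrations.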

