Chapter 5 - Vector Calculus File

Uploaded by Hoang Quoc Trung

Vector Calculus

Contents

• ex11-12, bt11-12

Differentiation of Univariate Functions

Partial Differentiation and Gradients

Gradients of Matrices

Backpropagation

Higher-Order Derivatives

Linearization and Multivariate Taylor Series

1/10/2022 Chapter 5 - Vector Calculus 2

The Chain Rule

$x \xrightarrow{\;f\;} f(x) \xrightarrow{\;g\;} (g \circ f)(x)$

$(g \circ f)'(x) = g'(f(x))\, f'(x)$, where $g \circ f$ means "g after f".

$$\frac{dg}{dx} = \frac{dg}{df}\,\frac{df}{dx}$$

Chain rule – Ex

• Use the chain rule to compute the derivative of

$h(x) = (2x + 1)^4$

$x \xrightarrow{\;2(\cdot)\;} 2x \xrightarrow{\;(\cdot) + 1\;} 2x + 1 \xrightarrow{\;(\cdot)^4\;} (2x + 1)^4$

• h can be expressed as $h(x) = (g \circ f \circ u)(x)$, with

$u(x) = 2x, \quad f(u) = u + 1, \quad g(f) = f^4$

$h'(x) = (g \circ f \circ u)'(x) = g'(f)\, f'(u)\, u'(x) = 4f^3 \cdot 1 \cdot 2 = 8(2x + 1)^3$
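The chain-rule result for $h(x) = (2x + 1)^4$ can be sanity-checked numerically. The sketch below is only an illustration: the helper `central_difference` and the test point `x0` are assumptions made for this example, not part of the slides.

```python
def u(x):
    return 2.0 * x

def f(v):
    return v + 1.0

def g(w):
    return w ** 4

def h(x):
    # h = g after f after u
    return g(f(u(x)))

def h_prime(x):
    # Chain rule: g'(f) * f'(u) * u'(x) = 4 f^3 * 1 * 2
    return 4.0 * f(u(x)) ** 3 * 1.0 * 2.0

def central_difference(func, x, eps=1e-6):
    # Hypothetical helper: symmetric finite-difference estimate of func'(x)
    return (func(x + eps) - func(x - eps)) / (2.0 * eps)

x0 = 0.5
analytic = h_prime(x0)               # 8 * (2*0.5 + 1)^3 = 64
numeric = central_difference(h, x0)
print(analytic, numeric)
```

The two numbers should agree to several decimal places; a large gap would point to a mistake in one of the three factors.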

Partial Derivative

Definition (Partial Derivative). For a function of n variables $x_1, \ldots, x_n$,

$f : \mathbb{R}^n \to \mathbb{R}, \quad x \mapsto f(x),$

we define the partial derivatives as

$$\frac{\partial f}{\partial x_k} = \lim_{h \to 0} \frac{f(x_1, \ldots, x_k + h, x_{k+1}, \ldots, x_n) - f(x_1, \ldots, x_k, \ldots, x_n)}{h}$$
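The limit in the definition can be approximated by using a small but finite h. The following is a minimal sketch: the function name `partial_derivative`, the test function `example`, and the step size are all assumptions chosen for illustration.

```python
def partial_derivative(f, x, k, h=1e-6):
    """Forward-difference estimate of df/dx_k at the point x (k is 0-based)."""
    shifted = list(x)
    shifted[k] += h          # perturb only the k-th coordinate
    return (f(shifted) - f(x)) / h

def example(x):
    # Hypothetical test function: f(x1, x2) = x1^2 * x2
    return x[0] ** 2 * x[1]

point = [3.0, 2.0]
d_dx1 = partial_derivative(example, point, 0)   # exact value: 2 * x1 * x2 = 12
d_dx2 = partial_derivative(example, point, 1)   # exact value: x1^2 = 9
print(d_dx1, d_dx2)
```

All other coordinates are held fixed while one is perturbed, which is exactly what distinguishes a partial derivative from a total derivative.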

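Gradients of $f : \mathbb{R}^n \to \mathbb{R}$

• We collect all partial derivatives of f in a row vector to form the gradient of f:

$$\left[\frac{\partial f}{\partial x_1} \;\; \frac{\partial f}{\partial x_2} \;\; \cdots \;\; \frac{\partial f}{\partial x_n}\right]$$

• Notation. $\nabla_x f$, $\operatorname{grad} f$, $\frac{df}{dx}$

• Ex. For $f : \mathbb{R}^2 \to \mathbb{R}$, $f(x_1, x_2) = x_1^3 x_2 - x_1 x_2$

• Partial derivatives: $\frac{\partial f}{\partial x_1} = 3x_1^2 x_2 - x_2$, $\frac{\partial f}{\partial x_2} = x_1^3 - x_1$

• The gradient of f:

$\nabla_x f = \left[3x_1^2 x_2 - x_2 \;\; x_1^3 - x_1\right] \in \mathbb{R}^{1 \times 2}$ (1 row, 2 columns)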

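Gradients of $f : \mathbb{R}^n \to \mathbb{R}$

$$\operatorname{grad} f = \frac{df}{dx} = \nabla_x f \in \mathbb{R}^{1 \times n}$$

For example, with input $x \in \mathbb{R}^3$ and output $f \in \mathbb{R}^1$, $\nabla_x f \in \mathbb{R}^{1 \times 3}$.

Ex. For $f(x, y) = (x^3 + 2y)^2$, we obtain the partial derivatives

• $\frac{\partial f}{\partial x} = 2(x^3 + 2y)\,\frac{\partial}{\partial x}(x^3 + 2y) = 6x^2(x^3 + 2y)$

• $\frac{\partial f}{\partial y} = 2(x^3 + 2y)\,\frac{\partial}{\partial y}(x^3 + 2y) = 4(x^3 + 2y)$

The gradient of f is $\left[6x^2(x^3 + 2y) \;\; 4(x^3 + 2y)\right]$.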
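As a quick check of the gradient of $f(x, y) = (x^3 + 2y)^2$, the analytic row vector can be compared with finite differences. This is a sketch only; `numeric_grad` and the evaluation point (1, 2) are illustrative assumptions.

```python
def f(x, y):
    return (x ** 3 + 2.0 * y) ** 2

def grad_f(x, y):
    # Analytic gradient as a 1 x 2 row: [6x^2(x^3 + 2y), 4(x^3 + 2y)]
    inner = x ** 3 + 2.0 * y
    return [6.0 * x ** 2 * inner, 4.0 * inner]

def numeric_grad(func, x, y, eps=1e-6):
    # Hypothetical helper: central differences in each coordinate
    dfdx = (func(x + eps, y) - func(x - eps, y)) / (2.0 * eps)
    dfdy = (func(x, y + eps) - func(x, y - eps)) / (2.0 * eps)
    return [dfdx, dfdy]

analytic = grad_f(1.0, 2.0)          # inner = 5, so the gradient is [30, 20]
numeric = numeric_grad(f, 1.0, 2.0)
print(analytic, numeric)
```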

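Gradients/Jacobian of Vector-Valued Functions $f : \mathbb{R}^n \to \mathbb{R}^m$

• For a vector-valued function $f : \mathbb{R}^n \to \mathbb{R}^m$,

$f(x) = [f_1(x) \;\; f_2(x) \;\; \cdots \;\; f_m(x)]^T$ (a column vector with m rows),

where $f_i : \mathbb{R}^n \to \mathbb{R}$.

Gradient (or Jacobian) of f:

$$J = \nabla_x f = \frac{df}{dx} = \begin{bmatrix} \nabla_x f_1 \\ \vdots \\ \nabla_x f_m \end{bmatrix}, \qquad \text{dimension: } m \times n$$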

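Jacobian of $f : \mathbb{R}^n \to \mathbb{R}^m$ – size

For $x \in \mathbb{R}^3$ and $f \in \mathbb{R}^4$, $J \in \mathbb{R}^{4 \times 3}$: one row per output $f_1, \ldots, f_m$ and one column per input $x_1, x_2, x_3$. For example, the entry in row $f_2$, column $x_3$ is $\frac{\partial f_2}{\partial x_3}$.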

Jacobian of $f : \mathbb{R}^n \to \mathbb{R}^m$ – Ex

Ex. Find the Jacobian of $f : \mathbb{R}^3 \to \mathbb{R}^2$,

$f_1(x_1, x_2, x_3) = 2x_1 + x_2 x_3, \quad f_2(x_1, x_2, x_3) = x_1 x_3 - x_2^2$

Jacobian of f: $J \in \mathbb{R}^{2 \times 3}$
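One way to work this example is to differentiate each component by hand and then compare against a numerical Jacobian. In the sketch below, the analytic matrix in `jacobian_analytic` is derived here rather than taken from the slides, and `jacobian_numeric` and the point `x0` are hypothetical helpers for illustration.

```python
def f(x):
    x1, x2, x3 = x
    return [2.0 * x1 + x2 * x3, x1 * x3 - x2 ** 2]

def jacobian_analytic(x):
    # Row i holds the gradient of f_i; column j is the derivative w.r.t. x_j
    x1, x2, x3 = x
    return [[2.0, x3, x2],
            [x3, -2.0 * x2, x1]]

def jacobian_numeric(func, x, eps=1e-6):
    # Hypothetical helper: central differences, one input coordinate at a time
    m, n = len(func(x)), len(x)
    J = [[0.0] * n for _ in range(m)]
    for j in range(n):
        plus, minus = list(x), list(x)
        plus[j] += eps
        minus[j] -= eps
        f_plus, f_minus = func(plus), func(minus)
        for i in range(m):
            J[i][j] = (f_plus[i] - f_minus[i]) / (2.0 * eps)
    return J

x0 = [1.0, 2.0, 3.0]
J_exact = jacobian_analytic(x0)      # [[2, 3, 2], [3, -4, 1]]
J_approx = jacobian_numeric(f, x0)
print(J_exact)
print(J_approx)
```

The result has 2 rows and 3 columns, matching $J \in \mathbb{R}^{2 \times 3}$.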

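Gradient of $f : \mathbb{R}^n \to \mathbb{R}^m$ – Ex

• We are given $f(x) = Ax$, $f(x) \in \mathbb{R}^m$, $A \in \mathbb{R}^{m \times n}$, $x \in \mathbb{R}^n$. Compute the gradient $\nabla_x f$.

• $\nabla_x f = \left[\frac{\partial f_i}{\partial x_j}\right] \in \mathbb{R}^{m \times n}$; its size is $m \times n$.

$$f_i(x) = \sum_{j=1}^{n} a_{ij} x_j \;\Rightarrow\; \frac{\partial f_i}{\partial x_j} = a_{ij} \;\Rightarrow\; \nabla_x f = A$$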

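Gradient of $f : \mathbb{R}^n \to \mathbb{R}^m$ – Ex2

• Given $h : \mathbb{R} \to \mathbb{R}$, $h(t) = (f \circ x)(t)$,

where $f : \mathbb{R}^2 \to \mathbb{R}$, $f(x) = \exp(x_1 + x_2^2)$,

$x : \mathbb{R} \to \mathbb{R}^2$, $x(t) = \begin{bmatrix} x_1(t) \\ x_2(t) \end{bmatrix} = \begin{bmatrix} t \\ \sin t \end{bmatrix}$.

Compute $\frac{dh}{dt}$, the gradient of h with respect to t.

• Use the chain rule (matrix version) for $h = f \circ x$:

$$\frac{dh}{dt} = \frac{d}{dt}(f \circ x) = \frac{df}{dx}\,\frac{dx}{dt}$$

$$\frac{dh}{dt} = \begin{bmatrix} \frac{\partial f}{\partial x_1} & \frac{\partial f}{\partial x_2} \end{bmatrix} \begin{bmatrix} \frac{\partial x_1}{\partial t} \\ \frac{\partial x_2}{\partial t} \end{bmatrix} = \frac{\partial f}{\partial x_1}\frac{\partial x_1}{\partial t} + \frac{\partial f}{\partial x_2}\frac{\partial x_2}{\partial t}$$

$$= \exp(x_1 + x_2^2) + 2x_2 \exp(x_1 + x_2^2)\cos t,$$

where $x_1 = t$, $x_2 = \sin t$.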
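The matrix form of the chain rule for $h(t) = \exp(x_1 + x_2^2)$ with $x_1 = t$, $x_2 = \sin t$ can be spelled out as a row vector times a column vector. The sketch below does this and compares against a finite difference; the test point `t0` and the step size are arbitrary choices for illustration.

```python
import math

def h(t):
    x1, x2 = t, math.sin(t)
    return math.exp(x1 + x2 ** 2)

def dh_dt(t):
    x1, x2 = t, math.sin(t)
    e = math.exp(x1 + x2 ** 2)
    df_dx = [e, 2.0 * x2 * e]        # 1 x 2 row: [df/dx1, df/dx2]
    dx_dt = [1.0, math.cos(t)]       # 2 x 1 column: [dx1/dt, dx2/dt]
    # Row times column gives the scalar dh/dt
    return df_dx[0] * dx_dt[0] + df_dx[1] * dx_dt[1]

t0 = 0.7
analytic = dh_dt(t0)
numeric = (h(t0 + 1e-6) - h(t0 - 1e-6)) / 2e-6
print(analytic, numeric)
```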

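Gradient of $f : \mathbb{R}^n \to \mathbb{R}^m$ – Exercise

• $y \in \mathbb{R}^N$, $\theta \in \mathbb{R}^D$, $\Phi \in \mathbb{R}^{N \times D}$

$e : \mathbb{R}^D \to \mathbb{R}^N$, $e(\theta) = y - \Phi\theta$,

$L : \mathbb{R}^N \to \mathbb{R}$, $L(e) = \|e\|^2 = e^T e$

Find $\frac{dL}{de}$, $\frac{de}{d\theta}$, and $\frac{dL}{d\theta}$.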
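One way to check an answer to this exercise is to code a candidate solution and compare it with finite differences. The sketch below assumes the candidate $\frac{dL}{de} = 2e^T$, $\frac{de}{d\theta} = -\Phi$, and therefore $\frac{dL}{d\theta} = -2e^T\Phi$ by the chain rule; these are derived here, not quoted from the slides, and the small data set is made up for illustration.

```python
def residual(y, Phi, theta):
    # e(theta) = y - Phi theta
    return [y_n - sum(p * t for p, t in zip(row, theta)) for y_n, row in zip(y, Phi)]

def loss(y, Phi, theta):
    # L(e) = e^T e
    return sum(e_n ** 2 for e_n in residual(y, Phi, theta))

def grad_loss(y, Phi, theta):
    e = residual(y, Phi, theta)
    dL_de = [2.0 * e_n for e_n in e]                  # 1 x N row vector
    # de/dtheta = -Phi (N x D), so dL/dtheta = dL/de times (-Phi), a 1 x D row
    return [-sum(dL_de[n] * Phi[n][d] for n in range(len(y)))
            for d in range(len(theta))]

# Made-up example data with N = 3, D = 2
y = [1.0, 2.0, 3.0]
Phi = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0]]
theta = [0.5, -1.0]

analytic = grad_loss(y, Phi, theta)                   # e = [0.5, 2.5, 4.5]

eps = 1e-6
numeric = []
for d in range(len(theta)):
    plus, minus = list(theta), list(theta)
    plus[d] += eps
    minus[d] -= eps
    numeric.append((loss(y, Phi, plus) - loss(y, Phi, minus)) / (2.0 * eps))
print(analytic, numeric)
```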

Gradient of $A : \mathbb{R}^m \to \mathbb{R}^{p \times q}$

Approach 1 (figure omitted): the gradient is a 4×2×3 tensor.

Approach 2: Re-shape matrices into vectors (figure omitted); the result is again a 4×2×3 tensor.

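Gradients of $A : \mathbb{R}^m \to \mathbb{R}^{p \times q}$ – Ex

• Ex. Consider $A : \mathbb{R}^3 \to \mathbb{R}^{3 \times 2}$,

$$A(x_1, x_2, x_3) = \begin{bmatrix} x_1 - x_2 & x_1 + x_3 \\ x_1^2 + x_3 & 2x_1 \\ x_3 - x_2 & x_1 + x_2 + x_3 \end{bmatrix}$$

• The dimension of $\frac{dA}{dx}$: $\mathbb{R}^{(3 \times 2) \times 3}$

• Approach 1

$$\frac{\partial A}{\partial x_1} = \begin{bmatrix} 1 & 1 \\ 2x_1 & 2 \\ 0 & 1 \end{bmatrix}, \quad \frac{\partial A}{\partial x_2} = \begin{bmatrix} -1 & 0 \\ 0 & 0 \\ -1 & 1 \end{bmatrix}, \quad \frac{\partial A}{\partial x_3} = \begin{bmatrix} 0 & 1 \\ 1 & 0 \\ 1 & 1 \end{bmatrix}$$

Collated, these three matrices form a (3×2)×3 tensor.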
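The collation of the three partial-derivative matrices into a (3×2)×3 tensor can be illustrated with nested lists. This is a sketch under the assumption that the tensor is indexed as `T[i][j][k]` for $\partial A_{ij} / \partial x_k$; the helper `dA_dx` and the point `x0` are hypothetical.

```python
def A(x):
    x1, x2, x3 = x
    return [[x1 - x2, x1 + x3],
            [x1 ** 2 + x3, 2.0 * x1],
            [x3 - x2, x1 + x2 + x3]]

def dA_dx(x, eps=1e-6):
    """Return T with T[i][j][k] approximating dA_ij/dx_k (shape 3 x 2 x 3)."""
    T = [[[0.0] * 3 for _ in range(2)] for _ in range(3)]
    for k in range(3):
        plus, minus = list(x), list(x)
        plus[k] += eps
        minus[k] -= eps
        A_plus, A_minus = A(plus), A(minus)
        for i in range(3):
            for j in range(2):
                T[i][j][k] = (A_plus[i][j] - A_minus[i][j]) / (2.0 * eps)
    return T

def slice_k(T, k):
    # Extract the 3 x 2 matrix dA/dx_k from the tensor
    return [[T[i][j][k] for j in range(2)] for i in range(3)]

x0 = [1.0, 2.0, 3.0]
T = dA_dx(x0)
for k in range(3):
    print("dA/dx%d ~" % (k + 1), slice_k(T, k))
```

At `x0` the first slice should be close to [[1, 1], [2, 2], [0, 1]], since $2x_1 = 2$ there.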

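Gradient of $f : \mathbb{R}^{m \times n} \to \mathbb{R}^p$ – Ex

$f : \mathbb{R}^{M \times N} \to \mathbb{R}^M$, $f(A) = Ax$, with $f \in \mathbb{R}^M$, $A \in \mathbb{R}^{M \times N}$, $x \in \mathbb{R}^N$.

$$f_i = A_{i1} x_1 + \cdots + A_{ik} x_k + \cdots + A_{iN} x_N$$

$$\frac{\partial f_i}{\partial A_{ik}} = x_k, \qquad \frac{\partial f_i}{\partial A_{jk}} = 0 \;\; (j \neq i)$$

$$\frac{df}{dA} \in \mathbb{R}^{M \times (M \times N)}$$

Each slice $\frac{\partial f_i}{\partial A}$ is zero everywhere except in row i, which holds $x^T$:

$$\frac{\partial f_i}{\partial A} = \begin{bmatrix} 0 & \cdots & 0 \\ \vdots & & \vdots \\ x_1 & \cdots & x_N \\ \vdots & & \vdots \\ 0 & \cdots & 0 \end{bmatrix}$$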

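Gradient of Matrices with Respect to Matrices $\mathbb{R}^{m \times n} \to \mathbb{R}^{p \times q}$

For $R \in \mathbb{R}^{M \times N}$ and $f : \mathbb{R}^{M \times N} \to \mathbb{R}^{N \times N}$ with $f(R) = R^T R = K \in \mathbb{R}^{N \times N}$, compute the gradient $dK/dR$.

The gradient has the dimensions

$$\frac{dK}{dR} \in \mathbb{R}^{(N \times N) \times (M \times N)}, \qquad \frac{dK_{pq}}{dR} \in \mathbb{R}^{1 \times (M \times N)}, \qquad \frac{dK_{pq}}{dR_{ij}} \in \mathbb{R}$$

Write $R = [r_1 \; r_2 \; \cdots \; r_N]$, where $r_i$ is the ith column of R. Then

$$K_{pq} = r_p^T r_q = \sum_{m=1}^{M} R_{mp} R_{mq}$$

$$\frac{\partial K_{pq}}{\partial R_{ij}} = \partial_{pqij} = \begin{cases} R_{iq} & \text{if } j = p,\; p \neq q \\ R_{ip} & \text{if } j = q,\; p \neq q \\ 2R_{iq} & \text{if } j = p = q \\ 0 & \text{otherwise} \end{cases}$$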
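The piecewise formula for $\partial K_{pq} / \partial R_{ij}$ with $K = R^T R$ can be checked entry by entry against finite differences. The sketch below uses 0-based indices and a made-up 3×2 matrix `R`; the helper names are assumptions for illustration.

```python
def gram(R):
    # K = R^T R, so K[p][q] = sum over m of R[m][p] * R[m][q]
    M, N = len(R), len(R[0])
    return [[sum(R[m][p] * R[m][q] for m in range(M)) for q in range(N)]
            for p in range(N)]

def dK_dR_entry(R, p, q, i, j):
    """Piecewise formula for dK_pq / dR_ij."""
    if j == p and p != q:
        return R[i][q]
    if j == q and p != q:
        return R[i][p]
    if j == p and p == q:
        return 2.0 * R[i][q]
    return 0.0

def numeric_entry(R, p, q, i, j, eps=1e-6):
    # Hypothetical helper: perturb only R_ij and difference K_pq
    R_plus = [row[:] for row in R]
    R_minus = [row[:] for row in R]
    R_plus[i][j] += eps
    R_minus[i][j] -= eps
    return (gram(R_plus)[p][q] - gram(R_minus)[p][q]) / (2.0 * eps)

R = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]     # M = 3, N = 2
max_err = max(abs(dK_dR_entry(R, p, q, i, j) - numeric_entry(R, p, q, i, j))
              for p in range(2) for q in range(2)
              for i in range(3) for j in range(2))
print(max_err)
```

Looping over all (p, q, i, j) visits every entry of the (N×N)×(M×N) tensor, here 2·2·3·2 = 24 entries.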

Backpropagation - Introduction

• Probably the single most important algorithm in all of Deep Learning.

• In many machine learning applications, we find good model parameters by performing gradient descent, which requires computing the gradient of a learning objective w.r.t. the parameters of the model. For example, an ANN (single hidden layer with 150 nodes) for a 128x128x3 color image needs at least 128x128x3x150 = 7,372,800 weights.

• The backpropagation algorithm is an efficient way to compute the gradient of an error function with respect to the parameters of the model.

ML Needs Gradients

Given training data

$\{(x_1, y_1), (x_2, y_2), \ldots, (x_m, y_m)\}$

Choose decision and cost functions

$\hat{y}_i = f_\theta(x_i)$

$C(\hat{y}_i, y_i)$

Define the goal

Find $\theta^*$ that minimizes $\frac{1}{m} \sum_i C(\hat{y}_i, y_i)$

Train the model with (stochastic) gradient descent to update $\theta$:

$$\theta^{(t+1)} = \theta^{(t)} - \eta\, \frac{\partial C}{\partial \theta^{(t)}}(x_i, y_i)$$

where $\eta$ is the learning rate (step size).

! The backpropagation algorithm is an efficient way to compute the gradient.
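A stochastic gradient descent update can be sketched on a one-parameter toy model. Everything specific here is an assumption made for illustration: the decision function `predict` (a line through the origin), the squared-error cost, the synthetic data, the learning rate `eta`, and the number of steps.

```python
import random

def predict(theta, x):
    # Hypothetical decision function f_theta(x) = theta * x
    return theta * x

def cost_grad(theta, x, y):
    # For C = 0.5 * (y_hat - y)^2, dC/dtheta = (y_hat - y) * x
    return (predict(theta, x) - y) * x

# Synthetic training data generated with theta = 2
data = [(x, 2.0 * x) for x in [0.5, 1.0, 1.5, 2.0]]

rng = random.Random(0)       # fixed seed so the run is repeatable
theta, eta = 0.0, 0.1        # initial parameter and learning rate
for t in range(200):
    x_i, y_i = rng.choice(data)                        # pick one example at random
    theta = theta - eta * cost_grad(theta, x_i, y_i)   # gradient descent update
print(theta)
```

Each step uses the gradient from a single example, which is what makes the procedure stochastic; with this data the parameter should move towards 2.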

Epochs

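• The backpropagation algorithm consists of many cycles; each cycle is called an epoch and has two processes:

Forward phase: $a^{(0)} \to z^{(1)}, a^{(1)} \to z^{(2)}, a^{(2)} \to \cdots \to C$

Backward phase: $\frac{\partial C}{\partial \theta^{(1)}} \leftarrow \frac{\partial C}{\partial \theta^{(2)}} \leftarrow \cdots \leftarrow \frac{\partial C}{\partial \theta^{(N)}}$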

Deep Network (ANN with hidden layers)

Activation equations (matrix version)

Layer (1) = hidden layer

$z^{(1)} = W^{(1)} a^{(0)} + b^{(1)}$

$a^{(1)} = \sigma_1(z^{(1)})$

Layer (2) = output layer

$z^{(2)} = W^{(2)} a^{(1)} + b^{(2)}$

$a^{(2)} = \sigma_2(z^{(2)})$

The cost for example number k:

$$C_k = \frac{1}{2} \sum_i \left(a_i^{(2)} - y_i\right)^2 = \frac{1}{2} \left\| a^{(2)} - y \right\|^2$$

Forward phase

Set $a^{(0)} = x$. For L = 1..N:

$z^{(L)} = W^{(L)} a^{(L-1)} + b^{(L)}$

$a^{(L)} = \sigma_L(z^{(L)})$

C: cost function (e.g., $C = \frac{1}{2} \| a^{(N)} - y \|^2$)
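The forward phase can be sketched as a loop over layers. The code below assumes a sigmoid activation at every layer and uses made-up weights for a network with 2 inputs, 3 hidden units, and 1 output; none of these choices come from the slides.

```python
import math

def sigmoid(z):
    # Assumed activation function for every layer
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, a, b):
    # z = W a + b, with W stored as a list of rows
    return [sum(w * a_j for w, a_j in zip(row, a)) + b_i for row, b_i in zip(W, b)]

def forward(x, weights, biases):
    """Return the pre-activations z(L) and the activations a(L), with a(0) = x."""
    activations = [x]
    pre_activations = []
    for W, b in zip(weights, biases):
        z = affine(W, activations[-1], b)
        pre_activations.append(z)
        activations.append([sigmoid(z_i) for z_i in z])
    return pre_activations, activations

def cost(a_out, y):
    # C = 0.5 * ||a(N) - y||^2
    return 0.5 * sum((a_i - y_i) ** 2 for a_i, y_i in zip(a_out, y))

weights = [[[0.1, -0.2], [0.4, 0.3], [-0.5, 0.2]],    # W(1): 3 x 2
           [[0.3, -0.1, 0.2]]]                        # W(2): 1 x 3
biases = [[0.0, 0.1, -0.1], [0.05]]

zs, acts = forward([1.0, 2.0], weights, biases)
C = cost(acts[-1], [1.0])
print(acts[-1], C)
```

Storing every z(L) and a(L) during the forward phase matters because the backward phase needs them to evaluate the local derivatives.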

Backpropagation

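Layers: Layer 1, Layer 2, …, Layer K-1, Layer K, …, Layer N-1, Layer N

$$\frac{\partial C}{\partial W^{(N)}} = \frac{\partial C}{\partial a^{(N)}} \frac{\partial a^{(N)}}{\partial W^{(N)}}$$

$$\frac{\partial C}{\partial b^{(N)}} = \frac{\partial C}{\partial a^{(N)}} \frac{\partial a^{(N)}}{\partial b^{(N)}}$$

$$\frac{\partial C}{\partial W^{(N-1)}} = \frac{\partial C}{\partial a^{(N)}} \frac{\partial a^{(N)}}{\partial a^{(N-1)}} \frac{\partial a^{(N-1)}}{\partial W^{(N-1)}}$$

$$\frac{\partial C}{\partial b^{(N-1)}} = \frac{\partial C}{\partial a^{(N)}} \frac{\partial a^{(N)}}{\partial a^{(N-1)}} \frac{\partial a^{(N-1)}}{\partial b^{(N-1)}}$$

$$\frac{\partial C}{\partial W^{(N-2)}} = \frac{\partial C}{\partial a^{(N)}} \frac{\partial a^{(N)}}{\partial a^{(N-1)}} \frac{\partial a^{(N-1)}}{\partial a^{(N-2)}} \frac{\partial a^{(N-2)}}{\partial W^{(N-2)}}$$

$$\frac{\partial C}{\partial b^{(N-2)}} = \frac{\partial C}{\partial a^{(N)}} \frac{\partial a^{(N)}}{\partial a^{(N-1)}} \frac{\partial a^{(N-1)}}{\partial a^{(N-2)}} \frac{\partial a^{(N-2)}}{\partial b^{(N-2)}}$$

Benefit of backpropagation: the terms outside the box on the slide (every factor except the last one in each product) are reused from layer to layer.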

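Backpropagation

Activation equations

$z^{(L)} = W^{(L)} a^{(L-1)} + b^{(L)}$

$a^{(L)} = \sigma_L(z^{(L)})$

C: cost function

Layers: Layer 1, Layer 2, …, Layer K-1, Layer K, …, Layer N-1, Layer N

$$\frac{\partial C}{\partial W^{(L+1)}} = \frac{\partial C}{\partial a^{(N)}} \frac{\partial a^{(N)}}{\partial a^{(N-1)}} \frac{\partial a^{(N-1)}}{\partial a^{(N-2)}} \cdots \frac{\partial a^{(L+3)}}{\partial a^{(L+2)}} \frac{\partial a^{(L+2)}}{\partial a^{(L+1)}} \frac{\partial a^{(L+1)}}{\partial W^{(L+1)}}$$

$$\frac{\partial C}{\partial W^{(L)}} = \frac{\partial C}{\partial a^{(N)}} \frac{\partial a^{(N)}}{\partial a^{(N-1)}} \frac{\partial a^{(N-1)}}{\partial a^{(N-2)}} \cdots \frac{\partial a^{(L+2)}}{\partial a^{(L+1)}} \frac{\partial a^{(L+1)}}{\partial a^{(L)}} \frac{\partial a^{(L)}}{\partial W^{(L)}}$$

$$\frac{\partial C}{\partial b^{(L)}} = \frac{\partial C}{\partial a^{(N)}} \frac{\partial a^{(N)}}{\partial a^{(N-1)}} \frac{\partial a^{(N-1)}}{\partial a^{(N-2)}} \cdots \frac{\partial a^{(L+2)}}{\partial a^{(L+1)}} \frac{\partial a^{(L+1)}}{\partial a^{(L)}} \frac{\partial a^{(L)}}{\partial b^{(L)}}$$

At each layer (L), we need to compute

$$e^{(L)} := \frac{\partial C}{\partial a^{(L)}} = \frac{\partial C}{\partial a^{(N)}} \frac{\partial a^{(N)}}{\partial a^{(N-1)}} \cdots \frac{\partial a^{(L+2)}}{\partial a^{(L+1)}} \frac{\partial a^{(L+1)}}{\partial a^{(L)}} = e^{(L+1)} \frac{\partial a^{(L+1)}}{\partial a^{(L)}}$$

Compute $e^{(L+1)}$ (at layer L+1) before computing $e^{(L)}$ (at layer L).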

Backpropagation algorithm

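For each example in training examples

1. Feed forward

2. Backpropagation

At output layer (N), compute and store:

$$e^{(N)} = \frac{\partial C}{\partial a^{(N)}}$$

$$\frac{\partial C}{\partial W^{(N)}} = e^{(N)} \frac{\partial a^{(N)}}{\partial W^{(N)}}, \qquad \frac{\partial C}{\partial b^{(N)}} = e^{(N)} \frac{\partial a^{(N)}}{\partial b^{(N)}}$$

For layer (L) from N-1 to 1:

• Compute $e^{(L)}$ using $e^{(L)} = e^{(L+1)} \frac{\partial a^{(L+1)}}{\partial a^{(L)}}$

• Compute $\frac{\partial C}{\partial W^{(L)}} = e^{(L)} \frac{\partial a^{(L)}}{\partial W^{(L)}}$, $\frac{\partial C}{\partial b^{(L)}} = e^{(L)} \frac{\partial a^{(L)}}{\partial b^{(L)}}$

Activation equations

$z^{(L)} = W^{(L)} a^{(L-1)} + b^{(L)}$

$a^{(L)} = \sigma_L(z^{(L)})$

C: cost function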
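The two-step procedure (feed forward, then propagate e(L) backwards) can be sketched for a small fully connected network. The code below assumes sigmoid activations, the cost $C = \frac{1}{2}\|a^{(N)} - y\|^2$, and made-up weights; the function names and the finite-difference check at the end are illustrative additions, not part of the slides.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, Ws, bs):
    # Feed forward, storing z(L) and a(L) for every layer; acts[0] = a(0) = x
    acts, zs = [x], []
    for W, b in zip(Ws, bs):
        z = [sum(w * a for w, a in zip(row, acts[-1])) + b_i for row, b_i in zip(W, b)]
        zs.append(z)
        acts.append([sigmoid(v) for v in z])
    return zs, acts

def cost(x, y, Ws, bs):
    _, acts = forward(x, Ws, bs)
    return 0.5 * sum((a - t) ** 2 for a, t in zip(acts[-1], y))

def backprop(x, y, Ws, bs):
    zs, acts = forward(x, Ws, bs)
    grads_W, grads_b = [None] * len(Ws), [None] * len(Ws)
    e = [a - t for a, t in zip(acts[-1], y)]     # e(N) = dC/da(N)
    for L in reversed(range(len(Ws))):           # 0-based index of the layer
        # For the sigmoid, da/dz = a * (1 - a); delta = e * da/dz = dC/dz
        delta = [e_i * a_i * (1.0 - a_i) for e_i, a_i in zip(e, acts[L + 1])]
        grads_b[L] = delta                                        # dz/db = 1
        grads_W[L] = [[d * a_prev for a_prev in acts[L]] for d in delta]
        # Error at the previous layer: e_prev_j = sum_i delta_i * W_ij
        e = [sum(delta[i] * Ws[L][i][j] for i in range(len(delta)))
             for j in range(len(acts[L]))]
    return grads_W, grads_b

Ws = [[[0.1, -0.2], [0.4, 0.3], [-0.5, 0.2]], [[0.3, -0.1, 0.2]]]
bs = [[0.0, 0.1, -0.1], [0.05]]
x, y = [1.0, 2.0], [1.0]
gW, gb = backprop(x, y, Ws, bs)

# Finite-difference check of one weight in the hidden layer
eps = 1e-6
Ws[0][1][0] += eps
c_plus = cost(x, y, Ws, bs)
Ws[0][1][0] -= 2.0 * eps
c_minus = cost(x, y, Ws, bs)
Ws[0][1][0] += eps
numeric = (c_plus - c_minus) / (2.0 * eps)
print(gW[0][1][0], numeric)
```

The variable `e` is overwritten as the loop moves from the output layer towards the input, which mirrors the reuse of e(L+1) when computing e(L).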

Higher-order partial derivatives

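Consider a function $f : \mathbb{R}^2 \to \mathbb{R}$ of two variables x, y.

Second-order partial derivatives:

$$\frac{\partial^2 f}{\partial x^2}, \quad \frac{\partial^2 f}{\partial x \partial y}, \quad \frac{\partial^2 f}{\partial y \partial x}, \quad \frac{\partial^2 f}{\partial y^2}$$

$\frac{\partial^n f}{\partial x^n}$ is the nth partial derivative of f with respect to x.

Ex. $f : \mathbb{R}^2 \to \mathbb{R}$, $f(x, y) = x^3 y - 3xy^2 + 5y$,

$$\frac{\partial f}{\partial x} = 3x^2 y - 3y^2, \qquad \frac{\partial f}{\partial y} = x^3 - 6xy + 5$$

$$\frac{\partial^2 f}{\partial x^2} = 6xy, \qquad \frac{\partial^2 f}{\partial x \partial y} = 3x^2 - 6y$$

$$\frac{\partial^2 f}{\partial y \partial x} = 3x^2 - 6y, \qquad \frac{\partial^2 f}{\partial y^2} = -6x$$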

The Hessian

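• The Hessian is the collection of all second-order partial derivatives.

For a function of two variables, the Hessian matrix is

$$H = \begin{bmatrix} \frac{\partial^2 f}{\partial x^2} & \frac{\partial^2 f}{\partial x \partial y} \\ \frac{\partial^2 f}{\partial y \partial x} & \frac{\partial^2 f}{\partial y^2} \end{bmatrix}$$

The Hessian matrix is symmetric for twice continuously differentiable functions, that is,

$$\frac{\partial^2 f}{\partial x \partial y} = \frac{\partial^2 f}{\partial y \partial x}$$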

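Gradient vs Hessian of $f : \mathbb{R}^n \to \mathbb{R}$

Consider a function $f : \mathbb{R}^n \to \mathbb{R}$.

Gradient:

$$\nabla f = \left[\frac{\partial f}{\partial x_1} \;\; \frac{\partial f}{\partial x_2} \;\; \cdots \;\; \frac{\partial f}{\partial x_n}\right], \qquad \text{dimension: } 1 \times n$$

Hessian:

$$\nabla^2 f = \begin{bmatrix} \frac{\partial^2 f}{\partial x_1^2} & \frac{\partial^2 f}{\partial x_1 \partial x_2} & \cdots & \frac{\partial^2 f}{\partial x_1 \partial x_n} \\ \frac{\partial^2 f}{\partial x_2 \partial x_1} & \frac{\partial^2 f}{\partial x_2^2} & \cdots & \frac{\partial^2 f}{\partial x_2 \partial x_n} \\ \vdots & \vdots & \ddots & \vdots \\ \frac{\partial^2 f}{\partial x_n \partial x_1} & \frac{\partial^2 f}{\partial x_n \partial x_2} & \cdots & \frac{\partial^2 f}{\partial x_n^2} \end{bmatrix}, \qquad \text{dimension: } n \times n$$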

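Gradient vs Hessian of $f : \mathbb{R}^n \to \mathbb{R}^m$

Consider a (vector-valued) function $f : \mathbb{R}^n \to \mathbb{R}^m$.

Gradient: $m \times n$ matrix. Hessian: $m \times (n \times n)$ tensor.

For example, with inputs $x_1, x_2, x_3$ and outputs $f_1, f_2$: gradient dimension $2 \times 3$, Hessian dimension $2 \times (3 \times 3)$.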

Example

• Compute the Hessian of the function $z = f(x, y) = x^2 + 6xy - y^3$ and evaluate it at the point (x = 1, y = 2, z = 5).
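A possible way to work this example: differentiating $f(x, y) = x^2 + 6xy - y^3$ twice gives $f_{xx} = 2$, $f_{xy} = f_{yx} = 6$, $f_{yy} = -6y$. The sketch below encodes that hand-derived Hessian (an assumption of this example, not taken from the slides) and compares it with second-order finite differences; `hessian_numeric` and the step size are illustrative.

```python
def f(x, y):
    return x ** 2 + 6.0 * x * y - y ** 3

def hessian_analytic(x, y):
    # Hand-derived second partials: f_xx = 2, f_xy = f_yx = 6, f_yy = -6y
    return [[2.0, 6.0],
            [6.0, -6.0 * y]]

def hessian_numeric(func, x, y, h=1e-4):
    # Hypothetical helper: standard central second differences
    fxx = (func(x + h, y) - 2.0 * func(x, y) + func(x - h, y)) / h ** 2
    fyy = (func(x, y + h) - 2.0 * func(x, y) + func(x, y - h)) / h ** 2
    fxy = (func(x + h, y + h) - func(x + h, y - h)
           - func(x - h, y + h) + func(x - h, y - h)) / (4.0 * h ** 2)
    return [[fxx, fxy], [fxy, fyy]]

z = f(1.0, 2.0)                        # 1 + 12 - 8 = 5
H = hessian_analytic(1.0, 2.0)         # [[2, 6], [6, -12]]
H_num = hessian_numeric(f, 1.0, 2.0)
print(z, H, H_num)
```

The off-diagonal entries agree, as expected for a twice continuously differentiable function.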

Taylor series for $f : \mathbb{R} \to \mathbb{R}$

Taylor polynomials

Approximation problems

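Taylor series for $f : \mathbb{R}^D \to \mathbb{R}$

Consider a function f that is smooth at $x_0$. The multivariate Taylor series of f at $x_0$ is defined as

$$f(x) = \sum_{k=0}^{\infty} \frac{D_x^k f(x_0)}{k!}\, \delta^k,$$

where $D_x^k f(x_0)$ is the k-th (total) derivative of f with respect to x, evaluated at $x_0$, and $\delta := x - x_0$.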

Example

Find the Taylor series for the function

$f(x, y) = x^2 + 2xy + y^3$ at $x_0 = 1$, $y_0 = 2$.

Taylor series of $f(x, y) = x^2 + 2xy + y^3$

With $\delta = (x - x_0, \; y - y_0)$, the higher-order terms use outer products of $\delta$, for example $\delta^3[i, j, k] = \delta[i]\,\delta[j]\,\delta[k]$.

The Taylor series expansion of f at $(x_0, y_0) = (1, 2)$ is

$$f(x, y) = 13 + 6(x - 1) + 14(y - 2) + (x - 1)^2 + 2(x - 1)(y - 2) + 6(y - 2)^2 + (y - 2)^3$$
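Because $f(x, y) = x^2 + 2xy + y^3$ is a cubic polynomial, its Taylor expansion about (1, 2) terminates after the third-order term and should reproduce f exactly. The sketch below checks this at a few arbitrary points. The coefficients in `taylor_at_1_2` were computed by hand for this example (value 13, gradient [6, 14], Hessian [[2, 2], [2, 12]], third derivative in y equal to 6) and are an assumption of the sketch.

```python
def f(x, y):
    return x ** 2 + 2.0 * x * y + y ** 3

def taylor_at_1_2(x, y):
    # delta = (x - x0, y - y0) with (x0, y0) = (1, 2)
    dx, dy = x - 1.0, y - 2.0
    return (13.0                                        # f(1, 2)
            + 6.0 * dx + 14.0 * dy                      # first-order term
            + dx ** 2 + 2.0 * dx * dy + 6.0 * dy ** 2   # 0.5 * delta^T H delta
            + dy ** 3)                                  # (1/6) * 6 * dy^3

# Arbitrary test points, including one far from the expansion point
points = [(1.0, 2.0), (0.0, 0.0), (2.5, -1.0), (-3.0, 4.0)]
max_gap = max(abs(f(x, y) - taylor_at_1_2(x, y)) for x, y in points)
print(max_gap)
```

For a non-polynomial function the same comparison would only hold approximately, and only near the expansion point.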

Summary

Differentiation of Univariate Functions

Partial Differentiation and Gradients

Gradients of Matrices

Backpropagation

Higher-Order Derivatives

Linearization and Multivariate Taylor Series

THANKS

1/9/2022 Chapter 5 - Vector Calculus 41

You might also like

- UKEC Annual Report 2011/2012Document118 pagesUKEC Annual Report 2011/2012ukecatomNo ratings yet

- Application of IntegrationDocument52 pagesApplication of IntegrationrafiiiiidNo ratings yet

- MT 1112: Calculus: IntegralsDocument23 pagesMT 1112: Calculus: IntegralsJustin WilliamNo ratings yet

- Continuous OptimizationDocument51 pagesContinuous Optimizationlaphv494No ratings yet

- SPM Add Maths: Using FormulaeDocument13 pagesSPM Add Maths: Using FormulaeMalaysiaBoleh100% (6)

- Chapter 7Document37 pagesChapter 7Hoang Quoc TrungNo ratings yet

- 3 - 1 Exponential Functions and Their GraphsDocument17 pages3 - 1 Exponential Functions and Their GraphsMar ChahinNo ratings yet

- Busmath Session 11Document7 pagesBusmath Session 11testNo ratings yet

- MAT1320 Fall 2003 Sample Final Exam PDFDocument11 pagesMAT1320 Fall 2003 Sample Final Exam PDFTurabNo ratings yet

- Divergence and Curl: Intermediate MathematicsDocument29 pagesDivergence and Curl: Intermediate MathematicsSunil NairNo ratings yet

- Divergence and Curl: Intermediate MathematicsDocument29 pagesDivergence and Curl: Intermediate MathematicsguideNo ratings yet

- Tipo de Ejercicios 1 - Integrales InmediatasDocument7 pagesTipo de Ejercicios 1 - Integrales Inmediatasalbeiro moreno de hoyosNo ratings yet

- Press 1Document13 pagesPress 1pauljustine091No ratings yet

- Add Math DifferentiationDocument22 pagesAdd Math Differentiationkamil muhammad100% (1)

- SCM Xii Applied Math 202324 Term2Document74 pagesSCM Xii Applied Math 202324 Term2mouseandkeyboardopNo ratings yet

- Logarithmic DifferentiationDocument6 pagesLogarithmic DifferentiationjeffconnorsNo ratings yet

- Definite Indefinite Integration (Area) (12th Pass)Document131 pagesDefinite Indefinite Integration (Area) (12th Pass)inventing new100% (1)

- 6.definite Integration - Its Application (English)Document48 pages6.definite Integration - Its Application (English)Shival KatheNo ratings yet

- Gauss Quadrature MethodDocument12 pagesGauss Quadrature MethodMirza Shahzaib ShahidNo ratings yet

- 15.1 - Vector Calculus - Div & CurlDocument29 pages15.1 - Vector Calculus - Div & Curlkhanshaheer477No ratings yet

- IntegralDocument45 pagesIntegralgoksu13kzlsNo ratings yet

- 13 Approximating Irregular Spaces: Integration: 1 The Graph Shows The Velocity/time Graph in M/s ForDocument3 pages13 Approximating Irregular Spaces: Integration: 1 The Graph Shows The Velocity/time Graph in M/s ForCAPTIAN BIBEKNo ratings yet

- 2 - IntegrationDocument19 pages2 - IntegrationSri Devi NagarjunaNo ratings yet

- MAT 1320 DGD WorkbookDocument120 pagesMAT 1320 DGD WorkbookAurora BedggoodNo ratings yet

- QA20091 Em2 28 - 1Document3 pagesQA20091 Em2 28 - 1api-25895802No ratings yet

- Chapter 5: Applications of Integration: Vietnam National Universities at Ho Chi Minh City International UniversityDocument48 pagesChapter 5: Applications of Integration: Vietnam National Universities at Ho Chi Minh City International UniversityLương Anh VũNo ratings yet

- Integration PDFDocument8 pagesIntegration PDFAre Peace El MananaNo ratings yet

- Math 31 Chapter 10 and 11 LessonsDocument26 pagesMath 31 Chapter 10 and 11 LessonsSam PanickerNo ratings yet

- AssignmentDocument1 pageAssignmentHatem SalemNo ratings yet

- Mat 121-FunctionsDocument45 pagesMat 121-Functionsakinleyedavid2007No ratings yet

- Chapter 5: Applications of Integration: Vietnam National Universities at Ho Chi Minh City International UniversityDocument48 pagesChapter 5: Applications of Integration: Vietnam National Universities at Ho Chi Minh City International UniversityKensleyTsangNo ratings yet

- Tailieuxanh Dap An Calculus 1 Final Test hk2 2019 2020 7797Document5 pagesTailieuxanh Dap An Calculus 1 Final Test hk2 2019 2020 7797Tâm Phạm ThànhNo ratings yet

- Test1 2008 and SolnsDocument14 pagesTest1 2008 and SolnsmplukieNo ratings yet

- Solution Final ExamDocument9 pagesSolution Final ExamArbi HassenNo ratings yet

- College Algebra8Document25 pagesCollege Algebra8AbchoNo ratings yet

- 1.1.2 Integration: Indefinite IntegralsDocument6 pages1.1.2 Integration: Indefinite IntegralsRoba Jirma BoruNo ratings yet

- C3 Functions C - QuestionsDocument2 pagesC3 Functions C - Questionspillboxsesame0sNo ratings yet

- 13 BBMP1103 T9Document18 pages13 BBMP1103 T9elizabeth lizNo ratings yet

- Chapter 2 TestDocument6 pagesChapter 2 TestJose SegalesNo ratings yet

- Integral Calculus (Module 1)Document4 pagesIntegral Calculus (Module 1)Panda ExcaliburNo ratings yet

- 1.fun Itf 17.05.21 Ans&solDocument4 pages1.fun Itf 17.05.21 Ans&solBramha ArpnamNo ratings yet

- Integral CalculusDocument13 pagesIntegral Calculusgech95465195No ratings yet

- Chapter 3Document16 pagesChapter 3Michael S. G. ForhNo ratings yet

- 201 NYA 05 Dec2013Document4 pages201 NYA 05 Dec2013rhl5761No ratings yet

- AP Calc CramDocument14 pagesAP Calc CramshubaansridharNo ratings yet

- SSB10203 Chapter 1 Basic of Differentiation and Integration MethodDocument23 pagesSSB10203 Chapter 1 Basic of Differentiation and Integration MethodNad IsmailNo ratings yet

- Chapter 4 IlearnDocument53 pagesChapter 4 IlearnSyahir HamidonNo ratings yet

- Advance Mathematics - 1 PDFDocument56 pagesAdvance Mathematics - 1 PDFVivek ChauhanNo ratings yet

- C 06 Anti DifferentiationDocument31 pagesC 06 Anti Differentiationebrahimipour1360No ratings yet

- A. Illustrate The Graphs of The Following Function. Determine Also Their Domain and RangeDocument16 pagesA. Illustrate The Graphs of The Following Function. Determine Also Their Domain and RangeRalph Michael CondinoNo ratings yet

- Quiz Like Questions From Unit 6-10Document8 pagesQuiz Like Questions From Unit 6-10Lucie StudgeNo ratings yet

- MatlabQuad PDFDocument7 pagesMatlabQuad PDFANGAD YADAVNo ratings yet

- Chapter 0Document30 pagesChapter 0Mc VinothNo ratings yet

- Assignment Booklet: School of Sciences Indira Gandhi National Open University New DelhiDocument5 pagesAssignment Booklet: School of Sciences Indira Gandhi National Open University New DelhiSiba Prasad HotaNo ratings yet

- Chapter 3 Numerical Integration: in This Chapter, You Will LearnDocument6 pagesChapter 3 Numerical Integration: in This Chapter, You Will LearnArvin 97No ratings yet

- Differentiation (Soalan 3)Document21 pagesDifferentiation (Soalan 3)paulusjemis2005No ratings yet

- IntegrationDocument8 pagesIntegrationapi-298592212No ratings yet

- Factoring and Algebra - A Selection of Classic Mathematical Articles Containing Examples and Exercises on the Subject of Algebra (Mathematics Series)From EverandFactoring and Algebra - A Selection of Classic Mathematical Articles Containing Examples and Exercises on the Subject of Algebra (Mathematics Series)No ratings yet

- A-level Maths Revision: Cheeky Revision ShortcutsFrom EverandA-level Maths Revision: Cheeky Revision ShortcutsRating: 3.5 out of 5 stars3.5/5 (8)

- Chapter 7Document37 pagesChapter 7Hoang Quoc TrungNo ratings yet

- Chapter 4Document47 pagesChapter 4Hoang Quoc TrungNo ratings yet

- Music Database: Salary:money)Document2 pagesMusic Database: Salary:money)Hoang Quoc TrungNo ratings yet

- Chapter 3Document25 pagesChapter 3Hoang Quoc TrungNo ratings yet

- SQL LabDocument83 pagesSQL LabHoang Quoc TrungNo ratings yet

- LAB 211 Assignment: Title BackgroundDocument3 pagesLAB 211 Assignment: Title BackgroundHoang Quoc TrungNo ratings yet

- LAB 211 Assignment: Title BackgroundDocument2 pagesLAB 211 Assignment: Title BackgroundHoang Quoc TrungNo ratings yet

- LAB 211 Assignment: Title BackgroundDocument3 pagesLAB 211 Assignment: Title BackgroundHoang Quoc TrungNo ratings yet

- Nilai Pendidikan Agama Hindu Dalam Lontar Siwa Sasana: Ida Bagus Putu Eka Suadnyana, I Putu Ariyasa DarmawanDocument21 pagesNilai Pendidikan Agama Hindu Dalam Lontar Siwa Sasana: Ida Bagus Putu Eka Suadnyana, I Putu Ariyasa DarmawanKomang gde TantraNo ratings yet

- Abu Dhabi and Dubai 2020 MFDocument1 pageAbu Dhabi and Dubai 2020 MFYaryna Telenko de OvelarNo ratings yet

- Experiment 3Document5 pagesExperiment 3Simran RaiNo ratings yet

- Associate Membership Candidate GuideDocument20 pagesAssociate Membership Candidate GuideKanagha Raj K100% (1)

- Griffith Health Assignment Format Presentation GuidelinesDocument3 pagesGriffith Health Assignment Format Presentation GuidelinesSiddiqa ZubairNo ratings yet

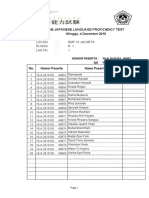

- Adoc - Pub - The Japanese Language Proficiency Test Minggu 4 deDocument24 pagesAdoc - Pub - The Japanese Language Proficiency Test Minggu 4 deSushi Ikan32No ratings yet

- Conflict ResolutionDocument37 pagesConflict Resolutionapi-491162111No ratings yet

- Peer Pressure Research 1Document30 pagesPeer Pressure Research 1Ezequiel GonzalezNo ratings yet

- Tutors Exams Fees 2022-23-31 May 23Document16 pagesTutors Exams Fees 2022-23-31 May 23Farooq AhmedNo ratings yet

- Direct Instruction Lesson PlanDocument2 pagesDirect Instruction Lesson Planmbatalis1894No ratings yet

- Week 7 SB - Tinuod Nga Managhigala (True Friends)Document20 pagesWeek 7 SB - Tinuod Nga Managhigala (True Friends)Abegail Caneda100% (1)

- Passage Picker Role SheetDocument2 pagesPassage Picker Role Sheetapi-294696867No ratings yet

- Creative Problem SolvingDocument40 pagesCreative Problem SolvingKhushbu SainiNo ratings yet

- Zones of Regulation Lesson Plan ExampleDocument5 pagesZones of Regulation Lesson Plan Exampleapi-533709691No ratings yet

- Lesson Plan DemoDocument3 pagesLesson Plan Democristine carig100% (1)

- Brochure JSSDocument27 pagesBrochure JSSjaya1816No ratings yet

- AMEE Guide No 14 Outcome Based Education Part 5 From Competency To Meta Competency A Model For The Specification of Learning OutcomesDocument8 pagesAMEE Guide No 14 Outcome Based Education Part 5 From Competency To Meta Competency A Model For The Specification of Learning OutcomesHamad AlshabiNo ratings yet

- Subject: General Chemistry Test,: Date: May 2015Document7 pagesSubject: General Chemistry Test,: Date: May 2015PHƯƠNG ĐẶNG YẾNNo ratings yet

- Araling Panlipunan Action Plan SY: 2021-2022Document3 pagesAraling Panlipunan Action Plan SY: 2021-2022MICHELE PEREZNo ratings yet

- 2012 Bhawuk India and The Culture of PeaceDocument102 pages2012 Bhawuk India and The Culture of PeaceDharm BhawukNo ratings yet

- Week 5 Spain & The ReconquistaDocument6 pagesWeek 5 Spain & The ReconquistaABigRedMonsterNo ratings yet

- Mathematics Art-Integrated ProjectDocument13 pagesMathematics Art-Integrated ProjectKeshav DeoraNo ratings yet

- Pursuit of HappinessDocument2 pagesPursuit of HappinessHarvey A LiNo ratings yet

- Translation - Transcription WorksheetDocument7 pagesTranslation - Transcription Worksheethanatabbal19No ratings yet

- 03 幼童華語讀本3 (英文版) PDFDocument48 pages03 幼童華語讀本3 (英文版) PDFDonna Chiang TouatiNo ratings yet

- Front Pages Front PageDocument9 pagesFront Pages Front PageDeepanshu GoyalNo ratings yet

- IapromptDocument2 pagesIapromptapi-265619443No ratings yet

- ENG 467 - Course OutlineDocument7 pagesENG 467 - Course OutlineAbdullah Khalid Turki AlmutairiNo ratings yet

- 19752-Article Text-42885-1-10-20191231Document9 pages19752-Article Text-42885-1-10-20191231Sunita Aprilia DewiNo ratings yet