Audio Engineering Society

Convention Paper 8872

Presented at the 134th Convention

2013 May 4–7 Rome, Italy

This Convention paper was selected based on a submitted abstract and 750-word precis that have been peer reviewed by at least two qualified anonymous reviewers. The complete manuscript was not peer reviewed. This convention paper has been reproduced from the author’s advance manuscript without editing, corrections, or consideration by the Review Board. The AES takes no responsibility for the contents. Additional papers may be obtained by sending request and remittance to Audio Engineering Society, 60 East 42nd Street, New York, New York 10165-2520, USA; also see www.aes.org. All rights reserved. Reproduction of this paper, or any portion thereof, is not permitted without direct permission from the Journal of the Audio Engineering Society.

Software Techniques for Good Practice in Audio and Music Research

Luís A. Figueira, Chris Cannam, and Mark D. Plumbley

Queen Mary University of London

Correspondence should be addressed to Luís A. Figueira (luis.figueira@eecs.qmul.ac.uk)

ABSTRACT

In this paper we discuss how software development can be improved in the audio and music research community by implementing tighter and more effective development feedback loops. We suggest first that researchers in an academic environment can benefit from the straightforward application of peer code review, even for ad-hoc research software; and second, that researchers should adopt automated software unit testing from the start of research projects. We discuss and illustrate how to adopt both code reviews and unit testing in a research environment. Finally, we observe that the use of a software version control system provides support for the foundations of both code reviews and automated unit tests. We therefore also propose that researchers should use version control with all their projects from the earliest stage.

1. INTRODUCTION

The need to develop software to support experimental work is almost universal in audio and music research. Many published methods comprise both written material and software implementations. Yet the software part of the work often goes unpublished and, even if published, it is not always clear whether it works correctly. In our earlier survey of the UK audio and music research community [1], of the 80% of respondents who said they developed software, some 40% said they took steps towards reproducibility, but of those only 35% (i.e. less than 15% of the total) had actually published any code. In the same survey, 51% of the respondents who reported developing software said that their code never left their own computer. While there are many examples of active researchers who do publish their software, informal observations suggest that the lack of publication of research software is widespread in many areas of audio research.

Research papers typically carry out validation of the
implemented model, evaluating how closely it cor- are used to facilitate short development cycles, with

responds with observations from reality. But any regular and quick software releases, making develop-

requirement to verify the implementation, to estab- ment as flexible as possible.

lish that the model described in the publication is

the same as that actually implemented, usually goes Even in software companies that do not adopt Agile

unmet. procedures, however, there are some techniques very

widely used in commercial development—including

We have previously argued [2] that a lack of quality in companies throughout the audio and music soft-

or confidence in the implementation may be a cause ware industry—that are not widely adopted in

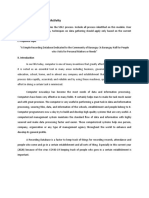

of failure to publish code. Similarly, studies of data academia. Fig. 1 sketches some of these techniques,

sharing in other fields have reported a relationship making a hierarchy of feedback mechanisms operat-

between willingness to share supporting data and ing at different speeds, from most immediate (cycle

quality of reporting in the paper [3]. A cause for a—compiler errors) to least (cycle g—reports from

lack of confidence with code may be the lack of for- users of published software).

mal training in software development for researchers

working in academic fields outside computer science.

A recent study [4] found a great deal of variation

in the level of understanding of standard software

engineering concepts by scientists, and found that

in developing and using scientific software, informal

self-study or learning from peers was commonplace.

The audio and music research community is par-

ticularly heterogeneous in terms of academic back-

grounds, with researchers having very different ori-

gins: as well as those from computing and engineer-

ing fields, many researchers in this community come

from backgrounds with no formal training in soft-

ware, such as music, dance or performance [1]. Such

a wide variety of backgrounds poses a big challenge,

given that many of these researchers do not have Fig. 1: Software development feedback cycles in in-

the skills or desire to become involved in traditional dustry

software development practice or in publication and

maintenance of code. Researchers are familiar with a similar set of feed-

In the remainder of this paper we will look into the back processes in the development of written publi-

software development practices of industry and dis- cations: collaborative review and editing with imme-

cuss how some of these can be adopted by the audio diate peer researchers and supervisors, formal peer-

and music research community. review cycles, and so on. These processes provide

new perspectives which help to produce quality re-

2. FEEDBACK CYCLES sults more quickly and help to remove potentially

serious errors.

In commercial software development, much of exist-

ing “best practice” consists of trying to shorten or 3. FEEDBACK CYCLES IN RESEARCH

simplify feedback cycles so that the developer learns

about mistakes as soon as possible. This is made The three most typical feedback cycles in research

explicit in incremental software development meth- software development are illustrated in Fig. 2: (a)

ods such as the Agile process [5], where there is a compiler errors, or immediate feedback from an in-

strong emphasis on individuals and interactions and teractive development environment; (f) using the

use of techniques such as pair programming, contin- code to run experiments, examining the results, and

uous integration and unit testing. These techniques judging whether or not they appear to make sense;

AES 134th Convention, Rome, Italy, 2013 May 4–7

Page 2 of 8

Figueira et al. Software Techniques for Good Practice in Audio and Music Research

and (g) obtaining feedback from users following publication of the software.

Fig. 2: Common feedback cycles in research software development

Compiler feedback, as depicted in Fig. 2, loop a, is highly immediate, but contains no information about program correctness other than basic syntax and type checking.

Manually running and inspecting the output, as represented in Fig. 2, loop f, is a fundamental part of any software development procedure, but it is hard to interpret the feedback it provides. If we don’t know the expected output of an experiment, we may be unable to distinguish between genuine experimental results and artefacts caused by errors in the code. We also obtain no feedback on aspects of the code not formally evaluated in the experimental design.

The last loop, represented in Fig. 2, loop g, has its own limitations. First, it requires that the researcher publishes the code, which as noted in Sec. 1 does not always occur, often for reasons relating to the lack of applicable feedback during development [2]. Secondly, the speed at which feedback can be obtained and the amount of feedback available are heavily dependent on publication times and uptake among users.

We believe that researchers can take better advantage of other available feedback mechanisms to give them more confidence in the quality of their code as quickly as possible. Specifically, we now introduce two of these techniques, peer code reviews and unit testing, and explain why and how they may profitably be applied even for small informal projects in an academic research environment.

4. CODE REVIEWS

A code review (Fig. 1, loop d) simply consists of having another person read one’s code before running any significant experiments with it. This is analogous to copy-editing a paper publication.

4.1. Code reviews can be quick and informal

Studies have shown that the first hour of code review is the most productive. In [6] and [7], Dunsmore et al. found that defects were found at a roughly constant rate through the first 60 minutes of inspection, then tailing off so that no further defects were discovered after the first 90 minutes. This result suggests that code reviews need not be too great a burden on time.

In [7], the same authors compared three methods of formal code review, which they identified as “checklist”, “systematic” (or “abstraction”) and “use-case” methods. The checklist method requires preparing and following a list of common sources of error in the language under inspection; the systematic method calls for the reviewer to reverse-engineer a specification for each function from its source code; and the use-case method examines the context in which code is used by tracing the flow of data, line by line, from likely inputs to expected outputs of each function.

The use-case method formalises the intuitive reading approach taken by a peer code reviewer familiar with the problem domain but not with the implementation: working from the likely inputs to each function, toward the expected outputs. Academic peers who are familiar with the field in which a program operates can apply this method informally by considering input data typical of the domain and tracking how it is transformed through the lines of code within a function. Although the use-case method did not perform as well as the checklist method in the Dunsmore et al. study [7], the difference was small and we would suggest it as a good starting point for an informal code review process to be applied within a research group.

4.2. Reviewing code contributes to learning

Effective code reviews require some ability to read code. Reviewers reporting a high level of code comprehension have been shown to find more defects [8],
and training developers in “how to read code” makes a substantial difference [9] to the number of errors found during code review.

This suggests that learning to read code is a valuable part of learning to write and debug software, a principle supported by [10] and others. Formal training in code comprehension may be of benefit to the researcher-developer who wishes to review code, but in addition to any formal training, the exercise of code review is itself a learning opportunity.

Although the studies cited here show improvements in review performance due to improved code comprehension and review methods, a high level of comprehension is not a prerequisite for taking part in reviews: in [8] it is noted that some reviewers who did not fully understand the code nonetheless found around half of the defects in it.

4.3. Reviews are effective

In a well known article, Fagan [11] shows that code reviews can remove 60–90% of errors before the first test is run [12]. Comparative studies of code review techniques and reviewers support the proposition that code review is generally effective, showing for example that “median” reviewers found 60–70% of defects [8] or that reviews exposed all but one defect in the sample code [7].

4.4. Recommendations

We therefore suggest code reviews as appropriate for academic software development for three reasons:

1. Code reviews can be carried out quickly and informally in a peer setting such as a research environment;

2. Carrying out reviews gives the reviewer and author practice in reading code and in writing code that can be read, both of which are valuable in improving software development ability;

3. Code reviews are more effective at finding errors than any other commonly used technique.

5. UNIT TESTING

Unit testing is a second technique, widely used in non-academic settings, for providing software developers with feedback (Fig. 1, loop c).

A unit test is a means of automating and repeating tests of the individual parts of a program. It consists of code that calls a function in the program, gives it some input, and tells the developer whether it returns the expected result. If all non-trivial functions have tests, and if those tests provide a reasonable coverage of both likely inputs and edge cases, this provides a baseline assurance that the components of a program work as expected, even if the program as a whole is complex or changing.

5.1. Testing research software can be hard

Much research software is written to perform novel work: potentially complex experiments with previously unknown outputs. How do we test such software, given that the authors are not able to predict its output?

Unit testing goes some way toward helping with this. For code to be testable through unit tests it must be written in sufficiently small functions, with clearly defined inputs and outputs, for each to be tested mechanically. At this level it should indeed be possible to calculate the expected output of a function, given sufficiently simple choices of input. Further, code written in this way is easier to read and reuse.

Unit tests written during software development also provide early sanity-checking for code. A program to read data from a file and calculate a result might fail, not only because the basic algorithm is wrong, but because the input is read wrongly or the code fails to deal with unusual cases such as short or empty datasets or missing values.

Although unit testing cannot guarantee that a program produces the correct results, ensuring that it is built from correct components is a useful step toward ensuring it actually implements the method described in the publication.

5.2. Unit tests help avoid regressions

In software development, a regression is an error introduced accidentally while modifying software: something which used to work but has since become broken, such as a new bug introduced while fixing something else.

Regressions are common: in [13] Robert Glass identified 8.5% of errors identified after delivery of software from a substantial commercial aerospace
project as regressions. Regressions may also be dangerous, because they change behaviour that may be relied upon or that has been already published; and regressions are dispiriting, because they are examples of unambiguously wrong behaviour that suggest the developer is making negative progress.

Although regression testing is especially important when working in a team, such errors also occur in solo projects, and regressions can also arise from factors outside a developer’s own control such as platform dependencies or changes between versions of a third-party library.

Automated tests are vital in avoiding regressions, because regression testing is simply too difficult and tedious to perform manually. It would require testing every use case by hand after every code change, which is not feasible. Unit tests can be run quickly after every build, and can identify with some precision the part of the code that has been broken by a change.

5.3. Test-driven development may be a useful analytical tool

A fundamental discussion around unit testing concerns when to write the tests.

Some developers, e.g. [14], advocate test-driven (or test-first) development, a process in which the unit tests are written before the rest of the code. The act of writing tests constructs a contract which the implementation is then written to satisfy. In principle, this ensures the code will be correct as it is first written.

Erdogmus et al. [15] found that those developers who adopted a test-driven strategy wrote more tests; that the developers who wrote more tests tended to be more productive; and that the quality of software produced was roughly proportional to the number of tests provided. A further survey [16] found that the quality of tests in a test-driven approach was often higher than that of those written after the implementation. However, we are unaware of any studies that show a direct link between the test-driven approach and higher quality or productivity, controlling for other factors.

Nonetheless, what makes test-driven development interesting for research software is the opportunity it affords to approach a problem from the opposite perspective to that usually used when simply writing code. Rather than begin by considering how to implement a solution and then think about how to test whether it works, the test-driven approach begins by considering what would be necessary to establish whether a solution was correct or not, before filling in the implementation. We believe this approach may be a mental tool worth considering when approaching hard-to-implement problems, regardless of whether it ultimately produces better code or not.

5.4. Recommendations

We propose unit testing as part of a practical, research-driven development environment for the following reasons:

1. Research software can be hard to perform high-level testing for: unit tests are a relatively easy way to obtain any assurance of correctness;

2. Unit testing is a good way to defend against regressions, a common class of error which can be hard to discover by manual testing;

3. A form of unit test development, test-driven development, can provide an additional analytical perspective when solving difficult problems.

6. VERSION CONTROL

A version control system is a tool that tracks the history of all files in a software project, logging changes and different versions, thus allowing researchers to easily compare different versions of the same file. Version control systems also help developers to share and merge changes, making projects easier to manage when several researchers are working together on them. Commonly used version control systems include git (http://git-scm.com/), Mercurial (http://mercurial.selenic.com/), and Subversion (http://subversion.apache.org/).

Besides making general management of code easier and more pleasant, a version control system provides a necessary foundation for effective code reviews and unit testing.

In allowing its user to see the differences between respective revisions of a project, a version control
system makes it possible to isolate a set of changes and be sure that a code review is covering everything that has been changed in a particular piece of work. It also provides a mechanism for sharing code between developers, such that they can be sure they are looking at the same version of the same software.

In the context of unit testing, especially for regression testing, a version control system provides the necessary support to compare two versions of code and identify the source of a change in behaviour.

7. RECOMMENDED ACTIONS FOR A RESEARCH GROUP

7.1. Code reviews

As noted above in Sec. 4, in a research environment we advocate the adoption of an informal style of “use-case” code review. Here the reviewer follows data paths through the code, from an imagined input to the result output, with the original developer available to explain what each step is for if necessary. This technique can be conducted without much preparation, because the reviewer does not need an in-depth knowledge of the specifics of the implementation and there is no requirement to make checklists or other aids prior to the review process.

Wilson et al. [17] propose the use of “pre-merge” code reviews, in which reviews are a requirement for code to be included into a shared repository. When using a modern distributed version control system, this could be set up either by committing to a user’s own branch and then merging to a main branch after review, or else by having the developer commit only on their local machine and get the code reviewed in person by another researcher before pushing to a central repository. In either case, it is important to review before performing experiments using the code.

7.2. Unit testing

Unit tests should be small and as simple as possible. The aim is to exercise the code in unexpected ways, focusing on tricky small cases rather than easy large ones. Studies show that areas of code where bugs are found are more likely to be fragile in general [18, 19] and bugs that have already been found are relatively likely to reappear as regressions, so more time should be focused on testing areas that have already proven problematic or where bugs have been found in “finished” code.

Be careful to avoid exact comparisons when testing outputs from inexact calculations or those using floating-point arithmetic. The error margin for a calculation is part of the test, just as the expected result is.

Because it can be hard to get started in writing unit tests, we propose adopting the simplest available unit test framework. In some cases, it may even be easiest to write unit tests without a framework: call a function; complain if the result is wrong. More usually the simplest method will be to adopt a standard test framework, such as Nose for Python (https://nose.readthedocs.org/en/latest/). Other authors [17] advise using a test framework for all unit testing.

7.3. Version control

A version control system should be used from the very beginning of any project, regardless of its complexity. Recent reports from other fields show encouraging trends in adoption of such systems in an academic setting [20]. In general, the right system to choose is whichever is available in the research group or in use by peer researchers. In the absence of a local standard, we recommend the use of a modern distributed version control system such as git or Mercurial.

Similar guidelines apply to the choice of hosting services. Several free solutions are available on-line, such as GitHub (https://github.com/) for git, or Bitbucket (https://bitbucket.org/) for both git and Mercurial projects with good support for private work. In addition to these generic hosting sites, there are subject-specific sites such as the SoundSoftware project site (http://code.soundsoftware.ac.uk/) for UK-based researchers in the audio and music field.

8. CONCLUSIONS

Throughout this paper we argue that code reviews and unit testing are valuable techniques that can and should be adopted in academic contexts. We introduce these techniques in the context of a set of feedback loops that give the researcher-developer timely information about problems in their code.
We have also given some advice and pointers on how to adopt such techniques in a research environment, such as:

• apply an informal style of “use-case” code review technique, calling on peer researchers to review code before using it in substantial experiments;

• adopt the simplest available unit testing regime, keep unit tests small and concise, and apply a standard test framework for unit tests on larger pieces of code;

• support these techniques with the use of a version control system from early in a project.

Techniques such as these rely on becoming comfortable with the idea that others will be looking at one’s research code. We believe that this is an important step, and one that makes documentation and unit testing easier, code reviews possible, and publication and peer review of software less scary.

As part of our ongoing UK-based SoundSoftware project (http://soundsoftware.ac.uk), we have developed several materials to add to this subject, such as handouts with practical guidance, video tutorials, and slides. You can freely access these on the project’s website (http://soundsoftware.ac.uk/resources).

9. ACKNOWLEDGMENTS

This work was supported by EPSRC Grant EP/H043101/1. Prof. Mark D. Plumbley is also supported by EPSRC Leadership Fellowship EP/G007144/1.

10. REFERENCES

[1] I. Damnjanovic, L. A. Figueira, C. Cannam, and M. D. Plumbley, “SoundSoftware.ac.uk Survey Report.” http://code.soundsoftware.ac.uk/documents/17, 2011.

[2] C. Cannam, L. A. Figueira, and M. D. Plumbley, “Sound software: Towards software reuse in audio and music research,” in Proceedings of the IEEE 2012 International Conference on Acoustics, Speech, and Signal Processing (ICASSP 2012), pp. 2745–2748, IEEE Signal Processing Society, March 2012.

[3] J. M. Wicherts, M. Bakker, and D. Molenaar, “Willingness to share research data is related to the strength of the evidence and the quality of reporting of statistical results,” PLoS ONE, vol. 6, p. e26828, 11 2011.

[4] J. E. Hannay, C. MacLeod, J. Singer, H. P. Langtangen, D. Pfahl, and G. Wilson, “How do scientists develop and use scientific software?,” in Proceedings of the 2009 ICSE Workshop on Software Engineering for Computational Science and Engineering, SECSE ’09, (Washington, DC, USA), pp. 1–8, IEEE Computer Society, 2009.

[5] K. Beck, M. Beedle, A. van Bennekum, A. Cockburn, W. Cunningham, M. Fowler, J. Grenning, J. Highsmith, A. Hunt, R. Jeffries, J. Kern, B. Marick, R. C. Martin, S. Mellor, K. Schwaber, J. Sutherland, and D. Thomas, “Manifesto for Agile Software Development,” 2001.

[6] A. Dunsmore, M. Roper, and M. Wood, “Object-oriented inspection in the face of delocalisation,” in Proceedings of the 22nd international conference on Software engineering, pp. 467–476, ACM, 2000.

[7] A. Dunsmore, M. Roper, and M. Wood, “Practical code inspection techniques for object-oriented systems: An experimental comparison,” IEEE Software, vol. 20, pp. 21–29, 7–8 2003.

[8] A. Dunsmore, M. Roper, and M. Wood, “The role of comprehension in software inspection,” Journal of Systems and Software, vol. 52, pp. 121–129, 2000.

[9] S. Rifkin and L. Deimel, “Applying program comprehension techniques to improve software inspections,” in Proceedings of the 19th Annual NASA Software Engineering Laboratory Workshop, pp. 3–8, 1994.
[10] L. E. Deimel and J. F. Naveda, “Reading computer programs: Instructor’s guide and exercises,” Tech. Rep. CMU/SEI-90-EM-3, Software Engineering Institute, Carnegie Mellon University, August 1990.

[11] M. E. Fagan, “Design and code inspections to reduce errors in program development,” IBM Systems Journal, vol. 15, no. 3, pp. 182–211, 1976.

[12] R. L. Glass, “Inspections - some surprising findings,” Commun. ACM, vol. 42, no. 4, pp. 17–19, 1999.

[13] R. L. Glass, “Persistent software errors,” IEEE Trans. Software Eng., vol. 7, no. 2, pp. 162–168, 1981.

[14] K. Beck, Test-Driven Development By Example, vol. 2 of The Addison-Wesley Signature Series. Addison-Wesley, 2003.

[15] H. Erdogmus, M. Morisio, and M. Torchiano, “On the effectiveness of the test-first approach to programming,” IEEE Transactions on Software Engineering, vol. 31, pp. 226–237, 2005.

[16] B. Turhan, L. Layman, M. Diep, F. Shull, and H. Erdogmus, “How effective is test driven development?,” in Making Software: What Really Works, and Why We Believe It (G. Wilson and A. Oram, eds.), O’Reilly Press, 2010.

[17] G. Wilson, D. A. Aruliah, C. T. Brown, N. P. C. Hong, M. Davis, R. T. Guy, S. H. Haddock, K. Huff, I. M. Mitchell, M. D. Plumbley, B. Waugh, E. P. White, and P. Wilson, “Best practices for scientific computing,” Computing Research Repository (CoRR), vol. abs/1210.0530, 2012.

[18] A. Endres, “An analysis of errors and their causes in system programs,” in Proceedings of the international conference on Reliable software, (New York, NY, USA), pp. 327–336, ACM, 1975.

[19] B. W. Boehm and V. R. Basili, “Software defect reduction top 10 list,” IEEE Computer, vol. 34, no. 1, pp. 135–137, 2001.

[20] K. Ram, “git can facilitate greater reproducibility and increased transparency in science,” Source Code for Biology and Medicine, 2013.
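As an illustration of the unit-testing recommendations in Sec. 7.2 (small tests, tricky small cases, an explicit error margin, and no framework at all), the following Python sketch may be helpful. It is only one possible reading of that advice: the function rms_level, the helper check_close and the tolerance of 1e-9 are hypothetical choices made for this example and do not come from the paper or from any particular library.

```python
import math


def rms_level(samples):
    """Root-mean-square level of a sequence of audio samples.

    Hypothetical function under test. An empty input is defined
    here (as an assumption) to have a level of 0.0.
    """
    if len(samples) == 0:
        return 0.0
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def check_close(actual, expected, tolerance=1e-9):
    """Complain if the result lies outside the stated error margin."""
    if not math.isclose(actual, expected, rel_tol=0.0, abs_tol=tolerance):
        raise AssertionError(
            f"expected {expected} +/- {tolerance}, got {actual}"
        )


def test_constant_signal():
    # A constant signal of amplitude 0.5 has an RMS level of 0.5.
    check_close(rms_level([0.5, 0.5, 0.5, 0.5]), 0.5)


def test_sign_is_ignored():
    # Alternating +1/-1 has the same level as a constant 1.
    check_close(rms_level([1.0, -1.0, 1.0, -1.0]), 1.0)


def test_inexact_result():
    # sqrt(1/2) is not exactly representable, so compare within a
    # margin instead of using ==.
    check_close(rms_level([1.0, 0.0]), 1.0 / math.sqrt(2.0))


def test_empty_input():
    # Tricky small case: an empty dataset must not divide by zero.
    check_close(rms_level([]), 0.0)


def run_all_tests():
    """Call every test; any failure raises AssertionError."""
    tests = [
        test_constant_signal,
        test_sign_is_ignored,
        test_inexact_result,
        test_empty_input,
    ]
    for test in tests:
        test()
    return len(tests)


if __name__ == "__main__":
    print(run_all_tests(), "tests passed")
```

Running the file directly executes every test and stops at the first failure. Because the test functions follow the common test_ naming pattern, the same file could later be handed to a standard Python test runner without change, and rerunning it after each code change gives a simple guard against regressions.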


- Soft Tetsing PaperDocument24 pagesSoft Tetsing PaperJaved KhanNo ratings yet

- Proposal For A Scientific Software Lifecycle Model: November 2017Document6 pagesProposal For A Scientific Software Lifecycle Model: November 2017Ue ucuNo ratings yet

- Why We Need New Software Testing Technologies: Carol Oliver, PH.DDocument22 pagesWhy We Need New Software Testing Technologies: Carol Oliver, PH.DNaresh Kumar PegadaNo ratings yet

- A Software Repository and Toolset For Empirical ResearchDocument16 pagesA Software Repository and Toolset For Empirical ResearchArthur MolnarNo ratings yet

- An Innovative Approach To Investigate VaDocument9 pagesAn Innovative Approach To Investigate VaAlvaro Daniel Berrios ZunigaNo ratings yet

- All Units NotesDocument104 pagesAll Units Notespiserla.srinivas2201No ratings yet

- Generalizing Agile Software Development Life CycleDocument6 pagesGeneralizing Agile Software Development Life CycleJaipalReddyNo ratings yet

- MSR 2017 BDocument12 pagesMSR 2017 BОлександр СамойленкоNo ratings yet

- Software Process: A RoadmapDocument8 pagesSoftware Process: A RoadmapVarsha DwivediNo ratings yet

- Test Case Review Processes in Software TestingDocument6 pagesTest Case Review Processes in Software TestingLavanya 18MIS0182No ratings yet

- A Comparative Study of The Effectiveness of Checklist-Based and Ad Hoc Code Inspection Reading Techniques in Paper-And Tool-Based Inspection Artifact EnvironmentsDocument12 pagesA Comparative Study of The Effectiveness of Checklist-Based and Ad Hoc Code Inspection Reading Techniques in Paper-And Tool-Based Inspection Artifact EnvironmentsJournal of Computer Science and EngineeringNo ratings yet

- A Methodology With Quality Tools To Support Post Implementation ReviewsDocument14 pagesA Methodology With Quality Tools To Support Post Implementation ReviewszeraNo ratings yet

- What Makes Good Research in SEDocument10 pagesWhat Makes Good Research in SEVanathi Balasubramanian100% (1)

- 2 - Advances in Software InspectionsDocument25 pages2 - Advances in Software InspectionsAsheberNo ratings yet

- 1st Part of Testing NotesDocument16 pages1st Part of Testing Noteskishore234No ratings yet

- Analysis of Efficiency of Automated Software Testing Methods Direction of ResearchDocument5 pagesAnalysis of Efficiency of Automated Software Testing Methods Direction of ResearchJuliana AbreuNo ratings yet

- Generating A Useful Theory of Software EngineeringDocument4 pagesGenerating A Useful Theory of Software EngineeringHIMYM FangirlNo ratings yet

- Introduction To Software Engineering.Document31 pagesIntroduction To Software Engineering.AmardeepSinghNo ratings yet

- A Review of Security Integration Technique in Agile Software Development PDFDocument20 pagesA Review of Security Integration Technique in Agile Software Development PDFGilmer G. Valderrama HerreraNo ratings yet

- ArtikelDocument5 pagesArtikelDavid WongNo ratings yet

- Generalizing Agile Software Development Life CycleDocument5 pagesGeneralizing Agile Software Development Life CycleInternational Journal on Computer Science and EngineeringNo ratings yet

- Code Smells and Detection Techniques: A Survey: Conference PaperDocument7 pagesCode Smells and Detection Techniques: A Survey: Conference PaperAzhar ZafarNo ratings yet

- Software Quality Assessment of Open Source SoftwareDocument13 pagesSoftware Quality Assessment of Open Source SoftwareZafr O'ConnellNo ratings yet

- A Review of Software Fault Detection and Correction Process, Models and TechniquesDocument8 pagesA Review of Software Fault Detection and Correction Process, Models and TechniquesDr Muhamma Imran BabarNo ratings yet

- Software Engineering NotesDocument61 pagesSoftware Engineering NotesAnonymous L7XrxpeI1z100% (3)

- Research Design and MethodologyDocument13 pagesResearch Design and MethodologyJepoy EnesioNo ratings yet

- Investigating The Role of Code Smells in Preventive MaintenanceDocument23 pagesInvestigating The Role of Code Smells in Preventive MaintenanceJunaidNo ratings yet

- Ijcttjournal V1i2p8Document5 pagesIjcttjournal V1i2p8surendiran123No ratings yet

- Industrial Applications of Formal Methods to Model, Design and Analyze Computer SystemsFrom EverandIndustrial Applications of Formal Methods to Model, Design and Analyze Computer SystemsNo ratings yet

- BTech CSE-software EngineeringDocument83 pagesBTech CSE-software EngineeringFaraz khanNo ratings yet

- A Walk Through of Software Testing TechniquesDocument6 pagesA Walk Through of Software Testing Techniquesraquel oliveiraNo ratings yet

- Chapter 3Document16 pagesChapter 3Brook MbanjeNo ratings yet

- A Systematic Mapping Review of Usability Evaluation Methods For Software Development ProcessDocument14 pagesA Systematic Mapping Review of Usability Evaluation Methods For Software Development ProcessLeslie MestasNo ratings yet

- Programming For Software EngineersDocument11 pagesProgramming For Software EngineersAakash SinghNo ratings yet
