Online Job-Migration for Reducing

the Electricity Bill in the Cloud

[Extended technical report provided for review. Please do not redistribute. For the final version of the paper, we will produce and publish

a publicly-available technical report with these extensions.]

Niv Buchbinder

Microsoft Research, New England

Navendu Jain

Microsoft Research, Redmond

Ishai Menache

MIT

Abstract: Energy costs are becoming the fastest-growing

element in data center operation costs. One basic approach for

reducing these costs is to exploit the variation of electricity prices

both temporally and geographically by moving computation to

data-centers in which energy is available at a cheaper price.

However, injudicious job migration between data centers might

increase the overall operation cost due to the bandwidth costs

of transferring the application state and data over the wide-

area network. To address this challenge, we propose novel online

algorithms for migrating jobs between data centers, which handle

the fundamental tradeoff between energy and bandwidth costs.

A distinctive feature of our algorithms is that they consider not

only the current availability and cost of (possibly multiple) energy

sources, but also the future variability and uncertainty thereof.

Using the framework of competitive-analysis, we establish worst-

case performance bounds for our basic online algorithm. We

then propose a practical, easy-to-implement version of the basic

algorithm, and evaluate it through simulations on real electricity

pricing and job workload data. Our simulation results indicate

that our algorithm outperforms plausible greedy algorithms that

ignore future outcomes. Notably, the actual performance of our

approach is significantly better than the theoretical guarantees,

typically only 4%-6% off the optimal offline solution.

I. INTRODUCTION

Energy costs are becoming a substantial factor in the

operation costs of modern data centers (DCs). It is expected

that by 2014, the infrastructure and energy cost (I&E) will

become 75% of the total operation cost, while information

technology (IT) expenses, i.e., the equipment itself, will induce

only 25% of the cost (compared with 20% I&E and 80% IT

in the early 90s) [10]. Moreover, the operating cost per server

will be double its capital cost (even over its amortized lifespan

of 3-5 years). According to an EPA report, servers and data

centers consumed 61 billion kilowatt-hours at a cost of $4.5 billion

in 2007, with demand expected to double by 2011 [2]. In

addition to the direct economic consequences of the above

factors, the data-center operation cost may further increase due

to carbon taxes which are being imposed by several countries

to reduce the environmental impact of electricity generation

and consumption [3, 4].

To reduce the energy costs, research efforts are being made

in two main directions:

1) Combine traditional electricity-grid power with renew-

able energy sources such as solar, wave, tidal, and wind,

motivated by long-term cost reductions and growing

unreliability of the electric grid [17, 18].

2) Exploit the temporal and geographical variation of elec-

tricity prices. As demonstrated in Figure 1, energy prices

can vary significantly over time and location (even

within 15 minute windows [19, 20]). A natural objective

is to shift the computational load to data centers where

the current energy prices are the cheapest.

In this paper, we combine the above two directions, by

suggesting efficient algorithms for dynamic placement of applications

(or jobs) in data-centers, based on their energy cost

and availability. Note that to perform dynamic placement (or

migration) of jobs in data-centers, we need to account for the

bandwidth costs associated with moving the application state

and data across data centers. In this context, two fundamentally

different job execution models can be identified.

The first model is the replicated execution model, suitable

for interactive applications, where at each data center, persis-

tent application data is replicated and multiple application in-

stances may be kept active to process user requests. This model

focuses on long-running, stateless services (e.g., web search

engines, chat applications and three-tier web applications) that

either do not need the user data to be copied when a process is

migrated, or can easily recreate the dynamic state (e.g., using

a client's cookie). To perform job migration, the operator can

send a control signal to stop an application instance at one

location and start/resume a new instance at another location.

The second model, which we consider in this paper, is the

live migration model, suitable for batch jobs (e.g., MapRe-

duce [15]) and stateful services (e.g., online gaming and

cloud hosting of applications [1, 23]), where the application

state and data need to be migrated to the destination data

center. The memory footprint under this model depends on

the underlying application, and can range from a few KBs

up to hundreds of MBs [14]. Here, the bandwidth costs are

directly proportional to the number of bytes that need to be

sent between data centers in the migration process. We note

that there are known migration techniques (e.g., pipelining and

incremental snapshots) that can minimize process interruption

latency during the migration process. These techniques allow

for transparent migration of application instances between data

centers (without impacting the end-user) [14].

[Figure 1: three stacked panels, each plotting electricity price ($/MWh) against time (hours, 0 to 700).]

Fig. 1: Electricity pricing trends across three US locations (CA (top), TX (middle), and MA (bottom)) over a month in 2009. The graphs indicate significant spatio-temporal variation in electricity prices.

In this paper, we propose online algorithms for the live

migration model within a cloud of multiple data centers. The

input for these algorithms includes the current energy prices

and power availability of different data centers. The algorithms

accordingly determine which jobs should be migrated and

to which hosting location, while taking into account the

bandwidth costs and the current job-allocation. Our model

accommodates data centers which are possibly co-located

with multiple energy sources, e.g., renewable sources. More

generally, we assume that the total energy cost in each data

center is a (possibly) non-linear function of the utilization level.

Energy-cost reduction would thus depend on the ability to

exploit the instantaneously cheaper energy sources to their

full availability. A distinctive feature of our approach is

that we do not settle for an intuitive greedy approach to

optimize the overall energy and bandwidth costs, but also take into

account future deviations in energy-price and availability. We

emphasize that our electricity-pricing model is distribution-

free (i.e., we do not make probabilistic assumptions on future

electricity prices), as prices can often vary unexpectedly.

Related work. Motivated by the notable spatio-temporal vari-

ation in electricity prices, the networking research community

has recently identified the potential of reducing the cost of

data-center operation. Qureshi et al. [19] consider the replicated

execution model and show that judicious placement of

computation may save millions of dollars on the total operation

costs of data centers. They describe greedy heuristics for that

purpose and evaluate them on historical electricity prices and

network traffic data. Rao et al. [20] consider load-balancing of

delay-sensitive applications with the objective of minimizing

the current energy cost subject to delay constraints. They use

linear programming techniques and report cost savings through

simulations with real data.

Our paper differs significantly from the above references in

several aspects. First, we incorporate the bandwidth cost into

the optimization problem, as it might be a significant factor

under the live migration model. Second, we consider a general

model of energy availability and cost which encompasses the

possibility of multiple energy sources per data-center. Third,

and most importantly, our algorithms take into account not

only the immediate savings of job migration, but also the

future consequences thereof, thereby accounting for future

uncertainty in the cost parameters.

Our solution method lies within the framework of online

computation [11], which has been successfully used for solv-

ing problems with uncertainty, not only in communication

networks, but also in finance, mechanism design and other

fields. Specifically, we employ online primal-dual techniques

[13], including recent ideas from [7, 8]. Our problem formu-

lation generalizes the two well-studied online problems of

weighted paging/caching [5, 21] and Metrical Task System

(MTS) [9, 12, 16]. Our job migration problem includes both

of these problems as special cases and extends their scope,

thereby requiring new algorithmic solutions; see Appendix A

for a detailed discussion of these problems and their relation

to our framework. Since the problem is a generalization of the

MTS problem, we get from [12] a lower bound of $\Omega(\log n)$ on

the competitiveness of any online algorithm for our problem

(including randomized algorithms), where n is the number of

data centers. A tighter lower bound is currently out of our

reach and a subject for future examination.

Contribution and Paper Organization. In this paper, we

show, both analytically and empirically, that existing cloud

computing infrastructure can combine dynamic application

placement, based on energy cost and availability and bandwidth costs, for significant economic gains. Specifically,

• We design a basic online algorithm which is $O(\log H_0)$-competitive (compared to the optimal offline solution), where $H_0$ is the total number of servers in the cloud.

• To reduce the extensive computation required by the basic algorithm in each iteration, we construct a computationally efficient version of the basic algorithm, which can be easily implemented in practice.

• We evaluate our algorithms using real electricity pricing data obtained from 30 US locations over three years, and job-demand data for data-centers, and show significant improvements over greedy-optimization algorithms. Overall, the performance of our online algorithm is typically only 5% off the optimal solution.

The rest of the paper is organized as follows. In Section II

we introduce the model and formulate the online optimization

problem. The basic online job-migration algorithm is presented

and analyzed in Section III. In Section IV we design an

efficient version of the basic algorithm, which is then evaluated

through real-data simulations in Section V. We conclude in

Section VI.

II. THE MODEL

In this section we formally define the job migration problem.

In Section II-A we describe the elements of the problem.

In Section II-B we formalize the online optimization problem,

the solution of which is the main objective of this paper.


A. Preliminaries

We consider a large computing facility (henceforth referred to as a cloud, for simplicity), consisting of $n$ data centers (DCs). Each DC $i \in \{1, \dots, n\}$ contains a set $\mathcal{H}_i = \{1, \dots, H_i\}$ of $H_i$ servers which can be activated simultaneously. We denote by $H_0 = \sum_{i=1}^{n} H_i$ the total number of servers in the cloud, and often refer to $H_0$ as the capacity of the cloud. All servers in the cloud are assumed to have equal resources (in terms of CPU, memory, etc.).

A basic assumption of our model is that energy (or electricity) costs may vary in time, yet remain fixed within time intervals of a fixed length (say 15 minutes, or one hour). Accordingly, we shall consider a discrete time system, where each time-step (or time) $t \in \{1, \dots, T\}$ represents a different time interval of that length. We denote by $B_t$ the total job-load at time $t$ measured in server units, namely the total number of servers which need to be active at time $t$. For simplicity, we assume that each job requires a single server, thus $B_t$ is also the total number of jobs at time $t$. We note, however, that the latter assumption can be relaxed by considering a single job per processor, similar to the virtualization setup. In Section III we consider a simplified scenario where $B_t = B$, namely the job-load in the cloud is fixed. In Section IV we address the case where $B_t$ changes in time, which corresponds to job arrivals to and departures from the cloud.

At each time t, the control decision is to assign jobs to data

centers, given the current electricity and bandwidth costs. We

first describe these costs and then formulate our optimization

problem.

Energy costs. We assume that the energy cost at every data center is a function of the number of servers that are being utilized. Let $y_{i,t}$ be the number of servers that are utilized in data center $i$ at time $t$. The (total) energy cost is given by $C_{it}(y_{i,t})$ (measured in dollars per hour). For convenience, we shall consider in the sequel the interpolation of this function to the non-negative reals, which we also denote by $C_{it} : \mathbb{R}_+ \to \mathbb{R}_+$. We assume throughout that $C_{it}(y_{i,t})$ is a non-negative, (weakly) convex increasing function. Note that the function itself can change with $t$, which allows for time variation in energy prices. We emphasize that we do not make any probabilistic assumptions on $C_{it}(\cdot)$, i.e., this function can change arbitrarily over time.

The convexity assumption above means that the marginal energy cost is non-decreasing with the number of servers being utilized. We next briefly motivate this assumption through possible cost scenarios. The simplest example is the linear model, in which the total energy cost is linearly proportional to the number of servers utilized. Assuming that servers not utilized are switched off and consume zero power, we have $C_{it}(y_{i,t}) = c_{i,t}\, y_{i,t}$, where $c_{i,t}$ is the cost of using a single server at time $t$. A more complicated cost model is the following. The marginal energy cost remains constant while the utilization in the DC is not too high (say, below around 90 percent). Above some utilization level, the energy cost increases sharply. Practical reasons for such an increase may include the usage of backup resources (processors or energy sources), enhanced cooling requirements, etc.

[Figure 2: plot of electricity cost (vertical axis) against servers utilized (horizontal axis).]

Fig. 2: A possible electricity cost model for the case of multiple energy sources connected to a data center. The marginal electricity-cost might rise sharply when starting to utilize a more expensive energy source.

The last model we discuss in this context is the model where each data center $i$ is connected to multiple energy sources (e.g., wind, solar, grid, etc.); see Figure 2. Under this model, it is plausible to assume that marginal electricity prices would strictly increase whenever a more expensive energy resource has to be utilized (e.g., when solar energy is not sufficient and the grid has to be utilized as well). Formally, such a model can be described as follows. Denote by $P^k_{i,t}$ the availability of energy source $k$ at data-center $i$ (measured as the number of servers that can be supported by energy source $k$), and by $c^k_{i,t}$ the cost of activating a server at data-center $i$ using energy source $k$. Then up to $P^k_{i,t}$ servers can be utilized at a price of $c^k_{i,t}$ per server. Noting that cheaper energy sources will always be preferred, the total energy cost is readily seen to be a convex increasing function.
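The multi-source model can be illustrated with a minimal sketch. The function name `energy_cost` and the numeric availabilities and prices below are hypothetical and chosen only for illustration; the sketch fills the cheapest source first and returns an infinite cost when the available energy cannot support the requested number of servers.

```python
def energy_cost(servers_used, sources):
    """Total cost of running `servers_used` servers in one data center.

    `sources` is a list of (availability, price) pairs, i.e. (P^k, c^k)
    for a fixed data center and time step; cheaper sources are used first.
    """
    remaining = servers_used
    total = 0.0
    for avail, price in sorted(sources, key=lambda s: s[1]):
        take = min(remaining, avail)
        total += take * price
        remaining -= take
        if remaining <= 0:
            break
    if remaining > 0:
        return float("inf")  # not enough energy for this many servers
    return total


# Hypothetical sources: solar (30 servers), wind (40 servers), grid (100 servers).
sources = [(40, 0.10), (30, 0.02), (100, 0.25)]
costs = [energy_cost(y, sources) for y in range(0, 171)]
# Marginal cost of each additional server; convexity means it never decreases.
marginals = [hi - lo for lo, hi in zip(costs, costs[1:])]
```

The list `marginals` steps through the prices 0.02, 0.10 and 0.25 in increasing order, which is the non-decreasing marginal cost that the convexity assumption requires.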

Bandwidth Costs. The migration of jobs between data centers induces bandwidth costs. In practice, bandwidth fees are paid both for outgoing traffic and incoming traffic. Accordingly, we assume the following cost model. For every data center $i$, we denote by $d_{i,out}$ the bandwidth cost of transferring a job out of data-center $i$, and by $d_{i,in}$ the bandwidth cost of migrating a job into this data center.¹ Thus, the overall cost of migrating a job from data center $i$ to data center $j$ is given by $d_{i,out} + d_{j,in}$; see Figure 3 for an illustration. We note that there are also bandwidth costs associated with the arrival of jobs into the cloud (e.g., from a central dispatcher) and leaving the cloud (in case of a job departure). However, these costs are constant and do not depend on the migration control, and are thus ignored in our formulation.

Our goal is to minimize the total operation cost in the cloud, which is the sum of the energy costs at the data centers and the bandwidth costs of migrating jobs between data centers. It is assumed that the job migration time is negligible with regard to the time interval in which the energy costs are fixed. This means that migrated jobs at time $t$ incur the energy prices at their new data center, starting from time $t$.

¹ In practice, bandwidth charging is based on computing the 95-th percentile of incoming/outgoing bandwidth over sample periods of 5 minutes. In many scenarios, $d_{i,in}$ and $d_{i,out}$ could be the same.

[Figure 3: diagram of two data centers $i$ and $k$, with the transfer between them labeled $d_{i,out}$ and $d_{k,in}$.]

Fig. 3: An illustration of our model. Moving data from any data-center $i$ to any data-center $k$ costs $d_{i,out}$ and $d_{k,in}$ per unit.

We refer to the above minimization problem as the migration problem. If all future energy costs were known in advance, then the problem could be solved optimally as a standard convex program. However, our basic assumption is that electricity prices change in an unpredictable manner. We therefore tackle the migration problem as an online optimization problem. The problem is formulated below.

B. Problem Formulation

In this section we formalize the migration problem. We shall consider a slightly different version of the original problem, which is algorithmically easier to handle. Nonetheless, the solution of the modified version leads to proven performance guarantees for the original problem. Recall that we consider the case of fixed job-load, namely $B_t = B$ for every $t$. We argue that it is possible to define a modified model in which we charge a migration cost $d_i = d_{i,in} + d_{i,out}$ whenever the algorithm migrates a job out of data center $i$, and do not charge for migrating jobs into the data center. The following lemma states that this bandwidth-cost model is equivalent to the original bandwidth-cost model up to an additive constant.

Lemma 2.1: The original bandwidth-cost model and the modified one, in which we charge $d_i$ for migrating jobs out of data center $i$, are equivalent up to additive constant factors. In particular, a $c$-competitive algorithm for the former results in a $c$-competitive algorithm for the latter.

A detailed proof of this lemma can be found in Appendix B. We note that the same result holds for the model which charges $d_i$ for migrating jobs into the data center, and nothing for migrating jobs out of the data center.
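To see informally why the two charging schemes differ only by an additive constant, consider the path of a single job. The sketch below is an illustration and not the proof from Appendix B; the function names and the cost values are hypothetical. Along a path, the in-costs telescope, so the difference between the two totals depends only on the first and last data centers.

```python
def original_cost(moves, d_out, d_in):
    """Original model: pay d_out at the source and d_in at the destination."""
    return sum(d_out[i] + d_in[j] for i, j in moves)


def modified_cost(moves, d_out, d_in):
    """Modified model: pay d_i = d_in[i] + d_out[i] when leaving data center i."""
    return sum(d_out[i] + d_in[i] for i, _ in moves)


# Hypothetical per-data-center bandwidth costs.
d_out = [1, 2, 3]
d_in = [4, 5, 6]
# One job that starts in data center 0 and follows the path 0 -> 1 -> 2 -> 0 -> 1.
moves = [(0, 1), (1, 2), (2, 0), (0, 1)]

gap = original_cost(moves, d_out, d_in) - modified_cost(moves, d_out, d_in)
```

Here `gap` equals `d_in[1] - d_in[0]`, the in-cost of the final location minus that of the initial one, regardless of how many migrations happen in between.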

Using Lemma 2.1, we first formulate a convex program whose solution provides a lower bound on the optimal solution for the (original) migration problem. Let $z_{i,t}$ be the number of jobs that are migrated from data center $i$ at time $t$. This variable is multiplied by $d_i$ for the respective bandwidth cost. The optimization problem is defined as

$$\min \; \sum_{i=1}^{n} \sum_{t=1}^{T} d_i z_{i,t} + \sum_{i=1}^{n} \sum_{t=1}^{T} C_{it}(y_{i,t}),$$

subject to the following constraints: $\sum_{i=1}^{n} y_{i,t} \ge B$ for every $t$ (i.e., meet job demand); $z_{i,t} \ge y_{i,t-1} - y_{i,t}$ for every $i, t$ (i.e., $z_{i,t}$ reflects the number of jobs that left data center $i$ at time $t$); $y_{i,t} \le H_i$ for every $i, t$ (i.e., meet capacity constraint); $z_{i,t}, y_{i,t} \in \{0, 1, \dots, H_i\}$ for every $i, t$. We shall consider a relaxation of the above optimization problem, where the last constraint is replaced with $z_{i,t}, y_{i,t} \ge 0$ for every $i, t$. A solution to the relaxed problem is by definition a lower bound on the value of the original optimization problem.
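The objective and constraints of this program can be made concrete with a small evaluator. This is a sketch under stated assumptions: the name `total_cost`, the explicit initial allocation `y0`, the linear cost function, and the numeric instance are all hypothetical. For a given allocation it sets $z_{i,t}$ to the smallest feasible value, $\max(0, y_{i,t-1} - y_{i,t})$.

```python
def total_cost(y0, y, d, C, B, H):
    """Bandwidth plus energy cost of a schedule.

    y0[i]  : allocation before the first step (assumed given, incurs no cost)
    y[t][i]: servers used in data center i at step t
    d[i]   : charge per job migrated out of data center i
    C      : function (i, t, servers) -> energy cost
    """
    prev, total = y0, 0.0
    for t, row in enumerate(y):
        if sum(row) < B:
            raise ValueError(f"job demand not met at step {t}")
        for i, used in enumerate(row):
            if not 0 <= used <= H[i]:
                raise ValueError(f"capacity violated in data center {i}")
            z = max(0.0, prev[i] - used)  # jobs that left data center i
            total += d[i] * z + C(i, t, used)
        prev = row
    return total


# Hypothetical instance: two data centers whose prices swap between two steps.
prices = [[1.0, 3.0], [3.0, 1.0]]


def linear_cost(i, t, used):
    return prices[t][i] * used


H, B, d, y0 = [2, 2], 2, [1.0, 1.5], [2, 0]
stay = total_cost(y0, [[2, 0], [2, 0]], d, linear_cost, B, H)
move = total_cost(y0, [[2, 0], [0, 2]], d, linear_cost, B, H)
```

In this instance migrating both jobs at the second step costs 6.0 in total, against 8.0 for staying put, because the bandwidth charge is smaller than the energy saving.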

We next replace the above described convex program (with the relaxation) by an LP that will be more convenient to work with. Instead of representing the (total) energy cost through the function $C_{it}(y_{i,t})$, we consider hereafter the following equivalent cost model. For every time $t$, the cost of using the $j$-th server at data center $i$ is given by $c_{i,j,t}$, where $c_{i,j,t} \in [0, \infty]$ is increasing in $j$ ($1 \le j \le H_i$). We assume that $c_{i,j,t}$ may change arbitrarily over time (while keeping monotonicity in $j$ as described above). Note that we allow $c_{i,j,t}$ to be unbounded, to account for the case where the total energy availability in the data center is not sufficient to run all of its servers.

Let $0 \le y_{i,j,t} \le 1$ be the utilization level of the $j$-th server of data-center $i$ (we allow for partial utilization, yet note that at most a single server will be partially utilized in our solution). Accordingly, the energy cost of using this server at time $t$ is given by $c_{i,j,t}\, y_{i,j,t}$. Define $z_{i,j,t}$ to be the workload that is migrated from the $j$-th server of data center $i$ to a different location. This variable is multiplied by $d_i$ for the respective bandwidth cost. The optimization problem is defined as follows.

$$(P): \quad \min \; \sum_{i=1}^{n} \sum_{j=1}^{H_i} \sum_{t=1}^{T} d_i z_{i,j,t} + \sum_{i=1}^{n} \sum_{j=1}^{H_i} \sum_{t=1}^{T} c_{i,j,t}\, y_{i,j,t} \tag{1}$$

$$\text{subject to} \quad \forall t: \; \sum_{i=1}^{n} \sum_{j=1}^{H_i} y_{i,j,t} \ge B \tag{2}$$

$$\forall i, j, t: \; z_{i,j,t} \ge y_{i,j,t-1} - y_{i,j,t} \tag{3}$$

$$\forall i, j, t: \; y_{i,j,t} \le 1 \tag{4}$$

$$\forall i, j, t: \; z_{i,j,t}, y_{i,j,t} \ge 0 \tag{5}$$

Observe that the first term in the objective function corresponds to the total bandwidth cost, whereas the second term corresponds to the total energy cost.

One may notice that the above problem formulation might pay the bandwidth cost $d_i$ for migrating data within each data center $i$, which is clearly not consistent with our model assumptions. Nevertheless, since the energy costs $c_{i,j,t}$ in each data center $i$ are non-decreasing in $j$ for every $t$, an optimal solution will never prefer servers with higher indexes over lower-index servers. Consequently, jobs will not be migrated within the data-center. Thus, solving (P) is essentially equivalent to solving the original convex problem. This observation, together with Lemma 2.1, allows us to consider the optimization problem (P). More formally,

Lemma 2.2: The value of (P) is a lower bound on the value of the optimal solution to the migration problem.

We refer to the optimization problem (1) as our primal problem (or just (P)). The dual of (P) is given by

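$$(D): \quad \max \; \sum_{t=1}^{T} B a_t - \sum_{i=1}^{n} \sum_{j=1}^{H_i} \sum_{t=1}^{T} s_{i,j,t}, \tag{6}$$

$$\text{s.t.} \quad \forall i, j, t: \; -c_{i,j,t} + a_t + b_{i,j,t} - b_{i,j,t+1} - s_{i,j,t} \le 0, \tag{7}$$

$$\forall i, j, t: \; b_{i,j,t} \le d_i, \tag{8}$$

$$\forall i, j, t: \; b_{i,j,t}, s_{i,j,t} \ge 0. \tag{9}$$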

By Lemma 2.2 and the weak duality theorem (see, e.g., [22]), an algorithm that is $c$-competitive with respect to a feasible solution of (D) would be $c$-competitive with respect to the offline optimal solution.
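Weak duality can be checked by hand on a tiny instance. The sketch below is illustrative only and makes several assumptions that are not in the text: servers are flattened into a single index `k` (with `d[k]` the out-migration charge of the server's data center), the allocation before the first step is zero, the dual variables $a_t$ are non-negative, and the dual variable $b$ after the last step is taken to be zero. All names and numbers are hypothetical.

```python
def primal_value(y, c, d):
    """Objective (1), with z set to max(0, y_prev - y) and y_0 = 0 (assumed)."""
    prev, total = [0.0] * len(d), 0.0
    for y_t, c_t in zip(y, c):
        for k in range(len(d)):
            total += d[k] * max(0.0, prev[k] - y_t[k]) + c_t[k] * y_t[k]
        prev = y_t
    return total


def dual_feasible(a, b, s, c, d, tol=1e-9):
    """Check the dual constraints (7)-(9), taking b after the last step as 0."""
    T = len(a)
    for t in range(T):
        if a[t] < 0:
            return False
        for k in range(len(d)):
            b_next = b[t + 1][k] if t + 1 < T else 0.0
            if b[t][k] < 0 or b[t][k] > d[k] + tol or s[t][k] < 0:
                return False
            if -c[t][k] + a[t] + b[t][k] - b_next - s[t][k] > tol:
                return False
    return True


def dual_value(a, s, B):
    """Objective (6)."""
    return sum(B * a_t for a_t in a) - sum(sum(row) for row in s)


# Two servers in two different data centers, two steps, one job (B = 1).
c = [[1.0, 3.0], [3.0, 1.0]]
d = [1.0, 1.0]
y = [[1.0, 0.0], [0.0, 1.0]]   # run on server 0, then migrate to server 1
a = [2.0, 1.0]
b = [[0.0, 0.0], [1.0, 0.0]]
s = [[0.0, 0.0], [0.0, 0.0]]

primal = primal_value(y, c, d)
dual = dual_value(a, s, 1)
```

For this instance the dual solution is feasible and both values equal 3.0, so the chosen schedule is optimal for the relaxed problem; in general the dual value is only a lower bound.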

III. AN ONLINE JOB-MIGRATION ALGORITHM

In this section we design and analyze an online algorithm for the migration problem (P). The algorithm is presented in Section III-A. We then show in Section III-B that the algorithm is $O(\log H_0)$-competitive.

A. Algorithm Description

The algorithm we design is based on a primal-dual ap-

proach. This means that at each time t, the new primal vari-

ables and the new dual variables are simultaneously updated.

The general idea behind the algorithm is to maintain a feasible

dual solution to (D), and to upper-bound the operation cost at

time t (consisting of bandwidth cost and the energy cost) as a

function of the value of (D) at time t. Since a feasible solution

to (D) is a lower bound on the optimal solution, this procedure

would immediately lead to a bound on the competitive ratio

of the online algorithm.

The online algorithm outputs a fractional solution to the variables $y_{i,j,t}$. To obtain the total number of servers that should be activated in data-center $i$, we simply calculate the sum $\sum_{j=1}^{H_i} y_{i,j,t}$ at every time $t$. Since this sum is generally a fractional number, one can round it to the nearest integer. Because the number of servers in each data-center ($H_i$) is fairly large, the effect of the rounding is negligible and thus ignored in our analysis.
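The rounding step can be sketched as follows. The function name `servers_to_activate` and the utilization values are hypothetical; the sketch rounds half up, which is one possible reading of "nearest integer".

```python
import math


def servers_to_activate(y):
    """Round the per-data-center sum of fractional utilizations.

    y maps a data center to the list of utilization levels of its servers.
    """
    return {i: math.floor(sum(levels) + 0.5) for i, levels in y.items()}


# Hypothetical fractional solution for two small data centers.
fractional = {0: [1.0, 1.0, 0.4, 0.0], 1: [1.0, 0.6, 0.0]}
active = servers_to_activate(fractional)
```

Independent rounding per data center does not guarantee that the rounded totals still add up to the job load, which is one reason the text relies on each $H_i$ being large.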

The online algorithm receives as input the energy cost vector $\{c_{i,j,t}\}_{i,j}$ at every time $t$, defining the energy cost at each server $(i, j)$ at time interval $t$. As a first step, we use a well-known reduction that allows us to consider only elementary cost vectors [11, Sec. 9.3.1]. Elementary cost vectors are vectors of the form $(0, \dots, 0, c_{i_t,j_t}, 0, \dots, 0)$, namely vectors with only a single non-zero entry. The reduction allows us to split any general cost vector into a finite number of elementary cost vectors without changing the value of the optimal solution. Furthermore, we may translate any online migration decisions made for these elementary cost vectors to online decisions on the original cost vectors without increasing our cost. Thus, we consider only elementary cost vectors from now on. Abusing our notation, we use the original time index $t$ to describe these elementary cost vectors. We use $c_t$ instead of $c_{i_t,j_t}$ to describe the (single) non-zero cost at server $(i_t, j_t)$ at time $t$. Thus, the input for our algorithm consists of $i_t$, $j_t$, $c_t$.

Input: Data-center it, server jt, cost ct (at time t).

Initialization:

Set at = 0.

For all i, j, set b

i,j,t+1

= b

i,j,t

.

For all i, j, set s

i,j,t

= 0.

Loop:

Keep increasing at, while termination condition is not satised.

Update the other dual variables as follows:

For every i, j = it, jt such that y

i,j,t

< 1, increase b

i,j,t+1

with rate

db

i,j,t+1

da

t

= 1.

For every i, j = it, jt such that y

i,j,t

= 1, increase s

i,j,t

with rate

ds

i,j,t

da

t

= 1.

For i, j = it, jt, decrease b

i

t

,j

t

,t+1

so that

i,j=i

t

,j

t

dy

i,j,t

da

t

=

dy

i

t

,j

t

,t

da

t

.

Termination condition: Exit when either y

i

t

,j

t

,t

= 0, or ct + at +

b

i

t

,j

t

,t

b

i

t

,j

t

,t+1

= 0.

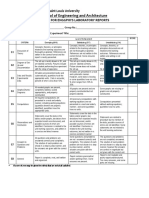

TABLE I: The procedure for updating the dual variables of

the online optimization problem.

The algorithm we present updates at every time $t$ the (new) dual variables $a_t$, $s_{i,j,t}$ and $b_{i,j,t+1}$. The values of these variables are determined incrementally, via a continuous update rule (closed-form one-shot updates seem hard to obtain). We summarize in Table I the procedure for the update of the dual variables at any time $t$. We refer to the procedure at time $t$ as the $t$-th iteration. We note that the continuous update rule is mathematically more convenient to handle. Nonetheless, it is possible to implement the online algorithm through discrete-time iterations, by searching for the scalar value $a_t$ up to any required precision level.

Each primal variable $y_{i,j,t}$ is continuously updated as well, alongside the continuous update of the respective dual variable $b_{i,j,t+1}$. The following relation between $b_{i,j,t+1}$ and $y_{i,j,t}$ is preserved throughout the iteration:

$$y_{i,j,t} := \frac{1}{H_0}\left(\exp\left(\ln(1 + H_0)\,\frac{b_{i,j,t+1}}{d_i}\right) - 1\right). \tag{10}$$
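The primal-dual relation (10) is easy to evaluate numerically. The following sketch, with hypothetical function names and parameter values, computes the utilization from the dual variable and back, and confirms the two endpoints: a dual value of zero gives zero utilization, and a dual value of $d_i$ gives full utilization.

```python
import math


def y_from_b(b, d_i, H0):
    """Relation (10): utilization y as a function of the dual variable b."""
    return (math.exp(math.log(1 + H0) * b / d_i) - 1.0) / H0


def b_from_y(y, d_i, H0):
    """Inverse of relation (10), obtained by solving it for b."""
    return d_i * math.log(1.0 + H0 * y) / math.log(1 + H0)


# Hypothetical parameters: 100 servers in the cloud, migration charge d_i = 3.
H0, d_i = 100, 3.0
empty = y_from_b(0.0, d_i, H0)       # utilization when b = 0
full = y_from_b(d_i, d_i, H0)        # utilization when b = d_i
half_b = b_from_y(0.5, d_i, H0)      # dual value of a half-utilized server
```

Because the map is exponential, a half-utilized server already has a dual value close to $d_i$ when $H_0$ is large, so small changes in $b$ near the upper limit translate into large changes in load.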

The variable $y_{i_t,j_t,t}$ is updated so that the amount of work that is migrated to other servers is equal to the amount of work leaving $j_t$ (i.e., total work conservation). We next provide a brief description of the inner workings of the algorithm, along with some intuitive insights. The objective in each iteration is to maximize the dual profit. To that end, $a_t$ is increased as much as possible, that is, without violating the primal and dual constraints. The variable $b_{i_t,j_t,t+1}$ decreases throughout the procedure; since $y_{i_t,j_t,t}$ increases monotonically with $b_{i_t,j_t,t+1}$ (via (10)), we obtain a decrease in the workload on server $j_t$ at data-center $i_t$, while adding workload to other servers. The stopping condition is when either there is no workload left in the costly server, or when the constraint (7) becomes tight for that server. Note that the constraint (7) is never violated for the other servers, as either $b_{i,j,t+1}$ or $s_{i,j,t}$ is increased together with $a_t$. Obviously, one would prefer increasing $b_{i,j,t+1}$ rather than $s_{i,j,t}$, as increasing the latter results in a dual-profit loss. Thus, the procedure increases $b_{i,j,t+1}$ as long as its value is below the upper limit $d_i$ (or equivalently, as long as $y_{i,j,t} < 1$). If the server reaches its upper limit (in terms of $b_{i,j,t+1}$ or equivalently $y_{i,j,t}$), we must increase $s_{i,j,t}$, which can intuitively be viewed as the price of utilizing the server to its full capacity. Since the number of servers that have reached their full capacity is bounded by $B$, it is always profitable to keep increasing $a_t$ despite the dual-profit loss of increasing $s_{i,j,t}$, as long as no constraint is violated.

We conclude this subsection by focusing on the initialization of the dual variable $b_{i,j,t+1}$, namely $b_{i,j,t+1} = b_{i,j,t}$. As $b_{i,j,t+1}$ increases monotonically with $y_{i,j,t}$, setting the initial value of $b_{i,j,t+1}$ to be equal to the value of the previous variable suggests that servers that have been fairly utilized continue to be trusted, at least initially. This observation indicates that although the online algorithm is designed to perform well against any adversarial input, the algorithm puts significant weight on past costs, which are manifested through past utilization. We therefore expect the algorithm to work well even when energy prices are more predictable and do not change much between subsequent iterations.

B. Performance Bound

In this subsection we obtain a performance bound for our

online algorithm, which is summarized in the next theorem.

Theorem 3.1: The online algorithm is $O(\log H_0)$-competitive.

The proof proceeds in a number of steps. We first show that our algorithm preserves a feasible primal solution and a feasible dual solution. We then relate the change in the dual variable $b_{i,j,t+1}$ to the change in the primal variable $y_{i,j,t}$, and the change of the latter to the change in the value of the feasible dual solution. This allows us to upper bound the bandwidth cost at every time $t$ as a function of the change in the value of the dual. Finally, we find a proper relation between the bandwidth cost and the energy cost, which allows us to also bound the latter as a function of the change in the dual value. A full proof is provided below.

Proof of Theorem 3.1:

Step 1: Primal solution is feasible. Note first that we always keep the sum $\sum_i \sum_j y_{i,j,t}$ equal to $B$. This equation holds since at each time $t$ we enforce work conservation through $\sum_{(i,j) \neq (i_t,j_t)} \frac{d y_{i,j,t}}{d a_t} = -\frac{d y_{i_t,j_t,t}}{d a_t}$, namely the decrease in the load of server $j_t$ in data-center $i_t$ is equal to the increase in the total load at the other servers. Note further that we keep $y_{i,j,t} \le 1$ for every $i, j, t$, as $y_{i,j,t}$ increases monotonically with $b_{i,j,t+1}$ via the primal-dual relation (10), and we stop increasing the latter when $y_{i,j,t}$ reaches one.

Step 2: Dual solution is feasible. $s_{i,j,t} \ge 0$, as it is initially set to zero and can only increase within the iteration; similarly, $b_{i,j,t+1} \ge 0$ for every $(i, j) \neq (i_t, j_t)$. As to $b_{i_t,j_t,t+1}$, it is initially non-negative. As $y_{i_t,j_t,t} \ge 0$ at the end of the iteration, it immediately follows from the primal-dual relation (10) that $b_{i_t,j_t,t+1} \ge 0$ for every $t$. It also follows from (10) that $b_{i,j,t+1} \le d_i$, since $b_{i,j,t+1}$ is monotone increasing in $y_{i,j,t}$ (and vice versa), and $b_{i,j,t+1} = d_i$ if $y_{i,j,t} = 1$. It remains to show that the constraint (7) is preserved for every $i, j$. It is trivially preserved for $(i, j) = (i_t, j_t)$, as activating the constraint is a stopping condition for the iteration. For $(i, j) \neq (i_t, j_t)$, note that every iteration is initiated with $s_{i,j,t} = 0$, $b_{i,j,t} - b_{i,j,t+1} = 0$ and $a_t = 0$, so $-c_{i,j,t} + a_t + b_{i,j,t} - b_{i,j,t+1} - s_{i,j,t} \le 0$, as required. Throughout the iteration, we increase either $b_{i,j,t+1}$ or $s_{i,j,t}$ at the same rate that $a_t$ is increased, which ensures that the constraint is not violated.

Step 3: Relating the dual and primal variables, and the primal variables to the dual value. Differentiating (10) with respect to $b_{i,j,t+1}$ leads to the following relation:

$$\frac{d y_{i,j,t}}{d b_{i,j,t+1}} = \frac{\ln(1 + H_0)}{d_i}\left(y_{i,j,t} + \frac{1}{H_0}\right). \tag{11}$$

Let D

t

denote the increment in the value of the dual solution,

obtained by running the online algorithm at iteration t. Noting

that

dsi,j,t

dat

= 1 for i, j, t such that y

i,j,t

= 1, the derivative of

D

t

with respect to a

t

is given by

D

t

a

t

= B

i,j=it,jt

1(y

i,j,t

= 1) = y

it,jt

+

i,j=it,jt|yi,t<1

y

i,j,t

,

(12)

where 1() is the indicator function

2

. The last two equations

combined allow us to relate the change in the dual variables

to the change in the dual value. We next use these equations

to bound the bandwidth cost.
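As a numerical illustration of relation (11), the following minimal sketch assumes one closed form for the primal-dual relation, $y = \frac{1}{H_0}\left(e^{\ln(1+H_0)\, b / d} - 1\right)$. This form is an assumption chosen because it satisfies (11) together with the boundary conditions used in Steps 1 and 2 ($y = 0$ at $b = 0$ and $y = 1$ at $b = d$); it is not a restatement of (10). The function names are hypothetical.

```python
import math


def y_of_b(b, d, h0):
    """Assumed closed form of the primal variable y as a function of the dual b.

    Chosen so that y(0) = 0, y(d) = 1, and dy/db matches relation (11).
    """
    return (math.exp(math.log(1 + h0) * b / d) - 1) / h0


def dy_db_from_11(y, d, h0):
    """Right-hand side of relation (11): ln(1 + H0) / d * (y + 1 / H0)."""
    return math.log(1 + h0) / d * (y + 1 / h0)


def finite_difference(b, d, h0, step=1e-6):
    """Central finite-difference estimate of dy/db under the assumed form."""
    return (y_of_b(b + step, d, h0) - y_of_b(b - step, d, h0)) / (2 * step)
```

Comparing `finite_difference(b, d, h0)` with `dy_db_from_11(y_of_b(b, d, h0), d, h0)` at a few values of `b` between 0 and `d` shows the two agree up to discretization error, which is the sense in which the load changes in proportion to $y + 1/H_0$.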

Step 4: Bounding the bandwidth cost. Since $B$ is an integer, and since $\sum_{(i,j) \neq (i_t,j_t)} \mathbf{1}(y_{i,j,t} = 1)$ is also an integer (not larger than $B$), it follows from (12) that $y_{i_t,j_t,t} + \sum_{(i,j) \neq (i_t,j_t) \,:\, y_{i,j,t} < 1} y_{i,j,t}$ is a nonnegative integer; because $y_{i_t,j_t,t} > 0$, the latter integer is strictly positive. In view of (12), $\frac{\partial D_t}{\partial a_t} \ge 1$. We now analyze the derivative of the bandwidth cost with respect to the change in the dual variables $b_{i,j,t+1}$. Instead of considering the actual bandwidth costs, we pay $d_i$ for moving workload into $(i,j) \neq (i_t,j_t)$. We refer to the latter cost model as the modified bandwidth-cost model. As shown in Lemma 2.1, the offset in total cost between the original and the modified bandwidth-cost model is constant. Let $I_t = \{(i,j) \neq (i_t,j_t) \mid y_{i,j,t} < 1\}$. The derivative of the modified bandwidth-cost is given by

$$\sum_{I_t} d_i \frac{dy_{i,j,t}}{db_{i,j,t+1}} = \sum_{I_t} \ln(1 + H_0) \left( y_{i,j,t} + \frac{1}{H_0} \right) \tag{13}$$

$$\le \ln(1 + H_0) \left( 1 + \sum_{I_t} y_{i,j,t} \right) \le 2 \ln(1 + H_0) \frac{\partial D_t}{\partial a_t}, \tag{14}$$

where (13) follows from (11). The first inequality in (14) holds because the $1/H_0$ terms summed over $I_t$ add up to at most one. The second inequality follows by noting that $\frac{\partial D_t}{\partial a_t} \ge \sum_{I_t} y_{i,j,t}$ and $\frac{\partial D_t}{\partial a_t} \ge 1$. Since the derivative of the modified bandwidth cost is always bounded by $2 \ln(1 + H_0)$ times the derivative of the dual, we conclude by integration that the bandwidth cost is within $O(\ln(H_0))$ of the dual value.

$^2$ Note that the set $\{(i,j) \neq (i_t,j_t) \mid y_{i,j,t} < 1\}$ generally does not remain constant during an iteration.

Step 5: Bounding the energy cost. We restrict attention to iterations in which $y_{i_t,j_t,t}$ is eventually non-zero and an energy cost is incurred. We use the notation $x^*$ to denote the value of variable $x$ at the end of the iteration, thereby distinguishing the final values from intermediate ones. Since $y^*_{i_t,j_t,t} > 0$ (at the end of the iteration), the iteration ends with the condition $b_{i_t,j_t,t} - b^*_{i_t,j_t,t+1} = c_t - a^*_t$, which after multiplying both sides by $y^*_{i_t,j_t,t}$ is equivalent to

$$c_t \, y^*_{i_t,j_t,t} = \left( b_{i_t,j_t,t} - b^*_{i_t,j_t,t+1} \right) y^*_{i_t,j_t,t} + a^*_t \, y^*_{i_t,j_t,t}. \tag{15}$$

The left-hand side of this equation is nothing but the energy cost. Note further that the term $a^*_t \, y^*_{i_t,j_t,t}$ on the right-hand side is bounded by $D_t$ (the increment in the dual value for iteration $t$). Thus, in order to obtain a bound on the energy cost at time $t$, it remains to bound the term $\left( b_{i_t,j_t,t} - b^*_{i_t,j_t,t+1} \right) y^*_{i_t,j_t,t}$ with respect to $D_t$. We next bound this term by considering the bandwidth cost at iteration $t$. Instead of the true bandwidth cost, we will assume that the cost for bandwidth is $(y_{i_t,j_t,t-1} - y^*_{i_t,j_t,t}) \, d_{i_t}$, namely we assume that the algorithm pays for moving workload out of $(i_t, j_t)$. Let $M_t = (y_{i_t,j_t,t-1} - y^*_{i_t,j_t,t}) \, d_{i_t}$. We have

$$M_t = -\int_{\tau=0}^{a^*_t} d_{i_t} \, \frac{dy_{i_t,j_t,t}}{db_{i_t,j_t,t+1}} \, \frac{db_{i_t,j_t,t+1}}{d\tau} \, d\tau \tag{16}$$

$$\ge d_{i_t} \left( b_{i_t,j_t,t} - b^*_{i_t,j_t,t+1} \right) \frac{dy_{i_t,j_t,t}}{db_{i_t,j_t,t+1}} \left( b^*_{i_t,j_t,t+1} \right) \tag{17}$$

$$= \ln(1 + H_0) \left( b_{i_t,j_t,t} - b^*_{i_t,j_t,t+1} \right) \left( y^*_{i_t,j_t,t} + \frac{1}{H_0} \right), \tag{18}$$

where $\frac{dy_{i_t,j_t,t}}{db_{i_t,j_t,t+1}} ( b^*_{i_t,j_t,t+1} )$ is the derivative of $y_{i_t,j_t,t}$ with respect to $b_{i_t,j_t,t+1}$, evaluated at the end of the iteration (where $b_{i_t,j_t,t+1} = b^*_{i_t,j_t,t+1}$ and $y_{i_t,j_t,t} = y^*_{i_t,j_t,t}$). Equation (16) follows by integrating the movement cost from $(i_t, j_t)$ while increasing $a_t$ from zero to its final value $a^*_t$; the minus sign reflects that $b_{i_t,j_t,t+1}$ decreases during the iteration. Inequality (17) is justified as follows. In view of (11), the smallest value of the derivative $\frac{dy_{i_t,j_t,t}}{db_{i_t,j_t,t+1}}$ within an iteration is attained when $y_{i_t,j_t,t}$ is the smallest. Since $y_{i_t,j_t,t}$ monotonically decreases within the iteration, we take the final value of the derivative and multiply it by the total change in the dual variable $b_{i_t,j_t,t+1}$, given by $b_{i_t,j_t,t} - b^*_{i_t,j_t,t+1}$. Plugging in (11) leads to (18). Using the above inequality, we may bound the required term:

$$\left( b_{i_t,j_t,t} - b^*_{i_t,j_t,t+1} \right) y^*_{i_t,j_t,t} \le \frac{M_t \, y^*_{i_t,j_t,t}}{\ln(1 + H_0) \left( y^*_{i_t,j_t,t} + \frac{1}{H_0} \right)} \le \frac{M_t}{\ln(1 + H_0)}.$$

Substituting this bound in (15) yields

$$c_t \, y^*_{i_t,j_t,t} \le \frac{M_t}{\ln(1 + H_0)} + a^*_t \, y^*_{i_t,j_t,t}. \tag{19}$$

Recall that the actual bandwidth cost is only a constant factor away from $\sum_t M_t$ (cf. Lemma 2.1). Summing (19) over $t$ and reusing (14) indicates that the total energy cost is at most three times the dual value (plus a constant), namely $O(1)$ times the dual value.

Combining Steps 4 and 5, we conclude that our online algorithm is $O(\log(H_0))$-competitive.

IV. AN EFFICIENT ONLINE ALGORITHM

Theorem 3.1 indicates that the online algorithm is expected to be robust against any possible deviation in energy prices. However, its complexity might be too high for an efficient implementation with an adequate level of precision. First, the reduction of [11, Sec. 9.3.1] generally splits each cost vector at any given time $t$ into $O(H_0^2)$ vectors, thereby requiring a large number of iterations per actual time step $t$. Second, the need for proper discretization of the continuous update rule of the dual variables (see Table I) might make each iteration computationally costly. Therefore, we design in this section an easy-to-implement online algorithm, which inherits the main ideas of our original algorithm, yet significantly decreases the running complexity per time step.

We employ at the heart of the algorithm several ideas and mathematical relations from the original online algorithm. The algorithm can accordingly be viewed as an efficient variant of the original online algorithm, and is henceforth referred to as the Efficient Online Algorithm (EOA). While we do not provide theoretical guarantees for the performance of the EOA, we shall demonstrate through real-data simulations its superiority over plausible greedy heuristics (see Section V). The EOA provides a complete algorithmic solution which also includes the allocation of newly arrived jobs; the way job arrivals are handled is described towards the end of this section.

For simplicity, we describe the EOA for linear electricity prices (i.e., the electricity price for every DC $i$ and time $t$ is $c_{i,t}$, regardless of the number of servers that are utilized). At the end of this section, we describe the adjustments needed to support non-linear prices. The input for the algorithm at every time $t$ is thus an $n$-dimensional vector of prices, $c_t = (c_{1,t}, c_{2,t}, \ldots, c_{n,t})$. For ease of illustration, we re-enumerate the data-centers in each iteration according to the present electricity prices, so that $c_1 \le c_2 \le \cdots \le c_n$. For exposition purposes we omit the time index $t$ from all variables (e.g., we write $c = (c_1, c_2, \ldots, c_n)$ instead of $c_t = (c_{1,t}, c_{2,t}, \ldots, c_{n,t})$). We denote by $d_{i,k} = d_{i,out} + d_{k,in}$ the bandwidth cost per unit for transferring load from data center $i$ to data center $k$. We further denote by $c_{i,k}$ the difference in electricity prices between the two data centers, namely $c_{i,k} = c_i - c_k$, and refer to $c_{i,k}$ as the differential energy cost between $i$ and $k$. The iteration of the EOA is described in Table II.

Before elaborating on the logic behind the EOA, we note that the running complexity of each iteration is $O(n^2)$. Observe that the inner loop makes sure that data is migrated from the currently expensive data-centers to cheaper ones (in terms of the corresponding electricity prices). We comment that the fairly simple migration rule (20) could be implemented in a distributed manner, where data centers exchange information on their current load and electricity price; we however do not focus on a distributed implementation in the current paper.

Input:
  Cost vector $c = (c_1, c_2, \ldots, c_n)$.
  Let $y = (y_1, y_2, \ldots, y_n)$ be the current load vector on the data centers.
Job Migration Loop:
  Outer loop: $k = 1$ to $n$,
    Inner loop: $i = n$ to $k + 1$
      Move load from DC $i$ to DC $k$ according to the following migration rule:

      $$\min \left\{ y_i, \; H_{(k)} - y_k, \; s_1 \frac{c_{i,k} \, y_i}{d_{i,k}} (y_k + s_2) \right\}, \tag{20}$$

      where $s_1, s_2 > 0$ are positive parameters.

TABLE II: The iteration of the EOA. Time indexes are omitted. Note that the data-centers are ordered in increasing electricity-cost, namely $c_1 \le \cdots \le c_n$.

We now proceed to discuss the rationale behind the EOA,

and in particular the relation between (20) (which will be

henceforth referred to as the migration rule) and the original online algorithm described earlier. We first motivate the use of the term $c_{i,k} \, y_i$ in the migration rule. Note first that this term corresponds to the energy cost that could potentially be saved by migrating all jobs in data center $i$ to data center $k$. Observe further from Eq. (19) that the energy cost at each iteration is proportional to the migration cost at the same iteration$^3$. The reason that we use differential energy costs $c_{i,k} = c_i - c_k$ is directly related to the reduction from general cost vectors to elementary cost vectors (see Section III-A). The reduction creates elementary cost vectors by iterating over the differential energy costs $c_i - c_{i-1}$. Without going into all details, the reduction creates elementary cost vectors by first taking the smallest cost $c_1$ and dividing it into such vectors, which are spread in small pieces over all data-centers. The second to be spread is $c_2 - c_1$, which is used for creating cost vectors for all data centers but the first one (whose entire cost has already been covered at the previous step). This allocation proceeds iteratively until spreading the last cost of $c_n - c_{n-1}$. Since the inputs which are used for the original online algorithm are created by using the marginal additional costs, $c_{i,k}$, it is natural to use this measure for determining the job migration policy.

$^3$ Indeed, in view of (14), the migration cost is approximately proportional to $a_t \left( y_{i_t,j_t,t} + \sum_{(i,j) \neq (i_t,j_t) \,:\, y_{i,j,t} < 1} y_{i,j,t} \right)$, which is always larger than $a_t \, y_{i_t,j_t,t}$.

Further examination of the migration rule (20) reveals that the amount of work that is migrated from DC $i$ to DC $k$ is proportional to $y_k$ (the load at the target data-center). The motivation for incorporating $y_k$ in the migration rule follows directly from (11), which implies that the change in the load in data center $k$ should be proportional to the current load, $y_k$. As discussed earlier, this feature essentially encourages the migration of jobs into data centers that were cheap in the past, and consequently loaded in the present. Another idea which appears in (11) and is incorporated in the current migration rule is that the migrated workload is inversely proportional to the bandwidth cost. We use here the actual per-unit bandwidth cost from DC $i$ to DC $k$, given by $d_{i,k} = d_{i,out} + d_{k,in}$. Taking $d_{i,k}$ into account prevents job migration between data centers with high bandwidth cost. The last idea which is borrowed from (11) is to include an additive term $s_2$, which reduces the effect of $y_k$. This additive term enables the migration of jobs to data centers, even if they are currently empty. The value of $s_2$ could in principle be adjusted throughout the execution of the algorithm. Intuitively, the value of $s_2$ manifests the natural tradeoff between making decisions according to current energy prices (high $s_2$) or relying more on usage history (low $s_2$). The other parameter in the migration rule, $s_1$, sets the aggressiveness of the algorithm. Increasing $s_1$ makes the algorithm greedier in exploiting the currently cheaper electricity prices (even at the expense of high bandwidth costs). This parameter can be optimized as well (either offline or online) to yield the best performance.
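The EOA iteration of Table II can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' implementation: the function and argument names are hypothetical, the capacity term in (20) is interpreted as the capacity of the target data center `capacity[k]`, bandwidth costs are assumed strictly positive, and sorting by price stands in for the re-enumeration of data centers.

```python
def eoa_iteration(prices, load, capacity, d_out, d_in, s1, s2):
    """One EOA iteration for linear prices; returns the new load vector.

    prices[i], load[i], capacity[i]: price, current load, capacity of DC i.
    d_out[i], d_in[i]: per-unit bandwidth cost out of / into DC i (positive).
    s1, s2: the positive parameters of migration rule (20).
    """
    n = len(prices)
    # Re-enumerate data centers by increasing electricity price.
    order = sorted(range(n), key=lambda idx: prices[idx])
    y = list(load)
    for pos_k in range(n):                       # outer loop: k = 1..n
        k = order[pos_k]
        for pos_i in range(n - 1, pos_k, -1):    # inner loop: i = n..k+1
            i = order[pos_i]
            delta_c = prices[i] - prices[k]      # differential energy cost
            d_ik = d_out[i] + d_in[k]            # per-unit bandwidth cost i -> k
            move = min(
                y[i],                            # cannot move more than DC i holds
                capacity[k] - y[k],              # free room at DC k (assumed meaning)
                s1 * delta_c * y[i] / d_ik * (y[k] + s2),
            )
            move = max(move, 0.0)                # guard against a full target
            y[i] -= move
            y[k] += move
    return y
```

For example, with two data centers priced at 10 and 30, loads 1 and 4, unit bandwidth costs, `s1 = 0.01` and `s2 = 1`, the rule moves 0.8 units from the expensive data center to the cheap one. Total load is conserved by construction, and nothing moves when prices are equal, since the differential energy cost is then zero.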

To complete the description of the EOA, we next specify how newly arrived traffic is distributed. We make here the simplifying assumption that jobs arrive at a single global dispatcher, and are then allocated to the different data-centers. The case of multiple dispatchers is outside the scope of this paper; nonetheless, algorithms for this case can be deduced from the rule we describe below. A newly arrived job is assigned through the following probabilistic rule: At every time $t$, assign the job to data center $i$ with probability proportional to $\frac{1}{d_i} (y_i + s_2)$, where $d_i = d_{i,in} + d_{i,out}$. The reasoning behind this rule is the same as elaborated above for the migration rule (20).
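The probabilistic assignment rule can be illustrated as follows. This is a minimal sketch with hypothetical function names; it normalizes the weights $(y_i + s_2)/d_i$ into probabilities and ignores capacity limits, which the rule as stated does not address.

```python
import random


def dispatch_probabilities(load, d_in, d_out, s2):
    """Probability of sending a new job to each DC, proportional to (y_i + s2) / d_i."""
    weights = [(y + s2) / (di + do) for y, di, do in zip(load, d_in, d_out)]
    total = sum(weights)
    return [w / total for w in weights]


def assign_job(load, d_in, d_out, s2, rng=random):
    """Draw the index of the data center that receives the newly arrived job."""
    probs = dispatch_probabilities(load, d_in, d_out, s2)
    return rng.choices(range(len(load)), weights=probs, k=1)[0]
```

With loads 0 and 3, equal bandwidth costs, and `s2 = 1`, the weights are in ratio 1 to 4, so the empty data center still receives a fifth of new jobs. This is the role of the additive term: without it, an empty data center would never be chosen.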

We conclude this section by outlining how the EOA can handle non-linear pricing of electricity. Consider a data center that is supported by multiple energy sources. At any time each source has energy cost $c_t$ and availability $P_t$ (i.e., $P_t$ is the number of servers that we can support using this energy source). The simple idea is to treat each energy source as a virtual data center. For each data center $i$ we take the cheapest energy sources whose total availability is $H_i$ (truncating the availability of the last energy source used). We partition the current load of the data center among the energy sources, preferring cheaper energy sources. Finally, we treat each such energy source as a virtual data center (with capacity $P_t$ and cost $c_t$), simulating migration between different energy sources of different data centers.
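The construction of virtual data centers for a single physical data center can be sketched as follows. This is an illustrative reading of the description above, with hypothetical names: sources are taken cheapest first, the last one used is truncated so that total capacity equals the data center's capacity, and the current load is packed onto the cheapest sources.

```python
def virtual_data_centers(sources, capacity, load):
    """Split one data center into virtual ones, one per energy source used.

    sources: list of (cost, availability) pairs for this data center.
    capacity: number of servers in the data center (H_i in the text).
    load: current load of the data center, at most `capacity`.
    Returns a list of (cost, virtual_capacity, virtual_load) tuples.
    """
    virtual = []
    remaining_capacity = capacity
    remaining_load = load
    for cost, availability in sorted(sources):      # cheapest source first
        if remaining_capacity <= 0:
            break                                   # capacity already covered
        cap = min(availability, remaining_capacity)  # truncate the last source
        assigned = min(cap, remaining_load)          # prefer cheaper sources
        virtual.append((cost, cap, assigned))
        remaining_capacity -= cap
        remaining_load -= assigned
    return virtual
```

For instance, sources `[(50, 10), (20, 5), (35, 4)]` with capacity 12 and load 7 yield three virtual data centers with capacities 5, 4, and 3 (the most expensive source is truncated) and loads 5, 2, and 0. The resulting virtual data centers can then be fed to a linear-price iteration.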

V. SIMULATIONS

In this section, we evaluate our proposed online algorithm

on real datasets, while comparing it to two plausible greedy

algorithms.

A. Alternative Job-Migration Algorithms

As a first simple benchmark for our algorithm, we use a greedy algorithm referred to as Move to Cheap (MTC) that always keeps the load on data centers having the lowest electricity prices (without exceeding their capacity). While its energy costs are the lowest possible by definition, the migration costs are eventually high and its overall performance is poor. This is expected since this algorithm does not consider the bandwidth cost. The second greedy heuristic that we consider is MimicOPT. This heuristic tries to mimic the behavior of the optimal solution. That is, at any given time, MimicOPT solves an LP with the energy costs until the present time $t$, and migrates jobs so as to mimic the job allocation of the current optimal solution up to time $t$. As MimicOPT does not have the data on future power costs and does not consider future outcomes, it is obviously not optimal. However, this heuristic is reasonable in non-adversarial scenarios and, indeed, performs fairly well. To make the running time of MimicOPT feasible, we restrict the LP to use a window of the last 80 hours (an increase in the window size does not seem to significantly change the results). In general, we expect MimicOPT to perform well on inputs in which electricity prices are highly correlated in time. In what follows, we shall compare the performance of the MTC, MimicOPT and EOA algorithms. Our reference is naturally the optimal offline solution, denoted OPT.

Fig. 4: Hourly task demand data from a cluster running MapReduce tasks. The Y-axis represents the number of tasks per hour. [Plot: Task Demand (0 to 25000) versus Hours (0 to 700).]
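The MTC benchmark amounts to filling the cheapest data centers to capacity at every time step. The following minimal sketch uses hypothetical names and assumes the total load does not exceed total capacity; it returns the target allocation only and leaves out the resulting migration cost, which is exactly what MTC ignores.

```python
def move_to_cheap(prices, capacity, total_load):
    """Greedy MTC allocation: fill data centers in order of increasing price."""
    allocation = [0] * len(prices)
    remaining = total_load
    for i in sorted(range(len(prices)), key=lambda idx: prices[idx]):
        allocation[i] = min(capacity[i], remaining)  # fill up to capacity
        remaining -= allocation[i]
    return allocation
```

With prices `[30, 10, 20]`, capacities of 5 each, and a total load of 7, the allocation is `[0, 5, 2]`. A small change in the price ordering at the next time step can flip this allocation entirely, which is the source of the high migration cost noted above.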

B. Electricity Prices and Job-Demand Data

We obtained the historical hourly electricity price data

($/MWh) from publicly available government agencies for 30

US locations, covering January 2006 through March 2009. We

omit here the organization details of the electricity system

in the US; please refer to [19]. In addition to the hourly

electricity market, there are also 15-minute real-time markets

which exhibit more volatility with high-frequency variation.

Since these markets account for a small fraction of the total

energy transactions (below 10%), we do not consider them in

our simulations.

The snapshot of the evolution of electricity prices in different locations (Figure 1) demonstrates that (i) prices are relatively stable in the long term, but exhibit high day-to-day volatility, (ii) there are short-term spikes, and (iii) hourly prices are not correlated across different locations.

For our simulations, we use task-demand data obtained from a cluster running MapReduce jobs for a large online services provider. Fig. 4 shows the hourly task demand. We observe a significant variation in the number of tasks corresponding to job submissions and different MapReduce phases.

Fig. 5: Performance ratios of EOA, MimicOPT, and MTC (with respect to OPT). [Plot: Performance ratio (1 to 1.8) versus Hours (0 to 550), for EOA, Mimic, and MTC.]

C. Performance Evaluation

We first compare the performance ratio of the total electricity and bandwidth costs incurred by different algorithms over pricing data from 10 locations. We assume that each location has 25K servers, each server consumes 200 Watts, bandwidth price is $0.20 per GB [1], and the task memory footprint is 100 MB (corresponding to intermediate results in MapReduce phases). To compute total job demand, we use a fixed background demand of 60% (15K servers) per location and a dynamic component from MapReduce traces which runs on the remaining 40% capacity.
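To give a sense of scale for these parameters, the following back-of-the-envelope sketch converts them into per-server and per-task costs. The helper names are hypothetical, and several simplifications are assumptions: one task per server, 100 MB taken as 0.1 GB, the bandwidth price charged once per migrated task, and illustrative electricity prices that are not taken from the dataset.

```python
SERVER_POWER_WATTS = 200           # per-server power draw (from the setup above)
BANDWIDTH_PRICE_PER_GB = 0.20      # dollars per GB (from the setup above)
TASK_FOOTPRINT_GB = 0.1            # 100 MB, treated as 0.1 GB (assumption)


def energy_cost_per_server_hour(price_per_mwh):
    """Dollars to run one server for one hour at the given price in $/MWh."""
    return SERVER_POWER_WATTS / 1e6 * price_per_mwh


def migration_cost_per_task():
    """Dollars to move one task's memory footprint over the wide-area network."""
    return BANDWIDTH_PRICE_PER_GB * TASK_FOOTPRINT_GB


def break_even_hours(price_gap_per_mwh):
    """Hours a price gap must persist before migrating one task pays off."""
    return migration_cost_per_task() / energy_cost_per_server_hour(price_gap_per_mwh)
```

Under these assumptions, moving a task costs about 2 cents, while a server at an illustrative price of $50/MWh costs about 1 cent per hour to run. A price gap of $20/MWh between two locations would therefore need to persist for roughly 5 hours before a migration breaks even, which is why short-lived price differences do not justify moving load.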

Evaluating the total cost. Fig. 5 compares the total cost (energy + bandwidth) for the EOA, MimicOPT and MTC algorithms on a typical run. For each algorithm, we show the ratio between the obtained cost and the cost of the offline optimal solution OPT. We observe that all algorithms exhibit high variation initially due to high migration cost, whereas the optimal approach migrates rarely and stabilizes quickly due to knowledge of all future timesteps. The EOA algorithm has the best performance ratio (about 1.04) amongst all the online algorithms. The superiority of our algorithm was observed in all of our simulations (regardless of the locations chosen and the price history).

Fig. 6 shows the workload evolution for the (Boston, MA) location, while the total workload is kept around 60%. In the top graph, we depict the (normalized) electricity costs of the location (which has up to 10x variation), along with the (normalized) load that MTC puts on the Boston location. Recall that MTC always uses the cheapest data centers. Thus, a load of one indicates that Boston is one of the 60% cheaper locations, while a load of zero indicates that it is among the 40% more expensive locations. The bottom graph of the same figure shows the fraction of servers which are kept active in this location by the other algorithms. As may have been expected, we observe first that the number of active servers for OPT and MimicOPT varies significantly with electricity pricing (the DC is either full or empty), indicating that the optimal job assignment varies with time across locations. Further, MimicOPT shows a delay in the assignment as well as high variation, as it tries to catch up with the optimal assignment. Finally, the EOA algorithm exhibits relatively stable job assignment, as it takes into account the relative pricing of this location, and carefully balances the energy costs with the associated bandwidth costs of migrating jobs between locations.

Fig. 6: Job-load and normalized electricity price over time at (Boston, MA). Upper graph depicts the price at this location, and also indicates the load that MTC puts on the data center. Lower graph shows the load of the DC for the other algorithms. [Upper panel: Energy Cost and MTC load (0 to 1) versus Hours (0 to 150). Lower panel: Load (0 to 1) versus Hours (0 to 150), for OPT, EOA, and Mimic.]

Performance as a function of the number of data centers. Fig. 7 illustrates the performance ratio (compared to OPT) as we increase the number of data centers from 5 to 25. In general, all algorithms exhibit slight performance degradation as the number of data centers increases, possibly due to increased scale as the problem becomes harder to solve optimally. Specifically, the EOA algorithm incurs costs of about 1.04x while MimicOPT incurs costs of about 1.07x, compared to OPT.

Performance as a function of utilization. Fig. 8 shows the performance ratio as we vary the background utilization of the data centers from 15% to 75%. We observe that the performance ratio improves (decreases) as the load increases due to the reduced flexibility in the job assignment.

Overall, we have observed that the job assignment changes significantly with the variation in electricity prices, as all plausible algorithms increase the number of servers at locations with lower prices. Importantly, the EOA algorithm provides the best performance ratio and a relatively stable job assignment. While the performance of MimicOPT is close to that of EOA, we note that the performance gap between these two algorithms becomes larger when subtracting the minimal energy cost that has to be paid by any algorithm. Specifically, the performance ratio becomes around 1.55 and 1.8 for EOA and MimicOPT, respectively, indicating that the actual cost saving by our algorithm is very significant.

Fig. 7: Performance as a function of the number of data centers. [Plot: Performance ratio (1 to 1.7) versus Number of DCs (0 to 30), for EOA, Mimic, and MTC.]

Fig. 8: Performance with respect to the offline optimal solution as a function of the DC utilization. [Plot: Performance ratio (1 to 2.4) versus Load (0% to 80%), for EOA, Mimic, and MTC.]

VI. CONCLUDING REMARKS

This paper introduces novel online job-migration algorithms for reducing the overall operation cost in a cloud of multiple data centers. We provide a competitive-ratio bound for the performance of a basic online algorithm, indicating its robustness against any future deviation in energy prices. An easy-to-implement version of the basic algorithm is designed, which can be seamlessly integrated as part of existing control mechanisms for data-center operation.

Our simulations on real electricity pricing data and job demand data demonstrate that the actual performance of our algorithm could be very close to the minimal operation cost (obtained through offline optimization). Importantly, our online algorithm outperforms several reasonable greedy heuristics, thereby emphasizing the need for a rigorous online optimization approach.

The algorithms we suggest address the fundamental energy-cost vs. bandwidth-cost tradeoff associated with job migration. Yet, we have certainly not covered herein some important aspects and constraints which may need to be considered in the design of future online job-migration algorithms. For example, we make in this paper a simplifying assumption that every job can be placed in every data-center. In some cases, however, practical constraints might arise which prevent certain jobs from being run at certain locations. Such constraints could be due to delay restrictions (e.g., if a particular data-center is too far from the location of the source), security considerations, and other factors. On the other hand, a different type of constraint might require certain jobs to be placed adjacent to others (e.g., to accommodate low inter-application communication delay). Complementary to the above are restrictions on the migration paths, due to the possible delay overhead of the migration process. Overall, the general framework of online job-migration opens up new theoretical and algorithmic challenges, as proper consideration of the dynamically evolving electricity prices could significantly contribute to further cost reductions in cloud environments.

REFERENCES

[1] http://aws.amazon.com/ec2.

[2] http://www.eia.doe.gov/cneaf/electricity/page/co2 report/co2report.html.

[3] http://www.nature.com/news/2009/090911/full/news.2009.905.html.

[4] www.carbontax.org.

[5] N. Bansal, N. Buchbinder, and J. Naor, "A primal-dual randomized algorithm for weighted paging," in FOCS, 2007, pp. 507–517.

[6] N. Bansal, N. Buchbinder, and J. Naor, "Randomized competitive algorithms for generalized caching," in Proceedings of the 40th annual ACM symposium on Theory of computing, 2008, pp. 235–244.

[7] N. Bansal, N. Buchbinder, and J. Naor, "Metrical task systems and the k-server problem on HSTs," in ICALP, 2010.

[8] N. Bansal, N. Buchbinder, and J. Naor, "Towards the randomized k-server conjecture: a primal-dual approach," in SODA, 2010.

[9] Y. Bartal, A. Blum, C. Burch, and A. Tomkins, "A polylog(n)-competitive algorithm for metrical task systems," in STOC, 1997, pp. 711–719.

[10] C. Belady, "In the data center, power and cooling costs more than the IT equipment it supports," Feb 2007.

[11] A. Borodin and R. El-Yaniv, Online computation and competitive analysis. Cambridge University Press, 1998.

[12] A. Borodin, N. Linial, and M. E. Saks, "An optimal on-line algorithm for metrical task system," Journal of the ACM, vol. 39, no. 4, pp. 745–763, 1992.

[13] N. Buchbinder and J. Naor, "The design of competitive online algorithms via a primal-dual approach," Foundations and Trends in Theoretical Computer Science, vol. 3, no. 2-3, pp. 93–263, 2009.

[14] C. Clark, K. Fraser, S. Hand, J. G. Hansen, E. Jul, C. Limpach, I. Pratt, and A. Warfield, "Live migration of virtual machines," in NSDI, 2005.

[15] J. Dean and S. Ghemawat, "MapReduce: simplified data processing on large clusters," in OSDI, 2004.

[16] A. Fiat and M. Mendel, "Better algorithms for unfair metrical task systems and applications," SIAM Journal on Computing, vol. 32, no. 6, pp. 1403–1422, 2003.

[17] C. Kahn, "As power demand soars, grid holds up... so far," 2010, http://www.news9.com/global/story.asp?s=12762338.

[18] P. McGeehan, "Heat wave report: 102 degrees in Central Park," 2010, http://cityroom.blogs.nytimes.com/2010/07/06/heatwave-report-the-days-first-power-loss/?hp.

[19] A. Qureshi, R. Weber, H. Balakrishnan, J. V. Guttag, and B. V. Maggs, "Cutting the electric bill for internet-scale systems," in SIGCOMM, 2009, pp. 123–134.

[20] L. Rao, X. Liu, L. Xie, and W. Liu, "Minimizing Electricity Cost: Optimization of Distributed Internet Data Centers in a Multi-Electricity-Market Environment," in INFOCOM, 2010.

[21] D. D. Sleator and R. E. Tarjan, "Amortized efficiency of list update and paging rules," Communications of the ACM, vol. 28, no. 2, pp. 202–208, 1985.

[22] V. V. Vazirani, Approximation Algorithms. Springer, 2001.

[23] Windows Azure Platform, http://www.azure.com.

APPENDIX A

RELATION BETWEEN OUR PROBLEM AND OTHER

CLASSICAL PROBLEMS

In this section we compare the online job migration problem

in the current paper to classical online problems that were

considered in the literature.

The Paging/Caching problem. Paging is a classical and well studied problem [11, 21]. In the classical paging problem we are given a collection of $n$ pages, and a fast memory (cache) which can hold up to $k$ of these pages. At each time step one of the pages is requested. If the requested page is already in the cache then no cost is incurred; otherwise the algorithm must bring the page into the cache, possibly evicting some other page, and a cost of one unit is incurred. In the weighted version of the problem, each page has an associated weight that indicates the cost of bringing it into the cache.

The paging problem is actually a special case of our job migration problem. Consider a setting in which there are $n$ data centers of capacity 1 and the total load is always $n - k$. At each point in time the energy cost is zero in all places, except for one location in which the energy cost is arbitrarily large (practically infinity). By putting infinite cost on the data center that corresponds to the page that is requested and considering the complement of the loads as the probability of each page being in the cache, it is possible to simulate the original caching problem. This shows that our job migration problem is strictly harder than the weighted caching problem. Nevertheless, our algorithm draws ideas from recent online primal-dual approaches for caching [5–8].

The Metrical Task Systems (MTS) problem. In the MTS problem we are given a finite metric space $M = (V, d)$, where $|V| = n$. We view the points of $M$ as states in which the algorithm may be situated. The distance between the points of the metric measures the cost of transition between the possible states. The algorithm has to keep a single server in one of the states. Each task (request) $r$ in a metrical task system is associated with a vector $(r(1), r(2), \ldots, r(n))$, where $r(i)$ denotes the cost of serving $r$ in state $i \in V$. In order to serve request $r$ in state $i$ the server has to be in state $i$. Upon arrival of a new request, the state of the system can first be changed to a new state (paying the transition cost), and only then the request is served (paying for serving the request in the new state). The objective is to minimize the total cost, which is the sum of the transition costs and the service costs.

It is not hard to see that a solution to the MTS problem on a weighted star metric corresponds to a job migration problem when the total load of jobs on the points is normalized to 1. The main obstacle preventing us from using the pure MTS framework to solve our current problem is the capacity constraints, which do not exist in the original MTS problem. Capacity constraints that limit the amount of load we can put in each data center are hard to handle. In particular, this is what makes our problem a generalization of the paging problem (see above). An additional complication of our job-migration model is the non-linear (convex) formulation of the energy costs. We note that in the original MTS the cost of having $y_i$ units of load in location $i$ is linear, namely $r(i) \, y_i$.

In spite of the above differences, we draw ideas from the original solutions to the MTS problem [9, 12, 16], and especially from the primal-dual approach in [5, 7, 8].

APPENDIX B

PROOF OF LEMMA 2.1

We consider some solution $S$ that migrates jobs. We evaluate the
cost of this same solution in both models and show that the two
costs differ by at most an additive constant, which proves the
desired claim. For each data center $i$, let $M_{i,\mathrm{out}}$ and
$M_{i,\mathrm{in}}$ be the total job migration out of and into data
center $i$, respectively, from time 0 until time $T$ (when the
algorithm ends). For each data center $i$, let $W_{i,0}$ and
$W_{i,T}$ be the number of servers that are active in the data
center initially and at time $T$, respectively. Then for each data
center $i$ and for any solution $S$ to the problem we have:

$$M_{i,\mathrm{in}} - M_{i,\mathrm{out}} = W_{i,T} - W_{i,0} = q_i,$$

where $|q_i| \le H_i$ holds independently of the actual migration
sequence. The above equation follows from a simple flow-based
argument. Let $S$ be a migration solution. Since the energy cost is
the same in both models, we only consider the bandwidth costs. Let
$c_1(S)$ and $c_2(S)$ be the bandwidth costs of $S$ in the original
and the new model, respectively. Then:

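$$
\begin{aligned}
c_1(S) &= \sum_{i=1}^{n} \left( d_{i,\mathrm{out}} M_{i,\mathrm{out}} + d_{i,\mathrm{in}} M_{i,\mathrm{in}} \right) \\
&= \sum_{i=1}^{n} \left( d_{i,\mathrm{out}} M_{i,\mathrm{out}} + d_{i,\mathrm{in}} \left( M_{i,\mathrm{out}} + W_{i,T} - W_{i,0} \right) \right) \\
&= \sum_{i=1}^{n} d_{i,\mathrm{in}} q_i + \sum_{i=1}^{n} \left( d_{i,\mathrm{in}} + d_{i,\mathrm{out}} \right) M_{i,\mathrm{out}} \\
&\le \sum_{i=1}^{n} d_{i,\mathrm{in}} H_i + c_2(S).
\end{aligned}
$$

Define $D = \sum_{i=1}^{n} d_{i,\mathrm{in}} H_i$. Since $q_i \ge -H_i$,
the same identity also gives $c_1(S) \ge c_2(S) - D$. The above
inequalities indicate that the total costs in the two models differ
by at most an additive constant $D$. Consequently, a solution that
is $c$-competitive in one model is also $c$-competitive (up to
additional additive terms) in the second model.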
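As a sanity check of the argument, the following minimal sketch replays a short, hypothetical migration sequence and compares the two bandwidth-cost models. It relies only on the flow identity $M_{i,\mathrm{in}} = M_{i,\mathrm{out}} + q_i$, which implies $c_1(S) = c_2(S) + \sum_i d_{i,\mathrm{in}} q_i$ and hence $|c_1(S) - c_2(S)| \le \sum_i d_{i,\mathrm{in}} H_i$. All names and numbers are illustrative assumptions; in particular, the sketch reads $H_i$ as the number of servers available in data center $i$.

```python
def replay(w0, capacity, moves):
    """Apply moves (src, dst, amount) to the initial loads and return W_T.

    Assumption: every intermediate load must stay within [0, capacity[i]].
    """
    w = list(w0)
    for src, dst, amount in moves:
        w[src] -= amount
        w[dst] += amount
        assert 0 <= w[src] <= capacity[src]
        assert 0 <= w[dst] <= capacity[dst]
    return w


def bandwidth_costs(d_out, d_in, moves):
    """Return (c1, c2, q) for a list of moves (src, dst, amount).

    c1 charges d_out at the source and d_in at the destination;
    c2 charges d_in + d_out only on the outgoing migration of each center.
    """
    n = len(d_out)
    m_out = [0] * n
    m_in = [0] * n
    for src, dst, amount in moves:
        m_out[src] += amount
        m_in[dst] += amount
    c1 = sum(d_out[i] * m_out[i] + d_in[i] * m_in[i] for i in range(n))
    c2 = sum((d_out[i] + d_in[i]) * m_out[i] for i in range(n))
    q = [m_in[i] - m_out[i] for i in range(n)]
    return c1, c2, q


# Hypothetical instance with three data centers.
H = [4, 3, 5]          # servers per data center (assumed meaning of H_i)
W0 = [4, 0, 0]         # initially active servers
d_out = [2, 1, 3]      # per-unit cost of migrating out
d_in = [1, 2, 1]       # per-unit cost of migrating in
moves = [(0, 1, 3), (1, 2, 2), (2, 0, 1), (0, 2, 2)]

WT = replay(W0, H, moves)
c1, c2, q = bandwidth_costs(d_out, d_in, moves)
gap = sum(d_in[i] * q[i] for i in range(3))      # c1 - c2 by the identity
bound = sum(d_in[i] * H[i] for i in range(3))    # additive constant
```

Here the net flows are q = [-4, 1, 3], the costs are c1 = 26 and c2 = 25, and their difference of 1 is well within the additive bound of 15, regardless of how many intermediate moves the sequence contains.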
