Logistic Regression

FSD Team

Informatics, UII

October 9, 2022

FSD Team (Informatics, UII) Logistic Regression October 9, 2022 1 / 10

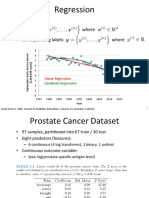

Linear Regression: the procedure

1. Pick an initial line/model $h_\theta$ by randomly choosing the parameters $\theta$.

2. Compute the corresponding cost function, e.g.,

$$J(\theta) = \frac{1}{2} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right)^2.$$

3. Update the line/model $h_\theta$ by changing $\theta$ so that $J(\theta)$ becomes smaller, using, e.g., gradient descent, which reads

$$\theta_j := \theta_j - \alpha \frac{\partial}{\partial \theta_j} J(\theta),$$

where $\alpha$ is the learning rate.

4. Repeat steps 2 and 3 until convergence.
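The four steps above can be sketched in a few lines of Python for a one-feature model $h_\theta(x) = \theta_0 + \theta_1 x$. This is only an illustrative sketch: the function names, the toy data, the learning rate, and the stopping tolerance are assumptions chosen for the example, not values from the slides.

```python
import random


def cost(theta0, theta1, xs, ys):
    """J(theta) = 1/2 * sum_i (h(x_i) - y_i)^2 for h(x) = theta0 + theta1 * x."""
    return 0.5 * sum((theta0 + theta1 * x - y) ** 2 for x, y in zip(xs, ys))


def fit_linear(xs, ys, alpha=0.01, tol=1e-12, max_iters=100_000, seed=0):
    rng = random.Random(seed)
    # Step 1: pick the initial parameters at random.
    theta0, theta1 = rng.uniform(-1, 1), rng.uniform(-1, 1)
    # Step 2: compute the cost of the current model.
    prev_cost = cost(theta0, theta1, xs, ys)
    for _ in range(max_iters):
        # Step 3: gradient descent. For this J, dJ/dtheta_j = sum_i (h(x_i) - y_i) * x_ij.
        errors = [theta0 + theta1 * x - y for x, y in zip(xs, ys)]
        grad0 = sum(errors)
        grad1 = sum(e * x for e, x in zip(errors, xs))
        theta0 -= alpha * grad0
        theta1 -= alpha * grad1
        # Step 4: repeat until the cost stops decreasing noticeably.
        current_cost = cost(theta0, theta1, xs, ys)
        if prev_cost - current_cost < tol:
            break
        prev_cost = current_cost
    return theta0, theta1


# Hypothetical toy data lying exactly on the line y = 1 + 2x.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [3.0, 5.0, 7.0, 9.0, 11.0]
theta0, theta1 = fit_linear(xs, ys)
print(theta0, theta1)  # close to 1 and 2
```

The learning rate matters here: because the cost is an unnormalized sum over the data, a value of $\alpha$ that is too large for the scale of the features makes the updates diverge instead of converge.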

Linear regression: a summary

In more detail

The learning procedure above is typical of most (parametric) models in machine learning and deep learning.

Note that so far we have assumed that the target $y$ in our data set $(x^{(i)}, y^{(i)})$, $i = 1, \dots, m$, is continuous, e.g., house price, height, or temperature.

What if $y$ is discrete, e.g., pass/fail, yes/no, or 1/0? The relationship between $x$ and $y$ is then nonlinear, and the task becomes classification.

Let's look at an example plot.

If y is a discrete variable

[Figure: exam status (0 = fail, 1 = pass) plotted against study hours, with candidate linear fits.]

Do you think the linear model $y = \theta_0 + \theta_1 x$ will fit well?

Let's try one. It looks bad, so try another one.

We see that such a linear model won't fit well, because the relationship between study hours and exam status is nonlinear. We need another model!
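To make the poor fit concrete, the sketch below fits a straight line to made-up pass/fail data and evaluates it at the ends of the range. The data values and function name are hypothetical. For brevity the line is obtained with the closed-form least-squares solution rather than gradient descent; both minimize the same squared-error cost.

```python
def least_squares_line(xs, ys):
    """Closed-form least-squares fit of y = theta0 + theta1 * x."""
    m = len(xs)
    x_mean = sum(xs) / m
    y_mean = sum(ys) / m
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    theta1 = sxy / sxx
    theta0 = y_mean - theta1 * x_mean
    return theta0, theta1


# Hypothetical data: study hours and exam status (0 = fail, 1 = pass).
hours = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
status = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]

theta0, theta1 = least_squares_line(hours, status)
pred_low = theta0 + theta1 * 1    # prediction for 1 study hour
pred_high = theta0 + theta1 * 10  # prediction for 10 study hours
print(pred_low, pred_high)
```

On this data the line predicts a value below 0 for 1 study hour and above 1 for 10 study hours, neither of which is a meaningful exam status.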


If y is a discrete variable

[Figure: exam status (0 = fail, 1 = pass) plotted against study hours.]

We can use the logistic (sigmoid) function, which reads

$$h_\theta(x) = g(\theta^T x) = \frac{1}{1 + e^{-\theta^T x}}.$$

We typically predict "1" if $h_\theta(x) \ge 0.5$.
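A minimal sketch of the sigmoid hypothesis and the 0.5 decision rule follows. The function names and the parameter values in the usage lines are assumptions made for illustration; the input vector is assumed to carry a leading 1 so that $\theta_0$ acts as the intercept.

```python
import math


def sigmoid(z):
    """Logistic function g(z) = 1 / (1 + e^(-z)), written to avoid overflow."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def h(theta, x):
    """Hypothesis h_theta(x) = g(theta^T x) for equal-length lists theta and x."""
    z = sum(t * xi for t, xi in zip(theta, x))
    return sigmoid(z)


def predict(theta, x, threshold=0.5):
    """Predict class 1 when h_theta(x) >= threshold, otherwise class 0."""
    return 1 if h(theta, x) >= threshold else 0


# Hypothetical parameters: decision boundary at 5 study hours.
theta = [-5.0, 1.0]
print(h(theta, [1.0, 2.0]), predict(theta, [1.0, 2.0]))  # 2 hours
print(h(theta, [1.0, 8.0]), predict(theta, [1.0, 8.0]))  # 8 hours
```

Since $g(0) = 0.5$, the rule $h_\theta(x) \ge 0.5$ is the same as $\theta^T x \ge 0$, so the decision boundary is still linear in $x$ even though the hypothesis itself is not.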


Logistic Regression: the procedure

1. Pick an initial model $h_\theta$ by randomly choosing the parameters $\theta$.

2. Compute the corresponding cost function, e.g.,

$$J(\theta) = \sum_{i=1}^{m} \left[ -y^{(i)} \log h_\theta(x^{(i)}) - \left(1 - y^{(i)}\right) \log\left(1 - h_\theta(x^{(i)})\right) \right].$$

3. Update the model $h_\theta$ by changing $\theta$ so that $J(\theta)$ becomes smaller, using, e.g., gradient descent, which reads

$$\theta_j := \theta_j - \alpha \frac{\partial}{\partial \theta_j} J(\theta),$$

where $\alpha$ is the learning rate.

4. Repeat steps 2 and 3 until convergence.
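The procedure above can be sketched for a single feature as follows. The data, function names, learning rate, and iteration count are assumptions for illustration only. The sketch relies on the standard result that, for this cost with the sigmoid hypothesis, $\partial J / \partial \theta_j = \sum_i (h_\theta(x^{(i)}) - y^{(i)}) \, x_j^{(i)}$, and it simplifies step 4 to a fixed number of iterations.

```python
import math
import random


def sigmoid(z):
    """Logistic function g(z) = 1 / (1 + e^(-z)), written to avoid overflow."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def cost(theta0, theta1, xs, ys, eps=1e-12):
    """J(theta) = sum_i [-y_i log h(x_i) - (1 - y_i) log(1 - h(x_i))]."""
    total = 0.0
    for x, y in zip(xs, ys):
        # Clip h away from 0 and 1 so that log() stays finite.
        h = min(max(sigmoid(theta0 + theta1 * x), eps), 1.0 - eps)
        total += -y * math.log(h) - (1 - y) * math.log(1.0 - h)
    return total


def fit_logistic(xs, ys, alpha=0.01, n_iters=20000, seed=0):
    rng = random.Random(seed)
    # Step 1: pick the initial parameters at random.
    theta0, theta1 = rng.uniform(-1, 1), rng.uniform(-1, 1)
    # Step 2: cost of the initial model, kept for comparison.
    initial_cost = cost(theta0, theta1, xs, ys)
    # Step 4 (simplified): repeat the update a fixed number of times.
    for _ in range(n_iters):
        # Step 3: gradient descent with dJ/dtheta_j = sum_i (h(x_i) - y_i) * x_ij.
        errors = [sigmoid(theta0 + theta1 * x) - y for x, y in zip(xs, ys)]
        grad0 = sum(errors)
        grad1 = sum(e * x for e, x in zip(errors, xs))
        theta0 -= alpha * grad0
        theta1 -= alpha * grad1
    return theta0, theta1, initial_cost, cost(theta0, theta1, xs, ys)


# Hypothetical data: study hours and exam status, with some overlap near 5-6 hours.
hours = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
status = [0, 0, 0, 0, 1, 0, 1, 1, 1, 1]

theta0, theta1, initial_cost, final_cost = fit_logistic(hours, status)
pass_prob_2h = sigmoid(theta0 + theta1 * 2)
pass_prob_9h = sigmoid(theta0 + theta1 * 9)
print(initial_cost, final_cost, pass_prob_2h, pass_prob_9h)
```

The toy labels overlap on purpose: with perfectly separable data the cost can always be lowered by scaling $\theta$ up, so plain gradient descent would keep growing the parameters instead of settling.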
