FPT-HandBookData Engineer (11123)

Uploaded by Thien Ly

Available Formats

You might also like

- Modern Data Engineering With Apache Spark (For - .)Document604 pagesModern Data Engineering With Apache Spark (For - .)cblessi chimbyNo ratings yet

- De Mod 1 Get Started With Databricks Data Science and Engineering WorkspaceDocument27 pagesDe Mod 1 Get Started With Databricks Data Science and Engineering WorkspaceJaya BharathiNo ratings yet

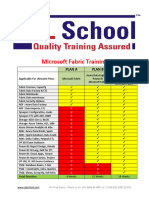

- MicrosoftFabric TrainingDocument16 pagesMicrosoftFabric TrainingAmarnath Reddy KohirNo ratings yet

- Nifi IntegrationDocument15 pagesNifi Integrationcutyre100% (1)

- Mining Your Data Lake For Analytics Insights v3 101420Document16 pagesMining Your Data Lake For Analytics Insights v3 101420Niru DeviNo ratings yet

- Snowflake PoV Brochure V6bgeneric TDDocument16 pagesSnowflake PoV Brochure V6bgeneric TDMohammed ImamuddinNo ratings yet

- Connect Databricks Delta Tables With DBeaverDocument10 pagesConnect Databricks Delta Tables With DBeaverValentinNo ratings yet

- Azure Synapse Course PresentationDocument261 pagesAzure Synapse Course Presentationsaok100% (1)

- Databricks State of Data Report 010524 v9 FinalDocument27 pagesDatabricks State of Data Report 010524 v9 Finalshuklay01No ratings yet

- Cloudera Introduction PDFDocument97 pagesCloudera Introduction PDFSanthosh KumarNo ratings yet

- Azure Cosmos DB: Technical Deep DiveDocument31 pagesAzure Cosmos DB: Technical Deep DivesurendarNo ratings yet

- Mittr-X-Databricks Survey-Report Final 090823Document25 pagesMittr-X-Databricks Survey-Report Final 090823Grisha KarunasNo ratings yet

- Jatin Aggarwal: Data EngineerDocument1 pageJatin Aggarwal: Data EngineerAkashNo ratings yet

- Set Your Data in MotionDocument8 pagesSet Your Data in Motionalamtariq.dsNo ratings yet

- Best Practices For Optimizing Your DBT and Snowflake DeploymentDocument30 pagesBest Practices For Optimizing Your DBT and Snowflake DeploymentGopal KrishanNo ratings yet

- TeradataStudioUserGuide 2041Document350 pagesTeradataStudioUserGuide 2041Manikanteswara PatroNo ratings yet

- Digital Finance - Data Engineering LeadDocument3 pagesDigital Finance - Data Engineering LeadAlejandro ManzanelliNo ratings yet

- Data Modeling 101:: Bringing Data Professionals and Application Developers TogetherDocument46 pagesData Modeling 101:: Bringing Data Professionals and Application Developers TogetherPallavi PillayNo ratings yet

- Introduction To Data EngineeringDocument28 pagesIntroduction To Data Engineeringsibuaya495No ratings yet

- Learning Journey For Machine Learning On Azure - 20210208Document13 pagesLearning Journey For Machine Learning On Azure - 20210208Husnain Ahmad CheemaNo ratings yet

- Microsoft - Practicetest.dp 201.v2020!08!07.by - Julissa.92qDocument126 pagesMicrosoft - Practicetest.dp 201.v2020!08!07.by - Julissa.92qrottyNo ratings yet

- LaunchingYourDataCareer 220502 094417Document119 pagesLaunchingYourDataCareer 220502 094417LawalNo ratings yet

- Python For Non-Programmers FinalDocument218 pagesPython For Non-Programmers FinalVinay KumarNo ratings yet

- Scope, and The Inter-Relationships Among These EntitiesDocument12 pagesScope, and The Inter-Relationships Among These EntitiesSyafiq AhmadNo ratings yet

- Azure InfrastructureDocument1 pageAzure InfrastructureUmeshNo ratings yet

- Databricks: Building and Operating A Big Data Service Based On Apache SparkDocument32 pagesDatabricks: Building and Operating A Big Data Service Based On Apache SparkSaravanan1234567No ratings yet

- Designing Data Integration The ETL Pattern ApproacDocument9 pagesDesigning Data Integration The ETL Pattern ApproacPanji AryasatyaNo ratings yet

- GCP Data Engineer Course ContentDocument7 pagesGCP Data Engineer Course ContentAMIT DHOMNENo ratings yet

- Data ModelingDocument87 pagesData ModelingCrish NagarkarNo ratings yet

- Datapipeline DGDocument337 pagesDatapipeline DGBalakrishna BalaNo ratings yet

- Data Modeler Release NotesDocument81 pagesData Modeler Release NotesRKNo ratings yet

- BIG DATA - 25.09.2020 (19 Files Merged)Document184 pagesBIG DATA - 25.09.2020 (19 Files Merged)Arindam MondalNo ratings yet

- Ey Actuarial Data Management BrochureDocument11 pagesEy Actuarial Data Management BrochureeliNo ratings yet

- A Guide To: Data Science at ScaleDocument20 pagesA Guide To: Data Science at ScaleTushar SethiNo ratings yet

- CCS316 Examination PaperDocument5 pagesCCS316 Examination Paper21UG0613 HANSANI L.A.K.No ratings yet

- Aws Certified Data Engineer SlidesDocument691 pagesAws Certified Data Engineer SlidesMansi RaturiNo ratings yet

- Oracle Big Data SQL Installation GuideDocument139 pagesOracle Big Data SQL Installation Guidepedro.maldonado.rNo ratings yet

- SparkInternals AllDocument90 pagesSparkInternals AllChristopher MilneNo ratings yet

- Databricks Data Engineer Guide Derar AlhusseinDocument63 pagesDatabricks Data Engineer Guide Derar AlhusseinNaweed KhanNo ratings yet

- Etl ArchitectureDocument76 pagesEtl ArchitectureBhaskar ReddyNo ratings yet

- AX 70 AdministratorGuide enDocument120 pagesAX 70 AdministratorGuide enPrateek SinhaNo ratings yet

- Ade Mod 1 Incremental Processing With Spark Structured StreamingDocument73 pagesAde Mod 1 Incremental Processing With Spark Structured StreamingAdventure WorldNo ratings yet

- Singapore DBTDocument137 pagesSingapore DBTsachin choubeNo ratings yet

- 5 Ways To Amplify Power BI With Azure Synapse AnalyticsDocument37 pages5 Ways To Amplify Power BI With Azure Synapse AnalyticsKarthik DarbhaNo ratings yet

- Lockhart ResumeDocument3 pagesLockhart ResumeEleanor Amaranth LockhartNo ratings yet

- C2 Databricks - Sparks - EEDocument9 pagesC2 Databricks - Sparks - EEyedlaraghunathNo ratings yet

- Kanishk ResumeDocument5 pagesKanishk ResumeHarshvardhini MunwarNo ratings yet

- Oreilly Technical Guide Understanding EtlDocument107 pagesOreilly Technical Guide Understanding EtlrafaelpdsNo ratings yet

- Master's Course IN: Microsoft AzureDocument12 pagesMaster's Course IN: Microsoft Azuremystic_guy100% (1)

- 21c SQL Tuning GuideDocument861 pages21c SQL Tuning GuidesuhaasNo ratings yet

- Data Model PatternsDocument25 pagesData Model PatternspetercheNo ratings yet

- Mit Data Science Machine Learning Program BrochureDocument17 pagesMit Data Science Machine Learning Program BrochureSai Aartie SharmaNo ratings yet

- Enterprise Data Catalog User Guide: Informatica 10.2.2Document107 pagesEnterprise Data Catalog User Guide: Informatica 10.2.2sanjay_r_gupta9007No ratings yet

- 7 Snowflake Reference Architectures For Application BuildersDocument13 pages7 Snowflake Reference Architectures For Application Buildersmilton_diaz5278No ratings yet

- Rules of Thumb in Data EngineeringDocument10 pagesRules of Thumb in Data EngineeringNavneet GuptaNo ratings yet

- Asif DotnetDocument1 pageAsif DotnetMD ASIF ALAMNo ratings yet

- Data Engineering & GCP Basic Services 2. Data Storage in GCP 3. Database Offering by GCP 4. Data Processing in GCP 5. ML/AI Offering in GCPDocument3 pagesData Engineering & GCP Basic Services 2. Data Storage in GCP 3. Database Offering by GCP 4. Data Processing in GCP 5. ML/AI Offering in GCPvenkat rajNo ratings yet

FPT-HandBookData Engineer (11123)

FPT-HandBookData Engineer (11123)

Uploaded by

Thien LyOriginal Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

FPT-HandBookData Engineer (11123)

FPT-HandBookData Engineer (11123)

Uploaded by

Thien LyCopyright:

Available Formats

Data Engineer

© Copyright FHM.AVI – Data 1

Table of Contents

Data Engineer

How To Become A Data Engineer?

Data Sources

Data Staging Area

Extract – Transform – Load (ETL)

Data Warehouse

SQL Server & SQL Server Integration Service

Data Engineer

How To Become A Data Engineer?

Who is a Data Engineer?

Every data-driven business needs a framework for its data science and data analytics pipelines. The person responsible for building and maintaining this framework is known as a Data Engineer. Data engineers are responsible for an uninterrupted flow of data between servers and applications.

A data engineer therefore builds, tests, and maintains the data structures and architectures used for data ingestion, processing, and deployment of large-scale, data-intensive applications.

Data engineers work in tandem with data architects, data analysts, and data scientists through data visualization and storytelling. The most crucial role of the data engineer is to design, develop, construct, install, test, and maintain the complete data management and processing systems.

So what exactly do they do? They create the framework that makes data consumable for data scientists and analysts, who then use the data to derive insights. In short, data engineers are the builders of data systems.

Responsibilities of a Data Engineer

Management

The data engineer manages the business's data by creating optimal databases, implementing schema changes, and maintaining data architecture standards across all of the business's databases. The data engineer is also responsible for migrating data between different servers and databases, for example from SQL Server to MySQL, and for defining and implementing data stores based on system and user requirements.

System Design

Data engineers should always build systems that are scalable, robust, and fault-tolerant, so that the system can scale up as the number of data sources grows and can handle massive amounts of data without failure. For instance, imagine that the volume from a data source doubles or triples but the system fails to scale up: building a new system to take in this larger volume would then cost far more time and resources. Big Data Engineers have a role here: they handle the extract, transform, and load (ETL) process, which is a blueprint for how collected raw data is processed and transformed into data ready for analysis.

Analytics

The Data Engineer performs ad-hoc analyses of data stored in the business's databases and writes SQL scripts, stored procedures, functions, and views. The Data Engineer troubleshoots data issues within and across the business and presents solutions to them, and proactively analyzes and evaluates the business's databases in order to identify and recommend improvements and optimizations. The Data Engineer also prepares activity and progress reports on database status and health, which are later presented to senior data engineers for review and evaluation. In addition, the Data Engineer analyzes complex data systems and their elements, dependencies, data flows, and relationships so as to contribute to conceptual, logical, and physical data models.

Other responsibilities include improving foundational data procedures, integrating new data management technologies and software into existing systems, building data collection pipelines, and tuning performance to make the whole system more efficient.

Data Engineers are considered the “librarians” of the data warehouse: they catalog and organize metadata and define the processes by which one files or extracts data from the warehouse. Nowadays, metadata management and tooling have become a vital component of the modern data platform.

Goals of a Data Engineer

Developing Data Pipelines

This skill set involves transferring data from one point to another: in other words, taking data from an operational system and moving it into something that an analyst or data scientist can analyze. This leads to the next goal, managing tables and data sets.

Managing tables and Data Sets

The data transferred through pipelines populates sets of tables that analysts or data scientists then use to extract insights. For example, analyzing a product such as a blog site involves questions like: What are people reading? How are they reading it? How long do they stay on particular articles?

Designing the product

Data Engineers play an important role in understanding what users want to gain from large datasets. During development, they consider the questions users might have while using the product. For example, when developing a dashboard: How are people going to use the dashboard? What other features can be added, and how far-fetched are they?

Conceptual Skills Required to be a Data Engineer

The most in-demand skill in data engineering is the ability to design and build data warehouses, where all the raw data is collected, stored, and retrieved. Without a data warehouse, the tasks that data scientists perform would become impractical: they would be either too expensive or too large to scale. Other required skills are:

1. Data Modelling

Data modelling is an essential part of the data science pipeline. It is the process of converting a sophisticated software system design document into a diagram that is easy to comprehend, using text and symbols to represent the flow of data. Data models are built during the analysis and design phase of a project to ensure that the requirements of a new application are fully understood. These models can also be invoked later in the data lifecycle to rationalize data designs that programmers originally created on an ad hoc basis.

Stages in Data Modelling

• Conceptual: This is the first step in data model processing, which imposes a logical order on data as it exists in relationship to the entities.

• Logical: The logical modeling process attempts to impose order by establishing discrete entities, fundamental values, and relationships into logical structures.

• Physical: This step breaks the data down into the actual tables, clusters, and indexes required for data storage.
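As a rough illustration of the physical stage, the sketch below creates actual tables and an index in an in-memory SQLite database using Python's standard `sqlite3` module. The `product` and `sale` tables, their columns, and the index name are hypothetical and chosen only for this example; they are not taken from the handbook.

```python
import sqlite3


def build_physical_model(conn: sqlite3.Connection) -> None:
    """Create the tables and index of a small, hypothetical sales model."""
    conn.executescript(
        """
        CREATE TABLE product (
            prod_id INTEGER PRIMARY KEY,
            name    TEXT NOT NULL
        );
        CREATE TABLE sale (
            tran_id  INTEGER NOT NULL,
            sub_id   INTEGER NOT NULL,
            prod_id  INTEGER NOT NULL REFERENCES product(prod_id),
            subtotal INTEGER NOT NULL,
            PRIMARY KEY (tran_id, sub_id)
        );
        -- Index to speed up lookups of sales by product
        CREATE INDEX idx_sale_prod ON sale(prod_id);
        """
    )


conn = sqlite3.connect(":memory:")
build_physical_model(conn)

# List the tables that now physically exist in the database
tables = sorted(
    row[0]
    for row in conn.execute("SELECT name FROM sqlite_master WHERE type = 'table'")
)
print(tables)
```

The conceptual and logical stages would normally be captured in diagrams first; only the physical stage maps directly onto statements like these.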

Hierarchical Data Model: This model arranges data in a tree-like structure with one-to-many relationships, and it replaced file-based systems. An example is IBM's Information Management System (IMS), which found extensive use in businesses such as banking.

Relational Data Model: This model replaced the hierarchical model because it reduced program complexity compared with file-based systems and did not require developers to define data paths.

Entity-Relationship Model: Closely related to the relational model, this model uses diagrams and flowcharts to graphically illustrate the elements of the database and make the underlying model easier to understand.

Graph Data Model: This is a more advanced version of the hierarchical model which, together with graph databases, is used to describe complicated relationships within data sets.

2. Automation

Industries use automation to increase productivity, improve quality and consistency, reduce costs, and speed up delivery. It benefits every team member in an organization, including testers, quality analysts, developers, and even business users.

Automation can provide the following benefits:

• Speed: Automation is fast and dramatically reduces team development time.

• Flexibility: Teams can respond to changing business requirements quickly and easily.

• Quality: Automation tools produce tested, high-performance, complete, and readable code.

• Consistency: It is easy for one developer to understand another's code.

In data science, designing a data warehouse and its architecture takes a long time, and semi-automated steps used to result in data warehouses that were limited and inflexible. Data engineers therefore came up with a solution: automating every step in the data warehouse life cycle, thus reducing the effort required to manage it. The need for data engineers to implement data warehouse automation (DWA) tools is growing, as these tools eliminate hand-coding and custom design for planning, designing, building, and documenting decision support infrastructure.

3. Extraction, Transformation, And Load (ETL)

ETL is the procedure of copying data from one or more sources into a destination system that represents the data differently from the source, or in a different context than the source. ETL is often used in data warehousing.

Data extraction pulls data from homogeneous or heterogeneous sources; data transformation cleanses the data and converts it into a proper storage structure for querying and analysis; finally, data loading inserts the data into the final target database, such as an operational data store, a data mart, a data lake, or a data warehouse.

In data science, ETL involves pulling data out of operational systems such as MySQL or Oracle, moving it into a data warehouse such as SQL Server, or a modern platform such as Hadoop or Redshift, and then formatting it in such a way that analysts can use it. ETL also feeds the analytical data layer, where it does more than extract data: it aggregates data and runs metrics and algorithms on it so that the results can easily be fed into dashboards.
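The three ETL steps described above can be sketched in a few lines of Python using only the standard library. This is a minimal illustration, not a production pipeline: the inline CSV text stands in for an operational source, an in-memory SQLite database stands in for the warehouse, and the function names, the `sale_total` table, and the sample rows are assumptions made for this example.

```python
import csv
import io
import sqlite3

# Stand-in for data exported from an operational system (illustrative rows)
RAW_CSV = """TranID,SubID,ProdID,subtotal
10001,1,30015,50000
10001,2,30175,250000
10002,1,20042,175000
"""


def extract(text):
    """Extract: read the raw source rows as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: convert types and aggregate subtotals per transaction."""
    totals = {}
    for row in rows:
        tran_id = int(row["TranID"])
        totals[tran_id] = totals.get(tran_id, 0) + int(row["subtotal"])
    return sorted(totals.items())


def load(conn, totals):
    """Load: insert the aggregated rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sale_total "
        "(tran_id INTEGER PRIMARY KEY, total INTEGER)"
    )
    conn.executemany("INSERT INTO sale_total VALUES (?, ?)", totals)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))
result = conn.execute(
    "SELECT tran_id, total FROM sale_total ORDER BY tran_id"
).fetchall()
print(result)
```

Keeping the three steps as separate functions mirrors the way the stages are usually described and makes each one easy to test on its own.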

4. Product Understanding

Data engineers treat data as their product, so it must be built in such a way that users can use it. If we are building datasets for machine learning engineers or data scientists, we need to understand how they are going to use them, which models they want to build, and whether enough information is being provided at the customer level. This is required because the data engineer is the one who decides the granularity of the data and performs the aggregation.

Becoming a Data Engineer

1. Programming Language: Start by learning a programming language such as Python, which has clear and readable syntax, great versatility, widely available resources, and a very supportive community.

2. Operating System: Mastery of at least one OS, such as Linux or UNIX, is recommended. RHEL is a prevalent OS in industry and is also worth learning.

3. DBMS: Strengthen your DBMS skills and get hands-on experience with at least one relational database, preferably MySQL or Oracle DB. Be thorough with database administration skills such as capacity planning, installation, configuration, database design, monitoring, security, and troubleshooting, including backup and recovery of data.

4. NoSQL: This is the next skill to focus on, as it helps you understand how to handle semi-structured and unstructured data.

5. ETL: Understand how to extract data from various sources using ETL and data warehousing tools, transform and clean the data according to user needs, and then load it into the data warehouse. This is an important skill that data engineers must possess. Data is the fuel of the 21st century, and various data sources and numerous technologies have evolved over the last two decades, the major ones being NoSQL databases and big data frameworks.

6. Big Data Frameworks: Big data engineers need to learn multiple big data frameworks in order to design and build processing systems.

7. Real-time Processing Frameworks: Concentrate on learning frameworks such as Apache Spark, an open-source cluster computing framework that supports real-time processing; for real-time data analytics, Spark is a go-to tool.

8. Cloud: Next in the career path, learning cloud technology is a big plus. A good understanding of cloud technology provides the option of storing significant amounts of data and makes big data more available, scalable, and fault-tolerant.

Data Sources

Overview of Data Sources

With the rapid development of science and technology, the data sources that feed a data warehouse are diverse and rich, and they differ from field to field. For example, the input to a data warehouse about music mainly revolves around song information; for painting, it is image information; for weather, it revolves around locations and weather time series; and for business data, it is transaction information.

Therefore, to simplify the study of the data sources that feed the data warehouse building process, we consider only the commonly used types:

• Flat files with structured data (CSV, JSON, XML/RDF) and flat files with unstructured data (text files, log files)

• Binary data formatted as a table or matrix (XLS/XLSX files, Avro files, music files, video files, photo files)

• Data from databases and data warehouses

• Data from websites

• Data from APIs

Details about each type of data source follow.

Flat Files

A flat file is a very common way to record raw information from original documents; it is both simple and usable. Log files, for example, carry data between steps in a processing sequence.

Flat files are plain-text files, so we can read and edit them with simple tools available on popular operating systems, such as Notepad (Windows) or Emacs and Vim (Linux/Unix or macOS).

The content of a flat file is commonly structured data, with fields separated in delimited, fixed-width, or mixed format.

Sometimes flat files hold unstructured data. In this case, they are processed to extract the necessary data into structured data files, which are convenient to check and use in the next steps.

Flat file with structured data

The delimited format uses column and row delimiters to define columns and rows. Consider an example of structured data held in a table as follows:

| TranID | SaleDate | SubID | ProdID | subtotal |
|---|---|---|---|---|
| 10001 | 20210727 10:25:52 | 1 | 30015 | 50000 |
| 10001 | 20210727 10:25:52 | 2 | 30175 | 250000 |
| 10002 | 20210727 10:25:52 | 1 | 20042 | 175000 |

The flat file is a text file with columns delimited by the Tab character as follows:

The flat file is a text file with columns delimited by the comma character as follows:

The flat file is a text file with columns in fixed-width format as follows:

Fixed-width format uses widths to define columns and rows. This format also includes a padding character that fills each field to its maximum width.

Ragged-right format uses widths to define all columns except the last one, which is delimited by the row delimiter.
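To make the layouts concrete, the following Python sketch renders the sample table in tab-delimited, comma-delimited, fixed-width, and ragged-right form. The column widths and the space padding character are assumptions chosen for this illustration, as are the function names.

```python
ROWS = [
    ["10001", "20210727 10:25:52", "1", "30015", "50000"],
    ["10001", "20210727 10:25:52", "2", "30175", "250000"],
    ["10002", "20210727 10:25:52", "1", "20042", "175000"],
]

# Assumed maximum width of each column (not specified in the text)
WIDTHS = [7, 18, 6, 7, 8]


def delimited(rows, sep):
    """Join fields with a column delimiter and rows with a newline."""
    return "\n".join(sep.join(row) for row in rows)


def fixed_width(rows, widths, pad=" "):
    """Pad every field, including the last, to its column width."""
    return "\n".join(
        "".join(field.ljust(width, pad) for field, width in zip(row, widths))
        for row in rows
    )


def ragged_right(rows, widths, pad=" "):
    """Pad all fields except the last, which simply ends at the row delimiter."""
    return "\n".join(
        "".join(f.ljust(w, pad) for f, w in zip(row[:-1], widths)) + row[-1]
        for row in rows
    )


print(delimited(ROWS, "\t"))
print(delimited(ROWS, ","))
print(fixed_width(ROWS, WIDTHS))
print(ragged_right(ROWS, WIDTHS))
```

In the fixed-width output every line has the same length, whereas in the ragged-right output the line length varies with the last field.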

Flat file with unstructured data

Sometimes we need to read flat files in which the information is scattered irregularly. In that case, we gather the necessary information into a structured data format to facilitate the next steps, relying on patterns in the data to capture and filter the values we need. Suppose we have a file containing the following information:

To implement this idea, we run a script like the following, which collects the data into an output file containing only structured data. This makes it very easy to load the data into a table or a matrix for further processing.

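    awk '
    # Print the CSV header once, before any input is read
    BEGIN { printf("SaleDate,Product,Count\n") }
    {
        # Split the line into the part before ":" and the list of values after it
        n = split($0, record, ":")
        date = record[1]
        values = record[2]
        m = split(values, fields, ",")
        # Drop the first word of the prefix, keeping only the date
        $0 = date
        date = substr(date, length($1) + 2)
        # Walk the fields by index so the output keeps the input order
        for (id = 1; id <= m; id++) {
            $0 = fields[id]
            # The last word is the count; the rest (minus the leading space) is the product name
            prodname = substr(fields[id], 2, length(fields[id]) - length($NF) - 2)
            printf("%s,%s,%s\n", date, prodname, $NF)
        }
    }' [FlatFileInput.txt] > [FlatFileOutput.csv]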

The result is a CSV file, which can then be opened in MS Excel.
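For readers more comfortable with Python, the same idea can be sketched as follows. The input layout is an assumption inferred from how the awk script splits each line (a leading word, a date, a colon, then comma-separated "product count" pairs); the sample lines and function names below are invented for illustration.

```python
import csv
import io

# Hypothetical input lines in the assumed layout
SAMPLE = """Sold 2021-07-27: Blue pen 3, Notebook 12
Sold 2021-07-28: Stapler 1
"""


def parse_line(line):
    """Turn one loosely formatted line into (date, product, count) tuples."""
    head, _, values = line.partition(":")
    # Drop the first word of the prefix, keeping only the date
    date = head.split(maxsplit=1)[1].strip()
    records = []
    for field in values.split(","):
        # The last word is the count; everything before it is the product name
        name, _, count = field.strip().rpartition(" ")
        records.append((date, name, count))
    return records


def to_csv(text):
    """Collect all records into CSV text with a header row."""
    buffer = io.StringIO(newline="")
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["SaleDate", "Product", "Count"])
    for line in text.splitlines():
        if line.strip():
            writer.writerows(parse_line(line))
    return buffer.getvalue()


output = to_csv(SAMPLE)
print(output)
```

Using the `csv` module for the output, rather than joining strings by hand, means product names containing commas or quotes would still be written correctly.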

Common Structured Data Formats of Flat Files

CSV (Comma Separated Values)

Files with the .csv (Comma Separated Values) extension are plain-text files that contain records of data with comma-separated values. Each line in a CSV file is a new record from the set of records contained in the file. Such files are typically generated when data is transferred from one storage system to another. Since most applications can recognize comma-separated records, importing such files into a database is very convenient. Almost all spreadsheet applications, such as Microsoft Excel or OpenOffice Calc, can import CSV without much effort; the imported data is arranged in the cells of a spreadsheet for presentation to the user.

The CSV file format is specified in RFC 4180, under which a file is CSV compliant if:

• Each record is located on a separate line, delimited by a line break (CRLF).

For example:

o h1,h2,h3 CRLF

o v1,v2,v3 CRLF

• The last record in the file may or may not have an ending line break. For

example:

o h1,h2,h3 CRLF

o v1,v2,v3

• There may be an optional header line appearing as the first line of the file with

the same format as normal record lines. This header will contain names

corresponding to the fields in the file and should contain the same number of

fields as the records in the rest of the file (the presence or absence of the header

line should be indicated via the optional “header” parameter of this MIME

type). For example:

o field_name,field_name,field_name CRLF

o h1,h2,h3 CRLF

o v1,v2,v3 CRLF

• Within the header and each record, there may be one or more fields, separated

by commas. Each line should contain the same number of fields throughout

the file. Spaces are considered part of a field and should not be ignored. The

last field in the record must not be followed by a comma. For example:

o h1,h2,h3

• Each field may or may not be enclosed in double quotes (however some

programs, such as Microsoft Excel, do not use double quotes at all). If fields

are not enclosed with double quotes, then double quotes may not appear inside

the fields. For example:

o "h1","h2","h3" CRLF

o v1,v2,v3

• Fields containing line breaks (CRLF), double quotes, and commas should be

enclosed in double-quotes. For example:

o "h1","h CRLF

o 2","h3" CRLF

o v1,v2,v3

• If double-quotes are used to enclose fields, then a double-quote appearing

inside a field must be escaped by preceding it with another double quote. For

example:

o "h1","h""2","h3"

Super CSV escapes double-quotes with a preceding double-quote. Please note that

the sometimes-used convention of escaping double-quotes as \" (instead of "") is not

supported.
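The quoting and escaping rules above can be observed with Python's standard `csv` module, whose default dialect writes CRLF line endings and escapes a double quote by doubling it. This is only an illustrative sketch; the sample rows and the `to_csv` helper are made up for the example.

```python
import csv
import io


def to_csv(rows):
    """Write rows with the default dialect (CRLF line ends, "" escaping)."""
    buffer = io.StringIO(newline="")
    csv.writer(buffer).writerows(rows)
    return buffer.getvalue()


rows = [
    ["h1", 'h"2', "h3"],                         # field containing a double quote
    ["v1", "v2,with comma", "line1\r\nline2"],   # fields with a comma and a line break
]

text = to_csv(rows)
print(repr(text))

# Reading the text back recovers the original field values
parsed = list(csv.reader(io.StringIO(text, newline="")))
print(parsed == rows)
```

Only the fields that need it are quoted: the writer leaves `h1` and `v1` bare and wraps the fields containing a quote, a comma, or a line break in double quotes.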

JSON (JavaScript Object Notation)

JSON, or JavaScript Object Notation, is a lightweight, text-based open standard designed for human-readable data interchange. JSON uses conventions that are familiar to programmers of languages such as C, C++, Java, Python, and Perl.

• JSON stands for JavaScript Object Notation.

• The format was specified by Douglas Crockford.

• It was designed for human-readable data interchange.

• It is derived from the JavaScript scripting language.

• The filename extension is .json.

• JSON Internet Media type is application/json.

• The Uniform Type Identifier is public.json.

Uses of JSON

• It is used when writing JavaScript-based applications, including browser extensions and websites.

• JSON format is used for serializing and transmitting structured data over a network connection.

• It is primarily used to transmit data between a server and web applications.

• Web services and APIs use JSON format to provide public data.

• It can be used with modern programming languages.

Characteristics of JSON

• JSON is easy to read and write.

• It is a lightweight text-based interchange format.

• JSON is language independent.

Simple Example in JSON

The following example shows how to use JSON to store information related to books

based on their topic and edition.

    {
        "book": [
            {
                "id": "01",
                "language": "Java",
                "edition": "third",
                "author": "Herbert Schildt"
            },
            {
                "id": "07",
                "language": "C++",
                "edition": "second",
                "author": "E.Balagurusamy"
            }
        ]
    }

After understanding the above example, let's try another one. Save the code below as json.htm:

    <html>
    <head>
    <title>JSON example</title>
    <script>
        // First object, written in JSON-style object literal syntax
        var object1 = { "language": "Java", "author": "herbert schildt" };
        document.write("<h1>JSON with JavaScript example</h1>");
        document.write("<br>");
        document.write("<h3>Language = " + object1.language + "</h3>");
        document.write("<h3>Author = " + object1.author + "</h3>");

        // Second object
        var object2 = { "language": "C++", "author": "E-Balagurusamy" };
        document.write("<br>");
        document.write("<h3>Language = " + object2.language + "</h3>");
        document.write("<h3>Author = " + object2.author + "</h3>");
        document.write("<hr />");
        document.write(object2.language + " programming language can be studied " +
            "from book written by " + object2.author);
        document.write("<hr />");
    </script>
    </head>
    <body>
    </body>
    </html>

Now open json.htm in IE or any other JavaScript-enabled browser to see the result.
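Because JSON is language independent, the book data shown earlier can be read just as easily outside the browser. The sketch below parses it with Python's standard `json` module; the `authors_by_language` helper is a hypothetical name introduced only for this example.

```python
import json

BOOKS_JSON = """
{
    "book": [
        {"id": "01", "language": "Java", "edition": "third", "author": "Herbert Schildt"},
        {"id": "07", "language": "C++", "edition": "second", "author": "E.Balagurusamy"}
    ]
}
"""


def authors_by_language(text):
    """Parse the JSON text and map each book's language to its author."""
    data = json.loads(text)
    return {book["language"]: book["author"] for book in data["book"]}


authors = authors_by_language(BOOKS_JSON)
print(authors)

# Serializing back to JSON text is the reverse operation
print(json.dumps(authors, indent=2))
```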

XML (Extensible Markup Language)

XML is a specification that has been developed for transmitting information over the Web. XML is based on the Standard Generalized Markup Language (SGML) and is a cross-platform, software- and hardware-independent tool. In addition, it has the following attributes:

• Is a markup language much like HTML.

• Is designed to describe data.

• Can be used for all types of data and graphics.

• Is independent of applications, platforms, or vendors.

• Allows designers to create their own customized tags, enabling the definition,

transmission, validation, and interpretation of data between applications and

between organizations.

XML tags identify the data and are used to store and organize the data, rather than

specifying how to display it like HTML tags, which are used to display the data.

XML is not going to replace HTML in the near future, but it introduces new

possibilities by adopting many successful features of HTML.

There are three important characteristics of XML that make it useful in a variety of

systems and solutions −

• XML is extensible − XML allows you to create your own self-descriptive

tags, or language, that suits your application.

• XML carries the data, does not present it − XML allows you to store the

data irrespective of how it will be presented.

• XML is a public standard − XML was developed by an organization called

the World Wide Web Consortium (W3C) and is available as an open standard.

XML Usage

A short list of XML usage says it all −

• XML can work behind the scene to simplify the creation of HTML documents

for large web sites.

• XML can be used to exchange the information between organizations and

systems.

• XML can be used for offloading and reloading of databases.

• XML can be used to store and arrange the data, which can customize your

data handling needs.

• XML can easily be merged with style sheets to create almost any desired

output.

• Virtually, any type of data can be expressed as an XML document.

What is Markup?

XML is a markup language that defines a set of rules for encoding documents in a

format that is both human-readable and machine-readable. So what exactly is a

markup language? Markup is information added to a document that enhances its

meaning in certain ways, in that it identifies the parts and how they relate to each

other. More specifically, a markup language is a set of symbols that can be placed in

the text of a document to demarcate and label the parts of that document.

The following example shows how XML markup looks when embedded in a piece of text −

<message>

<text>Hello, world!</text>

</message>

This snippet includes the markup symbols, or the tags such as

<message>...</message> and <text>... </text>. The tags <message> and

</message> mark the start and the end of the XML code fragment. The tags <text>

and </text> surround the text Hello, world!
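The way tags demarcate and label parts of a document can be seen by parsing the snippet above. This is a minimal sketch using Python's standard xml.etree.ElementTree module; the variable names are illustrative only.

```python
import xml.etree.ElementTree as ET

# The same markup as in the example above, written on one line
snippet = "<message><text>Hello, world!</text></message>"

# Parse the string into a tree of elements
root = ET.fromstring(snippet)

# The outer tag labels the whole fragment, the inner tag labels the text
text_element = root.find("text")

print(root.tag)           # name of the outer element
print(text_element.tag)   # name of the inner element
print(text_element.text)  # the text surrounded by <text>...</text>
```

The parser recovers both the labels (tag names) and the content they surround, which is what makes the format machine-readable as well as human-readable.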

Why Use XML?

An industry typically uses data exchange methods that are meaningful and specific

to that industry. With the advent of e-commerce, businesses conduct an increasing

number of relationships with a variety of industries and, therefore, must develop

expert knowledge of the various protocols used by those industries for electronic

communication.

The extensibility of XML makes it a very effective tool for standardizing the format

of data interchange among various industries. For example, when message brokers

and workflow engines must coordinate transactions among multiple industries or

departments within an enterprise, they can use XML to combine data from disparate

sources into a format that is understandable by all parties.

Is XML a Programming Language?

A programming language consists of grammar rules and its own vocabulary which

is used to create computer programs. These programs instruct the computer to

perform specific tasks. XML does not qualify to be a programming language as it

does not perform any computation or algorithms. It is usually stored in a simple text

file and is processed by special software that is capable of interpreting XML.

The following sample XML file describes the contents of an address book:

<?xml version="1.0"?>

<address_book>

<person gender="f">

<name>Jane Doe</name>

<address>

<street>123 Main St.</street>

<city>San Francisco</city>

<state>CA</state>

<zip>94117</zip>

</address>

<phone area_code="415">555-1212</phone>

</person>

<person gender="m">

<name>John Smith</name>

<phone area_code="510">555-1234</phone>

<email>johnsmith@somewhere.com</email>

</person>

</address_book>
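As noted above, XML is processed by software that can interpret it. The following sketch reads a shortened copy of the address book with Python's standard xml.etree.ElementTree module. The function name read_people and the output dictionary layout are assumptions made for illustration, and attribute values are quoted because XML requires it.

```python
import xml.etree.ElementTree as ET

# Shortened copy of the address book sample (attribute values must be quoted)
ADDRESS_BOOK = """<?xml version="1.0"?>
<address_book>
  <person gender="f">
    <name>Jane Doe</name>
    <phone area_code="415">555-1212</phone>
  </person>
  <person gender="m">
    <name>John Smith</name>
    <phone area_code="510">555-1234</phone>
    <email>johnsmith@somewhere.com</email>
  </person>
</address_book>"""


def read_people(xml_text):
    """Return one dictionary per <person> element (hypothetical helper)."""
    root = ET.fromstring(xml_text)
    people = []
    for person in root.findall("person"):
        phone = person.find("phone")
        phone_text = None
        if phone is not None:
            # Attributes are read with get(), element content with .text
            phone_text = f"({phone.get('area_code')}) {phone.text}"
        people.append({
            "name": person.findtext("name"),
            "gender": person.get("gender"),
            "phone": phone_text,
            "email": person.findtext("email"),  # None when the element is absent
        })
    return people


people = read_people(ADDRESS_BOOK)
for entry in people:
    print(entry)
```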

How Do You Describe an XML Document?

There are two ways to describe an XML document: XML Schemas and DTDs.

XML Schemas define the basic requirements for the structure of a particular XML

document. A Schema describes the elements and attributes that are valid in an XML

document, and the contexts in which they are valid. In other words, a Schema

specifies which tags are allowed within certain other tags, and which tags and

attributes are optional. Schemas are themselves XML files.

The schema specification is a product of the World Wide Web Consortium (W3C).

For detailed information on XML schemas,

see http://www.w3.org/TR/xmlschema-0/.

The following example shows a schema that describes the preceding address book

sample XML document:

<xsd:schema xmlns:xsd="http://www.w3.org/2001/XMLSchema">
<xsd:element name="address_book" type="bookType"/>
<xsd:complexType name="bookType">
<xsd:sequence>
<xsd:element name="person" type="personType" maxOccurs="unbounded"/>
</xsd:sequence>
</xsd:complexType>
<xsd:complexType name="personType">
<xsd:sequence>
<xsd:element name="name" type="xsd:string"/>
<xsd:element name="address" type="addressType" minOccurs="0"/>
<xsd:element name="phone" type="phoneType" minOccurs="0"/>
<xsd:element name="email" type="xsd:string" minOccurs="0"/>
</xsd:sequence>
<xsd:attribute name="gender" type="xsd:string"/>
</xsd:complexType>
<xsd:complexType name="addressType">
<xsd:sequence>
<xsd:element name="street" type="xsd:string"/>
<xsd:element name="city" type="xsd:string"/>
<xsd:element name="state" type="xsd:string"/>
<xsd:element name="zip" type="xsd:string"/>
</xsd:sequence>
</xsd:complexType>
<xsd:complexType name="phoneType">
<xsd:simpleContent>
<xsd:extension base="xsd:string">
<xsd:attribute name="area_code" type="xsd:string"/>
</xsd:extension>
</xsd:simpleContent>
</xsd:complexType>
</xsd:schema>

You can also describe XML documents using Document Type Definition (DTD)

files, a technology older than XML Schemas. DTDs are not XML files.

The following example shows a DTD that describes the preceding address book

sample XML document:

<!DOCTYPE address_book [

<!ELEMENT address_book (person*)>

<!ELEMENT person (name, address?, phone?, email?)>

<!ELEMENT name (#PCDATA)>

<!ELEMENT address (street, city, state, zip)>

<!ELEMENT phone (#PCDATA)>

<!ELEMENT email (#PCDATA)>

<!ELEMENT street (#PCDATA)>

<!ELEMENT city (#PCDATA)>

<!ELEMENT state (#PCDATA)>

<!ELEMENT zip (#PCDATA)>

<!ATTLIST person gender CDATA #REQUIRED>

<!ATTLIST phone area_code CDATA #REQUIRED>

]>

An XML document can include a Schema or DTD as part of the document itself,

reference an external Schema or DTD, or not include or reference a Schema or DTD

at all. The following excerpt from an XML document shows how to reference an

external DTD called address.dtd:

<?xml version="1.0"?>

<!DOCTYPE address_book SYSTEM "address.dtd">

<address_book>

...

XML documents only need to be accompanied by Schema or DTD if they need to

be validated by a parser or if they contain complex types. An XML document is

considered valid if 1) it has an associated Schema or DTD, and 2) it complies with

the constraints expressed in the associated Schema or DTD. If, however, an XML

document only needs to be well-formed, then the document does not have to be

accompanied by a Schema or DTD. A document is considered well-formed if it

follows all the rules in the W3C Recommendation for XML 1.0. For the full XML

1.0 specification, see http://www.w3.org/XML/.
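The difference between well-formed and valid can be made concrete with a small check. The sketch below only tests well-formedness, using Python's standard xml.etree.ElementTree parser, which does not validate against a Schema or DTD. The helper name is_well_formed is an assumption made for illustration.

```python
import xml.etree.ElementTree as ET


def is_well_formed(xml_text):
    """Return True if the text parses as XML, False otherwise (hypothetical helper)."""
    try:
        ET.fromstring(xml_text)
        return True
    except ET.ParseError:
        return False


# Quoted attribute value and matching tags: well-formed
good = '<phone area_code="415">555-1212</phone>'

# Attribute value without quotes: not well-formed
bad_unquoted = '<phone area_code=415>555-1212</phone>'

# <name> is never closed before </person>: not well-formed
bad_unclosed = '<person><name>Jane Doe</person>'

for sample in (good, bad_unquoted, bad_unclosed):
    print(is_well_formed(sample), sample)
```

Checking validity would additionally require a validating parser and the associated Schema or DTD, which this sketch does not cover.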

YAML (YAML Ain’t Markup Language)

YAML is a human-readable data serialization language that is often used to write configuration files and can be used with any programming language.

YAML is designed for human interaction. It is a superset of JSON, another data serialization language, so it can do everything that JSON can and more. One major difference is that newlines and indentation are significant in YAML, whereas JSON relies on brackets and braces.

The format lends itself to specifying configuration, which is how CI/CD tools such as CircleCI use it.

Basic Components of YAML File

The basic components of YAML are described below −

Conventional Block Format

This block format uses a hyphen followed by a space to begin each new item in a list. Observe the example shown below −

--- # Favorite movies

- Casablanca

- North by Northwest

- The Man Who Wasn't There

Inline Format

Inline format is delimited with comma and space, and the items are enclosed in JSON-style square brackets. Observe the example shown below −

--- # Shopping list

[milk, groceries, eggs, juice, fruits]

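Folded Text

Folded text (a block scalar introduced by >) converts newlines to spaces and removes the leading whitespace. Separately, mappings inside a list can be written inline or in block form, as the example shown below illustrates −

- {name: John Smith, age: 33}

- name: Mary Smith

  age: 27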

The structure which follows all the basic conventions of YAML is shown below −

men: [John Smith, Bill Jones]

women:

- Mary Smith

- Susan Williams

Synopsis of YAML Basic Elements

The synopsis of YAML basic elements is given here:

• Comments in YAML begin with the (#) character.

• Comments must be separated from other tokens by whitespaces.

• Indentation of whitespace is used to denote structure.

• Tabs are not allowed as indentation in YAML files.

• List members are denoted by a leading hyphen (-).

• In inline format, list members are enclosed in square brackets and separated by commas.

• Associative arrays are represented using a colon ( : ) in the format of key-value pairs. In inline format, they are enclosed in curly braces {}.

• Multiple documents within a single stream are separated by 3 hyphens (---).

• Repeated nodes in each file are initially denoted by an ampersand (&) and by

an asterisk (*) mark later.

• YAML requires colons and commas used as separators to be followed by a space before scalar values.

• Nodes can be labelled with a tag, written as an exclamation mark (!) or double exclamation mark (!!) followed by a string which can be expanded into a URI or URL.

XML vs JSON vs YAML vs CSV

Here are some guiding considerations to make decisions when choosing a flat file

format for the data to be stored:

Choose XML

• You have to represent mixed content (tags mixed within text).

• There's already an industry standard XSD to follow.

• You need to transform the data to another XML/HTML format. (XSLT is

great for transformations.)

Choose JSON

• You have to represent data records, and a closer fit to JavaScript is valuable

to your team or your community.

Choose YAML

• You have to represent data records, and you value some additional features

missing from JSON: comments, strings without quotes, order-preserving

maps, and extensible data types.

Choose CSV

• You have to represent data records, and you value ease of import/export

with databases and spreadsheets.
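To make the trade-off for data records concrete, the sketch below writes the same two records as JSON and as CSV using only Python's standard json and csv modules. The records reuse names and phone numbers from the address book sample; the field names are chosen for illustration only.

```python
import csv
import io
import json

# Two flat data records (values taken from the address book sample above)
records = [
    {"name": "Jane Doe", "phone": "555-1212"},
    {"name": "John Smith", "phone": "555-1234"},
]

# JSON keeps field names with every record and supports nesting
json_text = json.dumps(records, indent=2)

# CSV states the field names once in a header row and is strictly tabular
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=["name", "phone"], lineterminator="\n")
writer.writeheader()
writer.writerows(records)
csv_text = buffer.getvalue()

print(json_text)
print(csv_text)

# Both formats round-trip these flat, all-string records
json_back = json.loads(json_text)
csv_back = list(csv.DictReader(io.StringIO(csv_text)))
```

Note that CSV reads every value back as a string, so numeric or nested fields would need extra handling that JSON provides out of the box.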

Flat files are easy to use, but their input/output speed is poor, so consider carefully before using them for tasks that must process data quickly or handle large volumes. Some of the other data sources presented below overcome this disadvantage.

Binary data formatted as a table/matrix

Another common type of data is a binary table. The file types mentioned in this

section are files containing information in binary format and the data inside it is

arranged in standard structures to make them easy to create and use. These files have

great advantages in terms of data exchange speed and capacity.

Some commonly used binary file formats are:

XLS/XLSX files

The XLSX and XLS file extensions are used for Microsoft Excel spreadsheets, part

of the Microsoft Office Suite of software.

XLSX/XLS files are used to store and manage data such as numbers, formulas, text,

and drawing shapes.

XLSX is part of Microsoft Office Open XML specification (also known as OOXML

or OpenXML), and was introduced with Office 2007. XLSX is a zipped, XML-based

file format. Microsoft Excel 2007 and later uses XLSX as the default file format

when creating a new spreadsheet. Support for loading and saving legacy XLS files

is also included.

XLS is the default format used with Office 97-2003. XLS is a Microsoft proprietary

Binary Interchange File Format. Microsoft Excel 97-2003 uses XLS as the default

file format when creating a new document.

The default extension used by this format is: XLSX or XLS.

How to load XLS/XLSX files with Python

Excel files are easiest to read with the Pandas module in Python.

Read Excel column names

We import the Pandas module, including ExcelFile. The method read_excel() reads

the data into a Pandas Data Frame, where the first parameter is the filename and the

second parameter is the sheet.

The list of columns is available as df.columns.

import pandas as pd
from pandas import ExcelWriter
from pandas import ExcelFile

# sheet_name replaces the older sheetname keyword in current pandas versions
df = pd.read_excel('File.xlsx', sheet_name='Sheet1')
print("Column headings:")
print(df.columns)

Using the data frame, we can get all the rows below an entire column as a list. To

get such a list, simply use the column header

print(df['Sepal width'])

Read Excel data

We start with a simple Excel file, a subset of the Iris dataset.

To iterate over the list, we can use a loop:

for i in df.index:
    print(df['Sepal width'][i])

We can save an entire column into a list:

listSepalWidth = df['Sepal width'].tolist()

print(listSepalWidth[0])

We can simply take entire columns from an excel sheet:

import pandas as pd

from pandas import ExcelWriter

from pandas import ExcelFile

df = pd.read_excel('File.xlsx', sheet_name='Sheet1')

sepalWidth = df['Sepal width']

sepalLength = df['Sepal length']

petalLength = df['Petal length']

AVRO files

Avro is an open source project that provides data serialization and data exchange

services for Apache Hadoop. These services can be used together or independently.

Avro facilitates the exchange of big data between programs written in any language.

With the serialization service, programs can efficiently serialize data into files or

into messages. The data storage is compact and efficient. Avro stores both the data

definition and the data together in one message or file.

Avro stores the data definition in JSON format making it easy to read and interpret;

the data itself is stored in binary format making it compact and efficient. Avro files

include markers that can be used to split large data sets into subsets suitable for

Apache MapReduce processing. Some data exchange services use a code generator

to interpret the data definition and produce code to access the data. Avro doesn't

require this step, making it ideal for scripting languages.

A key feature of Avro is robust support for data schemas that change over time —

often called schema evolution. Avro handles schema changes like missing fields,

added fields and changed fields; as a result, old programs can read new data and new

programs can read old data. Avro includes APIs for Java, Python, Ruby, C, C++ and

more. Data stored using Avro can be passed from programs written in different

languages, even from a compiled language like C to a scripting language like Apache

Pig.
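The idea behind schema evolution can be sketched without any Avro library. The function below is a deliberately simplified illustration, not the Avro implementation: it projects an already decoded record onto a reader's field list, filling missing fields from defaults and dropping fields the reader does not know. The function name resolve_record and the email field are assumptions made for this example.

```python
def resolve_record(record, reader_fields):
    """Project a decoded record onto the reader's fields (simplified sketch)."""
    resolved = {}
    for field in reader_fields:
        name = field["name"]
        if name in record:
            # Field present in the written data: keep its value
            resolved[name] = record[name]
        elif "default" in field:
            # Field added later: fall back to the reader's default
            resolved[name] = field["default"]
        else:
            raise ValueError(f"no value and no default for field {name!r}")
    # Fields in the record that the reader does not list are dropped
    return resolved


old_fields = [
    {"name": "name", "type": "string"},
    {"name": "age", "type": "int"},
]
new_fields = old_fields + [
    {"name": "email", "type": ["null", "string"], "default": None},  # hypothetical added field
]

old_record = {"name": "John von Neumann", "age": 53}
new_record = {"name": "Pierre-Simon Laplace", "age": 77, "email": "laplace@example.com"}

# A new program reading old data gets the default for the added field
new_reads_old = resolve_record(old_record, new_fields)

# An old program reading new data ignores the field it does not know
old_reads_new = resolve_record(new_record, old_fields)

print(new_reads_old)
print(old_reads_new)
```

Real Avro resolution also covers type promotion, aliases and unions, which this sketch leaves out.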

Working with Avro Data

Apache Avro is a data serialization framework where the data is serialized in a

compact binary format. Avro specifies that data types be defined in JSON. Avro

format data has an independent schema, also defined in JSON. An Avro schema,

together with its data, is fully self-describing.

Data Type Mapping

Avro supports both primitive and complex data types.

To represent Avro primitive data types in Greenplum Database, map data values to

Greenplum Database columns of the same type.

Avro supports complex data types including arrays, maps, records, enumerations,

and fixed types. Map top-level fields of these complex data types to the Greenplum

Database TEXT type. While Greenplum Database does not natively support these

types, you can create Greenplum Database functions or application code to extract

or further process subcomponents of these complex data types.

The following table summarizes external mapping rules for Avro data.

Avro Data Type                               PXF/Greenplum Data Type
boolean                                      boolean
bytes                                        bytea
double                                       double
float                                        real
int                                          int or smallint
long                                         bigint
string                                       text
Complex type: Array, Map, Record, or Enum    text, with delimiters inserted between collection items, mapped key-value pairs, and record data.
Complex type: Fixed                          bytea
Union                                        Follows the above conventions for primitive or complex data types, depending on the union; supports Null values.

Avro Schemas and Data

Avro schemas are defined using JSON, and composed of the same primitive and

complex types identified in the data type mapping section above. Avro schema files

typically have a .avsc suffix.

Fields in an Avro schema file are defined via an array of objects, each of which is

specified by a name and a type.
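As a small illustration of that structure, the sketch below builds a schema as a Python dictionary, serializes it to the JSON text that would go into a .avsc file, and lists each field's name and type. It uses only the standard json module; the User record and its fields are example values, not a required layout.

```python
import json

# A record schema: "fields" is an array of objects, each with a name and a type
user_schema = {
    "type": "record",
    "name": "User",
    "namespace": "avro.example",
    "fields": [
        {"name": "name", "type": "string"},
        {"name": "age", "type": "int"},
    ],
}

# This JSON text is what a .avsc file would contain
avsc_text = json.dumps(user_schema, indent=2)
print(avsc_text)

# Reading it back and collecting name -> type for every field
loaded = json.loads(avsc_text)
field_types = {field["name"]: field["type"] for field in loaded["fields"]}
print(field_types)
```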

Handling Avro files in Python

This is an example usage of avro-python3 in a Python 3 environment.

# Python 3 with `avro-python3` package available
import copy
import json
import avro.schema
from avro.datafile import DataFileWriter, DataFileReader
from avro.io import DatumWriter, DatumReader

# Note that we combined namespace and name to get "full name"
schema = {
    'name': 'avro.example.User',
    'type': 'record',
    'fields': [
        {'name': 'name', 'type': 'string'},
        {'name': 'age', 'type': 'int'}
    ]
}

# Parse the schema so we can use it to write the data
schema_parsed = avro.schema.Parse(json.dumps(schema))

# Write data to an avro file
with open('users.avro', 'wb') as f:
    writer = DataFileWriter(f, DatumWriter(), schema_parsed)
    writer.append({'name': 'Pierre-Simon Laplace', 'age': 77})
    writer.append({'name': 'John von Neumann', 'age': 53})
    writer.close()

# Read data from an avro file
with open('users.avro', 'rb') as f:
    reader = DataFileReader(f, DatumReader())
    metadata = copy.deepcopy(reader.meta)
    schema_from_file = json.loads(metadata['avro.schema'])
    users = [user for user in reader]
    reader.close()

print(f'Schema that we specified:\n {schema}')
print(f'Schema that we parsed:\n {schema_parsed}')
print(f'Schema from users.avro file:\n {schema_from_file}')
print(f'Users:\n {users}')

# Schema that we specified:

# {'name': 'avro.example.User', 'type': 'record',

# 'fields': [{'name': 'name', 'type': 'string'}, {'name': 'age', 'type': 'int'}]}

# Schema that we parsed:

# {"type": "record", "name": "User", "namespace": "avro.example",

# "fields": [{"type": "string", "name": "name"}, {"type": "int", "name": "age"}]}

# Schema from users.avro file:

# {'type': 'record', 'name': 'User', 'namespace': 'avro.example',

# 'fields': [{'type': 'string', 'name': 'name'}, {'type': 'int', 'name': 'age'}]}

# Users:

# [{'name': 'Pierre-Simon Laplace', 'age': 77}, {'name': 'John von Neumann', 'age': 53}]

Issue with name, namespace, and full name

An interesting thing to note is what happens with the name and namespace fields.

The schema we specified has the full name of the schema that has both

name and namespace combined, i.e., 'name': 'avro.example.User'. However, after

parsing with avro.schema.Parse(), the name and namespace are separated into

individual fields. Further, when we read back the schema from the users.avro file,

we also get the name and namespace separated into individual fields.

Avro specification, for some reason, uses the name field for both the full name and

the partial name. In other words, the name field can either contain the full name or

only the partial name. Ideally, Avro specification should have kept partial_name,

namespace, and full_name as separate fields.

This behind-the-scene separation and in-place modification may cause unexpected

errors if your code depends on the exact value of name. One common use case is

when you’re handling lots of different schemas and you want to

identify/index/search by the schema name.

A best practice to guard against possible name errors is to always parse a dict schema into an avro.schema.RecordSchema using avro.schema.Parse(). This will generate the namespace, fullname, and simple_name (partial name), which you can then use with peace of mind.

print(type(schema_parsed))

# <class 'avro.schema.RecordSchema'>

print(schema_parsed.avro_name.fullname)

# avro.example.User

print(schema_parsed.avro_name.simple_name)

# User

print(schema_parsed.avro_name.namespace)

# avro.example
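The separation of a full name into namespace and simple name can be mimicked in a few lines of plain Python, which is handy when indexing schemas by name without parsing them. This is a sketch of the naming rule only (a dotted name is treated as a full name and a separately given namespace is then ignored); the function split_full_name is a hypothetical helper, not part of any Avro package.

```python
def split_full_name(name, namespace=""):
    """Return (namespace, simple_name, full_name) for a schema name (hypothetical helper)."""
    if "." in name:
        # A dotted name is already a full name; split at the last dot
        namespace, _, simple_name = name.rpartition(".")
    else:
        simple_name = name
    full_name = f"{namespace}.{simple_name}" if namespace else simple_name
    return namespace, simple_name, full_name


# Full name given in the name field, as in the schema dict above
print(split_full_name("avro.example.User"))

# Partial name with a separate namespace yields the same result
print(split_full_name("User", "avro.example"))

# Partial name with no namespace at all
print(split_full_name("User"))
```

Normalizing every schema name through one such function before using it as a lookup key avoids mismatches between the two spellings.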

This problem of name and namespace deepens when we use a third-party package called fastavro.

Avro <> DataFrame

As we have seen above, the Avro format simply requires a schema and a list of records. We don’t need a dataframe to handle Avro files. However, we can write a pandas dataframe into an Avro file or read an Avro file into a pandas dataframe. To begin with, we can always represent a dataframe as a list of records and vice versa:

• List of records – pandas.DataFrame.from_records() –> Dataframe

• List of records <– pandas.DataFrame.to_dict(orient='records') – Dataframe

Using the two functions above in conjunction with avro-python3 or fastavro, we can read/write dataframes as Avro. The only additional work we would need to do is to inter-convert between pandas data types and Avro schema types ourselves.

An alternative solution is to use a third-party package called pandavro, which does

some of this inter-conversion for us.

import copy

import json

import pandas as pd

import pandavro as pdx

from avro.datafile import DataFileReader

from avro.io import DatumReader

# Data to be saved

users = [{'name': 'Pierre-Simon Laplace', 'age': 77},

{'name': 'John von Neumann', 'age': 53}]

users_df = pd.DataFrame.from_records(users)

print(users_df)

# Save the data without any schema

pdx.to_avro('users.avro', users_df)

# Read the data back

users_df_redux = pdx.from_avro('users.avro')

print(type(users_df_redux))

# <class 'pandas.core.frame.DataFrame'>

# Check the schema for "users.avro"

with open('users.avro', 'rb') as f:
    reader = DataFileReader(f, DatumReader())
    metadata = copy.deepcopy(reader.meta)
    schema_from_file = json.loads(metadata['avro.schema'])
    reader.close()

print(schema_from_file)

# {'type': 'record', 'name': 'Root',

# 'fields': [{'name': 'name', 'type': ['null', 'string']},

# {'name': 'age', 'type': ['null', 'long']}]}

In the above example, we didn’t specify a schema ourselves and pandavro assigned

the name = Root to the schema. We can also provide a schema dict to

pandavro.to_avro() function, which will preserve the name and namespace

faithfully.

Avro with PySpark

Using Avro with PySpark is fraught with a sequence of issues. Let’s see the common issues step by step.

We will use Scala 2.11, Spark 2.4.4, and Apache Spark’s spark-avro 2.4.4 within a pyspark shell.

$ $SPARK_INSTALLATION/bin/pyspark --packages org.apache.spark:spark-avro_2.11:2.4.4

Within the pyspark shell, we can run the following code to write and read Avro.

# Data to store

users = [{'name': 'Pierre-Simon Laplace', 'age': 77},

{'name': 'John von Neumann', 'age': 53}]

# Create a pyspark dataframe

users_df = spark.createDataFrame(users, 'name STRING, age INT')

# Write to a folder named users-folder

users_df.write.format('avro').mode("overwrite").save('users-folder')

# Read the data back

users_df_redux = spark.read.format('avro').load('./users-folder')

Image, Video & Audio files

There is another rich vein of data available, however, in the form of multi-media.

This collection of ‘binary based’ data includes images, videos, audio.

- An image, digital image, or still image is a binary representation of visual

information, such as drawings, pictures, graphs, logos, or individual video

frames.

- A video is a sequence of images (called frames) captured and eventually

displayed at a given frequency. However, by stopping at a specific frame of

the sequence, a single video frame, i.e. an image, is obtained.

- Any digital information with speech or music stored on and played through a

computer is known as an audio file or sound file.

Digital image (video) structure:

In the digital world, an image is represented as a two-dimensional function f(x,y), where x and y are spatial coordinates, and the value of f at any pair of coordinates (x,y) is called the intensity of the image at that point. For a grayscale image, the intensity is given by just one value (one channel). For color images, the intensity is a 3D vector (three channels), usually distributed in the order RGB.

There are three main types of images:

• Intensity image is a data matrix whose values have been scaled to represent intensities. When the elements of an intensity image are of class uint8 or class uint16, they have integer values in the range [0,255] and [0,65535], respectively. If the image is of class float32, the values are single-precision floating-point numbers. They are usually scaled in the range [0,1], although it is not rare to use the scale [0,255] too.

• Binary image is a black and white image. Each pixel has one logical value, 0 or 1.

• Color image is like an intensity image but with three channels, i.e. each pixel has three intensity values (RGB) instead of one.

The result of sampling and quantization is a matrix of real numbers. The size of the image is the number of rows by the number of columns, M×N. The indexation of the image in Python follows the usual convention:

When performing mathematical transformations of images, we often need the image to be of double (floating-point) type. But when reading and writing, we save space by using an integer encoding.
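The matrix view of an image and the switch between integer and floating-point intensities can be sketched with plain nested lists. The tiny 2×3 grayscale image below is made up for illustration, and the zero-based [row][column] indexing shown is the ordinary Python list convention; array libraries are left out to keep the example self-contained.

```python
# A made-up 2x3 grayscale image with uint8-style intensities in [0, 255]
image_u8 = [
    [0, 64, 128],
    [192, 255, 32],
]

# Size is rows by columns (M x N)
rows, cols = len(image_u8), len(image_u8[0])

# Zero-based indexing: first index is the row, second is the column
pixel = image_u8[1][2]

# Convert to floating point in [0, 1] before doing arithmetic on intensities
image_f = [[value / 255 for value in row] for row in image_u8]

# Convert back to integers in [0, 255] for compact storage
restored = [[round(value * 255) for value in row] for row in image_f]

print(rows, cols)
print(pixel)
print(image_f[1][1])
print(restored == image_u8)
```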

Working with Images in Python

(Link: https://www.geeksforgeeks.org/working-images-python/)

PIL is the Python Imaging Library which provides the python interpreter with image

editing capabilities. It was developed by Fredrik Lundh and several other

contributors. Pillow is the friendly PIL fork and an easy to use library developed by

Alex Clark and other contributors. We’ll be working with Pillow.

Installation:

• Linux: On Linux terminal type the following:

pip install Pillow

Installing pip via terminal:

sudo apt-get update

sudo apt-get install python-pip

• Windows: Download the appropriate Pillow package according to your

python version. Make sure to download according to the python version you

have.

We’ll be working with the Image module here, which provides a class of the same name and a lot of functions to work on our images. To import the Image module, our code should begin with the following line:

from PIL import Image

Operations with Images:

• Open a particular image from a path:

# img = Image.open(path)
# On successful execution of this statement,
# an object of Image type is returned and stored in the img variable.
try:
    img = Image.open(path)
except IOError:
    pass
# Use the above statement within a try block, as it can
# raise an IOError if the file cannot be found
# or the image cannot be opened.

• Retrieve size of image: The instances of the Image class have many attributes; one of the most useful is size.

from PIL import Image

filename = "image.png"

with Image.open(filename) as image:
    width, height = image.size
# Image.size gives a 2-tuple from which the width and height can be obtained

• Some other attributes are: Image.width, Image.height, Image.format,

Image.info etc.

• Save changes in image: To save any changes that you have made to the

image file, we need to give path as well as image format.

img.save(path, format)

# format is optional, if no format is specified,

#it is determined from the filename extension

• Rotating an Image: The image rotation needs angle as parameter to get the

image rotated.

from PIL import Image

def main():
    try:
        # Relative path
        img = Image.open("picture.jpg")
        # Angle given
        img = img.rotate(180)
        # Saved in the same relative location
        img.save("rotated_picture.jpg")
    except IOError:
        pass

if __name__ == "__main__":
    main()

Digital audio structure

(Link: https://www.kdnuggets.com/2020/02/audio-data-analysis-deep-learning-python-part-1.html)

The sound excerpts are digital audio files. Sound waves are digitized by sampling them at discrete intervals; the number of samples per second is known as the sampling rate (typically 44.1 kHz for CD-quality audio, meaning samples are taken 44,100 times per second).

Each sample is the amplitude of the wave at a particular point in time. The bit depth determines how detailed each sample is, also known as the dynamic range of the signal (typically 16 bit, which means a sample can take one of 65,536 amplitude values).
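Sampling rate and bit depth can be made tangible by generating a short tone and storing it as 16-bit audio. The sketch below uses only Python's standard math, struct, wave and io modules and writes to an in-memory buffer rather than a file. The 440 Hz tone and half-second duration are arbitrary values chosen for illustration.

```python
import io
import math
import struct
import wave

SAMPLE_RATE = 44100   # samples per second (CD quality)
BIT_DEPTH = 16        # bits per sample
DURATION_S = 0.5      # arbitrary clip length in seconds
FREQ_HZ = 440.0       # arbitrary tone frequency

levels = 2 ** BIT_DEPTH                    # number of distinct amplitude values
max_amp = 2 ** (BIT_DEPTH - 1) - 1         # largest signed 16-bit amplitude
n_samples = int(SAMPLE_RATE * DURATION_S)  # how many samples the clip needs

# Sample a sine wave at discrete time steps n / SAMPLE_RATE
samples = [
    int(max_amp * math.sin(2 * math.pi * FREQ_HZ * n / SAMPLE_RATE))
    for n in range(n_samples)
]

# Write mono 16-bit PCM into an in-memory WAV container
buffer = io.BytesIO()
with wave.open(buffer, "wb") as wav:
    wav.setnchannels(1)
    wav.setsampwidth(BIT_DEPTH // 8)
    wav.setframerate(SAMPLE_RATE)
    wav.writeframes(struct.pack(f"<{n_samples}h", *samples))

# Read the header back to confirm what was stored
buffer.seek(0)
with wave.open(buffer, "rb") as wav:
    read_rate = wav.getframerate()
    read_frames = wav.getnframes()

print(levels, n_samples, read_rate, read_frames)
```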

Handle audio file by Python:

Python has some great libraries for audio processing, such as Librosa and PyAudio. There are also built-in modules for some basic audio functionality.

We will mainly use two libraries for audio acquisition and playback:

1. Librosa

It is a Python module to analyze audio signals in general but geared more towards

music. It includes the nuts and bolts to build a MIR(Music information retrieval)

system. It has been very well documented along with a lot of examples and

tutorials.

Installation:

pip install librosa

or

conda install -c conda-forge librosa

To fuel more audio-decoding power, you can install ffmpeg which ships with many

audio decoders.

2. IPython.display.Audio

IPython.display.Audio lets you play audio directly in a jupyter notebook.

A sample audio file was shared through Vocaroo, an online voice recorder. Let us now load the file in a Jupyter console.

Loading an audio file:

import librosa

audio_data = '/../../gruesome.wav'

x , sr = librosa.load(audio_data)

print(type(x), type(sr))
# <class 'numpy.ndarray'> <class 'int'>
print(x.shape, sr)
# (94316,) 22050

This returns an audio time series as a numpy array with a default sampling rate (sr) of 22.05 kHz, mono. We can change this behavior by resampling at 44.1 kHz.

librosa.load(audio_data, sr=44100)

or to disable resampling.

librosa.load(audio_data, sr=None)

The sample rate is the number of samples of audio carried per second, measured in

Hz or kHz.
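Because the sample rate is a count of samples per second, the duration of a clip follows directly from the array length. The sketch below applies this to the shape and rate printed in the loading example (94316 samples at 22050 Hz) using plain arithmetic; the two helper names are assumptions made for illustration.

```python
def duration_seconds(n_samples, sample_rate):
    """Length of a clip in seconds, given its sample count and sample rate."""
    return n_samples / sample_rate


def samples_for(duration_s, sample_rate):
    """Approximate number of samples needed to hold a clip of this duration."""
    return round(duration_s * sample_rate)


# Values printed by the loading example above
n_samples, sr = 94316, 22050

clip_length = duration_seconds(n_samples, sr)
print(f"{clip_length:.3f} seconds")

# The same clip at 44.1 kHz needs roughly twice as many samples
samples_at_44k = samples_for(clip_length, 44100)
print(samples_at_44k)
```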

Data from SQL database and data warehouse

Data from Data Warehouse:

A data warehouse (DW) is a digital storage system that connects and harmonizes

large amounts of data from many different sources. Its purpose is to feed business

intelligence (BI), reporting, and analytics, and support regulatory requirements – so

companies can turn their data into insight and make smart, data-driven decisions.

Data warehouses store current and historical data in one place and act as the single

source of truth for an organization.

Most data-warehousing projects combine data from different source systems. Each

separate system may also use a different data organization and/or format. Common

data-source formats include relational databases, XML, JSON and flat files, but may

also include non-relational database structures such as Information Management

System (IMS) or other data structures such as Virtual Storage Access Method

(VSAM) or Indexed Sequential Access Method (ISAM), or even formats fetched

from outside sources by means such as web spidering or screen-scraping.

There are two scenarios when getting data from a DW:

- Extract data directly from DW with valid credentials to access databases.

- Extract data indirectly, with limits (data size, extraction speed, permissions), via given APIs. Please refer to the part Web Services: Data from API for more information.

* API-specific challenges: While it may be possible to extract data from a database

using SQL, the extraction process for SaaS products relies on each platform’s

application programming interface (API). Working with APIs can be challenging:

• APIs are different for every application.

• Many APIs are not well documented. Even APIs from reputable, developer-

friendly companies sometimes have poor documentation.

• APIs change over time. For example, Facebook’s “move fast and break

things” approach means the company frequently updates its reporting APIs –

and Facebook doesn’t always notify API users in advance.

* The steps to follow when extracting data from a DW:

Step 1: Purpose of extracting data:

Determine the objective of the data, for example: which process you want to analyze.

Indicate the scope of the process (where it starts and where it ends).

Step 2: Selection Method

Determine whether you can extract all activities in a certain timeframe or whether

you will select cases based on a particular start or end activity. If you select cases

based on a start or end activity, write down which start or end activity will be used.

Step 3: Databases, Tables and Fields

Based on the data description of the DW, define the databases, tables and fields that relate to the data you want to extract.

Step 4: Timeframe

Read the chapter on How Much Data Do You Need? and determine the timeframe

based on your data selection method. Write down the start and the end date of the

timeframe you want to extract the data for.

Step 5: Required Format

When you ask someone to extract data for you, they will ask about the required

format. With “format” they typically mean everything, including the content, the

timeframe, etc.—not just the actual file format.
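
Steps 3 to 5 can be sketched in a few lines of Python. This is only an illustration under stated assumptions: an in-memory SQLite database stands in for the warehouse, and the table order_events, its columns, the dates and the CSV output format are all hypothetical.

```python
import csv
import io
import sqlite3

# Stand-in for the warehouse: an in-memory SQLite database with one hypothetical table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE order_events (case_id TEXT, activity TEXT, event_time TEXT)")
conn.executemany(
    "INSERT INTO order_events VALUES (?, ?, ?)",
    [
        ("A1", "Order created", "2023-01-05"),
        ("A1", "Order shipped", "2023-01-09"),
        ("B2", "Order created", "2023-03-02"),
    ],
)


def extract(connection, start_date, end_date):
    """Steps 3 and 4: name the fields and restrict the rows to the chosen timeframe."""
    query = (
        "SELECT case_id, activity, event_time FROM order_events "
        "WHERE event_time >= ? AND event_time < ? ORDER BY event_time"
    )
    return connection.execute(query, (start_date, end_date)).fetchall()


def to_csv(rows):
    """Step 5: deliver the rows in the agreed file format (CSV in this sketch)."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["case_id", "activity", "event_time"])
    writer.writerows(rows)
    return buffer.getvalue()


rows = extract(conn, "2023-01-01", "2023-02-01")
print(to_csv(rows))
```

Passing the dates as query parameters keeps the timeframe separate from the SQL text, so the same extraction can be rerun for another period without editing the query.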

* Query data from Data Warehouse: see the section Data from SQL database below.

Data from SQL database:

A database is a systematic collection of data. Databases support electronic storage and manipulation of data, and make data management easier.

Database files store data in a structured format, organized into tables and fields.

Individual entries within a database are called records. Databases are commonly

used for storing data referenced by dynamic websites.

Structure of a database:

Microsoft SQL Server is a relational database management system (RDBMS) that,

at its fundamental level, stores the data in tables. The tables are the database objects

that behave as containers for the data, in which the data will be logically organized

in rows and columns format. Each row is considered as an entity that is described by

the columns that hold the attributes of the entity. For example, the customers table

contains one row for each customer, and each customer is described by the table

columns that hold the customer information, such as the CustomerName and

CustomerAddress. Table rows have no predefined order, so to display the data in a

specific order you must state that order explicitly in the query. Tables can also be

used as a security boundary, where

database users can be granted permissions at the table level.
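
The point about row order can be illustrated with a small sketch. It uses Python's built-in sqlite3 module as a stand-in for SQL Server, so only the ORDER BY idea carries over; the sample customers are invented.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (CustomerName TEXT, CustomerAddress TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?)",
    [("Minh", "Hanoi"), ("An", "Da Nang"), ("Lan", "Hue")],
)

# Without ORDER BY the engine is free to return rows in any order.
# ORDER BY states the required order explicitly.
names = [
    row[0]
    for row in conn.execute("SELECT CustomerName FROM customers ORDER BY CustomerName")
]
print(names)  # ['An', 'Lan', 'Minh']
```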

Table basics

SQL Server tables are contained within database object containers that are called

Schemas. The schema also works as a security boundary, where you can limit

database user permissions to be on a specific schema level only. You can imagine

the schema as a folder that contains a list of files. You can create up to 2,147,483,647

tables in a database, with up to 1024 columns in each table. When you design a

database table, the properties that are assigned to the table and the columns within

the table will control the allowed data types and data ranges that the table accepts.

Proper table design will make it easier and faster to store data into and retrieve data

from the table.

Special table types

In addition to the basic user-defined table, SQL Server lets us work with several special types of tables. The first is the temporary table, which is stored in the tempdb system database. There are two kinds of temporary table: a local temporary table, whose name starts with a single number sign (#) and which can be accessed only by the connection that created it, and a global temporary table, whose name starts with two number signs (##) and which can be accessed by any connection once created.

A wide table is a table that uses sparse columns to optimize storage of NULL values, reducing the space consumed by the table and raising the number of columns allowed in that table to 30,000.

System tables are a special type of table in which the SQL Server engine stores information about the instance configuration and the database objects. This information can be queried through the system views.

Partitioned tables are tables in which the data is divided horizontally into separate units, in the same filegroup or in different filegroups, based on a specific key, to improve data retrieval performance.

Database Query

A query is a way of requesting information from the database. A database query can

be either a select query or an action query. A select query is a query for retrieving

data, while an action query requests additional actions to be performed on the data,

like deletion, insertion, and updating.

For example, a manager can perform a query to select the employees who were hired

5 months ago. The results could be the basis for creating performance evaluations.
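
The difference between a select query and an action query can be shown with a short sketch. It assumes a hypothetical employees table in an in-memory SQLite database, a reference date of 2023-07-01, and reads "hired 5 months ago" as "hired at least five months before that date".

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE employees (name TEXT, hire_date TEXT, needs_review INTEGER DEFAULT 0)"
)
conn.executemany(
    "INSERT INTO employees (name, hire_date) VALUES (?, ?)",
    [("Hoa", "2023-01-10"), ("Binh", "2023-06-20"), ("Chi", "2022-11-03")],
)

# Assumed reference date 2023-07-01; five months earlier is 2023-02-01.
cutoff = "2023-02-01"

# Select query: only reads data.
hired_before = conn.execute(
    "SELECT name FROM employees WHERE hire_date <= ? ORDER BY name", (cutoff,)
).fetchall()

# Action query: changes data (here, flags the same employees for a review).
cursor = conn.execute(
    "UPDATE employees SET needs_review = 1 WHERE hire_date <= ?", (cutoff,)
)
conn.commit()

print(hired_before)     # rows returned by the select query
print(cursor.rowcount)  # number of rows changed by the action query
```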

Query Language

Many database systems expect you to make requests for information through a

stylised query written in a specific query language. This is the most complicated

method because it compels you to learn a specific language, but it is also the most

flexible.

Query languages are used to create queries in a database.

Examples of Query Languages

Structured Query Language (SQL) is the standard query language for relational databases. Database products with their own SQL dialects include:

• MySQL

• Oracle SQL

• NuoDB

Query languages for other kinds of data stores, such as NoSQL databases, graph databases, data mining models and XML data, include the following:

• Cassandra Query Language (CQL)

• Neo4j’s Cypher

• Data Mining Extensions (DMX)

• XQuery

Power of Queries

A database can reveal intricate patterns and activity, but that power is only available through queries. A complex database contains many tables holding large amounts of data. A query lets you filter that data into a single result table, so you can analyse it much more easily.

Queries can also run calculations on your data, summarise it, and even automate data management tasks. You can also review updates to your data before committing them to the database, which adds further flexibility.

Queries can perform many different tasks. Mainly, queries are used to search through data by filtering on specific criteria. Other query types include append, crosstab, delete, make-table, parameter, totals, and update queries, each of which performs a specific function. For example, a parameter query prompts the user to enter a field value and then uses that value as the filter criterion. In comparison, totals queries let users organise and summarise data.
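
A parameter query can be sketched as a function whose argument is bound into the SQL as the criterion. The sales table, its rows and the helper sales_for_region are hypothetical, and SQLite is used only because it ships with Python.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("North", 120.0), ("South", 80.0), ("North", 30.0)],
)


def sales_for_region(connection, region):
    """Parameter query: the caller supplies the field value used as the criterion."""
    return connection.execute(
        "SELECT amount FROM sales WHERE region = ? ORDER BY amount", (region,)
    ).fetchall()


north = sales_for_region(conn, "North")
print(north)  # [(30.0,), (120.0,)]
```

Binding the value with a placeholder, rather than pasting it into the SQL string, also protects the query against SQL injection.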

In a relational database, which is composed of records or rows of data, the SQL

SELECT statement lets the user retrieve data from the database and deliver it to an

application. The result is returned as a table, referred to as the result set. A SELECT

statement is built from clauses such as FROM, WHERE and ORDER BY. The SELECT query can

also group and combine data, which could be useful for creating analyses or

summaries.
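
As a rough illustration of grouping and combining data, the sketch below counts and sums paid orders per customer. The orders table and its rows are invented, and SQLite stands in for whichever relational database is actually used.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer TEXT, amount REAL, status TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        ("An", 50.0, "paid"),
        ("An", 25.0, "paid"),
        ("Lan", 40.0, "paid"),
        ("Lan", 10.0, "cancelled"),
    ],
)

# WHERE filters rows, GROUP BY forms one group per customer,
# and the aggregates COUNT and SUM summarise each group.
summary = conn.execute(
    "SELECT customer, COUNT(*) AS n_orders, SUM(amount) AS total "
    "FROM orders WHERE status = 'paid' "
    "GROUP BY customer ORDER BY total DESC"
).fetchall()
print(summary)  # [('An', 2, 75.0), ('Lan', 1, 40.0)]
```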

Create table in database:

The SQL CREATE TABLE statement is used to create a new table.

Syntax

The basic syntax of the CREATE TABLE statement is as follows −

CREATE TABLE table_name(
   column1 datatype,
   column2 datatype,
   column3 datatype,
   .....
   columnN datatype,
   PRIMARY KEY( one or more columns )
);

CREATE TABLE is the keyword telling the database system what you want to do.

In this case, you want to create a new table. The unique name or identifier for the

table follows the CREATE TABLE statement.

Then, in parentheses, comes the list defining each column in the table and its data type. The syntax becomes clearer with the following example.

A copy of an existing table can be created using a combination of the CREATE

TABLE statement and the SELECT statement.
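
Copying a table this way might look like the sketch below. It runs against SQLite through Python's sqlite3 module; the products table is hypothetical. In SQLite the copy receives the selected columns and rows but not constraints such as the primary key, and SQL Server uses SELECT ... INTO new_table FROM ... for the same purpose.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE products ("
    "id INTEGER NOT NULL, name TEXT NOT NULL, price REAL, PRIMARY KEY (id))"
)
conn.executemany(
    "INSERT INTO products VALUES (?, ?, ?)",
    [(1, "Pen", 1.5), (2, "Book", 12.0)],
)

# CREATE TABLE ... AS SELECT builds a new table from the result of the query.
conn.execute("CREATE TABLE products_copy AS SELECT id, name, price FROM products")

copied = conn.execute("SELECT id, name, price FROM products_copy ORDER BY id").fetchall()
print(copied)  # [(1, 'Pen', 1.5), (2, 'Book', 12.0)]
```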

Example

The following code block is an example that creates a CUSTOMERS table with ID as the primary key. The NOT NULL constraints indicate that these fields cannot be NULL when records are created in this table −
