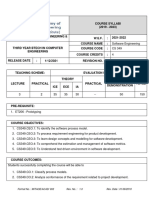

ISSN (Online) 2278-1021

IJARCCE ISSN (Print) 2319-5940

International Journal of Advanced Research in Computer and Communication Engineering

Vol. 8, Issue 12, December 2019

Review on Text Generation Models

Alma Nasrin P M¹, Krishnendu K J², Pooja Jeevan³, Sneha George⁴

Student, Dept. of Computer Science and Engineering, Universal Engineering College, Thrissur, Kerala, India¹,²,³

Assistant Professor, Computer Science and Engineering, Universal Engineering College, Thrissur, Kerala, India⁴

Abstract: Generating natural language text from given data has become common. A text generation system must determine which information to include in its output, and automatic text generation has a wide variety of applications. Researchers report that 56.7 million students are studying in private colleges and university colleges across 192 countries, and the number of students worldwide is increasing. Every student should have proper textbooks, but textbook production is limited and does not meet students' requirements. Textbooks that follow the syllabus make learning easier. Here we attempt to develop textbook generator software that produces a PDF textbook matched to a syllabus. The syllabus of a subject is given as input to the system, which generates a text document by collecting information from trusted educational websites using web crawling. The generated text document is then summarized to obtain a precise and crisp summary containing the required data.

Keywords: Educational Websites, Summary, Text Book Generator, Web Crawling

I. INTRODUCTION

The generation of natural language text from given data has become common, and its use is increasingly ubiquitous. The input to a natural language generation system can take many forms, such as tables or datasets of records. Text generation systems have a wide variety of applications, such as generating product descriptions, weather forecasts and cooking recipes. In this paper we study automatic text generation with a view to applying it in education. The number of students studying in private and government colleges under a university is very large, and many of them do not get proper study material that follows their syllabus. Producing textbooks in large numbers therefore plays a crucial role in the current scenario, but the number of textbooks produced is limited and does not satisfy students' requirements, so students depend on Google or other educational websites for study. We attempt to overcome this problem by developing software that creates a textbook. It has two main parts: text generation and text summarization. The main idea is to generate text from different data sets, gather it into a text document, and finally summarize it to obtain a short, crisp summary as PDF output. The syllabus of the subject is given to the system as an image, and optical character recognition (OCR) is used to recognize each character in the input image. The OCR result is then passed to the text generation module, which collects data from a variety of sources on the web using a web crawler. A web crawler is a mechanism for accessing pages on the Internet. When the web crawler collects pages, the URL of each page is recorded for further reference. All downloaded pages are passed to the text summarization module to obtain a short, crisp summary. In this paper we study and compare different automatic text generation systems.
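The crawl step of the pipeline, which collects pages and records each URL for later reference, can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the in-memory `FAKE_WEB` dictionary stands in for real HTTP fetching, and the names `crawl` and `FAKE_WEB` and the example URLs are hypothetical.

```python
from collections import deque

# Hypothetical stand-in for the web: URL -> (page text, outgoing links).
# A real crawler would fetch pages over HTTP; this keeps the sketch offline.
FAKE_WEB = {
    "https://example.edu/os": (
        "Operating systems manage hardware.",
        ["https://example.edu/os/processes"],
    ),
    "https://example.edu/os/processes": (
        "A process is a program in execution.",
        ["https://example.edu/os"],
    ),
}


def crawl(seed_urls, max_pages=10):
    """Breadth-first crawl that returns a list of (url, text) pairs."""
    queue = deque(seed_urls)
    seen = set(seed_urls)
    collected = []
    while queue and len(collected) < max_pages:
        url = queue.popleft()
        if url not in FAKE_WEB:
            continue  # unknown page: nothing to fetch
        text, links = FAKE_WEB[url]
        collected.append((url, text))  # keep the URL for later reference
        for link in links:
            if link not in seen:  # avoid visiting the same page twice
                seen.add(link)
                queue.append(link)
    return collected


pages = crawl(["https://example.edu/os"])
```

Each collected pair keeps the page text next to its source URL, so a later summarization step can still cite where a passage came from.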

II. LITERATURE SURVEY

In [1], a natural language summary is generated from a table using a recurrent neural network (RNN). The paper proposes an order-planning method for table-to-text generation that includes a linking mechanism and a copying mechanism. The linking mechanism models the relationship between different fields in the table and enables the neural network to plan which field comes first and which comes next. The copy mechanism is used to cope with rare words. The model takes a table as input and generates a natural language summary based on the information it contains. It has three main components: an encoder, a dispatcher and a decoder. The encoder captures the information in the table. The dispatcher plans what to generate next. After the table is encoded, an RNN decodes the natural language summary by predicting the target words of the sentence. The paper also includes a visualization analysis to help understand the order-planning mechanism. A neural encoder represents the information in the table: the contents of each field are first split into separate words, and the table is transformed into one long sequence. The RNN used in this method has Long Short-Term Memory (LSTM) units. It reads both the contents of the table and the field names, and separate matrices are used to represent words and fields. The dispatcher is an attention mechanism.

Copyright to IJARCCE DOI 10.17148/IJARCCE.2019.81220 104


Content-based attention is computed from the content representation: when generating text, the attention weights are calculated from the encoded vectors and the contents of the table. The model also includes link-based attention, which captures the relationships between the fields in the table.
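The interplay of content-based and link-based attention can be illustrated with a small sketch. It is a simplification under stated assumptions rather than the model of [1]: the `link` matrix values, the fixed scalar `gate`, and the function names are hypothetical, whereas a trained model would learn these quantities.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def hybrid_attention(content_scores, prev_attention, link, fields, gate):
    """Mix content-based and link-based attention over table cells.

    content_scores: one relevance score per table cell
    prev_attention: attention weights from the previous decoding step
    link[a][b]:     score for field b following field a (illustrative values)
    fields:         field index of each table cell
    gate:           weight in [0, 1] given to content-based attention
    """
    content = softmax(content_scores)
    # Link score of cell i: previous attention on each cell j, weighted by
    # how strongly the field of cell i tends to follow the field of cell j.
    link_scores = [
        sum(prev_attention[j] * link[fields[j]][fields[i]]
            for j in range(len(fields)))
        for i in range(len(fields))
    ]
    link_att = softmax(link_scores)
    return [gate * c + (1.0 - gate) * l for c, l in zip(content, link_att)]


# Two fields (0 = name, 1 = occupation); field 1 tends to follow field 0.
link = [[0.0, 2.0], [0.0, 0.0]]
weights = hybrid_attention(
    content_scores=[0.5, 0.5],
    prev_attention=[1.0, 0.0],
    link=link,
    fields=[0, 1],
    gate=0.5,
)
```

In this example the content scores are tied, so the link term alone shifts attention toward the occupation cell, which is the kind of ordering preference the linking mechanism is meant to capture.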

In [2], a sequence-to-sequence technique models both the content and the structure of a table using local and global addressing schemes. Local addressing determines which particular word in the table should be focused on while generating the description, and global addressing determines which part of the table should be focused on more when generating the summary. The model is an encoder-decoder framework built on long short-term memory (LSTM). It first encodes the field values of the table, and an LSTM decoder then generates a natural language summary of the table. The decoding phase contains a novel dual attention mechanism that consists of two parts: word-level attention for local addressing and field-level attention for global addressing. Experiments on the WIKIBIO data set show substantial improvement over the baselines. For local addressing, the table is treated as a set of fields, and each record in the table is encoded. The encoder is an LSTM encoder that identifies the dependencies between the words in the table, and word-level attention within the dual attention mechanism builds connections between those words. Global addressing represents inter-record information in the table and is enabled by the field-level attention mechanism. The table structure is encoded for table representation with a novel field-gating LSTM, which embeds field information into the cell memory of the LSTM unit. Table information that is unimportant is thereby reduced and is not used for generating the description. In short, the dual attention mechanism is used to generate the description from the table.
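A small sketch can show how word-level and field-level attention might be combined into one distribution. The elementwise product followed by renormalization is an assumption of this sketch (the review does not state the combination rule), and the function name and example weights are hypothetical.

```python
def dual_attention(word_weights, field_weights):
    """Combine word-level and field-level attention by elementwise product,
    then renormalize so the combined weights sum to 1."""
    if len(word_weights) != len(field_weights):
        raise ValueError("both attention vectors must cover the same cells")
    products = [w * f for w, f in zip(word_weights, field_weights)]
    total = sum(products)
    if total == 0:
        raise ValueError("attention weights must not all be zero")
    return [p / total for p in products]


# Three table cells: the word-level view prefers cell 0,
# the field-level view prefers cell 2.
word_level = [0.5, 0.3, 0.2]
field_level = [0.2, 0.2, 0.6]
combined = dual_attention(word_level, field_level)
```

With these inputs the combined weights favour cell 2: the strong field-level preference outweighs the word-level preference for cell 0.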

In [3], natural language descriptions are generated from structured data with a model that addresses specific characteristics of the problem. It proposes neural components that model 'stay on' and 'never look back' behaviour while decoding. It also introduces datasets for French and German, reports state-of-the-art results, and shows improved performance on a target domain.

In [4], a model generates a natural language sentence that describes a region of a table. It maps each row of the table to a vector and then generates the natural language sentence. If the table contains rare words, a flexible copying mechanism replicates them from the table into the output sequence. Table-to-text generation poses three main challenges: what a good representation of a table is, how to generate natural language sentences automatically, and how to handle low-frequency words in the table. The paper uses a neural network model that takes a row of the table and generates a natural language sentence describing that row, within an encoder-decoder framework. The encoder represents each row as a vector. The decoder, whose backbone is a GRU-based RNN, generates the sentence and uses the copying mechanism to produce rare words from the table. Through the attention mechanism, the decoder uses the important information from the table while generating each word. To increase the relevance between the sentence and the table, both global and local information about the table are fed into the decoder. In short, the encoder-decoder approach generates a sentence that describes an entire row of the table.

In [5], content selection determines which data is passed on to the output. The paper proposes an efficient, data-driven method for learning content selection rules, and the model captures the dependencies between the input items. Content selection is a major task in concept-to-text generation. Each entity in the database has a type, and each type has a set of associated attributes. Each entry also has a label that specifies whether or not the entry is included in the generated text. During training, a learning algorithm is applied to the database entities. The main aim of the content selection component is to select database entities. The component first considers each entity in the database in isolation and then formulates which content is to be selected. Considered in isolation, some entities are more likely to be selected than others, and individual preference scores are calculated from the entity attribute values. The links between entities are discovered automatically with a corpus-driven method, and the important links are induced with a generate-and-prune approach. The paper thus describes a data-driven method for automatic content selection.

In [6], a robust generation system performs both content selection and surface realization. The end-to-end generation process is divided into a sequence of local decisions, arranged hierarchically and each trained discriminatively. The system is evaluated on three domains: Robocup, technical weather forecasts and common weather forecasts. A feature-based design is used to improve performance, and the main advantage of this approach is the ability to use global features. Surface realization is evaluated by measuring the BLEU score, and macro content selection by measuring the F1 score. A human evaluation is also conducted using Amazon Mechanical Turk. A further advantage of the feature-based approach is that it is straightforward to add domain-specific features that capture properties of the domain.
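The F1 score used to evaluate content selection can be computed from the set of records a system selects and the set a reference text actually mentions. The sketch below shows the standard definition; the record names in the example are hypothetical and are not taken from the datasets in [6].

```python
def precision_recall_f1(predicted, gold):
    """Precision, recall and F1 of a predicted selection against a gold one."""
    predicted, gold = set(predicted), set(gold)
    overlap = len(predicted & gold)
    precision = overlap / len(predicted) if predicted else 0.0
    recall = overlap / len(gold) if gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical weather records: the system selected three,
# the reference text mentions four.
p, r, f1 = precision_recall_f1(
    predicted={"temperature", "windSpeed", "skyCover"},
    gold={"temperature", "windSpeed", "rainChance", "gust"},
)
```

Here two of the three selected records are correct (precision 2/3) and two of the four reference records are found (recall 1/2), which gives an F1 of 4/7.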

In [7], the paper describes the generation framework that was used to create five versions of a weather forecast generator. The framework combines a probabilistic generation methodology with a comprehensive model of the generation space, and the generators were evaluated by measuring output quality. The paper summarizes the pCRU framework, a language generation framework in which NLG systems are composed of generation rules that apply transformations to representations. The main aim of this approach is to reduce development time and increase the reusability of NLG systems and components. It reduces development time, produces better output and is computationally more efficient. Compared with n-gram-based generate-and-select NLG, it reduces the computational expense and improves the quality of the generated language. The structured probabilistic model makes informed decisions during the generation phase.

In [8], text output is produced automatically from non-linguistic input. The paper uses a joint model that captures content selection and surface realization in an unsupervised, domain-independent fashion. Content selection means deciding what to say, and surface realization means deciding how to say it. A probabilistic context-free grammar (PCFG) describes the inherent structure of the input, and the grammar is represented as a weighted hypergraph. Generation is recast as the task of finding the best derivation tree for the given input within this hypergraph framework. At decoding time the model keeps the k best derivations.

In [9], the paper introduces a neural model that scales to large, rich domains. It generates biographical sentences from tables in Wikipedia. The model is a conditional neural language model for text generation. It extends this model by mixing a fixed vocabulary with copy actions that transfer words from the input database to the generated output sentence. The model embeds words differently depending on the data fields in which they occur. Both local and global conditioning improve the model. The paper focuses on generating only the first sentence.

In [10], the paper presents Data2Text Studio, a platform for automated text generation from structured input data that aims to improve the generated text. Data2Text generation technology produces text from structured data such that the generated text efficiently describes the input data, and the generated text can be used in many applications. The architecture of Data2Text Studio consists of three main components: model training, model revision and text generation. The platform is equipped with a semi-HMM model that automatically extracts high-quality templates and the corresponding trigger conditions from parallel data, and it can learn how to use specific phrases in particular situations.

In [11], the paper presents a statistical framework that generates a product description from the attributes of the product given as input. To generate a product description, the system needs to be aware of the relative importance of the input attributes. The statistical framework has the advantages that its output is coherent with the facts and fluent, and that it is highly automated. The proposed structured information and template information help decide what to say and how to say it when generating a product description from product attribute data.

In [12], the paper presents a system that generates natural language text from semantic data. A main task of a Natural Language Generation (NLG) system, known as content selection, is to find the right information to include in the generated text, and it is highly domain dependent. New rules have to be developed for each new domain, and doing this manually is very difficult. The paper therefore presents a method for learning content selection rules for text generation in different domains. The methodology shows that data available on the Internet for various domains can be used for this purpose. The experiments use three methods, exact matching, statistical selection and rule induction, to infer rules from indirect observations in the data. Content selection is significant for the acceptance of the generated text by the final user.

In [13], the paper presents a data-driven method for concept-to-text generation, that is, automatically creating text output from non-linguistic input such as databases of records, logical forms and expert system knowledge bases. The data-driven approach is simple, flexible and domain independent. The key idea is to reduce content selection and surface realization to a common parsing problem. The authors define a probabilistic context-free grammar (PCFG) that captures the structure of the input database and its correspondence to natural language, and represent it as a weighted hypergraph. For generation they first learn the weights of the PCFG by maximizing the joint likelihood of the model. Text is then generated by finding the best derivation tree in the hypergraph. The system is improved with discriminative reranking, which typically creates a list of n-best candidates from a generative model and reranks them with arbitrary features. The resulting model achieves significantly higher fluency and semantic correctness, and it has the flexibility to incorporate additional, more elaborate features.
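Reranking an n-best list can be sketched with a linear scoring function over candidate features. This is only an illustration of the general idea: the feature names, weights and candidate sentences are hypothetical, and in a discriminative reranker the weights would be learned from training data rather than set by hand.

```python
def rerank(candidates, weights):
    """Return candidates sorted by a linear score over their features.

    candidates: list of (text, features) pairs, features being a dict
    weights:    dict mapping feature name -> weight (missing names count as 0)
    """
    def score(features):
        return sum(weights.get(name, 0.0) * value
                   for name, value in features.items())

    return sorted(candidates, key=lambda c: score(c[1]), reverse=True)


# Hypothetical n-best list: the generative model's log-probability is one
# feature; "fields_covered" is an extra feature available to the reranker.
n_best = [
    ("cloudy with rain",
     {"model_logprob": -2.0, "fields_covered": 1}),
    ("cloudy , rain likely in the evening",
     {"model_logprob": -2.5, "fields_covered": 3}),
]
weights = {"model_logprob": 1.0, "fields_covered": 0.5}
best_text, _ = rerank(n_best, weights)[0]
```

The generative model alone prefers the first candidate, but once the coverage feature is weighted in, the second candidate scores higher and moves to the top of the list.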

In [14], the paper notes that artificial intelligence has been interested in text generation for a long time, but that the area had seen only low levels of activity. AI research now recognizes that text generation is essential and needed in practical use. Text generation with Penman has several merits: it is portable, reusable and can be embedded in many systems. Penman is designed to generate multi-paragraph text in English. There are four modules in Penman. The knowledge acquisition module provides knowledge relevant to the communication goal. The text planning module arranges the text for its readers, deciding which portions of the knowledge to present and how to organize the content. The sentence generation module produces the English output with proper grammar.


In [15], research on text generation has led to a large systemic grammar of English, Nigel, which is embedded in a computer program. The paper describes augmentations of various precedents in the systemic framework, with an emphasis on controlling the text to fulfil a purpose.

In [16], the paper presents the neural checklist model, an RNN that models global coherence by storing and updating an agenda, a list of text strings that should be mentioned in the output. The model generates output by dynamically adjusting the interpolation between a language model and attention models that encourage references to agenda items. Evaluations on cooking recipes and dialogue system responses demonstrate high coherence with greatly improved semantic coverage of the agenda. At a high level, the model is an RNN language model that encodes the goal as a bag of words and produces its output one token at a time. A vector serves as a soft checklist of which agenda items have been used, and it is updated at every step of generation.

In [17], the paper observes that recent empirical approaches perform content selection without any ordering. It addresses the generation of text from a database with an end-to-end generation system that includes both content selection and ordering. Content plans are represented by a set of grammar rules that operate at the document level. Two approaches are developed. The first, inspired by Rhetorical Structure Theory, represents the document as a tree of relations between database records. The second uses a combination of tree structures.

In [18], the paper proposes an entity-centric neural architecture for data-to-text generation. The model creates entity-specific representations that are dynamically updated. At each time step, text is generated conditioned on the input data and the entity memory representations using hierarchical attention. The decoder is augmented with a memory cell and a processor for each entity. According to human evaluation, the model generates output text that is coherent, concise and factually correct.

In [19], the paper deals with learning, from annotated data, the decisions about word order and word choice in generated natural language. It presents four trainable natural language generation (NLG) systems for the travel domain. NLG1 is a lookup table that stores the most frequent phrase for expressing each concept and serves as a baseline for comparison. NLG2 and NLG3 use maximum entropy probability models to find the most probable word: NLG2 predicts words from left to right, and NLG3 predicts words in dependency tree order. The final system, NLG4, is implemented with a dialogue strategy in which the word order is modified dynamically: at runtime the words are reordered to reflect the dialogue state, which makes the conversational system speak more naturally. Overall, the systems demonstrate the practicality of learning word order and word choice decisions from annotated data.
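The lookup-table baseline (NLG1) can be sketched in a few lines: for each set of attributes seen in training, store the phrase that was used most often. The training pairs, the `$city` and `$time` placeholders and the function names below are hypothetical and only illustrate the idea.

```python
from collections import Counter, defaultdict


def build_lookup(training_pairs):
    """Map each attribute set to its most frequent training phrase."""
    counts = defaultdict(Counter)
    for attributes, phrase in training_pairs:
        counts[frozenset(attributes)][phrase] += 1
    return {attrs: c.most_common(1)[0][0] for attrs, c in counts.items()}


def generate(lookup, attributes):
    """Return the stored phrase, or None for an unseen attribute set."""
    return lookup.get(frozenset(attributes))


# Hypothetical travel-domain data; $city and $time are attribute placeholders.
training = [
    ({"city", "time"}, "flights to $city leaving at $time"),
    ({"city", "time"}, "flights to $city leaving at $time"),
    ({"city", "time"}, "$time flights to $city"),
    ({"city"}, "flights to $city"),
]
lookup = build_lookup(training)
phrase = generate(lookup, ["time", "city"])
```

The baseline cannot produce anything for an attribute set it has never seen, which is one motivation for the learned models that predict words one at a time.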

In [20], the paper generates answers to simple factoid questions. A neural network model is used to store the knowledge, although it is impossible to store all the knowledge present in the real world. The model follows sequence-to-sequence learning and is trained end to end, producing fluent responses that address the question. The neural generative question answering model has several components. The Interpreter converts the question into a representation and stores it in short-term memory. The Enquirer takes this representation as its input, interacts with the long-term memory and summarizes the result. The Answerer performs the final stage, creating the answer through the interaction of the generator and the attention model.

In [21], the paper introduces an attention-based model for describing the content of an image. The model automatically learns to describe image content and can be trained in a deterministic manner using backpropagation. While generating each word of the output, the model automatically fixes on salient objects. Two attention-based image caption generators are presented: one with a hard attention mechanism and one with a soft attention mechanism. The usefulness of attention in caption generation is validated on three benchmark datasets: Flickr8k, Flickr30k and MS COCO. Measured with the BLEU and METEOR metrics, the approach achieves state-of-the-art performance on the three benchmark datasets.

In [22], natural language generation (NLG) systems are used to generate text from large or complex data. A major difficulty these systems face is generating geographic descriptions that refer to the locations of patterns in the data. The NLG system analyses the input data and then produces geographic descriptions of the kind written by human experts. The paper presents a two-stage approach for generating geographic descriptions that involve regions. The first stage uses domain knowledge to select a frame of reference. The second stage uses constraints imposed by the user to select values within that frame of reference.

In [23], the paper presents Texygen, a benchmarking platform for open-domain text generation. It covers a wide variety of text generation models together with metrics that evaluate the diversity, quality and consistency of the generated text. It helps to standardize research and to improve the reproducibility and reliability of future work. Text generation has many applications, such as machine translation, question answering and information retrieval. Evaluating text generation poses three main challenges. First, the ultimate goal is unclear, so there is confusion about what a generation model should be used for and judged by. Second, researchers are not obliged to make their source code publicly available, which makes already reported experimental results difficult to reproduce. Third, quality varies widely, so a model may produce poor text rather than good text. Texygen implements and experiments with various evaluation methods.

In [24], the paper discusses the generation of paraphrases from predicate/argument structure using a simple, uniform generation methodology. Sentence generation takes some semantic representation as input, and the paper assumes that the input is a hierarchical predicate/argument structure. The output of the process is a grammatical sentence whose meaning matches the original semantic input. A simple algorithm generates sentences from predicate/argument structures. The input is decomposed into the top predicate, which may be realized by a transitive verb, and its arguments. A wide variety of expressions can convey the same meaning, and lexico-grammatical resources play an important role in meeting this challenge during generation.

In [25], the paper generates natural language sentences using a tree structure. A hybrid tree encodes the components of the meaning representation together with the natural language in a simple and natural way. Two issues are important for the ultimate goal: the computer must be able to represent the meaning of natural language, and it must also be able to convert the represented meaning into natural language that humans can understand. The goal is thus to enable proper communication between computer and human through natural language. The method is statistical and works on pairs of natural language sentences and formal meaning representations. Compared with previous approaches, the hybrid tree is effective for both semantic parsing and NLG.

In [26], the paper proposes a new framework called LeakGAN to address the problem of long text generation. Extensive experiments on synthetic data and various real-world tasks show that it is highly effective for long text generation. The text generation problem is formalized as a sequential decision-making process: at each time step t, the agent takes the previously generated content as its current state. The feedback available to the generator becomes less informative as the sentence grows longer. To address this, the proposed framework allows the discriminator to provide additional information to the generator. The paper thus generates long text via adversarial training.

III. CONCLUSION

Textbooks that follow the syllabus of a subject are very important for students. Only a limited number of textbooks are produced, which is not sufficient to meet the requirements of the large number of students, so students face the problem of non-availability of textbooks. This paper proposes a solution to this problem by attempting to generate a PDF textbook from the syllabus, which is given as input to the system. The approach combines web crawling, data mining and automatic extractive text summarization. The system will help students who face the non-availability of textbooks that follow their syllabus.
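Extractive summarization, the last stage of the proposed system, selects existing sentences rather than writing new ones. The sketch below uses a simple word-frequency score, which is an assumption made for illustration: the paper does not specify the summarization algorithm, and the function name, scoring rule and sample text are hypothetical. There is no stop-word filtering, so very common words also contribute to the score.

```python
import re
from collections import Counter


def summarize(text, max_sentences=2):
    """Frequency-based extractive summary that keeps original sentence order."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    freq = Counter(re.findall(r"[a-z]+", text.lower()))

    def score(sentence):
        # Average corpus frequency of the words in the sentence.
        tokens = re.findall(r"[a-z]+", sentence.lower())
        return sum(freq[t] for t in tokens) / len(tokens) if tokens else 0.0

    ranked = sorted(range(len(sentences)),
                    key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:max_sentences])  # restore document order
    return " ".join(sentences[i] for i in keep)


text = ("A process is a program in execution. The weather was nice. "
        "A process has a state and a program counter.")
summary = summarize(text, max_sentences=2)
```

On this sample the off-topic middle sentence receives the lowest score and is dropped, while the two sentences about processes are kept in their original order.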

ACKNOWLEDGEMENT

We would like to express our sincere thanks to our teachers, who guided us and helped us to complete this paper. We would also like to thank our parents and friends, who motivated us in hard times and guided us to remain focused.

REFERENCES

[1]. Lei Sha, Lili Mou, Tianyu Liu, Pascal Poupart, Sujian Li, Baobao Chang, Zhifang Sui, "Order-Planning Neural Text Generation From Structured Data", Sep 2017.

[2]. Tianyu Liu, Kexiang Wang, Lei Sha, Baobao Chang, Zhifang Sui, "Table-to-Text Generation by Structure-Aware Seq2seq Learning", The Thirty-Second AAAI Conference on Artificial Intelligence (AAAI-18), 2018.

[3]. Preksha Nema, Shreyas Shetty, Parag Jain, Anirban Laha, Karthik Sankaranarayanan, Mitesh M. Khapra, "Generating Descriptions from Structured Data Using a Bifocal Attention Mechanism and Gated Orthogonalization", arXiv:1804.07789v1 [cs.CL], 20 Apr 2018.

[4]. Junwei Bao, Duyu Tang, Nan Duan, Zhao Yan, Yuanhua Lv, Ming Zhou, Tiejun Zhao, "Table-to-Text: Describing Table Region with Natural Language".

[5]. R. Barzilay and M. Lapata, "Collective Content Selection for Concept-To-Text Generation", Conference on Human Language Technology and Empirical Methods in Natural Language Processing, 331-338, Association for Computational Linguistics, 2005.

[6]. G. Angeli, P. Liang and D. Klein, "A Simple Domain-Independent Probabilistic Approach to Generation", 2010 Conference on Empirical Methods in Natural Language Processing, 502-512, Association for Computational Linguistics, 2010.

[7]. Anja Belz, "Automatic Generation of Weather Forecast Texts Using Comprehensive Probabilistic Generation-Space Models", Natural Language Engineering 1 (1): 1-26.

[8]. I. Konstas and M. Lapata, "Unsupervised Concept-to-Text Generation with Hypergraphs", 2012 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, 752-761, 2012.

[9]. R. Lebret, D. Grangier and M. Auli, "Neural Text Generation from Structured Data with Application to the Biography Domain", arXiv preprint arXiv:1603.07771, 2016.

[10]. Longxu Dou, Guanghui Qin, Jinpeng Wang, Jin-Ge Yao and Chin-Yew Lin, "Data2Text Studio: Automated Text Generation from Structured Data", Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing (System Demonstrations), pages 13-18.

[11]. Jinpeng Wang, Yutai Hou, Jing Liu, Yunbo Cao and Chin-Yew Lin, "A Statistical Framework for Product Description Generation", Proceedings of the 8th International Joint Conference on Natural Language Processing, pages 187-192.

[12]. Pablo A. Duboue and Kathleen R. McKeown, "Statistical Acquisition of Content Selection Rules for Natural Language Generation", Proceedings of the 2003 Conference on Empirical Methods in Natural Language Processing (EMNLP '03), pages 121-128, Stroudsburg, PA, USA, Association for Computational Linguistics, 2003.

Copyright to IJARCCE DOI 10.17148/IJARCCE.2019.81220 108

ISSN (Online) 2278-1021

IJARCCE ISSN (Print) 2319-5940

International Journal of Advanced Research in Computer and Communication Engineering

Vol. 8, Issue 12, December 2019

[13]. Konstas, I., and Lapata, M. 2012. Concept-to-text generation via discriminative reranking. In ACL, 369–378. Association for Computational

Linguistics.

[14]. WC MANN - Proceedings of National Conference on Artificial …, 1983,AN OVERVIEW OF THE PENMAS TEXT GENERATION SYSTEM

- ci.nii.ac.jp,AAAI, 261-265, 1983

[15]. William C. Mann.1983. An Overview of the Nigel Text Generation Grammar. Association for Computational Linguistics, Pages79–84.

https://doi.org/ 10.3115/981311.981326

[16]. Chloe Kiddon, Luke Zettlemoyer, and Yejin Choi. ´2016. Globally coherent text generation with neural checklist models. In EMNLP. The

Association for Computational Linguistics, pages 329–339

[17]. LIoannisKonstas and MirellaLapata. 2013. Inducing document plans for concept-to-text generation. In EMNLP. ACL, pages 1503–1514.

[18]. Ratish Puduppully and Li Dong and Mirella Lapata “Data-to-text Generation with Entity Modeling”, Proceedings of the 57th Annual Meeting of

the Association for Computational Linguistics, pages.

[19]. Adwait Ratnaparkhi,”Trainable approaches to surface natural language generation and their application to conversational dialog systems”, 2002

Computer Speech & Language, 16(3): 435-455.

[20]. J. Yin, X. Jiang, Z. Lu, L. Shang, H. Li, and X. Li, “Neural generative question answering,” in Proc. IJCAI,2016, pp. 2972–2978.

[21]. Kelvin Xu, Jimmy Lei Ba, Ryan Kiros, Kyunghyun Cho, Aaron Courville, Ruslan Salakhutdinov, Richard S. Zemel, Yoshua Bengio, “Show,

Attend and Tell: Neural Image Caption Generation with Visual Attention”, arXiv: 1502.03044v3 [cs.LG] 19 Apr 2016.

[22]. Ross Turner, Somayajulu Sripada, Ehud Reiter, “Generating Approximate Geographic Sescriptions” Empirical Methods in NLG, LNAI 5790,

pp. 121-140, 2010

[23]. [Zhu et al., 2018] Yaoming Zhu, Sidi Lu, Lei Zheng, Jiaxian Guo, Weinan Zhang, Jun Wang, and Yong Yu. Texygen: A benchmarking platform

for text generation models.arXiv:1802.01886, 2018

[24]. R. Kozlowski, K. F. McCoy, and K. Vijay-Shanker. 2003. Generation of Single-sentence Paraphrases from Predicate/Argument Structure Using

Lexico-grammatical Resources. In Proceedings of the 2nd International Workshop on Paraphrasing, pages 1–8.

[25]. Wei Lu, Hwee Tou Ng, and Wee Sun Lee. 2009. Natural language generation with tree conditional random fields. In Proceedings of the

Conference on Empirical Methods in Natural Language Processing (EMNLP),pages 400–409.

[26]. Jiaxian Guo, Sidi Lu, Han Cai, Weinan Zhang, Jun Wang, and Yong Yu. 2017. Long Text Generation via Adversarial Training with Leaked

Information. arXiv preprint arXiv:1709.08624.

BIOGRAPHIES

Alma Nasrin P M is currently pursuing a Bachelor of Engineering in the Computer Science and Engineering stream at Universal Engineering College, Thrissur, Kerala.

Krishnendu K J is currently pursuing a Bachelor of Engineering in the Computer Science and Engineering stream at Universal Engineering College, Thrissur, Kerala.

Pooja Jeevan is currently pursuing a Bachelor of Engineering in the Computer Science and Engineering stream at Universal Engineering College, Thrissur, Kerala.

Sneha George is an Assistant Professor in the Computer Science and Engineering stream at Universal Engineering College, Thrissur, Kerala.

Copyright to IJARCCE DOI 10.17148/IJARCCE.2019.81220 109
