Drawing Waveforms: But First, A Little Story

Uploaded by

Patrick Cusack

Available Formats

You might also like

- An Evaluation of Factors Significant To Lamellar TearingDocument7 pagesAn Evaluation of Factors Significant To Lamellar TearingpjhollowNo ratings yet

- Create A Slick Motion Graphics Reel: Adobe After Effects Cs4 Quicktime Pro 7Document4 pagesCreate A Slick Motion Graphics Reel: Adobe After Effects Cs4 Quicktime Pro 7Cameron BellingNo ratings yet

- Rundown of Handbrake SettingsDocument11 pagesRundown of Handbrake Settingsjferrell4380No ratings yet

- D ADesignExampleDocument4 pagesD ADesignExampleCharles TolmanNo ratings yet

- ConvertToMooV Read Me (1991)Document3 pagesConvertToMooV Read Me (1991)VintageReadMeNo ratings yet

- Fuzzing Sucks!Document70 pagesFuzzing Sucks!Yury ChemerkinNo ratings yet

- Tutorial - Audio Editing MasterclassDocument7 pagesTutorial - Audio Editing MasterclassxamalikNo ratings yet

- Auto TuneDocument2 pagesAuto TuneSamir NomanNo ratings yet

- Week 12 ConclusionDocument4 pagesWeek 12 Conclusionrafiqueuddin93No ratings yet

- Wot, No Sampler?: Using Sample Loops With Logic AudioDocument3 pagesWot, No Sampler?: Using Sample Loops With Logic AudioPetersonNo ratings yet

- Webtoon Creator PDFDocument32 pagesWebtoon Creator PDFMorena RotarescuNo ratings yet

- Stereoscopic 3D With Sony VegasDocument3 pagesStereoscopic 3D With Sony VegasJack BakerNo ratings yet

- Creating Rendered Animations in AutoCAD Architecture 2009Document8 pagesCreating Rendered Animations in AutoCAD Architecture 2009245622No ratings yet

- Gleetchlab3: Operator's Manual V 1.0Document26 pagesGleetchlab3: Operator's Manual V 1.0Tomotsugu NakamuraNo ratings yet

- Multi-Pass in c4dDocument5 pagesMulti-Pass in c4dqaanaaqNo ratings yet

- A Beginners Guide To Setting Up Your Own Home StudioDocument11 pagesA Beginners Guide To Setting Up Your Own Home StudioAntonioPalloneNo ratings yet

- Level Headed: Gain Staging in Your Daw SoftwareDocument5 pagesLevel Headed: Gain Staging in Your Daw SoftwareLovely GreenNo ratings yet

- After Effects Tutorial For BeginnersDocument12 pagesAfter Effects Tutorial For BeginnersDaniel ValentineNo ratings yet

- How To Do A High Quality DivX RipDocument2 pagesHow To Do A High Quality DivX RipUOFM itNo ratings yet

- Sampling TutorialsDocument38 pagesSampling Tutorialsbolomite100% (1)

- Stackoverflow PDFDocument10 pagesStackoverflow PDFjudapriNo ratings yet

- Focus - 0027 - 050604 Plotting Ansys FilesDocument24 pagesFocus - 0027 - 050604 Plotting Ansys FilessirtaeNo ratings yet

- Homer Reid's Ocean TutorialDocument3 pagesHomer Reid's Ocean TutorialModyKing99No ratings yet

- Made From Scratch: Where To Start With Audio ProgrammingDocument12 pagesMade From Scratch: Where To Start With Audio ProgrammingvillaandreNo ratings yet

- gOpenMol3 00Document209 pagesgOpenMol3 00Kristhian Alcantar MedinaNo ratings yet

- Trucos SonarDocument15 pagesTrucos SonarAlberto BrandoNo ratings yet

- Peter Shirley-Ray Tracing in One Weekend 1Document5 pagesPeter Shirley-Ray Tracing in One Weekend 1Kilya ZaludikNo ratings yet

- 4 B DX 9 VisDocument16 pages4 B DX 9 Vispulp noirNo ratings yet

- RVC v2 AI Cover Guide (By Kalomaze)Document8 pagesRVC v2 AI Cover Guide (By Kalomaze)faltuforallsiteNo ratings yet

- A Best Settings Guide For Handbrake 0.9.9Document19 pagesA Best Settings Guide For Handbrake 0.9.9stefka1234No ratings yet

- Corona Render ThesisDocument8 pagesCorona Render Thesisdwt5trfn100% (2)

- 101 HandbrakeDocument27 pages101 HandbrakeLeong KmNo ratings yet

- Gpuimage Swift TutorialDocument3 pagesGpuimage Swift TutorialRodrigoNo ratings yet

- Mastering On Your PCDocument9 pagesMastering On Your PCapi-3754627No ratings yet

- "Shoot To Edit": Best Videos Have A Beginning, Middle, and EndDocument12 pages"Shoot To Edit": Best Videos Have A Beginning, Middle, and Endsunilpradhan.76No ratings yet

- Optimization - Your Worst EnemyDocument6 pagesOptimization - Your Worst EnemyGaurav SrivastavaNo ratings yet

- Using Windows Live Movie Maker HandoutDocument12 pagesUsing Windows Live Movie Maker Handoutparekoy1014No ratings yet

- The Art of SoundfontDocument29 pagesThe Art of Soundfontrichx7No ratings yet

- Introduction To Color Management: Tips & TricksDocument5 pagesIntroduction To Color Management: Tips & TricksThomas GrantNo ratings yet

- EAC - Exact AudioDocument7 pagesEAC - Exact AudioDede ApandiNo ratings yet

- 3 - Animation TipsDocument10 pages3 - Animation Tipsapi-248675623No ratings yet

- Creative Workflow Hacks Final Cut Pro To After Effects Scripting Without The HassleDocument35 pagesCreative Workflow Hacks Final Cut Pro To After Effects Scripting Without The HassleJesús Odremán, El Perro Andaluz 101No ratings yet

- Quicktime Gamma Problema VIDEO COPILOT After Effects Tutorials, Plug-Ins and Stock Footage For Post ProDocument21 pagesQuicktime Gamma Problema VIDEO COPILOT After Effects Tutorials, Plug-Ins and Stock Footage For Post ProAlvaro CalandraNo ratings yet

- Thesis Audio HimaliaDocument5 pagesThesis Audio HimaliaPaperWritersMobile100% (2)

- C# - How Can I Embed Icon and Video Stream - Stack OverflowDocument3 pagesC# - How Can I Embed Icon and Video Stream - Stack OverflowanasNo ratings yet

- Resolume Avenue 3 Manual - EnglishDocument53 pagesResolume Avenue 3 Manual - EnglishNuno GoncalvesNo ratings yet

- Tutorial: Walking A Centipede Along A Path - Or-Building Complex Animations From Simple PiecesDocument28 pagesTutorial: Walking A Centipede Along A Path - Or-Building Complex Animations From Simple PiecescetelecNo ratings yet

- Cinelerra - Manual BásicoDocument3 pagesCinelerra - Manual Básicoadn67No ratings yet

- Video Editing Notes PDFDocument16 pagesVideo Editing Notes PDFknldNo ratings yet

- DP Audio Production TricksDocument4 pagesDP Audio Production TricksArtist RecordingNo ratings yet

- PowerQuest ManualDocument19 pagesPowerQuest ManualRobertoNo ratings yet

- MCompressorDocument58 pagesMCompressorDaniel JonassonNo ratings yet

- Doki Timing GuideDocument10 pagesDoki Timing GuideTheFireRedNo ratings yet

- Assingment 2 Setting Up A Project in DAWDocument6 pagesAssingment 2 Setting Up A Project in DAWMathias HernandezNo ratings yet

- VST To SF2Document8 pagesVST To SF2Zino DanettiNo ratings yet

- Polymer GravureDocument36 pagesPolymer GravurePepabuNo ratings yet

- Gjoel Svendsen Rendering of Inside PDFDocument187 pagesGjoel Svendsen Rendering of Inside PDFVladeK231No ratings yet

- Beginners Guide To XmonadDocument25 pagesBeginners Guide To XmonadAvery LairdNo ratings yet

- Wireless Room Freshener Spraying Robot With Video VisionDocument5 pagesWireless Room Freshener Spraying Robot With Video Visionsai thesisNo ratings yet

- Ion ExchangeDocument12 pagesIon ExchangepruthvishNo ratings yet

- Transistor MCQDocument5 pagesTransistor MCQMark BelasaNo ratings yet

- All Document 'TYPES' & DescriptionsDocument5 pagesAll Document 'TYPES' & DescriptionsPhilipNo ratings yet

- FCS-8005 User ManualDocument11 pagesFCS-8005 User ManualGustavo EitzenNo ratings yet

- The Cut Off List of Allotment in Round 03 For Programme B.Tech (CET Code-131) For Academic Session 2023-24Document42 pagesThe Cut Off List of Allotment in Round 03 For Programme B.Tech (CET Code-131) For Academic Session 2023-24addisarbaNo ratings yet

- Intake-Air System (ZM)Document13 pagesIntake-Air System (ZM)Sebastian SirventNo ratings yet

- CL 6 NSTSE-2021-Paper 464Document18 pagesCL 6 NSTSE-2021-Paper 464PrajNo ratings yet

- GDF SuezDocument36 pagesGDF SuezBenjaminNo ratings yet

- Flight Management and NavigationDocument18 pagesFlight Management and NavigationmohamedNo ratings yet

- 32 Samss 011Document27 pages32 Samss 011naruto256No ratings yet

- MAD Lab SyllabusDocument2 pagesMAD Lab SyllabusPhani KumarNo ratings yet

- Diagram SankeyDocument5 pagesDiagram SankeyNur WidyaNo ratings yet

- Cruis'n Exotica (27in) Operations) (En)Document105 pagesCruis'n Exotica (27in) Operations) (En)bolopo2No ratings yet

- NEC IDU IPASOLINK 400A Minimum ConfigurationDocument5 pagesNEC IDU IPASOLINK 400A Minimum ConfigurationNakul KulkarniNo ratings yet

- TFH220AEn112 PDFDocument4 pagesTFH220AEn112 PDFJoseph BoshehNo ratings yet

- MID 185 - PSID 3 - FMI 8 Renault VolvoDocument3 pagesMID 185 - PSID 3 - FMI 8 Renault VolvolampardNo ratings yet

- CHAPTER 8: Checkboxes and Radio Buttons: ObjectivesDocument7 pagesCHAPTER 8: Checkboxes and Radio Buttons: Objectivesjerico gaspanNo ratings yet

- Topic 2 Beam DesignDocument32 pagesTopic 2 Beam DesignMuhd FareezNo ratings yet

- Graduate Program CoursesDocument11 pagesGraduate Program CoursesAhmed Adel IbrahimNo ratings yet

- S 4 Roof Framing Plan Schedule of FootingDocument1 pageS 4 Roof Framing Plan Schedule of FootingJBFPNo ratings yet

- Cherokee Six 3oo Information ManualDocument113 pagesCherokee Six 3oo Information ManualYuri AlexanderNo ratings yet

- Mte 3152 Electric Drives Mid TermDocument2 pagesMte 3152 Electric Drives Mid TermAjitash TrivediNo ratings yet

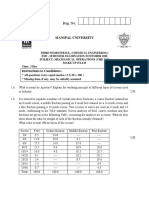

- Mechanical Operations (CHE-205) (Makeup) (EngineeringDuniya - Com)Document3 pagesMechanical Operations (CHE-205) (Makeup) (EngineeringDuniya - Com)Cester Avila Ducusin100% (1)

- Topic 6 Fields and Forces and Topic 9 Motion in FieldsDocument30 pagesTopic 6 Fields and Forces and Topic 9 Motion in Fieldsgloria11111No ratings yet

- Optocoupler Input Drive CircuitsDocument7 pagesOptocoupler Input Drive CircuitsLuis Armando Reyes Cardoso100% (1)

- Manual Conefor 26Document19 pagesManual Conefor 26J. Francisco Lavado ContadorNo ratings yet

- Truck Unloading Station111Document4 pagesTruck Unloading Station111ekrem0867No ratings yet

- Surfcam 2014 r2 - 32bitDocument152 pagesSurfcam 2014 r2 - 32bitClaudio HinojozaNo ratings yet

Drawing Waveforms: But First, A Little Story

Drawing Waveforms: But First, A Little Story

Uploaded by

Patrick CusackOriginal Description:

Original Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Drawing Waveforms: But First, A Little Story

Drawing Waveforms: But First, A Little Story

Uploaded by

Patrick CusackCopyright:

Available Formats

SuperMegaUltraGroovy Blog Archive Drawing Waveforms

12/24/10 4:09 PM

Drawing Waveforms

I get asked about drawing waveforms from time to time. Over the years, I came to realize that this is a black art of sorts, and it requires a combination of some audio and drawing know-how on the Mac to get it right.

But first, a little story.

Once upon a time I used to write audio software for BeOS while I was in university. As almost every audio software author eventually does, I came to a point where I needed to render audio waveforms to the screen. I hacked up a straightforward drawing algorithm, and it worked well. When I started working on a follow-on project, I decided to re-use the algorithm I wrote for the first application, but it didn't work so well. The trouble is, when I originally wrote that algorithm, the audio clips in question were all very tiny (less than 2 s). Now I was dealing with much longer clips (up to a few minutes, in practice), and the algorithm didn't scale well at all.

Around this time, I interviewed with Sonic Foundry, with the hopes of joining the Vegas team. During my interview, I asked, "How do you guys draw waveforms on-screen for large audio clips, and so quickly!?"

"That's proprietary information, sorry."

At the time, I figured the guys were just avoiding a long, drawn-out response. I had coded this up myself, except for the fact that it wasn't so fast, so it can't be that difficult, right? Unfortunately, I got similar responses from other people I asked afterwards.

Regardless of whether you're new to audio, or you've been doing it for a while, you are aware that there aren't too many books on the topic. Furthermore, you probably aren't going to find too much in the way of detailed algorithms, or even pseudocode, to help you out.

http://supermegaultragroovy.com/blog/2009/10/06/drawing-waveforms/

I'm starting to realize that the reason is two-fold. First off, there really aren't a lot of people out there who need to draw audio waveforms (or large data sets, for that matter) to screen. Second, it's really not all that hard once you think about it for a while.

Overview

Drawing waveforms boils down to a few major stages: acquisition, reduction, storage, and drawing. For each of the stages, you have many implementation options, and you'll choose the simplest one that'll serve your application. I don't know what your application is, so I'll use Capo as the main example for this post, and throw around some hypothetical situations where necessary.

Early on, you have to set some priorities: Speed, Accuracy, and Display Quality. The order of those priorities will help you decide how to build your drawing algorithm, down to the individual stages.

In Capo, I wanted to make Display Quality the top priority, followed by Speed, and then Accuracy. Because Capo would never be used to do sample-precise edits, I could throw away a whole lot of data, and then make the waveform look as good as possible in a short time frame. If I were writing an audio editor, my priorities might be Accuracy, followed by Speed, and then Display Quality. For a sequencer (like GarageBand), I'd choose Speed, Display Quality, then Accuracy, because you're only viewing the audio at a high level, and it's part of a larger group of parts. Make sense?

Once you have an idea of what you need, you will have a clear picture of how to proceed.

Acquisition

This is almost worth a post of its own. I like using the ExtAudioFile{Open,Seek,Read,Close} API set from AudioToolbox.framework to open various audio file formats, but you may choose a combo of AudioFile+AudioConverter (ExtAudioFile wraps these for you), or QuickTime's APIs, or whatever else floats your boat.

Your decision of API to get the source data is entirely up to your application. You can't extract movie audio with (Ext)AudioFile APIs, for instance, so they might not help much when writing a video editing UI. Alternatively, you may have your own proprietary format, or record short samples into memory, etc.

Given the above, I'm going to assume you're working with a list of floating-point values representing the audio, because that'll be helpful later on. Using ExtAudioFile, or an AudioConverter, make sure that your host format is set for floats, and you should be good.

When you're pulling data from a file, keep in mind that it's not going to be very quick, even on an SSD, thanks to format conversions. I'd advise doing all this work in an auxiliary thread, no matter how you get your audio, because it'll keep your application responsive.

In Capo's case, there is a separate thread that walks the entire audio file, doing the acquisition, reduction, and storage steps all at once. Because Display Quality and Performance were high on the priority list, the drawing step is done only when needed.
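
One way to fold acquisition, reduction, and storage into a single pass is to feed every decoded chunk to a small reducer that carries a partially filled bin across chunk boundaries. The following is a minimal C sketch under assumptions: mono float samples, maximum magnitude as the summary, and a caller-owned output buffer. `Reducer`, `reducer_init`, `reducer_feed`, and `reducer_finish` are hypothetical names made up for illustration; they are not part of any Apple API, and the code that actually reads the file is left out.

```c
#include <stddef.h>

/* Hypothetical streaming reducer: keeps one maximum magnitude per bin. */
typedef struct {
    float  *overview;     /* caller-owned output buffer                */
    size_t  capacity;     /* number of floats that fit in overview     */
    size_t  count;        /* bins written so far                       */
    size_t  bin_size;     /* source samples per overview value (>= 1)  */
    size_t  filled;       /* samples accumulated in the current bin    */
    float   current_max;  /* running max magnitude of the current bin  */
} Reducer;

static void reducer_init(Reducer *r, float *overview, size_t capacity,
                         size_t bin_size)
{
    r->overview = overview;
    r->capacity = capacity;
    r->count = 0;
    r->bin_size = bin_size;
    r->filled = 0;
    r->current_max = 0.0f;
}

/* Feed one chunk of decoded samples. Chunk sizes need not align with bins. */
static void reducer_feed(Reducer *r, const float *samples, size_t n)
{
    for (size_t i = 0; i < n; i++) {
        float m = samples[i] < 0.0f ? -samples[i] : samples[i];
        if (m > r->current_max) {
            r->current_max = m;
        }
        r->filled++;
        if (r->filled == r->bin_size) {
            /* Bin complete: store it (if there is room) and start over. */
            if (r->count < r->capacity) {
                r->overview[r->count++] = r->current_max;
            }
            r->filled = 0;
            r->current_max = 0.0f;
        }
    }
}

/* Flush a trailing partial bin, if any, once the source is exhausted. */
static void reducer_finish(Reducer *r)
{
    if (r->filled > 0 && r->count < r->capacity) {
        r->overview[r->count++] = r->current_max;
    }
    r->filled = 0;
    r->current_max = 0.0f;
}
```

The worker thread would call `reducer_feed` once per chunk it reads and `reducer_finish` at end of file, so the full-resolution audio never has to sit in memory all at once.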

Reduction

Audio contains tons of delicious data. Unfortunately, when accuracy isn't the top priority, it's far too much data to be shown on the screen. With 44,100 samples/second, a second of audio would span about 17 30-inch Cinema Displays if you displayed one sample value per horizontal pixel.

If accuracy is your top priority, you're still going to be throwing lots of data away most of the time, except when your user wants to maintain a 1:1 sample:pixel ratio (or, in some cases, I've seen a sample take up more than 1 pixel, for very fine editing). If you're writing an editor, or some other application that needs high-detail access to the source data, you will have to re-run the reduction step as the user changes the zoom level. When the user wants to see 1:1 samples:pixels, you won't throw anything away. When the user wishes to see 200:1 samples:pixels, you'll throw away 199 samples for every pixel you're displaying.

In the case of Capo, I chose to create an overview data set for the maximum zoom level, and keep that on the heap (a 5 minute song should take ~1MB RAM). In my case, I chose a maximum resolution of 50 samples per pixel, and created a data set from that. As the user zooms out, I then sample the overview data set to get the lower-resolution versions of the data. Accuracy isn't great, but it's pretty fast.

Now, when I talk about throwing away, or sampling the data set, I'm not simply discarding data. In some cases, randomly choosing samples to include in the final output will work just fine. However, you may encounter some pretty annoying artifacts (missing transients, jumping peaks, etc.) when you change zoom levels or resize the display. If Display Quality is low on your list, who cares? If you do care, you have a few options. Within each bin of the original audio, you can take a min/max pair, just the maximum magnitude, or an average. I have found the maximum magnitude to work well for the majority of cases.
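
For comparison, the three per-bin summaries mentioned above could be written roughly as follows. This is a sketch with hypothetical names (`MinMax`, `bin_min_max`, `bin_max_magnitude`, `bin_average_magnitude`), and it assumes that "an average" means the mean of the absolute sample values, which is only one possible reading. Every function expects a bin with at least one sample.

```c
#include <stddef.h>

typedef struct {
    float min;
    float max;
} MinMax;

/* Min/max pair: keeps the sign, so lopsided audio stays lopsided. */
static MinMax bin_min_max(const float *s, size_t n)
{
    MinMax r = { s[0], s[0] };
    for (size_t i = 1; i < n; i++) {
        if (s[i] < r.min) r.min = s[i];
        if (s[i] > r.max) r.max = s[i];
    }
    return r;
}

/* Maximum magnitude: one value per bin, drawn mirrored about the axis. */
static float bin_max_magnitude(const float *s, size_t n)
{
    float best = 0.0f;
    for (size_t i = 0; i < n; i++) {
        float m = s[i] < 0.0f ? -s[i] : s[i];
        if (m > best) best = m;
    }
    return best;
}

/* Average magnitude (assumed meaning of "average"): smoother, but it
   understates short transients compared with the maximum. */
static float bin_average_magnitude(const float *s, size_t n)
{
    float sum = 0.0f;
    for (size_t i = 0; i < n; i++) {
        sum += s[i] < 0.0f ? -s[i] : s[i];
    }
    return sum / (float)n;
}
```

The min/max pair costs twice the storage of the other two, which is the trade-off against being able to show an asymmetric waveform.
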
Here's an example of what I do in Capo (in pseudocode, of sorts):

    // source_audio contains the raw sample data
    // source_count is the number of samples in source_audio
    // overview_waveform will be filled with the 'sampled' waveform data
    // N is the 'bin size' determined by the current zoom level
    for ( i = 0; i < source_count; i += N ) {
        // the last bin may hold fewer than N samples
        overview_waveform[i/N] = take_max_value_of( &(source_audio[i]), min( N, source_count - i ) );
    }

Once you have your reduced data set, then you can put it on the screen.
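
A runnable C take on the pseudocode above might look like the sketch below. It is written under assumptions (mono float input, maximum magnitude as the summary, hypothetical function names) and is not Capo's actual code. The same function can be run again on the overview to produce a coarser zoom level, which is one reasonable way to "sample the overview" without going back to the source audio.

```c
#include <stddef.h>

/* Largest absolute value in s[0..n-1]; 0 for an empty range. */
static float max_magnitude(const float *s, size_t n)
{
    float best = 0.0f;
    for (size_t i = 0; i < n; i++) {
        float m = s[i] < 0.0f ? -s[i] : s[i];
        if (m > best) best = m;
    }
    return best;
}

/* Reduce `count` values into bins of `bin_size` values each.
   Returns the number of bins written; the final bin may be short. */
static size_t reduce_max(const float *src, size_t count, size_t bin_size,
                         float *dst, size_t dst_capacity)
{
    size_t written = 0;
    if (bin_size == 0) {
        return 0;
    }
    for (size_t i = 0; i < count && written < dst_capacity; i += bin_size) {
        size_t n = (count - i < bin_size) ? count - i : bin_size;
        dst[written++] = max_magnitude(src + i, n);
    }
    return written;
}

/* Bytes needed to hold an overview of `frame_count` frames as floats. */
static size_t overview_bytes(size_t frame_count, size_t bin_size)
{
    size_t bins = (frame_count + bin_size - 1) / bin_size;  /* round up */
    return bins * sizeof(float);
}
```

Because the maximum of per-bin maxima equals the maximum over the combined range, reducing a 50:1 overview by a further factor of 4 gives the same values as reducing the source at 200:1, as long as the bins line up. With 4-byte floats, `overview_bytes(5 * 60 * 44100, 50)` comes to 1,058,400 bytes, in line with the ~1MB figure quoted for a 5 minute song.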

Display

Here's where you have the most leeway in your implementation. I use the Quartz API to do my drawing. I prefer the family of C CoreGraphics CG* calls, because they're portable to CoreAnimation/iPhone coding, the most feature-rich, and generally quicker than their Cocoa equivalents. I won't get into any alternatives here (e.g. OpenGL), to keep it simple.

If we stick with the Capo example, then we've chosen to use the maximum magnitude data to draw our waveform. By doing so, we can exploit the fact that the waveform is going to be symmetric along the X axis, and only create one half of the final waveform path using some CGAffineTransform magic.

In the past, developers would create waveforms in pixel buffers using a series of vertical lines to represent the magnitudes of the samples. I like to call this the "traditional waveform drawing". It's still used quite a bit today, and in some cases it works great (especially when showing very small waveforms where pixels are scarce, as in a multitrack audio editor).

I personally prefer to utilize Quartz paths so that I get some nice antialiasing on the waveform edge. Because Capo features the waveform so prominently in the display, I wanted to ensure I got top-notch output. Quartz paths gave me that guarantee.

To build the half-path, we'll also be exploiting the fact that both CoreAudio and Quartz represent points using floating-point values. Sadly, this code is slightly less awesome in 64-bit mode, since CGFloats become doubles, and you have to convert the single-precision audio floats over to double-precision pixels. Luckily there are quick routines for that conversion in Accelerate.framework (a whole 'nother blog post, I know...).

    - (CGPathRef)giveMeAPath {
        // Assume mAudioPoints is a float* with your audio points
        // (with {sampleIndex,value} pairs), and mAudioPointCount
        // contains the # of points in the buffer.
        // The cast is only valid where CGFloat is a float (32-bit);
        // in 64-bit mode, convert the points to doubles first.
        CGMutablePathRef path = CGPathCreateMutable();
        CGPathAddLines( path, NULL, (const CGPoint *)mAudioPoints, mAudioPointCount ); // magic!
        return path;
    }

Because magnitudes are represented in the range [0,1], and we're using Quartz, we can build a transform that'll scale the waveform path to fit inside half the height of the view, and then append another transform that'll translate/scale the path so it's flipped upside-down, and appears below the X axis line (which corresponds to a sample value of 0.0). Here's a zoomed-in example of what I'm talking about.

[Figure: zoomed-in waveform]
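
To make the arithmetic behind the two transforms concrete, here it is in plain C, without Quartz. This is only an illustrative sketch: `Point2D`, `build_points`, and `map_y` are hypothetical names, and `Point2D` merely stands in for a 64-bit CGPoint. It also shows the float-to-double widening mentioned earlier, done with a simple loop rather than with Accelerate.

```c
#include <stddef.h>

/* Stand-in for a point with double-precision coordinates. */
typedef struct {
    double x;
    double y;
} Point2D;

/* Widen float magnitudes into {index, value} points in double precision. */
static void build_points(const float *magnitudes, size_t count, Point2D *out)
{
    for (size_t i = 0; i < count; i++) {
        out[i].x = (double)i;
        out[i].y = (double)magnitudes[i];
    }
}

/* A translate by half_height followed by a Y scale maps a magnitude to
   y' = half_height + scale * y.
   scale = +half_height gives one half of the waveform;
   scale = -half_height gives its mirror image about the axis. */
static double map_y(double y, double half_height, double scale)
{
    return half_height + scale * y;
}
```

With a half height of 100, a magnitude of 0.5 lands at 150 on one side and 50 on the other, while a magnitude of 0 lands at 100 on both, so the two halves meet exactly on the axis line.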

And here's some code to give you an idea of what's going on to create the whole path:

    // Get the overview waveform data (taking into account the level of detail to
    // create the reduced data set)
    CGPathRef halfPath = [waveform giveMeAPath];

    // Build the destination path
    CGMutablePathRef path = CGPathCreateMutable();

    // Transform to fit the waveform ([0,1] range) into the vertical space
    // ([halfHeight,height] range)
    double halfHeight = floor( NSHeight( self.bounds ) / 2.0 );
    CGAffineTransform xf = CGAffineTransformIdentity;
    xf = CGAffineTransformTranslate( xf, 0.0, halfHeight );
    xf = CGAffineTransformScale( xf, 1.0, halfHeight );

    // Add the transformed path to the destination path
    CGPathAddPath( path, &xf, halfPath );

    // Transform to fit the waveform ([0,1] range) into the vertical space
    // ([0,halfHeight] range), flipping the Y axis
    xf = CGAffineTransformIdentity;
    xf = CGAffineTransformTranslate( xf, 0.0, halfHeight );
    xf = CGAffineTransformScale( xf, 1.0, -halfHeight );

    // Add the transformed path to the destination path
    CGPathAddPath( path, &xf, halfPath );

    CGPathRelease( halfPath ); // clean up!

    // Now, path contains the full waveform path.

Once you have this path, you have a bunch of options for drawing it. For instance, you could fill the path with a solid color, turn the path into a mask and draw a gradient (that's how Capo does it), etc.

Keep in mind, though, that a complex path with lots of points can be slow to draw. Be certain that you don't include more data points in your path than there are horizontal pixels on the screen; they won't be visible, anyway. If necessary, draw in a separate thread to an image, or use CoreAnimation to ensure your drawing happens asynchronously. Use Shark/Instruments to help you decide whether this needs to be done; it's complicated work, and tough code to get working correctly with very few drawing artefacts.

You don't even want to know the crazy code I had to get working in TapeDeck to have chunks of the waveform paged onto the screen. (Well, you might, but that's proprietary information, sorry. ;))
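
On the advice about never putting more points in the path than there are horizontal pixels: one simple way to enforce it is to derive the bin size from the view width before building the path. A small sketch, with hypothetical helper names:

```c
#include <stddef.h>

/* Smallest bin size that squeezes `value_count` values into at most
   `pixel_width` points. Returns 0 if there are no pixels to draw into. */
static size_t bin_size_for_width(size_t value_count, size_t pixel_width)
{
    if (pixel_width == 0) {
        return 0;
    }
    size_t bin = (value_count + pixel_width - 1) / pixel_width;  /* round up */
    return bin > 0 ? bin : 1;
}

/* Number of points produced when `value_count` values are binned. */
static size_t point_count(size_t value_count, size_t bin_size)
{
    if (bin_size == 0) {
        return 0;
    }
    return (value_count + bin_size - 1) / bin_size;
}
```

For example, a 264,600-value overview shown in a 1000-pixel-wide view gets a bin size of 265 and ends up with 999 points, just under the pixel count. When there are fewer values than pixels, the bin size stays at 1 and nothing is thrown away.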

In Conclusion

People have suggested to me in the past that Apple should step up and hand us an API that would give waveform-drawing facilities (and graphs, too!). I disagree, and if Apple were to ever do this, I'd probably never use it. There are simply far too many application-specific design decisions that go into creating a waveform display engine, and whatever Apple would offer would probably only cover a small handful of use cases. Hopefully the above information can help you build a waveform algorithm that suits your application well. I think that by breaking the problem up into separate sub-problems, you can build a solution that'll work best for your needs.

This entry was posted on Tuesday, October 6th, 2009 at 12:48 pm and is filed under Software Development.

16 Responses to Drawing Waveforms

1. alastair Says:

October 7th, 2009 at 8:44 am

An even better option than minimaxing, if you want really high quality results, would be to bin the sample values in each pixel and then shade the pixels according to the frequency with which the value was above (or below) that point. Of course, drawing this is then going to require a somewhat different approach.

2. chris Says:

October 7th, 2009 at 9:46 am

@alastair: Got an example of what that looks like? My gut says that'd result in all kinds of display artifacts.

3. Uli Kusterer Says:

October 7th, 2009 at 2:59 pm

Hi, great article! Thanks for sharing this knowledge with the world. While most of this is information that can be found after searching, it is great to have the positive reinforcement that this is the general way to go about it. I was afraid I'd have to deal with sample rates and other stuff, but it's really just a bunch of floats that I can grab from ExtAudioFile, great! :-)

As to Alastair's approach, I think he's essentially anti-aliasing. The areas that would have more lines get condensed into darker, while the areas that have fewer get lighter. That should work fine, if you like the fuzziness that anti-aliasing gives you.

Tempted to sit down and write my own audio file view now, dang! Thanks for this article!

Uli

4. Andrew Kimpton Says:

October 7th, 2009 at 9:51 pm

Taking a straight magnitude and flipping it is quick (and halves the data storage); however, it can cause the display to misrepresent any sort of DC bias that's present in the audio. For many apps this is fine, but if your scenario is more high end then this could be a concern. Equally, a lot of people expect to see a mirror-image waveform, and you'd be surprised how uneven even well-mastered audio can look!

Color coding according to spectral power (via an FFT) has been done in a couple of high-end audio editors (IIRC Pyramix might do this, and I think Waves does it in one of their plugins). Unless you know how to read what you're looking at, it can be confusing for a user.

5. Volker Says:

October 8th, 2009 at 5:35 am

Hi, been through this nearly a year ago. I have to deal with 500 kHz sampled data files as well, which makes the down-sampling process for proper drawing a pain in the ***. In the end I found a way that works well and is fast. I use the flipping approach until a certain zoom level is reached and then switch to a "draw all samples, what the hell" mode. This approach is necessary since my users need to analyse the sound files for contained bat calls and do need to access stuff like DC offset, which is producing the impression of noise in the flipped display. See: http://www.ecoobs.de/art/her-bcAnalyzeScreenshot.png

6. Volker Says:

October 8th, 2009 at 5:39 am

In addition: I dismissed the min/max approach and favor an approach based on the RMS value of a window of samples, basically, with some tricks like overlapping windows and such. It gives more appropriate results, but then, I am not dealing with music.

7. links for 2009-10-08 | manicwave.com Says:

October 8th, 2009 at 8:03 am

[...] SuperMegaUltraGroovy Blog Archive Drawing Waveforms (tags: cocoa audio graphics visualization) [...]

8. chris Says:

October 8th, 2009 at 8:04 am

@Andrew Good to hear from an Ex-Be reader :) You're very right about the DC bias. I was going to give an example of a low-frequency 20 Hz sine wave modulated with a high-frequency 1 kHz sine wave, which would not render properly with the maximum magnitude method I walked through. Min/max (value, not magnitude) tends to catch the DC issue well enough.

You're right about those fancy spectral displays being confusing for most users. I leave all the fancy visualizations up to FuzzMeasure. However, I might have some tricks up my sleeve for Capo soon enough ;)

9. chris Says:

October 8th, 2009 at 8:06 am

@Volker Yes, the hybrid approach is often called for beyond a certain threshold. In FuzzMeasure, I deal with a logarithmic X axis, where the curve-drawing approach must change as the data becomes more dense for higher frequencies. In the low-frequency range I can get real fancy, approximating the missing waveform data using a Bézier path, and in the high-frequency range I move to min/max. Seems to work real well.

10. Andrew Kimpton Says:

October 8th, 2009 at 8:17 am

Hi Chris! Us Ex-Be folk are distributed fairly far and wide. Though Dan Sandler and I are now only separated by about 10 floors, since we've both ended up in Boston (via very different routes). A while after leaving Be I worked at BIAS for 4 years, so I've seen a lot of waveforms in my time.

When we looked at spectral coloring at BIAS, I remember that we were concerned about one or more patents in the same area. I cannot now remember the details though, sorry 8-(

11. ChrisM Says:

October 15th, 2009 at 2:26 pm

I'm using max/min pairs in my app. The difficulty I'm experiencing at the moment, however, is how to get efficient interactive resizing. My waveform is scaled in the x and y directions, and since repainting on each resize can be expensive I cache an image and scale that. This is very fast, but unless the waveform is initially drawn at full size the waveform appears under-sampled when resized upwards.

12. chris Says:

October 15th, 2009 at 7:28 pm

@ChrisM: This is tough to deal with. I try to avoid scaling an image of the waveform curve, as the anti-aliasing done to the original curve just doesn't behave well when resized. I recommend an intermediate representation that can be rendered to the screen quickly (in the form of a CGPath, or something like that). Perhaps while live-resizing (you can query an NSView to see if it's mid-resize) you can avoid resampling the data until the resize is done. I've done something similar to this in an earlier FuzzMeasure version.

13. Fred Says:

November 1st, 2009 at 9:35 am

Does anyone know of a good code example that shows how to open an audio file (aif, wave, mp3, and aac) and then convert it to a buffer of Float32 as suggested in this article? If the incoming file is stereo, I'd like two buffers and if mono, just one.

So far, I'm having terrible luck using AudioConverterFillComplexBuffer. I've followed numerous examples from the web and AudioConverterFillComplexBuffer keeps returning osErr = -50. The callback seems to get called once, but never again (because of the error). Or, if I twiddle the channel count manually, I can get osErr=0, but the buffer seems to be empty and the callback gets called only once.

Any ideas where I can go for help? Thanks!

14. chris Says:

November 1st, 2009 at 11:15 am

@Fred: I don't know of any offhand. If you're struggling, I recommend you first try using the ExtAudioFile API, and then later move to a separate AudioFile / AudioConverter approach (which I've yet to find necessary, FWIW). With ExtAudioFile, the process boils down to opening the file, specifying your desired client format (Float32, deinterleaved in your case for two separate buffers), and then calling ExtAudioFileRead repeatedly to get your data.

15. Bastian Says:

March 26th, 2010 at 1:27 pm

Your post just solved a huge problem I was struggling with for the last few days. Thanks a lot! (There really is not too much information out there on these topics.)

16. John Myers Says:

November 2nd, 2010 at 2:34 am

Can you elaborate on the ExtAudioFile extraction process? Specifically, on specifying your desired client format? I can get the data out, but it seems to be random numbers, which I suspect is because I am interpreting it wrong.
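
On the client-format question, here is a rough sketch of what a "Float32, deinterleaved" client format (as described in comment 14) amounts to. This is portable C rather than Core Audio code, and it assumes, purely for illustration, that the file's native data is interleaved 16-bit PCM. The helper name `deinterleave_int16_to_float` is hypothetical. If no client format has been set (with ExtAudioFile this is done through the `kExtAudioFileProperty_ClientDataFormat` property), the bytes you read may still be in the file's own layout, and reading integer samples as floats is one possible cause of values that look random.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/*
 * Hypothetical helper (not part of Core Audio): split interleaved 16-bit PCM
 * (L R L R ...) into one float buffer per channel, scaled to [-1, 1).
 * out[c] must point to storage for at least `frames` floats.
 */
void deinterleave_int16_to_float(const int16_t *interleaved, size_t frames,
                                 size_t channels, float **out)
{
    assert(interleaved != NULL && out != NULL && channels > 0);

    for (size_t f = 0; f < frames; ++f) {
        for (size_t c = 0; c < channels; ++c) {
            /* The sample for channel c of frame f sits at f * channels + c. */
            out[c][f] = (float)interleaved[f * channels + c] / 32768.0f;
        }
    }
}
```

For a mono file, pass `channels = 1` and a single output buffer. For stereo, pass two buffers and you get the two separate Float32 arrays Fred asked about.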

