R Squared and Standard Error

Uploaded by Dell

The standard error of the regression (S) and R-squared are two key goodness-of-fit measures for regression analysis. In my view, however, the often-overlooked standard error of the regression can tell us things that even a high R-squared simply can't.
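For reference, the two measures are commonly defined as follows for a least-squares fit with an intercept. The notation (n observations, p estimated parameters including the intercept, observed values y_i, fitted values ŷ_i, and mean ȳ) is the usual textbook form, introduced here for illustration:

$$
S = \sqrt{\frac{\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}{n - p}}, \qquad
R^2 = 1 - \frac{\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}{\sum_{i=1}^{n} (y_i - \bar{y})^2}
$$

Because S is the square root of the residual sum of squares divided by its degrees of freedom, it carries the units of y. R-squared is a ratio of two sums of squares in the same units, so the units cancel and only a relative figure remains.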

Let me explain why I think so.

1. The standard error of the regression provides an absolute measure of the typical distance between the data points and the regression line. S is expressed in the units of the dependent variable.

R-squared, by contrast, provides a relative measure: the percentage of the variance in the dependent variable that the model explains.

2. The standard error of the regression has several advantages. S tells us how precise the model's predictions are, in the units of the dependent variable: it indicates how far the data points typically fall from the regression line. Lower values of S are usually preferable because they mean the distances between the data points and the fitted values are smaller. S is also valid for both linear and nonlinear regression models.

High R-squared values indicate that the data points are closer to the fitted values, but they don't tell us how far the data points are from the regression line in absolute terms. Moreover, R-squared is valid only for linear models, so we can't use it to compare a linear model with a nonlinear one.

3. Finally, even if the R-squared value is low, statistically significant predictors may still allow us to draw important conclusions about how changes in the predictor values are associated with changes in the response value.
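The points above can be checked numerically. Below is a minimal Python sketch, assuming a simple one-predictor least-squares fit on synthetic data; the function names `fit_line` and `fit_stats`, the true slope of 0.5, the noise level of 5, the seed, and the "metres to millimetres" rescaling are all hypothetical choices for illustration. It computes S and R-squared from the residuals, then multiplies the response by 1000: S grows by the same factor because it lives in the units of the dependent variable, while R-squared does not change (point 1). The heavy noise also keeps R-squared low even though the slope's t-statistic is far beyond the usual critical value of about 2 (point 3).

```python
import math
import random


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = b0 + b1*x; returns (b0, b1)."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    b1 = sxy / sxx
    b0 = y_bar - b1 * x_bar
    return b0, b1


def fit_stats(xs, ys):
    """Return (S, R-squared, slope t-statistic) for a simple linear fit."""
    n = len(xs)
    b0, b1 = fit_line(xs, ys)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sse = sum((y - (b0 + b1 * x)) ** 2 for x, y in zip(xs, ys))  # residual sum of squares
    sst = sum((y - y_bar) ** 2 for y in ys)                       # total sum of squares
    s = math.sqrt(sse / (n - 2))   # n - p with p = 2 estimated parameters (b0, b1)
    r2 = 1 - sse / sst             # unitless: the units cancel in the ratio
    sxx = sum((x - x_bar) ** 2 for x in xs)
    t_slope = b1 / (s / math.sqrt(sxx))  # slope divided by its standard error
    return s, r2, t_slope


# Hypothetical synthetic data: a weak linear signal buried in a lot of noise.
rng = random.Random(42)
xs = [rng.uniform(0, 10) for _ in range(2000)]
ys = [0.5 * x + rng.gauss(0, 5) for x in xs]

s, r2, t = fit_stats(xs, ys)
print(f"S = {s:.2f}, R^2 = {r2:.3f}, slope t = {t:.1f}")

# Re-express the response in units 1000 times smaller (e.g. metres -> millimetres).
ys_mm = [1000 * y for y in ys]
s_mm, r2_mm, t_mm = fit_stats(xs, ys_mm)
print(f"S = {s_mm:.0f}, R^2 = {r2_mm:.3f}, slope t = {t_mm:.1f}")
```

The t-statistic is only compared informally here; a formal test would convert it to a p-value using the t distribution with n - 2 degrees of freedom.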

I offer my sincere thanks to Jim Frost, who helped me reach the conclusions above.

You might also like

- RegressionDocument3 pagesRegressionPankaj2cNo ratings yet

- Introduction To GraphPad PrismDocument33 pagesIntroduction To GraphPad PrismHening Tirta KusumawardaniNo ratings yet

- Regression AnalysisDocument7 pagesRegression AnalysisshoaibNo ratings yet

- What Is RDocument4 pagesWhat Is Raaditya01No ratings yet

- RDocument5 pagesRsatendra12791_548940No ratings yet

- Coefficient of Determination and Interpretation of Determination CoefficientDocument7 pagesCoefficient of Determination and Interpretation of Determination CoefficientJasdeep Singh BainsNo ratings yet

- Coefficient of DeterminationDocument4 pagesCoefficient of Determinationapi-140032165No ratings yet

- R Squared and Adjusted R SquaredDocument2 pagesR Squared and Adjusted R SquaredAmanullah Bashir Gilal100% (1)

- 5-LR Doc - R Sqared-Bias-Variance-Ridg-LassoDocument26 pages5-LR Doc - R Sqared-Bias-Variance-Ridg-LassoMonis KhanNo ratings yet

- ACC 324 Wk8to9Document18 pagesACC 324 Wk8to9Mariel CadayonaNo ratings yet

- Coefficient of DeterminationDocument4 pagesCoefficient of Determinationapi-150547803No ratings yet

- Experiment No 7Document7 pagesExperiment No 7Apurva PatilNo ratings yet

- Summary: Correlation and RegressionDocument6 pagesSummary: Correlation and RegressionDam DungNo ratings yet

- Statistical Testing and Prediction Using Linear Regression: AbstractDocument10 pagesStatistical Testing and Prediction Using Linear Regression: AbstractDheeraj ChavanNo ratings yet

- Linear Regression - Lok - 2Document31 pagesLinear Regression - Lok - 2LITHISH RNo ratings yet

- DS-203: E2 Assignment - Linear Regression Report: Sahil Barbade (210040131) 29th Jan 2024Document18 pagesDS-203: E2 Assignment - Linear Regression Report: Sahil Barbade (210040131) 29th Jan 2024sahilbarbade.ee101No ratings yet

- Metrices of The ModelDocument9 pagesMetrices of The ModelNarmathaNo ratings yet

- Coefficient of DeterminationDocument11 pagesCoefficient of DeterminationKokKeiNo ratings yet

- Halifean Rentap 2020836444 Tutorial 2Document9 pagesHalifean Rentap 2020836444 Tutorial 2halifeanrentapNo ratings yet

- Thesis Using Multiple RegressionDocument5 pagesThesis Using Multiple Regressionrwzhdtief100% (2)

- 36.how To Interpret Adjusted R-Squared and Predicted R-Squared in Regression AnalysisDocument41 pages36.how To Interpret Adjusted R-Squared and Predicted R-Squared in Regression Analysismalanga.bangaNo ratings yet

- Regression AnalysisDocument25 pagesRegression AnalysisAnkur Sharma100% (1)

- Assignment of Quantitative Techniques: Questions For DiscussionDocument3 pagesAssignment of Quantitative Techniques: Questions For Discussionanon_727430771No ratings yet

- Linear RegressionDocument28 pagesLinear RegressionHajraNo ratings yet

- Coefficient of DeterminationDocument7 pagesCoefficient of Determinationleekiangyen79No ratings yet

- RegressionDocument34 pagesRegressionRavi goel100% (1)

- Coefficient of DeterminationDocument8 pagesCoefficient of DeterminationPremlata NaoremNo ratings yet

- UNIT-III Lecture NotesDocument18 pagesUNIT-III Lecture Notesnikhilsinha789No ratings yet

- Machine Learning AlgorithmDocument20 pagesMachine Learning AlgorithmSiva Gana100% (2)

- Regression OutputDocument3 pagesRegression Outputgurkiratsingh013No ratings yet

- Notes On Linear Regression - 2Document4 pagesNotes On Linear Regression - 2Shruti MishraNo ratings yet

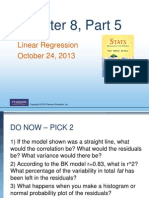

- Chapter08 Part 5Document19 pagesChapter08 Part 5api-232613595No ratings yet

- Theory Week 9Document1 pageTheory Week 9SergioLópezNo ratings yet

- Regression Analysis Homework SolutionsDocument7 pagesRegression Analysis Homework Solutionsafnawvawtfcjsl100% (1)

- Module 4: Regression Shrinkage MethodsDocument5 pagesModule 4: Regression Shrinkage Methods205Abhishek KotagiNo ratings yet

- Regression Model EvaluationDocument1 pageRegression Model EvaluationMr. QuitNo ratings yet

- Simple Linear Regression Homework SolutionsDocument6 pagesSimple Linear Regression Homework Solutionscjbd7431100% (1)

- ML - LLM Interview: Linear RegressionDocument43 pagesML - LLM Interview: Linear Regressionvision20200512No ratings yet

- Fitting & Interpreting Linear Models in Rinear Models in RDocument8 pagesFitting & Interpreting Linear Models in Rinear Models in RReaderRat100% (1)

- Linear - Regression & Evaluation MetricsDocument31 pagesLinear - Regression & Evaluation Metricsreshma acharyaNo ratings yet

- Lasso and Ridge RegressionDocument30 pagesLasso and Ridge RegressionAartiNo ratings yet

- Unit-III (Data Analytics)Document15 pagesUnit-III (Data Analytics)bhavya.shivani1473No ratings yet

- Definitions and Uses of Statistical Data: - The Notes Were Made From SMP Series, CUPDocument3 pagesDefinitions and Uses of Statistical Data: - The Notes Were Made From SMP Series, CUPmathsgeek1992No ratings yet

- What Is Regression AnalysisDocument18 pagesWhat Is Regression Analysisnagina hidayatNo ratings yet

- 03 Logistic RegressionDocument23 pages03 Logistic RegressionHarold CostalesNo ratings yet

- Multiple Linear Regression: ApplicationDocument22 pagesMultiple Linear Regression: Applicationdanna ibanezNo ratings yet

- Module 1 NotesDocument73 pagesModule 1 Notes20EUIT173 - YUVASRI KB100% (1)

- Simple Linear RegressionDocument20 pagesSimple Linear RegressionLarry MaiNo ratings yet

- Module 3 EDADocument14 pagesModule 3 EDAArjun Singh ANo ratings yet

- Linear Regression Basic Interview QuestionsDocument36 pagesLinear Regression Basic Interview Questionsayush sainiNo ratings yet

- ML Activity 2 - 2006205Document3 pagesML Activity 2 - 2006205tanishk ranjanNo ratings yet

- Coefficient of Determination PDFDocument7 pagesCoefficient of Determination PDFRajesh KannaNo ratings yet

- 20200715114408D4978 Prac11Document19 pages20200715114408D4978 Prac11Never MindNo ratings yet

- Big Data - Sources and OpportunitiesDocument30 pagesBig Data - Sources and OpportunitiesmsiskastockerssNo ratings yet

- Multiple Regression: X X, Then The Form of The Model Is Given byDocument12 pagesMultiple Regression: X X, Then The Form of The Model Is Given byezat syafiqNo ratings yet

- BRM Multivariate NotesDocument22 pagesBRM Multivariate Notesgdpi09No ratings yet

- Econometrics ProjectDocument17 pagesEconometrics ProjectAkash ChoudharyNo ratings yet

- Copie de Executive Summary of Marketing Plan by Slidesgo 1Document50 pagesCopie de Executive Summary of Marketing Plan by Slidesgo 1Imen BoussandelNo ratings yet

- What Is Multiple Linear Regression (MLR) ?Document2 pagesWhat Is Multiple Linear Regression (MLR) ?TandavNo ratings yet