ITC (Unit 1 and Unit 2)

Uploaded by raushan

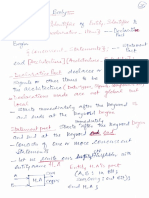

Information Theory and Coding

What is Information?
Information Sources
Types of Sources and their Models
Mathematical Models for the Information Sources
Information Content of a Discrete Memoryless Source (DMS)
Information Content of a Symbol (i.e., Measure of Information)
Solved Examples on Entropy and Information Rate
Discrete Memoryless Channels
Conditional and Joint Entropies
Mutual Information
Channel Capacity
… Channel: Shannon-… (A Detailed Study)
… of Continuous Signals
… in the Transmission …
… of Bandwidth for Signal-to-…
Communication Systems in …
…n's Equation
… Compression
… Encoding
… Coding

16.1. INTRODUCTION

As discussed in Chapter 1, the purpose of a communication system is to carry information-bearing baseband signals from one place to another over a communication channel. In the last few chapters, we have discussed a number of modulation schemes to accomplish this purpose.

But what is the meaning of the word "information"? To answer this question, we need to discuss information theory.

In fact, information theory is a branch of probability theory which may be applied to the study of communication systems. This broad mathematical discipline has made fundamental contributions not only to communications but also to computer science, statistical physics, and probability and statistics.

Further, in the context of communications, information theory deals with the mathematical modelling and analysis of a communication system rather than with physical sources and physical channels. As a matter of fact, information theory was invented by communication scientists while they were studying the statistical structure of electronic communication equipment. When the communique is readily measurable, such as an electric current, the study of the communication system is relatively easy. But when the communique is information, the study becomes rather difficult. How should the amount of information be measured? And, having defined a suitable measure, how can it be applied to improve the communication of information? Information theory provides answers to these questions. This chapter is therefore devoted to a detailed discussion of information theory.

16.2. WHAT IS INFORMATION?

Before discussing the quantitative measure of information, let us review a basic concept about the amount of information in a message. Some messages produced by an information source contain more information than others. This may be best understood with the help of the following example.

Suppose you are planning a tour of a city located in an area where rainfall is very rare. To learn the weather forecast, you call the weather bureau and may receive one of the following messages:

(i) It would be hot and sunny.
(ii) There would be scattered rain.
(iii) There would be a cyclone with a thunderstorm.

It may be observed that the amount of information received is clearly different for the three messages. The first message, for instance, contains very little information because the weather in a desert city in summer is expected to be hot and sunny most of the time. The second message, forecasting scattered rain, contains more information because it is not an event that occurs often. Compared to the second message, the forecast of a cyclonic storm contains even more information, since the third forecast is the rarest event in the city. Hence, on a conceptual basis, the amount of information received from the knowledge of the occurrence of an event may be related to the likelihood or probability of occurrence of that event. The message associated with the least likely event consists of the maximum information. Note also that the amount of information in a message depends upon the uncertainty of the underlying event rather than upon its actual content. Now, let us discuss a few important concepts related to information theory in the sections that follow.

16.3. INFORMATION SOURCES

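An information source may be viewed as an object which produces an event, the outcome of which is selected at random according to a probability distribution. A practical source in a communication system is a device which produces messages, and it can be either analog or discrete. In this chapter, we deal mainly with discrete sources, since analog sources can be transformed to discrete sources through the use of sampling and quantization techniques. A discrete information source is a source which has only a finite set of symbols as possible outputs. The set of source symbols is called the source alphabet, and the elements of the set are called symbols or letters.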

(ii) Classification of Information Sources

Information sources can be classified as having memory or being memoryless. A source with memory is one for which a current symbol depends on the previous symbols. A memoryless source is one for which each symbol produced is independent of the previous symbols.

A discrete memoryless source (DMS) can be characterized by the list of the symbols, the probability assignment to these symbols, and the specification of the rate of generating these symbols by the source.
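The three ingredients listed above (symbol list, probability assignment, symbol rate) map naturally onto a small data type. The sketch below is illustrative only: the class name `DiscreteMemorylessSource` and its fields are hypothetical, and sampling relies on Python's `random.Random.choices`, which draws every symbol independently of the earlier ones, which is exactly the memoryless property.

```python
import random
from dataclasses import dataclass


@dataclass
class DiscreteMemorylessSource:
    """A DMS characterized by its symbols, their probabilities and its symbol rate."""
    symbols: list            # source alphabet, e.g. ["x1", "x2", "x3"]
    probabilities: list      # P(x_i) for each symbol; must sum to 1
    rate: float              # r, symbols generated per second

    def __post_init__(self):
        if len(self.symbols) != len(self.probabilities):
            raise ValueError("need exactly one probability per symbol")
        if any(p < 0 for p in self.probabilities):
            raise ValueError("probabilities must be non-negative")
        if abs(sum(self.probabilities) - 1.0) > 1e-9:
            raise ValueError("probabilities must sum to 1")

    def emit(self, n, seed=None):
        """Draw n symbols; each draw ignores all earlier ones (memoryless)."""
        rng = random.Random(seed)
        return rng.choices(self.symbols, weights=self.probabilities, k=n)


source = DiscreteMemorylessSource(["x1", "x2", "x3"], [0.5, 0.25, 0.25], rate=1000.0)
print(source.emit(10, seed=1))
```

A source with memory could not be modelled this way: its `emit` would need to condition each draw on the previous symbols.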

16.4 TYPES OF SOURCES AND THEIR MODELS

In the field of communication, we come across different types of sources. The classification of information sources is shown below:

Sources
1. Analog information sources
2. Discrete information sources
   (i) Discrete memoryless sources (DMS)
   (ii) Stationary sources

1. Analog Information Sources

As a matter of fact, communication systems are designed to transmit the information generated by a source to some destination. Information sources may take a variety of different forms. For example, in radio broadcasting, the source is generally an audio (voice or music) source, or a source of moving images. The outputs of these sources are analog signals, and therefore these sources are called "analog sources".

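2. Discrete Information Sources

If the source of information is a digital computer or a storage device, the source output is in discrete form, such as a binary sequence. Such sources are called discrete sources. Thus, the output of a discrete source is a sequence of symbols, for example 1010111.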

(i) Discrete Memoryless Sources (DMS)

For a discrete source, if the current output symbol is statistically independent of all past and future outputs, the source is called a discrete memoryless source (DMS). For example, if a binary source generates a random sequence 101101000… such that each binary digit is statistically independent of the other digits, then the source is called a binary memoryless source.

(ii) Stationary Sources

If the output of a discrete source is statistically stationary in time, the source is called a discrete stationary source. The statistical property of a discrete stationary source is that the joint probabilities of its outputs remain the same even if the time origin is shifted.

Pioneer in Communications: Claude Elwood Shannon (1916-2001)

Claude Shannon graduated from the University of Michigan with degrees in electrical engineering and mathematics and, in 1940, received master's and doctoral degrees from the Massachusetts Institute of Technology (MIT). Shannon's theories effectively provided the mathematical foundation for designing digital electronic circuits, which form the basis of modern-day information processing. Shannon then served as a National Research Fellow at Princeton and, in 1941, joined Bell Laboratories as a research mathematician. While at Bell Labs, Shannon devoted much of his research to improving the transmission of information over long-distance telephone and telegraph lines.

16.5. MATHEMATICAL MODELS FOR THE INFORMATION SOURCES

The most important property of an information source is that it produces an output that is random (which cannot be predicted exactly). In other words, we can say that the source output can be characterized in statistical terms. If the source output were known exactly, there would be no need to transmit it.

The simplest type of discrete source is one that emits a sequence of letters selected from a finite alphabet. A discrete source with an alphabet of $L$ possible letters $\{x_1, x_2, \ldots, x_L\}$ emits a sequence of letters selected from those letters. For constructing a mathematical model for such a source, we assume that each letter $x_i$ in the alphabet has a probability of occurrence equal to $p_i$. This means that

$$p_i = P(X = x_i), \quad 1 \le i \le L, \quad \text{and} \quad \sum_{i=1}^{L} p_i = 1$$

The above expression represents a discrete source of information mathematically.

16.6. INFORMATION CONTENT OF A DISCRETE MEMORYLESS SOURCE (DMS)

The amount of information contained in an event is closely related to its uncertainty. The words uncertainty, surprise and information are all related to each other. Before an event occurs, there is an amount of uncertainty; when the event occurs, there is an amount of surprise; and after the occurrence of the event, there is a gain of information. Messages containing knowledge of a high probability of occurrence convey relatively little information. We note that if an event is certain (that is, the event occurs with probability 1), it conveys zero information. Thus, a mathematical measure of information should be a function of the probability of the outcome and should satisfy the following requirements:

(i) Information should be proportional to the uncertainty of an outcome.
(ii) Information contained in independent outcomes should add.

16.7. INFORMATION CONTENT OF A SYMBOL (i.e., LOGARITHMIC MEASURE OF INFORMATION)

1. Definition

As a matter of fact, the quantitative measure of information is dependent on our notion of the word "information". Let us consider a discrete memoryless source (DMS) denoted by $X$ and having alphabet $\{x_1, x_2, \ldots, x_m\}$. The information content of a symbol $x_i$, denoted by $I(x_i)$, is defined by

$$I(x_i) = \log_b \frac{1}{P(x_i)} = -\log_b P(x_i) \qquad (16.1)$$

where $P(x_i)$ is the probability of occurrence of symbol $x_i$. When $b = 2$, $I(x_i)$ is measured in bits.

2. Properties of $I(x_i)$

The information content of a symbol $x_i$, denoted by $I(x_i)$, satisfies the following properties:

(i) $I(x_i) = 0$ for $P(x_i) = 1$
(ii) $I(x_i) \ge 0$
(iii) $I(x_i) > I(x_j)$ if $P(x_i) < P(x_j)$
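Equation (16.1) and the properties above can be checked numerically. This is a minimal sketch, assuming base 2 (bits) by default; the function name `self_information` is illustrative. The last line of the loop's companion checks shows that for two independent outcomes, whose joint probability is the product of their probabilities, the information adds, which is requirement (ii) of Section 16.6.

```python
import math


def self_information(p, base=2):
    """I(x_i) = log_b(1 / P(x_i)) from equation (16.1); bits when base = 2."""
    if not 0 < p <= 1:
        raise ValueError("P(x_i) must lie in (0, 1]")
    return math.log(1 / p) / math.log(base)


for p in (1.0, 0.5, 0.25, 0.125):
    print(f"P(x_i) = {p:<6} I(x_i) = {self_information(p):.3f} bits")

# Independent outcomes: P = 0.5 * 0.25, so the information should add up.
print(self_information(0.5 * 0.25), self_information(0.5) + self_information(0.25))
```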

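EXAMPLE 16.10. Given a binary memoryless source $X$ with two symbols $x_1$ and $x_2$, prove that $H(X)$ is maximum when both $x_1$ and $x_2$ are equiprobable.

Solution: Here, let us assume that $P(x_1) = \alpha$, so that $P(x_2) = 1 - \alpha$.

We know that entropy is given by

$$H(X) = -\sum_{i=1}^{2} P(x_i)\log_2 P(x_i) \ \text{b/symbol}$$

Thus,

$$H(X) = -\alpha\log_2\alpha - (1-\alpha)\log_2(1-\alpha)$$

Differentiating the above equation with respect to $\alpha$ and using the relation $\frac{d}{dy}\log_b y = \frac{1}{y}\log_b e$, we get

$$\frac{dH(X)}{d\alpha} = -\log_2\alpha - \log_2 e + \log_2(1-\alpha) + \log_2 e = \log_2\frac{1-\alpha}{\alpha}$$

Note that the maximum value of $H(X)$ requires that

$$\frac{dH(X)}{d\alpha} = 0$$

This means that

$$\frac{1-\alpha}{\alpha} = 1 \quad \Rightarrow \quad \alpha = \frac{1}{2}$$

Note that $H(X) = 0$ when $\alpha = 0$ or $1$, and $H(X) > 0$ in between, so this stationary point is a maximum. When $P(x_1) = P(x_2) = \frac{1}{2}$, $H(X)$ is maximum and is given by

$$H(X) = \frac{1}{2}\log_2 2 + \frac{1}{2}\log_2 2 = 1 \ \text{b/symbol}$$

Hence proved.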
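A quick numerical check of Example 16.10: the sketch below evaluates $H(X)$ on a fine grid of $\alpha$ values and confirms that the largest value occurs at $\alpha = 0.5$, where the derivative $\log_2((1-\alpha)/\alpha)$ vanishes. The grid search is only an illustration of the result, not a substitute for the proof.

```python
import math


def binary_entropy(alpha):
    """H(X) = -a log2 a - (1 - a) log2 (1 - a), taking 0 log 0 as 0."""
    h = 0.0
    for p in (alpha, 1.0 - alpha):
        if p > 0:
            h -= p * math.log2(p)
    return h


def dH_dalpha(alpha):
    """Derivative from Example 16.10: log2((1 - a) / a), valid for 0 < a < 1."""
    return math.log2((1.0 - alpha) / alpha)


# Scan alpha over [0, 1] in steps of 0.001 and locate the maximum numerically.
grid = [i / 1000 for i in range(1001)]
best = max(grid, key=binary_entropy)
print(best, binary_entropy(best), dH_dalpha(best))
```

The derivative is positive for $\alpha < 0.5$ and negative for $\alpha > 0.5$, which is another way of seeing that the stationary point is a maximum.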

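EXAMPLE 16.11. Verify the following expression:

$$0 \le H(X) \le \log_2 m$$

where $m$ is the size of the alphabet of $X$.

Solution: Proof of the lower bound: Since

$$0 \le P(x_i) \le 1$$

we have

$$\frac{1}{P(x_i)} \ge 1 \quad \text{and} \quad \log_2\frac{1}{P(x_i)} \ge 0$$

Then, it follows that

$$P(x_i)\log_2\frac{1}{P(x_i)} \ge 0$$

Thus, $H(X) \ge 0$.

Next, we note that

$$P(x_i)\log_2\frac{1}{P(x_i)} = 0$$

if and only if $P(x_i) = 0$ or $1$. Since $\sum_i P(x_i) = 1$, when $P(x_i) = 1$ then $P(x_j) = 0$ for $j \ne i$. Thus, only in this case, $H(X) = 0$.

Proof of the upper bound: Let us consider two probability distributions $\{P(x_i) = P_i\}$ and $\{Q(x_i) = Q_i\}$ on the alphabet $\{x_i\}$, $i = 1, 2, \ldots, m$, such that

$$\sum_{i=1}^{m} P_i = 1 \quad \text{and} \quad \sum_{i=1}^{m} Q_i = 1 \qquad (i)$$

We know that

$$\sum_{i=1}^{m} P_i\log_2\frac{Q_i}{P_i} = \frac{1}{\ln 2}\sum_{i=1}^{m} P_i\ln\frac{Q_i}{P_i}$$

Next, using the inequality

$$\ln\alpha \le \alpha - 1, \quad \alpha > 0 \qquad (ii)$$

and noting that the equality holds only if $\alpha = 1$, we obtain

$$\sum_{i=1}^{m} P_i\ln\frac{Q_i}{P_i} \le \sum_{i=1}^{m} P_i\left(\frac{Q_i}{P_i} - 1\right) = \sum_{i=1}^{m} Q_i - \sum_{i=1}^{m} P_i = 0$$

by using equations (i) and (ii). Hence, we have

$$\sum_{i=1}^{m} P_i\log_2\frac{Q_i}{P_i} \le 0 \qquad (iii)$$

where the equality holds only if $Q_i = P_i$ for all $i$. Setting $Q_i = \frac{1}{m}$, $i = 1, 2, \ldots, m$, we obtain

$$\sum_{i=1}^{m} P_i\log_2\frac{1}{mP_i} = -\sum_{i=1}^{m} P_i\log_2 P_i - \log_2 m\sum_{i=1}^{m} P_i = H(X) - \log_2 m \le 0$$

Therefore, we get $H(X) \le \log_2 m$. The equality holds only if the symbols in $X$ are equiprobable.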
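The bounds of Example 16.11 can be spot-checked on random distributions. This sketch is illustrative only: `random_distribution` is a hypothetical helper that normalizes random weights, and random trials can only support the inequality, not prove it. The equality cases (equiprobable symbols give $\log_2 m$, a certain symbol gives 0) are asserted directly; the equiprobable case is also the result $H_{\max} = \log_2 M$ of Example 16.14.

```python
import math
import random


def entropy(probs):
    """H(X) = -sum P(x_i) log2 P(x_i) in bits/symbol; zero-probability terms contribute 0."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def random_distribution(m, rng):
    """Hypothetical helper: a random probability vector of length m."""
    weights = [rng.random() + 1e-12 for _ in range(m)]
    total = sum(weights)
    return [w / total for w in weights]


rng = random.Random(42)
for m in (2, 4, 8, 16):
    samples = [entropy(random_distribution(m, rng)) for _ in range(1000)]
    print(f"m = {m:2d}: min H = {min(samples):.3f}, max H = {max(samples):.3f}, "
          f"log2 m = {math.log2(m):.0f}")
    # Equality cases: equiprobable symbols reach log2 m, a certain symbol gives 0.
    assert math.isclose(entropy([1 / m] * m), math.log2(m))
    assert entropy([1.0] + [0.0] * (m - 1)) == 0
```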

EXAMPLE 16.12. Prove that the entropy of an extremely likely message and of an extremely unlikely message is zero.

Solution: 1. In the case of the extremely likely message, there is only one single possible message to be transmitted. Therefore, its probability $p(x_k) = 1$, and

$$H = p(x_k)\log_2\frac{1}{p(x_k)} = 1 \cdot \log_2 1 = 0$$

2. For an extremely unlikely message $x_k$, its probability $p(x_k) \to 0$. Therefore,

$$H = \lim_{p(x_k) \to 0} p(x_k)\log_2\frac{1}{p(x_k)} = 0$$

Thus, the average information or entropy of the most likely and of the most unlikely messages is zero.

EXAMPLE 16.13. For a source transmitting two independent messages $m_1$ and $m_2$ with probabilities $p$ and $(1-p)$ respectively, prove that the entropy is maximum when both the messages are equally likely. Also plot the variation of the entropy ($H$) as a function of the probability ($p$) of one of the messages.

Solution: It is given that message $m_1$ has a probability of $p$ and message $m_2$ has a probability of $(1-p)$. Therefore, the average information per message is given as

$$H = p\log_2\frac{1}{p} + (1-p)\log_2\frac{1}{1-p} \qquad (i)$$

1. Entropy when both the messages are equally likely

When $m_1$ and $m_2$ are equally likely, $p = 1 - p$, i.e., $p = \frac{1}{2}$. Substituting in equation (i), we get

$$H = \frac{1}{2}\log_2 2 + \frac{1}{2}\log_2 2 = 1 \ \text{bit/message}$$

It will be shown in the next part of the example that this value of $H$ is its maximum value.

2. Variation of H with probability p

The following table shows the values of $H$ for different values of $p$. These values are obtained by using equation (i).

| $p$ | $1-p$ | $H$ (bits/message) |
|---|---|---|
| 0 | 1 | 0 |
| 0.2 | 0.8 | 0.72 |
| 0.4 | 0.6 | 0.97 |
| 0.5 | 0.5 | 1 |
| 0.6 | 0.4 | 0.97 |
| 0.8 | 0.2 | 0.72 |
| 1 | 0 | 0 |

Fig. 16.1. Average information H for two messages plotted as a function of the probability p of one of the messages

This variation of $H$ is shown graphically in figure 16.1. It clearly shows that the maximum value of entropy is $H_{\max} = 1$ bit/message, and it is obtained when both the messages are equally likely.
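The table of Example 16.13 can be regenerated directly from equation (i). The short sketch below evaluates that expression, taking the term $0 \cdot \log_2(1/0)$ as 0 at the end points; the function name `entropy_function` is illustrative.

```python
import math


def entropy_function(p):
    """H(p) = p log2(1/p) + (1 - p) log2(1/(1 - p)), with 0 log(1/0) taken as 0."""
    return sum(q * math.log2(1 / q) for q in (p, 1 - p) if q > 0)


print(" p    1-p   H (bits/message)")
for p in (0.0, 0.2, 0.4, 0.5, 0.6, 0.8, 1.0):
    print(f"{p:.1f}  {1 - p:.1f}   {entropy_function(p):.2f}")
```

The symmetry $H(p) = H(1-p)$ visible in the table follows from the fact that swapping $p$ and $1-p$ merely swaps the two terms of the sum.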

The expression on the RHS of equation (i) is frequently encountered in information-theoretic problems. It is called the entropy function and is denoted by $H(p)$:

$$H(p) = p\log_2\frac{1}{p} + (1-p)\log_2\frac{1}{1-p}$$

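EXAMPLE 16.14. For a source transmitting $M$ messages $m_1, m_2, \ldots, m_M$ with probabilities $p_1, p_2, \ldots, p_M$, find the average information per message when all the messages are equally likely.

Solution: The average information per message is

$$H = \sum_{k=1}^{M} p_k\log_2\frac{1}{p_k}$$

When all the messages are equally likely, $p_1 = p_2 = \cdots = p_M = \frac{1}{M}$. Then

$$H = \frac{1}{M}\log_2 M + \frac{1}{M}\log_2 M + \cdots + \frac{1}{M}\log_2 M = M \cdot \frac{1}{M}\log_2 M$$

Hence, $H = \log_2 M$.

Important Point: It can be shown that for $M$ transmitted messages, the maximum value of $H$ is obtained when all the messages are equally likely. Therefore,

$$H_{\max} = \log_2 M$$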

16.10.2. Extension of a Discrete Memoryless Source

It is often useful to consider blocks of symbols (messages) rather than the individual messages. Each such block consists of $n$ successive symbols.

For example, figure 16.2 shows a stream of binary bits, in which each bit (0 or 1) can be considered an individual symbol. We can group 2, 3 or 4 successive bits to form one block, so that $n = 2$, 3 or 4.

Fig. 16.2. Stream of binary bits grouped into blocks

We can view each such block as being produced by an extended source having $M^n$ distinct blocks, where $M$ is the number of distinct messages (symbols) in the original source. For example, if the original source is binary ($M = 2$) and $n = 2$, then the extended source will have $M^n = 2^2 = 4$ distinct blocks (00, 01, 10 and 11). If $n = 3$, then the extended source will have $M^n = 2^3 = 8$ distinct blocks (000 to 111).

In the case of a discrete memoryless source, all the source symbols are statistically independent. So, the probability of a symbol of an extended source (e.g., $p(00)$ or $p(01)$) is equal to the product of the probabilities of the $n$ source symbols that form it. That means, if the original source is a binary source and we form groups of 2, i.e., $n = 2$, then the symbols produced by the extended source are 00, 01, 10 and 11, and their probabilities are as follows:

$$p(00) = p(0) \times p(0)$$
$$p(01) = p(0) \times p(1)$$
$$p(10) = p(1) \times p(0)$$
$$p(11) = p(1) \times p(1)$$

This is because the joint probability $p(AB)$ for statistically independent events $A$ and $B$ is

$$p(AB) = p(A) \cdot p(B)$$

16.10.3. Entropy of the Extended Source

Let the entropy of the original discrete memoryless source be $H(X)$ and that of the extended source be $H(X^n)$. Then, from the discussion so far, we can write that the entropy of the extended source is

$$H(X^n) = nH(X)$$
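The relation $H(X^n) = nH(X)$ can be verified by building the extended source explicitly. In this sketch the binary probabilities $p(0) = 0.25$, $p(1) = 0.75$ are illustrative values, not taken from the text; `extend` is a hypothetical helper that multiplies symbol probabilities, exactly as in the $p(00) = p(0) \times p(0)$ rule above.

```python
import math
from itertools import product


def entropy(probs):
    """H = -sum p log2 p in bits; zero-probability terms contribute 0."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def extend(source, n):
    """n-th extension of a DMS given as {symbol: probability}.

    Because the source is memoryless, P(block) is the product of its symbol probabilities.
    """
    ext = {}
    for block in product(source, repeat=n):
        prob = 1.0
        for s in block:
            prob *= source[s]
        ext["".join(block)] = prob
    return ext


binary = {"0": 0.25, "1": 0.75}   # illustrative probabilities, not from the text
for n in (1, 2, 3):
    ext = extend(binary, n)
    print(n, len(ext), round(entropy(ext.values()), 6), round(n * entropy(binary.values()), 6))
```

Each printed row shows the number of blocks ($M^n$), the entropy of the extended source, and $n$ times the original entropy.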

16.11. INFORMATION RATE

Definition and Mathematical Expression

If the time rate at which source $X$ emits symbols is $r$ (symbols/s), the information rate $R$ of the source is given by

$$R = rH(X) \ \text{b/s} \qquad (16.10)$$

where $R$ is the information rate, $H(X)$ is the entropy or average information, and $r$ is the rate at which symbols are generated.

The information rate $R$ is expressed as an average number of bits of information per second. It is calculated as under:

$$R = \left(r \ \frac{\text{symbols}}{\text{second}}\right) \times \left(H(X) \ \frac{\text{information bits}}{\text{symbol}}\right)$$

or

$$R = \text{information bits/second} \qquad (16.12)$$
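Equation (16.10) is a one-line computation once $H(X)$ is known. The sketch below assumes base-2 logarithms so that $R$ comes out in bits/second; `information_rate` is an illustrative name. For illustration it uses 16 equally likely levels (4 bits each) emitted at $64 \times 10^6$ symbols/s.

```python
import math


def entropy(probs):
    """Average information H(X) in bits/symbol."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def information_rate(probs, r):
    """Equation (16.10): R = r H(X) in bits/s, for r symbols emitted per second."""
    if r < 0:
        raise ValueError("symbol rate r must be non-negative")
    return r * entropy(probs)


# 16 equally likely levels (4 bits each) produced at 64 x 10^6 symbols/s
print(information_rate([1 / 16] * 16, 64e6) / 1e6, "Mb/s")
```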

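16.12. FEW SOLVED EXAMPLES ON ENTROPY AND INFORMATION RATE

EXAMPLE 16.15. A black-and-white TV picture consists of about $2 \times 10^6$ picture elements with 16 different brightness levels, and pictures are repeated at the rate of 32 per second. All picture elements are assumed to be independent, and all levels have equal likelihood of occurrence. Calculate the average rate of information conveyed by this TV picture source.

Solution: The entropy is given by

$$H(X) = \sum_{i=1}^{16} P(x_i)\log_2\frac{1}{P(x_i)} \ \text{b/element} \qquad (i)$$

Here, it is given that $P(x_i) = \frac{1}{16}$. Substituting all the values in equation (i), we get

$$H(X) = 16 \times \frac{1}{16}\log_2 16 = 4 \ \text{b/element}$$

The rate $r$ at which symbols (picture elements) are generated is given by

$$r = 2(10^6)(32) = 64(10^6) \ \text{elements/second}$$

Hence, the average rate of information conveyed is given by

$$R = rH(X) = 64(10^6)(4) = 256(10^6) \ \text{b/s} = 256 \ \text{Mb/s} \quad \text{Ans.}$$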

EXAMPLE 16.16. Given a telegraph source having two symbols, dot and dash. The dot duration is 0.2 s. The dash duration is 3 times the dot duration. The probability of the dot's occurring is twice that of the dash, and the time between symbols is 0.2 s. Calculate the information rate of the telegraph source.

Solution: Given that

$$P(\text{dot}) = 2P(\text{dash})$$
$$P(\text{dot}) + P(\text{dash}) = 3P(\text{dash}) = 1$$

From the above two equations, we have

$$P(\text{dash}) = \frac{1}{3} \quad \text{and} \quad P(\text{dot}) = \frac{2}{3}$$

The entropy is

$$H(X) = -P(\text{dot})\log_2 P(\text{dot}) - P(\text{dash})\log_2 P(\text{dash})$$

Substituting all the values, we get

$$H(X) = 0.667(0.585) + 0.333(1.585) = 0.92 \ \text{b/symbol}$$

Also, given that

$$t_{\text{dot}} = 0.2 \ \text{s}, \quad t_{\text{dash}} = 0.6 \ \text{s}, \quad t_{\text{space}} = 0.2 \ \text{s}$$

Thus, the average time per symbol will be

$$T_s = P(\text{dot})\,t_{\text{dot}} + P(\text{dash})\,t_{\text{dash}} + t_{\text{space}} = 0.5333 \ \text{s/symbol}$$

Further, the average symbol rate will be

$$r = \frac{1}{T_s} = 1.875 \ \text{symbols/s}$$

Therefore, the average information rate of the telegraph source will be

$$R = rH(X) = 1.875(0.92) = 1.725 \ \text{b/s} \quad \text{Ans.}$$
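As a numerical cross-check of Example 16.16, the sketch below recomputes every quantity with unrounded probabilities ($P(\text{dot}) = 2/3$, $P(\text{dash}) = 1/3$). Carrying full precision gives $H(X) \approx 0.918$ b/symbol and $R \approx 1.72$ b/s; the 1.725 b/s above comes from rounding $H(X)$ to 0.92 before multiplying.

```python
import math

# Data of Example 16.16
p_dot, p_dash = 2 / 3, 1 / 3                  # P(dot) = 2 P(dash), P(dot) + P(dash) = 1
t_dot, t_dash, t_space = 0.2, 0.6, 0.2        # seconds; dash = 3 x dot, gap = 0.2 s

H = -(p_dot * math.log2(p_dot) + p_dash * math.log2(p_dash))    # bits/symbol
T_s = p_dot * t_dot + p_dash * t_dash + t_space                # average seconds per symbol
r = 1 / T_s                                                    # symbols per second
R = r * H                                                      # bits per second

print(f"H = {H:.3f} b/symbol, T_s = {T_s:.4f} s, r = {r:.3f} symbols/s, R = {R:.3f} b/s")
```

Note that the inter-symbol gap is added once per symbol regardless of whether a dot or a dash was sent, which is why $t_{\text{space}}$ is not weighted by a probability.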

EXAMPLE 16.17. An analog signal is bandlimited to $f_m$ Hz and sampled at the Nyquist rate. The samples are quantized into 4 levels. Each level represents one symbol; thus, there are 4 symbols. The probabilities of occurrence of these 4 levels (symbols) are $P(x_1) = P(x_4) = \frac{1}{8}$ and $P(x_2) = P(x_3) = \frac{3}{8}$. Obtain the information rate of the source.

Solution: We are given four symbols with probabilities $P(x_1) = P(x_4) = \frac{1}{8}$ and $P(x_2) = P(x_3) = \frac{3}{8}$. The average information $H(X)$ (or entropy) is expressed as

$$H(X) = P(x_1)\log_2\frac{1}{P(x_1)} + P(x_2)\log_2\frac{1}{P(x_2)} + P(x_3)\log_2\frac{1}{P(x_3)} + P(x_4)\log_2\frac{1}{P(x_4)}$$

Substituting all the given values, we get

$$H(X) = \frac{1}{8}\log_2 8 + \frac{3}{8}\log_2\frac{8}{3} + \frac{3}{8}\log_2\frac{8}{3} + \frac{1}{8}\log_2 8$$

or

$$H(X) = 1.8 \ \text{bits/symbol} \quad \text{Ans.}$$

It is given that the signal is sampled at the Nyquist rate. The Nyquist rate for an $f_m$ Hz bandlimited signal is

$$\text{Nyquist rate} = 2f_m \ \text{samples/sec}$$

Since every sample generates one source symbol, the number of symbols per second is $r = 2f_m$ symbols/sec. The information rate is given by

$$R = rH(X)$$

Putting the values of $r$ and $H(X)$ in this equation, we get

$$R = 2f_m \ \text{symbols/sec} \times 1.8 \ \text{bits/symbol} = 3.6f_m \ \text{bits/sec} \quad \text{Ans.}$$

In this example, there are four levels. These four levels may be coded using binary PCM as shown in Table 16.1.

Table 16.1

| S.No. | Symbol or level | Probability | Binary digits |
|---|---|---|---|
| 1 | $Q_1$ | 1/8 | 00 |
| 2 | $Q_2$ | 3/8 | 01 |
| 3 | $Q_3$ | 3/8 | 10 |
| 4 | $Q_4$ | 1/8 | 11 |

Hence, two binary digits (binits) are required to send each symbol. We know that symbols are sent at the rate of $2f_m$ symbols/sec. Therefore, the transmission rate of binary digits will be

$$\text{Binary digits (binits) rate} = 2 \ \text{binits/symbol} \times 2f_m \ \text{symbols/sec} = 4f_m \ \text{binits/sec}$$

Because one binit is capable of conveying 1 bit of information, the above coding scheme is capable of conveying $4f_m$ bits of information per second. But in this example, we have obtained that we are transmitting only $3.6f_m$ bits of information per second. This means that the information-carrying ability of binary PCM is not completely utilized by this transmission scheme.

DO YOU KNOW? Messages produced by information sources consist of sequences of symbols. While the receiver of a message may interpret the entire message as a single unit, communication systems often have to deal with individual symbols.

EXAMPLE 16.18. In the transmission scheme of the last example, obtain the information rate if all symbols are equally likely. Also, comment on the result.

Solution: We are given that there are four symbols. Since they are equally likely, their probabilities will be equal to $\frac{1}{4}$, i.e.,

$$P(x_1) = P(x_2) = P(x_3) = P(x_4) = \frac{1}{4}$$

The average information per symbol is

$$H(X) = \sum_{i=1}^{4} P(x_i)\log_2\frac{1}{P(x_i)} = 4 \times \frac{1}{4}\log_2 4 = 2 \ \text{bits/symbol}$$

We know that the information rate is expressed as

$$R = rH(X)$$

where $r = 2f_m$ symbols/sec, as obtained in the last example. Substituting these values in the above equation, we get

$$R = 2f_m \ \text{symbols/sec} \times 2 \ \text{bits/symbol} = 4f_m \ \text{bits/sec} \quad \text{Ans.}$$

Important point: Just before this example, we have seen that binary-coded PCM with 2 binits per message is capable of conveying $4f_m$ bits of information per second. The transmission scheme discussed in the above example conveys exactly $4f_m$ bits of information per second. This has been made possible because all the messages are equally likely. Thus, with binary PCM coding, the maximum information rate is achieved if all messages are equally likely.
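Examples 16.17 and 16.18 compare the information actually conveyed with the binit rate of fixed-length binary PCM. The sketch below reproduces both cases, expressing every rate in multiples of $f_m$. The helper `pcm_rates` is hypothetical, and it assumes a fixed-length code with $\lceil \log_2 L \rceil$ binits per level (2 binits for 4 levels, as in Table 16.1); the third returned value is simply the ratio of the two rates.

```python
import math


def entropy(probs):
    return -sum(p * math.log2(p) for p in probs if p > 0)


def pcm_rates(probs, fm):
    """Information rate vs. binit rate for an fm-Hz signal sampled at the Nyquist rate
    and coded with fixed-length binary PCM (one codeword per quantization level)."""
    r = 2 * fm                                    # Nyquist sampling: one symbol per sample
    binits_per_symbol = math.ceil(math.log2(len(probs)))
    info_rate = r * entropy(probs)                # bits/s actually conveyed
    binit_rate = r * binits_per_symbol            # binits/s actually sent
    return info_rate, binit_rate, info_rate / binit_rate


fm = 1.0  # express all rates in multiples of f_m
print(pcm_rates([1 / 8, 3 / 8, 3 / 8, 1 / 8], fm))   # Example 16.17
print(pcm_rates([1 / 4] * 4, fm))                    # Example 16.18
```

Unrounded, the first case gives about $3.62f_m$ bits/s (the text rounds $H(X)$ to 1.8, giving $3.6f_m$), against $4f_m$ binits/s; the equiprobable case uses the binits fully.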

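EXAMPLE 16.19. A discrete source emits one of five symbols once every millisecond with probabilities $\frac{1}{2}$, $\frac{1}{4}$, $\frac{1}{8}$, $\frac{1}{16}$ and $\frac{1}{16}$ respectively. Determine the source entropy and information rate.

Solution: We know that the source entropy is given as

$$H(X) = \sum_{i=1}^{5} P(x_i)\log_2\frac{1}{P(x_i)} \ \text{bits/symbol}$$

or

$$H(X) = \frac{1}{2}\log_2 2 + \frac{1}{4}\log_2 4 + \frac{1}{8}\log_2 8 + \frac{1}{16}\log_2 16 + \frac{1}{16}\log_2 16$$

or

$$H(X) = \frac{1}{2} + \frac{1}{2} + \frac{3}{8} + \frac{1}{4} + \frac{1}{4} = 1.875 \ \text{bits/symbol} \quad \text{Ans.}$$

The symbol rate is

$$r = \frac{1}{T_b} = \frac{1}{10^{-3}} = 1000 \ \text{symbols/sec}$$

Therefore, the information rate is expressed as

$$R = rH(X) = 1000 \times 1.875 = 1875 \ \text{bits/sec} \quad \text{Ans.}$$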

EXAMPLE 16.20. The probabilities of the five possible outcomes of an experiment are given as

$$P(x_1) = \frac{1}{2}, \quad P(x_2) = \frac{1}{4}, \quad P(x_3) = \frac{1}{8}, \quad P(x_4) = P(x_5) = \frac{1}{16}$$

Determine the entropy and information rate if there are 16 outcomes per second.

Solution: The entropy of the system is given as

$$H(X) = \sum_{i=1}^{5} P(x_i)\log_2\frac{1}{P(x_i)} \ \text{bits/outcome}$$

or

$$H(X) = \frac{1}{2}\log_2 2 + \frac{1}{4}\log_2 4 + \frac{1}{8}\log_2 8 + \frac{1}{16}\log_2 16 + \frac{1}{16}\log_2 16 = \frac{15}{8} = 1.875 \ \text{bits/outcome}$$

Now, the rate of outcomes is $r = 16$ outcomes/sec. Therefore, the rate of information $R$ will be

$$R = rH(X) = 16 \times \frac{15}{8} = 30 \ \text{bits/sec} \quad \text{Ans.}$$
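Examples 16.19 and 16.20 use the same probabilities, all of the form $1/2^k$. For such dyadic distributions each term $P\log_2(1/P)$ equals $P \cdot k$, so the entropy can be computed exactly with rational arithmetic. The sketch below uses Python's `fractions.Fraction`; `dyadic_entropy` is an illustrative helper that rejects non-dyadic inputs rather than approximating them.

```python
from fractions import Fraction


def dyadic_entropy(probs):
    """Exact H(X) for probabilities 1/2**k: each term P * log2(1/P) equals P * k."""
    h = Fraction(0)
    for p in probs:
        k = p.denominator.bit_length() - 1        # log2 of the denominator
        if p.numerator != 1 or p.denominator != 2 ** k:
            raise ValueError("expected probabilities of the form 1/2**k")
        h += p * k
    return h


probs = [Fraction(1, 2), Fraction(1, 4), Fraction(1, 8), Fraction(1, 16), Fraction(1, 16)]
H = dyadic_entropy(probs)
print(H, float(H))          # entropy in bits/symbol
print(1000 * H)             # Example 16.19: one symbol per millisecond
print(16 * H)               # Example 16.20: 16 outcomes per second
```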

EXAMPLE 16.21. An analog signal bandlimited to 10 kHz is quantized into 8 levels of a PCM system with probabilities $\frac{1}{4}$, $\frac{1}{5}$, $\frac{1}{5}$, $\frac{1}{10}$, $\frac{1}{10}$, $\frac{1}{20}$, $\frac{1}{20}$ and $\frac{1}{20}$ respectively. Find the entropy and the rate of information.

Solution: We know that, according to the sampling theorem, the signal must be sampled at a frequency given as

$$f_s = 10 \times 2 \ \text{kHz} = 20 \ \text{kHz}$$

Considering each of the eight quantized levels as a message, the entropy of the source may be written as

$$H(X) = \frac{1}{4}\log_2 4 + \frac{1}{5}\log_2 5 + \frac{1}{5}\log_2 5 + \frac{1}{10}\log_2 10 + \frac{1}{10}\log_2 10 + \frac{1}{20}\log_2 20 + \frac{1}{20}\log_2 20 + \frac{1}{20}\log_2 20$$

or

$$H(X) = \frac{1}{4}\log_2 4 + \frac{2}{5}\log_2 5 + \frac{1}{5}\log_2 10 + \frac{3}{20}\log_2 20 \approx 2.74 \ \text{bits/message}$$

As the sampling frequency is 20 kHz, messages are produced at the rate $r = 20000$ messages/sec. Hence,

$$R = rH(X) = 20000 \times 2.7414 \approx 54830 \ \text{bits/sec} \quad \text{Ans.}$$
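Because the arithmetic of Example 16.21 is easy to slip on, the sketch below recomputes it. It first checks, with exact fractions, that the eight probabilities sum to 1, then evaluates $H(X)$ and $R = f_s H(X)$ in floating point, assuming one message per Nyquist sample as in the solution.

```python
import math
from fractions import Fraction

levels = [Fraction(1, 4), Fraction(1, 5), Fraction(1, 5), Fraction(1, 10),
          Fraction(1, 10), Fraction(1, 20), Fraction(1, 20), Fraction(1, 20)]
assert sum(levels) == 1                      # the eight probabilities form a valid distribution

H = sum(float(p) * math.log2(1 / float(p)) for p in levels)   # bits/message
fs = 2 * 10_000                              # Nyquist sampling of a 10 kHz signal, samples/s
R = fs * H                                   # bits/s

print(f"H = {H:.4f} bits/message, R = {R:.0f} bits/s")
```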

16.13. THE DISCRETE MEMORYLESS CHANNELS (DMC)

In this section, let us discuss different aspects related to discrete memoryless channels (DMC).

16.13.1. Channel Representation

A communication channel may be defined as the path or medium through which the symbols flow to the receiver end. A discrete memoryless channel (DMC) is a statistical model with an input $X$ and an output $Y$, as shown in figure 16.3. During each unit of time (signaling interval), the channel accepts an input symbol from $X$ and, in response, generates an output symbol from $Y$. The channel is said to be "discrete" when the alphabets of $X$ and $Y$ are both finite. It is said to be "memoryless" when the current output depends on only the current input and not on any of the previous inputs.

A diagram of a DMC with $m$ inputs and $n$ outputs has been illustrated in figure 16.3. The input $X$ consists of input symbols $x_1, x_2, \ldots, x_m$. The probabilities of these source symbols $P(x_i)$ are assumed to be known. The output $Y$ consists of output symbols $y_1, y_2, \ldots, y_n$. Each possible input-to-output path is indicated along with a conditional probability $P(y_j|x_i)$, where $P(y_j|x_i)$ is the conditional probability of obtaining output $y_j$ given that the input is $x_i$, and is called a channel transition probability.

Fig. 16.3. Representation of a discrete memoryless channel

16.13.2. The Channel Matrix

A channel is completely specified by the complete set of transition probabilities. Accordingly, the channel of figure 16.3 is often specified by the matrix of transition probabilities $[P(Y|X)]$. This matrix is given by

$$[P(Y|X)] = \begin{bmatrix} P(y_1|x_1) & P(y_2|x_1) & \cdots & P(y_n|x_1) \\ P(y_1|x_2) & P(y_2|x_2) & \cdots & P(y_n|x_2) \\ \vdots & \vdots & & \vdots \\ P(y_1|x_m) & P(y_2|x_m) & \cdots & P(y_n|x_m) \end{bmatrix}$$

This matrix $[P(Y|X)]$ is called the channel matrix. Since each input to the channel results in some output, each row of the channel matrix must sum to unity. This means that

$$\sum_{j=1}^{n} P(y_j|x_i) = 1 \quad \text{for all } i$$

Now, if the input probabilities $P(X)$ are represented by the row matrix

$$[P(X)] = [P(x_1) \ \ P(x_2) \ \ \cdots \ \ P(x_m)]$$

and the output probabilities $P(Y)$ are represented by the row matrix

$$[P(Y)] = [P(y_1) \ \ P(y_2) \ \ \cdots \ \ P(y_n)]$$

then

$$[P(Y)] = [P(X)][P(Y|X)]$$

Now, if $P(X)$ is represented as a diagonal matrix

$$[P(X)]_d = \begin{bmatrix} P(x_1) & 0 & \cdots & 0 \\ 0 & P(x_2) & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & P(x_m) \end{bmatrix}$$

then

$$[P(X,Y)] = [P(X)]_d[P(Y|X)] \qquad (16.19)$$

where the $(i, j)$ element of matrix $[P(X,Y)]$ has the form $P(x_i, y_j)$. The matrix $[P(X,Y)]$ is known as the joint probability matrix, and the element $P(x_i, y_j)$ is the joint probability of transmitting $x_i$ and receiving $y_j$.

16.14. TYPES OF CHANNELS

16.14.1. Lossless Channel

A channel described by a channel matrix with only one non-zero element in each column is called a lossless channel. An example of a lossless channel has been shown in figure 16.4, and the corresponding channel matrix is given in equation (16.20) as under:

$$[P(Y|X)] = \begin{bmatrix} \frac{3}{4} & \frac{1}{4} & 0 & 0 & 0 \\ 0 & 0 & \frac{1}{3} & \frac{2}{3} & 0 \\ 0 & 0 & 0 & 0 & 1 \end{bmatrix} \qquad (16.20)$$

Fig. 16.4. Lossless channel

It can be shown that in the lossless channel, no source information is lost in transmission.

16.14.2. Deterministic Channel

A channel described by a channel matrix with only one non-zero element in each row is called a deterministic channel. An example of a deterministic channel has been shown in figure 16.5, and the corresponding channel matrix is given by equation (16.21) as under:

$$[P(Y|X)] = \begin{bmatrix} 1 & 0 & 0 \\ 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix} \qquad (16.21)$$

Fig. 16.5. Deterministic channel

Important point: It may be noted that since each row has only one non-zero element and each row of the channel matrix must sum to unity, this element must be unity. Thus, when a given source symbol is sent in the deterministic channel, it is clear which output symbol will be received.

16.14.3. Noiseless Channel

A channel is called noiseless if it is both lossless and deterministic. A noiseless channel has been shown in figure 16.6. The channel matrix has only one element in each row and in each column, and this element is unity. Note that the input and output alphabets are of the same size, that is, $m = n$ for the noiseless channel.

The matrix for a noiseless channel is given by

$$[P(Y|X)] = \begin{bmatrix} 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 1 \end{bmatrix} \qquad (16.22)$$

Fig. 16.6. Noiseless channel
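The three channel types above are defined purely by where the non-zero entries of the channel matrix sit, so they can be recognized mechanically. The sketch below is an illustrative classifier (the function names are hypothetical); it first checks that every row sums to unity, then counts non-zero entries per row and per column. The test matrices follow the non-zero patterns of figures 16.4 to 16.6.

```python
def check_channel_matrix(P, tol=1e-9):
    """Every row of [P(Y|X)] must be a probability distribution, i.e. sum to unity."""
    for row in P:
        if any(v < 0 for v in row) or abs(sum(row) - 1.0) > tol:
            raise ValueError(f"row {row} is not a probability distribution")


def classify_channel(P):
    """Label a channel matrix using the non-zero patterns of Section 16.14."""
    check_channel_matrix(P)
    one_per_row = all(sum(1 for v in row if v > 0) == 1 for row in P)
    one_per_col = all(sum(1 for row in P if row[j] > 0) == 1 for j in range(len(P[0])))
    if one_per_row and one_per_col:
        return "noiseless"        # both lossless and deterministic
    if one_per_col:
        return "lossless"
    if one_per_row:
        return "deterministic"
    return "general"


lossless = [[3 / 4, 1 / 4, 0, 0, 0], [0, 0, 1 / 3, 2 / 3, 0], [0, 0, 0, 0, 1]]
deterministic = [[1, 0, 0], [1, 0, 0], [0, 1, 0], [0, 1, 0], [0, 0, 1]]
noiseless = [[1 if i == j else 0 for j in range(4)] for i in range(4)]
for name, P in [("Fig. 16.4", lossless), ("Fig. 16.5", deterministic), ("Fig. 16.6", noiseless)]:
    print(name, classify_channel(P))
```

The noiseless test comes first because a noiseless matrix also satisfies both the lossless and the deterministic patterns.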

16.14.4. Binary Symmetric Channel (BSC)

The binary symmetric channel (BSC) is defined by the channel diagram shown in figure 16.7, and its channel matrix is given by

$$[P(Y|X)] = \begin{bmatrix} 1-p & p \\ p & 1-p \end{bmatrix} \qquad (16.23)$$

Fig. 16.7. Binary symmetric channel

A BSC has two inputs ($x_1 = 0$, $x_2 = 1$) and two outputs ($y_1 = 0$, $y_2 = 1$). This channel is symmetric because the probability of receiving a 1 if a 0 is sent is the same as the probability of receiving a 0 if a 1 is sent. This common transition probability is denoted by $p$, as shown in figure 16.7.

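EXAMPLE 16.22. Given the binary channel shown in figure 16.8:
(i) Find the channel matrix of the channel.
(ii) Find $P(y_1)$ and $P(y_2)$ when $P(x_1) = P(x_2) = 0.5$.
(iii) Find the joint probabilities $P(x_1, y_2)$ and $P(x_2, y_1)$ when $P(x_1) = P(x_2) = 0.5$.

Fig. 16.8. Binary channel with $P(y_1|x_1) = 0.9$, $P(y_2|x_1) = 0.1$, $P(y_1|x_2) = 0.2$, $P(y_2|x_2) = 0.8$

Solution: (i) We know that the channel matrix is given by

$$[P(Y|X)] = \begin{bmatrix} P(y_1|x_1) & P(y_2|x_1) \\ P(y_1|x_2) & P(y_2|x_2) \end{bmatrix}$$

Substituting all the given values, we get

$$[P(Y|X)] = \begin{bmatrix} 0.9 & 0.1 \\ 0.2 & 0.8 \end{bmatrix} \quad \text{Ans.}$$

(ii) We know that $[P(Y)] = [P(X)][P(Y|X)]$. Substituting all the values, we get

$$[P(Y)] = [0.5 \ \ 0.5]\begin{bmatrix} 0.9 & 0.1 \\ 0.2 & 0.8 \end{bmatrix} = [0.55 \ \ 0.45] = [P(y_1) \ \ P(y_2)]$$

Hence, $P(y_1) = 0.55$ and $P(y_2) = 0.45$. Ans.

(iii) We have $[P(X,Y)] = [P(X)]_d[P(Y|X)]$. Substituting all the values, we get

$$[P(X,Y)] = \begin{bmatrix} 0.5 & 0 \\ 0 & 0.5 \end{bmatrix}\begin{bmatrix} 0.9 & 0.1 \\ 0.2 & 0.8 \end{bmatrix} = \begin{bmatrix} 0.45 & 0.05 \\ 0.1 & 0.4 \end{bmatrix} \qquad (a)$$

Now, let us write the standard matrix form as under:

$$[P(X,Y)] = \begin{bmatrix} P(x_1,y_1) & P(x_1,y_2) \\ P(x_2,y_1) & P(x_2,y_2) \end{bmatrix} \qquad (b)$$

Comparing (a) and (b), we get $P(x_1, y_2) = 0.05$ and $P(x_2, y_1) = 0.1$. Ans.

DO YOU KNOW? The capacity of a noisy (discrete, memoryless) channel is defined as the maximum possible rate of information transmission over the channel.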
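The matrix relations $[P(Y)] = [P(X)][P(Y|X)]$ and $[P(X,Y)] = [P(X)]_d[P(Y|X)]$ used in Example 16.22 can be sketched in plain Python without any matrix library. The helpers `mat_mul` and `diag` are illustrative; the channel values are those of Example 16.22.

```python
def mat_mul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def diag(v):
    """[P(X)]_d: the input probabilities placed on the diagonal."""
    return [[v[i] if i == j else 0.0 for j in range(len(v))] for i in range(len(v))]


P_Y_given_X = [[0.9, 0.1],
               [0.2, 0.8]]                  # channel matrix of Example 16.22
P_X = [0.5, 0.5]

P_Y = mat_mul([P_X], P_Y_given_X)[0]         # [P(Y)] = [P(X)][P(Y|X)]
P_XY = mat_mul(diag(P_X), P_Y_given_X)       # [P(X,Y)] = [P(X)]_d [P(Y|X)]
print("P(Y) =", [round(v, 4) for v in P_Y])
print("P(X,Y) =", [[round(v, 4) for v in row] for row in P_XY])
```

A useful consistency check is that the column sums of $[P(X,Y)]$ reproduce $[P(Y)]$, and all its entries together sum to 1.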

EXAMPLE 16.23. Two binary channels of example 16.22 are connected in cascade as shown in figure 16.9.

Fig. 16.9. Two binary channels in cascade (each with transition probabilities 0.9, 0.1, 0.2 and 0.8)

(i) Find the overall channel matrix of the resultant channel, and draw the resultant equivalent channel diagram.
(ii) Find $P(z_1)$ and $P(z_2)$ when $P(x_1) = P(x_2) = 0.5$.

Solution: (i) We know that

$$[P(Y)] = [P(X)][P(Y|X)]$$
$$[P(Z)] = [P(Y)][P(Z|Y)] = [P(X)][P(Y|X)][P(Z|Y)] = [P(X)][P(Z|X)]$$

Thus, from figure 16.9, we have

$$[P(Z|X)] = [P(Y|X)][P(Z|Y)]$$

Substituting all the values, we get

$$[P(Z|X)] = \begin{bmatrix} 0.9 & 0.1 \\ 0.2 & 0.8 \end{bmatrix}\begin{bmatrix} 0.9 & 0.1 \\ 0.2 & 0.8 \end{bmatrix} = \begin{bmatrix} 0.83 & 0.17 \\ 0.34 & 0.66 \end{bmatrix} \quad \text{Ans.}$$

The resultant equivalent channel diagram has been shown in figure 16.10.

Fig. 16.10. The resultant equivalent channel diagram

(ii) We know that

$$[P(Z)] = [P(X)][P(Z|X)]$$

Substituting all the values, we get

$$[P(Z)] = [0.5 \ \ 0.5]\begin{bmatrix} 0.83 & 0.17 \\ 0.34 & 0.66 \end{bmatrix} = [0.585 \ \ 0.415]$$

Let us write the standard expression as $[P(Z)] = [P(z_1) \ \ P(z_2)]$. Comparing, we obtain $P(z_1) = 0.585$ and $P(z_2) = 0.415$. Ans.
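The cascade rule $[P(Z|X)] = [P(Y|X)][P(Z|Y)]$ extends to any number of stages by repeated matrix multiplication. The sketch below, with illustrative helper names, reproduces Example 16.23 and then chains more copies of the same stage. For this particular stage, the rows approach the same distribution $(2/3, 1/3)$ as stages are added, i.e. the output carries less and less information about which input was sent.

```python
from functools import reduce


def mat_mul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def cascade(*channels):
    """Overall channel matrix of stages in cascade: [P(Y|X)][P(Z|Y)]..."""
    return reduce(mat_mul, channels)


stage = [[0.9, 0.1],
         [0.2, 0.8]]                       # one binary channel of Example 16.22
P_Z_given_X = cascade(stage, stage)        # two stages, as in figure 16.9
P_Z = mat_mul([[0.5, 0.5]], P_Z_given_X)[0]
print([[round(v, 4) for v in row] for row in P_Z_given_X], [round(v, 4) for v in P_Z])

for k in (1, 2, 4, 8):                     # more stages: the rows become alike
    print(k, [[round(v, 3) for v in row] for row in cascade(*[stage] * k)])
```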

EXAMPLE 16.24. Consider a channel whose channel matrix is

$$[P(Y|X)] = \begin{bmatrix} 0.8 & 0.2 & 0 \\ 0 & 0.2 & 0.8 \end{bmatrix}$$

(i) Draw the channel diagram.
(ii) If the source has equally likely outputs, compute the probabilities associated with the channel outputs.

Solution: (i) The channel diagram has been shown in figure 16.11. It may be noted that the channel represented by the above matrix is a binary erasure channel. The binary erasure channel has two inputs $x_1 = 0$ and $x_2 = 1$ and three outputs $y_1 = 0$, $y_2 = e$ and $y_3 = 1$, where $e$ indicates an erasure. This means that the output is in doubt, and hence it should be erased. Ans.

Fig. 16.11. Binary erasure channel

(ii) We know that $[P(Y)]$ is expressed as

$$[P(Y)] = [P(X)][P(Y|X)]$$

Substituting all the values, we get

$$[P(Y)] = [0.5 \ \ 0.5]\begin{bmatrix} 0.8 & 0.2 & 0 \\ 0 & 0.2 & 0.8 \end{bmatrix}$$

Simplifying, we get

$$[P(Y)] = [0.4 \ \ 0.2 \ \ 0.4]$$

Thus, we get $P(y_1) = 0.4$, $P(y_2) = 0.2$ and $P(y_3) = 0.4$. Ans.
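A small sketch of the binary erasure channel of Example 16.24, written for a general erasure probability $q$ (the function `bec` is a hypothetical helper; the example uses $q = 0.2$). It also shows a property visible in the matrix: every input is erased with the same probability $q$, so $P(y_2) = q$ whatever the input distribution.

```python
def output_probabilities(P_X, channel):
    """[P(Y)] = [P(X)][P(Y|X)] for an input row vector and a channel matrix given as rows."""
    return [sum(P_X[i] * channel[i][j] for i in range(len(P_X)))
            for j in range(len(channel[0]))]


def bec(q):
    """Binary erasure channel with erasure probability q; outputs ordered (0, e, 1)."""
    return [[1 - q, q, 0.0],
            [0.0, q, 1 - q]]


print(output_probabilities([0.5, 0.5], bec(0.2)))     # channel of Example 16.24
for alpha in (0.1, 0.5, 0.9):                          # other input distributions
    print(alpha, [round(v, 3) for v in output_probabilities([alpha, 1 - alpha], bec(0.2))])
```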

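16.15. THE CONDITIONAL AND JOINT ENTROPIES

Using the input probabilities $P(x_i)$, output probabilities $P(y_j)$, transition probabilities $P(y_j|x_i)$, and joint probabilities $P(x_i, y_j)$, let us define the following entropy functions for a channel with $m$ inputs and $n$ outputs:

$$H(X) = -\sum_{i=1}^{m} P(x_i)\log_2 P(x_i)$$

$$H(Y) = -\sum_{j=1}^{n} P(y_j)\log_2 P(y_j)$$

$$H(X|Y) = -\sum_{j=1}^{n}\sum_{i=1}^{m} P(x_i, y_j)\log_2 P(x_i|y_j)$$

$$H(Y|X) = -\sum_{j=1}^{n}\sum_{i=1}^{m} P(x_i, y_j)\log_2 P(y_j|x_i)$$

Consider the binary symmetric channel (BSC) of figure 16.14, with input probabilities $P(x_1) = \alpha$, $P(x_2) = 1 - \alpha$ and transition probability $p$.

Fig. 16.14. Binary symmetric channel (BSC)

(i) The channel matrix and the joint probability matrix are

$$[P(Y|X)] = \begin{bmatrix} 1-p & p \\ p & 1-p \end{bmatrix}$$

$$[P(X,Y)] = \begin{bmatrix} \alpha & 0 \\ 0 & 1-\alpha \end{bmatrix}\begin{bmatrix} 1-p & p \\ p & 1-p \end{bmatrix} = \begin{bmatrix} \alpha(1-p) & \alpha p \\ (1-\alpha)p & (1-\alpha)(1-p) \end{bmatrix} = \begin{bmatrix} P(x_1,y_1) & P(x_1,y_2) \\ P(x_2,y_1) & P(x_2,y_2) \end{bmatrix}$$

We know that

$$H(Y|X) = -\sum_{j=1}^{2}\sum_{i=1}^{2} P(x_i, y_j)\log_2 P(y_j|x_i)$$

By the above expression, we have

$$H(Y|X) = -P(x_1,y_1)\log_2 P(y_1|x_1) - P(x_1,y_2)\log_2 P(y_2|x_1) - P(x_2,y_1)\log_2 P(y_1|x_2) - P(x_2,y_2)\log_2 P(y_2|x_2)$$

$$= -\alpha(1-p)\log_2(1-p) - \alpha p\log_2 p - (1-\alpha)p\log_2 p - (1-\alpha)(1-p)\log_2(1-p)$$

$$= -p\log_2 p - (1-p)\log_2(1-p)$$

Therefore,

$$I(X;Y) = H(Y) - H(Y|X) = H(Y) + p\log_2 p + (1-p)\log_2(1-p)$$

(ii) When $\alpha = 0.5$ and $p = 0.1$, we have

$$[P(Y)] = [P(X)][P(Y|X)] = [0.5 \ \ 0.5]\begin{bmatrix} 0.9 & 0.1 \\ 0.1 & 0.9 \end{bmatrix} = [0.5 \ \ 0.5]$$

Thus, $P(y_1) = P(y_2) = 0.5$. Now, using the expression

$$H(Y) = -\sum_{j=1}^{2} P(y_j)\log_2 P(y_j) = -0.5\log_2 0.5 - 0.5\log_2 0.5 = 1$$

and

$$p\log_2 p + (1-p)\log_2(1-p) = 0.1\log_2 0.1 + 0.9\log_2 0.9 = -0.469$$

we get

$$I(X;Y) = 1 - 0.469 = 0.531 \quad \text{Ans.}$$

(iii) When $\alpha = 0.5$ and $p = 0.5$, we have

$$[P(Y)] = [0.5 \ \ 0.5]\begin{bmatrix} 0.5 & 0.5 \\ 0.5 & 0.5 \end{bmatrix} = [0.5 \ \ 0.5], \qquad H(Y) = 1$$

$$p\log_2 p + (1-p)\log_2(1-p) = 0.5\log_2 0.5 + 0.5\log_2 0.5 = -1$$

Thus, $I(X;Y) = 1 - 1 = 0$.

Important point: It may be noted that in this case ($p = 0.5$), no information is being transmitted at all. An equally acceptable decision could be made by dispensing with the channel entirely and "flipping a coin" at the receiver. When $I(X;Y) = 0$, the channel is said to be useless.

DO YOU KNOW? Telephone channels that are affected by switching transients and dropouts, and microwave radio links that are subjected to fading, are examples of channels with memory.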

[L8.17._ THE CHANNEL CAPACITY

In this

Thus 1¥)

(i) When a= 0.5 and p.

5 log, 0.5+ 0.5 log, 0.5=—1

Hence Proved.

ection, Jet us discuss various aspects regarding channel capacity.

16.17.1. Channel Capacity Per Symbol $C_s$

The channel capacity per symbol of a discrete memoryless channel (DMC) is defined as

$$C_s = \max_{\{P(x_i)\}} I(X;Y) \quad \text{b/symbol} \tag{16.35}$$

where the maximization is over all possible input probability distributions $\{P(x_i)\}$ on $X$. Note that the channel capacity $C_s$ is a function of only the channel transition probabilities which define the channel.

16.17.2. Channel Capacity Per Second $C$

If $r$ symbols are being transmitted per second, then the maximum rate of transmission of information per second is $rC_s$. This is the channel capacity per second and is denoted by $C$ (b/s), i.e.,

$$C = rC_s \quad \text{b/s} \tag{16.36}$$
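Equation (16.35) defines $C_s$ as a maximum but does not say how to find the maximizing input distribution. One standard numerical method, not described in this text, is the Blahut-Arimoto algorithm, which repeatedly reweights the input probabilities. The sketch below is a minimal Python version of it; the iteration count and the symbol rate $r$ in the usage line are arbitrary assumptions.

```python
from math import log2


def blahut_arimoto(channel, iterations=500):
    """Approximate Cs = max over {P(x_i)} of I(X;Y), in bits/symbol.

    channel[i][j] is the transition probability P(y_j | x_i).
    Returns (capacity, input distribution that attains it).
    """
    m, n = len(channel), len(channel[0])
    p = [1.0 / m] * m  # start from equally likely inputs

    def divergences(p):
        q = [sum(p[i] * channel[i][j] for i in range(m)) for j in range(n)]  # P(y_j)
        # d[i] = sum_j P(y_j|x_i) log2(P(y_j|x_i) / P(y_j))
        return [sum(channel[i][j] * log2(channel[i][j] / q[j])
                    for j in range(n) if channel[i][j] > 0) for i in range(m)]

    for _ in range(iterations):
        d = divergences(p)
        weights = [p[i] * 2 ** d[i] for i in range(m)]  # multiplicative update
        total = sum(weights)
        p = [w / total for w in weights]

    d = divergences(p)
    capacity = sum(p[i] * d[i] for i in range(m))  # I(X;Y) for the final p
    return capacity, p


bsc = [[0.9, 0.1], [0.1, 0.9]]   # BSC with p = 0.1
cs, p_best = blahut_arimoto(bsc)
r = 1000                         # hypothetical symbol rate, symbols/s
print(round(cs, 3), [round(v, 3) for v in p_best], round(r * cs, 1))
```

For symmetric channels such as the BSC, the equally likely starting distribution is already optimal, so the algorithm only confirms it; for asymmetric channels the weights drift toward the capacity-achieving distribution.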

16.17.3. Capacities of Special Channels

In this subsection, let us discuss the capacities of various special channels.

1. Lossless Channel

For a lossless channel, $H(X|Y) = 0$, and

$$I(X;Y) = H(X) \tag{16.37}$$

Thus, the mutual information (information transfer) is equal to the input (source) entropy, and no source information is lost in transmission. Consequently, the channel capacity per symbol will be

$$C_s = \max_{\{P(x_i)\}} H(X) = \log_2 m \tag{16.38}$$

where $m$ is the number of symbols in $X$.

2. Deterministic Channel

For a deterministic channel, $H(Y|X) = 0$ for all input distributions $\{P(x_i)\}$, and

$$I(X;Y) = H(Y) \tag{16.39}$$

Thus, the information transfer is equal to the output entropy. The channel capacity per symbol will be

$$C_s = \max_{\{P(x_i)\}} H(Y) = \log_2 n \tag{16.40}$$

where $n$ is the number of symbols in $Y$.

3. Noiseless Channel

Since a noiseless channel is both lossless and deterministic, we have

$$I(X;Y) = H(X) = H(Y) \tag{16.41}$$

and the channel capacity per symbol is

$$C_s = \log_2 m = \log_2 n \tag{16.42}$$

4. Binary Symmetric Channel (BSC)

For the binary symmetric channel (BSC), the mutual information is

$$I(X;Y) = H(Y) + p\log_2 p + (1-p)\log_2(1-p)$$

and the channel capacity per symbol will be

$$C_s = 1 + p\log_2 p + (1-p)\log_2(1-p)$$
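The four results can be collected into small helper functions. The names below are ours and the arguments in the usage lines are hypothetical; the sketch only evaluates equations (16.38), (16.40), (16.42) and the BSC expression.

```python
from math import log2


def lossless_capacity(m):
    """Cs = log2 m, where m is the number of input symbols (16.38)."""
    return log2(m)


def deterministic_capacity(n):
    """Cs = log2 n, where n is the number of output symbols (16.40)."""
    return log2(n)


def noiseless_capacity(m):
    """Cs = log2 m = log2 n, since a noiseless channel has m = n (16.42)."""
    return log2(m)


def bsc_capacity(p):
    """Cs = 1 + p log2 p + (1 - p) log2(1 - p) for a BSC with crossover probability p."""
    if p in (0.0, 1.0):
        return 1.0  # a channel that never (or always) flips each bit is error-free
    return 1 + p * log2(p) + (1 - p) * log2(1 - p)


print(lossless_capacity(4), deterministic_capacity(8), noiseless_capacity(2))  # 2.0 3.0 1.0
print(round(bsc_capacity(0.1), 3), bsc_capacity(0.5))  # 0.531 0.0
```

The BSC result falls to zero at $p = 0.5$, which is the "useless channel" case discussed earlier.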

EXAMPLE 16.32. Verify the following expression:

$$C_s = \log_2 m$$

where $C_s$ is the channel capacity of a lossless channel and $m$ is the number of symbols in $X$.

Solution: For a lossless channel, we have

$$H(X|Y) = 0$$

Then, by equation (16.30), we have

$$I(X;Y) = H(X) - H(X|Y) = H(X)$$

Hence, by equation (16.35), and since $H(X)$ attains its maximum value $\log_2 m$ when all $m$ symbols are equally likely, we have

$$C_s = \max_{\{P(x_i)\}} I(X;Y) = \max_{\{P(x_i)\}} H(X) = \log_2 m \qquad \text{Hence proved.}$$

EXAMPLE 16.33. Verify the following expression:

$$C_s = 1 + p\log_2 p + (1-p)\log_2(1-p)$$

where $C_s$ is the channel capacity of a BSC (figure 16.14).

Solution: We know that the mutual information $I(X;Y)$ of a BSC is given by

$$I(X;Y) = H(Y) + p\log_2 p + (1-p)\log_2(1-p)$$

which is maximum when $H(Y)$ is maximum. Since the channel output is binary, $H(Y)$ is maximum when each output has a probability of 0.5, and this is achieved for equally likely inputs. For this case $H(Y) = 1$, and the channel capacity is

$$C_s = \max_{\{P(x_i)\}} I(X;Y) = 1 + p\log_2 p + (1-p)\log_2(1-p) \qquad \text{Hence proved.}$$

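EXAMPLE 16.34. Find the channel capacity of the binary erasure channel of figure 16.15.

Solution: Let $P(x_1) = \alpha$. Then $P(x_2) = 1 - \alpha$. The channel matrix is

$$[P(Y|X)] = \begin{bmatrix}1-p & p & 0\\ 0 & p & 1-p\end{bmatrix} = \begin{bmatrix}P(y_1|x_1) & P(y_2|x_1) & P(y_3|x_1)\\ P(y_1|x_2) & P(y_2|x_2) & P(y_3|x_2)\end{bmatrix}$$

Using equation (16.17), we have

$$[P(Y)] = [\alpha \;\; 1-\alpha]\begin{bmatrix}1-p & p & 0\\ 0 & p & 1-p\end{bmatrix} = [\alpha(1-p) \;\; p \;\; (1-\alpha)(1-p)] = [P(y_1) \;\; P(y_2) \;\; P(y_3)]$$

By using equation (16.19), we have

$$[P(X,Y)] = \begin{bmatrix}\alpha & 0\\ 0 & 1-\alpha\end{bmatrix}\begin{bmatrix}1-p & p & 0\\ 0 & p & 1-p\end{bmatrix} = \begin{bmatrix}\alpha(1-p) & \alpha p & 0\\ 0 & (1-\alpha)p & (1-\alpha)(1-p)\end{bmatrix}$$

$$= \begin{bmatrix}P(x_1,y_1) & P(x_1,y_2) & P(x_1,y_3)\\ P(x_2,y_1) & P(x_2,y_2) & P(x_2,y_3)\end{bmatrix}$$

In addition, from equations (16.24) and (16.26), we can calculate

$$H(Y) = -\alpha(1-p)\log_2[\alpha(1-p)] - p\log_2 p - (1-\alpha)(1-p)\log_2[(1-\alpha)(1-p)]$$

$$= (1-p)[-\alpha\log_2\alpha - (1-\alpha)\log_2(1-\alpha)] - p\log_2 p - (1-p)\log_2(1-p)$$

$$H(Y|X) = -\alpha(1-p)\log_2(1-p) - \alpha p\log_2 p - (1-\alpha)p\log_2 p - (1-\alpha)(1-p)\log_2(1-p)$$

$$= -p\log_2 p - (1-p)\log_2(1-p)$$

Thus, since $I(X;Y) = H(Y) - H(Y|X)$,

$$I(X;Y) = (1-p)[-\alpha\log_2\alpha - (1-\alpha)\log_2(1-\alpha)] = (1-p)H(X)$$

And by equation (16.35),

$$C_s = \max_{\{P(x_i)\}} I(X;Y) = \max_{\{P(x_i)\}} (1-p)H(X) = (1-p)\max_{\{P(x_i)\}} H(X) = 1 - p$$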
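A quick numerical check of this result: the sketch below evaluates $I(X;Y) = H(Y) - H(Y|X)$ for the BEC over a grid of input probabilities $\alpha$ and picks the largest value. The erasure probability $p = 0.2$ and the grid spacing of 0.01 are arbitrary choices for illustration, and the function names are ours.

```python
from math import log2


def entropy(probs):
    """Entropy in bits, skipping zero-probability terms."""
    return -sum(q * log2(q) for q in probs if q > 0)


def bec_mutual_information(alpha, p):
    """I(X;Y) = H(Y) - H(Y|X) for a BEC with P(x1) = alpha and erasure probability p."""
    p_y = [alpha * (1 - p), p, (1 - alpha) * (1 - p)]  # [P(y1) P(y2) P(y3)]
    return entropy(p_y) - entropy([p, 1 - p])          # H(Y|X) = -p log2 p - (1-p) log2(1-p)


p = 0.2  # hypothetical erasure probability
grid = [k / 100 for k in range(1, 100)]
best_alpha = max(grid, key=lambda a: bec_mutual_information(a, p))
print(best_alpha, round(bec_mutual_information(best_alpha, p), 6))  # 0.5 0.8
```

The maximum sits at equally likely inputs and equals $1 - p$, in agreement with $I(X;Y) = (1-p)H(X)$.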

For a noiseless channel, $N = 0$ and the channel capacity will be infinite. However, practically, $N$ is always finite, and therefore the channel capacity is finite.

The expression $C = B\log_2(1 + S/N)$ is known as the Hartley-Shannon law and is treated as the central theorem of information theory. From the Hartley-Shannon law, it is obvious that the bandwidth and the signal power may be exchanged for one another. To transmit information at a given rate, we may reduce the transmitted signal power provided that the bandwidth is increased correspondingly. Similarly, the bandwidth may be reduced if we are willing to increase the signal power. The process of modulation is actually a means of effecting this exchange between the bandwidth and the signal-to-noise ratio.

DO YOU KNOW? The communication system is designed to reproduce at the receiver, either exactly or approximately, the message emitted by the source.

Important Point: It may be noted that the channel capacity represents the maximum amount of information that can be transmitted by a channel per second. To achieve this rate of transmission, the information has to be processed properly or coded in the most efficient manner.

16.21. TRANSMISSION OF CONTINUOUS SIGNALS

Theoretical Aspect

Now, one important question arises: How much information does each sample contain? It depends upon how many discrete levels or values the samples may assume. In fact, the samples can assume any value, and hence, to transmit such samples, we require pulses capable of assuming infinitely many levels. Clearly, the information carried by each sample is infinite. Therefore, the information contained in a continuous bandlimited signal is infinite.

In the presence of noise, the channel capacity is finite. Therefore, it is impossible to transmit the complete information in a bandlimited signal by a physical channel in the presence of noise.

In the absence of noise ($N = 0$), the channel capacity is infinite, and hence any desired signal can be transmitted. It is quite obvious that it is impossible to transmit the complete information contained in a continuous signal unless the transmitted signal power is made infinite. Because of the presence of noise, there is always a certain amount of uncertainty in the received signal. In fact, the transmission of complete information in a signal would mean zero uncertainty. Actually, the amount of uncertainty can be made arbitrarily small by increasing the channel capacity*. However, it can never be made zero.

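The channel capacity does not become infinite even if the bandwidth is made infinite. This is because the noise is white, i.e., its power is spread uniformly over the entire frequency range. Therefore, as the bandwidth increases, the noise power also increases, and hence the channel capacity remains finite even for infinite bandwidth.

With a noise power spectral density $\eta$, we have $N = \eta B$, and

$$C = B\log_2\left(1 + \frac{S}{\eta B}\right) = \frac{S}{\eta}\cdot\frac{\eta B}{S}\log_2\left(1 + \frac{S}{\eta B}\right) = \frac{S}{\eta}\log_2\left(1 + \frac{S}{\eta B}\right)^{\eta B/S}$$

The above limit may be found with the help of the following standard expression:

$$\lim_{x \to 0}(1 + x)^{1/x} = e$$

Therefore, we have

$$C_\infty = \lim_{B \to \infty} C = \frac{S}{\eta}\log_2 e = 1.44\,\frac{S}{\eta}$$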

(****) The improvement in the signal-to-noise ratio in wideband FM and PCM can be properly understood …

(**) That finite value of N.

(***) Existing over the same band.

(*) By increasing the bandwidth and/or increasing the signal power.
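The approach of $C$ to the finite limit $1.44\,S/\eta$ can be seen by evaluating $C = B\log_2(1 + S/\eta B)$ for growing $B$. The Python sketch below uses hypothetical values $S = 1$ W and $\eta = 10^{-3}$ W/Hz, for which the limit is about 1442.7 b/s; the function name `capacity` is ours.

```python
from math import e, log2


def capacity(bandwidth, signal_power, eta):
    """C = B log2(1 + S / (eta B)) in b/s, with white-noise power N = eta B."""
    return bandwidth * log2(1 + signal_power / (eta * bandwidth))


S, eta = 1.0, 1e-3                  # hypothetical values: S in watts, eta in W/Hz
limit = (S / eta) * log2(e)         # C_inf = (S/eta) log2 e, about 1.44 S/eta
for B in (1e2, 1e3, 1e4, 1e6):
    print(f"B = {B:>9.0f} Hz   C = {capacity(B, S, eta):7.1f} b/s")
print(f"limit          C = {limit:7.1f} b/s")
```

Each tenfold increase in bandwidth buys less extra capacity than the one before, because the added bandwidth also lets in proportionally more noise.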

16.22. UNCERTAINTY IN THE TRANSMISSION PROCESS

As a matter of fact, uncertainty is introduced in the process of transmission. Therefore, although it is possible to transmit the complete information in a continuous signal at the transmitter end, it is impossible to recover this infinite amount of information at the receiver end. The amount of information that can be recovered per second at the receiver is $C$ bits per second, where $C$ is the channel capacity.

In place of transmitting all of the information at the transmitter, we can approximate the signal so that its information content is reduced to $C$ bits per second. Now, it will be possible to recover all of the information that has been transmitted. In fact, this is what happens in pulse code modulation (PCM) systems.

Now, the question arises: How can we approximate a signal so that the approximated signal has a finite information content per second?

In fact, this can be done by a process known as quantization. For illustration, let us consider the continuous signal bandlimited to $f_m$ Hz shown in figure 16.16. To transmit the information in this signal, we need to transmit only $2f_m$ samples per second. Figure 16.16 also shows the samples.

The samples can take any value, and to transmit them directly, we would require pulses which can assume an infinite number of levels. Therefore, instead of transmitting the exact values of these pulses, we round off the amplitudes to the nearest one of a finite number of permitted values. In this example, all the pulses are approximated to the nearest tenth of a volt.

Fig. 16.16. Continuous signal bandlimited to $f_m$ Hz and its samples (amplitude in volts).

It may be noted from the figure that each of the pulses transmitted assumes any one of 16 levels, and thus carries an amount of information of $\log_2 16 = 4$ bits. Also, since there are $2f_m$ samples per second, the total information content of the approximated signal is $8f_m$ bits per second. If the channel capacity is greater than or equal to $8f_m$ bits per second, all the information that has been transmitted will be recovered completely without any uncertainty. This means that the received signal will be an exact replica of the approximated signal that was transmitted. It can be shown that if the channel capacity is $8f_m$ bits per second, the process of transmission does not introduce an additional degree of uncertainty.

Now, let us consider that we are using a channel of bandwidth $f_m$ Hz to transmit these samples. Since the channel capacity required will be $8f_m$ bits per second, the required signal-to-noise power ratio will be given by

$$C = f_m\log_2\left(1 + \frac{S}{N}\right) = 8f_m \quad\Rightarrow\quad \frac{S}{N} = 2^8 - 1 = 255$$
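The bookkeeping in this paragraph can be sketched in Python. The 0.1 V step and the 16 levels come from the text; the sample values, the bandwidth $f_m = 4$ kHz and the function name `quantize` are hypothetical choices for illustration.

```python
from math import log2


def quantize(samples, step=0.1):
    """Round each sample to the nearest multiple of `step` volts."""
    return [round(round(s / step) * step, 10) for s in samples]


levels = 16                             # permitted amplitudes, as in figure 16.16
bits_per_sample = log2(levels)          # log2 16 = 4 bits
f_m = 4000.0                            # hypothetical signal bandwidth, Hz
info_rate = 2 * f_m * bits_per_sample   # 2 f_m samples/s x 4 bits = 8 f_m b/s
snr = 2 ** (info_rate / f_m) - 1        # from f_m log2(1 + S/N) = 8 f_m

print(quantize([0.43, 1.27, 0.06]))     # [0.4, 1.3, 0.1]
print(bits_per_sample, info_rate, snr)  # 4.0 32000.0 255.0
```

The required $S/N$ of 255 does not depend on the particular value chosen for $f_m$, since both the information rate and the channel bandwidth scale with it.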

Important Point: It is obvious that in this case, although the process of transmission introduces some noise in the desired signal, the 16 levels are far enough apart to be distinguishable at the receiver. We have already discussed that the number of levels that can be distinguished at the receiver is $\sqrt{1 + S/N} = \sqrt{256} = 16$. Hence, the receiver can distinguish the 16 states without error. In other words, we can say that a channel of capacity $8f_m$ bits per second can transmit information at $8f_m$ bits per second virtually without error.

16.23. EXCHANGE OF BANDWIDTH FOR SIGNAL-TO-NOISE RATIO

As a matter of fact, a given signal can be transmitted with a given amount of uncertainty by a channel of finite capacity. We have already discussed that a given channel capacity may be obtained by any number of combinations of bandwidth and signal power. Actually, it is possible to exchange one for the other. Now, let us discuss how such an exchange can be effected.

Again, let us consider the approximated signal of figure 16.16, which carries $8f_m$ bits per second. When each of the $2f_m$ samples per second is sent as one pulse that can assume 16 levels over a channel of bandwidth $f_m$ Hz, the receiver has to distinguish 16 states. In this case,

$$C = f_m\log_2\left(1 + \frac{S}{N}\right) = f_m\log_2(16)^2 = 8f_m \text{ bits per second}$$

Now, let us send each sample as a group of two pulses, each of which can assume only four distinct states ($4 \times 4 = 16$ combinations). In this case, the signal-to-noise ratio required for the receiver to distinguish pulses that assume four distinct states is

$$\frac{S}{N} = 4^2 - 1 = 15$$

Hence, in this mode of transmission, the required signal power is reduced. But now, we have to transmit twice as many pulses per second, i.e., $4f_m$ pulses per second. Hence, the required bandwidth is $2f_m$ Hz. In this case, the channel capacity $C$ will be

$$C = 2f_m\log_2\left(1 + \frac{S}{N}\right) = 2f_m\log_2(4)^2 = 8f_m \text{ bits per second}$$
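The exchange can be tabulated by inverting $C = B\log_2(1 + S/N)$ for the $S/N$ needed at a fixed $C = 8f_m$. The Python sketch below normalises $f_m$ to 1 Hz (an assumption that does not change the ratios) and also reports $\sqrt{1 + S/N}$, the number of amplitude levels that, by the relation quoted in many texts, the receiver can distinguish; the function name `required_snr` is ours.

```python
from math import log10, sqrt


def required_snr(capacity, bandwidth):
    """Invert C = B log2(1 + S/N) to get the S/N power ratio needed."""
    return 2 ** (capacity / bandwidth) - 1


f_m = 1.0        # signal bandwidth, normalised to 1 Hz
C = 8 * f_m      # information rate of the quantised signal, 8 f_m b/s

for bandwidth in (f_m, 2 * f_m):
    snr = required_snr(C, bandwidth)
    levels = sqrt(1 + snr)  # distinguishable levels per pulse
    print(f"B = {bandwidth:g} f_m: S/N = {snr:g} ({10 * log10(snr):.1f} dB), "
          f"{levels:g} levels per pulse")
```

Doubling the bandwidth cuts the required $S/N$ from 255 (about 24.1 dB) to 15 (about 11.8 dB), which is the trade the section describes.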

16.24. PRACTICAL COMMUNICATION SYSTEMS IN LIGHT OF SHANNON'S EQUATION

Let us now discuss the implications of the information capacity theorem in the context of a Gaussian channel which is bandlimited as well as power limited. To do so, we have to define an ideal system.

Ideal System

An ideal system may be defined as a system that transmits data at a bit rate $R$ which is equal to the information capacity $C$. Therefore,

Bit rate $R$ = Information capacity $C$

The average transmitted power of an ideal system can be expressed as

$$S = \text{Bit rate} \times \text{Energy per bit} = R E_b$$

where $E_b$ is the transmitted energy per bit. But $R = C$ for an ideal system, so

$$S = C E_b \tag{16.56}$$

Hence, with the noise power $N = N_0 B$, the information capacity of an ideal system is given by

$$C = B\log_2\left(1 + \frac{S}{N}\right) = B\log_2\left(1 + \frac{E_b}{N_0}\cdot\frac{C}{B}\right)$$

or

$$\frac{C}{B} = \log_2\left(1 + \frac{E_b}{N_0}\cdot\frac{C}{B}\right) \tag{16.57}$$

or

$$\frac{E_b}{N_0} = \frac{2^{C/B} - 1}{C/B} \tag{16.58}$$
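Equation (16.58) gives the $E_b/N_0$ an ideal system needs at each spectral efficiency $C/B$. The Python sketch below evaluates it for a few arbitrarily chosen values of $C/B$; as $C/B \to 0$ (unlimited bandwidth) the required ratio falls toward $\ln 2 \approx 0.693$, i.e. about $-1.6$ dB. The function name `ebn0_ideal` is ours.

```python
from math import log, log10


def ebn0_ideal(spectral_efficiency):
    """Eb/N0 = (2**(C/B) - 1) / (C/B) for an ideal system (R = C), equation (16.58)."""
    x = spectral_efficiency
    return (2 ** x - 1) / x


for x in (8, 4, 1, 0.1, 0.001):
    ratio = ebn0_ideal(x)
    print(f"C/B = {x:>6} b/s/Hz: Eb/N0 = {ratio:9.4f} ({10 * log10(ratio):6.2f} dB)")
print(f"ln 2 = {log(2):.4f} ({10 * log10(log(2)):.2f} dB)")
```

High spectral efficiency is expensive in energy: at $C/B = 8$ the ideal system already needs $E_b/N_0$ of about 31.9 (15 dB).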

16.24.1. Bandwidth Efficiency Diagram

Fig. 16.17. Bandwidth efficiency diagram: the capacity boundary $R = C$ (ideal system) separates the region $R > C$ (practically impossible systems) from the region $R < C$ (practically possible systems).

Observations based on figure 16.17

Some important observations based on the bandwidth efficiency diagram may be listed as under:

1. Shannon's limit: For an infinite bandwidth, the ratio $E_b/N_0$ approaches its limiting value, which is denoted by $(E_b/N_0)_\infty$:

$$\left(\frac{E_b}{N_0}\right)_\infty = \lim_{B\to\infty}\left(\frac{E_b}{N_0}\right) = \ln 2 = 0.693, \text{ or } -1.6 \text{ dB}$$

This is called the Shannon limit for an AWGN channel, as shown in figure 16.17. The corresponding limiting value of the channel capacity is denoted by $C_\infty$, which corresponds to infinite bandwidth. We have already obtained the value of $C_\infty$, and it is given by

$$C_\infty = 1.44\,\frac{S}{N_0} \text{ b/s}$$

2. The capacity boundary: Figure 16.17 shows the capacity boundary curve. It is the curve corresponding to the critical bit rate $R = C$, which corresponds to an ideal system. The capacity boundary separates the practically impossible systems ($R > C$) from the practically possible systems ($R < C$).

… $R > C$, error-free transmission …

(iii) The required S/N ratio can be found by

$$C = 10^4\log_2\left(1 + \frac{S}{N}\right) \geq 8 \times 10^4$$

or

$$\log_2\left(1 + \frac{S}{N}\right) \geq 8 \quad\Rightarrow\quad 1 + \frac{S}{N} \geq 2^8 = 256$$

or

$$\frac{S}{N} \geq 255 \; (= 24.1 \text{ dB})$$

Thus, the required S/N ratio must be greater than or equal to 24.1 dB for error-free transmission. Ans.

(iv) The required bandwidth $B$ can be found by

$$C = B\log_2(1 + 100) \geq 8 \times 10^4$$

or

$$B \geq \frac{8 \times 10^4}{\log_2 101} = 1.2 \times 10^4 \text{ Hz} = 12 \text{ kHz}$$

Thus, the required bandwidth of the channel must be greater than or equal to 12 kHz. Ans.
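The figures in parts (iii) and (iv) can be reproduced with a few lines of Python. The values $R = 8 \times 10^4$ b/s, $B = 10^4$ Hz and $S/N = 100$ (20 dB) are taken from the working above; the variable names are ours.

```python
from math import log10, log2

R = 8e4        # source information rate, b/s (value used in the solution)
B = 1e4        # channel bandwidth, Hz
snr = 100      # 20 dB as a power ratio

C = B * log2(1 + snr)               # capacity of the given channel
snr_needed = 2 ** (R / B) - 1       # smallest S/N giving C >= R at B = 10 kHz
B_needed = R / log2(1 + snr)        # smallest B giving C >= R at S/N = 100

print(f"C = {C:.0f} b/s, R <= C: {R <= C}")
print(f"S/N needed = {snr_needed:.0f} ({10 * log10(snr_needed):.1f} dB)")
print(f"B needed = {B_needed:.0f} Hz")
```

With the given 10 kHz, 20 dB channel the capacity is only about 66.6 kb/s, below the 80 kb/s source rate, which is why either the $S/N$ or the bandwidth has to be raised.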

16.25. THE SOURCE CODING

1. Definition

A conversion of the output of a discrete memoryless source (DMS) into a sequence of binary symbols (i.e., a binary code word) is called source coding. The device that performs this conversion is called the source encoder.

(Figure: discrete memoryless source (DMS) with alphabet $X = \{x_1, x_2, \ldots, x_m\}$ feeding the source encoder.)

2. Objective of Source Coding

An objective of source coding is to minimize the average bit rate required for the representation of the source by reducing the redundancy of the information source.

16.25.1. Few Terms Related to Source Coding Process

In this subsection, let us study the following terms which are related to the source coding process:

(i) Codeword length

(ii) Code efficiency

1. Codeword Length

… five independent symbols A, B, C, D and E with … in the form of Table 16.15 … code-word length is minimum …

Table 16.15. Huffman coding steps

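Therefore, in compact form, Huffman coding can be represented as under:

Table 16.16

| Symbol | Probability | Code word |
|---|---|---|
| C | 0.8 | 0 |
| A | 0.4 | 10 |
| B | 0.2 | 110 |
| D | 0.08 | 1110 |
| E | 0.02 | 1111 |

We know that the average code word length is given by

$$\bar{L} = \sum_k p_k n_k$$

or

$$\bar{L} = 0.8 \times 1 + 0.4 \times 2 + 0.2 \times 3 + 0.08 \times 4 + 0.02 \times 4$$

i.e., $\bar{L} = 2.6$. Ans.

Now, the variance is given by

$$\sigma^2 = \sum_k p_k (n_k - \bar{L})^2$$

or

$$\sigma^2 = 0.8(1 - 2.6)^2 + 0.4(2 - 2.6)^2 + 0.2(3 - 2.6)^2 + 0.08(4 - 2.6)^2 + 0.02(4 - 2.6)^2$$

or

$$\sigma^2 = 2.048 + 0.144 + 0.032 + 0.1568 + 0.0392$$

or $\sigma^2 = 2.42$. Ans.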
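A compact way to build a Huffman code is to keep the partial codes in a priority queue and repeatedly merge the two least probable groups, prefixing 0 to one group and 1 to the other. The sketch below does this with Python's `heapq`; the five-symbol source in the usage lines is hypothetical, and the function name `huffman_code` is ours.

```python
import heapq
from itertools import count


def huffman_code(probabilities):
    """Build a binary Huffman code; returns {symbol: codeword}."""
    tiebreak = count()  # unique counter so the heap never compares the dicts
    heap = [(p, next(tiebreak), {sym: ""}) for sym, p in probabilities.items()]
    heapq.heapify(heap)
    if len(heap) == 1:
        return {sym: "0" for sym in probabilities}
    while len(heap) > 1:
        p0, _, group0 = heapq.heappop(heap)   # least probable group
        p1, _, group1 = heapq.heappop(heap)   # next least probable group
        merged = {s: "0" + c for s, c in group0.items()}
        merged.update({s: "1" + c for s, c in group1.items()})
        heapq.heappush(heap, (p0 + p1, next(tiebreak), merged))
    return heap[0][2]


probs = {"A": 0.4, "B": 0.2, "C": 0.2, "D": 0.1, "E": 0.1}   # hypothetical source
code = huffman_code(probs)
L = sum(probs[s] * len(code[s]) for s in probs)
variance = sum(probs[s] * (len(code[s]) - L) ** 2 for s in probs)
print(code, round(L, 2), round(variance, 2))
```

Tie-breaking among equal probabilities can change the individual codewords and the variance, but every Huffman code for a given source has the same minimum average length.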

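EXAMPLE 16.53. An event has six possible outcomes with probabilities

$$p_1 = \frac{1}{2},\; p_2 = \frac{1}{4},\; p_3 = \frac{1}{8},\; p_4 = \frac{1}{16},\; p_5 = \frac{1}{32} \text{ and } p_6 = \frac{1}{32}$$

Find the entropy of the system. Also find the rate of information if there are 16 outcomes per second.

Solution: Given that $p_1 = 1/2$, $p_2 = 1/4$, $p_3 = 1/8$, $p_4 = 1/16$, $p_5 = 1/32$, $p_6 = 1/32$ and $r = 16$ outcomes/sec.

We know that the entropy is given by

$$H = \sum_{k=1}^{6} p_k\log_2\frac{1}{p_k}$$

Substituting all the given values, we get

$$H = \frac{1}{2}\log_2 2 + \frac{1}{4}\log_2 4 + \frac{1}{8}\log_2 8 + \frac{1}{16}\log_2 16 + \frac{1}{32}\log_2 32 + \frac{1}{32}\log_2 32$$

or

$$H = \frac{1}{2} + \frac{1}{2} + \frac{3}{8} + \frac{1}{4} + \frac{5}{32} + \frac{5}{32}$$

or $H = 1.9375$ bits/message.

We also know that the information rate is given by

$$R = \text{Entropy} \times \text{message rate} = H \times r = 1.9375 \times 16 = 31 \text{ bits/sec. Ans.}$$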
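The same two numbers follow from a couple of lines of Python; the probabilities and the outcome rate are those of Example 16.53. Because every probability is a power of 1/2, the floating-point sums here are exact.

```python
from math import log2

probs = [1/2, 1/4, 1/8, 1/16, 1/32, 1/32]   # p1 ... p6 of Example 16.53
r = 16                                      # outcomes per second

H = sum(p * log2(1 / p) for p in probs)     # entropy, bits/message
R = H * r                                   # information rate, bits/sec
print(H, R)  # 1.9375 31.0
```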

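EXAMPLE 16.54. A discrete source transmits messages $x_1$, $x_2$ and $x_3$ with probabilities 0.3, 0.4 and 0.3. The source is connected to the channel given in figure 16.22. Calculate $H(X)$ and $H(Y)$.

Solution: Given that $P(x_1) = 0.3$, $P(x_2) = 0.4$ and $P(x_3) = 0.3$.

We know that the value of $H(X)$ can be found as

$$H(X) = \sum_{i=1}^{3} P(x_i)\log_2\frac{1}{P(x_i)} = 0.3\log_2\frac{1}{0.3} + 0.4\log_2\frac{1}{0.4} + 0.3\log_2\frac{1}{0.3}$$

$$H(X) = 0.521 + 0.529 + 0.521 = 1.571 \text{ bits/message. Ans.}$$

Similarly, $H(Y)$ is given by

$$H(Y) = \sum_{j=1}^{3} P(y_j)\log_2\frac{1}{P(y_j)}$$

The channel matrix and the input probabilities are

$$[P(Y|X)] = \begin{bmatrix}0.8 & 0.2 & 0\\ 0 & 1 & 0\\ 0 & 0.3 & 0.7\end{bmatrix}, \qquad [P(X)] = [0.3 \;\; 0.4 \;\; 0.3]$$

$[P(X,Y)]$ is obtained by multiplying each row of $[P(Y|X)]$ by the corresponding $P(x_i)$, as under:

$$[P(X,Y)] = \begin{bmatrix}0.8\times0.3 & 0.2\times0.3 & 0\\ 0 & 1\times0.4 & 0\\ 0 & 0.3\times0.3 & 0.7\times0.3\end{bmatrix} = \begin{bmatrix}0.24 & 0.06 & 0\\ 0 & 0.4 & 0\\ 0 & 0.09 & 0.21\end{bmatrix}$$

$P(y_1)$, $P(y_2)$ and $P(y_3)$ are obtained by adding the columns of $[P(X,Y)]$. Therefore, we have

$$P(y_1) = 0.24 + 0 + 0 = 0.24$$

$$P(y_2) = 0.06 + 0.4 + 0.09 = 0.55$$

$$P(y_3) = 0 + 0 + 0.21 = 0.21$$

Now, the value of $H(Y)$ can be obtained as under:

$$H(Y) = -\sum_{j=1}^{3} P(y_j)\log_2 P(y_j) = -(0.24\log_2 0.24 + 0.55\log_2 0.55 + 0.21\log_2 0.21)$$

$$H(Y) = 0.494 + 0.474 + 0.473 = 1.441 \text{ bits/message. Ans.}$$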
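The column sums and both entropies of Example 16.54 can be checked with the short Python sketch below; the matrices are copied from the solution and the helper name `entropy` is ours.

```python
from math import log2

P_X = [0.3, 0.4, 0.3]
P_Y_GIVEN_X = [[0.8, 0.2, 0.0],
               [0.0, 1.0, 0.0],
               [0.0, 0.3, 0.7]]

# [P(X,Y)]: row i of [P(Y|X)] scaled by P(x_i)
joint = [[px * pyx for pyx in row] for px, row in zip(P_X, P_Y_GIVEN_X)]
P_Y = [sum(col) for col in zip(*joint)]   # column sums give P(y_j)


def entropy(probs):
    """Entropy in bits, skipping zero-probability terms."""
    return -sum(p * log2(p) for p in probs if p > 0)


print([round(p, 2) for p in P_Y], round(entropy(P_X), 3), round(entropy(P_Y), 3))
# [0.24, 0.55, 0.21] 1.571 1.441
```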

EXAMPLE 16.55. Consider a discrete memoryless source with … 0.15, 0.18 …

Solution: … $H(X)$ … Substituting all the values, we get …

EXAMPLE 16.56. State the Hartley-Shannon law.

Solution: The Hartley-Shannon law states that the channel capacity of a white, bandlimited Gaussian channel is given by

$$C = B\log_2\left(1 + \frac{P}{N}\right) \text{ b/s} \tag{i}$$

where

B = channel bandwidth

P = signal power

N = noise power within the channel bandwidth

DO YOU KNOW? The Shannon-Hartley theorem is of fundamental importance and has two important implications for communication system engineers.

Using the power spectral density of the white noise, the noise power $N$ is as under:

$$N = \int_{-B}^{B} \frac{N_0}{2}\,df = N_0 B$$

where $N_0/2$ is the power spectral density (psd) of the white noise. Therefore, we write

$$N = N_0 B \tag{ii}$$

Using equations (i) and (ii), we obtain

$$C = B\log_2\left(1 + \frac{P}{N_0 B}\right)$$

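EXAMPLE 16.57. A source emits one of four symbols $S_0$, $S_1$, $S_2$ and $S_3$ with probabilities 1/3, 1/6, 1/4 and 1/4, respectively. The successive symbols emitted by the source are independent. Calculate the entropy of the source.

Solution: The given probabilities of the symbols are as under:

| Symbol | $S_0$ | $S_1$ | $S_2$ | $S_3$ |
|---|---|---|---|---|
| Probability | 1/3 | 1/6 | 1/4 | 1/4 |

We know that the expression for the source entropy $H(S)$ is given by

$$H(S) = \sum_{k=0}^{3} p_k\log_2\frac{1}{p_k} \text{ bits/symbol}$$

Substituting all the given values, we get

$$H(S) = \frac{1}{3}\log_2 3 + \frac{1}{6}\log_2 6 + \frac{1}{4}\log_2 4 + \frac{1}{4}\log_2 4$$

or

$$H(S) = \frac{1}{3}\times 1.585 + \frac{1}{6}\times 2.585 + \frac{1}{4}\times 2 + \frac{1}{4}\times 2 = 1.959 \text{ bits/symbol. Ans.}$$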

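EXAMPLE 16.58. Find the Shannon-Fano code for five messages given by probabilities 1/2, 1/4, 1/8, 1/16 and 1/16. Also calculate the average number of bits per message.

Solution: The Shannon-Fano coding is shown in Table 16.17.

Table 16.17. Shannon-Fano Coding

| Message | Probability | Code word | Number of bits |
|---|---|---|---|
| $m_1$ | 1/2 | 0 | 1 |
| $m_2$ | 1/4 | 10 | 2 |
| $m_3$ | 1/8 | 110 | 3 |
| $m_4$ | 1/16 | 1110 | 4 |
| $m_5$ | 1/16 | 1111 | 4 |

We know that the average number of bits per message is given by

$$\bar{L} = \sum_k p_k n_k$$

Substituting the values, we get

$$\bar{L} = \left(\frac{1}{2}\times 1\right) + \left(\frac{1}{4}\times 2\right) + \left(\frac{1}{8}\times 3\right) + \left(\frac{1}{16}\times 4\right) + \left(\frac{1}{16}\times 4\right) = 1.875 \text{ bits/message. Ans.}$$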
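The Shannon-Fano procedure can be sketched recursively: with the messages listed in decreasing order of probability, split the list where the two parts have the most nearly equal total probability, give 0 to the upper part and 1 to the lower part, and repeat inside each part. The Python version below is a minimal sketch under that splitting rule (one common way to state it); the function name is ours.

```python
def shannon_fano(symbols):
    """symbols: list of (name, probability) in decreasing order of probability.
    Returns {name: codeword}."""
    if len(symbols) == 1:
        return {symbols[0][0]: ""}
    total = sum(p for _, p in symbols)
    # Choose the split that makes the two parts' probabilities most nearly equal
    running, best_k, best_diff = 0.0, 1, float("inf")
    for k in range(1, len(symbols)):
        running += symbols[k - 1][1]
        diff = abs(total - 2 * running)
        if diff < best_diff:
            best_k, best_diff = k, diff
    upper = shannon_fano(symbols[:best_k])
    lower = shannon_fano(symbols[best_k:])
    code = {s: "0" + c for s, c in upper.items()}
    code.update({s: "1" + c for s, c in lower.items()})
    return code


messages = [("m1", 1/2), ("m2", 1/4), ("m3", 1/8), ("m4", 1/16), ("m5", 1/16)]
code = shannon_fano(messages)
L = sum(p * len(code[m]) for m, p in messages)
print(code, L)  # {'m1': '0', 'm2': '10', 'm3': '110', 'm4': '1110', 'm5': '1111'} 1.875
```

For these dyadic probabilities every split is exactly balanced, so the average length equals the source entropy of 1.875 bits/message.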

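EXAMPLE 16.59. A voice-grade channel of the telephone network has a bandwidth of 3.4 kHz. Calculate the information capacity of the telephone channel for a signal-to-noise ratio of 30 dB.

Solution: Given that $B = 3.4$ kHz and $S/N = 30$ dB.

We know that $S/N$ in dB is expressed as $(S/N)_{\text{dB}} = 10\log_{10}(S/N)$, so that

$$\frac{S}{N} = \text{antilog}\,\frac{30}{10} = 10^3 = 1000$$

We know that the information capacity of the telephone channel is given by

$$C = B\log_2\left(1 + \frac{S}{N}\right) = 3.4 \times 10^3\log_2(1 + 1000)$$

or

$$C = 33{,}888.57 \text{ b/s} \approx 33.89 \text{ kbps. Ans.}$$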
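The calculation is a direct application of the Shannon-Hartley formula once the decibel value is converted to a power ratio. A minimal Python sketch, with a function name of our own choosing:

```python
from math import log2


def shannon_hartley(bandwidth_hz, snr_db):
    """C = B log2(1 + S/N) in b/s, with S/N given in dB."""
    snr = 10 ** (snr_db / 10)          # 30 dB -> 1000
    return bandwidth_hz * log2(1 + snr)


C = shannon_hartley(3.4e3, 30)
print(f"{C:.2f} b/s = {C / 1e3:.2f} kbps")  # 33888.57 b/s = 33.89 kbps
```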

EXAMPLE 16.60. A DMS has an alphabet of 8 letters, $x_i$, $i = 1, 2, \ldots, 8$, with probabilities 0.25, … 0.15, 0.12, … Determine an optimum binary code for the source using the Huffman coding procedure.

Solution: … Substituting all the values, we get …

EXAMPLE 16.61. Prove that for a …

Solution: Huffman code is an example of an optimum code, i.e., a code with the minimum average codeword length. … The less probable symbols are coded using a larger number of bits, whereas the symbols with a higher probability are coded into smaller codewords.

EXAMPLE 16.62. A source emits one of four symbols $S_0$, $S_1$, $S_2$ and $S_3$ with probabilities 1/3, 1/6, 1/4 and 1/4, respectively. The successive symbols emitted by the source are independent. Calculate the entropy of the source.

Solution: The probabilities of the symbols are as under:

| Symbol | $S_0$ | $S_1$ | $S_2$ | $S_3$ |
|---|---|---|---|---|
| Probability | 1/3 | 1/6 | 1/4 | 1/4 |

Source entropy,

$$H = \frac{1}{3}\log_2 3 + \frac{1}{6}\log_2 6 + \frac{1}{4}\log_2 4 + \frac{1}{4}\log_2 4$$

or

$$H = \frac{1}{3}\times 1.585 + \frac{1}{6}\times 2.585 + \frac{1}{4}\times 2 + \frac{1}{4}\times 2 = 1.959 \text{ bits/symbol. Ans.}$$

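EXAMPLE 16.63. Develop the Shannon-Fano code for five messages given by probabilities 1/2, 1/4, 1/8, 1/16 and 1/16. Calculate the average number of bits/message.

Solution: Let us consider Table 16.19 for Shannon-Fano coding.

Table 16.19.

| Message | Probability | Column I | Column II | Column III | Column IV | Code |
|---|---|---|---|---|---|---|
| $m_1$ | 1/2 | 0 | | | | 0 |
| $m_2$ | 1/4 | 1 | 0 | | | 10 |
| $m_3$ | 1/8 | 1 | 1 | 0 | | 110 |
| $m_4$ | 1/16 | 1 | 1 | 1 | 0 | 1110 |
| $m_5$ | 1/16 | 1 | 1 | 1 | 1 | 1111 |

Each column corresponds to one partition of the remaining messages into two groups of equal probability.

Average number of bits per message:

$$\bar{L} = \left(\frac{1}{2}\times 1\right) + \left(\frac{1}{4}\times 2\right) + \left(\frac{1}{8}\times 3\right) + \left(\frac{1}{16}\times 4\right) + \left(\frac{1}{16}\times 4\right) = 1.875 \text{ bits/message. Ans.}$$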

EXAMPLE 16.64. A voice-grade channel of the telephone network has a bandwidth of 3.4 kHz. Determine the information capacity of the telephone channel for a signal-to-noise ratio of 30 dB.

Solution: Given that $B = 3.4$ kHz and $S/N = 30$ dB. We have to find $C$.

First, we calculate the ratio $S/N$:

$$30 = 10\log_{10}\left[\frac{S}{N}\right]$$

Therefore,

$$\frac{S}{N} = \text{antilog}\,3 = 1000$$

Now, let us calculate $C$ as under:

$$C = B\log_2\left[1 + \frac{S}{N}\right] = 3.4 \times 10^3\log_2(1001)$$

$$C = 33{,}888.57 \text{ bits/sec, or } 33.89 \text{ kbps. Ans.}$$

You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5820)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1093)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (852)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (590)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (898)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (540)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (349)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (822)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (122)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (403)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

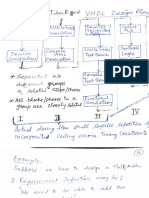

- VHDL Design L6-L7 29,30-08-2022Document12 pagesVHDL Design L6-L7 29,30-08-2022raushanNo ratings yet

- VHDL Design L3-L4 22,23-08-20221Document14 pagesVHDL Design L3-L4 22,23-08-20221raushanNo ratings yet

- VHDL Design L11 13-09-2022Document13 pagesVHDL Design L11 13-09-2022raushanNo ratings yet

- VHDL Design L7 30-08-2022Document9 pagesVHDL Design L7 30-08-2022raushanNo ratings yet

- VHDL Design L8 31-08-2022Document6 pagesVHDL Design L8 31-08-2022raushanNo ratings yet

- DocScanner 06-Dec-2022 7-39 PMDocument29 pagesDocScanner 06-Dec-2022 7-39 PMraushanNo ratings yet