Volume 8, Issue 1, January – 2023 International Journal of Innovative Science and Research Technology

ISSN No:-2456-2165

Sketch to Image using GAN

Sumit Gunjate1, Tushar Nakhate2, Tushar Kshirsagar3, Yash Sapat4

Information Technology

G H Raisoni College of Engineering Nagpur, India

Prof. Sonali Guhe5

Assistant Professor,

G H Raisoni College of Engineering Nagpur, India

Abstract:- With the development of the modern age and its technologies, people are discovering ways to improve, streamline, and de-stress their lives. A difficult issue in computer vision and graphics is the creation of realistic visuals from hand-drawn sketches. There are numerous uses for the technique of creating facial sketches from real images and its inverse. Due to the differences between a photo and a sketch, photo/sketch synthesis is still a difficult problem to solve. Existing methods either require precise edge maps or rely on retrieving previously taken pictures. In order to get around the shortcomings of current systems, the system proposed in this paper uses generative adversarial networks. A generative adversarial network (GAN) is a type of machine learning method that pits two or more neural networks against one another in the context of a zero-sum game. Here, we provide a GAN method for creating convincing images. Recent GAN-based techniques for sketch-to-image translation have produced promising results. Our technology produces photos that are more lifelike than those made by other techniques. According to experimental findings, our technology can produce photographs that are both aesthetically pleasing and identity-preserving on a variety of difficult data sets.

Keywords:- Image Processing, Photo/Sketch Synthesis.

I. INTRODUCTION

Automation is really necessary in this fast-paced world with all the technological breakthroughs. It is necessary to train machines to work alongside people due to the daily increase in human effort. This would lead to improved productivity, quick work, and expanded capabilities. Image processing is an essential tool for improving or, as we would say, refining an image. The effort of processing images has been greatly streamlined with the emergence of machine learning tools. A key area of study in computer vision, image processing, and machine learning has always been the automatic production, synthesis, and identification of face sketch-photos. Image processing techniques like sketch-to-image translation have a variety of applications. One of them involves using image generators and discriminators in conjunction with generative adversarial networks to map edges to photographs in order to create realistic-looking images. This approach is adaptable and can be used as software in a variety of image processing applications.

The system describes a technique for translating sketches into images using generative adversarial networks. The translation of a person's sketch into an image that contains the trait or feature connected with the sketch requires the assistance of classes of machine learning algorithms. In this technique, a realistic photograph for any sketch may be quickly and easily created with meticulous detail. Since the entire procedure is automated, using the system requires little human effort. The models included in this project condense the sketch-to-photo production process into a few lines and include the following:

- A generator that produces a realistic image from an input forensic sketch.

- A discriminator that is used to train the generator or, simply put, to increase the accuracy of the photograph being generated by the generator.

The project is broken up into various components where users contribute sketches to be converted into lifelike images. Uploading the ground truth trains the system. GANs are employed by the system during this training phase. The mechanism generates a large number of potential outcomes. As a result, these GANs compete among numerous possible outcomes to create the output that is most plausible and accurate. The system developer delivers a real-world image as the "ground truth," and after training, the computer is expected to anticipate it.

These modules can be used for research and analysis purposes as well as other societal issues. To produce the exact snapshot of the foreground sketch given into the system, these two system components cooperate and compete with one another. Pitting two classes of neural networks against one another is the fundamental concept behind an adversarial network. The foundation of generative adversarial networks (GANs) is a game-theoretic situation in which a rival network must be defeated. Samples are generated directly by the generator network. Its opponent, the discriminator network, is, as its name implies, a classifier: it seeks to differentiate between samples taken from the training data and samples taken from the generator.

While the generator attempts to produce realistic images so that the discriminator would classify them as real, the discriminator's objective is to determine whether a particular image is fake or real. It is possible to describe sketch-based picture synthesis as an image translation problem conditioned on an input drawing. There are various ways to translate photos from one domain to another using GANs.
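The adversarial game described above is commonly written as the minimax objective from the original GAN formulation. The paper does not state its objective explicitly, so the expression below is the standard unconditional form, given here only as a sketch; the symbols $G$ (generator), $D$ (discriminator), $x$ (a real sample), and $z$ (the generator's input) are notation introduced for illustration.

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

The discriminator maximises $V$ by scoring real samples near 1 and generated samples near 0, while the generator minimises it by making $D(G(z))$ large. In the sketch-to-image setting, both networks would additionally be conditioned on the input sketch.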

IJISRT23JAN1094 www.ijisrt.com 772


We propose an end-to-end trainable GAN-based approach to sketch-to-image synthesis in this paper. An object sketch serves as the input, and the result is a realistic image of the same object in a similar position. This presents a challenge since:

- There isn't a huge database to draw from because matching images and sketches are challenging to obtain.

- For the synthesis of sketches into other types of images, there is no well-established neural network approach.

By adding a bigger dataset of coupled edge maps and images to the database, which already contains human sketches and photos, we are able to overcome the first problem. Edge maps are created from a collection of pictures to create this augmentation dataset. For the second challenge, we create a GAN-based model that is conditioned on an input sketch and includes many extra loss terms that enhance the quality of the synthesized image.

GANs are an algorithmic architecture that uses two neural networks in order to generate new data that resembles the real data. A GAN has two parts:

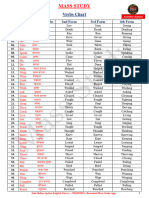

Fig. 1: GAN Module

Discriminator: A GAN's discriminator is simply a classifier. It tries to distinguish actual data from data generated by the generator. Any network design suitable for the classification of the data could be used.

The training data for the discriminator comes from two sources:

- Real data instances, such as real pictures of people. The discriminator uses these instances as positive examples during training.

- Fake data instances created by the generator. The discriminator uses these instances as negative examples during training.

Generator: The generator component of a GAN learns to produce fictitious data. It gains the ability to get the discriminator to label its output as real. In comparison to discriminator training, generator training necessitates a tighter integration between the generator and the discriminator.

II. LITERATURE REVIEW

Over the years, many scholars and entrepreneurs have discussed and researched how to generate images using GAN technology to improve and manage the current situation.

In [1], conditional GANs are trained on input-output image pairs with the U-Net architecture, which is an encoder-decoder network, and a custom discriminator, which is described in the paper. Two components make up the generator: an encoder, which down-samples a sketch to create a lower-dimensional representation X, and an image decoder, which takes the vector X as input. A skip connection exists between layer 8 - i of the encoder and the i-th layer of the decoder in the generator. Here, the sketch is given as input, and then the image is created from it to see if it is similar to the target image. The authors formulated their loss function in accordance with their calculations.

The study in [2] uses a joint sketch-image representation. The generator and discriminator networks are trained on the complete joint images; the network then automatically predicts the corrupted image portion based on the context of the corresponding sketch portion. The sketches used for training were not drawn by humans but were obtained through edge detection and other techniques, and so contained more detail. The method also retains a large part of the distorted structure of the sketch. It may be well suited where the sketch data does not contain many details and when the sketch is bad. It produces bad images because it cannot recognize which sketch it is and tries to produce an image that retains many parts of the sketch.

Xing Di [3], [10] presented a deep generative framework for the reproduction of facial images using visual features. His method used an intermediate representation to produce photorealistic visuals. GANs and VAEs were introduced into the framework. The system, however, generated erratic results.

Kokila R. [4] provides a study on matching sketches to images for investigative purposes. The system's low level of complexity was a result of its strong application focus. A number of sketches were compared to pictures of the face taken from various angles, producing highly precise results. The technique was utterly dependent on the calibre of the sketches used, which led to disappointing outcomes for sketches of poor calibre.

IJISRT23JAN1094 www.ijisrt.com 773

Volume 8, Issue 1, January – 2023 International Journal of Innovative Science and Research Technology

ISSN No:-2456-2165

Christian Galea et al. [5] demonstrate a system for automatically matching computer-generated sketches to accurate images. Since the majority of the attributes remained unchanged in the software-generated sketches, the system found it easier to match them to the real-life photographs. However, the findings were not sufficient when there was just one sketch per photo for matching. Using generative adversarial networks, Phillip Isola et al. [8] suggested a framework for image-to-image translation that could predict results that were remarkably accurate to the original image. Due to a generalized approach to picture translation, the system exhibited an extremely high level of complexity.

III. SYSTEM OVERVIEW

Sketch Input:
- Take a random sketch image.
- It is typically created with quick marks and usually lacks some of the details.

Generator:
- Generator network, which transforms the random input into a data instance.
- Discriminator network, which classifies the generated data.

Discriminator:
- The discriminator classifies both real data and fake data from the generator.
- The discriminator loss penalizes the discriminator for misidentifying a real instance as fake or a fake instance as real.

System Generated Image:
- Generate images by using the model.
- Display the generated images in a 4x4 grid by using matplotlib.

Fig. 2: System Architecture and Flow

IV. PROPOSED METHODOLOGY

A. Discriminator
Six convolution layers with filters of (C64, C128, C256, C512, C512, C1) make up the discriminator, and the final convolution layer is used to map the output to a single dimension. All layers employ a kernel size of (4, 4), strides of (2, 2), and padding of "same". All convolutions except the first and last layers (C64, C1) use batch normalization.

The model is compiled with the Adam optimizer using the Keras library. Two loss functions are connected to the discriminator. During discriminator training, the discriminator uses only the discriminator loss and ignores the generator loss. The generator loss is used during generator training.

- The discriminator classifies both real data and fake data from the generator.
- The discriminator loss penalizes the discriminator for misidentifying a real instance as fake or a fake instance as real.
- The discriminator updates its weights through backpropagation from the discriminator loss through the discriminator network.

Fig. 3: Back propagation in discriminator training.
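The paper does not give the formula for the discriminator loss. Assuming the standard binary cross-entropy GAN objective, with real images labelled 1 and generated images labelled 0, the penalty described above might be sketched in plain Python as follows. The names `binary_cross_entropy` and `discriminator_loss` are illustrative and are not taken from the authors' code.

```python
import math


def binary_cross_entropy(prediction, target, eps=1e-7):
    """Cross-entropy between one predicted probability and a 0/1 target."""
    # Clamp the prediction so that log(0) can never occur.
    p = min(max(prediction, eps), 1.0 - eps)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def discriminator_loss(real_scores, fake_scores):
    """Assumed loss: mean BCE on real samples (target 1) plus mean BCE on
    generated samples (target 0). Scores are probabilities of "real"."""
    real_term = sum(binary_cross_entropy(s, 1.0) for s in real_scores) / len(real_scores)
    fake_term = sum(binary_cross_entropy(s, 0.0) for s in fake_scores) / len(fake_scores)
    return real_term + fake_term


if __name__ == "__main__":
    # A discriminator that separates real from fake is penalized less
    # than one that confuses them.
    print(discriminator_loss([0.9, 0.8], [0.1, 0.2]))
    print(discriminator_loss([0.2, 0.1], [0.8, 0.9]))
```

Under this assumption the loss is small when real samples score near 1 and generated samples score near 0, and it grows as either kind is misclassified.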


B. Generator
The generator model is implemented with encoder and decoder layers using the U-Net architecture. It receives an image (sketch) as input, and the model applies 7 encoding layers with filters (C64, C128, C256, C512, C512, C512, C512) to down-sample the image. The encoding layers use batch normalization except the first layer (C64).

Fig. 4: U-Net Architecture
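To see what the layer lists imply, the sketch below traces the spatial size through the generator's seven encoding layers and the discriminator's six convolutions. It assumes stride-2 convolutions with "same" padding for both stacks (the text states this only for the discriminator) and a 256x256 input, which the paper does not specify. The constant and function names are hypothetical.

```python
import math

# Filter counts as listed in the text; the flag marks batch normalization.
GENERATOR_ENCODER = [(64, False), (128, True), (256, True), (512, True),
                     (512, True), (512, True), (512, True)]
DISCRIMINATOR = [(64, False), (128, True), (256, True), (512, True),
                 (512, True), (1, False)]


def trace_shapes(layers, size, stride=2):
    """Return (height/width, filters, batch_norm) after each convolution.

    With "same" padding, a strided convolution yields ceil(size / stride).
    """
    shapes = []
    for filters, batch_norm in layers:
        size = math.ceil(size / stride)
        shapes.append((size, filters, batch_norm))
    return shapes


if __name__ == "__main__":
    # 256 is an assumed input resolution, not a value from the paper.
    for name, layers in (("encoder", GENERATOR_ENCODER),
                         ("discriminator", DISCRIMINATOR)):
        print(name, trace_shapes(layers, 256))
```

Under these assumptions a 256x256 sketch is reduced to a 2x2 map with 512 channels after the seventh encoding layer, and the discriminator ends in a 4x4 single-channel map.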

By incorporating feedback from the discriminator, the generator component of a GAN learns to produce fictitious data. It gains the ability to get the discriminator to label its output as real.

In comparison to discriminator training, generator training necessitates a tighter integration between the generator and the discriminator. The GAN's generator training section consists of:
- Random input
- Generator network, which transforms the random input into a data instance
- Discriminator network, which classifies the generated data
- Discriminator output
- Generator loss, which penalizes the generator for failing to fool the discriminator.

A neural network is trained by changing its weights to lower the output's error or loss. However, in our GAN, the loss that we're aiming to reduce is not a direct result of the generator. The generator feeds into the discriminator net, which then generates the output that we want to influence. The generator incurs a loss when the discriminator network classifies the generator's sample as fake.

Backpropagation must take this additional portion of the network into account. By evaluating the influence of each weight on the output and how the output would vary if the weight were changed, backpropagation adjusts each weight in the proper direction. However, a generator weight's effect is influenced by the discriminator weights it feeds into. As a result, backpropagation begins at the output and travels back through the discriminator into the generator.

However, we don't want the discriminator to change while the generator is being trained. Trying to hit a moving target would make an already difficult task even harder for the generator.

Therefore, we use the following process to train the generator:
- Sample random noise.
- Produce generator output from sampled random noise.
- Get discriminator "Real" or "Fake" classification for generator output.
- Calculate loss from discriminator classification.
- Backpropagate through both the discriminator and generator to obtain gradients.
- Use gradients to change only the generator weights.

This is one iteration of generator training.

Fig. 5: Backpropagation in generator training
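The generator training iteration described above can be illustrated with a deliberately tiny model. The sketch assumes a one-parameter-pair generator G(z) = a*z + b, a logistic discriminator D(x) = sigmoid(w*x + c), and the loss -log D(G(z)). None of these choices come from the paper; they only make the gradient flow easy to follow, and `generator_step` is a hypothetical name.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def generator_step(gen, disc, noise, lr=0.1):
    """One generator update. Discriminator parameters are read, never changed."""
    a, b = gen
    w, c = disc
    grad_a = grad_b = loss = 0.0
    for z in noise:
        fake = a * z + b                    # generator output for this noise sample
        p_real = sigmoid(w * fake + c)      # discriminator's "real" probability
        loss += -math.log(p_real)           # generator loss: high when labelled fake
        # Chain rule: gradient flows through the discriminator into the generator.
        d_loss_d_fake = -(1.0 - p_real) * w
        grad_a += d_loss_d_fake * z
        grad_b += d_loss_d_fake
    n = len(noise)
    # Only the generator parameters are updated.
    new_gen = (a - lr * grad_a / n, b - lr * grad_b / n)
    return new_gen, loss / n


if __name__ == "__main__":
    gen, disc = (1.0, 0.0), (1.0, 0.0)
    for step in range(5):
        noise = [random.gauss(0.0, 1.0) for _ in range(32)]
        gen, loss = generator_step(gen, disc, noise)
        print(step, round(loss, 4))
```

The returned loss is measured before the update, so calling the function twice on the same noise shows whether the step helped. In a framework such as Keras the same effect is usually obtained by marking the discriminator as non-trainable inside the combined model.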


C. Data Augmentation
A regularization approach called data augmentation is used to lessen overfitting and boost a model's precision. To produce additional data, it is often applied to small datasets. The method involves creating copies of existing data that have undergone a few adjustments, including angle rotation, scaling, vertical and horizontal flips, Gaussian noise addition, image shearing, etc. The model is meant to take hand-drawn sketches, and hand-drawn sketches will never be ideal in comparison to the original training data, so data augmentation is quite helpful for the dataset. For instance, one hand-drawn sketch will differ from another in terms of the sketch's edges, form, rotation, and size. Scale, image shear, and horizontal flip are the transformations employed for data augmentation.

By using data augmentation, practitioners can greatly broaden the variety of data that is readily available for training models without having to actually acquire new data. Data augmentation methods including cropping, padding, and horizontal flipping are frequently used to train large neural networks.

V. RESULT

A. Comparison on Hand-Drawing Sketches
We looked into our model's ability to create images from sketches made by hand. We collected 50 sketches. Each sketch was created using a different random image. For comparison, Context Encoder (CE) and Image-to-Image Translation (pix2pix) are used. The appropriate image for the provided sketch is also returned.

B. Verification Accuracy
The goal of this study is to determine whether the generated faces, if they are believable, have the same identity label as the real faces. Using the pretrained Light CNN, the identity-preserving features were extracted, and the L2 norm was used for comparison. We outperformed Pix2Pix, demonstrating that our model is more tolerant of varied sketches and learns to capture the key details.

Fig. 6: Final Result
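The verification step compares identity features with the L2 norm. The paper does not describe the decision rule, so the sketch below assumes a simple one: a generated face counts as verified when the L2 distance between its feature vector and that of the matching real face is at most a chosen threshold. The feature vectors stand in for Light CNN outputs, which are not computed here, and both function names are hypothetical.

```python
import math


def l2_distance(features_a, features_b):
    """Euclidean (L2) distance between two equally long feature vectors."""
    if len(features_a) != len(features_b):
        raise ValueError("feature vectors must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(features_a, features_b)))


def verification_accuracy(generated, real, threshold):
    """Fraction of generated/real pairs whose distance is within the threshold.

    `generated[i]` and `real[i]` are assumed to belong to the same identity.
    """
    if not generated or len(generated) != len(real):
        raise ValueError("need equally sized, non-empty feature lists")
    matches = sum(1 for g, r in zip(generated, real)
                  if l2_distance(g, r) <= threshold)
    return matches / len(generated)


if __name__ == "__main__":
    # Toy 2-D features; real identity features would be much longer.
    generated = [[0.0, 0.0], [1.0, 1.0]]
    real = [[0.0, 0.5], [4.0, 5.0]]
    print(verification_accuracy(generated, real, threshold=1.0))
```

The threshold is a free parameter in this sketch and would need to be chosen on held-out data.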

VI. CONCLUSION
Using the recently developed generative models, we investigated the issue of photo-sketch synthesis. The suggested technique was created expressly to help GAN produce high-resolution images. This is accomplished by applying adversarial supervision to the hidden layers of the generator sub-network. In order to adapt to the task's input and function in a variety of settings, the network uses the loss it generates throughout the entire process. Current automated frameworks contain features that can be applied to various scenarios.

The suggested framework can be improved by honing its ability to identify object outlines. The system's lack of texture identification makes it difficult for the framework to identify objects correctly. The outcome depends on various elements, including the level of noise in the sketch, its boundaries, and its accuracy. These factors can occasionally result in unsatisfactory output. The observations and findings are in their preliminary stages, and more research would adequately reveal the advantages and disadvantages. Datasets have been assessed, and the outcomes are contrasted with current, cutting-edge generative techniques. It is evident that the suggested strategy significantly improves visual quality and photo/sketch matching rates.

The aforementioned GAN framework can produce images that are distinct from, or rather more diverse than, those of common generative models.

The main goal of GAN research at the moment is to discover better probability metrics as objective functions, although there haven't been many studies looking to improve network architectures in GAN. For our generative task, we suggested a network structure, and testing revealed that it outperformed existing arrangements.

To summarize, this research offered a way to enhance the performance of image generation while also providing a brief explanation of the architecture of generative adversarial networks (GAN).

VII. FUTURE SCOPE
GAN won't have the same breadth in the foreseeable future. The hardware and software restrictions will be overcome, and it will be able to operate at any scale and filter into domains outside picture and video generation and into broader use cases in scientific, technical, or enterprise sectors. Regarding GAN, Catanzaro notes that "despite the interest, it is still too early to assume that GAN will filter into these other sectors anytime soon." In a recent discussion we had regarding the potential of GAN in drug development, this generation of sequences served as the central theme. The hardware aspect of the GAN challenge is a little bit more difficult to dispute, but the GAN overpowering problem is one that can be tweaked over time. We intend to research more potent generative models and consider more application scenarios in the future.

REFERENCES

[1] Lisa Fan, Jason Krone, Sam Woolf, "Sketch to Image Translation using GANs", Project Report at Stanford University, 2016.

[2] Yongyi Lu, Shangzhe Wu, Yu-Wing Tai, Chi-Keung Tang, "Sketch-to-Image Generation Using Deep Contextual Completion", 2017. Submitted to IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2018.

[3] Xing Di, Vishal M. Patel, Vishwanath A. Sindagi, "Gender Preserving GAN for Synthesizing Faces from Landmarks", 2018.

[4] Kokila R, Abhir Bhandary, Sannidhan M S, "A Study and Analysis of Various Techniques to Match Sketches to Mugshot Photos", 2017, pp. 41-43.

[5] Christian Galea, Reuben A. Farrugia, "Matching Software-Generated Sketches to Face Photos with a Very Deep CNN, Morphed Faces and Transfer Learning", 2017, pp. 1-10.

[6] Chaofeng Chen, Kwan-Yee K. Wong, Xiao Tan, "Face Sketch Synthesis with Style Transfer using Pyramid Column Feature", 2018.

[7] Hadi Kazemi, Nasser M. Nasrabadi, Fariborz Taherkhani, "Unsupervised Facial Geometry Learning for Sketch to Photo Synthesis", 2018, pp. 3-9.

[8] Phillip Isola, Jun-Yan Zhu, Alexei A. Efros, Tinghui Zhou, "Image-to-Image Translation with Conditional Adversarial Networks", 2018.

[9] Yue Zhang, Guoyao Su, Jie Yang, Yonggang Qi, "Unpaired Image-to-Sketch Translation Network for Sketch Synthesis", 2019.

[10] Xing Di, Vishal M. Patel, "Face Synthesis from Visual Attributes via Sketch using Conditional VAEs and GANs", 2017.

[11] I. Goodfellow, M. Mirza, J. Pouget-Abadie, B. Xu, S. Ozair, D. Warde-Farley, A. Courville, and Y. Bengio, "Generative adversarial nets", 2014.

[12] W. Zhang, X. Wang and X. Tang, "Coupled Information-Theoretic Encoding for Face Photo-Sketch Recognition", Proceedings of IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2011.
