Countering Counter-Forensics:

The Case of JPEG Compression

ShiYue Lai and Rainer Böhme

European Research Center for Information Systems (ERCIS)

University of Münster, Leonardo-Campus 3, 48149 Münster, Germany

{shiyue.lai|rainer.boehme}@ercis.uni-muenster.de

Abstract. This paper summarizes several iterations in the cat-and-mouse game between digital image forensics and counter-forensics related to an image's JPEG compression history. Building on the counter-forensics algorithm by Stamm et al. [1], we point out a vulnerability in this scheme when a maximum likelihood estimator has no solution. We construct a targeted detector against it, and present an improved scheme which uses imputation to deal with cases that lack an estimate. While this scheme is secure against our targeted detector, it is detectable by a further improved detector, which borrows from steganalysis and uses a calibrated feature. All claims are backed with experimental results from 2 × 800 never-compressed never-resampled grayscale images.

Key words: Image Forensics, Counter-Forensics, JPEG Compression

1 Introduction

Advances in information technology, the availability of high-quality digital cameras, and powerful photo editing software have made it easy to fabricate digital images which appear authentic to the human eye. Such forgeries are clearly unacceptable in areas like law enforcement, medicine, private investigations, and the mass media. As a result, research on computer-based forensic algorithms to detect forgeries of digital images has picked up over the past couple of years.

The development of digital image forensics soon became accompanied by so-called "tamper hiding" [2] or digital image counter-forensics. The aim of digital image counter-forensics, as the name suggests, is to fool current digital image forensics by skillfully taking advantage of their limitations against intelligent counterfeiters. In other words, counter-forensics challenges the reliability of forensic algorithms in situations where the counterfeiter has a sufficiently strong motive, possesses a background in digital signal processing, and has detailed knowledge of the relevant digital forensic algorithms. Hence, studying counter-forensics is essential to assess the reliability of forensic methods comprehensively, and eventually to improve their reliability.

However, almost all counter-forensic algorithms are imperfect. This means

they may successfully mislead specic forensic algorithms against which they

are designed. But their applications either skips over some traces of counterfeit

2 ShiYue Lai and Rainer B ohme

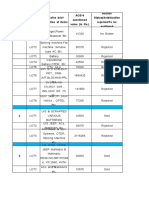

progress

standard

JPEG

compression

JPEG

quantization

estimation [7]

DCT

histogram

smoothing [1]

rst kind

detector

improved

histogram

smoothing

second kind

detector

Legend: detects

inspired by

steganalysis [46]

Fig. 1. Succession of advances in JPEG forensics and counter-forensics: contributions

of this paper are shaded in gray

uncovered or leaves additional traces in the resulting images. These traces, in

turn, can be exploited to detect that counter-forensics has been applied. This

limitation is mentioned in the early literature on counter-forensics [2] and we are

aware of one specic publication in the area of digital camera identication [3].

This paper contributes new targeted detectors of counter-forensics against the

forensic analysis of an images JPEG compression history.

Due to its popularity for storing digital images of natural scenes, JPEG compression is an image processing operation that is of great interest to researchers in both forensics and counter-forensics. In this paper, first, by exploring features of the high-frequency AC coefficient distributions, a novel targeted detector is presented to detect the counter-forensics of JPEG compression proposed by Stamm et al. [1]. We shall refer to this detector as the first kind detector throughout this paper. Then we improve the original counter-forensic algorithm by estimating the relation between the distributions of AC coefficients. This novel algorithm avoids the obvious identifying traces of the original counter-forensics method and, as a result, it is undetectable by our first kind detector. Finally, a more powerful second kind detector is presented, which can be used to detect both the original method and the improved method. This novel forensic tool borrows from techniques in steganalysis, namely the so-called "calibration" of JPEG DCT coefficient distributions [4–6]. To give a better overview, Figure 1 visualizes the above-described succession of advances in forensics and counter-forensics by depicting the relations between our contributions and existing work. The effectiveness of all presented methods is validated experimentally on a large set of images for different JPEG quality factors.

This paper is organized as follows. Section 2 briefly reviews related work. A targeted detector against Stamm et al.'s [1] counter-forensics of JPEG compression is presented in Section 3. An improved variant of the counter-forensics method and a more powerful detector are proposed in Sections 4 and 5, respectively. Section 6 concludes with a discussion.

2 Related Work

We refrain from repeating the details of standard JPEG compression here. Forensics and counter-forensics of JPEG compression have attracted the interest of researchers recently. Regarding forensic techniques, Fan and Queiroz [7] propose a way to detect the JPEG compression history of an image. The authors provide a maximum-likelihood (ML) method to estimate the quantization factors from a spatial domain bitmap representation of the image. Farid [8] verifies the authenticity of JPEG images by comparing the quantization table used in JPEG compression to a database of tables employed by selected digital camera models and image editing software. He et al. [9] demonstrate how local evidence of double JPEG compression can be used to identify image forgeries. Pevný and Fridrich [10] present a method to detect double JPEG compression using a maximum likelihood estimator of the primary quality factor.

Note that all these results on the forensics of JPEG compression were obtained under the assumption that no counter-forensic algorithm has been applied to suppress evidence of image tampering or to change other forensically significant image properties.

Regarding counter-forensics of JPEG compression, Stamm et al. [1] propose a counter-forensics operation capable of disguising key evidence of JPEG compression by restoring the DCT coefficients according to their model distribution. In a separate publication [11], the same team further proposes an anti-forensic operation capable of removing blocking artifacts from a previously JPEG-compressed image. They also demonstrate that by combining this operation with the method in [1], one can mislead a range of forensic methods designed to detect a) traces of JPEG compression in decoded images, b) double JPEG compression, and c) cut-and-paste image forgeries.

At the time of submission we were not aware of any prior work on countering counter-forensics specific to an image's JPEG compression history. Only after the presentation of our work at the Information Hiding Conference did we learn about independent research on another technique to counter Stamm et al.'s [1] method: Valenzise et al. [12] present a detector based on noise measures after recompression with different quality factors.

3 Detecting Stamm et al.'s Counter-Forensics of JPEG Compression

3.1 Stamm et al.'s DCT Histogram Smoothing Method

The intuition of Stamm et al.'s counter-forensic method is to smooth out gaps in the individual DCT coefficient histograms by adding noise according to a distribution function which is conditional on the DCT subband, the quantization factor, and the actual value of each DCT coefficient [1]. The conditional noise distribution for the AC coefficients is derived from a Laplacian distribution model of AC DCT coefficients in never-quantized digital images.¹ The maximum likelihood estimates of the Laplacian parameter $\lambda_{(i,j)}$ for the frequency subbands $I_{(i,j)}$, $(i,j) \in \{0,\dots,7\}^2$, are obtained from the quantized AC coefficients using the formula:

$$\lambda_{\mathrm{ML}(i,j)} = -\frac{2}{Q_{(i,j)}} \ln \gamma_{(i,j)}, \tag{1}$$

where $\gamma_{(i,j)}$ is defined as

$$\gamma_{(i,j)} = \frac{\sqrt{Z_{(i,j)}^2 Q_{(i,j)}^2 - \left(2 V_{(i,j)} Q_{(i,j)} - 4 S_{(i,j)}\right)\left(2 N Q_{(i,j)} + 4 S_{(i,j)}\right)}}{2 N Q_{(i,j)} + 4 S_{(i,j)}} - \frac{Z_{(i,j)} Q_{(i,j)}}{2 N Q_{(i,j)} + 4 S_{(i,j)}}. \tag{2}$$

Here, $S_{(i,j)} = \sum_{k=1}^{N} |y_k|$, $y_k$ is the k-th quantized coefficient in subband $(i,j)$, and $N$ is the total number of quantized $(i,j)$ AC coefficients. $Z_{(i,j)}$ is the number of coefficients that take the value zero and $V_{(i,j)}$ is the number of nonzero coefficients in this subband. $Q_{(i,j)}$ is the quantization factor for subband $(i,j)$, which is either known (if the image is given in JPEG format) or has to be estimated, e.g., with the method in [7]. Once $\lambda_{\mathrm{ML}(i,j)}$ is known, the AC coefficients can be adjusted within their quantization step size to fit the histogram as closely as possible to a Laplacian distribution with parameter $\lambda_{\mathrm{ML}(i,j)}$.
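The estimator of Eqs. (1) and (2) can be sketched in a few lines of Python. This is a minimal illustration, not the implementation used in [1]: the function name `laplacian_ml_estimate` is hypothetical, and we assume that `y` holds the dequantized coefficient values of one subband (integer multiples of the quantization factor `q`). The sketch returns `None` whenever the logarithm in Eq. (1) has no finite value.

```python
import math

def laplacian_ml_estimate(y, q):
    """Sketch of Eqs. (1)-(2): ML estimate of the Laplacian parameter.

    y: dequantized AC coefficients of one subband (assumed multiples of q)
    q: quantization factor Q of that subband
    Returns None if the estimate is undefined.
    """
    n = len(y)                          # N: number of coefficients
    z = sum(1 for c in y if c == 0)     # Z: coefficients equal to zero
    v = n - z                           # V: nonzero coefficients
    s = sum(abs(c) for c in y)          # S: sum of absolute values
    denom = 2 * n * q + 4 * s
    disc = z * z * q * q - (2 * v * q - 4 * s) * denom
    if disc < 0:
        return None
    gamma = (math.sqrt(disc) - z * q) / denom
    if gamma <= 0:
        return None                     # ln(gamma) has no finite value
    return -2.0 / q * math.log(gamma)

print(laplacian_ml_estimate([0, 0, 10, -10], 10))  # a positive estimate
print(laplacian_ml_estimate([0] * 16, 24))         # None: all-zero subband
```

Returning `None` rather than raising an error is a design choice of this sketch; it makes the undefined case explicit to the caller.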

In the high frequency subbands, because $Q_{(i,j)}$ is high, it is more likely that all coefficients are quantized to zero. This happens more often the smaller the overall JPEG quality factor (QF) is. If all the coefficients are zero, i.e., $V = 0$, $Z = N$, $S = 0$, then according to Eq. (2), $\gamma_{(i,j)} = 0$. Hence the parameter estimate $\lambda_{\mathrm{ML}(i,j)}$ is undefined in Eq. (1). Our experiments suggest that this happens frequently. Table 1 shows the average share of all-zero frequency subbands with different QF for 1600 tested images.
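To make the degenerate case explicit, the substitution can be written out as a short worked step. It uses only the symbols defined for Eqs. (1) and (2), with subband indices dropped for brevity.

```latex
% All coefficients zero: V = 0, Z = N, S = 0
\gamma = \frac{\sqrt{N^2 Q^2 - (2 \cdot 0 \cdot Q - 4 \cdot 0)(2NQ + 4 \cdot 0)}}{2NQ + 4 \cdot 0}
       - \frac{NQ}{2NQ + 4 \cdot 0}
       = \frac{NQ}{2NQ} - \frac{NQ}{2NQ}
       = 0
% Eq. (1) would then require \ln 0, which has no finite value.
```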

In this situation, Stamm et al.'s method simply leaves these coefficients unmodified, on the grounds that the perturbations to each DCT coefficient caused by mapping all decompressed pixel values to the integer set $\{0,\dots,255\}$ in the spatial domain will result in a plausible DCT coefficient distribution after retransformation into the DCT domain by the forensic investigator. However, as we shall see, this conjecture is too optimistic. It gives us a clue for building our first kind targeted detector.

¹ A non-parametric model is employed for the DC coefficients, which in general do not follow any parsimonious distribution model. This part is not touched in this paper.

Table 1. Average share of all-zero frequency subbands for different JPEG qualities

JPEG quality factor (QF)             60     70     80     90
All-zero frequency subbands (in %)   23.4   19.2   11.5    2.5

Fig. 2. The high frequency subbands according to our definition (shaded cells); the axes i and j index the 8 × 8 DCT subbands

3.2 Targeted Detector Based on Zeros in High Frequency AC Coefficients

Throughout this paper, we define a high frequency subband as a subband that lies below the anti-diagonal of the 8 × 8 matrix of DCT subbands (cf. Fig. 2). Using this definition, there are 28 high frequency subbands.
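The count of 28 can be checked with a short enumeration. The sketch assumes zero-based indices (i, j) with i as the row index, so that the anti-diagonal is i + j = 7 and "below" means i + j > 7; the function name is hypothetical.

```python
def high_frequency_subbands(size=8):
    """Index pairs (i, j) strictly below the anti-diagonal i + j = size - 1."""
    return [(i, j) for i in range(size) for j in range(size)
            if i + j > size - 1]

subbands = high_frequency_subbands()
print(len(subbands))  # 28
```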

All high frequency subbands where $\lambda_{\mathrm{ML}(i,j)}$ is undefined exhibit a high rate of zero coefficients, even though the perturbations to each DCT coefficient caused by mapping all decompressed pixel values to the set $\{0,\dots,255\}$ were expected to result in a plausible DCT coefficient distribution. Based on this observation, a targeted detector with threshold $T$ can be constructed as follows. If the rate $R$ of zero coefficients in high frequency subbands is less than or equal to $T$ ($R \le T$), then the detector classifies a given input image as unsuspicious. Otherwise, the image is flagged as having undergone compression and counter-forensics.
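The decision rule above can be sketched as follows. The text does not spell out when a recomputed, real-valued DCT coefficient counts as zero; this sketch assumes a coefficient is zero if it rounds to the integer 0. The function names and the flat list of high frequency coefficients are illustrative choices, not part of the original method description.

```python
def zero_rate(high_freq_coeffs):
    """Rate R of coefficients that round to zero (assumed zero criterion)."""
    zeros = sum(1 for c in high_freq_coeffs if round(c) == 0)
    return zeros / len(high_freq_coeffs)

def first_kind_detector(high_freq_coeffs, threshold):
    """True if the image is flagged (R > T), False if unsuspicious (R <= T)."""
    return zero_rate(high_freq_coeffs) > threshold

# Mostly-zero high frequency content is flagged at T = 0.2
print(first_kind_detector([0.1, -0.3, 4.0, 0.2, 7.5], threshold=0.2))  # True
```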

All experiments reported in this paper draw on a set of 1600 test images captured with a single Minolta DiMAGE A1 camera. The images were stored in raw format and extracted as 12-bit grayscale bitmaps to avoid interference from color filter interpolation or noise reduction. One test image of 640 × 480 pixels has been cropped from each raw image at a random location to avoid resizing artifacts. The test images were made available to us by Ker [13]. All JPEG compression and decompression operations were carried out by us using libjpeg version 6b with its default DCT method on an Intel Mac OS 10.6 platform.

The 1600 never-compressed images were randomly and equally divided into two groups. The 800 images in the first group were first compressed with different quality factors (QF = 60, 70, 80, 90), then subjected to counter-forensics of JPEG compression using Stamm et al.'s method. Finally, the original uncompressed images in the first group and the images after counter-forensics were tested together. Let $P_{\mathrm{FA}}$ and $P_{\mathrm{MD}}$ ($P_{\mathrm{D}} = 1 - P_{\mathrm{MD}}$) denote the probabilities of false alarm and missed detection, respectively. Fig. 3 shows the receiver operating characteristic (ROC) curves for this first kind detector. Fig. 4 shows the empirical $P_{\mathrm{FA}}$ of detectors with different thresholds $T$, and Fig. 5 shows $P_{\mathrm{MD}}$ as a function of threshold $T$ for different QF.

Fig. 3. The ROC of the detector based on the rate of zeros in high frequency subbands: (a) ROC for different QF (QF = 60, 70, 80, 90), (b) the average ROC; axes: $P_{\mathrm{FA}}$ (horizontal), $P_{\mathrm{D}}$ (vertical)

Fig. 4. Empirical $P_{\mathrm{FA}}$ as a function of threshold $T$

The figures suggest that to minimize the overall error ($P_{\mathrm{E}}$, which is calculated by dividing the number of misclassified images by the number of tested images), $T$ should be chosen around $T \approx 0.2$. The 800 images in the second group were used to test the targeted detectors with $T = \{0.15, 0.20, 0.25\}$. Table 2 reports the results.

Fig. 5. Empirical $P_{\mathrm{MD}}$ as a function of threshold $T$ (one curve each for QF = 60, 70, 80, 90)

Table 2. $P_{\mathrm{FA}}$, $P_{\mathrm{MD}}$ and $P_{\mathrm{E}}$ for the detectors with $T = \{0.15, 0.20, 0.25\}$ (in %)

T      P_E    P_FA   P_MD
                     QF=60   QF=70   QF=80   QF=90   Average
0.15   20.6   54.6    1.4     5.4    20.0    21.6    12.1
0.20   21.8   24.7    2.3     9.9    27.5    44.5    21.0
0.25   25.0    7.6    3.8    12.1    32.8    68.8    29.3
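As a plausibility check, the overall error in Table 2 can be recomputed from the other columns. The sketch below assumes that the test set pools the 800 original images with 4 × 800 processed images (one set per quality factor); this is our reading of the setup rather than something the text states explicitly. The function name is hypothetical.

```python
def pooled_error(p_fa, p_md_avg, n_authentic=800, n_processed=4 * 800):
    """Overall error P_E in percent from P_FA and average P_MD (both in percent)."""
    misclassified = p_fa / 100 * n_authentic + p_md_avg / 100 * n_processed
    return 100 * misclassified / (n_authentic + n_processed)

# (T, P_FA, average P_MD) taken from Table 2
for t, p_fa, p_md in [(0.15, 54.6, 12.1), (0.20, 24.7, 21.0), (0.25, 7.6, 29.3)]:
    print(t, round(pooled_error(p_fa, p_md), 2))
```

Under this assumption the recomputed values agree with the reported $P_{\mathrm{E}}$ column up to the rounding of the table entries.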

3.3 Targeted Detector Based on the Magnitude of High Frequency AC Coefficients

Another feature of the frequency subbands whose $\lambda_{\mathrm{ML}(i,j)}$ is undefined is that the magnitude of their AC coefficients is very small. Experiments on the first 800 images indicated that the magnitude is never larger than 2, i.e., $|y_k| \le 2$. Based on this observation, another targeted detector can be established as follows: for a given image, the absolute values of the (unquantized) AC coefficients are examined. If there exists a subband where the maximum absolute value of its AC coefficients is not larger than 2, then the image is judged to be a forgery. Otherwise it is classified as authentic. To test this detector, the second group of 800 images was used. Table 3 shows the two kinds of errors for different QF. It can be seen that $P_{\mathrm{MD}}$ is very high if QF is larger than 80. This is so because with increasing QF, there are fewer subbands where $\lambda_{\mathrm{ML}(i,j)}$ is undefined.
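The magnitude rule can be sketched as a single test over the subbands. The data layout (a dictionary from subband index to a non-empty list of unquantized coefficients) and the function name are assumptions made for illustration.

```python
def second_targeted_detector(subbands, limit=2.0):
    """True (forgery) if some subband has max |coefficient| <= limit.

    subbands: dict mapping (i, j) -> non-empty list of unquantized AC coefficients
    """
    return any(max(abs(c) for c in coeffs) <= limit
               for coeffs in subbands.values())

suspicious = {(7, 7): [0.5, -1.2, 1.9], (6, 7): [5.0, -8.0]}
authentic = {(7, 7): [0.5, 3.1], (6, 7): [5.0, -8.0]}
print(second_targeted_detector(suspicious))  # True
print(second_targeted_detector(authentic))   # False
```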

3.4 Combination of the Two Targeted Detectors

Judging from the above discussion, it can be seen that with increasing QF, both detectors become less sensitive to the counter-forensics operation. This is intuitive because the higher the QF, the more similar are the original image and the image after counter-forensics. It is also noticeable that $P_{\mathrm{MD}}$ for the first detector with a proper $T$ is lower than for the second one, whereas the second detector generates a very low $P_{\mathrm{FA}}$. Based on this observation, a targeted detector combining the two approaches can be built as follows: we first pass a given image through the second detector. If the result is negative (that is, the image is considered a forgery), then we trust the decision because $P_{\mathrm{FA}}$ of the second detector is very low. Conversely, if the result is positive, then the image is passed on to the first detector with threshold $T$. Table 4 reports the performance of a detector combining the first detector with different $T$ and the second detector. The experiment is done on the second group of images. It can be seen that the performance of the combined detector is better than that of either single detector. Our detector is good at detecting the counter-forensics operation of JPEG compression when QF is not too high, while keeping $P_{\mathrm{FA}}$ reasonably low.

Table 3. $P_{\mathrm{FA}}$, $P_{\mathrm{MD}}$ and $P_{\mathrm{E}}$ for the second targeted detector (in %)

P_E    P_FA   P_MD
              QF=60   QF=70   QF=80   QF=90   Average
22.9    0.3    0.8     2.5    20.3    90.4    28.5

Table 4. The performance of the detectors combining the first targeted detector with different $T$ and the second targeted detector (in %)

T      P_E    P_FA   P_MD
                     QF=60   QF=70   QF=80   QF=90   Average
0.15   19.6   54.1    0.8     2.5    18.9    21.6    10.9
0.20   18.4   24.3    0.8     2.5    20.2    44.4    16.9
0.25   19.6    7.3    0.8     2.5    20.2    67.5    22.8
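The cascade described above can be sketched as follows. The inputs are illustrative assumptions: `max_abs_per_subband` holds the largest coefficient magnitude of each high frequency subband, and `zero_rate` is the rate R used by the first detector. The function name is hypothetical.

```python
def combined_detector(max_abs_per_subband, zero_rate, threshold, limit=2.0):
    """Cascade sketch: magnitude test first, zero-rate test as fallback.

    Returns True if the image is judged to have undergone counter-forensics.
    """
    # Second detector: trusted when it reports a forgery (very low false alarms)
    if any(m <= limit for m in max_abs_per_subband):
        return True
    # Otherwise defer to the first detector with threshold T
    return zero_rate > threshold

print(combined_detector([1.5, 9.0], zero_rate=0.05, threshold=0.2))  # True
print(combined_detector([5.0, 9.0], zero_rate=0.10, threshold=0.2))  # False
```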

4 Improved Counter-Forensics of JPEG Compression

To overcome the obvious vulnerability in the high frequency subbands, which persists in Stamm et al.'s method due to undefined $\lambda_{\mathrm{ML}(i,j)}$, we propose a way to impute estimates for the Laplacian parameter if Eq. (1) has no solution. This part builds on prior research by Lam and Goodman [14]. They argue that the distribution of DCT coefficients $I_{(i,j)}$ is approximately Gaussian for stationary signals in the spatial domain. Hence, if the variance $\sigma^2_{\mathrm{block}}$ of all 8 × 8 blocks in an image was constant, then the variance $\sigma^2_{(i,j)}$ of frequency subband $I_{(i,j)}$ would be proportional to $\sigma^2_{\mathrm{block}}$. Suppose $\sigma^2_{\mathrm{block}} = K_{(i,j)} \sigma^2_{(i,j)}$ and let $K_{(i,j)} = k^2_{(i,j)}$ to simplify notation. Then $\sigma_{\mathrm{block}} = k_{(i,j)} \sigma_{(i,j)}$, where $k_{(i,j)}$ is a scaling parameter. Now adding one important characteristic of natural images, namely that the variance of the blocks varies between blocks, leads us to Lam and Goodman's double-stochastic model [14]. Let $p(\cdot)$ denote the probability density function, then

$$p(I_{(i,j)}) = \int_0^{\infty} p(I_{(i,j)} \mid \sigma^2_{\mathrm{block}})\, p(\sigma^2_{\mathrm{block}})\, d(\sigma^2_{\mathrm{block}}). \tag{3}$$

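As discussed above, $p(I_{(i,j)} \mid \sigma^2_{\mathrm{block}})$ is approximately zero-mean Gaussian, i.e.,

$$p(I_{(i,j)} \mid \sigma^2_{\mathrm{block}}) = \frac{1}{\sqrt{2\pi}\,\sigma_{(i,j)}} \exp\left(-\frac{I^2_{(i,j)}}{2\sigma^2_{(i,j)}}\right). \tag{4}$$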

Following the argument in [14] further, we can approximate the distribution function of the variance $p(\sigma^2_{\mathrm{block}})$ by exponential distributions and half-Gaussian distributions. Adapted to our notation we obtain for the exponential distribution:

$$p(x) = \lambda \exp(-\lambda x); \tag{5}$$

and for the half-Gaussian distribution:

$$p(x) = \begin{cases} \dfrac{\sqrt{2}}{\sigma\sqrt{\pi}} \exp\left(-\dfrac{x^2}{2\sigma^2}\right) & \text{for } x \ge 0 \\ 0 & \text{for } x < 0. \end{cases} \tag{6}$$

Plugging Eq. (5) or (6), together with Eq. (4), into Eq. (3) yields the same Laplacian shape for the AC coefficient distribution in natural images (proofs in [14]),

$$p(I_{(i,j)}) = \frac{k_{(i,j)}\sqrt{2\lambda}}{2} \exp\left(-k_{(i,j)}\sqrt{2\lambda}\,|I_{(i,j)}|\right). \tag{7}$$

So the relationship of the Laplacian parameters $\lambda_{(i,j)}$ for frequency subbands $I_{(i,j)}$ is determined only by $k_{(i,j)}$. For any two AC DCT subbands $I_{(i,j)}$ and $I_{(m,n)}$ it holds that

$$\frac{\lambda_{(i,j)}}{\lambda_{(m,n)}} = \frac{k_{(i,j)}\sqrt{2\lambda}}{k_{(m,n)}\sqrt{2\lambda}} = \frac{k_{(i,j)}}{k_{(m,n)}}. \tag{8}$$

To learn the scaling parameters $k_{(i,j)}$ from our images, we first select blocks with different variance randomly from our image database while keeping the number of blocks for each variance bracket approximately constant. We then transform the selected blocks to the DCT domain and calculate the variance, and hence $\sigma_{(i,j)}$, of each subband. With $\sigma_{\mathrm{block}}$ and $\sigma_{(i,j)}$ known for a balanced sample, $k_{(i,j)}$ can be estimated with the least squares method. Once $k_{(i,j)}$ is determined, $\lambda_{(i,j)}$ can be imputed using Eq. (8) in cases where $\lambda_{\mathrm{ML}(i,j)}$ cannot be calculated from Eq. (1). This procedure avoids the obvious vulnerability in the high frequency subbands that made the original DCT histogram smoothing method of [1] detectable with our first kind detector. All other parts of the algorithm remain the same in our improved variant of Stamm et al.'s method.
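The two steps of this procedure, fitting the scaling parameter and imputing a missing Laplacian parameter via Eq. (8), might look as follows. Both functions are hypothetical sketches: we assume a least squares fit without intercept for the relation between the block and subband standard deviations, and we assume that a reference subband (m, n) with a defined ML estimate is available. The text does not state how that reference subband is chosen.

```python
def fit_scaling(sigma_block, sigma_subband):
    """Least squares slope k for sigma_block ~ k * sigma_subband (no intercept)."""
    num = sum(b * s for b, s in zip(sigma_block, sigma_subband))
    den = sum(s * s for s in sigma_subband)
    return num / den

def impute_lambda(lambda_ref, k_ref, k_target):
    """Eq. (8): lambda_target / lambda_ref = k_target / k_ref."""
    return lambda_ref * k_target / k_ref

# Toy numbers, not measured data
k_ref = fit_scaling([2.0, 4.0, 6.0], [1.0, 2.0, 3.0])     # slope 2.0
k_target = fit_scaling([2.0, 4.0, 6.0], [0.5, 1.0, 1.5])  # slope 4.0
print(impute_lambda(0.1, k_ref, k_target))                # 0.2
```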

As the obvious singularity in the high frequency subbands no longer exists with the improved method, our targeted detector of Sect. 3.4 fails to detect it or produces unacceptably many false positives (see Table 5).

Table 5. The performance of targeted detectors with different $T$ for the improved method (in %)

T      P_E    P_FA   P_MD
                     QF=60   QF=70   QF=80   QF=90   Average
0.15   28.7   54.1   24.0    22.1    22.0    21.0    22.3
0.20   43.1   24.3   50.1    48.2    50.0    42.3    47.8
0.25   56.5    7.3   68.8    70.1    68.7    67.5    68.8

5 Second Kind Detector Using Calibration

The final contribution of this paper is a more powerful detector. This time we seek inspiration from steganalysis of JPEG images, since both forensics and steganalysis strive for closely related goals [15, 16].

The notion of calibration was coined by Fridrich et al. in 2002 in conjunction with their attack against the steganographic algorithm F5 [4]. The idea is to desynchronize the block structure of JPEG images, e.g., by slightly cropping the image in the spatial domain. This way one obtains a reference image which, after transformation to the DCT domain, shares macroscopic characteristics with the original unprocessed JPEG image. Kodovský and Fridrich [6] give a detailed analysis of the nature of calibration in steganalysis. While pointing out some fallacies of calibration, they also studied in depth how, why, and when calibration works. Based on their research and the fact that calibration has been successfully used to construct many steganalysis schemes [4, 5, 17], we decided to investigate the usefulness of calibrated features to set up a detector for JPEG compression counter-forensics.

In this paper, a single calibrated feature, the ratio of the variance of high frequency subbands, is utilized to establish a detector. For a given image $I$, we first crop it in the spatial domain by 4 pixels in both the horizontal and vertical direction (similar to [5]) to obtain a new image $J$. Then we calculate the variance of the 28 high frequency subbands for both images $I$ and $J$. The calibrated feature $F$ is calculated as follows:

$$F = \frac{1}{28} \sum_{i=1}^{28} \frac{|v_{I,i} - v_{J,i}|}{v_{I,i}}, \tag{9}$$

where $v_{I,i}$ is the variance of the i-th high frequency subband in $I$ and $v_{J,i}$ is the variance of the i-th high frequency subband in $J$.

According to Lam and Goodman [14], the variance of frequency subbands is determined by the variance of pixels in the spatial domain (see also Sect. 4). In digital images of natural scenes, the variances of pixels in spatial proximity are often very similar. Hence, cropping the image can be treated as a transposition of the spatial pixels. So, for an authentic image, the changes in the variance of frequency subbands should be negligible. However, for images that have undergone counter-forensics, even though DCT coefficients have been smoothed to match the marginal distribution of authentic images, this variance feature is violated after cropping. The change in the variance of frequency subbands is notable, especially in high frequency subbands.

Fig. 6. The ROC of detectors with different $G$: (a) ROC for different QF (QF = 60, 70, 80, 90), (b) the average ROC; axes: $P_{\mathrm{FA}}$ (horizontal), $P_{\mathrm{D}}$ (vertical)

Our second kind detector with threshold $G$ works as follows. If $F \le G$, then the detector decides for a normal image. Otherwise the detector flags the image as having undergone compression and counter-forensics.
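The calibrated feature of Eq. (9) and the threshold rule above can be sketched in plain Python. Several details are assumptions of this sketch rather than statements from the paper: the image is a list of rows of gray values, the crop removes 4 rows and 4 columns at the top-left corner, incomplete 8 × 8 blocks are discarded, an orthonormal DCT-II is used, the deviation in Eq. (9) is taken in absolute value, and every subband variance of $I$ is nonzero. All function names are hypothetical.

```python
import math

N = 8  # block size

# Orthonormal 8-point DCT-II basis, C[u][x]
C = [[(math.sqrt(1 / N) if u == 0 else math.sqrt(2 / N))
      * math.cos((2 * x + 1) * u * math.pi / (2 * N))
      for x in range(N)] for u in range(N)]

def dct2(block):
    """2-D DCT of an 8x8 block (list of rows), computed as C * B * C^T."""
    tmp = [[sum(C[u][x] * block[x][y] for x in range(N)) for y in range(N)]
           for u in range(N)]
    return [[sum(tmp[u][y] * C[v][y] for y in range(N)) for v in range(N)]
            for u in range(N)]

def high_freq_variances(image):
    """Variance of each of the 28 high frequency subbands over all full blocks."""
    rows, cols = len(image) // N, len(image[0]) // N
    samples = {(i, j): [] for i in range(N) for j in range(N) if i + j > N - 1}
    for r in range(rows):
        for c in range(cols):
            block = [row[c * N:(c + 1) * N] for row in image[r * N:(r + 1) * N]]
            coeffs = dct2(block)
            for (i, j) in samples:
                samples[(i, j)].append(coeffs[i][j])
    variances = []
    for values in samples.values():
        mean = sum(values) / len(values)
        variances.append(sum((v - mean) ** 2 for v in values) / len(values))
    return variances

def calibrated_feature(image, crop=4):
    """Feature F: mean relative change of subband variances after cropping."""
    cropped = [row[crop:] for row in image[crop:]]
    v_i = high_freq_variances(image)
    v_j = high_freq_variances(cropped)
    return sum(abs(a - b) / a for a, b in zip(v_i, v_j)) / len(v_i)

def second_kind_detector(image, g):
    """True if the image is flagged (F > G), False if F <= G."""
    return calibrated_feature(image) > g
```

A caller would pass the decoded gray values of a suspect image, for example `second_kind_detector(pixels, g=0.1)`, where `pixels` is a hypothetical list of rows.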

To test our new detector, the first 800 images are first compressed with different quality factors (QF = 60, 70, 80, 90), then processed with counter-forensics of JPEG compression using our improved method of Sect. 4. Finally, the original uncompressed images, together with the images after counter-forensics, are used for testing. Figure 6 shows the ROC curves for the new detector. Figure 7 shows the $P_{\mathrm{FA}}$ of detectors with different $G$, and Fig. 8 shows $P_{\mathrm{MD}}$ as a function of $G$ for different QF.

It can be seen from the ROC curves (Fig. 6) that our new detector performs well at detecting our improved method of JPEG compression counter-forensics. The two kinds of errors $P_{\mathrm{FA}}$ and $P_{\mathrm{MD}}$ can be kept very low simultaneously. Table 6 shows the results using the second group of 800 images with $G = \{0.10, 0.15, 0.20\}$. Observe that both $P_{\mathrm{FA}}$ and $P_{\mathrm{MD}}$ are very low for our choice of $G$. Even for QF=95, with $G = 0.1$, $P_{\mathrm{MD}}$ is below 0.2 %, which is a satisfactory value.

We also tested our new detector against the original histogram smoothing method by Stamm et al. [1]. Table 7 reports the results, which are also very satisfactory. We have no explanation, though, why the calibrated feature produces slightly higher $P_{\mathrm{MD}}$ for the original method compared to the improved method, which uses imputed values if $\lambda_{\mathrm{ML}(i,j)}$ is undefined.

Note that all results refer to detection rates of counter-forensics. Our new methods do not aim to detect JPEG compression as such, but attempts to conceal it. Therefore, in practice, our detectors should be used in combination with existing methods to detect plain JPEG compression, e.g., [7].

Fig. 7. The $P_{\mathrm{FA}}$ of detectors with different $G$

Fig. 8. The $P_{\mathrm{MD}}$ of detectors with different $G$ (one curve each for QF = 60, 70, 80, 90)

6 Discussion and Conclusion

The contribution of this paper is to document several incremental advances in the area of forensics and counter-forensics of JPEG compression. We decided to cut the dialectical iterations at a stage where a sufficiently powerful detector of counter-forensics could be found. This second kind detector uses a single calibrated feature, which was inspired by the literature on JPEG steganalysis.

According to the performance measures of this second kind detector and the preceding analysis, it is reasonable to state that our second kind detector is effective in detecting counter-forensics of JPEG compression which modifies DCT coefficients independently to smooth the marginal distributions of AC subbands. In reaction to this detector, future research on counter-forensics of JPEG compression needs to find a way to keep both our calibrated feature $F$ and the distribution of frequency subbands similar to authentic (i.e., never-compressed) images. This is a similar problem to that faced by steganographic embedding functions for JPEG images. As for JPEG compression forensics, it is likely that more reliable and distinguishable calibrated features can be found so that detectors stay on a level playing field with advances in counter-forensics. As in almost any security field, the cat-and-mouse game between attackers and defenders will continue.

Table 6. $P_{\mathrm{FA}}$, $P_{\mathrm{MD}}$ and $P_{\mathrm{E}}$ for the detector with $G = \{0.10, 0.15, 0.20\}$ (in %)

G      P_E    P_FA   P_MD
                     QF=60   QF=70   QF=80   QF=90   Average
0.10    0.3    1.3    0.0     0.0     0.0     0.3     0.1
0.15    0.8    0.4    0.0     0.0     0.1     3.8     1.0
0.20    2.9    0.3    0.0     0.1     1.5    12.4     3.5

Table 7. Performance of the detectors with $G = \{0.10, 0.15, 0.20\}$ for detecting the method by Stamm et al. [1] (in %)

G      P_E    P_FA   P_MD
                     QF=60   QF=70   QF=80   QF=90   Average
0.10    0.5    1.3    0.0     0.0     0.0     1.3     0.3
0.15    1.7    0.4    0.0     0.0     0.8     7.5     2.0
0.20    4.2    0.3    0.0     0.3     1.5    18.9     5.2

Another open research question is a systematic comparison of the detection

performance of our method and the more recent approach by Valenzise et al. [12].

As for every digital forensics paper, we deem it appropriate to close with the usual limitation that digital forensics, despite its mathematical formalisms, remains an inexact science. Hence the results of its methods should rather be understood as indications (subject to underlying technical assumptions and social conventions), never as outright proofs of facts.

Acknowledgements

The first author gratefully acknowledges a CSC grant for PhD studies in Germany and an IH student travel grant supporting her trip to Prague.

References

1. Stamm, M., Tjoa, S., Lin, W., Ray Liu, K.J.R.: Anti-forensics of JPEG compres-

sion. In: IEEE International Conference on Acoustics Speech and Signal Processing

14 ShiYue Lai and Rainer B ohme

(ICASSP), 2010, IEEE Press (2010) 16941697

2. Kirchner, M., B ohme, R.: Tamper hiding: Defeating image forensics. In Furon, T.,

Cayre, F., Doerr, G., Bas, P., eds.: Information Hiding, 9th International Workshop,

IH 2007. Volume 4567 of Lecture Notes in Computer Science., Berlin, Heidelberg,

Springer-Verlag (2007) 326341

3. Goljan, M., Fridrich, J., Chen, M.: Sensor noise camera identication: Countering

counter-forensics. In Memon, N.D., Dittmann, J., Alattar, A.M., Delp, E.J., eds.:

Media Forensics and Security II. Volume 7541 of Proceedings of SPIE., Bellingham,

WA, SPIE (2010) 75410S

4. Fridrich, J., Goljan, M., Hogea, D.: Steganalysis of JPEG images: Breaking the

F5 algorithm. In Petitcolas, F.A.P., ed.: Information Hiding, 5th International

Workshop. Volume LNCS 2568., Berlin Heidelberg, Springer-Verlag (2002) 301

323

5. Fridrich, J.: Feature-based steganalysis for JPEG images and its implications for

future design of steganographic schemes. In: Information Hiding, 6th International

Workshop. Volume LNCS 3200., Springer (2004) 6781

6. Kodovsky, J., Fridrich, J.: Calibration revisited. In: Proceedings of the 11th ACM

workshop on Multimedia and Security, New York, ACM Press (2009) 6373

7. Fan, Z., Queiroz, R.: Identication of bitmap compression history: JPEG detection

and quantizer estimation. IEEE Transactions on Image Processing 12 (2003) 230

235

8. Farid, H.: Digital image ballistics from JPEG quantization. Tech.Rep. TR2006-583

(2006)

9. He, J., Lin, Z., Wang, L., Tang, X.: Detecting doctored JPEG images via DCT

coecient analysis. Proc. of ECCV 3593 (2006) 425435

10. Pevny, T., Fridrich, J.: Detection of double-compression in JPEG images for appli-

cations in steganography. IEEE Tansactions on Information Forensics and Security

3 (2008) 247258

11. Stamm, M., Tjoa, S., Lin, W., Ray Liu, K.J.R.: Undetectable image tampering

through JPEG compression anti-forensics. In: IEEE Int. Conf. on Image Processing

(ICIP). (2010)

12. Valenzise, G., Nobile, V., Tagliasacchi, M., Tubaro, S.: Countering JPEG anti-

forensics. In: IEEE Int. Conf. on Image Processing (ICIP). (2011) (to appear)

13. Ker, A.D., B ohme, R.: Revisiting weighted stego-image steganalysis. In Delp, E.J.,

Wong, P.W., Dittmann, J., Memon, N.D., eds.: Security, Forensics, Steganography

and Watermarking of Multimedia Contents X (Proc. of SPIE). Volume 6819., San

Jose, CA (2008)

14. Lam, E.Y.and Goodman, J.: A mathematical analysis of the DCT coecient

distributions for images. IEEE Transactions on image processing 9 (2000) 1661

1665

15. Kirchner, M., Bohme, R.: Hiding traces of resampling in digital images. IEEE

Transactions on Information Forensics and Security 3 (2008) 582592

16. Barni, M., Cancelli, G., Esposito, A.: Forensics aided steganalysis of heterogeneous images. In: IEEE International Conference on Acoustics Speech and Signal Processing (ICASSP), 2010, IEEE Press (2010) 1690–1693

17. Pevný, T., Fridrich, J.: Merging Markov and DCT features for multi-class JPEG steganalysis. In: Proceedings of SPIE, Electronic Imaging, Security, Steganography, and Watermarking of Multimedia Contents IX. (2007) 3 1–3 14
