See discussions, stats, and author profiles for this publication at: https://www.researchgate.net/publication/37537514

Engineering A Testbank Of Engineering Questions

Article · January 2002

Source: OAI

Session 5, Stream Number 4

ENGINEERING A TESTBANK OF ENGINEERING QUESTIONS

Su White¹, Kate Dickens², H C Davis³

Abstract: e3an is a collaborative UK project developing a network of expertise in assessment issues within electrical and electronic engineering. A major focus is the development of a testbank of peer-reviewed questions for diagnostic, formative and summative assessment. The resulting testbank will contain thousands of well-constructed and tested questions and answers. Question types include objective, numeric, short answer and examination. Initially a set of metadata descriptors was specified, classifying information such as subject, level and type of cognitive skills being assessed. Academic consultants agreed key curriculum areas (themes), identified important learning outcomes and produced sets of exemplar questions and answers. Questions were authored in a word processor then converted to the database format. Major pedagogical, organisational and technical issues were encountered, including specification of appropriate interoperable data formats, the standards for data entry and the design of an intuitive interface to enable effective use.

Index Terms: Assessment, IMS, Interoperability, Metadata, QTI, Testbanks, Usability

BACKGROUND

The Electrical and Electronic Engineering Assessment Network (e3an) was established in May 2002 as a three-year initiative under Phase 3 of the Fund for the Development of Teaching and Learning (project no. 53/99). The project has been led by the University of Southampton in partnership with three other UK south coast higher education institutions: Bournemouth University, The University of Portsmouth and Southampton Institute [1]. The project is collating sets of peer-reviewed questions in electrical and electronic engineering which have been authored by academics from UK Higher Education. The questions are stored in a database and available for export in a variety of formats chosen to enable widespread use across a sector which does not have a single platform or engine with which it can present questions.

We were aware of the potential difficulties associated with recruiting adequate numbers of question authoring consultants to produce well-formed questions and populate a demonstration version of the database. We deliberately chose to use a word processor as our authoring tool since we assumed it would present the smallest barrier to question production. We made a detailed specification of the contents of the question, and provided authors with word processor templates which we planned to automatically process and convert into the data items in the question database. If necessary, authors could provide clear, handwritten, detailed feedback in the form of tutor’s notes to be scanned.

Authors were left to their own devices to write the questions, and we assumed that the peer review process would identify and remedy any gaps in the quality of the question. We also assumed that this laissez faire approach would enable us to better specify and explain requirements to future authors. However, when we came to transferring the electronic versions of the completed questions into the database, the number of issues we encountered was significantly larger than we had originally envisaged, and to an extent which would not be sustainable in the longer term.

PROCESS OVERVIEW

Activities associated with the production of the question database were divided into a number of distinct stages: the pre-operational stage, the collection of questions themselves, and then the post processing of the questions. We introduce the phases here and then describe them more fully in the subsequent sections of this paper.

The pre-operational stage involved the project team in answering some design questions:
• Database contents and function and specification. What formats did we wish to store in the database and how would we wish to access and use that information?
• Target themes. What themes or subjects within the EEE domain did we wish to collect questions about?
• Metadata. What descriptors about the questions did we wish to collect?
• Question Templates. How would we get EEE academics, some of whom may not have regular access to the latest computing technology, to enter their questions?

Once these questions had been resolved, we set about:
• Building theme teams by recruiting and briefing academic consultants
• Authoring and peer reviewing the initial set of questions

These activities were all carried out in parallel with the continuing specification and design of the question database.

When the authoring task was complete, additional processing needed to be carried out by the core team to:
• process sample questions for inclusion in the e3an testbank

¹ Su White, University of Southampton, Intelligence Agents and Multimedia, ECS Zepler Building, Southampton, SO17 1BJ saw@ecs.soton.ac.uk

² Kate Dickens, University of Southampton, Intelligence Agents and Multimedia, ECS Zepler Building, Southampton, SO17 1BJ kpd@ecs.soton.ac.uk

³ Hugh Davis, University of Southampton, Intelligence Agents and Multimedia, ECS Zepler Building, Southampton, SO17 1BJ hcd@ecs.soton.ac.uk

e3an is funded under the joint Higher Education Funding Council for England (HEFCE) and Department for Employment and Learning in Northern Ireland (DEL) initiative the Fund for Development of Teaching and Learning (FDTL), reference 53/99

International Conference on Engineering Education August 18–21, 2002, Manchester, U.K.


• partially automate the conversion process
• review the success of the authoring process
• identify good practice for future authoring cycles

DATABASE CONTENTS AND FUNCTIONS

The testbank was conceived as a resource to enable academics to quickly build tests and example sheets for the purpose of providing practice and feedback for students, and also to act as a resource for staff providing exemplars when creating new assessments, or redesigning teaching.

Because questions for the database were to be produced by a large number of academic authors, we chose a simple text format as the data entry standard. In addition, in order to enable the widest possible use across the sector, it was important that questions from the bank could be exported in a range of formats.

The question database was designed to allow users to create a 'set' of questions which might then be exported to enable them to create either paper-based or electronic formative, summative or diagnostic tests. Output formats specified included RTF, for printing on paper (as the lowest common denominator), HTML for use with web authoring, and an interoperable format for use with standard computer-based test engines, which is a subset of the IMS Question & Test Interoperability (QTI) specification [2].

There are many types of objective question; QuestionMark [3], one of the leading commercial test engines, supports 19 different types, and the latest IMS QTI specification (v 1.2) lists 21 different types. However, the project team felt that they would rather experiment with a small number of objective question types, and decided to confine their initial collection to multiple choice, multiple response and numeric answer.

In addition to objective questions, the project team wished to collect questions that required written answers. We felt that some areas of engineering, design in particular, are difficult to test using objective questions. Furthermore, a collection of exam style questions, along with worked answers, was felt to be a useful resource to teachers and students alike.

INITIAL SPECIFICATIONS

The four initial themes chosen were:
• Analogue Electronics;
• Circuit Theory;
• Digital Electronics and Microprocessors;
• Signal Processing.

These subjects were seen as being core to virtually every EEE degree programme, and were chosen to reflect the breadth of the curriculum along with the teaching interests of the project team members. A theme leader was appointed for each subject area, drawn from each of the partner institutions. A particular additional concern was that the material produced should, as far as practicable, be relevant to courses in electrical engineering. An electrical engineering subject specialist was therefore appointed to work with the four theme teams and encourage consultants to reflect “heavy current” interests.

METADATA

Metadata is data that describes a particular data item or resource. Metadata about educational resources tends to consist of two parts.

The first part is concerned with classifying the resource in much the same way as a traditional library, and has such information as AUTHOR, KEYWORDS, SUBJECT etc. Such metadata has for some time been well defined by the Dublin Core initiative [4].

The second part is the educational metadata, which describes the pedagogy and educational purpose of the resource. Standards in this area are only now starting to emerge as organisations such as IMS, IEEE, Ariadne and ADL have come to agreement. For example, the reader might see the ARIADNE Educational Metadata Recommendation [5], which is based on IEEE’s Learning Object Metadata (LOM) [6].

The project team was keen not to allow the project to become bogged down with arguments about which standard to adopt, or to overload their academics with requirements to provide enormous amounts of metadata, so instead decided to define their own minimal metadata, secure in the knowledge that it would later be possible to translate this to a standard if required. The following list emerged.

TABLE I
INITIAL METADATA SPECIFICATION

Descriptor        Contents
Type of Items     Exam; Numeric; Multiple Choice; Multiple Response
Time              Expected to take in minutes
Level             Introductory; Intermediate; Advanced
Discrimination    Threshold Students; Good Students; Excellent Students
Cognitive Level   Knowledge; Understanding; Application; Analysis; Synthesis; Evaluation
Style             Formative; Summative; Formative or Summative; Diagnostic
Theme             Which subject theme was this question designed for?
Sub themes        What part of that theme?
Related Themes    What other themes might find this question useful?
Description       Free text for use by people browsing the database
Keywords          Free text; for use by people searching the database, e.g. mention theorem tested

A set of word processor templates was created which allowed the question to be specified and to incorporate the e3an metadata [7].

We felt that the DISCRIMINATION field improved on the DIFFICULTY field found in the standard metadata sets in that it could be related specifically to the level descriptors used in the UK Quality Assurance Agency’s (QAA) Engineering Benchmarking Statement [8].
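
The descriptors in Table I map naturally onto a small typed record. The following is a minimal sketch in Python, not the project's actual schema: the class and field names (`QuestionMetadata`, `time_minutes` and so on) and the sample record are assumptions made for illustration, and only the permitted values are taken from the table.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class ItemType(Enum):
    EXAM = "Exam"
    NUMERIC = "Numeric"
    MULTIPLE_CHOICE = "Multiple Choice"
    MULTIPLE_RESPONSE = "Multiple Response"


class Level(Enum):
    INTRODUCTORY = "Introductory"
    INTERMEDIATE = "Intermediate"
    ADVANCED = "Advanced"


class Discrimination(Enum):
    THRESHOLD = "Threshold Students"
    GOOD = "Good Students"
    EXCELLENT = "Excellent Students"


class CognitiveLevel(Enum):
    KNOWLEDGE = "Knowledge"
    UNDERSTANDING = "Understanding"
    APPLICATION = "Application"
    ANALYSIS = "Analysis"
    SYNTHESIS = "Synthesis"
    EVALUATION = "Evaluation"


class Style(Enum):
    FORMATIVE = "Formative"
    SUMMATIVE = "Summative"
    FORMATIVE_OR_SUMMATIVE = "Formative or Summative"
    DIAGNOSTIC = "Diagnostic"


@dataclass
class QuestionMetadata:
    """One record per question; field names are hypothetical."""
    item_type: ItemType
    time_minutes: int            # "Time": expected to take, in minutes
    level: Level
    discrimination: Discrimination
    cognitive_level: CognitiveLevel
    style: Style
    theme: str
    sub_theme: str
    description: str             # free text for people browsing
    related_themes: List[str] = field(default_factory=list)
    keywords: List[str] = field(default_factory=list)  # free text for searching

    def __post_init__(self) -> None:
        # A simple sanity check of the kind a validation step might apply.
        if self.time_minutes <= 0:
            raise ValueError("time_minutes must be a positive number of minutes")


# Invented sample record, not a question from the e3an testbank.
example = QuestionMetadata(
    item_type=ItemType.MULTIPLE_CHOICE,
    time_minutes=3,
    level=Level.INTRODUCTORY,
    discrimination=Discrimination.THRESHOLD,
    cognitive_level=CognitiveLevel.APPLICATION,
    style=Style.FORMATIVE,
    theme="Circuit Theory",
    sub_theme="Network theorems",
    description="Illustrative record only.",
    keywords=["Thevenin"],
)
```

Using enumerations for the closed-vocabulary descriptors means that a misspelt level or style is rejected when the record is created, while the free-text descriptors remain ordinary strings.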

Session 5, Stream Number 4

opportunity for question writers to append explanatory notes

BUILDING THEME TEAMS if they feel this will be appropriate.

Consultants were recruited from the partner institutions. AUTHORING AND PEER R EVIEW

Project team members were initially asked to identify and

canvass potential contributors from their own institution for Once the training and allocation of questions was completed

each of the four subject areas. authors took a few weeks to produce their questions and then

Prospective consultants were then invited to attend a met again to peer review each others questions, before the

half-day training session. Individual briefings were questions were accepted for publication in the database.

organised for prospective consultants who were unable to Consultants were paid on successful peer review of their

attend on either one of the two dates offered. allocation of questions.

The briefing session was divided into two main

sections; an introduction to the e3 an project and objective DATABASE ORGANISATION

testing, and a meeting between the theme leader and

Once the questions were delivered, there were two questions

members of the theme team to discuss and agree specific

objectives for their subject theme area. which the project team needed to resolve; what information

The introductory component included: to store in the database and what database architecture to

use.

• A description of the e3an project, objectives,

We had already agreed that users might require the

participants, timescales and deliverables;

output in the following formats:

• Overview of issues in student assessment, including the

• Rich Text Format – in order to allow the creation of

benefits of timely formative feedback and the outcomes-

paper-based tests, and that there would be two

based approach to assessment advocated by the Quality

presentations, namely the questions alone and the

Assurance Agency for Higher Education [9,10];

questions with worked answers.

• Familiarisation with guidelines for writing effective

• HTML – to enable questions to be put on the WWW,

objective test questions, question types: multiple choice,

again in question only and question with answer format.

multiple response, numeric answer and text response.

• XML to the Question & Test Interoperability (QTI)

The latter, practical components of the briefing, were

considered essential since the design of effective objective specification which will allow the questions to be used

test questions is an acquired skill [11]. Some general by a range of proprietary test engines including

Blackboard and version 3 of Question Mark Perception.

guidelines were presented [12], along with additional

examples drawn from the electrical and electronic We also acknowledged that many users might wish to

engineering curriculum. Specific examples demonstrated import the questions into earlier versions of Question Mark,

which do not support QTI, and for this reason we decided

how indicative questions from a “traditional” examination

paper might be converted into objective test format, and how that we should also provide QML output, which is Question

an author might design a question to test a specific learning Mark’s proprietary XML format.

We wrote a programme to read the Document Object

outcome.

The second part of the briefing session involved Model (DOM) of the questions that had been provided in the

members of theme teams meeting with the theme leader to Word templates, and from this to create the XML QTI

version of the question.

discuss and agree the key curriculum areas within their

themes and the spread of question types that would most In theory it would have been adequate to store only the

usefully support these sub-themes. This activity was original Word documents as all the other formats could have

been created dynamically from these documents. However

conducted over the two separate events, with details

finalised by email. Theme leaders initially proposed the speed limitations persuaded us that it was best to batch

main sub-themes or topics and their indicative level. process the input documents and to store all the possible

output formats.

Questions were classified as “Introductory”, “Intermediate”

and “Advanced”; levels which may broadly correspond to The question of what architecture to use was decided by

the three levels of a full-time undergraduate programme in the fact that we needed to be able to distribute the database

to individual academics, maybe working without system

electrical/electronic engineering. However the schema is

flexible enough to cope with the specialist nature of some support; we wanted to be able to send them a CD and them

degree programmes and acknowledge that both timing and to be able to install and query the database on their own.

We decided that this would be easiest using Microsoft

intensity of study may vary between institutions.

There was also some debate amongst members of the Access, and that we would deliver the system packaged with

project team about the designation of materials as being the Access runtime for those that did not have Access on

their machines. In parallel we have developed an SQL server

relevant the fourth year of an MEng programme. An

additional metadata item of tutor information provides an version of the database, so that it can be queried over the

International Conference on Engineering Education August 18–21, 2002, Manchester, U.K.

3

Session 5, Stream Number 4

Internet. We may also consider making this version available

The User Interface

to institutions for Intranet use.

Users are expected to follow a basic four-step process.

Question Retrieval and Export

• searching/browsing the database,

The user may assemble a question set either by browsing the • selecting a set of questions

whole database and making individual selections, searching • saving the set of questions, or adding the selection to a

using individual or multiple keywords or by a combination previously saved set

of both these methods. At this stage the user may view the • exporting the questions in one of the available formats.

descriptive metadata for each question and may also preview In order for the user to keep track of their progress

the tutor version of each question in Microsoft Word. through the system a "breadcrumb trail" is displayed which

Given the ubiquity of online shopping it was decided to shows each stage in order and highlights the process

use a trolley metaphor to enable the building of question currently being undertaken.

collections. If the user wishes to go on to make use of a We first produced a fully featured working prototype

question or set of questions this is achieved by adding the which was modified after an intensive evaluation cycle

item(s) to the "question trolley". The "question trolley" may (detailed further below). Initially the main menu provided

be viewed at any time during the process and questions may the following options:

be added/deleted. The question set may then be sent to the • opt to either create a new collection of questions;

"question checkout to be exported. The user is given an • browse through the question bank;

option of saving the question set so that it may be modified

• view/amend previous question collections;

or used again at a later date.

• exit the question bank.

At the "question checkout" the user may choose which

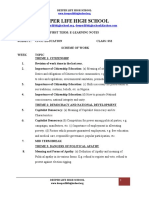

The example below (figure I) incorporates some

combination of versions and formats the questions are to be

additional features added after the initial usability trials

exported in. Each question has a unique identifier and the

The search interface allowed the user to search by:

suffix of each question identifies its version and format.

• subject theme;

Currently (summer 2002) each question can be found as an

individual file after being exported individually. Future • question type;

versions will also enable optional export of concatenated • level (i.e. introductory, intermediate, advanced).

collections of questions in the various formats. The search results were displayed indicating the search

parameters and links to both the question with the question

description and keywords and the question metadata.

FIGURE I

REVISED MAIN MENU

International Conference on Engineering Education August 18–21, 2002, Manchester, U.K.

4
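
The search, trolley and checkout steps described above can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the project's Access implementation: the `Question` and `QuestionSet` classes, the sample identifiers, and in particular the `identifier_style.format` file-naming scheme are hypothetical, since the paper says only that a suffix identifies each question's version and format.

```python
from dataclasses import dataclass, field
from typing import Iterable, List, Optional

EXPORT_FORMATS = ("rtf", "html", "qml", "xml")  # RTF, HTML, QML, XML (QTI)
EXPORT_STYLES = ("tutor", "student")


@dataclass(frozen=True)
class Question:
    identifier: str
    theme: str
    item_type: str
    level: str


def search(bank: Iterable[Question],
           theme: Optional[str] = None,
           item_type: Optional[str] = None,
           level: Optional[str] = None) -> List[Question]:
    """Return the questions that match every criterion that was supplied."""
    return [
        q for q in bank
        if (theme is None or q.theme == theme)
        and (item_type is None or q.item_type == item_type)
        and (level is None or q.level == level)
    ]


@dataclass
class QuestionSet:
    """The 'question trolley': a named, editable selection of questions."""
    name: str
    questions: List[Question] = field(default_factory=list)

    def add(self, question: Question) -> None:
        if question not in self.questions:  # ignore duplicate selections
            self.questions.append(question)

    def remove(self, question: Question) -> None:
        self.questions.remove(question)

    def export_filenames(self, styles: Iterable[str], fmt: str) -> List[str]:
        """List one file name per question and style (hypothetical naming)."""
        styles = list(styles)
        if fmt not in EXPORT_FORMATS:
            raise ValueError(f"unknown export format: {fmt}")
        for style in styles:
            if style not in EXPORT_STYLES:
                raise ValueError(f"unknown export style: {style}")
        return [f"{q.identifier}_{style}.{fmt}"
                for q in self.questions for style in styles]


# Invented sample data, used only to exercise the sketch.
bank = [
    Question("CT001", "Circuit Theory", "Multiple Choice", "Introductory"),
    Question("CT002", "Circuit Theory", "Numeric", "Intermediate"),
    Question("SP001", "Signal Processing", "Multiple Choice", "Introductory"),
]

trolley = QuestionSet("week-1 practice")
for hit in search(bank, item_type="Multiple Choice", level="Introductory"):
    trolley.add(hit)

print(trolley.export_filenames(["tutor", "student"], "rtf"))
```

Keeping the selection separate from the export step mirrors the interface described in the text: a saved set can be reopened, edited, and exported again in a different style or format.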

There were also options to select questions and then add them to the question trolley and also to search for additional questions. The question trolley interface was similar to the search results interface but it allowed the user to remove questions if necessary and it also provided an option to "save the question collection" and to "proceed to the question collection checkout". The "question collection checkout" interface was designed to allow the lecturer to first select the export style (tutor, student or both) and then the export format (RTF, HTML, QML, or XML (QTI)).

USABILITY TESTING

Three different methods of usability testing were used, all of which may be classified as "discount usability engineering" approaches [13]:
• Scenarios: where the test user follows a previously planned path;
• Simplified thinking aloud: where between three and five users are used to identify the majority of usability problems by “thinking aloud” as they work through the system;
• Heuristic evaluation: the test user (who in this case requires some experience with HCI principles) compares the interface to a small set of heuristics such as ten basic usability principles.

The majority of the test users were lecturers who were asked to follow scenarios and/or the simplified thinking aloud method. Those who had experience of HCI methods (whether a lecturer or not) were initially asked to complete a heuristic evaluation in order to take advantage of their expertise.

Evaluation

The project is taking an integrative approach to evaluation [14] and plans to review and revise the current implementation based on actual use. However, in the earliest stages the focus was to evaluate usability. The first prototype was tested by three academics from UMIST and Manchester Metropolitan University; a further six evaluations were carried out at Loughborough University using the three methods of discount usability engineering detailed above. Feedback was collated and the most obvious usability problems were fixed before the second prototype was tested at the partner institutions by a further twelve subjects.

The feedback was then collated again. A questionnaire following Nielsen’s Severity Ratings procedure [15] was sent to three project members (two academics and one learning technologist) indicating where the problems existed, their nature, and any actions that had so far been taken to overcome them. These individuals were asked to rate the usability problems for severity against a 0 to 4 rating scale where 0 = I don't agree that this is a usability problem at all and 4 = usability catastrophe: imperative to fix this before product can be released. The mean of the set of ratings from the three evaluators was used to identify the most critical usability problems, which were then fixed, and the third prototype was produced.

Prototype three was given an in-depth heuristic evaluation with an HCI expert. Again problems were identified and the prototype modified once more. The fourth prototype was tested using scenarios and the simplified thinking aloud methods within the team, which resulted in the fifth prototype, the version which has been released for general usage.

Issues Identified

The evaluation process identified a number of issues when users accessed the database.
• Users were unsure what was meant generally by the term metadata;
• Users did not always understand the purpose of specific metadata items (e.g. question level);
• Inconsistent naming conventions within the retrieval process created confusion;
• Users found different stages of the process indistinct;
• Users were sometimes presented with more information than they needed.

Explaining Metadata

When browsing or after searching the testbank, users were given an option to view question details before selecting and saving. A key aspect of the database is the inclusion of metadata associated with each question. The usability trials showed that although users understand the concept of providing structured information describing the form and contents of the question database, the majority of academics are not yet familiar with the term metadata. Consequently an additional information icon was added next to metadata buttons, which pops up an explanation as the mouse passes over the icon. The button display was changed from 'metadata' to 'View question metadata?' as shown in figure III. A similar issue was identified associated with the metadata contents. Information buttons were added to the specific metadata items to provide explanations of terms used. See figure II below.

FIGURE II
INFORMATION BUTTONS ADDED TO METADATA SCREENS
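
The severity-rating step described under Evaluation (three evaluators, a 0 to 4 scale, and the mean used to rank problems) amounts to a short calculation. The sketch below assumes a simple mapping from problem description to the list of ratings; the function name `rank_by_severity` and the ratings shown are invented for illustration and are not the project's data.

```python
from statistics import mean
from typing import Dict, List, Tuple


def rank_by_severity(ratings: Dict[str, List[int]]) -> List[Tuple[str, float]]:
    """Return (problem, mean rating) pairs, most severe first.

    Each rating must lie on the 0 to 4 severity scale.
    """
    for problem, scores in ratings.items():
        if not scores:
            raise ValueError(f"no ratings supplied for: {problem}")
        if any(not 0 <= score <= 4 for score in scores):
            raise ValueError(f"rating outside the 0 to 4 scale for: {problem}")
    averaged = [(problem, mean(scores)) for problem, scores in ratings.items()]
    return sorted(averaged, key=lambda pair: pair[1], reverse=True)


# Made-up ratings from three evaluators, for illustration only.
ratings = {
    "term 'metadata' not understood": [3, 4, 3],
    "breadcrumb trail naming inconsistent": [2, 3, 2],
    "too much information displayed": [1, 2, 1],
}

ranked = rank_by_severity(ratings)
for problem, severity in ranked:
    print(f"{severity:.2f}  {problem}")
```

Averaging across evaluators smooths out individual judgement, which is the reason the procedure asks for several independent ratings rather than relying on one.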

What do you call question collections?

There was originally some ambiguity in the naming conventions used to describe question collections. The term proved confusing, perhaps because it was alliterative (c’s and q’s sounding the same), long-winded, and it appeared difficult to recall. The term was simplified to question set.

Keeping track of progress

Although a breadcrumb trail had been included to assist users in keeping track of their progress, there were inconsistencies in the naming of processes on screen and on the breadcrumb trail, nor was it clear where along the trail a user was currently situated. The trail was changed to ensure that it matched terms used elsewhere for search and retrieval. A highlight was added to indicate to users their current progress through the trail. The screens displayed after a search and when a user was viewing the question trolley were very similar. Screens were numbered to reinforce the feedback on progress and an orange question trolley icon was added to the screen as shown in figure III.

FIGURE III
TROLLEY SCREEN SHOWING BREADCRUMB TRAIL, PROGRESS NUMBER AND TROLLEY ICON

Users found the process of exporting questions and saving a question set confusing. A dialogue box was added to prompt the user to save the question set before they proceeded to export.

CONCLUSIONS

Subsequent testing via the scenario and simplified thinking aloud methods suggests that the usability problems originally found have been solved without creating additional problems. Most problems identified in each prototype were found by the first five test users, testifying to the power of these methods in identifying usability problems easily overlooked by users involved in the database specification. It shows how, even with an "easy to use" database, testing finds unexpected user problems.

Unfortunately there is not yet any similar common methodology for the collaborative creation of database contents. For e3an the objective of achieving a high level of voluntary involvement among question authors was equally important to creating a useful testbank [16]. The time needed to manually process the first batch of questions far exceeded our original estimates, and authors variously showed imagination in the variety of problems they created for us. However, we have now incorporated our learning from that experience into our briefing sessions, a detailed guidance document, and a validation tool. We continue to use a word processor and template, and are actively working to share our learning with others in the HE community.

ACKNOWLEDGEMENT

We would like to acknowledge the hard work of our colleagues in Southampton and our partner institutions; our initial group of authors, and all those who contributed to the evaluation of early versions of the database.

REFERENCES

All URLs last accessed July 2002

[1] e3an. Additional project details at http://www.ecs.soton.ac.uk/e3an

[2] Smythe C, Shepherd E, Brewer L and Lay S “IMS Question & Test Interoperability: ASI Information Model”, Final Specification, Version 1.2, IMS, February 2002 http://www.imsproject.org/question/

[3] Questionmark Computing http://www.questionmark.com

[4] Dublin Core specification http://dublincore.org/

[5] Ariadne Educational Metadata http://ariadne.unil.ch/Metadata/

[6] IEEE LOM specification http://ltsc.ieee.org/doc/

[7] Wellington, S J, White S and Davis, H C “Populating the Testbank: Experiences within the Electrical and Electronic Engineering Curriculum” Proc. 3rd Annual CAA Conference 2001.

[8] Quality Assurance Agency for Higher Education, “Subject Benchmark Statement: Engineering” 2000 http://www.qaa.ac.uk/crntwork/benchmark/engineering.pdf

[9] QAA for Higher Education “Handbook for Academic Review” 2000 http://www.qaa.ac.uk

[10] QAA for Higher Education “Code of practice for the assurance of academic quality and standards in higher education. Section 6: Assessment of students” 2000 http://www.qaa.ac.uk

[11] Zakrzewski S and Bull J. “The mass implementation and evaluation of computer-based assessments” Assessment & Evaluation in Higher Education, Vol. 23.2, 1998, pp. 141-152.

[12] McKenna, C. and Bull, J. “Designing effective objective test questions: an introductory workshop”. Proc. 3rd Annual CAA Conference 1999, pp. 253-257.

[13] Nielsen, J. “Guerrilla HCI: Using Discount Usability Engineering to Penetrate the Intimidation Barrier” Designing Web Usability: The Practice of Simplicity edited by Jakob Nielsen and Robert L. Mack, published by John Wiley & Sons, New York, NY, 1994. http://www.useit.com/papers/guerilla_hci.html

[14] Draper S.W., Brown M.I., Henderson F.P. and McAteer E., “Integrative evaluation: An emerging role for classroom studies of CAL” Computers & Education vol. 26,3 1996, pp. 17-32.

[15] Nielsen, J. (1995), Severity Ratings for Usability Problems, http://useit.com/papers/heuristic/severityrating.html

[16] White, S. and Davis, H. C. (2001) “Innovating Engineering Assessment: A strategic approach”. Engineering Education: Innovation in Teaching Learning and Assessment, Proceedings of the First Annual IEE Engineering Education Symposium. 2001.


- Online Quiz Management System: University of Engineering & Management (UEM)Document12 pagesOnline Quiz Management System: University of Engineering & Management (UEM)Tanay PaulNo ratings yet

- Issues and Strategies of E-LearningDocument9 pagesIssues and Strategies of E-LearningSunway University100% (2)

- Text Mining in Big Data Analytics (1) (1) - 1Document32 pagesText Mining in Big Data Analytics (1) (1) - 1azim mominNo ratings yet

- Ieee Research Paper On Mobile Adhoc NetworksDocument8 pagesIeee Research Paper On Mobile Adhoc Networksafeaxdhwl100% (1)

- Cover PageDocument8 pagesCover Page43FYCM II Sujal NeveNo ratings yet

- Software ProcessDocument10 pagesSoftware Processapi-3714165No ratings yet

- East West Institute of Technology: Department of Computer Science and EngineeringDocument78 pagesEast West Institute of Technology: Department of Computer Science and Engineeringdarshan.vvbmaNo ratings yet

- Online ExaminationDocument21 pagesOnline Examinationravan rajNo ratings yet

- Kbs Resecrch DevelopmentDocument22 pagesKbs Resecrch DevelopmentNamata Racheal SsempijjaNo ratings yet

- 'Chapter One 1.1 Background of The StudyDocument48 pages'Chapter One 1.1 Background of The StudyjethroNo ratings yet

- Research Paper On Data Mining IeeeDocument7 pagesResearch Paper On Data Mining Ieeesrxzjavkg100% (1)

- International School of Management and Technology Gairigaun, Tinkune, Kathmandu NepalDocument5 pagesInternational School of Management and Technology Gairigaun, Tinkune, Kathmandu NepalAman PokharelNo ratings yet

- Journalnx TextDocument3 pagesJournalnx TextJournalNX - a Multidisciplinary Peer Reviewed JournalNo ratings yet

- CO COMP9321 1 2024 Term1 T1 Online Standard KensingtonDocument11 pagesCO COMP9321 1 2024 Term1 T1 Online Standard Kensingtondavid.alukkalNo ratings yet

- CNE Micro ProjectDocument22 pagesCNE Micro ProjectAshish MavaniNo ratings yet

- DownloadDocument9 pagesDownloadaniketkumbhar657No ratings yet

- Dbms Lab Manual PDFDocument61 pagesDbms Lab Manual PDFPujit Gangadhar0% (1)

- Ntu Eee Thesis FormatDocument7 pagesNtu Eee Thesis FormatPayForAPaperAtlanta100% (2)

- A Systematic Literature Review On The Use of Deep Learning in Software Engineering ResearchDocument59 pagesA Systematic Literature Review On The Use of Deep Learning in Software Engineering Researchsuchitro mandalNo ratings yet

- Data Structures and Algorithms in Java: Third EditionDocument14 pagesData Structures and Algorithms in Java: Third EditionAmit SinghNo ratings yet

- Mirpur University of Science and Technology: Deparment Software EngineeringDocument11 pagesMirpur University of Science and Technology: Deparment Software Engineeringali100% (1)

- Syllabus: NT2799 Network Systems Administration Capstone ProjectDocument37 pagesSyllabus: NT2799 Network Systems Administration Capstone ProjectAlexander E WhitingNo ratings yet

- A Design For Evidence-Based Software Architecture ResearchDocument7 pagesA Design For Evidence-Based Software Architecture ResearchsanaNo ratings yet

- Teaching Network Storage Technology-Assessment Outcomes and DirectionsDocument5 pagesTeaching Network Storage Technology-Assessment Outcomes and DirectionsHerton FotsingNo ratings yet

- Synopsis RohitDocument30 pagesSynopsis RohitRaj Kundalwal0% (1)

- 1 - Unit 2 - Assignment Brief 2Document3 pages1 - Unit 2 - Assignment Brief 2Cục MuốiNo ratings yet

- A Random Generator of Resource-Constrained Multi-PDocument20 pagesA Random Generator of Resource-Constrained Multi-PKartik JainNo ratings yet

- Dbms Case Report Suryam 2Document14 pagesDbms Case Report Suryam 2The Trading FreakNo ratings yet

- A Comprehensive Project For CS2: Combining Key Data Structures and Algorithms Into An Integrated Web Browser and Search EngineDocument6 pagesA Comprehensive Project For CS2: Combining Key Data Structures and Algorithms Into An Integrated Web Browser and Search EngineG. NIHARINo ratings yet

- Social TopicDocument22 pagesSocial TopicGodwin OmaNo ratings yet

- Search Based Software Engineering: Techniques, Taxonomy, TutorialDocument55 pagesSearch Based Software Engineering: Techniques, Taxonomy, TutorialAlhassan AdamuNo ratings yet

- Search Based Software Engineering: Techniques, Taxonomy, TutorialDocument55 pagesSearch Based Software Engineering: Techniques, Taxonomy, TutorialAlhassan AdamuNo ratings yet

- A Methodology For The Selection of Requirements enDocument27 pagesA Methodology For The Selection of Requirements enBeckham ChaileNo ratings yet

- CYB NetworkEssentials 04 LessonPlan UnderstandingEthernetDocument6 pagesCYB NetworkEssentials 04 LessonPlan UnderstandingEthernetperezmanny0614No ratings yet

- Wa0004.Document12 pagesWa0004.Shaheer RizwanNo ratings yet

- 02 Networking Assignment 2 BriefDocument4 pages02 Networking Assignment 2 Briefpdtlong16No ratings yet

- Aai Atc 2022Document5 pagesAai Atc 2022rudraNo ratings yet

- Hs8151 Communicative English SyllabusDocument2 pagesHs8151 Communicative English SyllabusVignesh KNo ratings yet

- Conclusions and RecommendationsDocument7 pagesConclusions and RecommendationsRiohan NatividadNo ratings yet

- Thesis Statement For Toy Story 3Document6 pagesThesis Statement For Toy Story 3jilllyonstulsa100% (2)

- Assurance and Consulting ServicesDocument3 pagesAssurance and Consulting ServicesMarieJoiaNo ratings yet

- Conferring: Sherrie Schrimpf WSRA Conference February, 2011Document14 pagesConferring: Sherrie Schrimpf WSRA Conference February, 2011pennell30No ratings yet

- Narrative-Report-on-Brigada Kick-Off 22Document3 pagesNarrative-Report-on-Brigada Kick-Off 22Ucel CruzNo ratings yet

- Mathematics HelpDocument75 pagesMathematics HelpPankajNo ratings yet

- Unit01 Introduction 1 STDocument22 pagesUnit01 Introduction 1 STPhùng Bá NgọcNo ratings yet

- Hrmis FormDocument5 pagesHrmis FormMuhammad Nasir Rao40% (5)

- Applying For CEng-a Personal Story PDFDocument4 pagesApplying For CEng-a Personal Story PDFchong pak limNo ratings yet

- Theoretical Foundations in NursingDocument11 pagesTheoretical Foundations in NursingDennar Yen Gustilo Magracia IINo ratings yet

- Ea Formative Report - Sara Medin - Lesson Observations 2 and 3Document6 pagesEa Formative Report - Sara Medin - Lesson Observations 2 and 3api-404589966No ratings yet

- Simulia: Special Edition: Simulation Gets PersonalDocument28 pagesSimulia: Special Edition: Simulation Gets PersonalLobo LopezNo ratings yet

- Exam Grade08 2011 Maths AsDocument4 pagesExam Grade08 2011 Maths Asalemu yadessaNo ratings yet

- Personal History Form 1 0Document9 pagesPersonal History Form 1 0Lessu MalayNo ratings yet

- Teaching Intonation - The Theories Behind Intonation. PDFDocument7 pagesTeaching Intonation - The Theories Behind Intonation. PDFHumberto Cordova Panchana100% (3)

- User's Behavior in Social NetworksDocument2 pagesUser's Behavior in Social NetworksKarolis SimanaviciusNo ratings yet

- Orbit TrainingDocument39 pagesOrbit TrainingReece AllisonNo ratings yet

- Full Report - The Public Sector Amidst The COVID-19 Pandemic - A Case Study On The Adoption of Alternative Working ArrangementsDocument44 pagesFull Report - The Public Sector Amidst The COVID-19 Pandemic - A Case Study On The Adoption of Alternative Working ArrangementsCOE PSPNo ratings yet

- Kra4 Objective 12 For QualityefficiencyDocument5 pagesKra4 Objective 12 For QualityefficiencyBryan SmileNo ratings yet

- National Education Policy: Rohit SharmaDocument2 pagesNational Education Policy: Rohit SharmaAparannha RoyNo ratings yet

- Integrated Marketing Communication Director in NYC Resume Marly MillerDocument2 pagesIntegrated Marketing Communication Director in NYC Resume Marly MillerMarlyMillerNo ratings yet

- Manufacturing at A GlanceDocument6 pagesManufacturing at A GlanceMariamVTNo ratings yet

- Civic Education SS2 First Term .Docx (Reviewed)Document30 pagesCivic Education SS2 First Term .Docx (Reviewed)Max DiebeyNo ratings yet

- Aesthetic Object and Technical ObjectDocument11 pagesAesthetic Object and Technical ObjectAshley WoodwardNo ratings yet

- Department of Education: Republic of The PhilippinesDocument2 pagesDepartment of Education: Republic of The PhilippinesJelica AlvarezNo ratings yet

- CA Intermediate Paper-7BDocument202 pagesCA Intermediate Paper-7BAnand_Agrawal19No ratings yet