RDBMS Unit-V

Uploaded by vinoth kumar
ST. ANN’S COLLEGE OF ARTS AND SCIENCE, TINDIVANAM

RELATIONAL DATABASE MANAGEMENT SYSTEM

UNIT - V

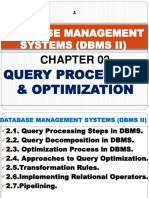

Query Processing

Query processing refers to the entire set of activities involved in answering a query: translating it into low-level instructions, optimizing it to save resources, estimating the cost of evaluating it, and extracting the data from the database.

Goal: To find an efficient query execution plan for a given SQL query that minimizes the cost considerably, especially time.

Cost Factors: Disk accesses (which typically consume time) and read/write operations (which typically need resources such as memory/RAM).

One type of DBMS is the Relational Database Management System (RDBMS), in which data is stored in the form of rows and columns (in other words, in tables) that have intuitive associations with each other. Users (both applications and humans) are free to select, insert, update and delete these rows and columns, as long as they do not violate the constraints specified when the relational tables were defined. Suppose you want the list of all employees who have a salary of more than 10,000:

SELECT emp_name
FROM employee
WHERE salary > 10000;

The problem is that the DBMS cannot execute this statement in this form. SQL (Structured Query Language) is a high-level language: it makes it easy for users to query data based on their needs and bridges the communication gap between humans and the DBMS, which does not understand human language. However, the underlying execution engine of the DBMS does not execute SQL queries directly either. For the system to understand and execute a query, the query must first be converted into a low-level language.

The SQL query goes through a processing unit that converts it into a low-level language via relational algebra. Since relational algebra queries are harder to write than SQL queries, the DBMS expects users to write only SQL queries; it then processes each query internally before evaluating it.

DEPARTMENT OF COMPUTER SCIENCE SEMESTER: IV


As shown in the figure above, query processing can be divided into compile-time and run-time phases. The compile-time phase includes:


1. Parsing and Translation (Query Compilation)

2. Query Optimization

3. Evaluation (code generation)

In the runtime phase, the database engine is primarily responsible for interpreting and executing the generated query plan with physical operators and delivering the query output.

Note that as soon as any of the above stages encounters an error, it simply throws the error and returns without going any further. Warnings are not fatal, so they do not stop processing.

Parsing and Translation

The first step in query processing is parsing and translation. A submitted query undergoes lexical, syntactic, and semantic analysis. First, the query is broken down into tokens, and white space and comments are removed (lexical analysis). Next, the query is checked for correctness in terms of both syntax and meaning. The query processor first checks whether the rules of SQL grammar have been followed (syntactic analysis).

Finally, the query processor checks whether the query is meaningful: for example, whether the table(s) mentioned in the query exist in the database, and whether the column(s) referenced actually exist in those tables (semantic analysis).

Once these checks pass, the tokens are converted into relational expressions, graphs, and trees, which are easier for the subsequent stages of query processing to work with.

Let's take the same query as an example and see how the flow works.

Query:

SELECT emp_name
FROM employee
WHERE salary > 10000;

The above query would be divided into the following tokens: SELECT, emp_name, FROM, employee, WHERE, salary, >, 10000.
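The lexical-analysis step can be sketched as follows. This is a minimal illustration, assuming a small hand-written tokenizer built on a regular expression; real DBMS lexers handle far more (string literals, quoted identifiers, and so on). It skips white space and `--` comments and returns the remaining tokens.

```python
import re

# One alternative per token class. White space and "--" comments are matched
# so they can be skipped; only the named group "tok" produces a token.
TOKEN_PATTERN = re.compile(
    r"\s+"                      # white space (skipped)
    r"|--[^\n]*"                # single-line comment (skipped)
    r"|(?P<tok>>=|<=|<>|[<>=]"  # comparison operators, longest first
    r"|\d+"                     # integer literals
    r"|[A-Za-z_][A-Za-z0-9_]*"  # keywords and identifiers
    r"|[,;*()])"                # punctuation
)


def tokenize(query: str) -> list[str]:
    """Split an SQL string into tokens, dropping white space and comments."""
    tokens = []
    pos = 0
    while pos < len(query):
        match = TOKEN_PATTERN.match(query, pos)
        if match is None:
            raise SyntaxError(f"unexpected character {query[pos]!r} at {pos}")
        if match.group("tok") is not None:
            tokens.append(match.group("tok"))
        pos = match.end()
    return tokens


query = """SELECT emp_name   -- the column we want
FROM employee
WHERE salary>10000;"""
print(tokenize(query))
# ['SELECT', 'emp_name', 'FROM', 'employee', 'WHERE', 'salary', '>', '10000', ';']
```

Besides the tokens listed above, this sketch also emits the statement terminator `;` as a token.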

The tokens (and hence the query) are then validated as follows:

The name of the queried table (employee) is looked up in the data dictionary.

The columns mentioned in the tokens (emp_name and salary) are checked for existence.

The values being compared must have compatible types (salary and the value 10000 should have the same data type).
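A sketch of these semantic checks, assuming a hypothetical data dictionary held in a Python dict (the `emp_id` column and the simplified INT/VARCHAR type inference are illustrative assumptions, not part of the original example):

```python
# Hypothetical data dictionary: table name -> {column name: column type}.
DATA_DICTIONARY = {
    "employee": {"emp_id": "INT", "emp_name": "VARCHAR", "salary": "INT"},
}


def literal_type(value: str) -> str:
    """Infer a (very simplified) SQL type for a literal token."""
    return "INT" if value.isdigit() else "VARCHAR"


def check_semantics(table: str, columns: list[str],
                    compared_column: str, literal: str) -> list[str]:
    """Return a list of semantic errors; an empty list means the query passes."""
    if table not in DATA_DICTIONARY:
        return [f"table {table!r} does not exist"]
    schema = DATA_DICTIONARY[table]
    errors = []
    # Every referenced column must exist in the table.
    for col in columns + [compared_column]:
        if col not in schema:
            errors.append(f"column {col!r} does not exist in {table!r}")
    # The compared column and the literal must have the same type.
    if compared_column in schema and schema[compared_column] != literal_type(literal):
        errors.append(f"type mismatch: {compared_column} is {schema[compared_column]}")
    return errors


print(check_semantics("employee", ["emp_name"], "salary", "10000"))  # []
print(check_semantics("employee", ["emp_nam"], "salary", "abc"))     # two errors
```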

The next step is to translate the generated set of tokens into a relational algebra query, which is easier for the optimizer to handle in later stages:

$$\pi_{\text{emp\_name}}\left(\sigma_{\text{salary} > 10000}(\text{employee})\right)$$

Relational graphs and trees can also be generated, but for the sake of simplicity, let's keep them out of scope for now.
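To make the expression concrete, here is a minimal sketch that evaluates it over an in-memory relation, assuming each tuple is a Python dict; the sample rows are made up for illustration. `select` plays the role of σ and `project` the role of π (which, in relational algebra, also removes duplicate tuples).

```python
# A tiny in-memory "employee" relation; the rows are made-up sample data.
employee = [
    {"emp_name": "Anu", "salary": 12000},
    {"emp_name": "Bala", "salary": 8000},
    {"emp_name": "Chitra", "salary": 15000},
]


def select(relation, predicate):
    """sigma: keep only the tuples that satisfy the predicate."""
    return [row for row in relation if predicate(row)]


def project(relation, attributes):
    """pi: keep only the named attributes, removing duplicate tuples."""
    result = []
    for row in relation:
        projected = {a: row[a] for a in attributes}
        if projected not in result:
            result.append(projected)
    return result


# pi_emp_name( sigma_salary>10000 (employee) )
answer = project(select(employee, lambda r: r["salary"] > 10000), ["emp_name"])
print(answer)  # [{'emp_name': 'Anu'}, {'emp_name': 'Chitra'}]
```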

Query Evaluation

Once the query processor has the relational forms described above, the next step is to apply certain rules and algorithms to generate other, more efficient equivalent representations. These help in constructing query evaluation plans. For example, if a relational graph was constructed, there could be multiple paths from source to destination, and a query execution plan is generated for each of these paths.


As you can see in the possible graphs above, one way is to project first and then select (on the right); another is to select first and then project (on the left). If projection is done first, it must keep the salary column as well, because the selection still needs it. The sample query is kept simple for easier comprehension, but with joins and views many more such paths (evaluation plans) open up. An evaluation plan may also include annotations specifying the algorithm(s) to be used for each operation, for example whether to scan the whole table or use an index. A relational algebra operation annotated with such instructions is called an evaluation primitive. Evaluation primitives are essential because they define the sequence of operations to be performed for a given plan.
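The following sketch shows how two differently annotated plans for the same expression return the same answer but do different amounts of work. It assumes a sorted list of (salary, row position) pairs as a stand-in for a real B+-tree index, and counts only the tuples each plan touches (a real index lookup also pays for traversing index nodes).

```python
import bisect

# Sample relation as (emp_name, salary) tuples; the data is illustrative.
employee = [("Anu", 12000), ("Bala", 8000), ("Chitra", 15000), ("Devi", 9500)]


def plan_linear_scan(rows, threshold):
    """Annotation 'linear scan': test every tuple for sigma, then project."""
    examined = 0
    names = []
    for name, salary in rows:
        examined += 1
        if salary > threshold:
            names.append(name)
    return names, examined


# Stand-in for an index on salary: (salary, position) pairs kept sorted.
salary_index = sorted((salary, pos) for pos, (_, salary) in enumerate(employee))


def plan_index_scan(rows, index, threshold):
    """Annotation 'index scan': jump to the first salary > threshold, then project."""
    start = bisect.bisect_right(index, (threshold, len(rows)))
    matches = index[start:]
    # Re-sort by row position so the output order matches the table order.
    names = [rows[pos][0] for _, pos in sorted(matches, key=lambda m: m[1])]
    return names, len(matches)


print(plan_linear_scan(employee, 10000))                # (['Anu', 'Chitra'], 4)
print(plan_index_scan(employee, salary_index, 10000))   # (['Anu', 'Chitra'], 2)
```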

Query Optimization

In the next step, the DBMS picks the most efficient evaluation plan based on the cost of each plan. The aim is to minimize the query evaluation time. The optimizer also evaluates whether to use the indexes present on the table and the columns being used. It also determines the best order in which to execute subqueries, so that only the best plan is executed.


Simply put, there are multiple evaluation plans for executing any query. Choosing the one that costs the least is called query optimization. Some of the factors the optimizer weighs when calculating the cost of a query evaluation plan are:

CPU time

Number of tuples to be scanned

Disk access time

Number of operations
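Cost-based plan selection can be sketched as follows, assuming a deliberately simplified cost model in which cost is the estimated number of disk block accesses: a full scan reads every block once, while a secondary-index plan pays the index height plus, in the worst case, one block access per matching tuple. The statistics, formulas and plan names are illustrative assumptions, not any particular optimizer's.

```python
from dataclasses import dataclass


@dataclass
class TableStats:
    num_tuples: int      # tuples in the relation
    num_blocks: int      # disk blocks holding the relation
    selectivity: float   # estimated fraction of tuples satisfying the predicate
    index_height: int    # levels to traverse in the secondary index


def full_scan_cost(s: TableStats) -> float:
    # Read every block of the table once.
    return s.num_blocks


def index_scan_cost(s: TableStats) -> float:
    # Traverse the index, then (worst case) one block access per matching tuple.
    return s.index_height + s.selectivity * s.num_tuples


def choose_plan(s: TableStats) -> str:
    """Return the name of the plan with the lowest estimated cost."""
    costs = {"full table scan": full_scan_cost(s),
             "index scan on salary": index_scan_cost(s)}
    return min(costs, key=costs.get)


# Few matching tuples: the index wins (3 + 100 = 103 < 500 blocks).
print(choose_plan(TableStats(10_000, 500, 0.01, 3)))  # index scan on salary
# Many matching tuples: scanning the whole table is cheaper (3 + 4000 > 500).
print(choose_plan(TableStats(10_000, 500, 0.40, 3)))  # full table scan
```

The point of the sketch is that no single plan is always best: the optimizer's choice depends on statistics such as the estimated selectivity.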

Conclusion

Once the query evaluation plan is selected, the system evaluates the generated low-level query and delivers the output.

Even though the query goes through several processes before it is finally executed, these processes take very little time compared with the time it would take to execute an unoptimized and unvalidated query. Read more on indexes to see how your queries would perform if you don't add relevant indexes. To summarise, query processing involves two phases:

Compile time

o Parsing and Translation: break the query into tokens and check its correctness.

o Query Optimisation: evaluate multiple query execution plans and pick the best one.

o Query Generation: generate low-level, DB-executable code.

Runtime (execute/evaluate the generated query)


Transaction Concept

A transaction is a single logical unit of work that accesses and possibly modifies the contents of a database. Transactions access data using read and write operations.

In order to maintain consistency in a database before and after a transaction, certain properties are followed. These are called the ACID properties.

Atomicity:

This means that either the entire transaction takes place at once or it doesn't happen at all. There is no midway, i.e., transactions do not occur partially. Each transaction is considered as one unit and either runs to completion or is not executed at all.

It involves the following two operations:

o Abort: If a transaction aborts, the changes it made to the database are not visible.

o Commit: If a transaction commits, the changes it made are visible.

Atomicity is also known as the ‘All or nothing rule’.


Consider the following transaction T, consisting of T1 and T2, which transfers 100 from account X to account Y (initially X = 500 and Y = 200):

T1: read(X); X := X - 100; write(X)

T2: read(Y); Y := Y + 100; write(Y)

If the transaction fails after the completion of T1 but before the completion of T2 (say, after write(X) but before write(Y)), the amount has been deducted from X but not added to Y. This results in an inconsistent database state. Therefore, the transaction must be executed in its entirety to ensure the correctness of the database state.
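Atomicity can be demonstrated with Python's built-in `sqlite3` module. In this sketch the `account` table and the `fail_midway` flag are hypothetical; the connection's context manager commits if the block succeeds and rolls back if an exception escapes it, so a failure between T1 and T2 leaves no partial update behind.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE account (name TEXT PRIMARY KEY, balance INTEGER)")
conn.executemany("INSERT INTO account VALUES (?, ?)", [("X", 500), ("Y", 200)])
conn.commit()


def transfer(conn, amount, fail_midway=False):
    """Transfer `amount` from X to Y as a single transaction (T1 then T2)."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            conn.execute(
                "UPDATE account SET balance = balance - ? WHERE name = 'X'", (amount,))  # T1
            if fail_midway:
                raise RuntimeError("simulated crash after write(X)")
            conn.execute(
                "UPDATE account SET balance = balance + ? WHERE name = 'Y'", (amount,))  # T2
    except RuntimeError:
        pass  # the transaction was rolled back; nothing was applied


def balances(conn):
    return dict(conn.execute("SELECT name, balance FROM account ORDER BY name"))


transfer(conn, 100, fail_midway=True)
print(balances(conn))  # {'X': 500, 'Y': 200}: the partial update was undone
transfer(conn, 100)
print(balances(conn))  # {'X': 400, 'Y': 300}: both steps applied together
```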

Consistency:

This means that integrity constraints must be maintained so that the database is consistent before and after the transaction. It refers to the correctness of a database. Referring to the example above, the total amount before and after the transaction must be maintained:

Total before T occurs = 500 + 200 = 700.

Total after T occurs = 400 + 300 = 700.

Therefore, the database is consistent. Inconsistency occurs if T1 completes but T2 fails, leaving T incomplete.

Isolation:

This property ensures that multiple transactions can occur concurrently without leading to an inconsistent database state. Transactions occur independently without interference. Changes made by a particular transaction are not visible to any other transaction until those changes have been written to memory or committed. This property ensures that the concurrent execution of transactions results in a state equivalent to the state that would be achieved if they had been executed serially in some order.

Let X = 500, Y = 500.

Consider two transactions T and T''. T multiplies X by 100 and subtracts 50 from Y (read(X), X := X * 100, write(X), read(Y), Y := Y - 50, write(Y)); T'' reads X and Y and computes their sum (read(X), read(Y), Z := X + Y, write(Z)).

Suppose T has been executed up to read(Y) and then T'' starts. Because of this interleaving, T'' reads the updated value of X but the old value of Y, so the sum it computes,

T'': X + Y = 50,000 + 500 = 50,500,

is not consistent with the sum at the end of transaction T:

T: X + Y = 50,000 + 450 = 50,450.

This results in database inconsistency, a discrepancy of 50 units. Hence, transactions must take place in isolation, and changes should become visible to other transactions only after they have been committed.
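The example above can be replayed with a small simulation. This sketch models each transaction as a Python generator that performs one step per `next()` call, so a schedule is just the order in which the steps are run; the function names and schedules are illustrative.

```python
def transaction_t(db):
    """T: read(X); X := X * 100; write(X); read(Y); Y := Y - 50; write(Y)."""
    x = db["X"]
    yield "read(X)"
    db["X"] = x * 100
    yield "write(X)"
    y = db["Y"]
    yield "read(Y)"
    db["Y"] = y - 50
    yield "write(Y)"


def transaction_t2(db, result):
    """T'': read(X); read(Y); Z := X + Y."""
    x = db["X"]
    yield "read(X)"
    y = db["Y"]
    yield "read(Y)"
    result["Z"] = x + y
    yield "compute Z"


def run(schedule):
    """Run one step of the named transaction for each entry in `schedule`.

    Returns (Z computed by T'', X + Y in the database at the end).
    """
    db = {"X": 500, "Y": 500}
    result = {}
    txns = {"T": transaction_t(db), "T''": transaction_t2(db, result)}
    for name in schedule:
        next(txns[name])
    return result["Z"], db["X"] + db["Y"]


SERIAL = ["T"] * 4 + ["T''"] * 3
INTERLEAVED = ["T"] * 3 + ["T''"] * 3 + ["T"]  # T'' starts right after T's read(Y)

print(run(SERIAL))       # (50450, 50450)
print(run(INTERLEAVED))  # (50500, 50450): T'' saw a mix of new X and old Y
```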

Durability:

This property ensures that once a transaction has completed execution, the updates and modifications it made to the database are stored in and written to disk, and they persist even if a system failure occurs. These updates become permanent and are stored in non-volatile memory. The effects of the transaction, thus, are never lost.


Some important points:

| Property | Responsibility for maintaining the property |
|---|---|
| Atomicity | Transaction Manager |
| Consistency | Application programmer |
| Isolation | Concurrency Control Manager |
| Durability | Recovery Manager |

The ACID properties, taken together, provide a mechanism to ensure the correctness and consistency of a database: each transaction is a group of operations that acts as a single unit, produces consistent results, acts in isolation from other operations, and makes updates that are durably stored.

Concurrency Control

Concurrency control is the management procedure required for controlling the concurrent execution of operations on a database.

But before learning about concurrency control, we should know about concurrent execution.

Concurrent Execution

o In a multi-user system, multiple users can access and use the same database at the same time; this is known as concurrent execution of the database. It means that the same database is accessed simultaneously by different users on a multi-user system.

o When working with database transactions, multiple users often need to use the database to perform different operations; in that case, concurrent execution of the database is performed.

o The simultaneous execution should be done in an interleaved manner such that no operation affects the other executing operations, thus maintaining the consistency of the database. However, concurrent execution of transaction operations gives rise to several challenging problems that need to be solved.

Problems with Concurrent Execution

In a database transaction, the two main operations are READ and WRITE. These two operations must be managed during the concurrent execution of transactions, because if their interleaving is not controlled, the data may become inconsistent. The following problems can occur with the concurrent execution of operations:

Lost Update Problem (W-W Conflict)

The problem occurs when two different database transactions perform read/write operations on the same database items in an interleaved manner (i.e., concurrent execution), making the values of the items incorrect and hence the database inconsistent.

For example:

Consider two transactions TX and TY performed on the same account A, where the balance of account A is $300:


o At time t1, transaction TX reads the value of account A, i.e., $300 (only read).

o At time t2, transaction TX deducts $50 from account A, which becomes $250 (only deducted, not yet updated/written).

o Meanwhile, at time t3, transaction TY reads the value of account A, which is still $300 because TX has not yet written its update.

o At time t4, transaction TY adds $100 to account A, which becomes $400 (only added, not yet updated/written).

o At time t6, transaction TX writes the value of account A, which is updated to $250, as TY has not yet written its value.

o Similarly, at time t7, transaction TY writes the value of account A computed at time t4, i.e., $400. This means the value written by TX is lost, i.e., the $250 update is lost.

Hence the data becomes incorrect, and the database becomes inconsistent.
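The schedule above can be replayed step by step. This is a minimal sketch in which each transaction's local variable stands for the value it holds in its own workspace before writing; the function names are illustrative.

```python
def lost_update_schedule(balance):
    """Replay the interleaved schedule above and return the final balance of A."""
    stored = balance          # the value of A in the database
    tx_local = stored         # t1: TX reads A
    tx_local -= 50            # t2: TX deducts $50 (local copy only)
    ty_local = stored         # t3: TY reads A, still the old value
    ty_local += 100           # t4: TY adds $100 (local copy only)
    stored = tx_local         # t6: TX writes A
    stored = ty_local         # t7: TY writes A, overwriting TX's write
    return stored


def serial_schedule(balance):
    """TX runs to completion before TY starts."""
    balance -= 50   # TX
    balance += 100  # TY
    return balance


print(lost_update_schedule(300))  # 400: TX's deduction of $50 is lost
print(serial_schedule(300))       # 350: the correct result
```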


Dirty Read Problem (W-R Conflict)

The dirty read problem occurs when one transaction updates an item of the database and then fails, and before the data is rolled back, the updated item is accessed by another transaction. This is a Write-Read conflict between the two transactions.

For example:

Consider two transactions TX and TY performing read/write operations on account A, where the available balance in account A is $300:

o At time t1, transaction TX reads the value of account A, i.e., $300.

o At time t2, transaction TX adds $50 to account A, which becomes $350.

o At time t3, transaction TX writes the updated value to account A, i.e., $350.

o Then at time t4, transaction TY reads account A, which is read as $350.

o Then at time t5, transaction TX rolls back due to a server problem, and the value changes back to $300 (as initially).

o But TY has already read $350, a value that was never committed. This is a dirty read, and hence the problem is known as the Dirty Read Problem.
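A toy model of this schedule, assuming a store that keeps committed values separate from each transaction's uncommitted writes. The `Store` class and its `allow_dirty` flag are hypothetical, not a real DBMS API; they only contrast reading uncommitted data with reading committed data.

```python
class Store:
    """Toy store keeping committed values separate from uncommitted writes."""

    def __init__(self, **committed):
        self.committed = dict(committed)
        self.pending = {}  # transaction name -> {item: uncommitted value}

    def write(self, txn, item, value):
        self.pending.setdefault(txn, {})[item] = value

    def read(self, txn, item, allow_dirty=False):
        if item in self.pending.get(txn, {}):
            return self.pending[txn][item]        # a transaction sees its own writes
        if allow_dirty:
            for writes in self.pending.values():  # peek at others' uncommitted data
                if item in writes:
                    return writes[item]
        return self.committed[item]

    def commit(self, txn):
        self.committed.update(self.pending.pop(txn, {}))

    def rollback(self, txn):
        self.pending.pop(txn, None)


store = Store(A=300)
store.write("TX", "A", store.read("TX", "A") + 50)  # t1-t3: TX writes 350, uncommitted
dirty = store.read("TY", "A", allow_dirty=True)      # t4: TY reads 350 (dirty read)
clean = store.read("TY", "A")                        # t4, committed data only: 300
store.rollback("TX")                                 # t5: TX rolls back
print(dirty, clean, store.committed["A"])            # 350 300 300
```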

Unrepeatable Read Problem (R-W Conflict)

Also known as the Inconsistent Retrievals Problem, it occurs when a transaction reads two different values for the same database item.

For example:

Consider two transactions, TX and TY, performing read/write operations on account A, which has an available balance of $300:

o At time t1, transaction TX reads the value of account A, i.e., $300.

o At time t2, transaction TY reads the value of account A, i.e., $300.

o At time t3, transaction TY updates the value of account A by adding $100 to the available balance, which becomes $400.

o At time t4, transaction TY writes the updated value, i.e., $400.

o After that, at time t5, transaction TX reads the value of account A again, and it is read as $400.

o This means that within the same transaction TX, two different values of account A are read: $300 initially and, after the update made by TY, $400. This is an unrepeatable read, and hence the problem is known as the Unrepeatable Read Problem.

Thus, to maintain consistency in the database and avoid such problems in concurrent execution, management is needed, and that is where the concept of concurrency control comes into play.

Concurrency Control

Concurrency control is the mechanism required for controlling and managing the concurrent execution of database operations, thus avoiding inconsistencies in the database. To maintain the consistency of the database under concurrent execution, we have concurrency control protocols.

Concurrency Control Protocols

The concurrency control protocols ensure the atomicity, consistency, isolation, durability and serializability of the concurrent execution of database transactions. These protocols are categorized as:

o Lock Based Concurrency Control Protocol

o Time Stamp Concurrency Control Protocol

o Validation Based Concurrency Control Protocol

Lock-Based Protocol

In this type of protocol, a transaction cannot read or write data until it acquires an appropriate lock on it. There are two types of lock:

1. Shared lock:

o It is also known as a read-only lock. Under a shared lock, the data item can only be read by the transaction.

o It can be shared between transactions because a transaction holding a shared lock cannot update the data item.

2. Exclusive lock:

o Under an exclusive lock, the data item can be both read and written by the transaction.

o This lock is exclusive: multiple transactions cannot modify the same data simultaneously.
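The rules for the two lock modes can be captured in a compatibility matrix. This sketch assumes a toy lock table (the `LockTable` class is hypothetical) that simply refuses a conflicting request; a real lock manager would queue the request and also handle deadlocks.

```python
from collections import defaultdict

# May a lock of mode `requested` be granted while another transaction
# holds a lock of mode `held` on the same item? Keys are (held, requested).
COMPATIBLE = {
    ("S", "S"): True,   # many readers may share an item
    ("S", "X"): False,
    ("X", "S"): False,
    ("X", "X"): False,  # only one writer at a time
}


class LockTable:
    """Toy lock table: item -> {transaction: mode}. No queuing or deadlock handling."""

    def __init__(self):
        self.locks = defaultdict(dict)

    def request(self, txn, item, mode):
        """Grant the lock and return True, or return False if the caller must wait."""
        for holder, held in self.locks[item].items():
            if holder != txn and not COMPATIBLE[(held, mode)]:
                return False
        self.locks[item][txn] = mode
        return True

    def release(self, txn, item):
        self.locks[item].pop(txn, None)


table = LockTable()
print(table.request("T1", "A", "S"))  # True
print(table.request("T2", "A", "S"))  # True: shared locks are compatible
print(table.request("T3", "A", "X"))  # False: exclusive conflicts with shared
```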

There are four types of lock protocols available:

1. Simplistic lock protocol

It is the simplest way of locking data during a transaction. Simplistic lock-based protocols require every transaction to obtain a lock on the data before inserting, deleting or updating it, and the data item is unlocked after the transaction completes.

2. Pre-claiming Lock Protocol

o Pre-claiming lock protocols evaluate the transaction to list all the data items on which it needs locks.

o Before starting execution of the transaction, it requests the DBMS for locks on all those data items.

o If all the locks are granted, the protocol allows the transaction to begin. When the transaction completes, it releases all the locks.

o If all the locks are not granted, the transaction rolls back and waits until all the locks are granted.


3. Two-phase locking (2PL)

o The two-phase locking protocol divides the execution of a transaction into three parts.

o In the first part, when execution of the transaction starts, it seeks permission for the locks it requires.

o In the second part, the transaction acquires all the locks. The third part starts as soon as the transaction releases its first lock.

o In the third part, the transaction cannot demand any new locks; it only releases the locks it has acquired.

There are two phases of 2PL:


Growing phase: In the growing phase, new locks on data items may be acquired by the transaction, but none can be released.

Shrinking phase: In the shrinking phase, existing locks held by the transaction may be released, but no new locks can be acquired.

If lock conversion is allowed, the following can happen:

1. Upgrading a lock (from S(a) to X(a)) is allowed in the growing phase.

2. Downgrading a lock (from X(a) to S(a)) must be done in the shrinking phase.

Example:

The following shows how locking and unlocking work with 2PL.

Transaction T1:

o Growing phase: steps 1-3

o Shrinking phase: steps 5-7

o Lock point: at step 3

Transaction T2:

o Growing phase: steps 2-6

o Shrinking phase: steps 8-9

o Lock point: at step 6
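The two-phase rule can be checked mechanically. This sketch assumes a transaction's lock actions are given as (step, action, item) triples; the operations listed for T1 are hypothetical, chosen only to match the growing phase (steps 1-3) and shrinking phase (steps 5-7) stated above, with step 4 assumed to be a read or write that takes no lock. Lock conversions are not modelled.

```python
def check_2pl(operations):
    """Check that a lock/unlock sequence obeys two-phase locking.

    `operations` is a list of (step, action, item) with action "lock" or "unlock".
    Returns (is_2pl, lock_point), where lock_point is the step at which the
    last lock was acquired (None if no lock was taken).
    """
    shrinking = False
    lock_point = None
    for step, action, _item in operations:
        if action == "lock":
            if shrinking:
                return False, lock_point  # a lock acquired after a release
            lock_point = step
        elif action == "unlock":
            shrinking = True  # the first release starts the shrinking phase
    return True, lock_point


# Hypothetical lock actions for T1 (step 4 is a read/write with no lock action).
t1 = [(1, "lock", "A"), (2, "lock", "B"), (3, "lock", "C"),
      (5, "unlock", "A"), (6, "unlock", "B"), (7, "unlock", "C")]
not_2pl = [(1, "lock", "A"), (2, "unlock", "A"), (3, "lock", "B")]

print(check_2pl(t1))       # (True, 3)
print(check_2pl(not_2pl))  # (False, 1)
```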

4. Strict Two-phase locking (Strict-2PL)

o The first phase of Strict-2PL is similar to 2PL: after acquiring all the locks, the transaction continues to execute normally.

o The only difference between 2PL and Strict-2PL is that Strict-2PL does not release a lock immediately after using it.

o Strict-2PL waits until the whole transaction commits, and then releases all the locks at once.

o Therefore, Strict-2PL does not have a gradual shrinking phase of lock release.

Unlike basic 2PL, it does not suffer from cascading aborts.


Deadlock in DBMS

A deadlock is a condition in which two or more transactions wait indefinitely for one another to give up locks. Deadlock is said to be one of the most feared complications in a DBMS, because none of the involved tasks ever finishes and they remain in a waiting state forever.

For example, in the student table, transaction T1 holds a lock on some rows and needs to update some rows in the grade table. Simultaneously, transaction T2 holds locks on some rows in the grade table and needs to update the rows in the student table held by transaction T1.

Now the main problem arises: transaction T1 is waiting for T2 to release its lock, and similarly, transaction T2 is waiting for T1 to release its lock. All activity comes to a halt and remains at a standstill until the DBMS detects the deadlock and aborts one of the transactions.

Deadlock Avoidance

o When a database is stuck in a deadlock state, it is better to avoid the deadlock in the first place than to abort or restart transactions, which wastes time and resources.

o A deadlock avoidance mechanism is used to detect any deadlock situation in advance. A method like the "wait-for graph" is used for detecting deadlock situations, but this method is suitable only for smaller databases. For larger databases, the deadlock prevention method can be used.

Deadlock Detection

In a database, when a transaction waits indefinitely to obtain a lock, the DBMS should detect whether the transaction is involved in a deadlock. The lock manager maintains a wait-for graph to detect deadlock cycles in the database.


Wait-for Graph

o This is a suitable method for deadlock detection. In this method, a graph is created based on the transactions and their locks. If the created graph has a cycle (closed loop), there is a deadlock.

o The system maintains the wait-for graph for every transaction that is waiting for some data held by others, and keeps checking whether there is any cycle in the graph.

For the scenario above, the wait-for graph has an edge T1 → T2 (T1 is waiting for a lock held by T2) and an edge T2 → T1 (T2 is waiting for a lock held by T1). These two edges form a cycle, so the transactions are deadlocked.
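Checking the wait-for graph for a cycle is a standard graph problem. A minimal sketch, assuming the graph is stored as a dict mapping each transaction to the set of transactions it is waiting for, and using depth-first search: reaching a transaction that is still on the current search path means there is a cycle, i.e. a deadlock.

```python
def has_cycle(wait_for):
    """Detect a cycle in a wait-for graph {txn: set of txns it waits for}.

    Depth-first search with three colours: WHITE (unvisited), GREY (on the
    current DFS path) and BLACK (finished). Meeting a GREY node again means a cycle.
    """
    WHITE, GREY, BLACK = 0, 1, 2
    colour = {t: WHITE for t in wait_for}
    for targets in wait_for.values():
        for t in targets:
            colour.setdefault(t, WHITE)

    def visit(t):
        colour[t] = GREY
        for u in wait_for.get(t, ()):
            if colour[u] == GREY:
                return True
            if colour[u] == WHITE and visit(u):
                return True
        colour[t] = BLACK
        return False

    return any(colour[t] == WHITE and visit(t) for t in list(colour))


# The student/grade scenario: T1 waits for T2 and T2 waits for T1.
print(has_cycle({"T1": {"T2"}, "T2": {"T1"}}))  # True: deadlock
print(has_cycle({"T1": {"T2"}, "T2": set()}))   # False: T1 simply waits
```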


Deadlock Prevention

o The deadlock prevention method is suitable for large databases. If resources are allocated in such a way that deadlock never occurs, deadlock can be prevented.

o The DBMS analyzes the operations of a transaction to determine whether they can create a deadlock situation. If they can, the DBMS does not allow that transaction to be executed.

Wait-Die scheme

In this scheme, if a transaction requests a resource that is already held with a conflicting lock by another transaction, the DBMS simply checks the timestamps of both transactions and allows the older transaction to wait until the resource is available.

Let's assume there are two transactions Ti and Tj, and let TS(T) be the timestamp of any transaction T. If Tj holds a lock on some data item and Ti requests that item, the DBMS performs the following actions:

1. Check if TS(Ti) < TS(Tj): if Ti is the older transaction and Tj holds the resource, then Ti is allowed to wait until the data item is available. That is, if an older transaction is waiting for a resource locked by a younger transaction, the older transaction is allowed to wait until the resource is available.

2. Check if TS(Ti) > TS(Tj): if Ti is the younger transaction and the older transaction Tj holds the resource, then Ti is killed (it dies) and is restarted later with a random delay but with the same timestamp.

Wound-Wait scheme

o In the wound-wait scheme, if an older transaction requests a resource held by a younger transaction, the older transaction forces the younger one to abort (it wounds it) and release the resource. After a small delay, the younger transaction is restarted, but with the same timestamp.

o If an older transaction holds a resource requested by a younger transaction, the younger transaction is asked to wait until the older one releases it.
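The two schemes differ only in who waits and who is aborted. A minimal sketch of the decision each scheme makes, assuming a smaller timestamp means an older transaction (the function names and return strings are illustrative):

```python
def wait_die(ts_requester, ts_holder):
    """Wait-Die: an older requester waits; a younger requester dies (is aborted)."""
    return "requester waits" if ts_requester < ts_holder else "requester dies"


def wound_wait(ts_requester, ts_holder):
    """Wound-Wait: an older requester wounds (aborts) the holder; a younger one waits."""
    return "holder is wounded" if ts_requester < ts_holder else "requester waits"


# Smaller timestamp = older transaction. Ti (TS=5) is older than Tj (TS=10).
print(wait_die(5, 10), "|", wound_wait(5, 10))  # requester waits | holder is wounded
print(wait_die(10, 5), "|", wound_wait(10, 5))  # requester dies | requester waits
```

In both schemes only the younger transaction is ever aborted, and because it is restarted with its original timestamp, it eventually becomes the oldest transaction and is no longer the one that gets killed.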


RECOVERY SYSTEM

DATABASE RECOVERY

As in any computer system, failures can happen in a database system, yet the data stored in the database should be available whenever it is needed. Database recovery means recovering the data when it is deleted, hacked or damaged accidentally. Atomicity is a must: a transaction should either be reflected in the database permanently in its entirety or not affect the database at all. Therefore, database recovery and database recovery techniques are essential in a DBMS. The database recovery techniques in DBMS are given below.

Recovery is the process of restoring a database to the correct state in the event of a failure.

It ensures that the database is reliable and remains in a consistent state in case of a failure.

Database recovery can be classified into two parts:

1. Rolling forward applies redo records to the corresponding data blocks.

2. Rolling back applies rollback segments to the data files. Rollback information is stored in transaction tables.
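The two parts can be sketched together, assuming a simplified log in which each update record stores the transaction, the item, its old value and its new value, plus a COMMIT marker per committed transaction (the record layout is a hypothetical simplification). Rolling forward redoes every logged update; rolling back then undoes, in reverse order, the updates of transactions that never committed.

```python
# Hypothetical log: (transaction, item, old_value, new_value) update records,
# plus ("COMMIT", transaction) markers.
log = [
    ("T1", "X", 500, 400),
    ("T1", "Y", 200, 300),
    ("COMMIT", "T1"),
    ("T2", "X", 400, 0),  # T2 never committed before the crash
]


def recover(data, log):
    """Roll forward (redo all updates), then roll back uncommitted transactions."""
    committed = {rec[1] for rec in log if rec[0] == "COMMIT"}
    updates = [rec for rec in log if rec[0] != "COMMIT"]
    # Rolling forward: reapply every logged update in log order.
    for _txn, item, _old, new in updates:
        data[item] = new
    # Rolling back: undo updates of uncommitted transactions, newest first.
    for txn, item, old, _new in reversed(updates):
        if txn not in committed:
            data[item] = old
    return data


# The disk state after the crash may contain any mix of old and new values.
print(recover({"X": 0, "Y": 200}, log))  # {'X': 400, 'Y': 300}
```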

Crash recovery:

A DBMS is a highly complex system with many transactions being executed every second. Its robustness depends on its complex architecture and on its underlying hardware and system software. If it fails or crashes in the middle of transactions, the system is expected to follow some algorithm or technique to recover the lost data.

Database recovery in dbms and its techniques

Classification of failure:

To determine where a problem has occurred, we generalize failures into the following categories:

Transaction failure

System crash

Disk failure


Types of Failure

1. Transaction failure: A transaction has to abort when it fails to execute or when it reaches a point from which it cannot go any further. This is called transaction failure, and only a few transactions or processes are affected. The reasons for transaction failure are:

o Logical errors: The transaction cannot complete because of an error in its code or an internal error condition.

o System errors: The database system itself terminates an active transaction because the DBMS is not able to execute it, or because it has to stop due to some system condition. For example, in case of deadlock or resource unavailability, the system aborts an active transaction.

2. System crash: Problems external to the system may cause the system to stop abruptly and crash. For example, interruptions in the power supply may cause the failure of the underlying hardware or software. Examples also include operating system errors.

3. Disk failure: In the early days of technology evolution, it was a common problem that hard-disk drives or storage drives failed frequently. Disk failures include the formation of bad sectors, unreachability of the disk, a disk crash, or any other failure that destroys all or part of the disk storage.

Storage structure:

Storage structures are classified as explained below:

1. Volatile storage: As the name suggests, volatile storage cannot survive system crashes. Volatile storage devices are placed very close to the CPU; usually they are embedded on the chipset itself. Main memory and cache memory are examples of volatile storage. They are fast but can store only a small amount of information.

2. Non-volatile storage: These memories are made to survive system crashes. They have a huge data storage capacity but are slower to access. Examples include hard disks, magnetic tapes, flash memory, and non-volatile (battery-backed) RAM.

Recovery and Atomicity:

When a system crashes, it may have several transactions being executed and various files opened for them to modify data items. Transactions are made of various operations, which are atomic in nature. But according to the ACID properties of a database, atomicity of transactions as a whole must be maintained, that is, either all the operations are executed or none.
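Atomicity can be observed with a small, runnable experiment. The sketch below uses Python's built-in sqlite3 module; the account table, the transfer function and the insufficient-funds check are hypothetical names made up for illustration. A connection used as a context manager (with conn:) commits when the block finishes normally and rolls back when an exception escapes, so a failed transfer leaves no partial update behind.

```python
import sqlite3

def transfer(conn, src, dst, amount):
    """Move `amount` from account `src` to `dst` as one transaction (hypothetical example)."""
    # `with conn:` commits on success and rolls back if an exception escapes.
    with conn:
        conn.execute("UPDATE account SET balance = balance - ? WHERE id = ?", (amount, src))
        (balance,) = conn.execute(
            "SELECT balance FROM account WHERE id = ?", (src,)
        ).fetchone()
        if balance < 0:
            # Simulated logical error: the first UPDATE must not survive.
            raise ValueError("insufficient funds")
        conn.execute("UPDATE account SET balance = balance + ? WHERE id = ?", (amount, dst))

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE account (id INTEGER PRIMARY KEY, balance INTEGER)")
conn.executemany("INSERT INTO account VALUES (?, ?)", [(1, 100), (2, 50)])
conn.commit()

transfer(conn, 1, 2, 30)        # both updates are applied
try:
    transfer(conn, 1, 2, 500)   # aborted: neither update is applied
except ValueError:
    pass

print(conn.execute("SELECT id, balance FROM account ORDER BY id").fetchall())
# [(1, 70), (2, 80)]
```

The second call subtracts 500 from account 1 before the check fails, yet the final balances show no trace of it: either all operations of a transaction take effect or none do.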

When a database management system recovers from a crash, it should maintain the following:

It should check the states of all the transactions that were being executed.

A transaction may be in the middle of some operation; the database management system must ensure the atomicity of the transaction in this case.


It should check whether the transaction can be completed now or needs to be rolled back.

No transaction should be allowed to leave the database management system in an inconsistent state.

There are two kinds of techniques that can help a database management system recover while maintaining the atomicity of a transaction:

Maintaining the logs of each transaction, and writing them onto some stable storage before actually modifying the database.

Maintaining shadow paging, where the changes are done on a volatile memory, and later the actual database is updated.
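Shadow paging can be sketched in a few lines. This is a toy model under simplifying assumptions, not how any particular DBMS implements it: the list storage stands in for disk pages, a page is never overwritten in place, and a transaction works on a private copy of the page table. Committing is a single pointer swap, so an abort (or a crash) before that point leaves the old, shadow page table and therefore the old database untouched. The class ShadowPagedDB and its methods are hypothetical.

```python
class ShadowPagedDB:
    """Hypothetical toy model of shadow paging with copy-on-write pages."""

    def __init__(self, pages):
        self.storage = list(pages)                  # "disk": page slots, never overwritten
        self.page_table = list(range(len(pages)))   # committed (shadow) page table
        self.current = None                         # page table of the active transaction

    def begin(self):
        # Copy the page table, not the pages themselves.
        self.current = list(self.page_table)

    def read(self, page_no):
        table = self.current if self.current is not None else self.page_table
        return self.storage[table[page_no]]

    def write(self, page_no, data):
        # Copy-on-write: store the new version in a fresh slot and
        # redirect only the transaction's page table to it.
        self.storage.append(data)
        self.current[page_no] = len(self.storage) - 1

    def commit(self):
        # The single atomic step: the current table becomes the shadow table.
        self.page_table = self.current
        self.current = None

    def abort(self):
        # Nothing to undo: the shadow table still points at the old pages.
        self.current = None


db = ShadowPagedDB(["A=100", "B=50"])
db.begin()
db.write(0, "A=70")
db.abort()
print(db.read(0))   # A=100

db.begin()
db.write(0, "A=70")
db.commit()
print(db.read(0))   # A=70
```

Aborted transactions leave unused page copies behind in storage; a real system would have to reclaim them (garbage collection), which this sketch omits.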

Log-based recovery (Manual Recovery):

A log is a sequence of records that keeps track of the actions performed by a transaction. It is necessary that the logs are written before the actual modification and stored on a stable storage medium, which is failsafe. Log-based recovery works as follows:

The log file is kept on a stable storage medium.

When a transaction enters the system and starts execution, it writes a log record about it, <Tn, Start>. When the transaction finishes, it writes <Tn, Commit>.
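The write-ahead rule described above can be sketched as follows. The record shapes are assumptions: <Tn, Start> and <Tn, Commit> follow the notation used later in this unit, while the update record <Tn, X, V1, V2> (transaction Tn changed item X from V1 to V2) is a common textbook convention, not something stated in these notes. The class LoggedDB is hypothetical, and plain Python lists and dictionaries stand in for stable storage and the database.

```python
from dataclasses import dataclass, field

@dataclass
class LoggedDB:
    """Hypothetical toy database that logs every action before performing it."""
    data: dict                                # stands in for the database on disk
    log: list = field(default_factory=list)   # stands in for the log on stable storage

    def start(self, tn):
        self.log.append((tn, "Start"))        # <Tn, Start>

    def write(self, tn, item, new_value):
        old_value = self.data.get(item)
        # Write-ahead rule: the log record is stored BEFORE the data changes.
        self.log.append((tn, item, old_value, new_value))   # <Tn, X, V1, V2>
        self.data[item] = new_value

    def commit(self, tn):
        self.log.append((tn, "Commit"))       # <Tn, Commit>

db = LoggedDB({"X": 100})
db.start("T1")
db.write("T1", "X", 70)
db.commit("T1")
print(db.log)
# [('T1', 'Start'), ('T1', 'X', 100, 70), ('T1', 'Commit')]
```

Keeping both the old and the new value in each update record is what later lets the recovery system either undo or redo the change.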

Recovery with concurrent transactions (Automated Recovery):

When more than one transaction is being executed in parallel, the logs are interleaved. At the time of recovery, it would become hard for the recovery system to backtrack through all the logs and then start recovering. To ease this situation, most modern DBMSs use the concept of 'checkpoints'.

Automated recovery is of two types:

Deferred Update Recovery

Immediate Update Recovery

The database can be modified using two approaches:

Deferred database modification: All logs are written to stable storage, and the database is updated only when a transaction commits.

Immediate database modification: Each log record is followed by the actual database modification. That is, the database is modified immediately after every operation.
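The difference between the two approaches shows up when a crash happens before commit. In the sketch below (hypothetical function names; a crash is simulated by returning early), deferred modification has not yet touched the database, so recovery only ever needs to redo committed work. Immediate modification has already changed the database, so its log records must also carry the old value for undo. Storing only the new value in deferred update records is a common textbook simplification, assumed here.

```python
def run_deferred(db, log, tn, writes, crash_before_commit=False):
    """Deferred modification: log every write, touch the database only after commit."""
    log.append((tn, "Start"))
    for item, value in writes:
        log.append((tn, item, value))          # new value only: used for redo
    if crash_before_commit:
        return                                 # database untouched, nothing to undo
    log.append((tn, "Commit"))
    for item, value in writes:                 # apply the writes after the commit record
        db[item] = value

def run_immediate(db, log, tn, writes, crash_after=None):
    """Immediate modification: each write is logged and then applied at once."""
    log.append((tn, "Start"))
    for i, (item, value) in enumerate(writes):
        log.append((tn, item, db.get(item), value))   # old value kept for undo
        db[item] = value
        if crash_after == i:
            return                             # simulated crash after write number i
    log.append((tn, "Commit"))

db1, log1 = {"X": 100, "Y": 50}, []
run_deferred(db1, log1, "T1", [("X", 70), ("Y", 80)], crash_before_commit=True)
print(db1)   # {'X': 100, 'Y': 50}

db2, log2 = {"X": 100, "Y": 50}, []
run_immediate(db2, log2, "T1", [("X", 70), ("Y", 80)], crash_after=0)
print(db2)   # {'X': 70, 'Y': 50}  (uncommitted change already in the database)
```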


Recovery with Concurrent Transactions


Checkpoint

Keeping and maintaining logs in real time and in a real environment may fill up all the memory space available in the system. As time passes, the log file may grow too big to be handled at all. A checkpoint is a mechanism where all the previous logs are removed from the system and stored permanently on a storage disk. A checkpoint declares a point before which the DBMS was in a consistent state and all the transactions were committed.
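A checkpoint, in the simple form described above, can be sketched as follows. The tuple record shapes, the ("Checkpoint",) marker and the function names are assumptions for illustration. The sketch refuses to checkpoint while any transaction is still active, which matches the statement that all transactions before a checkpoint were committed; many production systems instead use fuzzy checkpoints that do not wait for active transactions, which this sketch does not attempt.

```python
def active_transactions(log):
    """Transactions with a Start record but no Commit or Abort record yet."""
    started, finished = set(), set()
    for rec in log:
        if len(rec) == 2 and rec[1] == "Start":
            started.add(rec[0])
        elif len(rec) == 2 and rec[1] in ("Commit", "Abort"):
            finished.add(rec[0])
    return started - finished

def take_checkpoint(log, archive):
    """Move all earlier records to permanent storage and leave a checkpoint marker."""
    if active_transactions(log):
        raise RuntimeError("cannot checkpoint while transactions are active")
    archive.extend(log)          # stands in for storing the old log on disk
    log.clear()
    log.append(("Checkpoint",))

log = [("T1", "Start"), ("T1", "X", 100, 70), ("T1", "Commit")]
archive = []
take_checkpoint(log, archive)
print(log)            # [('Checkpoint',)]
print(len(archive))   # 3
```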

Recovery

When a system with concurrent transactions crashes and recovers, it behaves in the following manner:

The recovery system reads the logs backwards from the end to the last checkpoint.

It maintains two lists, an undo-list and a redo-list.

If the recovery system sees a log with <Tn, Start> and <Tn, Commit> or just <Tn,

Commit>, it puts the transaction in the redo-list.

If the recovery system sees a log with <Tn, Start> but no commit or abort log record is found, it puts the transaction in the undo-list.

All the transactions in the undo-list are then undone and their logs are removed. For all the transactions in the redo-list, their previous logs are removed, and then they are redone before their logs are saved.
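The recovery steps above can be sketched as a single function. This is a simplified model, not a production algorithm. The log is a Python list of tuples whose shapes are assumptions: (Tn, "Start"), (Tn, "Commit"), (Tn, "Abort"), (Tn, X, old_value, new_value) for an update, and ("Checkpoint",) for the checkpoint marker. Aborted transactions are assumed to have been rolled back already, so they go on neither list, and removing the log records afterwards is left out. Undo runs backwards through the log using old values; redo runs forwards using new values. The function name recover is hypothetical.

```python
def recover(db, log):
    """Bring `db` back to a consistent state using the log (simplified sketch)."""
    # 1. Read the log backwards from the end to the last checkpoint.
    tail = []
    for rec in reversed(log):
        if rec == ("Checkpoint",):
            break
        tail.append(rec)
    tail.reverse()                                   # chronological order again

    # 2. Build the redo-list and the undo-list.
    def tns(kind):
        return {r[0] for r in tail if len(r) == 2 and r[1] == kind}
    redo_list = tns("Commit")                        # <Tn, Commit> seen, with or without <Tn, Start>
    undo_list = tns("Start") - redo_list - tns("Abort")   # started, but no commit or abort

    updates = [r for r in tail if len(r) == 4]       # (Tn, X, old, new)
    # 3. Undo: restore old values, newest change first.
    for tn, item, old, _new in reversed(updates):
        if tn in undo_list:
            db[item] = old
    # 4. Redo: reapply new values, oldest change first.
    for tn, item, _old, new in updates:
        if tn in redo_list:
            db[item] = new
    return redo_list, undo_list

log = [
    ("Checkpoint",),
    ("T1", "Start"), ("T1", "X", 100, 70),
    ("T2", "Start"), ("T2", "Y", 50, 0),
    ("T1", "Commit"),
]
db = {"X": 100, "Y": 0}      # crash state: T1's write was lost, T2's reached the disk
redo_list, undo_list = recover(db, log)
print(db)                    # {'X': 70, 'Y': 50}
print(redo_list, undo_list)  # {'T1'} {'T2'}
```

Because the scan stops at the last checkpoint, records archived before it are never examined, which is exactly the saving the checkpoint is meant to provide.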
