Ai Unit-5 Notes

Uploaded by 4119 RAHUL S

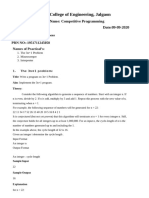

CS8691 ARTIFICIAL INTELLIGENCE

UNIT V

AI APPLICATIONS

AI applications - Language Models - Information Retrieval - Information Extraction - Natural Language Processing - Machine Translation - Speech Recognition - Robot - Hardware - Perception - Planning - Moving

1. AI APPLICATIONS

Artificial Intelligence has a wide range of applications. Some of the applications are:

1. Healthcare

2. Gaming

3. Finance

4. Data Security

5. Social Media

6. Travel and Transport

7. Automotive Industry

8. Robotics

9. Entertainment

10. Agriculture

11. E-commerce

12. Education

AI in Healthcare

o In recent years, AI has become increasingly valuable to the healthcare industry and is expected to have a significant impact on it.

o The healthcare industry is applying AI to make diagnoses better and faster than humans can. AI can help doctors with diagnoses and can flag when a patient's condition is worsening, so that medical help reaches the patient before hospitalization is needed.

AI in Gaming

o AI can be used in gaming. AI systems can play strategic games such as chess, where the machine must consider a large number of possible positions.

AI in Finance

o AI and the finance industry are well matched. The finance industry is bringing automation, chatbots, adaptive intelligence, algorithmic trading, and machine learning into its financial processes.

Department of CSE, R.M.D. Engineering College.

AI in Data Security

o Data security is crucial for every company, and cyber-attacks are growing rapidly in the digital world. AI can be used to make data safer and more secure. Tools such as AEG bot and the AI2 Platform are used to detect software bugs and cyber-attacks more effectively.

AI in Social Media

o Social media sites such as Facebook, Twitter, and Snapchat hold billions of user profiles, which must be stored and managed efficiently. AI can organize and manage massive amounts of data, and can analyze it to identify the latest trends, hashtags, and the requirements of different users.

AI in Travel & Transport

o AI is in high demand in the travel industry. AI can handle various travel-related tasks, from making travel arrangements to suggesting hotels, flights, and the best routes to customers. Travel companies are using AI-powered chatbots that interact with customers in a human-like way for better and faster responses.

AI in Automotive Industry

o Some automotive companies are using AI to provide virtual assistants to their users for better performance. For example, Tesla has introduced TeslaBot, an intelligent virtual assistant.

o Various companies are currently working on self-driving cars, which aim to make journeys safer and more secure.

AI in Robotics

o Artificial Intelligence plays a remarkable role in robotics. General-purpose robots are usually programmed to perform a repetitive task, but with the help of AI we can create intelligent robots that perform tasks based on their own experience without being pre-programmed.

o Humanoid robots are the best examples of AI in robotics. Recently, intelligent humanoid robots named Erica and Sophia have been developed that can talk and behave like humans.

AI in Entertainment

o We already use AI-based applications in daily life through entertainment services such as Netflix and Amazon. With the help of ML/AI algorithms, these services recommend programs and shows.

AI in Agriculture

o Agriculture requires various resources, labor, money, and time for the best results. Nowadays agriculture is becoming digital, and AI is emerging in this field. Agriculture applies AI in the form of agricultural robotics, soil and crop monitoring, and predictive analysis. AI in agriculture can be very helpful for farmers.

AI in E-commerce

o AI gives the e-commerce industry a competitive edge and is increasingly in demand in e-commerce businesses. AI helps shoppers discover associated products in a recommended size, color, or even brand.

AI in education

o AI can automate grading so that the tutor has more time to teach. An AI chatbot can communicate with students as a teaching assistant.

o In the future, AI could work as a personal virtual tutor for students, easily accessible at any time and from any place.

2. LANGUAGE MODELS

Introduction

A language model is a probability distribution over sequences of words.

A key feature of language modeling is that it is generative: the model aims to predict the next word given the preceding sequence of words.

Language models determine word probabilities by analyzing text data.

Language models are a crucial component of Natural Language Processing (NLP).

They power many popular NLP applications, such as Google Assistant, Siri, and Amazon’s Alexa.

Uses of language models

1. Speech Recognition

Predicts right words according to the sentence.

Example : “I ate a cherry” is a more likely sentence than “Eye eight uh Jerry”.

2. OCR & Handwriting recognition

Predicts a word that is not easily readable in the given image.

3. Translation

Chooses the most appropriate of several candidate words for the same source word during translation.

4. Context sensitive spelling correction

Predicts the correct spelling according to the context.

Example: “There are problems with this sentence” is a more likely sentence than “Their are

problems wit this sentence”

N-gram Character Models

An n-gram character model is a statistical language model used to predict the nth character from the preceding n-1 characters.

A written text is composed of characters: letters, digits, punctuation, and spaces. A sequence of written symbols of length n is called an “n-gram”.

The n-gram character model is one of the simplest language models: it is a probability distribution over sequences of characters.

Special cases of n-grams include the “unigram” (1-gram), the “bigram” (2-gram), and the “trigram” (3-gram).

Working of n-gram Character Models

An N-gram language model predicts the probability of a given N-gram within any sequence of words

in the language.

We compute this probability in two ways:

Apply the chain rule of probability

Markov Model

The Chain Rule of Probability

The chain rule computes the joint probability of a sequence from the conditional probability of each word given the words before it.

The chain rule of probability is:

$$P(w_1 \dots w_n) = P(w_1) \, P(w_2 \mid w_1) \, P(w_3 \mid w_1 w_2) \, P(w_4 \mid w_1 w_2 w_3) \cdots P(w_n \mid w_1 \dots w_{n-1})$$

Example :

P(He is going to school) = P(He) · P(is | He) · P(going | He is) · P(to | He is going) · P(school | He is going to)

Drawback:

Computing the exact probability of a word given a long sequence of preceding words is difficult (sometimes impossible), because most long histories never occur in the training data.

Markov Models

The assumption that the probability of a word depends only on the previous word(s) is called the Markov assumption.

In a Markov chain the probability of character ci depends only on the immediately preceding

characters, not on any other characters.

Under a first-order Markov assumption, for every position k:

$$P(w_k \mid w_1 \dots w_{k-1}) \approx P(w_k \mid w_{k-1})$$

Here, we approximate the history (the context) of the word $w_k$ by looking only at the last word of the context.

Example :

P(He is going to school) ≈ P(He) · P(is | He) · P(going | is) · P(to | going) · P(school | to)
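The bigram approximation above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the three-sentence corpus is made up, and a hypothetical `<s>` start marker is used so that the first word is also conditioned on something (the notes write the first factor simply as P(He)).

```python
from collections import Counter

def train_bigram(sentences):
    """Count bigrams and their left-hand contexts, using <s> as a start marker."""
    contexts, bigrams = Counter(), Counter()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split()
        contexts.update(tokens[:-1])              # every token that has a successor
        bigrams.update(zip(tokens, tokens[1:]))   # adjacent word pairs
    return contexts, bigrams

def sentence_probability(sentence, contexts, bigrams):
    """P(w1..wn) under the first-order Markov assumption: product of P(wk | wk-1)."""
    tokens = ["<s>"] + sentence.split()
    prob = 1.0
    for prev, word in zip(tokens, tokens[1:]):
        if contexts[prev] == 0:
            return 0.0                            # unseen context: no estimate available
        prob *= bigrams[(prev, word)] / contexts[prev]
    return prob

# Toy corpus, invented for this example only.
corpus = ["He is going to school", "He is going to work", "She is at school"]
contexts, bigrams = train_bigram(corpus)
p = sentence_probability("He is going to school", contexts, bigrams)
print(p)
```

With this corpus the factors are 2/3, 1, 2/3, 1 and 1/2, so the product is 2/9. Any sentence containing a bigram that never occurs in the corpus gets probability zero, which is the problem smoothing addresses.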

Markov Model Order and n-grams

Markov models are the class of probabilistic models that assume that we can predict the probability

of some future unit without looking too far into the past.

A unigram model is a zeroth-order Markov model (it does not look into the past at all).

A bigram model is a first-order Markov model (it looks one token into the past).

A trigram model is a second-order Markov model (it looks two tokens into the past).

In general, an N-gram model is an (N-1)th-order Markov model.

The order of a Markov model is defined by the length of its history.

Smoothing of n-gram Character Models

The major complication of n-gram models is that the training corpus provides only an estimate of

the true probability distribution.

Example:

The character sequence “ th” is very common in English.

The character sequence “ ht” is uncommon, but that does not mean we should assign P(“ ht”) = 0.

If we did, then the text “The program issues an http request” would have an English probability of zero, which seems wrong. We have a problem in generalization.

Thus, we will adjust our language model so that sequences that have a count of zero in the training

corpus will be assigned a small nonzero probability.

The process of adjusting the probability of low-frequency counts is called smoothing.
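One simple way to do this is add-one (Laplace) smoothing, sketched below for character bigrams. The notes do not name a specific smoothing method, so treat the choice of add-one smoothing, the training text, and the function name `char_bigram_model` as assumptions made for illustration.

```python
from collections import Counter

def char_bigram_model(text, alphabet):
    """Return a function giving the add-one smoothed estimate of P(c2 | c1)."""
    contexts = Counter(text[:-1])              # characters that have a successor
    bigrams = Counter(zip(text, text[1:]))     # adjacent character pairs
    vocab_size = len(alphabet)

    def prob(c1, c2):
        # Adding 1 to every count gives unseen pairs a small nonzero probability;
        # adding vocab_size to the denominator keeps the distribution normalized.
        return (bigrams[(c1, c2)] + 1) / (contexts[c1] + vocab_size)

    return prob

# Tiny training text, invented for this example; it contains "th" but never "ht".
training_text = "the thin thief took the other thing"
alphabet = sorted(set(training_text))
prob = char_bigram_model(training_text, alphabet)

seen = prob("t", "h")      # frequent pair
unseen = prob("h", "t")    # never observed, yet still greater than zero
print(seen, unseen)
```

The unseen pair keeps a small probability, so a text containing "http" is no longer assigned probability zero just because "ht" was missing from the training corpus.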

Interpolation and Back off

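As N increases, the power (expressiveness) of an N-gram model increases, but the smoothing problem gets worse.

A general approach is to combine the results of multiple N-gram models of increasing complexity (i.e. increasing N).

A better approach is a backoff model, in which we start by estimating n-gram counts, but for any particular sequence that has a low (or zero) count, we back off to (n-1)-grams.

Linear interpolation smoothing combines the trigram, bigram, and unigram models by linear interpolation.

Interpolated Trigram Model:

$$\hat{P}(c_i \mid c_{i-2:i-1}) = \lambda_3 P(c_i \mid c_{i-2:i-1}) + \lambda_2 P(c_i \mid c_{i-1}) + \lambda_1 P(c_i), \quad \lambda_1 + \lambda_2 + \lambda_3 = 1$$

The weights λi can be fixed or can be trained.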
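A minimal sketch of an interpolated character trigram model follows. It assumes the usual form in which the estimate is a weighted mix of trigram, bigram, and unigram relative frequencies; the fixed weights (0.6, 0.3, 0.1) and the training string are arbitrary choices for illustration, not trained values.

```python
from collections import Counter

def interpolated_trigram(text, lambdas=(0.6, 0.3, 0.1)):
    """Return P(c | c_prev2 c_prev1) as a mix of trigram, bigram, and unigram estimates."""
    l3, l2, l1 = lambdas                      # weights for trigram, bigram, unigram (sum to 1)
    uni = Counter(text)
    bi = Counter(zip(text, text[1:]))
    tri = Counter(zip(text, text[1:], text[2:]))
    total = len(text)

    def ratio(num, den):
        # Relative frequency, defined as 0 when the context was never seen.
        return num / den if den else 0.0

    def prob(c_prev2, c_prev1, c):
        p3 = ratio(tri[(c_prev2, c_prev1, c)], bi[(c_prev2, c_prev1)])
        p2 = ratio(bi[(c_prev1, c)], uni[c_prev1])
        p1 = ratio(uni[c], total)
        return l3 * p3 + l2 * p2 + l1 * p1

    return prob

prob = interpolated_trigram("abracadabra")
p_seen = prob("a", "b", "r")      # trigram "abr" occurs in the text
p_unseen = prob("a", "b", "a")    # trigram "aba" never occurs
print(p_seen, p_unseen)
```

Even though "aba" never occurs, the unigram term keeps its probability above zero, which is the point of mixing in the lower-order models.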

Model Evaluation

The language model needs to be evaluated in order to decide which model to choose.

A language model can be evaluated through

Cross Validation

Perplexity

Cross Validation:

Split the corpus into a training corpus and a validation corpus.

Determine the parameters of the model from the training data.

Then evaluate the model on the validation corpus.

The evaluation can be a task-specific metric, such as measuring accuracy on language identification.

Perplexity:

Perplexity is a measure of how well a probability model predicts test data.

In the context of Natural Language Processing, perplexity is one way to evaluate language models.

Low perplexity is good and high perplexity is bad.
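As a rough illustration, perplexity can be computed from the probabilities a model assigns to each token of a test sequence. The sketch below assumes the standard definition, perplexity = P(w1...wN)^(-1/N); the probability lists are made-up numbers rather than the output of a real model.

```python
import math

def perplexity(token_probs):
    """Perplexity = P(w1..wN) ** (-1/N), computed in log space for numerical stability."""
    n = len(token_probs)
    log_prob = sum(math.log(p) for p in token_probs)
    return math.exp(-log_prob / n)

# A model that is always unsure among four equally likely choices.
uniform = perplexity([0.25, 0.25, 0.25, 0.25])

# A model that assigns high probability to each observed token.
confident = perplexity([0.9, 0.8, 0.9, 0.7])

print(uniform, confident)
```

The uniform model has perplexity 4, matching the intuition that perplexity behaves like an average branching factor, while the more confident model scores lower (better).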

Example:

We have a very small corpus from an author ‘Z’.

What is the probability that the statement “I I am not” was also written by the same author ‘Z’?

Solution

Using Bigram Model

Perplexity:

3. INFORMATION RETRIEVAL

“Information retrieval is the task of finding documents that are relevant to a user’s need for

information”

The best-known examples of information retrieval systems are search engines on the Worldwide

Web.

An information retrieval (henceforth IR) system can be characterized by:

1. A corpus of documents

Each system must decide what it wants to treat as a document: a paragraph, a page, or a multipage text.

2. Queries posed in a query language

A query specifies what the user wants to know. The query language can be just a list of words, or it can specify a phrase of words that must be adjacent; it can also contain Boolean operators or non-Boolean operators.

3. A result set

This is the subset of documents that the IR system judges to be relevant to the query.

4. A presentation of the result set

This can be as simple as a ranked list of document titles or as complex as a rotating color map of

the result set projected onto a three-dimensional space.

Earliest IR Systems:

The earliest IR systems worked on a Boolean keyword model.

Each word in the document collection is treated as a Boolean feature that is true of a document if

the word occurs in the document and false if it does not.

Suppose we try [information AND retrieval AND models AND optimization] and get an empty result set. We could try [information OR retrieval OR models OR optimization], but if that returns too many results, it is difficult to know what to try next.

Boolean expressions are unfamiliar to users who are not programmers or logicians.
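The Boolean keyword model can be sketched with an inverted index and set operations. The three documents and the helper names (`build_index`, `boolean_and`, `boolean_or`) are hypothetical, chosen only to reproduce the AND/OR behaviour described above.

```python
def build_index(docs):
    """Map each word to the set of document ids that contain it (an inverted index)."""
    index = {}
    for doc_id, text in docs.items():
        for word in set(text.lower().split()):
            index.setdefault(word, set()).add(doc_id)
    return index

def boolean_and(index, terms):
    """Documents for which every term is true."""
    sets = [index.get(t, set()) for t in terms]
    return set.intersection(*sets) if sets else set()

def boolean_or(index, terms):
    """Documents for which at least one term is true."""
    return set().union(*(index.get(t, set()) for t in terms))

# Toy collection, invented for this example.
docs = {
    1: "information retrieval models",
    2: "optimization of retrieval",
    3: "language models",
}
index = build_index(docs)
terms = ["information", "retrieval", "models", "optimization"]

and_result = boolean_and(index, terms)   # no single document contains all four words
or_result = boolean_or(index, terms)     # every document contains at least one
print(and_result, or_result)
```

The AND query returns nothing and the OR query returns the whole collection, with no ranking in between. That gap is what scoring functions are meant to fill.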

IR scoring functions

BM25 scoring function

• A scoring function takes a document and a query and returns a numeric score; the most relevant

documents have the highest scores.

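• In the BM25 function, the score is a linear weighted combination of scores for each of the words qi that make up the query:

$$BM25(d_j, q) = \sum_{i} IDF(q_i) \cdot \frac{TF(q_i, d_j) \cdot (k + 1)}{TF(q_i, d_j) + k \cdot \left(1 - b + b \cdot \frac{|d_j|}{L}\right)}$$

TF(qi, dj) is the number of times word qi appears in document dj,

|dj | is the length of document dj in words,

L is the average document length in the corpus: L = ∑i|di|/N, where N is the number of documents in the corpus.

The two parameters k and b can be tuned by cross-validation; typical values are k = 2.0 and b = 0.75.

IDF(qi) is the inverse document frequency of word qi, given by

$$IDF(q_i) = \log \frac{N - DF(q_i) + 0.5}{DF(q_i) + 0.5}$$

where DF(qi) is the number of documents that contain the word qi.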
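The following is a small sketch of BM25 scoring under the common textbook form, with term frequency TF, document frequency DF, average document length L, and the typical values k = 2.0 and b = 0.75 mentioned above. Whitespace tokenization and the four sample documents are simplifying assumptions.

```python
import math
from collections import Counter

def bm25_scores(query, docs, k=2.0, b=0.75):
    """Score every document in docs against the query with BM25."""
    tokenized = [d.lower().split() for d in docs]
    n_docs = len(tokenized)
    avg_len = sum(len(d) for d in tokenized) / n_docs        # L: average document length
    df = Counter(w for d in tokenized for w in set(d))       # DF: documents containing each word
    scores = []
    for doc in tokenized:
        tf = Counter(doc)                                    # TF: word counts in this document
        score = 0.0
        for q in query.lower().split():
            idf = math.log((n_docs - df[q] + 0.5) / (df[q] + 0.5))
            norm = tf[q] + k * (1 - b + b * len(doc) / avg_len)
            score += idf * tf[q] * (k + 1) / norm
        scores.append(score)
    return scores

# Toy corpus, invented for this example.
docs = [
    "information retrieval with ranking models",
    "cooking pasta at home",
    "a history of rome",
    "gardening tips for spring",
]
scores = bm25_scores("retrieval models", docs)
best = max(range(len(docs)), key=lambda i: scores[i])
print(scores, best)
```

Only the first document contains the query words, so it is the only one with a positive score. Note that with this IDF form a word occurring in more than half of the documents receives a negative weight.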

IR system evaluation

To determine whether an IR system is performing well, two measures are used:

Precision

Precision measures the proportion of documents in the result set that are actually relevant.

Precision P = tp/(tp + fp)

Recall

Recall measures the proportion of all the relevant documents in the collection that are in the result set.

Recall R = tp/(tp + fn)

Where tp = True Positive, fp = False Positive, fn = False Negative, tn = True Negative

Imagine that an IR system has returned a result set for a single query, for which we know which documents are and are not relevant, out of a corpus of 100 documents. The document counts in each category are given in the following table:

                 In result set    Not in result set
Relevant              30                 20
Not relevant          10                 40

In our example,

The precision is 30/(30 + 10)=0.75

The proportion of false positives in the result set is 1 −0.75=0.25

The Recall is 30/(30 + 20)=0.60

The false negative rate is 1 −0.60=0.40
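The worked numbers can be checked with a short sketch. The counts tp = 30, fp = 10, and fn = 20 are taken from the example; the function names are just illustrative.

```python
def precision(tp, fp):
    """Proportion of returned documents that are relevant."""
    return tp / (tp + fp)

def recall(tp, fn):
    """Proportion of relevant documents that were returned."""
    return tp / (tp + fn)

tp, fp, fn = 30, 10, 20     # counts from the worked example
p = precision(tp, fp)
r = recall(tp, fn)
print(p, r)
```

A system can trade one measure for the other: returning every document in the corpus gives perfect recall but poor precision.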

RANKING OF WEB PAGES

1. The Page Rank Algorithm

Page Rank was one of the two original ideas that set Google’s search apart from other Web search engines.

The idea behind the Page Rank algorithm is that a page with many in-links (links to the page) from high-quality sites should be ranked higher.

Each in-link is a vote for the quality of the linked-to page.

$$PR(p) = \frac{1 - d}{N} + d \sum_i \frac{PR(in_i)}{C(in_i)}$$

where PR(p) is the Page Rank of page p, N is the total number of pages in the corpus, in_i are the pages that link in to p, and C(in_i) is the count of the total number of out-links on page in_i. The constant d is a damping factor.
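Page Rank is usually computed iteratively: start with equal ranks and repeatedly apply the update until the values settle. The sketch below assumes the common form PR(p) = (1 - d)/N + d · Σ PR(in_i)/C(in_i) with d = 0.85 (a conventional value that the notes do not specify), a fixed number of iterations, and a made-up four-page link graph in which every page has at least one out-link.

```python
def pagerank(links, d=0.85, iterations=50):
    """links maps each page to the list of pages it links out to."""
    pages = list(links)
    n = len(pages)
    pr = {p: 1.0 / n for p in pages}          # start with equal rank everywhere
    for _ in range(iterations):
        new_pr = {}
        for p in pages:
            # Each in-linking page q passes on its rank, split over its out-links.
            incoming = sum(pr[q] / len(links[q]) for q in pages if p in links[q])
            new_pr[p] = (1 - d) / n + d * incoming
        pr = new_pr
    return pr

# Toy web graph, invented for this example.
links = {"A": ["B", "C"], "B": ["C"], "C": ["A"], "D": ["C"]}
ranks = pagerank(links)
top = max(ranks, key=ranks.get)
print(ranks, top)
```

Page C collects votes from A, B, and D and ends up with the highest rank, while D, which nobody links to, keeps only the baseline (1 - d)/N.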

2. HITS (Hyperlink-Induced Topic Search) Algorithm

The Hyperlink-Induced Topic Search algorithm, also known as “Hubs and Authorities” or HITS, is another influential link-analysis algorithm.

The algorithm uses three helper operations:

RELEVANT-PAGES fetches the pages that match the query.

EXPAND-PAGES adds in every page that links to or is linked from one of the relevant pages.

NORMALIZE divides each page’s score by the sum of the squares of all pages’ scores (separately for both the authority and hub scores).

The HITS Algorithm works as follows:

1) HITS first finds a set of pages that are relevant to the query.

2) It adds pages in the link neighbourhood of these pages: pages that link to or are linked from one of the pages in the original relevant set.

3) Each page in this set is considered an authority on the query to the degree that other pages in the relevant set point to it.

4) A page is considered a hub to the degree that it points to other authoritative pages in the relevant set.

5) More value is given to the high-quality hubs and authorities.

6) The process that updates the authority score and hub score of each page is iterated until the scores converge.
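The iteration in steps 3 to 6 can be sketched as follows. This is an illustrative version under a few assumptions: the link graph stands in for an already expanded relevant set, hub scores are updated from the freshly computed authority scores, and scores are normalized by the square root of the sum of squares (the usual Euclidean normalization) so that they stay bounded.

```python
import math

def hits(links, iterations=20):
    """links maps each page in the expanded relevant set to the pages it points to."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    hub = {p: 1.0 for p in pages}
    auth = {p: 1.0 for p in pages}
    for _ in range(iterations):
        # Authority score: sum of the hub scores of the pages pointing to p.
        auth = {p: sum(hub[q] for q in pages if p in links.get(q, ())) for p in pages}
        # Hub score: sum of the authority scores of the pages p points to.
        hub = {p: sum(auth[t] for t in links.get(p, ())) for p in pages}
        for scores in (auth, hub):
            norm = math.sqrt(sum(v * v for v in scores.values())) or 1.0
            for p in scores:
                scores[p] /= norm
    return hub, auth

# Toy link graph, invented for this example: two hub pages pointing at two authorities.
links = {"h1": ["a1", "a2"], "h2": ["a1"], "a1": [], "a2": []}
hub, auth = hits(links)
best_hub = max(hub, key=hub.get)
best_auth = max(auth, key=auth.get)
print(best_hub, best_auth)
```

Page a1 is pointed to by both hubs and becomes the top authority; h1 points to both authorities and becomes the top hub.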

4. INFORMATION EXTRACTION

Information extraction is the process of acquiring knowledge by skimming a text and looking for

occurrences of a particular class of object and for relationships among objects.

General Pipeline of the Information Extraction Process

(1) Finite-state automata for information extraction

The simplest type of information extraction system is an attribute-based extraction system, which assumes that the entire text refers to a single object and extracts attributes of that object.

One step up from attribute-based extraction systems are relational extraction systems, which deal with multiple objects and the relations among them.

A typical relational extraction system is FASTUS, which handles news stories about corporate mergers and acquisitions.

It can read the story “Bridgestone Sports Co. said Friday it has set up a joint venture in Taiwan with a local concern and a Japanese trading house to produce golf clubs to be shipped to Japan.”

From the story, the relations are extracted as follows:

e ∈ JointVentures ∧

Member(e, “Bridgestone Sports Co.”) ∧

Member(e, “a local concern”) ∧

Member(e, “a Japanese trading house”) ∧

Product(e, “golf clubs”) ∧

Date(e, “Friday”)

FASTUS consists of five stages:

Tokenization

Complex-word handling

Basic-group handling

Complex-phrase handling

Structure merging

Stage 1 : Tokenization

Segments the stream of characters into tokens (words, numbers, and punctuation).

For English, tokenization can be fairly simple: just separating characters at white space or punctuation.
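A minimal tokenizer along these lines can be written with one regular expression. This is a sketch for simple English text only; the pattern and the function name `tokenize` are illustrative choices, and it does not handle cases such as abbreviations or hyphenated words specially.

```python
import re

def tokenize(text):
    """Split text into word/number tokens and single punctuation tokens."""
    # \w+ matches a run of letters or digits; [^\w\s] matches one punctuation mark.
    return re.findall(r"\w+|[^\w\s]", text)

tokens = tokenize("Bridgestone Sports Co. said Friday it has set up a joint venture.")
print(tokens)
```

Note that "Co." is split into "Co" and ".", which is why a later stage is needed to reassemble complex words and proper names.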

Stage 2 : Complex-word handling

Handles complex words, including collocations such as “set up” and “joint venture,” as well as proper names such as “Bridgestone Sports Co.”

These are recognized by a combination of lexical entries and finite-state grammar rules.

For example, a company name might be recognized by the rule CapitalizedWord+ (“Company” | “Co” | “Inc” | “Ltd”)
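The company-name rule can be approximated with a regular expression, which is itself a finite-state pattern. The sketch below is one possible rendering of the rule, not the actual FASTUS implementation; the optional trailing period is an added assumption so that "Co." is matched in full.

```python
import re

# One or more capitalized words followed by a company suffix.
COMPANY = re.compile(r"(?:[A-Z][A-Za-z]*\s+)+(?:Company|Co|Inc|Ltd)\b\.?")

text = "Bridgestone Sports Co. said Friday it has set up a joint venture in Taiwan."
matches = COMPANY.findall(text)
print(matches)
```

Capitalized words such as "Friday" and "Taiwan" are not matched, because they are not followed by one of the listed suffixes.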

Stage 3 : Basic-group handling

Handles basic groups, meaning noun groups (NG), verb groups (VG), prepositions (PR), and conjunctions.

For the example sentence, the first basic groups are:

NG: Bridgestone Sports Co.

VG: said

NG: Friday

NG: it

VG: has set up

NG: a joint venture

PR: in

NG: Taiwan

Stage 4 : Complex-phrase handling

Combines the basic groups into complex phrases.

For example, the rule Company+ SetUp JointVenture (“with” Company+)? captures one way to describe the formation of a joint venture.

Stage 5 : Structure merging

Merges structures that were built up in the previous step.

If the next sentence says “The joint venture will start production in January,” then this step will

notice that there are two references to a joint venture, and that they should be merged into one.

(2) Probabilistic models for information extraction

When information extraction must be attempted from noisy or varied input, simple finite-state

approaches fare poorly.

The simplest probabilistic model for sequences with hidden state is the Hidden Markov model, or

HMM.

To apply HMMs to information extraction, we can either build one big HMM for all the attributes or

build a separate HMM for each attribute.

Once the HMMs have been learned, we can apply them to a text, using the Viterbi algorithm to find the most likely path through the HMM states.

The observations are the words of the text, and the hidden states are whether we are in the target,

prefix, or postfix part of the attribute template, or in the background (not part of a template).

For example, here is a brief text and the most probable (Viterbi) path for that text for two HMMs,

one trained to recognize the speaker in a talk announcement, and one trained to recognize dates.

The “-” indicates a background state:

HMMs have two big advantages over Finite State Automata for extraction.

HMMs are probabilistic, and thus tolerant to noise.

HMMs can be trained from data
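To make the Viterbi step concrete, here is a toy date-extraction HMM with three states: background ("-"), PRE (prefix), and TARGET. All numbers below are hand-picked for illustration and are not learned from data; the emission function in particular is a crude stand-in for real per-state word distributions.

```python
import math

STATES = ["-", "PRE", "TARGET"]
DAYS = {"Monday", "Tuesday", "Wednesday", "Thursday", "Friday"}

# Hypothetical start and transition probabilities (each row sums to 1).
START = {"-": 0.8, "PRE": 0.1, "TARGET": 0.1}
TRANS = {
    "-": {"-": 0.7, "PRE": 0.2, "TARGET": 0.1},
    "PRE": {"-": 0.1, "PRE": 0.1, "TARGET": 0.8},
    "TARGET": {"-": 0.6, "PRE": 0.1, "TARGET": 0.3},
}

def emit(state, word):
    """Illustrative emission scores: how well a word fits a state."""
    if state == "TARGET":
        return 0.9 if word in DAYS else 0.01
    if state == "PRE":
        return 0.9 if word == "on" else 0.01
    return 0.05 if (word in DAYS or word == "on") else 0.5

def viterbi(words):
    """Return the most likely state sequence for the words (log-space Viterbi)."""
    best = {s: math.log(START[s]) + math.log(emit(s, words[0])) for s in STATES}
    backpointers = []
    for word in words[1:]:
        new_best, pointers = {}, {}
        for s in STATES:
            # Best previous state from which to enter state s.
            prev = max(STATES, key=lambda p: best[p] + math.log(TRANS[p][s]))
            new_best[s] = best[prev] + math.log(TRANS[prev][s]) + math.log(emit(s, word))
            pointers[s] = prev
        best = new_best
        backpointers.append(pointers)
    # Follow the back-pointers from the best final state.
    state = max(STATES, key=best.get)
    path = [state]
    for pointers in reversed(backpointers):
        state = pointers[state]
        path.append(state)
    return path[::-1]

path = viterbi(["seminar", "on", "Friday"])
print(path)
```

With these numbers the most likely path labels "seminar" as background, "on" as the prefix, and "Friday" as the target date.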

(3) Conditional Random Fields (CRF) for information extraction

One issue with HMMs for the information extraction task is that they model a lot of probabilities

that we don’t really need.

An HMM is a generative model; it models the full joint probability of observations and hidden states,

and thus can be used to generate samples.

The HMM model can be used not only to parse a text and recover the speaker and date, but also to

generate a random instance of a text containing a speaker and a date.

A discriminative alternative is the conditional random field, or CRF, which models a conditional probability distribution of a set of

target variables given a set of observed variables.

Let e1:N be the observations (e.g., words in a document), and x1:N be the sequence of hidden

states (e.g., the prefix, target, and postfix states).

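A linear-chain conditional random field defines a conditional probability distribution:

$$P(x_{1:N} \mid e_{1:N}) = \alpha \, \exp\left( \sum_{i=1}^{N} F(x_{i-1}, x_i, e, i) \right)$$

where α is a normalization factor (to make sure the probabilities sum to 1), and F is a feature function, defined as a weighted sum of component feature functions f_k:

$$F(x_{i-1}, x_i, e, i) = \sum_{k} \lambda_k \, f_k(x_{i-1}, x_i, e, i)$$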

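For example, one feature function produces a value of 1 if the current word is ANDREW and the current state is SPEAKER:

$$f_1(x_{i-1}, x_i, e, i) = \begin{cases} 1 & \text{if } x_i = \text{SPEAKER and } e_i = \text{ANDREW} \\ 0 & \text{otherwise} \end{cases}$$

Features can also look at other positions in the observation sequence: a second feature is true if the current state is SPEAKER and the next word is “said.”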
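To make the role of the feature functions concrete, here is a small sketch of a linear-chain CRF that scores state sequences with two features of the kind described above and normalizes by brute force. The weights, the two-state label set, and the sample sentence are assumptions made for illustration; real CRFs learn the weights from data and normalize with dynamic programming rather than by enumeration.

```python
import math
from itertools import product

STATES = ["-", "SPEAKER"]  # hypothetical label set: background and speaker

def f1(prev, cur, words, i):
    # 1 if the current state is SPEAKER and the current word is ANDREW
    return 1.0 if cur == "SPEAKER" and words[i] == "ANDREW" else 0.0

def f2(prev, cur, words, i):
    # 1 if the current state is SPEAKER and the next word is "said"
    return 1.0 if cur == "SPEAKER" and i + 1 < len(words) and words[i + 1] == "said" else 0.0

FEATURES = [(2.0, f1), (1.5, f2)]  # (weight, feature) pairs; weights are made up

def F(prev, cur, words, i):
    """Weighted sum of the component feature functions at position i."""
    return sum(weight * f(prev, cur, words, i) for weight, f in FEATURES)

def score(states, words):
    """Unnormalized score exp(sum_i F(x_{i-1}, x_i, e, i))."""
    total, prev = 0.0, None
    for i, cur in enumerate(states):
        total += F(prev, cur, words, i)
        prev = cur
    return math.exp(total)

def conditional(states, words):
    """P(states | words), normalizing over every possible state sequence."""
    z = sum(score(seq, words) for seq in product(STATES, repeat=len(words)))
    return score(tuple(states), words) / z

words = ["ANDREW", "said", "hello"]
p_speaker = conditional(("SPEAKER", "-", "-"), words)
p_background = conditional(("-", "-", "-"), words)
print(p_speaker, p_background)
```

Labeling the first word SPEAKER fires both features, so that sequence receives a higher conditional probability than the all-background sequence.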

(4) Ontology extraction from large corpora

A different application of extraction technology is building a large knowledge base or ontology of

facts from a corpus. This is different in three ways:


First it is open-ended—we want to acquire facts about all types of domains, not just one

specific domain.

Second, with a large corpus, this task is dominated by precision, not recall—just as with

question answering on the Web.

Third, the results can be statistical aggregates gathered from multiple sources, rather than

being extracted from one specific text.

One of the most productive templates is:

NP such as NP (, NP)* (,)? ((and | or) NP)?

This template matches the texts “diseases such as rabies affect your dog” and “supports network

protocols such as DNS,” concluding that rabies is a disease and DNS is a network protocol.
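A rough sketch of how a "such as" template can be applied with a regular expression is shown below. It is a simplification under a stated assumption: each noun phrase (NP) is approximated by a single word, so "network protocols" is reduced to its head word "protocols". A real system would use a noun-phrase chunker instead.

```python
import re

# "<category> such as <member>(, <member>)* (,)? ((and|or) <member>)?"
# with every noun phrase approximated by one word (an assumption of this sketch).
PATTERN = re.compile(
    r"(\w+) such as (\w+(?:, (?!and |or )\w+)*(?:,? (?:and|or) \w+)?)")

def extract_is_a(text):
    """Return (member, category) pairs found by the 'such as' template."""
    facts = []
    for match in PATTERN.finditer(text):
        category = match.group(1)
        for member in re.split(r",? (?:and|or) |, ", match.group(2)):
            facts.append((member, category))
    return facts

print(extract_is_a("diseases such as rabies affect your dog"))
print(extract_is_a("supports network protocols such as DNS,"))
print(extract_is_a("languages such as Python, Rust, and Go"))
```

Each pair can be read as "member is a category", for example rabies is a disease.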

(5) Automated Template Construction

Learn templates from a few examples, then use the templates to learn more examples, from which

more templates can be learned, and so on.

Each match is defined as a tuple of seven strings,

(Author, Title, Order, Prefix, Middle, Postfix, URL),

where Order is true if the author came first and false if the title came first, Middle is the characters between the author and title, Prefix is the 10 characters before the match, Postfix is the 10 characters after the match, and URL is the Web address where the match was made.

The biggest weakness in this approach is the sensitivity to noise. If one of the first few templates is

incorrect, errors can propagate quickly.

(6) Machine Reading

The extraction system behaves less like a traditional information extraction system that is targeted at a few relations and more like a human reader who learns from the text itself; because of this the field has been called machine reading.

A representative machine-reading system is TEXTRUNNER.

Given a set of labeled examples, TEXTRUNNER trains a model to extract further examples from unlabeled text.

For example, even though it has no predefined medical knowledge, it has extracted over 2000

answers to the query [what kills bacteria]; correct answers include antibiotics, ozone, chlorine,

Cipro, and broccoli sprouts.


5. NATURAL LANGUAGE PROCESSING

Natural Language Processing, usually abbreviated NLP, is a branch of artificial intelligence that deals with the interaction between computers and humans using natural language.

The ultimate objective of NLP is to read, decipher, understand, and make sense of human languages in a manner that is valuable.

Most NLP techniques rely on machine learning to derive meaning from human languages.

The input and output of an NLP system can be −

Speech

Text

NLP Terminology

Phonology − It is the study of how sounds are organized systematically.

Morphology − It is the study of the construction of words from primitive meaningful units.

Morpheme − It is the primitive unit of meaning in a language.

Syntax − It refers to arranging words to make a sentence. It also involves determining the structural

role of words in the sentence and in phrases.

Semantics − It is concerned with the meaning of words and how to combine words into meaningful

phrases and sentences.

Pragmatics − It deals with using and understanding sentences in different situations and how the

interpretation of the sentence is affected.

Discourse − It deals with how the immediately preceding sentence can affect the interpretation of

the next sentence.

World Knowledge − It includes the general knowledge about the world.

Steps in Natural Language Processing


Lexical Analysis

It involves identifying and analyzing the structure of words. The lexicon of a language is the collection of words and phrases in that language. Lexical analysis divides the whole chunk of text into paragraphs, sentences, and words.
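The division into paragraphs, sentences, and words can be sketched with a few regular expressions. This is a deliberately naive illustration: it assumes paragraphs are separated by blank lines and that every period, exclamation mark, or question mark followed by whitespace ends a sentence, which fails on abbreviations such as "Dr.".

```python
import re

def lexical_analysis(text):
    """Split text into paragraphs, each a list of sentences, each a list of tokens."""
    result = []
    for paragraph in re.split(r"\n\s*\n", text.strip()):
        # A sentence ends at ., ! or ? followed by whitespace (naive rule).
        sentences = re.split(r"(?<=[.!?])\s+", paragraph.strip())
        # A token is a word (optionally with an apostrophe part) or a punctuation mark.
        result.append([re.findall(r"\w+(?:'\w+)?|[^\w\s]", s) for s in sentences])
    return result

sample = "The boy goes to school. He is happy!\n\nIt's raining."
tokens = lexical_analysis(sample)
print(tokens)
```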

Syntactic Analysis (Parsing)

It involves analysis of words in the sentence for grammar and arranging words in a manner that

shows the relationship among the words.

A sentence such as “The school goes to boy” is rejected by an English syntactic analyzer. Computer algorithms are used to apply grammatical rules to a group of words and derive meaning from them.

Here are some syntax techniques that can be used:

Lemmatization

Morphological segmentation

Word segmentation

Part-of-speech tagging

Parsing

Sentence breaking

Stemming

Semantic Analysis

It draws the exact meaning or the dictionary meaning from the text. The text is checked for meaningfulness. This is done by mapping syntactic structures to objects in the task domain. The semantic analyzer disregards a phrase such as “hot ice-cream”.

Here are some techniques in semantic analysis:

Named entity recognition (NER): It involves determining the parts of a text that can be

identified and categorized into preset groups. Examples of such groups include names of

people and names of places.

Word sense disambiguation: It involves giving meaning to a word based on the context.

Natural language generation: It involves using databases to derive semantic intentions

and convert them into human language.

Discourse Integration

The meaning of any sentence depends upon the meaning of the sentence just before it. In addition, it also influences the meaning of the immediately succeeding sentence.

Pragmatic Analysis

During this step, what was said is reinterpreted in terms of what was actually meant. It involves deriving those aspects of language which require real-world knowledge.

Applications of NLP

Machine Translation

Speech Recognition

Text Classification

Question Answering

Spell Checking

Sentiment Analysis


6. MACHINE TRANSLATION

Machine translation is the automatic translation of text from one natural language (the source) to

another (the target).

Representing the meaning of a sentence is more difficult for translation than it is for single-

language understanding.

A representation language that makes all the distinctions necessary for a set of languages is called

an Interlingua.

Machine translation systems

All translation systems must model the source and target languages, but systems vary in the type of

models they use.

Some systems attempt to analyse the source language text all the way into an Interlingua

knowledge representation and then generate sentences in the target language from that

representation.

This is difficult because it involves three unsolved problems:

1. Creating a complete knowledge representation of everything;

2. Parsing into that representation;

3. Generating sentences from that representation.

Transfer Model

Systems based on a transfer model keep a database of translation rules (or examples), and whenever the rule (or example) matches, they translate directly.

Transfer can occur at the lexical, syntactic, or semantic level.

For example, a strictly syntactic rule maps English [Adjective Noun] to French [Noun Adjective].

A mixed syntactic and lexical rule maps French [S1 “et puis” S2] to English [S1 “and then” S2].


We start with the English text.

An interlingua-based system follows the solid lines in the figure: it parses English first into a syntactic form, then into a semantic representation and an interlingua representation.

It then generates French through semantic, syntactic, and lexical forms.

Statistical machine translation

Machine translation systems are built by training a probabilistic model using statistics gathered

from a large corpus of text.

This approach does not need a complex ontology of interlingua concepts.

Given a source English sentence, e, finding a French translation f is a matter of three steps:

1. Break the English sentence into phrases e1, . . ., en.

2. For each phrase ei, choose a corresponding French phrase fi. We use the notation P(fi | ei)

for the phrasal probability that fi is a translation of ei.

3. Choose a permutation of the phrases f1, . . ., fn.

For each fi, we choose a distortion di, which is the number of words that phrase fi has moved with respect to fi−1:

• Positive for moving to the right

• Negative for moving to the left

• Zero if fi immediately follows fi−1.

For example, the sentence “There is a smelly wumpus sleeping in 2 2” is broken into five phrases, e1, . . . , e5.
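The distortion and the resulting translation probability can be sketched as below. The sketch assumes the usual definition d_i = START(f_i) − END(f_{i−1}) − 1 with END(f_0) = 0, and a model in which the probability of a translation is the product of the phrase probabilities P(f_i | e_i) and the distortion probabilities P(d_i). The word positions and probabilities in the example are hypothetical, not taken from a real phrase table.

```python
def distortions(spans):
    """Distortion d_i for each French phrase f_i.

    spans[i] = (start, end): 1-based, inclusive word positions of f_i in the
    French output, listed in the order of the English phrases e_1..e_n.
    """
    result = []
    prev_end = 0  # END(f_0) is taken to be 0
    for start, end in spans:
        result.append(start - prev_end - 1)
        prev_end = end
    return result

def translation_probability(phrase_probs, distortion_probs):
    """P(f, d | e) as the product over i of P(f_i | e_i) * P(d_i)."""
    p = 1.0
    for p_phrase, p_distortion in zip(phrase_probs, distortion_probs):
        p *= p_phrase * p_distortion
    return p

# Hypothetical placement of five French phrases: the third and fourth swap order.
spans = [(1, 3), (4, 4), (6, 6), (5, 5), (7, 8)]
d = distortions(spans)
print(d)
```

A phrase that stays right after its predecessor gets distortion 0, a phrase that jumps ahead gets a positive value, and one that moves back gets a negative value.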


7. SPEECH RECOGNITION

Speech recognition is the task of identifying a sequence of words uttered by a speaker, given the

acoustic signal.

Speech recognition is difficult because the sounds made by a speaker are ambiguous and, well,

noisy. As a well-known example, the phrase “recognize speech” sounds almost the same as “wreck a

nice beach” when spoken quickly.

Even this short example shows several of the issues that make speech problematic.

First, segmentation: written words in English have spaces between them, but in fast speech

there are no pauses in “wreck a nice” that would distinguish it as a multiword phrase as

opposed to the single word “recognize.”

Second, coarticulation: when speaking quickly the “s” sound at the end of “nice” merges

with the “b” sound at the beginning of “beach,” yielding something that is close to a “sp.”

Another problem that does not show up in this example is homophones: words like “to,” “too,” and “two” that sound the same but differ in meaning.

Acoustic model

Sound waves are periodic changes in pressure that propagate through the air.

When these waves strike the diaphragm of a microphone, the back-and-forth movement generates

an electric current.

An analog-to-digital converter measures the size of the current, which approximates the amplitude of the sound wave, at discrete intervals determined by the sampling rate.

To recognize the digitized sound, the system depends on the Hidden Markov Model (HMM).

The digitized sound is then filtered to remove any unwanted noise while categorizing the sound into

different frequency bands.

The sound is also "normalized," or kept at a constant volume level.


Many modern speech recognition systems use neural networks to simplify the speech signal before

HMM recognition.

Neural networks use feature transformation and dimensionality reduction to simplify the speech

signals.

Voice Activity Detectors (VADs) are also applied to reduce an audio signal to the segments that

contain speech.

Language Model

For general-purpose speech recognition, the language model can be an n-gram model of text

learned from a corpus of written sentences.

However, spoken language has different characteristics than written language, so it is better to get

a corpus of transcripts of spoken language.

For task-specific speech recognition, the corpus should be task-specific: to build your airline

reservation system, get transcripts of prior calls.


It also helps to have task-specific vocabulary, such as a list of all the airports and cities served, and

all the flight numbers.
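As an illustration of an n-gram language model learned from task-specific transcripts, the following sketch estimates bigram probabilities by counting. The three "transcripts" are invented for the example, and the estimate is the plain maximum-likelihood ratio without smoothing, which a practical recognizer would need.

```python
from collections import Counter

def train_bigram(sentences):
    """Count context words and bigrams, with <s> and </s> as sentence boundaries."""
    unigrams, bigrams = Counter(), Counter()
    for sentence in sentences:
        words = ["<s>"] + sentence.lower().split() + ["</s>"]
        unigrams.update(words[:-1])          # every word that has a successor
        bigrams.update(zip(words, words[1:]))
    return unigrams, bigrams

def bigram_prob(unigrams, bigrams, prev, word):
    """Maximum-likelihood estimate of P(word | prev); 0.0 if prev was never seen."""
    if unigrams[prev] == 0:
        return 0.0
    return bigrams[(prev, word)] / unigrams[prev]

# Hypothetical transcripts of calls to an airline reservation system.
transcripts = [
    "i want a flight to boston",
    "i want a ticket to denver",
    "book a flight to denver",
]
unigrams, bigrams = train_bigram(transcripts)
print(bigram_prob(unigrams, bigrams, "a", "flight"))
print(bigram_prob(unigrams, bigrams, "to", "denver"))
```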

Building a speech recognizer

• The quality of a speech recognition system depends on the quality of all of its components—

The language model,

The word-pronunciation models,

The phone models, and

The signal processing algorithms used to extract spectral features from the acoustic signal.

Recent systems use expectation–maximization to automatically supply the missing data.

The idea is simple: given an HMM and an observation sequence, we can use the smoothing

algorithms to compute the probability of each state at each time step and, by a simple extension,

the probability of each state–state pair at consecutive time steps.

The systems with the highest accuracy work by training a different model for each speaker, thereby

capturing differences in dialect as well as male/female and other variations.

This training can require several hours of interaction with the speaker, so the systems with the most

widespread adoption do not create speaker-specific models.

Speech Recognition Applications

Dictation

Education

Kaldi: an open-source speech recognition toolkit

In Healthcare

In language learning


8. Robot — Hardware — Perception — Planning — Moving

Robots are physical agents that perform tasks by manipulating the physical world.

They are equipped with effectors such as legs, wheels, joints, and grippers.

Effectors have a single purpose: to assert physical forces on the environment.

Robots are also equipped with sensors, which allow them to perceive their environment.

Most of today’s robots fall into one of three primary categories

1. Manipulators

A manipulator usually involves a chain of controllable joints, enabling such robots to place their effectors in any position within the workspace.

Example: factory assembly line

2. Mobile robot

Mobile robots move about their environment

Examples: delivering food in hospitals and moving containers. Unmanned ground vehicles (UGVs) drive autonomously on streets, highways, and off road. Unmanned air vehicles (UAVs) are commonly used for surveillance and crop-spraying. Autonomous underwater vehicles (AUVs) are used in deep-sea exploration.

3. Mobile Manipulator

Combines mobility with manipulation

Example: humanoid robots, which mimic the human torso.


ROBOT HARDWARE

The robotic hardware includes SENSORS and EFFECTORS

1. SENSORS

Sensors are the perceptual interface between robot and environment.

Passive sensors

Passive sensors are true observers of the environment: they capture signals that are generated by other sources in the environment.

Example: cameras.

Active sensors

Active sensors send energy into the environment and rely on the fact that this energy is reflected back to the sensor. Active sensors tend to provide more information than passive sensors.

Example: sonar.

Range finders

Range Finders are sensors that measure the distance to nearby objects. In the early days of

robotics, robots were commonly equipped with sonar sensors.

Sonar sensors emit directional sound waves, which are reflected by objects, with some of the sound making it back into the sensor. The time and intensity of the returning signal indicate the distance to nearby objects.
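A minimal sketch of the time-based part of this measurement: the sound travels to the object and back, so the range is half the round-trip distance. The speed of sound used here (343 m/s, roughly the value in air at about 20 °C) is an assumption; the sketch ignores the intensity of the echo.

```python
SPEED_OF_SOUND_AIR = 343.0  # m/s, approximate value in air at about 20 degrees Celsius

def sonar_range(echo_time_s, speed=SPEED_OF_SOUND_AIR):
    """Distance in meters to the reflecting object, from the round-trip echo time."""
    return speed * echo_time_s / 2.0

print(sonar_range(0.01))  # echo received 10 ms after the pulse was emitted
```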

Other common range sensors include radar, which is often the sensor of choice for UAVs. Radar sensors can measure distances of multiple kilometers.

Stereo vision

Stereo vision relies on multiple cameras to image the environment from slightly different

viewpoints, analyzing the resulting parallax in these images to compute the range of

surrounding objects.

Time of flight camera

This camera acquires range images at up to 60 frames per second.

Scanning lidars (Light detection and ranging)

Scanning lidars tend to provide longer ranges than time of flight cameras, and tend to perform

better in bright daylight.

Tactile sensors

These sensors measure range based on physical contact, and can be deployed only for sensing

objects very close to the robot.

Location sensors

Most location sensors use range sensing as a primary component to determine location. Outdoors, the Global Positioning System (GPS) is the most common solution to the localization problem.


Differential GPS

Involves a second ground receiver with known location, providing millimeter accuracy under

ideal conditions. Unfortunately, GPS does not work indoors or underwater.

Indoors, localization is often achieved by attaching beacons in the environment at known

locations.

Many indoor environments are full of wireless base stations, which can help robots localize

through the analysis of the wireless signal.

Underwater, active sonar beacons can provide a sense of location, using sound to inform

AUVs of their relative distances to those beacons

Proprioceptive sensors

Inform the robot of its own motion.

To measure the exact configuration of a robotic joint, motors are often equipped with shaft decoders that count the revolutions of motors in small increments.

On mobile robots, shaft decoders that report wheel revolutions can be used for odometry, the measurement of distance traveled.
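Odometry from a shaft decoder can be sketched as a conversion from counted ticks to wheel revolutions to distance. The tick resolution and wheel radius below are hypothetical values, and the sketch assumes the wheel rolls without slipping, which is exactly the assumption that makes real odometry drift.

```python
import math

def odometry_distance(ticks, ticks_per_revolution, wheel_radius_m):
    """Distance traveled by a wheel, from decoder ticks (assumes no wheel slip)."""
    revolutions = ticks / ticks_per_revolution
    return revolutions * 2.0 * math.pi * wheel_radius_m

# Hypothetical decoder with 512 ticks per revolution on a wheel of radius 5 cm.
print(odometry_distance(1024, 512, 0.05))
```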

External forces, such as the current for Autonomous Underwater Vehicles (AUVs) and the

wind for Unmanned Aerial Vehicles (UAVs), increase positional uncertainty.

Inertial sensors, such as gyroscopes, rely on the resistance of mass to the change of

velocity. They can help reduce uncertainty.

Force and Torque Sensors

Force sensors allow the robot to sense how hard it is gripping an object, such as a light bulb.

Torque sensors allow it to sense how hard it is turning the object.

2. EFFECTORS

Effectors are the means by which robots move and change the shape of their bodies.

Degrees of freedom (DOF): we count one degree of freedom for each independent direction in which a robot, or one of its effectors, can move.

For example, a rigid mobile robot such as an AUV has six degrees of freedom, three for its (x, y, z)

location in space and three for its angular orientation, known as yaw, roll, and pitch. These six

degrees define the kinematic state or pose of the robot.

For example, the elbow of a human arm possesses two degrees of freedom. It can flex the upper arm towards or away, and can rotate right or left.

The wrist has three degrees of freedom. It can move up and down, side to side, and can also

rotate.


Robot joints also have one, two, or three degrees of freedom each. Six degrees of freedom are

required to place an object, such as a hand, at a particular point in a particular orientation.

Mobile robots have a range of mechanisms for locomotion, including wheels, tracks and legs.

Differential drive robots possess two independently actuated wheels (or tracks), one on each side,

as on a military tank.

If both wheels move at the same velocity, the robot moves on a straight line.

If they move in opposite directions, the robot turns on the spot.
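The two cases above follow from the standard kinematic model of a differential drive, sketched here with simple Euler integration. The wheel base, speeds, and time step are hypothetical, and the model assumes wheels that do not slip.

```python
import math

def differential_drive_step(x, y, theta, v_left, v_right, wheel_base, dt):
    """Advance the pose (x, y, theta) of a differential-drive robot by one time step."""
    v = (v_right + v_left) / 2.0             # forward speed of the robot center
    omega = (v_right - v_left) / wheel_base  # turning rate in radians per second
    x += v * math.cos(theta) * dt
    y += v * math.sin(theta) * dt
    theta += omega * dt
    return x, y, theta

# Equal wheel speeds: the robot moves on a straight line.
straight = differential_drive_step(0.0, 0.0, 0.0, 1.0, 1.0, 0.5, 2.0)
# Opposite wheel speeds: the robot turns on the spot.
spin = differential_drive_step(0.0, 0.0, 0.0, -1.0, 1.0, 0.5, 0.5)
print(straight, spin)
```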

An alternative is the synchro drive, in which each wheel can move and turn around its own axis.

To avoid chaos, the wheels are tightly coordinated. When moving straight, for example, all wheels point in the same direction and move at the same speed.

Both differential and synchro drives are nonholonomic.

A hopping robot is dynamically stable, meaning that it can remain upright while hopping around.

A robot that can remain upright without moving its legs is called statically stable.

Sensors and effectors alone do not make a robot. A complete robot also needs a source of power to

drive its effectors.

The electric motor is the most popular mechanism for both manipulator actuation and locomotion,

but pneumatic actuation using compressed gas and hydraulic actuation using pressurized fluids

also have their application niches.

PERCEPTION

Perception is the process by which robots map sensor measurements into internal representations of the environment.

Perception is difficult because sensors are noisy, and the environment is partially observable,

unpredictable, and often dynamic.

In other words, robots have all the problems of state estimation (or filtering).

As a rule of thumb, good internal representations for robots have three properties:

1. They contain enough information for the robot to make good decisions

2. They are structured so that they can be updated efficiently

3. They are natural in the sense that internal variables correspond to natural state variables in the

physical world.

Both exact and approximate algorithms can be used to update the belief state, the posterior probability distribution over the environment state variables. For robotics problems, we include the robot’s own past actions as observed variables in the model.


We would like to compute the new belief state, P(Xt+1 | z1:t+1, a1:t), from the current belief state P(Xt | z1:t, a1:t−1) and the new observation zt+1. In robotics we deal with continuous rather than discrete variables, so the update integrates over states rather than summing over them.

The posterior P(Xt| z1:t, a1:t−1) is a probability distribution over all states that captures what we

know from past sensor measurements and controls.
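The belief update can be written as a recursive filtering equation. The form below is the standard one for continuous state, given here as a reference sketch: P(X_{t+1} | x_t, a_t) is the motion (transition) model and P(z_{t+1} | X_{t+1}) is the sensor model, two names that these notes do not introduce explicitly, and α is a normalization constant.

$$P(X_{t+1} \mid z_{1:t+1}, a_{1:t}) = \alpha \, P(z_{t+1} \mid X_{t+1}) \int P(X_{t+1} \mid x_t, a_t) \, P(x_t \mid z_{1:t}, a_{1:t-1}) \, dx_t$$

Reading from right to left: the current belief is pushed through the motion model to predict the next state, and the prediction is then reweighted by how well each state explains the new observation.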

Localization and mapping

Localization is the problem of finding out where things are—including the robot itself.

Knowledge about where things are is at the core of any successful physical interaction with the

environment.

For example, robot manipulators must know the location of objects they seek to manipulate;

navigating robots must know where they are to find their way around.

The pose of a mobile robot moving in a flat 2D world is defined by its two Cartesian coordinates with values x and y and its heading with value θ. Any particular state is given by X_t = (x_t, y_t, θ_t)^T.

Localization using particle filtering is called Monte Carlo localization (MCL).

Given a map of the environment, the Monte Carlo Localization algorithm estimates the position and

orientation of a robot as it moves and senses the environment. The algorithm uses a particle

filter to represent the distribution of likely states, with each particle representing a possible state.
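The following is a minimal sketch of Monte Carlo localization, reduced to a robot on a one-dimensional corridor so that it fits in a few lines. Everything specific is an assumption made for illustration: the "map" is a single wall at position 0, the sensor reports the noisy distance to that wall, the noise is Gaussian, and the noise levels and particle count are arbitrary.

```python
import math
import random

def mcl_step(particles, control, measurement, rng, motion_noise=0.2, sensor_noise=0.5):
    """One Monte Carlo localization update on a 1-D corridor.

    particles: candidate robot positions; control: commanded displacement;
    measurement: noisy distance to a wall at position 0 (hypothetical sensor).
    """
    # 1. Motion update: move every particle and add motion noise.
    moved = [p + control + rng.gauss(0.0, motion_noise) for p in particles]
    # 2. Sensor update: weight each particle by the likelihood of the measurement.
    weights = [math.exp(-0.5 * ((measurement - p) / sensor_noise) ** 2) for p in moved]
    # 3. Resampling: draw a new population in proportion to the weights.
    return rng.choices(moved, weights=weights, k=len(moved))

rng = random.Random(0)
particles = [rng.uniform(0.0, 10.0) for _ in range(500)]  # initially unknown position
true_position = 2.0
for _ in range(5):
    true_position += 1.0                                   # the robot moves 1 m
    measurement = true_position + rng.gauss(0.0, 0.5)      # noisy range reading
    particles = mcl_step(particles, 1.0, measurement, rng)
estimate = sum(particles) / len(particles)
print(true_position, estimate)
```

The particle population starts spread over the whole corridor and concentrates around the true position as motion and sensor updates accumulate.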

Machine Learning in Robot Perception

Machine learning plays an important role in robot perception. This is particularly the case when the

best internal representation is not known.


Unsupervised Machine Learning Methods:

Map high-dimensional sensor streams into lower-dimensional spaces using unsupervised machine learning methods.

Such an approach is called low-dimensional embedding.

Machine learning makes it possible to learn sensor and motion models from data, while simultaneously discovering suitable internal representations.

Adaptive perception techniques:

Adaptive perception techniques enable robots to continuously adapt to broad changes in sensor

measurements.

For example, when we walk from a sunlit space into a dark room, our perception quickly adapts to the new lighting conditions, and our brain ignores the differences.

Methods that make robots collect their own training data (with labels!) are called self-supervised.

PLANNING

All of a robot’s deliberations ultimately come down to deciding how to move effectors.

The point-to-point motion problem is to deliver the robot or its end effector to a designated target

location.

A greater challenge is the compliant motion problem, in which a robot moves while being in

physical contact with an obstacle. An example of compliant motion is a robot manipulator that

screws in a light bulb, or a robot that pushes a box across a table top.

The path planning problem is to find a path from one configuration to another in configuration

space.

The complication added by robotics is that path planning involves continuous spaces.

There are two main approaches: cell decomposition and skeletonization.

Cell decomposition methods

The first approach to path planning uses cell decomposition—that is, it decomposes the free

space into a finite number of contiguous regions, called cells.

These regions have the important property that the path-planning problem within a single

region can be solved by simple means (e.g., moving along a straight line).

The simplest cell decomposition consists of a regularly spaced grid.


Grayscale shading indicates the value of each free-space grid cell, i.e., the cost of the shortest path from that cell to the goal. (These values can be computed by a deterministic form of the value-iteration algorithm.) The figure also shows the corresponding workspace trajectory for the arm. Of course, we can also use the A* algorithm to find a shortest path.

Fig : Value Function and path found for a discrete grid cell approximation of the configuration space
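A small sketch of the grid-based value function: the code below computes, for every free cell, the cost of the shortest path to the goal, and then follows decreasing values from the start. It uses breadth-first search from the goal, which for unit step costs on a 4-connected grid yields the same values as a deterministic value iteration; the grid layout is a hypothetical example.

```python
from collections import deque

def grid_values(grid, goal):
    """Cost of the shortest path from every reachable free cell to the goal.

    grid: list of strings, '#' = obstacle, '.' = free cell (4-connected, unit cost).
    Returns a dict {(row, col): cost}.
    """
    values = {goal: 0}
    queue = deque([goal])
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if (0 <= nr < len(grid) and 0 <= nc < len(grid[0])
                    and grid[nr][nc] == "." and (nr, nc) not in values):
                values[(nr, nc)] = values[(r, c)] + 1
                queue.append((nr, nc))
    return values

def follow_values(values, start):
    """Greedy descent on the value function from a reachable start cell to the goal."""
    path = [start]
    while values[path[-1]] > 0:
        r, c = path[-1]
        neighbors = [(r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)]
        path.append(min((n for n in neighbors if n in values), key=values.get))
    return path

grid = [
    "....",
    ".##.",
    "....",
]
goal, start = (0, 3), (2, 0)
values = grid_values(grid, goal)
path = follow_values(values, start)
print(values[start], path)
```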

Modified cost functions

A potential field is a function defined over state space, whose value grows with the distance to

the closest obstacle. The potential field can be used as an additional cost term in the shortest-

path calculation.

Skeletonization methods

The second major family of path-planning algorithms is based on the idea of skeletonization.

These algorithms reduce the robot’s free space to a one-dimensional representation, for which the

planning problem is easier. This lower-dimensional representation is called a skeleton of the

configuration space.

An example of skeletonization is a Voronoi graph of the free space: the set of all points that are equidistant to two or more obstacles. Path planning with a Voronoi graph proceeds in three steps:

1. The robot first changes its present configuration to a point on the Voronoi graph.

2. The robot follows the Voronoi graph until it reaches the point nearest to the target configuration.

3. Finally, the robot leaves the Voronoi graph and moves to the target.


MOVING

Dynamics and control

The dynamic state extends the kinematic state of a robot by its velocity.

The transition model for a dynamic state representation includes the effect of forces on this rate of

change.

Such models are typically expressed via differential equations, which are equations that relate a

quantity (e.g., a kinematic state) to the change of the quantity over time (e.g., velocity).

However, the dynamic state has higher dimension than the kinematic space, and the curse of

dimensionality would render many motion planning algorithms inapplicable.

A common technique to compensate for the limitations of kinematic plans is to use a separate

mechanism, a controller, for keeping the robot on track.

Controllers are techniques for generating robot controls in real time using feedback from the

environment, so as to achieve a control objective.

If the objective is to keep the robot on a pre-planned path, the controller is often referred to as a reference controller and the path is called a reference path.
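One simple kind of reference controller is a proportional (P) controller, which applies a control proportional to the error between the reference and the robot's current position. The sketch below is a deliberately simplified one-dimensional illustration, not a method stated in these notes: the control is applied directly as a displacement, so there is no mass or velocity, and the gain of 0.5 is an arbitrary choice.

```python
def p_controller(reference, position, gain):
    """Control proportional to the error between reference and current position."""
    return gain * (reference - position)

def track(reference_path, start, gain=0.5):
    """Follow a reference path, applying each control as a displacement."""
    position = start
    trace = []
    for reference in reference_path:
        position += p_controller(reference, position, gain)
        trace.append(position)
    return trace

# The robot starts at 0.0 and is asked to hold position 1.0 for ten steps.
trace = track([1.0] * 10, start=0.0)
print(trace[0], trace[-1])
```

With this gain the error halves at every step, so the robot converges to the reference. When the control is a force acting on a robot with inertia, a pure P controller can overshoot and oscillate, which is why derivative and integral terms are often added.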

Potential Field control

In the potential field approach, the robot is considered as a point under the influence of the fields produced by goals and obstacles in the search space.

In this method, obstacles generate a repulsive force and goals generate an attractive force.
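A sketch of one step of potential field control in the plane is given below. The particular force laws are assumptions chosen for illustration: a linear attractive force toward the goal and a commonly used repulsive force that grows as the robot nears an obstacle and vanishes beyond an influence radius. The gains, radius, and step length are hypothetical.

```python
import math

def potential_field_step(position, goal, obstacles,
                         k_att=1.0, k_rep=1.0, influence=2.0, step=0.1):
    """Move a point robot one fixed-length step along the combined force."""
    x, y = position
    # Attractive force pulls the robot toward the goal.
    fx = k_att * (goal[0] - x)
    fy = k_att * (goal[1] - y)
    for ox, oy in obstacles:
        dx, dy = x - ox, y - oy
        dist = math.hypot(dx, dy)
        if 0.0 < dist < influence:
            # Repulsive force points away from the obstacle and is zero
            # at and beyond the influence radius.
            magnitude = k_rep * (1.0 / dist - 1.0 / influence) / dist ** 2
            fx += magnitude * dx / dist
            fy += magnitude * dy / dist
    norm = math.hypot(fx, fy)
    if norm == 0.0:
        return position  # forces cancel: the robot does not move
    return (x + step * fx / norm, y + step * fy / norm)

free = potential_field_step((0.0, 0.0), (5.0, 0.0), [])
pushed = potential_field_step((0.0, 0.0), (5.0, 0.0), [(1.0, 0.5)])
print(free, pushed)
```

Without obstacles the step points straight at the goal; with an obstacle above the path, the step is deflected downward, away from it. Repeating the step drives the robot toward the goal, although potential fields can get stuck in local minima where the forces cancel.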

Reactive control

Reactive Control is a technique for tightly coupling sensory inputs and effector outputs, to allow the

robot to respond very quickly to changing and unstructured environments.

Reinforcement learning control

Reinforcement learning (RL) enables a robot to autonomously discover an optimal behavior through

trial-and-error interactions with its environment.

*************



- Introduction of PythonDocument217 pagesIntroduction of PythonRiya Santoshwar0% (1)

- Video Conferencing & ChattingDocument48 pagesVideo Conferencing & ChattingyrikkiNo ratings yet

- UE20CS314 Unit3 SlidesDocument238 pagesUE20CS314 Unit3 SlidesKoushiNo ratings yet

- Enhancing Data Security in Iot Healthcare Services Using Fog ComputingDocument36 pagesEnhancing Data Security in Iot Healthcare Services Using Fog ComputingMudassir khan MohammedNo ratings yet

- Automatic Car Theft Detection System Based On GPS and GSM TechnologyDocument4 pagesAutomatic Car Theft Detection System Based On GPS and GSM TechnologyEditor IJTSRDNo ratings yet

- WCMS Unit4..Document10 pagesWCMS Unit4..rakshithadahnuNo ratings yet

- Keywords: Computer Vision, Machine Learning, CNN, Kaggle, Network, Datasets, TensorflowDocument43 pagesKeywords: Computer Vision, Machine Learning, CNN, Kaggle, Network, Datasets, TensorflowAswin KrishnanNo ratings yet

- Network Management System A Complete Guide - 2020 EditionFrom EverandNetwork Management System A Complete Guide - 2020 EditionRating: 5 out of 5 stars5/5 (1)

- Unit 5Document46 pagesUnit 5jana kNo ratings yet

- Unit 3 Stresses in BeamDocument68 pagesUnit 3 Stresses in Beam4119 RAHUL SNo ratings yet

- Messagd: VarlableDocument1 pageMessagd: Varlable4119 RAHUL SNo ratings yet

- WAP - Wireless Application ProtocolDocument21 pagesWAP - Wireless Application Protocol4119 RAHUL SNo ratings yet

- Explain Different Mutual Exclusion Algorithms in Detail.: Lamports AlgorithmDocument2 pagesExplain Different Mutual Exclusion Algorithms in Detail.: Lamports Algorithm4119 RAHUL SNo ratings yet

- Wireless Mark-Up Language (WML)Document5 pagesWireless Mark-Up Language (WML)4119 RAHUL SNo ratings yet

- Wireless Transaction Protocol (WTP)Document9 pagesWireless Transaction Protocol (WTP)4119 RAHUL SNo ratings yet

- Roup Of: Extension Compatible 24Document7 pagesRoup Of: Extension Compatible 244119 RAHUL SNo ratings yet

- The Mechanism Of: Taking A Packet Consisting ofDocument5 pagesThe Mechanism Of: Taking A Packet Consisting of4119 RAHUL SNo ratings yet

- Unit 4Document27 pagesUnit 44119 RAHUL SNo ratings yet

- Ai Unit-2 NotesDocument32 pagesAi Unit-2 Notes4119 RAHUL SNo ratings yet

- Ai Unit-1 NotesDocument22 pagesAi Unit-1 Notes4119 RAHUL SNo ratings yet

- Target Costing TutorialDocument2 pagesTarget Costing TutorialJosephat LisiasNo ratings yet

- Introduction To PBLDocument21 pagesIntroduction To PBLChipego NyirendaNo ratings yet

- Applied Economics Module 1Document30 pagesApplied Economics Module 1愛結No ratings yet

- English Literature AnthologyDocument25 pagesEnglish Literature AnthologyShrean RafiqNo ratings yet

- Prediksi SOal UAS Kelas XII WAJIBDocument14 pagesPrediksi SOal UAS Kelas XII WAJIBDinda Anisa Meldya Salsabila100% (2)

- Calculating The Temperature RiseDocument8 pagesCalculating The Temperature Risesiva anandNo ratings yet

- Updated Resume - Kaushik SenguptaDocument3 pagesUpdated Resume - Kaushik SenguptaKaushik SenguptaNo ratings yet

- Prolink ShareHub Device ServerDocument33 pagesProlink ShareHub Device Serverswordmy2523No ratings yet

- Int Org 2Document60 pagesInt Org 2Alara MelnichenkoNo ratings yet

- Lesson 2 - Victimology and VictimDocument19 pagesLesson 2 - Victimology and VictimHernandez JairineNo ratings yet

- Management Accounting Costing and BudgetingDocument31 pagesManagement Accounting Costing and BudgetingnileshdilushanNo ratings yet

- Chapter-I Elections of 1936-37 and Muslim LeagueDocument63 pagesChapter-I Elections of 1936-37 and Muslim LeagueAdeel AliNo ratings yet

- Identification, Storage, and Handling of Geosynthetic Rolls: Standard Guide ForDocument2 pagesIdentification, Storage, and Handling of Geosynthetic Rolls: Standard Guide ForSebastián RodríguezNo ratings yet

- Hybrid Clutch Installation GuideDocument2 pagesHybrid Clutch Installation Guidebrucken1987No ratings yet

- Controllogix FestoDocument43 pagesControllogix FestoEdwin Ramirez100% (1)

- Auxiliary StructuresDocument19 pagesAuxiliary StructuresdarwinNo ratings yet

- Modeling and Analysis of DC-DC Converters Under Pulse Skipping ModulationDocument6 pagesModeling and Analysis of DC-DC Converters Under Pulse Skipping Modulationad duybgNo ratings yet

- Flow ControlDocument87 pagesFlow Controlibrahim_nfsNo ratings yet

- Improving Machine Translation With Conditional Sequence Generative Adversarial NetsDocument10 pagesImproving Machine Translation With Conditional Sequence Generative Adversarial Netsmihai ilieNo ratings yet

- Trompenaars' Cultural Dimensions in France: February 2021Document15 pagesTrompenaars' Cultural Dimensions in France: February 2021Như Hảo Nguyễn NgọcNo ratings yet

- (SAPP) Case Study F3 ACCA Báo Cáo Tài Chính Cơ BảnDocument4 pages(SAPP) Case Study F3 ACCA Báo Cáo Tài Chính Cơ BảnTài VõNo ratings yet

- SafeBoda Data Intern JDDocument2 pagesSafeBoda Data Intern JDMusah100% (1)

- MNE in Scotland ContextDocument16 pagesMNE in Scotland ContextmaduNo ratings yet

- Sky Star 300Document2 pagesSky Star 300Onitry RecordNo ratings yet

- CHP 15 MULTIDIMENSIONAL SCALING FOR BRAND POSITIONING Group 1 & 2Document16 pagesCHP 15 MULTIDIMENSIONAL SCALING FOR BRAND POSITIONING Group 1 & 2alfin luanmasaNo ratings yet

- Naiad GettingstartedDocument37 pagesNaiad GettingstartedYurivanovNo ratings yet

- 2009 SL School Release MaterialsDocument146 pages2009 SL School Release MaterialsAditiPriyankaNo ratings yet