WHITE PAPER

EXPLAINING THE STORMAGIC SvSAN WITNESS

Overview The SvSAN witness:

Businesses that require high service availability • Provides the arbitration service in a cluster

in their applications can design their storage leader election process

infrastructure in such a way as to create • Is a passive element of an SvSAN

redundancy and eliminate single points of configuration and does not service any I/O

failure. StorMagic SvSAN achieves this through requests for data

clustering - each instance of SvSAN is deployed • Maintains the cluster and mirror state

as a two node cluster, with storage shared across • Has the ability to provide arbitration for

the two nodes. thousands of SvSAN mirrors

• Can be local to the storage or at a remote

However, in order to properly mitigate against location:

failures and the threat of downtime, an additional • Used over a wide area network (WAN) link

element is required. This is the "witness". This • Can tolerate high latencies and low

white paper explores the SvSAN witness, its bandwidth network links

requirements and tolerances and the typical • Is an optional SvSAN component

failure scenarios that it helps to eliminate.

NOTE: It is possible to have SvSAN configurations

Avoiding a "split-brain" that do not use an SvSAN witness, however

Without a witness, even-numbered clustered implementation best practices must be followed

environments are at risk of a scenario known as and this is outside the scope of this white paper.

"split-brain" which can affect both the availability If you would like to explore this further, please

and integrity of the data. Split-brain occurs when reach out to the StorMagic team by emailing

the clustered, synchronously mirrored nodes lose sales@stormagic.com

contact with one another, becoming isolated.

The nodes then operate independently from one

another, and the data on each node diverges

becoming inconsistent, ultimately leading to

data corruption and potentially data loss.

To prevent split-brain scenarios from occurring a

witness is used. The witness acts as an arbitrator

or tiebreaker, providing a majority vote in the

event of a cluster leader election process,

ensuring there is only one cluster leader. If a

leader cannot be determined the storage under

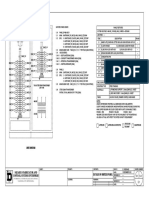

the cluster control is taken offline, preventing Fig. 1: The witness is located separately from

data corruption. the SvSAN nodes.
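
The tiebreaker role described above can be illustrated with simple vote counting. The sketch below is a simplified model and not StorMagic's implementation: it assumes that every cluster member (and the witness, when present) holds one vote, and that a partition may keep its storage online only with a strict majority of all votes. The function name `has_majority` and the one-vote-per-member rule are assumptions made for illustration.

```python
def has_majority(votes_held: int, total_voters: int) -> bool:
    """Return True if a partition holds a strict majority of all votes."""
    return votes_held * 2 > total_voters


# Two nodes, no witness: after a split each side holds 1 of 2 votes.
# Neither side has a majority, so the vote alone cannot pick a leader.
print(has_majority(1, 2))  # False

# Two nodes plus a witness: the side the witness backs holds 2 of 3 votes,
# while the other side holds 1 of 3 and must take its storage offline.
print(has_majority(2, 3))  # True
print(has_majority(1, 3))  # False
```

With an even number of voters a clean split always ends in a tie, which is why a third, neutral voter is needed to guarantee a single leader.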

StorMagic. Copyright © 2018. All rights reserved.

SvSAN witness system requirements

As the witness sits separately from the SvSAN nodes and does not service I/O requests, it has noticeably lower minimum requirements:

CPU: 1 x virtual CPU core (1 GHz)
Memory: 512MB (reserved)
Disk: 512MB
Network: 1 x 1Gb Ethernet NIC

When using the witness over a WAN link, use the following recommendations for optimal operation:

• Latency of less than 3,000ms, which would allow the witness to be located anywhere in the world
• 9Kb/s of available network bandwidth between the VSA and witness (less than 100 bytes of data is transmitted per second)

The SvSAN witness can be deployed onto a physical server or virtual machine with the following operating systems:

• Windows Server 2016 (64-bit)
• Hyper-V Server 2016 (64-bit)
• Raspbian Jessie (32-bit) [1]
• Raspbian Stretch (32-bit) [2]
• vCenter Server Appliance (vCSA) [3]
• StorMagic SvSAN Witness Appliance

[1] On Raspberry Pi 1, 2 and 3
[2] On Raspberry Pi 2, 3 and 3+
[3] VMware vSphere 5.5 and higher

NOTE: The witness should be installed onto a server separate from the SvSAN VSA.

Using the SvSAN witness remotely - bandwidth and latency limitations

The SvSAN witness can be deployed both locally and remotely, and this is of particular use in a multi-site deployment where a single witness handles every site from a central location. When deploying remotely, however, restrictions on the bandwidth and latency of the connection should be taken into consideration.

When the network performance is poor, a typical response to solve the issue is to increase network bandwidth, which is a relatively simple thing to achieve. However, this only improves performance when there is network congestion. Bandwidth addresses the amount of data that can be transmitted, but does not govern the speed of the link. In general, having more bandwidth reduces the likelihood of congestion. Bandwidth is similar to lanes on a highway: more lanes enable more vehicles (data packets) to use the highway at the same time. When all lanes are full the bandwidth limit is reached, which leads to congestion (traffic jams).

Latency or round trip time (RTT) is another important factor and determines the speed of the link; lower latency equates to better network speeds. Referring back to the highway analogy, latency is the highway speed limit and impacts the time it takes to complete a round trip. Unfortunately, getting better network latency is not as simple as increasing bandwidth, as it is affected by a number of factors, most of which are out of the user's control. These include:

• Propagation delay - the time that data takes to travel through a medium, such as fibre optic cable or copper wire, relative to the speed of light.
• Routing and switching delays - the number of routers and switches data has to pass through.
• Data protocol conversions - decoding and re-encoding slows down data.
• Network congestion and queuing - bottlenecks caused by routers and switches.
• Application latency - some applications can introduce or only tolerate a certain amount of latency.

Putting the SvSAN witness' latency tolerance to the test

StorMagic has conducted tests with the SvSAN witness to determine the bandwidth and latency (and distance) that it can tolerate before service is adversely affected.

Latency emulator: WANem

To simulate different network latencies, the WANem (http://wanem.sourceforge.net) Wide Area Network Emulator tool was used. WANem can be used to simulate Wide Area Network conditions, allowing different network characteristics, such as network delay (latency), bandwidth, packet loss, packet corruption, disconnections, packet re-ordering, jitter, etc. to be configured.

The configuration used for the tests is illustrated in fig. 2.

Fig. 2: SvSAN witness testing configuration.

The SvSAN witness can withstand latencies of up to 3,000ms, which allows the witness to be located almost anywhere in the world, and it requires network bandwidth of just 9Kb/s.

The network characteristics of latency, bandwidth and packet loss were progressively degraded until communication was lost between the VSA and the witness. Once a failure had been observed the characteristic being tested was reset, allowing the connection to be re-established. The following results are the extreme limits and show the SvSAN witness' tolerances:

• The network latency between the VSA and the witness reached 3,000ms before the connection became unreliable and the VSA and witness disconnected from one another. Reducing the latency ensured that the VSA and witness reconnected.
• The witness has minimal network bandwidth requirements. During the tests the bandwidth was reduced to 9 kilobits per second (Kb/s) before connectivity was lost.
• Ideally there would be zero packet loss across the WAN, however factors such as excessive electromagnetic noise, signal degradation, faulty hardware and packet corruption all contribute to packet loss. The tests showed that the witness connectivity could withstand a packet loss of 20%.

Recommendations

In general the witness can function on very high latencies and has very low network bandwidth requirements while tolerating high packet loss. Although these are extreme scenarios and networks with these characteristics are rarely used in practice, it shows how efficient the witness is. The following are recommendations to ensure optimal operation:

• Latency should ideally be less than 3,000ms, which allows the witness to be located almost anywhere in the world.
• The amount of data transmitted from the VSA to the witness is small (under 100 bytes per second). It is recommended that there is at least 9Kb/s of available network bandwidth between the VSA and witness.

Failure scenarios

This section discusses the common failure scenarios relating to two-node SvSAN configurations with a witness. SvSAN in this configuration is designed to withstand the failure of a single infrastructure component. However, for some scenarios it is possible to tolerate multiple failures.

Each scenario describes what happens during the failure and subsequently what happens when the infrastructure is returned to the optimal state.

The scenarios are as follows:

1. Network link failure between SvSAN VSA and witness
2. Mirror network link interruption
3. Server failure
4. Witness failure
5. Network isolation
6. Mirror network link and witness failure
7. Server failure followed by witness failure
8. Witness failure followed by a server failure

For all the failure scenarios the following assumptions are made:


• The cluster/mirror leader is VSA1
• SvSAN is in the optimum state before the failure occurs
• There are multiple, resilient mirror network links between servers/VSAs

For a full list of best practices when deploying SvSAN, please refer to the separate StorMagic white paper on that topic.

Optimum state

Fig. 3 shows the optimum SvSAN cluster state. In the optimal cluster state:

• All servers, VSAs, witness and network links are fully operational
• Quorum is determined and one of the VSAs (VSA1) is elected the cluster leader
• I/O can be performed by any of the VSAs
• Mirror state is synchronized

Fig. 3: The optimum SvSAN cluster state.

Scenario #1: Network link failure between VSA and witness

This scenario occurs when the network link between a single server/VSA (VSA1) and the witness is interrupted, as shown in fig. 4:

Fig. 4: After a network link failure.

During the failure period:

• The VSAs and witness remain fully operational with the VSAs continuing to serve I/O requests, without degradation to performance
• All mirror targets remain synchronized ensuring that the data is fully protected and that the required service availability is maintained
• During the network interruption, VSA1 continues to remain as the cluster leader and the quorum is maintained
• VSA1 makes periodic attempts to connect to the witness and recover the network connection

After recovery:

When the network connectivity is restored, communication between VSA1 and the witness is re-established. As the network interruption did not affect the environment, operation continues as normal.

Scenario #2: Mirror network link interruption

The mirror traffic network link between the servers is interrupted, as shown in fig. 5:

Fig. 5: After a mirror network link interruption.

During the failure period, where there are multiple redundant network connections between VSA1 and VSA2:

• Both VSAs and witness remain fully operational and the VSAs continue to serve I/O requests
• Mirror traffic is automatically redirected over the alternate network links if permitted
• During the network interruption, VSA1 continues to remain as the cluster leader and the quorum is maintained
• The mirror state remains synchronized


NOTE: Potential performance issues could arise if the alternative network links do not have the same characteristics (speed and bandwidth) as the primary mirror network. Furthermore, using alternative network links for SvSAN mirror traffic could potentially affect other applications or users on the same network. Both of these are especially important when there is a high rate of change of data such as a full mirror re-synchronization.

After recovery:

When the network links are recovered, mirror traffic will automatically fail back and use the primary mirror traffic network.

In the event that all network communication between VSA1 and VSA2 is lost (multiple failures), but they are able to communicate with the witness, one of the mirror plexes will be taken offline to prevent split-brain from occurring and avoid data corruption or loss. When recovering from this scenario, the node with offline plexes brings them online and its mirror state will be unsynchronized. The VSAs will then perform a fast re-synchronization of the mirrored targets and issue initiator rescans to all hosts, mounting those targets automatically.

Scenario #3: Server failure

This occurs when a single server (Server A) fails, as shown in fig. 6:

Fig. 6: After a server failure.

During the failure period:

• The surviving VSA (VSA2) and the witness remain fully operational
• VSA2 is promoted to cluster leader and the witness is updated to reflect the state change
• Only the surviving VSA (VSA2) can perform I/O
• Virtual machines that were running on the failed server (Server A) will be restarted on the surviving server (Server B)
• Virtual machines running on Server B continue to run uninterrupted
• The mirror state becomes unsynchronized

After the recovery of Server A:

• VSA1 re-joins the cluster. Its mirror state is marked as unsynchronized
• VSA2 remains as cluster leader
• The VSAs will perform a fast re-synchronization of the mirrored targets and issue initiator rescans to all hosts, mounting those targets automatically
  • On completion, the mirror state is marked as synchronized
  • NOTE: If the failure was caused by the total loss of storage, then this will require a full re-synchronization of the data
• The virtual machines remain running on Server B
  • Virtual machines can be moved to Server A manually (vMotion/Live Migration) or automatically (VMware Distributed Resource Scheduler or Microsoft Hyper-V Dynamic Optimization)

Scenario #4: Witness failure

This occurs when the witness fails, as shown in fig. 7:

Fig. 7: After a witness failure.

During the failure period:

• Both servers (Server A & Server B) remain fully operational
  • I/O requests can be serviced by both VSAs without disruption to service
• Quorum is maintained, with VSA1 remaining as cluster leader
• The VSAs periodically retry to connect to the witness
• Mirror state remains synchronized

After recovery:

• The witness is recovered
• VSA1 and VSA2 reconnect to the witness
• Current cluster state is propagated to the witness

Scenario #5: Network isolation

This scenario leads to server isolation: multiple network links fail between the servers and the witness, while the isolated server (Server B) itself remains operational. This is shown in fig. 8:

Fig. 8: During network isolation.

During the failure period:

• VSA1 continues as normal, accepting and servicing I/O requests
• VSA1 remains as cluster leader
• As VSA2 cannot contact either VSA1 or the witness, it identifies itself as being isolated
• VSA2 takes its mirror plexes offline to stop updates to the storage and to prevent a split-brain condition occurring
• VSA1 marks VSA2 mirror plexes as unsynchronized. VSA2 experiences loss of quorum and has its volumes taken offline until quorum is restored
• The virtual machines that were running on Server B experience an HA event and are restarted on Server A

After recovery when the network connectivity to Server B is restored:

• VSA2 re-joins the cluster. Its mirror state is marked as unsynchronized
• The VSAs will perform a fast re-synchronization of the mirrored targets and issue initiator rescans to all hosts, mounting those targets automatically
• On completion, the mirror state is marked as synchronized
• The virtual machines remain running on Server A
  • Virtual machines can be moved to Server B manually (vMotion/Live Migration) or automatically (VMware Distributed Resource Scheduler or Microsoft Hyper-V Dynamic Optimization)

Scenario #6: Mirror network link and witness failure

This multiple failure scenario explains what happens when the mirror network link and the witness both fail, as shown in fig. 9:

Fig. 9: After a dual mirror network link and witness failure.

During the failure period:

• Both servers remain online, with VSA1 remaining as the cluster leader
• If there are other network links between VSA1 and VSA2:
  • The mirror traffic will be redirected to utilize those links and the mirror state will remain synchronized
  • Either server can perform I/O requests
  • Both VSAs periodically poll for the presence of the witness
• If all the links between the servers (Server A and Server B) are severed:
  • All storage will be immediately taken offline to prevent data corruption and split-brain scenarios occurring
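
The failure-period outcomes of the link-failure cases described so far (Scenarios #1, #2, #4, #5 and #6) can be summarised as a single rule. The sketch below is an illustrative model only, not StorMagic's algorithm: the function `storage_online` and its boolean link flags are hypothetical names, and the tie-break in favour of the current leader when both VSAs can still reach the witness is an assumption (the text states only that one of the mirror plexes is taken offline).

```python
def storage_online(mirror_link_up, vsa1_sees_witness, vsa2_sees_witness,
                   leader="VSA1"):
    """Return the set of VSAs that keep their storage online.

    Simplified model of a two-node cluster with a witness:
    - while the VSAs can reach each other, both stay online;
    - otherwise the witness backs exactly one VSA that can reach it
      (assumed here: the current leader is preferred);
    - a VSA that reaches neither its partner nor the witness goes offline.
    """
    if mirror_link_up:
        return {"VSA1", "VSA2"}
    sees_witness = {"VSA1": vsa1_sees_witness, "VSA2": vsa2_sees_witness}
    other = "VSA2" if leader == "VSA1" else "VSA1"
    for candidate in (leader, other):
        if sees_witness[candidate]:
            return {candidate}
    return set()


# (mirror_link_up, vsa1_sees_witness, vsa2_sees_witness) per scenario
scenarios = {
    "#1 VSA1-witness link down": (True, False, True),
    "#2 all mirror links down": (False, True, True),
    "#4 witness down": (True, False, False),
    "#5 VSA2 isolated": (False, True, False),
    "#6 all mirror links and witness down": (False, False, False),
}
for name, links in scenarios.items():
    print(name, "->", sorted(storage_online(*links)) or "all storage offline")
```

In this model the only case with no surviving storage is the one where the VSAs can reach neither each other nor the witness, which matches the last bullet of Scenario #6.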


After recovery of the network links:

• The VSAs negotiate leadership
• Storage is brought back online
• The VSAs will perform a fast re-synchronization of the mirrored targets and issue initiator rescans to all hosts, mounting those targets automatically
• On completion, the mirror state is marked as synchronized
• Guest virtual machines will be restarted on the servers

Scenario #7: Server failure followed by witness failure

This scenario occurs when multiple infrastructure components fail. Here a server (Server A) fails, followed by a subsequent failure of the witness or failure of communication to the witness. This is shown in fig. 10:

Fig. 10: Here, the server then the witness fails.

After the failure of Server A:

• If VSA2 was able to update the cluster state on the witness before the witness failed:
  • VSA2 remains online and is promoted to leader
  • The mirror state becomes unsynchronized
  • I/O requests are serviced by VSA2 without service interruption
• If VSA2 was NOT able to update the cluster state on the witness before the witness failed:
  • VSA2 takes its mirror plexes offline, experiencing loss of quorum

Recovery Scenario 1 - Server A is recovered first, followed by the witness:

• VSA1 re-joins the cluster; its storage will be in an unsynchronized state
• Mirrors are automatically re-synchronized
• When the witness returns to service, it is updated with the cluster and mirror state

Recovery Scenario 2 - Witness is recovered first, followed by Server A:

• The witness (NSH) is updated with the cluster and mirror status
• VSA1 re-joins the cluster
• Mirrors are re-synchronized

Scenario #8: Witness failure followed by a server failure

This scenario occurs when multiple infrastructure components fail. Here the witness or the link to the witness fails first, followed by a server (Server A) failure. This is shown in fig. 11:

Fig. 11: Here, the witness then the server fails.

During the failure period:

• The remaining VSA (VSA2) is unable to contact either its partner server or the witness
• VSA2 assumes it has become isolated and takes its mirror plexes offline to prevent data corruption
• Service disruption occurs - no I/O requests are serviced by the VSAs

Recovery Scenario 1 - Server A is recovered first, followed by the witness:

• Servers renegotiate cluster leadership
• The storage is brought back online and the mirrors are re-synchronized
• When the witness is returned to service, it is updated with the cluster and mirror state

Recovery Scenario 2 - Witness is recovered first, followed by Server A:

• VSA2 elects itself as leader and brings its mirror plexes online
• Witness is updated with the cluster and mirror status
• VSA1 re-joins the cluster and mirrors are re-synchronized

Conclusions

SvSAN, deployed with a witness, has been developed to withstand single infrastructure component failures. However, for some scenarios it is possible to tolerate multiple component failures, ensuring that service availability is maintained wherever possible.

For single component failure scenarios, SvSAN preserves cluster stability, avoiding split-brain conditions. When the failure is rectified, SvSAN automatically recovers and returns the infrastructure to the optimal state, performing a fast re-synchronization of the mirrors where possible, reducing the time frame and exposure to subsequent failures and avoiding potential service disruptions.

As shown in the failure scenarios in this white paper, SvSAN protects the integrity of the data at all costs during an infrastructure failure, while keeping the storage available.

The SvSAN witness is a key element in delivering this protection. It provides a significant competitive advantage with its ability to be located remotely and provide quorum to hundreds or even thousands of clusters. The witness communication protocol is lightweight and efficient in that it only requires a small amount of bandwidth and can tolerate very high latencies and packet losses, enabling it to be used over high latency, low bandwidth WAN links and allowing the witness to be located nearly anywhere in the world.

Storage infrastructure for edge environments should be simple, cost-effective and flexible, and SvSAN's witness is an integral part in ensuring that SvSAN is well suited to typical edge deployments such as remote sites, retail stores and branch offices.

Further Reading

There are many features that make up SvSAN, of which the witness is just one. Why not explore some of the others, such as Predictive Storage Caching, or Data Encryption? These features and more can be accessed through the extensive collection of white papers on the StorMagic website.

Additional details on SvSAN are available in the Technical Overview which details SvSAN's capabilities and deployment options.

If you're ready to test SvSAN in your environment, you can do so totally free of charge, with no obligations. Simply download our fully-functioning free trial of SvSAN from the website.

If you still have questions, or you'd like a demo of SvSAN, you can contact the StorMagic team directly by sending an email to sales@stormagic.com

StorMagic

Unit 4, Eastgate

Office Centre

Eastgate Road

Bristol

BS5 6XX

United Kingdom

+44 (0) 117 952 7396

sales@stormagic.com

www.stormagic.com
