Professional Documents

Culture Documents

Reinforcement Learning Is Direct Adaptive Optimal Control: Richard S. Sutton, Andrew G. Barto, Ronald J. Williams

Reinforcement Learning Is Direct Adaptive Optimal Control: Richard S. Sutton, Andrew G. Barto, Ronald J. Williams

Uploaded by

hewitt0724Copyright:

Available Formats

You might also like

- Tenta 210826Document3 pagesTenta 210826InetheroNo ratings yet

- A Model To Determine Customer Lifetime Value in A Retail Banking ContextDocument14 pagesA Model To Determine Customer Lifetime Value in A Retail Banking ContextSim MiyokoNo ratings yet

- Optimal State Estimation For Stochastic Systems: An Information Theoretic ApproachDocument15 pagesOptimal State Estimation For Stochastic Systems: An Information Theoretic ApproachJanett TrujilloNo ratings yet

- 从LQR角度看RL和控制Document28 pages从LQR角度看RL和控制金依菲No ratings yet

- Model-Based Adaptive Critic Designs: Editor's SummaryDocument31 pagesModel-Based Adaptive Critic Designs: Editor's SummaryioncopaeNo ratings yet

- Reinforcement Learning and Adaptive Dynamic Programming For Feedback ControlDocument19 pagesReinforcement Learning and Adaptive Dynamic Programming For Feedback ControlRVn MorNo ratings yet

- Data Driven ControlDocument2 pagesData Driven Controljain jaiinNo ratings yet

- Rasmussen Vicente Human Errors EID PDFDocument18 pagesRasmussen Vicente Human Errors EID PDFMartin GriffinNo ratings yet

- ACODS 2014 GAndradeDocument7 pagesACODS 2014 GAndradesattanic666No ratings yet

- Vedula Xu 2009Document13 pagesVedula Xu 2009Vladimir VelascoNo ratings yet

- Loquasto IECDocument47 pagesLoquasto IECAllel BradleyNo ratings yet

- Data Driven Control of Interacting Two Tank Hybrid System Using Deep Reinforcement LearningDocument7 pagesData Driven Control of Interacting Two Tank Hybrid System Using Deep Reinforcement Learningayush sharmaNo ratings yet

- Stochastic Learning and Optimization: A Sensitivity-Based ApproachDocument575 pagesStochastic Learning and Optimization: A Sensitivity-Based ApproachLuis Dario Ortíz IzquierdoNo ratings yet

- constrainedLQR Vehicle Dynamics Applications of Optimal Control TheoryDocument40 pagesconstrainedLQR Vehicle Dynamics Applications of Optimal Control TheoryBRIDGET AUHNo ratings yet

- Model Predictive ControlDocument460 pagesModel Predictive ControlHarisCausevicNo ratings yet

- Revised Paper vs12Document16 pagesRevised Paper vs12Long Trần ĐứcNo ratings yet

- A Self-Leaning Fuzzy Logic Controller Using Genetic Algorithm With ReinforcementsDocument8 pagesA Self-Leaning Fuzzy Logic Controller Using Genetic Algorithm With Reinforcementsvinodkumar57No ratings yet

- Deep Reinforcement Learning With Guaranteed Performance: Yinyan Zhang Shuai Li Xuefeng ZhouDocument237 pagesDeep Reinforcement Learning With Guaranteed Performance: Yinyan Zhang Shuai Li Xuefeng ZhouAnkit SharmaNo ratings yet

- Control TheoryDocument12 pagesControl TheorymCmAlNo ratings yet

- (IJCST-V9I5P4) :monica Veronica Crankson, Olusegun Olotu, Newton AmegbeyDocument9 pages(IJCST-V9I5P4) :monica Veronica Crankson, Olusegun Olotu, Newton AmegbeyEighthSenseGroupNo ratings yet

- PlantWIde McAvoyDocument19 pagesPlantWIde McAvoydesigat4122No ratings yet

- ACCESS3148306Document9 pagesACCESS3148306atif6421724No ratings yet

- 1 s2.0 S0167278920300026 MainDocument11 pages1 s2.0 S0167278920300026 MainJuan ZarateNo ratings yet

- 10 Chapter 2Document30 pages10 Chapter 2Cheeranjeev MishraNo ratings yet

- Dynamics Modeling of Nonholonomic Mechanical SystemsDocument13 pagesDynamics Modeling of Nonholonomic Mechanical SystemsMarcelo PereiraNo ratings yet

- Borelli Predictive Control PDFDocument424 pagesBorelli Predictive Control PDFcharlesNo ratings yet

- Gaussian Process-Based Predictive ControDocument12 pagesGaussian Process-Based Predictive ContronhatvpNo ratings yet

- Robust Model Predictive Control For A Class of Discrete-Time Markovian Jump Linear Systems With Operation Mode DisorderingDocument13 pagesRobust Model Predictive Control For A Class of Discrete-Time Markovian Jump Linear Systems With Operation Mode DisorderingnhatvpNo ratings yet

- Automatica: D. Vrabie O. Pastravanu M. Abu-Khalaf F.L. LewisDocument8 pagesAutomatica: D. Vrabie O. Pastravanu M. Abu-Khalaf F.L. LewisLộc Ong XuânNo ratings yet

- MPC BookDocument464 pagesMPC BookNilay Saraf100% (1)

- Increased Roles of Linear Algebra in Control EducationDocument15 pagesIncreased Roles of Linear Algebra in Control Educationsantoshraman89No ratings yet

- Operations Research Lecture Notes 2-Introduction To Operations Research-2Document27 pagesOperations Research Lecture Notes 2-Introduction To Operations Research-2Ülvi MamedovNo ratings yet

- Soft Computing Techniques in Electrical Engineering (Ee571) : Topic-Lateral Control For Autonomous Wheeled VehicleDocument13 pagesSoft Computing Techniques in Electrical Engineering (Ee571) : Topic-Lateral Control For Autonomous Wheeled VehicleShivam SinghNo ratings yet

- Power System StabilityDocument9 pagesPower System StabilityMyScribdddNo ratings yet

- 978 3 642 33021 6 - Chapter - 1Document18 pages978 3 642 33021 6 - Chapter - 1Salman HarasisNo ratings yet

- Book Review: International Journal of Robust and Nonlinear Control Int. J. Robust Nonlinear Control 2008 18:111-112Document2 pagesBook Review: International Journal of Robust and Nonlinear Control Int. J. Robust Nonlinear Control 2008 18:111-112rubis tarekNo ratings yet

- Research Article: Direct Data-Driven Control For Cascade Control SystemDocument11 pagesResearch Article: Direct Data-Driven Control For Cascade Control SystemNehal ANo ratings yet

- Chapter 1 - 1Document34 pagesChapter 1 - 1bezawitg2002No ratings yet

- Output Feedback Reinforcement Learning Control For Linear SystemsDocument304 pagesOutput Feedback Reinforcement Learning Control For Linear SystemsESLAM salahNo ratings yet

- BBMbook Cambridge NewstyleDocument373 pagesBBMbook Cambridge Newstylejpvieira66No ratings yet

- GpopDocument12 pagesGpopmohamed yehiaNo ratings yet

- Adaptive Volterra Filters 265Document5 pagesAdaptive Volterra Filters 265Máximo Eduardo Morales PérezNo ratings yet

- Dechter Constraint ProcessingDocument40 pagesDechter Constraint Processingnani subrNo ratings yet

- Sensors: Efficient Force Control Learning System For Industrial Robots Based On Variable Impedance ControlDocument26 pagesSensors: Efficient Force Control Learning System For Industrial Robots Based On Variable Impedance ControldzenekNo ratings yet

- Explaining Performance of The Threshold Accepting Algorithm For The Bin Packing Problem: A Causal ApproachDocument5 pagesExplaining Performance of The Threshold Accepting Algorithm For The Bin Packing Problem: A Causal ApproachlauracruzreyesNo ratings yet

- Control TheoryDocument10 pagesControl TheoryAnonymous E4Rbo2sNo ratings yet

- Learning Robot Control - 2012Document12 pagesLearning Robot Control - 2012cosmicduckNo ratings yet

- 2014 Soft-Computing-Based-On-Nonlinear-Dynamic-Systems-Possible-Foundation-Of-New-Data-Mining-SystemsDocument8 pages2014 Soft-Computing-Based-On-Nonlinear-Dynamic-Systems-Possible-Foundation-Of-New-Data-Mining-SystemsDave LornNo ratings yet

- Renewal OptimizationDocument14 pagesRenewal Optimizationbadside badsideNo ratings yet

- (Ortega2001) Putting Energy Back in ControlDocument16 pages(Ortega2001) Putting Energy Back in ControlFernando LoteroNo ratings yet

- Research Article: Impulsive Controllability/Observability For Interconnected Descriptor Systems With Two SubsystemsDocument15 pagesResearch Article: Impulsive Controllability/Observability For Interconnected Descriptor Systems With Two SubsystemsKawthar ZaidanNo ratings yet

- System IdentificationDocument6 pagesSystem Identificationjohn949No ratings yet

- Algoritmos EvolutivosDocument26 pagesAlgoritmos EvolutivosspuzzarNo ratings yet

- Attention Switching During InterruptionsDocument6 pagesAttention Switching During InterruptionsroelensNo ratings yet

- Fea Ture Selection Neural Networks Philippe Leray and Patrick GallinariDocument22 pagesFea Ture Selection Neural Networks Philippe Leray and Patrick GallinariVbg DaNo ratings yet

- Hybrid Estimation of Complex SystemsDocument14 pagesHybrid Estimation of Complex SystemsJuan Carlos AmezquitaNo ratings yet

- Using Control Theory To Achieve Service Level Objectives in Performance ManagementDocument21 pagesUsing Control Theory To Achieve Service Level Objectives in Performance ManagementJose RomeroNo ratings yet

- Prin Env ModDocument26 pagesPrin Env Modmichael17ph2003No ratings yet

- Defining Intelligent ControlDocument31 pagesDefining Intelligent ControlJULIO ERNESTO CASTRO RICONo ratings yet

- Modern Anti-windup Synthesis: Control Augmentation for Actuator SaturationFrom EverandModern Anti-windup Synthesis: Control Augmentation for Actuator SaturationRating: 5 out of 5 stars5/5 (1)

- Chapter 14 15 HomeworkDocument8 pagesChapter 14 15 HomeworkAbidure Rahman Khan 170061041No ratings yet

- Bayesian Model Averaging: A Tutorial: Jennifer A. Hoeting, David Madigan, Adrian E. Raftery and Chris T. VolinskyDocument36 pagesBayesian Model Averaging: A Tutorial: Jennifer A. Hoeting, David Madigan, Adrian E. Raftery and Chris T. VolinskyOmo NaijaNo ratings yet

- B.E in Basic Science Engineer and Maths PDFDocument2 pagesB.E in Basic Science Engineer and Maths PDFvrsafeNo ratings yet

- Federated Deep Reinforcement Learning For User Access Control in Open Radio Access NetworksDocument6 pagesFederated Deep Reinforcement Learning For User Access Control in Open Radio Access NetworksAmardip Kumar SinghNo ratings yet

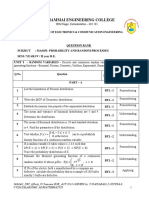

- MA6451-Probability and Random ProcessesDocument19 pagesMA6451-Probability and Random ProcessesmohanNo ratings yet

- Math ProjectDocument29 pagesMath ProjectSangeeta PandaNo ratings yet

- Ndu ThesisDocument8 pagesNdu Thesisoeczepiig100% (2)

- MIT6 262S11 Lec01Document10 pagesMIT6 262S11 Lec01Ankur BhartiyaNo ratings yet

- Spring 2006 Final SolutionDocument12 pagesSpring 2006 Final SolutionAndrew ZellerNo ratings yet

- Assignment 1Document2 pagesAssignment 1CONTACTS CONTACTSNo ratings yet

- M.E. Comm. SystemsDocument58 pagesM.E. Comm. SystemsarivasanthNo ratings yet

- 1 s2.0 S2666546821000641 MainDocument9 pages1 s2.0 S2666546821000641 MainSidharth MaheshNo ratings yet

- Analysis and Data Based Reconstruction of Complex Nonlinear Dynamical Systems Using The Methods of Stochastic Processes M. Reza Rahimi TabarDocument54 pagesAnalysis and Data Based Reconstruction of Complex Nonlinear Dynamical Systems Using The Methods of Stochastic Processes M. Reza Rahimi Tabarandrea.ramos537100% (7)

- 50 LangvillebookDocument1 page50 LangvillebookGhiță ȚipleNo ratings yet

- MIT18 445S15 Lecture8 PDFDocument11 pagesMIT18 445S15 Lecture8 PDFkrishnaNo ratings yet

- CT4 Models PDFDocument6 pagesCT4 Models PDFVignesh Srinivasan0% (1)

- Linearalgebra TT02 203Document54 pagesLinearalgebra TT02 203Trần TânNo ratings yet

- MarkovDocument34 pagesMarkovAswathy CjNo ratings yet

- VTU M4 Question PaperDocument3 pagesVTU M4 Question PaperVeda RevankarNo ratings yet

- Power Indices and The Measurement of Control in Corporate StructuresDocument16 pagesPower Indices and The Measurement of Control in Corporate Structuresdynamic openNo ratings yet

- Nonparametric Bayesian Models For Machine Learning: Romain Jean ThibauxDocument73 pagesNonparametric Bayesian Models For Machine Learning: Romain Jean ThibauxIgnatious MohanNo ratings yet

- CH 16 Markov AnalysisDocument37 pagesCH 16 Markov AnalysisRia Rahma PuspitaNo ratings yet

- Power System Planning and ReliabilityDocument58 pagesPower System Planning and ReliabilityGYANA RANJANNo ratings yet

- NB 11Document22 pagesNB 11Alexandra ProkhorovaNo ratings yet

- MT2 - Wk6 - S11 Notes - Lyapunov FunctionsDocument6 pagesMT2 - Wk6 - S11 Notes - Lyapunov FunctionsJuan ToralNo ratings yet

- Probability Via ExpectationDocument6 pagesProbability Via ExpectationMichael YuNo ratings yet

- Unit V Graphical ModelsDocument23 pagesUnit V Graphical ModelsIndumathy ParanthamanNo ratings yet

- Courant: S. R. S. VaradhanDocument16 pagesCourant: S. R. S. Varadhan马牧之0% (1)

Reinforcement Learning Is Direct Adaptive Optimal Control: Richard S. Sutton, Andrew G. Barto, Ronald J. Williams

Reinforcement Learning Is Direct Adaptive Optimal Control: Richard S. Sutton, Andrew G. Barto, Ronald J. Williams

Uploaded by

hewitt0724Original Description:

Original Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Reinforcement Learning Is Direct Adaptive Optimal Control: Richard S. Sutton, Andrew G. Barto, Ronald J. Williams

Reinforcement Learning Is Direct Adaptive Optimal Control: Richard S. Sutton, Andrew G. Barto, Ronald J. Williams

Uploaded by

hewitt0724Copyright:

Available Formats

Reinforcement Learning is Direct Adaptive Optimal Control

Richard S. Sutton, Andrew G. Barto, Ronald J. Williams

Abstract

In this paper we present a control-systems perspective on one of the major neural-network approaches to learning control, reinforcement learning. Control problems can be divided into two classes: 1) regulation and tracking problems, in which the objective is to follow a reference trajectory, and 2) optimal control problems, in which the objective is to extremize a functional of the controlled system's behavior that is not necessarily defined in terms of a reference trajectory. Adaptive methods for problems of the first kind are well known, and include self-tuning regulators and model-reference methods, whereas adaptive methods for optimal-control problems have received relatively little attention. Moreover, the adaptive optimal-control methods that have been studied are almost all indirect methods, in which controls are recomputed from an estimated system model at each step. This computation is inherently complex, making adaptive methods in which the optimal controls are estimated directly more attractive. We view reinforcement learning methods as a computationally simple, direct approach to the adaptive optimal control of nonlinear systems. For concreteness, we focus on one reinforcement learning method (Q-learning) and on its analytically proven capabilities for one class of adaptive optimal control problems (Markov decision problems with unknown transition probabilities).

Presented at 1991 American Control Conference, Boston, MA, June 26-28, 1991. Richard Sutton is with GTE Laboratories Inc., Waltham, MA 02254. Andrew Barto is with University of Massachusetts, Amherst, MA 01003. Ronald Williams is with Northeastern University, Boston, MA 02115. The authors thank Erik Ydstie, Judy Franklin, and Oliver Selfridge for discussions contributing to this article. This research was supported in part by grants to A.G. Barto from the National Science Foundation (ECS-8912623) and the Air Force Office of Scientific Research, Bolling AFB (AFOSR-89-0526), and by a grant to R.J. Williams from the National Science Foundation (IRI-8921275).

INTRODUCTION

All control problems involve manipulating a dynamical system's input so that its behavior meets a collection of specifications constituting the control objective. In some problems, the control objective is defined in terms of a reference level or reference trajectory that the controlled system's output should match or track as closely as possible. Stability is the key issue in these regulation and tracking problems. In other problems, the control objective is to extremize a functional of the controlled system's behavior that is not necessarily defined in terms of a reference level or trajectory. The key issue in the latter problems is constrained optimization; here optimal-control methods based on the calculus of variations and dynamic programming have been extensively studied. In recent years, optimal control has received less attention than regulation and tracking, which have proven to be more tractable both analytically and computationally, and which produce more reliable controls for many applications.

When a detailed and accurate model of the system to be controlled is not available, adaptive control methods can be applied. The overwhelming majority of adaptive control methods address regulation and tracking problems. However, adaptive methods for optimal control problems would be widely applicable if methods could be developed that were computationally feasible and that could be applied robustly to nonlinear systems.

Tracking problems assume prior knowledge of a reference trajectory, but for many problems the determination of a reference trajectory is an important part, if not the most important part, of the overall problem. For example, trajectory planning is a key and difficult problem in robot navigation tasks, as it is in other robot control tasks. To design a robot capable of walking bipedally, one may not be able to specify a desired trajectory for the limbs a priori, but one can specify the objective of moving forward, maintaining equilibrium, not damaging the robot, etc. Process control tasks are typically specified in terms of overall objectives such as maximizing yield or minimizing energy consumption. It is generally not possible to meet these objectives by dividing the task into separate phases for trajectory planning and trajectory tracking. Ideally, one would like to have both the trajectories and the required controls determined so as to extremize the objective function.

For both tracking and optimal control, it is usual to distinguish between indirect and direct adaptive control methods. An indirect method relies on a system identification procedure to form an explicit model of the controlled system and then determines the control rule from the model. Direct methods determine the control rule without forming such a system model.

In this paper we briefly describe learning methods known as reinforcement learning methods, and present them as a direct approach to adaptive optimal control. These methods have their roots in studies of animal learning and in early learning control work (e.g., [22]), and are now an active area of research in neural networks and machine learning (e.g., see [1,41]). We summarize here an emerging deeper understanding of these methods that is being obtained by viewing them as a synthesis of dynamic programming and stochastic approximation methods.

REINFORCEMENT LEARNING

Reinforcement learning is based on the commonsense idea that if an action is followed by a satisfactory state of affairs, or by an improvement in the state of affairs (as determined in some clearly defined way), then the tendency to produce that action is strengthened, i.e., reinforced. This idea plays a fundamental role in theories of animal learning, in parameter-perturbation adaptive-control methods (e.g., [12]), and in the theory of learning automata and bandit problems [8,26]. Extending this idea to allow action selections to depend on state information introduces aspects of feedback control, pattern recognition, and associative learning (e.g., [2,6]).

Further, it is possible to extend the idea of being "followed by a satisfactory state of affairs" to include the long-term consequences of actions. By combining methods for adjusting action-selections with methods for estimating the long-term consequences of actions, reinforcement learning methods can be devised that are applicable to control problems involving temporally extended behavior (e.g., [3,4,7,13,14,30,34,35,36,40]). Most formal results that have been obtained are for the control of Markov processes with unknown transition probabilities (e.g., [31,34]). Also relevant are formal results showing that optimal controls can often be computed using more asynchronous or incremental forms of dynamic programming than are conventionally used (e.g., [9,39,42]).

Empirical (simulation) results using reinforcement learning combined with neural networks or other associative memory structures have shown robust, efficient learning on a variety of nonlinear control problems (e.g., [5,13,19,20,24,25,29,32,38,43]). An overview of the role of reinforcement learning within neural-network approaches is provided by [1]. For a readily accessible example of reinforcement learning using neural networks the reader is referred to Anderson's article on the inverted pendulum problem [43].

Studies of reinforcement-learning neural networks in nonlinear control problems have generally focused on one of two main types of algorithm: actor-critic learning or Q-learning. An actor-critic learning system contains two distinct subsystems, one to estimate the long-term utility for each state and another to learn to choose the optimal action in each state. A Q-learning system maintains estimates of utilities for all state-action pairs and makes use of these estimates to select actions. Either of these techniques qualifies as an example of a direct adaptive optimal control algorithm, but because Q-learning is conceptually simpler, has a better-developed theory, and has been found empirically to converge faster in many cases, we elaborate on this particular technique here and omit further discussion of actor-critic learning.
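To make the structural difference between the two algorithm families concrete, the following minimal Python sketch shows only what each kind of system stores and how it picks an action. The class and attribute names (ActorCriticTables, QTable, utility, action, q, select) are hypothetical, the actor is simplified to a deterministic table, and no learning rule is shown, so this is an illustration under those assumptions rather than an implementation of any cited system.

```python
from dataclasses import dataclass, field


@dataclass
class ActorCriticTables:
    """Two subsystems: a critic (state utilities) and an actor (action choices)."""
    n_states: int
    n_actions: int
    utility: list = field(init=False)  # critic: long-term utility estimate per state
    action: list = field(init=False)   # actor: currently preferred action per state

    def __post_init__(self):
        self.utility = [0.0] * self.n_states
        self.action = [0] * self.n_states

    def select(self, x):
        # The actor answers directly; the critic would only be used during learning.
        return self.action[x]


@dataclass
class QTable:
    """A single table of utility estimates, one per state-action pair."""
    n_states: int
    n_actions: int
    q: list = field(init=False)

    def __post_init__(self):
        self.q = [[0.0] * self.n_actions for _ in range(self.n_states)]

    def select(self, x):
        # Actions are derived from the utility estimates themselves.
        return max(range(self.n_actions), key=lambda a: self.q[x][a])
```

In this simplified picture the actor-critic system keeps two entries per state, while the Q-learning system keeps one entry per state-action pair and needs no separate action table.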

Q-LEARNING

One of the simplest and most promising reinforcement learning methods is called Q-learning [34]. Consider the following finite-state, finite-action Markov decision problem. At each discrete time step, $k = 1, 2, \ldots$, the controller observes the state $x_k$ of the Markov process, selects action $a_k$, receives resultant reward $r_k$, and observes the resultant next state $x_{k+1}$. The probability distributions for $r_k$ and $x_{k+1}$ depend only on $x_k$ and $a_k$, and $r_k$ has finite expected value. The objective is to find a control rule (here a stationary control rule suffices, which is a mapping from states to actions) that maximizes at each time step the expected discounted sum of future reward. That is, at any time step $k$, the control rule should specify action $a_k$ so as to maximize

$$E\left\{ \sum_{j=0}^{\infty} \gamma^j r_{k+j} \right\},$$

where $\gamma$, $0 \le \gamma < 1$, is a discount factor.

Given a complete and accurate model of the Markov decision problem in the form of the state transition probabilities for each action and the probabilities specifying the reward process, it is possible to find an optimal control rule by applying one of several dynamic programming (DP) algorithms. If such a model is not available a priori, it could be estimated from observed rewards and state transitions, and DP could be applied using the estimated model. That would constitute an indirect adaptive control method. Most of the methods for the adaptive control of Markov processes described in the engineering literature are indirect (e.g., [10,18,21,28]).

Reinforcement learning methods such as Q-learning, on the other hand, do not estimate a system model. The basic idea in Q-learning is to estimate a real-valued function, $Q$, of states and actions, where $Q(x, a)$ is the expected discounted sum of future reward for performing action $a$ in state $x$ and performing optimally thereafter. (The name "Q-learning" comes purely from Watkins's notation.) This function satisfies the following recursive relationship (or "functional equation"):

$$Q(x, a) = E\left\{ r_k + \gamma \max_b Q(x_{k+1}, b) \;\middle|\; x_k = x,\ a_k = a \right\}.$$

An optimal control rule can be expressed in terms of $Q$ by noting that an optimal action for state $x$ is any action $a$ that maximizes $Q(x, a)$.

The Q-learning procedure maintains an estimate $\hat{Q}$ of the function $Q$. At each transition from step $k$ to $k+1$, the learning system can observe $x_k$, $a_k$, $r_k$, and $x_{k+1}$. Based on these observations, $\hat{Q}$ is updated at time step $k+1$ as follows: $\hat{Q}(x, a)$ remains unchanged for all pairs $(x, a) \ne (x_k, a_k)$ and

$$\hat{Q}(x_k, a_k) := \hat{Q}(x_k, a_k) + \alpha_k \left[ r_k + \gamma \max_b \hat{Q}(x_{k+1}, b) - \hat{Q}(x_k, a_k) \right], \tag{1}$$

where $\alpha_k$ is a gain sequence such that $0 < \alpha_k < 1$, $\sum_{k=1}^{\infty} \alpha_k = \infty$, and $\sum_{k=1}^{\infty} \alpha_k^2 < \infty$.

Watkins [34] has shown that $\hat{Q}$ converges to $Q$ with probability one if all actions continue to be tried from all states. This is a weak condition in the sense that it would have to be met by any algorithm capable of solving this problem. The simplest way to satisfy this condition while also attempting to follow the current estimate for the optimal control rule is to use a stochastic control rule that "prefers," for state $x$, the action $a$ that maximizes $\hat{Q}(x, a)$, but that occasionally selects an action at random. The probability of taking a random action can be reduced with time according to a fixed schedule. Stochastic automata methods or exploration methods such as that suggested by Sato et al. [28] can also be employed [4].

Because it does not rely on an explicit model of the Markov process, Q-learning is a direct adaptive method. It differs from the direct method of Wheeler and Narendra [37] in that their method does not estimate a value function, but constructs the control rule directly. Q-learning and other reinforcement learning methods are most closely related to, but were developed independently of, the adaptive Markov control method of Jalali and Ferguson [16], which they call "asynchronous transient programming." Asynchronous DP methods described by Bertsekas and Tsitsiklis [9] perform local updates of the value function asynchronously instead of using the systematic updating sweeps of conventional DP algorithms. Like Q-learning, asynchronous transient programming performs local updates on the state currently being visited. Unlike Q-learning, however, asynchronous transient programming is an indirect adaptive control method because it requires an explicit model of the Markov process. The Q function, on the other hand, combines information about state transitions and estimates of future reward without relying on explicit estimates of state transition probabilities. The advantage of all of these methods is that they require enormously less computation at each time step than do indirect adaptive optimal control methods using conventional DP algorithms.

REPRESENTING THE Q FUNCTION

Like conventional DP methods, the Q-learning method given by (1) requires memory and overall computation proportional to the number of state-action pairs. In large problems, or in problems with continuous state and action spaces which must be quantized, these methods become extremely complex (Bellman's "curse of dimensionality"). One approach to reducing the severity of this problem is to represent $\hat{Q}$ not as a look-up table, but as a parameterized structure such as a low-order polynomial, k-d tree, decision tree, or neural network. In general, the local update rule for $\hat{Q}$ given by (1) can be adapted for use with any method for adjusting parameters of function representations via supervised learning methods (e.g., see [11]). One can define a general way of moving from a unit of experience ($x_k$, $a_k$, $r_k$, and $x_{k+1}$, as in (1)) to a training example for $\hat{Q}$:

$$\hat{Q}(x_k, a_k) \text{ should be } r_k + \gamma \max_b \hat{Q}(x_{k+1}, b).$$

This training example can then be input to any supervised learning method, such as a parameter estimation procedure based on stochastic approximation. The choice of learning method will have a strong effect on generalization, the speed of learning, and the quality of the final result. This approach has been used successfully with supervised learning methods based on error backpropagation [19], CMACs [34], and nearest-neighbor methods [25]. Unfortunately, it is not currently known how theoretical guarantees of convergence extend to various function representations, even representations in which the estimated function values are linear in the representation's parameters. This is an important area of current research.

HYBRID DIRECT/INDIRECT METHODS

Q-learning and other reinforcement learning methods are incremental methods for performing DP using actual experience with the controlled system in place of a model of that system [7,34,36]. It is also possible to use these methods with a system model, for example, by using the model to generate hypothetical experience which is then processed by Q-learning just as if it were experience with the actual system. Further, there is nothing to prevent using reinforcement learning methods on both actual and simulated experience simultaneously. Sutton [32] has proposed a learning architecture called "Dyna" that simultaneously 1) performs reinforcement learning using actual experiences, 2) applies the same reinforcement learning method to model-generated experiences, and 3) updates the system model based on actual experiences. This is a simple and effective way to combine learning and incremental planning capabilities, an issue of increasing significance in artificial intelligence (e.g., see [15]).

CONCLUSIONS

Although its roots are in theories of animal learning developed by experimental psychologists, reinforcement learning has strong connections to theoretically justified methods for direct adaptive optimal control. When procedures for designing controls from a system model are computationally simple, as they are in linear regulation and tracking tasks, the distinction between indirect and direct adaptive methods has minor impact on the feasibility of an adaptive control method. However, when the design procedure is very costly, as it is in nonlinear optimal control, the distinction between indirect and direct methods becomes much more important. In this paper we presented reinforcement learning as an on-line DP method and a computationally inexpensive approach to direct adaptive optimal control. Methods of this kind are helping to integrate insights from animal learning [7,33], artificial intelligence [17,27,31,32], and perhaps, as we have argued here, optimal control theory.
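As a concrete illustration of update rule (1), here is a minimal tabular sketch in Python. It is not code from the cited work: the two-state process toy_step, the function names, the fixed exploration probability epsilon, and the gain choice of 1/(n+1) on the n-th visit to a state-action pair (one simple sequence that stays strictly between 0 and 1, with a divergent sum and a convergent sum of squares) are all assumptions made for illustration.

```python
import random


def toy_step(x, a, rng):
    """Hypothetical 2-state process: action 0 stays, action 1 switches state.

    The reward is 1 whenever the next state is state 1, otherwise 0.
    (rng is unused here because this toy process is deterministic.)
    """
    x_next = x if a == 0 else 1 - x
    reward = 1.0 if x_next == 1 else 0.0
    return reward, x_next


def q_learning(step, n_states, n_actions, gamma, n_steps, epsilon=0.2, seed=0):
    """Tabular Q-learning: no transition model is ever estimated."""
    rng = random.Random(seed)
    q = [[0.0] * n_actions for _ in range(n_states)]     # look-up table for Q-hat
    visits = [[0] * n_actions for _ in range(n_states)]  # visit counts per pair
    x = 0
    for _ in range(n_steps):
        # Stochastic control rule: prefer the greedy action, but
        # occasionally pick an action at random so every pair keeps being tried.
        if rng.random() < epsilon:
            a = rng.randrange(n_actions)
        else:
            a = max(range(n_actions), key=lambda b: q[x][b])
        r, x_next = step(x, a, rng)
        visits[x][a] += 1
        alpha = 1.0 / (visits[x][a] + 1)          # assumed gain: 1/2, 1/3, ...
        target = r + gamma * max(q[x_next])       # r_k + gamma * max_b Q-hat(x_{k+1}, b)
        q[x][a] += alpha * (target - q[x][a])     # update only the visited pair
        x = x_next
    return q


def greedy_rule(q):
    """Control rule that picks, in each state, an action maximizing Q-hat."""
    return [max(range(len(row)), key=lambda b: row[b]) for row in q]


if __name__ == "__main__":
    q_hat = q_learning(toy_step, n_states=2, n_actions=2, gamma=0.5, n_steps=20000)
    print(greedy_rule(q_hat))
```

In this toy process the optimal rule is to switch out of state 0 and to stay in state 1, so the greedy rule read off the learned table can be checked against it directly. Each step touches a single table entry, which is the sense in which the per-step computation is small compared with re-solving an estimated model.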

References

1] Barto, A.G. (1990) Connectionist learning for control: An overview. In: Neural Networks for Control, edited by W.T. Miller, R.S. Sutton, and P.J. Werbos, pp. 5{58, MIT Press. 2] Barto, A.G., Anandan, P. (1985) Pattern recognizing stochastic learning automata. IEEE Transactions on Systems, Man, and Cybernetics, 15:360{375. 3] Barto, A.G., Singh, S.P. (1990) Reinforcement Learning and Dynamic Programming, Proceedings of the Sixth Yale Workshop on Adaptive and Learning Systems, 83{88, Yale University, New Haven, CT. 4] Barto, A.G., Singh, S.P. (1991) On the computational economics of reinforcement learning, In Connectionist Models: Proceedings of the 1990 Summer School, edited by D. S. Touretzky, J. L. Elman, T. J. Sejnowski, and G. E. Hinton, San Mateo, CA: Morgan Kaufmann. 5] Barto, A.G., Sutton R.S., Anderson, C.W. (1983) Neuronlike elements that can solve di cult learning control problems. IEEE Trans. on Systems, Man, and Cybernetics, SMC-13, No. 5, pp. 834{846. 6] Barto, A.G., Sutton, R.S., Brouwer, P.S. (1981) Associative search network: A reinforcement learning associative memory. Biological Cybernetics, 40, 201{211. 7] Barto, A.G., Sutton, R.S., Watkins, C.J.C.H. (1990) Learning and sequential decision making. In Learning and Computational Neuroscience, M. Gabriel and J.W. Moore (Eds.), MIT Press.

CONCLUSIONS

[8] Berry, D.A., Fristedt, B. (1985) Bandit Problems: Sequential Allocation of Experiments. London: Chapman and Hall.
[9] Bertsekas, D.P., Tsitsiklis, J.N. (1989) Parallel Distributed Processing: Numerical Methods, Prentice-Hall.
[10] Borkar, V., Varaiya, P. (1979) Adaptive control of Markov chains I: Finite parameter set. IEEE Transactions on Automatic Control, 24:953–957.
[11] Duda, R.O., Hart, P.E. (1973) Pattern Classification and Scene Analysis. New York: Wiley.
[12] Eveleigh, V.W. (1967) Adaptive Control and Optimization Techniques, New York: McGraw-Hill.
[13] Grefenstette, J.J., Ramsey, C.L., & Schultz, A.C. (1990) Learning sequential decision rules using simulation models and competition. Machine Learning 5, 355–382.
[14] Hampson, S.E. (1989) Connectionist Problem Solving: Computational Aspects of Biological Learning. Boston: Birkhauser.
[15] Hendler, J. (1990) Planning in Uncertain, Unpredictable, or Changing Environments (Working notes of the 1990 AAAI Spring Symposium), edited by J. Hendler, Technical Report SRC-TR-90-45, University of Maryland, College Park, MD.
[16] Jalali, A., Ferguson, M. (1989) Computationally efficient adaptive control algorithms for Markov chains, Proceedings of the 28th Conference on Decision and Control, 1283–1288.
[17] Korf, R.E. (1990) Real-Time Heuristic Search. Artificial Intelligence 42: 189–211.
[18] Kumar, P.R., Lin, W. (1982) Optimal adaptive controllers for unknown Markov chains. IEEE Transactions on Automatic Control, 25:765–774.
[19] Lin, Long-Ji. (1991) Self-improving reactive agents: Case studies of reinforcement learning frameworks. In: Proceedings of the International Conference on the Simulation of Adaptive Behavior, MIT Press.
[20] Mahadevan, S., Connell, J. (1990) Automatic programming of behavior-based robots using reinforcement learning. IBM technical report.
[21] Mandl, P. (1974) Estimation and control in Markov chains. Advances in Applied Probability, 6:40–60.
[22] Mendel, J.M., McLaren, R.W. (1970) Reinforcement learning control and pattern recognition systems. In: Adaptive, Learning and Pattern Recognition Systems: Theory and Applications, edited by J.M. Mendel & K.S. Fu, pp. 287–318. New York: Academic Press.
[24] Millington, P.J. (1991) Associative Reinforcement Learning for Optimal Control, M.S. Thesis, Massachusetts Institute of Technology, Technical Report CSDL-T-1070.
[25] Moore, A.W. (1990) Efficient Memory-Based Learning for Robot Control. PhD thesis, Cambridge University Computer Science Department.
[26] Narendra, K.S., Thathachar, M.A.L. (1989) Learning Automata: An Introduction. Prentice Hall, Englewood Cliffs, NJ.
[27] Samuel, A.L. (1959) Some studies in machine learning using the game of checkers. IBM Journal on Research and Development, 3, 210–229. (Reprinted in Computers and Thought, edited by E.A. Feigenbaum & J. Feldman, pp. 71–105. New York: McGraw-Hill, 1963.)
[28] Sato, M., Abe, K., Takeda, H. (1988) Learning control of finite Markov chains with explicit trade-off between estimation and control. IEEE Transactions on Systems, Man, and Cybernetics, 18:677–684.
[29] Selfridge, O.G., Sutton, R.S., Barto, A.G. (1985) Training and Tracking in Robotics, Proceedings of IJCAI-85, International Joint Conference on Artificial Intelligence, pp. 670–672.

[30] Sutton, R.S. (1984) Temporal credit assignment in reinforcement learning. Doctoral dissertation, Department of Computer and Information Science, University of Massachusetts, Amherst, MA 01003.
[31] Sutton, R.S. (1988) Learning to predict by the methods of temporal differences. Machine Learning 3: 9–44.
[32] Sutton, R.S. (1990) First results with Dyna: An integrated architecture for learning, planning, and reacting. Proceedings of the 1990 AAAI Spring Symposium on Planning in Uncertain, Unpredictable, or Changing Environments.
[33] Sutton, R.S., Barto, A.G. (1990) Time-derivative models of Pavlovian reinforcement. In Learning and Computational Neuroscience, M. Gabriel and J.W. Moore (Eds.), MIT Press.
[34] Watkins, C.J.C.H. (1989) Learning with Delayed Rewards. PhD thesis, Cambridge University Psychology Department.
[35] Werbos, P.J. (1987) Building and understanding adaptive systems: A statistical/numerical approach to factory automation and brain research. IEEE Transactions on Systems, Man, and Cybernetics, Jan-Feb.
[36] Werbos, P.J. (1989) Neural networks for control and system identification. In Proceedings of the 28th Conference on Decision and Control, pages 260–265.
[37] Wheeler, R.M., Narendra, K.S. (1986) Decentralized learning in finite Markov chains. IEEE Transactions on Automatic Control, 31:519–526.
[38] Whitehead, S.D., Ballard, D.H. (in press) Learning to perceive and act by trial and error. Machine Learning.
[39] Williams, R.J., Baird, L.C. (1990) A mathematical analysis of actor-critic architectures for learning optimal controls through incremental dynamic programming, Proceedings of the Sixth Yale Workshop on Adaptive and Learning Systems, 96–101, Yale University, New Haven, CT.
[40] Witten, I.H. (1977) An adaptive optimal controller for discrete-time Markov environments. Information and Control, 34:286–295.
[41] Sutton, R.S., Ed. (1992) Machine Learning 8, No. 3/4 (special issue on Reinforcement Learning), Kluwer Academic.
[42] Barto, A.G., Bradtke, S.J., Singh, S.P. (1991) Real-time learning and control using asynchronous dynamic programming. University of Massachusetts at Amherst Technical Report 91-57.
[43] Anderson, C.W. (1989) Learning to control an inverted pendulum using neural networks. Control Systems Magazine, 31–37, April.
