Agents

Uploaded by Taha Ali

There are five main types of AI agents: simple reflex agents, model-based reflex agents, goal-based agents, utility-based agents, and learning agents. The environments used to test agents are commonly classified as fully or partially observable, deterministic or stochastic, episodic or sequential, static or dynamic, and discrete or continuous.

Copyright: © All Rights Reserved

Simple reflex agents: These agents are the most basic type of AI agent. They select an action based solely on the current percept, typically by matching it against condition-action (if-then) rules, and ignore the history of earlier percepts.

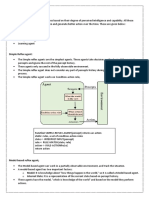
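A simple reflex agent can be sketched with the classic two-square vacuum world. The locations "A" and "B", the (location, status) percept format, and the action names below are illustrative assumptions, not part of the original notes. The agent is a pure function of the current percept: it keeps no memory.

```python
from typing import Tuple

# Hypothetical percept format: (location, status), e.g. ("A", "Dirty").
Percept = Tuple[str, str]


def simple_reflex_vacuum_agent(percept: Percept) -> str:
    """Pick an action from the current percept alone; nothing is remembered."""
    location, status = percept
    # Condition-action rules, checked in order.
    if status == "Dirty":
        return "Suck"
    if location == "A":
        return "Right"
    return "Left"


if __name__ == "__main__":
    for p in [("A", "Dirty"), ("A", "Clean"), ("B", "Clean")]:
        print(p, "->", simple_reflex_vacuum_agent(p))
```

Because it has no memory, this agent keeps moving between the squares forever once both are clean; it cannot know that the other square is already clean.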

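Model-based reflex agents: These agents are similar to simple reflex agents, but they also maintain an internal model of the environment. Using the percept history and knowledge of how the world changes (including how their own actions affect it), they keep track of parts of the state they cannot currently observe, which helps them make more informed decisions.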
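A minimal sketch of a model-based reflex agent, again in a hypothetical two-square vacuum world. The internal model is just a dictionary recording the last known status of each square; it assumes dirt never reappears once cleaned, so the agent can stop (`"NoOp"`) when its model says everything is clean.

```python
from typing import Dict, Optional, Tuple

Percept = Tuple[str, str]  # hypothetical: (location, status)


class ModelBasedVacuumAgent:
    """Reflex agent that keeps an internal model of squares it cannot currently see."""

    def __init__(self) -> None:
        # Internal state: last known status of each square (None = not yet seen).
        self.model: Dict[str, Optional[str]] = {"A": None, "B": None}

    def __call__(self, percept: Percept) -> str:
        location, status = percept
        # 1. Update the internal state from the new percept.
        self.model[location] = status
        # 2. Apply rules to the model, not just to the current percept.
        if status == "Dirty":
            # Assumed effect of the action: sucking leaves the square clean.
            self.model[location] = "Clean"
            return "Suck"
        if all(s == "Clean" for s in self.model.values()):
            return "NoOp"  # everything is known to be clean, so stop moving
        return "Right" if location == "A" else "Left"


if __name__ == "__main__":
    agent = ModelBasedVacuumAgent()
    for p in [("A", "Dirty"), ("A", "Clean"), ("B", "Clean")]:
        print(p, "->", agent(p))
```

Unlike the memoryless version, this agent answers the same percept `("B", "Clean")` differently depending on what it has seen before.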

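Goal-based agents: These agents have a specific goal or objective that they are trying to achieve. Beyond reacting to the current percept, they consider the future consequences of possible actions (for example, through search or planning) and choose actions that move them toward the goal.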
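One way to sketch a goal-based agent is to have it plan a route to its goal and then take the first step of that plan. Here breadth-first search is used as the planner, and the small room map (`rooms`) is a hypothetical transition model; real goal-based agents may use other search or planning methods.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

# Hypothetical transition model: state -> {action: resulting state}.
Graph = Dict[str, Dict[str, str]]


def plan_to_goal(graph: Graph, start: str, goal: str) -> Optional[List[str]]:
    """Breadth-first search for a shortest action sequence from start to goal."""
    frontier = deque([start])
    # For each reached state, remember the (previous state, action) that led to it.
    parent: Dict[str, Optional[Tuple[str, str]]] = {start: None}
    while frontier:
        state = frontier.popleft()
        if state == goal:
            # Walk back through the parents to rebuild the plan.
            plan: List[str] = []
            step = parent[state]
            while step is not None:
                prev, action = step
                plan.append(action)
                step = parent[prev]
            return plan[::-1]
        for action, nxt in graph.get(state, {}).items():
            if nxt not in parent:
                parent[nxt] = (state, action)
                frontier.append(nxt)
    return None  # goal unreachable


class GoalBasedAgent:
    def __init__(self, graph: Graph, goal: str) -> None:
        self.graph = graph
        self.goal = goal

    def act(self, state: str) -> str:
        # Look ahead: plan a route to the goal, then take its first step.
        plan = plan_to_goal(self.graph, state, self.goal)
        return plan[0] if plan else "NoOp"  # already at the goal, or no route found


if __name__ == "__main__":
    rooms: Graph = {
        "Hall": {"north": "Kitchen", "east": "Study"},
        "Kitchen": {"east": "Pantry"},
        "Study": {"north": "Pantry"},
        "Pantry": {},
    }
    print(plan_to_goal(rooms, "Hall", "Pantry"))  # ['north', 'east']
    print(GoalBasedAgent(rooms, "Pantry").act("Hall"))  # north
```

Changing the goal changes the agent's behaviour without rewriting any rules, which is the main advantage of a goal-based design over a reflex one.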

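Utility-based agents: These agents make decisions using a utility function, which assigns a numerical value to each possible outcome to express how desirable it is. They choose the action that maximizes the expected utility, that is, the sum of each outcome's utility weighted by its probability.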
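Expected-utility maximization can be sketched in a few lines. The route-choice example, its outcome probabilities, and the utility values are all made up for illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical outcome model: action -> list of (probability, outcome).
OutcomeModel = Dict[str, List[Tuple[float, str]]]


def expected_utility(action: str, model: OutcomeModel, utility: Dict[str, float]) -> float:
    """Probability-weighted sum of the utilities of an action's possible outcomes."""
    return sum(p * utility[outcome] for p, outcome in model[action])


def choose_action(model: OutcomeModel, utility: Dict[str, float]) -> str:
    """Return the action with the highest expected utility."""
    return max(model, key=lambda a: expected_utility(a, model, utility))


if __name__ == "__main__":
    routes: OutcomeModel = {
        "highway": [(0.8, "on_time"), (0.2, "very_late")],
        "back_roads": [(1.0, "slightly_late")],
    }
    utility = {"on_time": 10.0, "slightly_late": 6.0, "very_late": -5.0}
    for a in routes:
        print(a, expected_utility(a, routes, utility))
    print("chosen:", choose_action(routes, utility))
```

With these numbers the highway scores 0.8 × 10 + 0.2 × (−5) = 7 against 6 for the back roads. If being very late were much worse (say −20), the highway would drop to 4 and the safer route would win, even though the probabilities have not changed.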

Learning agents: These agents are capable of learning from their experience. They use feedback on the results of their actions, often through machine learning algorithms, to improve their performance over time.
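As one simple illustration of learning from feedback, the sketch below uses epsilon-greedy action-value estimation (a basic reinforcement-learning technique swapped in here for illustration; the notes do not name a specific algorithm). The actions, payoffs, and noise in the demo environment are hypothetical.

```python
import random
from typing import Dict, List


class LearningAgent:
    """Epsilon-greedy agent that learns the average reward of each action."""

    def __init__(self, actions: List[str], epsilon: float = 0.1, seed: int = 0) -> None:
        self.values: Dict[str, float] = {a: 0.0 for a in actions}  # learned estimates
        self.counts: Dict[str, int] = {a: 0 for a in actions}
        self.epsilon = epsilon
        self.rng = random.Random(seed)

    def act(self) -> str:
        # Explore with probability epsilon; otherwise exploit the best estimate so far.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.values))
        return max(self.values, key=self.values.get)

    def learn(self, action: str, reward: float) -> None:
        # Feedback step: move the estimate toward the observed reward (running mean).
        self.counts[action] += 1
        self.values[action] += (reward - self.values[action]) / self.counts[action]


if __name__ == "__main__":
    # Hypothetical environment: action "b" pays more on average than "a".
    env_rng = random.Random(1)
    payoff = {"a": 1.0, "b": 2.0}
    agent = LearningAgent(["a", "b"], epsilon=0.1)
    for _ in range(500):
        action = agent.act()
        agent.learn(action, payoff[action] + env_rng.gauss(0.0, 0.5))
    print(agent.values, agent.counts)
```

The occasional random exploration matters: without it, the agent could keep repeating whichever action happened to look good first and never discover a better one.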

Environments used in AI to test and evaluate agents are commonly classified along several dimensions. Here are the most common types:

Fully observable environments: In this type of environment, the agent can observe the entire state of the environment at each time step.

Partially observable environments: In this type of environment, the agent can only observe a portion of the environment's state. This often requires the agent to maintain an internal state representation.

Deterministic environments: In this type of environment, the outcome of each action is fully determined by the current state of the environment and the action the agent takes.

Stochastic environments: In this type of environment, the outcome of each action has some degree of randomness or uncertainty.
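The difference between deterministic and stochastic environments shows up in the transition function. The sketch assumes a hypothetical one-dimensional corridor where the agent moves "left" or "right", and in the stochastic version a move "slips" (fails) with probability `slip_prob`, an illustrative parameter.

```python
import random


def deterministic_step(position: int, action: str) -> int:
    """The same state and action always produce the same next state."""
    return position + (1 if action == "right" else -1)


def stochastic_step(position: int, action: str, rng: random.Random, slip_prob: float = 0.2) -> int:
    """With probability slip_prob the move fails and the agent stays where it is."""
    if rng.random() < slip_prob:
        return position
    return deterministic_step(position, action)


if __name__ == "__main__":
    rng = random.Random(42)
    print([deterministic_step(0, "right") for _ in range(5)])
    print([stochastic_step(0, "right", rng) for _ in range(5)])
```

Repeating the same action from the same state always gives 1 in the deterministic case, while the stochastic case sometimes returns 0, so an agent acting in it has to reason about probabilities of outcomes.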

Episodic environments: In this type of environment, the agent's experience is divided into discrete episodes, each with a clear start and end point. The action chosen in one episode does not depend on the actions taken in previous episodes.

Sequential environments: In this type of environment, the agent's interaction with the environment is not split into independent episodes; the current decision can affect all future decisions.

Static environments: In this type of environment, the environment does not change while the agent is deciding what to do.

Dynamic environments: In this type of environment, the environment can change while the agent is deliberating, for example because of other agents or external factors.

Discrete environments: In this type of environment, the agent's actions and the environment's state are represented by discrete variables.

Continuous environments: In this type of environment, the agent's actions and the environment's state are represented by continuous variables.
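Since each dimension above is a pair of opposites, an environment can be summarized as a set of yes/no properties. The sketch below is a hypothetical way to record that; the non-episodic case is labelled "sequential", the usual term for it. The two examples are common classroom classifications, and real environments can be debatable on some dimensions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EnvironmentProfile:
    """Where an environment falls on each of the dimensions listed above."""
    name: str
    fully_observable: bool
    deterministic: bool
    episodic: bool
    static: bool
    discrete: bool

    def describe(self) -> str:
        return ", ".join([
            "fully observable" if self.fully_observable else "partially observable",
            "deterministic" if self.deterministic else "stochastic",
            "episodic" if self.episodic else "sequential",
            "static" if self.static else "dynamic",
            "discrete" if self.discrete else "continuous",
        ])


CROSSWORD = EnvironmentProfile("crossword puzzle", fully_observable=True, deterministic=True,
                               episodic=False, static=True, discrete=True)
TAXI = EnvironmentProfile("taxi driving", fully_observable=False, deterministic=False,
                          episodic=False, static=False, discrete=False)

if __name__ == "__main__":
    for env in (CROSSWORD, TAXI):
        print(f"{env.name}: {env.describe()}")
```

The crossword sits at the "easy" end of almost every dimension, while taxi driving sits at the hard end of all of them, which is why it needs the more capable agent designs described earlier.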
