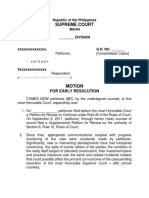

CME 241: Reinforcement Learning for

Stochastic Control Problems in Finance

Ashwin Rao

ICME, Stanford University

Winter 2021

Ashwin Rao (Stanford) “RL for Finance” course Winter 2021 1 / 35

Meet your Instructor

Joined Stanford ICME as Adjunct Faculty in Fall 2018

Research Interests: A.I. for Dynamic Decisioning under Uncertainty

Technical mentor to ICME students, partnerships with industry

Educational background: Algorithms Theory & Abstract Algebra

10 years at Goldman Sachs (NY) Rates/Mortgage Derivatives Trading

4 years at Morgan Stanley as Managing Director - Market Modeling

Founded Tech Startup ZLemma, Acquired by hired.com in 2015

One of our products was algorithmic jobs/career guidance for students

Teaching experience: Pure & Applied Math, CompSci, Finance, Mgmt

Current Industry Job: V.P. of A.I. at Target (the Retail company)

Requirements and Setup

Pre-requisites:

Undergraduate-level background in Applied Mathematics (Multivariate

Analysis, Linear Algebra, Probability, Optimization)

Background in data structures/algorithms, fluency with numpy

Basic familiarity with Pricing, Portfolio Mgmt and Algo Trading, but

we will do an overview of the requisite Finance/Economics

No background required in MDP, DP, RL (we will cover from scratch)

Here’s last year’s final exam to get a sense of course difficulty

Register for the course on Piazza

Install Python 3 and supporting IDE/tools (e.g., PyCharm, Jupyter)

Install LaTeX/Markdown and supporting editor for tech writing

Assignments and code in my book based on this open-source code

Fork this repo and get set up to use this code in assignments

Create separate directories for each assignment for CA (Sven Lerner)

to review - send Sven your forked repo URL and git push often

Housekeeping

Grade based on:

30% 48-hour Mid-Term Exam (on Theory, Modeling, Programming)

40% 48-hour Final Exam (on Theory, Modeling, Programming)

30% Assignments: Technical Writing and Programming

Lectures (on Zoom): Wed & Fri 4:00pm - 5:20pm, Jan 13 - Mar 19

Office Hours 1-4pm Fri (or by appointment) on Zoom

Course Web Site: cme241.stanford.edu

Ask Questions and engage in Discussions on Piazza

My e-mail: ashwin.rao@stanford.edu

Purpose and Grading of Assignments

Assignments shouldn’t be treated as “tests” with right/wrong answer

Rather, they should be treated as part of your learning experience

You will truly understand ideas/models/algorithms only when you

write down the Mathematics and the Code precisely

Simply reading Math/Code gives you a false sense of understanding

Take the initiative to make up your own assignments

Especially on topics you feel you don’t quite understand

Individual assignments won’t get a grade and there are no due dates

The CA will review once every 2 weeks and provide feedback

It will be graded less on correctness and completeness, and more on:

Coding and Technical Writing style that is clear and modular

Demonstration of curiosity and commitment to learning through the

overall body of assignments work

Engagement in asking questions and seeking feedback for improvements

Resources

Course based on the (incomplete) book I am currently writing

Supplementary/Optional reading: Sutton-Barto’s RL book

I prepare slides for each lecture (“guided tour” of respective chapter)

A couple of lecture slides are from David Silver’s RL course

Code in my book based on this open-source code

Reading this code is as important as reading the theory

We will go over some classical papers on the Finance applications

Some supplementary/optional papers from Finance/RL

All resources organized on the course web site (“source of truth”)

Stanford Honor Code - For Assignments versus Exams

Assignments: You can discuss solution approaches with other students

Because assignments are graded more for effort than correctness

Writing (answers/code) should be your own (don’t copy/paste)

You can invoke the core modules I have written (as instructed)

Exams: You cannot engage in any conversation with other students

Write to the CA if a question is unclear

Exams are graded on correctness and completeness

So don’t ask for help on how to solve exam questions

Open-internet Exams: Search for concepts, not answers to exam Qs

If you accidentally run into a strong hint/answer, state it honestly

A.I. for Dynamic Decisioning under Uncertainty

Let’s browse some terms used to characterize this branch of A.I.

Stochastic: Uncertainty in key quantities, evolving over time

Optimization: A well-defined metric to be maximized (“The Goal”)

Dynamic: Decisions need to be a function of the changing situations

Control: Overpower uncertainty by persistent steering towards goal

Jargon overload due to confluence of Control Theory, O.R. and A.I.

For language clarity, let’s just refer to this area as Stochastic Control

The core framework is called Markov Decision Processes (MDP)

Reinforcement Learning is a class of algorithms to solve MDPs

The MDP Framework

Components of the MDP Framework

The Agent and the Environment interact in a time-sequenced loop

Agent responds to [State, Reward] by taking an Action

Environment responds by producing next step’s (random) State

Environment also produces a (random) scalar denoted as Reward

Each State is assumed to have the Markov Property, meaning:

Next State/Reward depends only on Current State (for a given Action)

Current State captures all relevant information from History

Current State is a sufficient statistic of the future (for a given Action)

Goal of Agent is to maximize Expected Sum of all future Rewards

By controlling the (Policy : State → Action) function

This is a dynamic (time-sequenced control) system under uncertainty
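The Agent/Environment loop described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the noisy-walk environment, the reward of minus the distance from 0, and the names `environment_step`, `steer_to_zero` and `run_episode` are all hypothetical and not taken from the course code.

```python
import random
from typing import Callable, Tuple

State = int
Action = int


def environment_step(state: State, action: Action,
                     rng: random.Random) -> Tuple[State, float]:
    """Hypothetical Environment: a noisy walk on the integers.

    The next State and the Reward depend only on the current State and the
    Action taken, which is the Markov Property.
    """
    noise = rng.choice([-1, 0, 1])
    next_state = state + action + noise
    reward = -float(abs(next_state))  # staying close to 0 is rewarded
    return next_state, reward


def steer_to_zero(state: State) -> Action:
    """A deterministic Policy (State -> Action) that pushes towards 0."""
    if state > 0:
        return -1
    if state < 0:
        return 1
    return 0


def run_episode(policy: Callable[[State], Action], start: State,
                num_steps: int, seed: int) -> float:
    """Run the time-sequenced loop and return the (undiscounted) sum of Rewards."""
    rng = random.Random(seed)
    state, total = start, 0.0
    for _ in range(num_steps):
        action = policy(state)                                # Agent acts
        state, reward = environment_step(state, action, rng)  # Environment responds
        total += reward
    return total
```

Running `run_episode(steer_to_zero, 20, 10, seed=0)` against a do-nothing policy such as `lambda s: 0` with the same seed shows how the choice of Policy alone changes the sum of Rewards.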

Formal MDP Framework

The following notation is for discrete time steps. Continuous-time

formulation is analogous (often involving Stochastic Calculus)

Time steps denoted as $t = 1, 2, 3, \ldots$

Markov States $S_t \in \mathcal{S}$ where $\mathcal{S}$ is the State Space

Actions $A_t \in \mathcal{A}$ where $\mathcal{A}$ is the Action Space

Rewards $R_t \in \mathbb{R}$ denoting numerical feedback

Transitions $p(r, s' \mid s, a) = \mathbb{P}[(R_{t+1} = r, S_{t+1} = s') \mid S_t = s, A_t = a]$

$\gamma \in [0, 1]$ is the Discount Factor for Reward when defining Return

Return $G_t = R_{t+1} + \gamma \cdot R_{t+2} + \gamma^2 \cdot R_{t+3} + \ldots$

Policy $\pi(a \mid s)$ is the probability that Agent takes action $a$ in state $s$

The goal is to find a policy that maximizes $\mathbb{E}[G_t \mid S_t = s]$ for all $s \in \mathcal{S}$
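The Return defined above can be computed from a finite list of rewards with a single backward pass. The following is a minimal sketch, assuming the episode ends after the listed rewards; the function name `discounted_return` is illustrative and not part of the course code.

```python
from typing import Sequence


def discounted_return(rewards: Sequence[float], gamma: float) -> float:
    """Return G_t = R_{t+1} + gamma * R_{t+2} + gamma^2 * R_{t+3} + ...

    rewards[0] plays the role of R_{t+1}. Walking the list backwards applies
    the recursion G_t = R_{t+1} + gamma * G_{t+1} once per reward.
    """
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g
```

The same recursion, taken in expectation, is what the Bellman equations later in these slides express.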

How a baby learns to walk

Many real-world problems fit this MDP framework

Self-driving vehicle (speed/steering to optimize safety/time)

Game of Chess (Boolean Reward at end of game)

Complex Logistical Operations (e.g., movements in a Warehouse)

Make a humanoid robot walk/run on difficult terrains

Manage an investment portfolio

Control a power station

Optimal decisions during a football game

Strategy to win an election (high-complexity MDP)

Self-Driving Vehicle

Why are these problems hard?

State space can be large or complex (involving many variables)

Sometimes, Action space is also large or complex

No direct feedback on “correct” Actions (only feedback is Reward)

Time-sequenced complexity (Actions influence future States/Actions)

Actions can have delayed consequences (late Rewards)

Agent often doesn’t know the Model of the Environment

“Model” refers to probabilities of state-transitions and rewards

So, Agent has to learn the Model AND solve for the Optimal Policy

Agent Actions need to trade off between “explore” and “exploit”

Value Function and Bellman Equations

Value function (under policy $\pi$) $V_\pi(s) = \mathbb{E}[G_t \mid S_t = s]$ for all $s \in \mathcal{S}$

$$V_\pi(s) = \sum_a \pi(a \mid s) \sum_{r, s'} p(r, s' \mid s, a) \cdot (r + \gamma V_\pi(s')) \text{ for all } s \in \mathcal{S}$$

Optimal Value Function $V_*(s) = \max_\pi V_\pi(s)$ for all $s \in \mathcal{S}$

$$V_*(s) = \max_a \sum_{r, s'} p(r, s' \mid s, a) \cdot (r + \gamma V_*(s')) \text{ for all } s \in \mathcal{S}$$

There exists an Optimal Policy $\pi_*$ achieving $V_*(s)$ for all $s \in \mathcal{S}$

Determining $V_\pi(s)$ known as Prediction, and $V_*(s)$ known as Control

The above recursive equations are called Bellman equations

In continuous time, referred to as Hamilton-Jacobi-Bellman (HJB)

The algorithms based on Bellman equations are broadly classified as:

Dynamic Programming

Reinforcement Learning
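When the transition probabilities are known, both Bellman equations can be solved by repeatedly applying their right-hand sides until the values stop changing. The sketch below does this for Prediction (`policy_evaluation`) and Control (`value_iteration`). The two-state model, its rewards, and all names here are hypothetical, chosen only so the numbers can be checked by hand.

```python
from typing import Dict, List, Tuple

# MODEL[(s, a)] lists (probability, reward, next_state): a tabular p(r, s' | s, a).
# The states, actions and numbers are hypothetical.
Model = Dict[Tuple[str, str], List[Tuple[float, float, str]]]

STATES = ["low", "high"]
ACTIONS = ["wait", "invest"]
MODEL: Model = {
    ("low", "wait"): [(1.0, 0.0, "low")],
    ("low", "invest"): [(0.5, -1.0, "low"), (0.5, -1.0, "high")],
    ("high", "wait"): [(0.9, 2.0, "high"), (0.1, 2.0, "low")],
    ("high", "invest"): [(1.0, 1.0, "high")],
}


def q_value(v: Dict[str, float], s: str, a: str, gamma: float) -> float:
    """Inner sum of the Bellman equations: sum over (r, s') of p * (r + gamma * V(s'))."""
    return sum(p * (r + gamma * v[s2]) for p, r, s2 in MODEL[(s, a)])


def policy_evaluation(policy: Dict[str, Dict[str, float]], gamma: float,
                      tol: float = 1e-10) -> Dict[str, float]:
    """Prediction: iterate the Bellman equation for V_pi, with policy[s][a] = pi(a|s)."""
    v = {s: 0.0 for s in STATES}
    while True:
        new_v = {s: sum(policy[s][a] * q_value(v, s, a, gamma) for a in ACTIONS)
                 for s in STATES}
        if max(abs(new_v[s] - v[s]) for s in STATES) < tol:
            return new_v
        v = new_v


def value_iteration(gamma: float, tol: float = 1e-10) -> Dict[str, float]:
    """Control: iterate the Bellman optimality equation for V_*."""
    v = {s: 0.0 for s in STATES}
    while True:
        new_v = {s: max(q_value(v, s, a, gamma) for a in ACTIONS) for s in STATES}
        if max(abs(new_v[s] - v[s]) for s in STATES) < tol:
            return new_v
        v = new_v


def greedy_policy(v: Dict[str, float], gamma: float) -> Dict[str, str]:
    """Read a deterministic policy off a value function by maximizing over actions."""
    return {s: max(ACTIONS, key=lambda a: q_value(v, s, a, gamma)) for s in STATES}
```

For this toy model with a discount factor of 0.9, `greedy_policy(value_iteration(0.9), 0.9)` picks "invest" in the low state and "wait" in the high state. Both loops converge because the discount factor is strictly below 1.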

Dynamic Programming versus Reinforcement Learning

When Probabilities Model is known ⇒ Dynamic Programming (DP)

DP Algorithms take advantage of knowledge of probabilities

So, DP Algorithms do not require interaction with the environment

In the Language of A.I., DP is a type of Planning Algorithm

When Probabilities Model unknown ⇒ Reinforcement Learning (RL)

RL Algorithms interact with the Environment and incrementally learn

Environment interaction could be real or simulated interaction

RL approach: Try different actions & learn what works, what doesn’t

RL Algorithms’ key challenge is to trade off “explore” versus “exploit”

DP or RL, Good approximation of Value Function is vital to success

Deep Neural Networks are typically used for function approximation
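To make the contrast with DP concrete, here is a minimal sketch of tabular Q-Learning (one of the RL Control algorithms listed in the schedule) with an epsilon-greedy explore/exploit rule. The agent only calls `simulate_step` and never reads the model's probabilities. The four-state chain environment, the hyperparameters and all names are hypothetical. A table is used instead of a neural network to keep the example small.

```python
import random
from typing import Dict, Tuple

GOAL = 3                      # states are 0..3; state 3 is terminal
ACTIONS = ("left", "right")


def simulate_step(state: int, action: str) -> Tuple[float, int]:
    """Simulated Environment: the agent only observes (reward, next_state)."""
    next_state = max(0, state - 1) if action == "left" else state + 1
    reward = 1.0 if next_state == GOAL else 0.0
    return reward, next_state


def epsilon_greedy(q: Dict[int, Dict[str, float]], state: int,
                   epsilon: float, rng: random.Random) -> str:
    """Explore with probability epsilon, otherwise exploit (ties broken at random)."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    best = max(q[state].values())
    return rng.choice([a for a in ACTIONS if q[state][a] == best])


def q_learning(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
               epsilon: float = 0.2, seed: int = 0) -> Dict[int, Dict[str, float]]:
    """Learn action values incrementally from simulated interaction."""
    rng = random.Random(seed)
    q = {s: {a: 0.0 for a in ACTIONS} for s in range(GOAL + 1)}
    for _episode in range(episodes):
        state = 0
        for _step in range(200):          # cap on episode length
            action = epsilon_greedy(q, state, epsilon, rng)
            reward, next_state = simulate_step(state, action)
            # Sampled version of the Bellman optimality backup
            target = reward + gamma * max(q[next_state].values())
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if state == GOAL:
                break
    return q
```

After training, the greedy action in every non-terminal state is "right", and the learned value of moving right from state 2 approaches the reward of 1.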

Why is RL interesting/useful to learn about?

RL solves MDP problem when Environment Probabilities are unknown

This is typical in real-world problems (complex/unknown probabilities)

RL interacts with Actual Environment or with Simulated Environment

Promise of modern A.I. is based on success of RL algorithms

Potential for automated decision-making in many industries

In 10-20 years: Bots that act or behave more optimally than humans

RL already solves various low-complexity real-world problems

RL might soon be the most-desired skill in the technical job-market

Possibilities in Finance are endless (we cover 5 important problems)

Learning RL is a lot of fun! (interesting in theory as well as coding)

Many Faces of Reinforcement Learning

Vague (but in-vogue) Classification of Machine Learning

Overview of the Course

Theory of Markov Decision Processes (MDPs)

Dynamic Programming (DP) Algorithms

Approximate DP and Backward Induction Algorithms

Reinforcement Learning (RL) Algorithms

Plenty of Python implementations of models and algorithms

Apply these algorithms to 5 Financial/Trading problems:

(Dynamic) Asset-Allocation to maximize Utility of Consumption

Pricing and Hedging of Derivatives in an Incomplete Market

Optimal Exercise/Stopping of Path-dependent American Options

Optimal Trade Order Execution (managing Price Impact)

Optimal Market-Making (Bids and Asks managing Inventory Risk)

By treating each of the problems as MDPs (i.e., Stochastic Control)

We will go over classical/analytical solutions to these problems

Then introduce real-world considerations, and tackle with RL (or DP)

Course blends Theory/Math, Algorithms/Coding, Real-World Finance

Optimal Asset Allocation to Maximize Consumption Utility

You can invest in (allocate wealth to) a collection of assets

Investment horizon is a fixed length of time

Each risky asset characterized by a probability distribution of returns

Periodically, you are allowed to re-allocate your wealth to the various assets

Transaction Costs & Constraints on trading hours/quantities/shorting

Allowed to consume a fraction of your wealth at specific times

Dynamic Decision: Time-Sequenced Allocation & Consumption

To maximize horizon-aggregated Risk-Adjusted Consumption

Risk-Adjustment involves a study of Utility Theory
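As a small illustration of risk-adjustment, the sketch below uses the CRRA (constant relative risk-aversion) utility family. This is one common choice and an assumption here, since the slide does not name a specific utility function. It computes the certainty equivalent of an uncertain consumption amount, meaning the sure amount with the same expected utility. The function names are illustrative.

```python
import math
from typing import Sequence


def crra_utility(x: float, risk_aversion: float) -> float:
    """CRRA utility: (x^(1-g) - 1) / (1 - g), or log(x) when g = 1. Requires x > 0."""
    if risk_aversion == 1.0:
        return math.log(x)
    return (x ** (1.0 - risk_aversion) - 1.0) / (1.0 - risk_aversion)


def crra_inverse(u: float, risk_aversion: float) -> float:
    """Inverse of crra_utility in its first argument."""
    if risk_aversion == 1.0:
        return math.exp(u)
    return (1.0 + (1.0 - risk_aversion) * u) ** (1.0 / (1.0 - risk_aversion))


def certainty_equivalent(outcomes: Sequence[float], probs: Sequence[float],
                         risk_aversion: float) -> float:
    """Sure amount whose utility equals the expected utility of the outcomes."""
    expected_utility = sum(p * crra_utility(x, risk_aversion)
                           for x, p in zip(outcomes, probs))
    return crra_inverse(expected_utility, risk_aversion)
```

For a 50/50 gamble between 50 and 150, a risk-neutral agent (risk aversion 0) values it at its mean of 100, while an agent with risk aversion 2 values it at 75. The gap of 25 is the risk adjustment.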

MDP for Optimal Asset Allocation problem

State is [Current Time, Current Holdings, Current Prices]

Action is [Allocation Quantities, Consumption Quantity]

Actions limited by various real-world trading constraints

Reward is Utility of Consumption less Transaction Costs

State-transitions governed by risky asset movements

Derivatives Pricing and Hedging in an Incomplete Market

Classical Pricing/Hedging Theory assumes “frictionless market”

Technically, referred to as arbitrage-free and complete market

Complete market means derivatives can be perfectly replicated

But real world has transaction costs and trading constraints

So real markets are incomplete where classical theory doesn’t fit

How to price and hedge in an Incomplete Market?

Maximize “risk-adjusted-return” of the derivative plus hedges

Similar to Asset Allocation, this is a stochastic control problem

Deep Reinforcement Learning helps solve when framed as an MDP

MDP for Pricing/Hedging in an Incomplete Market

State is [Current Time, PnL, Hedge Qtys, Hedge Prices]

Action is Units of Hedges to be traded at each time step

Reward only at termination, equal to Utility of terminal PnL

State-transitions governed by evolution of hedge prices

Optimal Policy ⇒ Derivative Hedging Strategy

Optimal Value Function ⇒ Derivative Price

Optimal Exercise of Path-dependent American Options

An American option can be exercised anytime before option maturity

Key decision at any time is to exercise or continue

The default algorithm is Backward Induction on a tree/grid

But it doesn’t work for American options with complex payoffs

Also, it’s not feasible when state dimension is large

Industry-Standard: Longstaff-Schwartz’s simulation-based algorithm

RL is an attractive alternative to Longstaff-Schwartz

RL is straightforward once Optimal Exercise is modeled as an MDP

MDP for Optimal American Options Exercise

State is [Current Time, History of Underlying Security Prices]

Action is Boolean: Exercise (i.e., Payoff and Stop) or Continue

Reward always 0, except upon Exercise (= Payoff)

State-transitions governed by Underlying Prices’ Stochastic Process

Optimal Policy ⇒ Optimal Stopping ⇒ Option Price

Can be generalized to other Optimal Stopping problems
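For the simplest case, the Exercise/Continue decision can be solved by Backward Induction on a tree. The sketch below prices a plain American put on a Cox-Ross-Rubinstein binomial tree. It assumes a payoff that depends only on the current price, so the State reduces to (time, price) rather than the full price history, and it is exactly the setting that breaks down for path-dependent payoffs or high-dimensional states. The function name and parameters are illustrative.

```python
import math


def put_price_binomial(spot: float, strike: float, rate: float, vol: float,
                       expiry: float, steps: int, american: bool = True) -> float:
    """Backward Induction for a put on a Cox-Ross-Rubinstein binomial tree."""
    dt = expiry / steps
    up = math.exp(vol * math.sqrt(dt))
    down = 1.0 / up
    discount = math.exp(-rate * dt)
    prob_up = (math.exp(rate * dt) - down) / (up - down)  # risk-neutral probability

    # Option values at maturity; node j has had j up-moves
    values = [max(strike - spot * up ** j * down ** (steps - j), 0.0)
              for j in range(steps + 1)]

    # Step backwards in time, choosing the better of Exercise and Continue
    for i in range(steps - 1, -1, -1):
        for j in range(i + 1):
            continue_value = discount * (prob_up * values[j + 1]
                                         + (1.0 - prob_up) * values[j])
            if american:
                exercise_value = max(strike - spot * up ** j * down ** (i - j), 0.0)
                values[j] = max(exercise_value, continue_value)
            else:
                values[j] = continue_value
    return values[0]
```

Setting `american=False` gives the European price on the same tree, so the difference between the two calls is the value of the early-exercise right.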

Optimal Trade Order Execution (controlling Price Impact)

You are tasked with selling a large qty of a (relatively less-liquid) stock

You have a fixed horizon over which to complete the sale

Goal is to maximize aggregate sales proceeds over horizon

If you sell too fast, Price Impact will result in poor sales proceeds

If you sell too slow, you risk running out of time

We need to model temporary and permanent Price Impacts

Objective should incorporate penalty for variance of sales proceeds

Again, this amounts to maximizing Utility of sales proceeds

MDP for Optimal Trade Order Execution

State is [Time Remaining, Stock Remaining to be Sold, Market Info]

Action is Quantity of Stock to Sell at current time

Reward is Utility of Sales Proceeds (i.e., Variance-adjusted-Proceeds)

Reward & State-transitions governed by Price Impact Model

Real-world Model can be quite complex (Order Book Dynamics)
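A toy version of this MDP can be written as a single `step` function. The sketch below assumes a deliberately simple, noise-free Price Impact Model with linear temporary and permanent impact. The impact coefficients, the class name `ExecState` and the function names are hypothetical. The Reward here is raw sales proceeds rather than a Utility, since there is no randomness to penalize.

```python
from typing import NamedTuple, Sequence, Tuple


class ExecState(NamedTuple):
    steps_left: int      # Time Remaining
    shares_left: float   # Stock Remaining to be Sold
    price: float         # Market Info (here: just the current price)


def step(state: ExecState, qty: float, temp_impact: float,
         perm_impact: float) -> Tuple[ExecState, float]:
    """One transition: sell qty shares, collect proceeds, move the price."""
    qty = min(qty, state.shares_left)
    # Temporary impact lowers the price received on this sale only
    reward = qty * (state.price - temp_impact * qty)
    # Permanent impact lowers the price for all later sales
    next_state = ExecState(state.steps_left - 1,
                           state.shares_left - qty,
                           state.price - perm_impact * qty)
    return next_state, reward


def total_proceeds(schedule: Sequence[float], initial_price: float,
                   temp_impact: float = 0.01, perm_impact: float = 0.005) -> float:
    """Sum of Rewards from following a fixed selling schedule."""
    state = ExecState(len(schedule), float(sum(schedule)), initial_price)
    total = 0.0
    for qty in schedule:
        state, reward = step(state, qty, temp_impact, perm_impact)
        total += reward
    return total
```

With these coefficients, selling 1000 shares at once from a price of 100 yields 90000, while ten equal slices of 100 shares yield 96750, which illustrates the cost of selling too fast.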

Optimal Market-Making (controlling Inventory Buildup)

Market-maker’s job is to submit bid and ask prices (and sizes)

On the Trading Order Book (which moves due to other players)

Market-maker needs to adjust bid/ask prices/sizes appropriately

By anticipating the Order Book Dynamics

Goal is to maximize Utility of Gains at the end of a suitable horizon

If Buy/Sell LOs are too narrow, more frequent but small gains

If Buy/Sell LOs are too wide, less frequent but large gains

Market-maker also needs to manage potential unfavorable inventory

(long or short) buildup and consequent unfavorable liquidation

This is a classical stochastic control problem

MDP for Optimal Market-Making

State is [Current Time, Mid-Price, PnL, Inventory of Stock Held]

Action is Bid & Ask Prices & Sizes at each time step

Reward is Utility of Gains at termination

State-transitions governed by probabilities of hitting/lifting Bid/Ask

Also governed by Order Book Dynamics (can be quite complex)
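The bookkeeping part of this MDP can be sketched as a state-transition function. The version below takes the fill outcomes (bid hit, ask lifted) and the next mid-price as inputs instead of sampling them, because the slide leaves the Order Book Dynamics unspecified. It tracks cash and derives PnL by marking inventory to the mid-price, which is an assumption about how PnL is measured. All names are hypothetical.

```python
from typing import NamedTuple


class MMState(NamedTuple):
    time: int
    mid_price: float
    cash: float
    inventory: int


class Quote(NamedTuple):
    bid_price: float
    ask_price: float
    size: int


def step(state: MMState, quote: Quote, bid_hit: bool, ask_lifted: bool,
         next_mid: float) -> MMState:
    """Apply one time step of fills to the market-maker's cash and inventory."""
    cash, inventory = state.cash, state.inventory
    if bid_hit:        # a seller traded against our bid: we buy
        cash -= quote.bid_price * quote.size
        inventory += quote.size
    if ask_lifted:     # a buyer traded against our ask: we sell
        cash += quote.ask_price * quote.size
        inventory -= quote.size
    return MMState(state.time + 1, next_mid, cash, inventory)


def mark_to_market(state: MMState) -> float:
    """PnL with inventory valued at the current mid-price."""
    return state.cash + state.inventory * state.mid_price
```

Buying 10 units at 99 and later selling them at 101 leaves a gain of 20 with no inventory. If the mid-price falls to 95 after only the bid is hit, the same position shows a mark-to-market loss of 40, which is the inventory risk the slides refer to.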

Week by Week (Tentative) Schedule

W1: Markov Decision Processes

W2: Bellman Equations & Dynamic Programming Algorithms

W3: Backward Induction and Approximate DP Algorithms

W4: Optimal Asset Allocation & Derivatives Pricing/Hedging

W5: Options Exercise, Order Execution, Market-Making

Mid-Term Exam

W6: RL For Prediction (MC, TD, TD(λ))

W7: RL for Control (SARSA, Q-Learning)

W8: Batch Methods (DQN, LSTD/LSPI) and Gradient TD

W9: Policy Gradient and Actor-Critic Algorithms

W10: Model-based RL and Explore v/s Exploit

Final Exam

Getting a sense of the style and content of the lectures

A sampling of lectures to browse through and get a sense ...

Understanding Risk-Aversion through Utility Theory

HJB Equation and Merton’s Portfolio Problem

Derivatives Pricing and Hedging with Deep Reinforcement Learning

Stochastic Control for Optimal Market-Making

Policy Gradient Theorem and Compatible Approximation Theorem

Value Function Geometry and Gradient TD

Adaptive Multistage Sampling Algorithm (Origins of MCTS)

Some Landmark Papers we cover in this course

Merton’s solution for Optimal Portfolio Allocation/Consumption

Longstaff-Schwartz Algorithm for Pricing American Options

Almgren-Chriss paper on Optimal Order Execution

Avellaneda-Stoikov paper on Optimal Market-Making

Original DQN paper and Nature DQN paper

Lagoudakis-Parr paper on Least Squares Policy Iteration

Sutton, McAllester, Singh, Mansour’s Policy Gradient Theorem

Chang, Fu, Hu, Marcus’ AMS origins of Monte Carlo Tree Search

Similar Courses offered at Stanford

AA 228/CS 238 (Mykel Kochenderfer)

CS 234 (Emma Brunskill)

CS 332 (Emma Brunskill)

MS&E 338 (Ben Van Roy)

EE 277 (Ben Van Roy)

MS&E 251 (Edison Tse)

MS&E 348 (Gerd Infanger)

MS&E 351 (Ben Van Roy)

MS&E 339 (Ben Van Roy)
