
Entity-driven Rewrite for Multi-document Summarization

Ani Nenkova

University of Pennsylvania

Department of Computer and Information Science

nenkova@seas.upenn.edu

Abstract

In this paper we explore the benefits and shortcomings of entity-driven noun phrase rewriting for multi-document summarization of news. The approach leads to 20% to 50% different content in the summary in comparison to an extractive summary produced using the same underlying approach, showing the promise the technique has to offer. In addition, summaries produced using entity-driven rewrite have higher linguistic quality than a comparison non-extractive system. Some improvement is also seen in content selection over extractive summarization as measured by pyramid method evaluation.

1 Introduction

Two of the key components of effective summarization are the ability to identify important points in the text and to adequately reword the original text in order to convey these points. Automatic text summarization approaches have offered reasonably well-performing approximations for identifying important sentences (Lin and Hovy, 2002; Schiffman et al., 2002; Erkan and Radev, 2004; Mihalcea and Tarau, 2004; Daumé III and Marcu, 2006) but, not surprisingly, text (re)generation has been a major challenge despite some work on sub-sentential modification (Jing and McKeown, 2000; Knight and Marcu, 2000; Barzilay and McKeown, 2005). An additional drawback of extractive approaches is that estimates for the importance of larger text units such as sentences depend on the length of the sentence (Nenkova et al., 2006).

Sentence simplification or compaction algorithms are driven mainly by grammaticality considerations. Whether approaches for estimating importance can be applied to units smaller than sentences and used for text rewrite during summary production is a question that remains unanswered. The option to operate on smaller units, which can be mixed and matched from the input to give novel combinations in the summary, offers several possible advantages.

Improve content. Sometimes sentences in the input contain both information that is very appropriate to include in a summary and information that should not appear in a summary. Being able to remove unnecessary parts can free up space for better content. Similarly, a sentence might be good overall, but could be further improved if more details about an entity or event are added in. Overall, a summarizer capable of operating on subsentential units would in principle be better at content selection.

Improve readability. Linguistic quality evaluation of automatic summaries in the Document Understanding Conference reveals that summarizers perform rather poorly on several readability aspects, including referential clarity. The gap between human and automatic performance is much larger for linguistic quality aspects than for content selection. In more than half of the automatic summaries there were entities for which it was not clear what/who they were and how they were related to the story. The ability to add in descriptions for entities in the summaries could improve the referential clarity of summaries and can be achieved through text rewrite

of subsentential units.

IP issues. Another very practical reason to be interested in altering the original wording of sentences in summaries in a news browsing system involves intellectual property issues. Newspapers are not willing to allow verbatim usage of long passages of their articles on commercial websites. Being able to change the original wording can thus allow companies to include summaries longer than one sentence, which would increase user satisfaction (McKeown et al., 2005).

These considerations serve as direct motivation for exploring how a simple but effective summarizer framework can accommodate noun phrase rewrite in multi-document summarization of news. The idea is, for each sentence in a summary, to automatically examine the noun phrases in it and decide whether a different noun phrase is more informative and should be included in the sentence in place of the original. Consider the following example:

Sentence 1: The arrest caused an international controversy.

Sentence 2: The arrest in London of former Chilean dictator Augusto Pinochet caused an international controversy.

Now, consider the situation where we need to express in a summary that the arrest was controversial and this is the first sentence in the summary, and sentence 1 is available in the input (“The arrest caused an international controversy”), as well as an unrelated sentence such as “The arrest in London of former Chilean dictator Augusto Pinochet was widely discussed in the British press”. NP rewrite can allow us to form the rewritten sentence 2, which would be a much more informative first sentence for the summary: “The arrest in London of former Chilean dictator Augusto Pinochet caused an international controversy”. Similarly, if sentence 2 is available in the input and it is selected in the summary after a sentence that expresses the fact that the arrest took place, it will be more appropriate to rewrite sentence 2 into sentence 1 for inclusion in the summary.

This example shows the potential power of noun phrase rewrite. It also suggests that context will play a role in the rewrite process, since different noun phrase realizations will be most appropriate depending on what has been said in the summary up to the point at which rewrite takes place.

2 NP-rewrite enhanced frequency summarizer

Frequency and frequency-related measures of importance have been traditionally used in text summarization as indicators of importance (Luhn, 1958; Lin and Hovy, 2000; Conroy et al., 2006). Notably, a greedy frequency-driven approach leads to very good results in content selection (Nenkova et al., 2006). In this approach sentence importance is measured as a function of the frequency in the input of the content words in that sentence. The most important sentence is selected, the weights of the words in it are adjusted, and sentence weights are recomputed with the new weights before selecting the next sentence.

This conceptually simple summarization approach can readily be extended to include NP rewrite and allow us to examine the effect of rewrite capabilities on overall content selection and readability. The specific algorithm for frequency-driven summarization and rewrite is as follows:

Step 1 Estimate the importance of each content word $w_i$ based on its frequency in the input $n_i$: $p(w_i) = \frac{n_i}{N}$, where $N$ is the total number of content word tokens in the input.

Step 2 For each sentence $S_j$ in the input, estimate its importance based on the words in the sentence $w_i \in S_j$: the weight of the sentence is equal to the average weight of content words appearing in it.

$$Weight(S_j) = \frac{\sum_{w_i \in S_j} p(w_i)}{|\{w_i \mid w_i \in S_j\}|}$$

Step 3 Select the sentence with the highest weight.

Step 4 For each maximum noun phrase $NP_k$ in the selected sentence

    4.1 For each coreferring noun phrase $NP_i$, such that $NP_i \equiv NP_k$, from all input documents, compute a weight $Weight(NP_i) = F_{RW}(w_r \in NP_i)$.

    4.2 Select the noun phrase with the highest weight and insert it in the sentence in place of the original NP. In case of ties, select the shorter noun phrase.

Step 5 For each content word in the rewritten sentence, update its weight by setting it to 0.

Step 6 If the desired summary length has not been reached, go to step 2.

Step 4 is the NP rewriting step. The function $F_{RW}$ is the rewrite composition function that assigns weights to noun phrases based on the importance of words that appear in the noun phrase. The two options that we explore here are $F_{RW} \equiv Avr$ and $F_{RW} \equiv Sum$; the weight of an NP equals the average weight or the sum of weights of content words in the NP, respectively. The two selections lead to different behavior in rewrite. $F_{RW} \equiv Avr$ will generally prefer the shorter noun phrases, typically consisting of just the noun phrase head, and it will overall tend to reduce the selected sentence. $F_{RW} \equiv Sum$ will behave quite differently: it will insert relevant information that has not been conveyed by the summary so far (add a longer noun phrase) and will reduce the NP if the words in it already appear in the summary. This means that $F_{RW} \equiv Sum$ will have behavior close to what we expect for entity-centric rewrite: including more descriptive information at the first mention of the entity, and using shorter references at subsequent mentions.

Maximum noun phrases are the unit on which NP rewrite operates. They are defined in a dependency parse tree as the subtree that has as a root a noun such that there is no other noun on the path between it and the root of the tree. For example, there are two maximum NPs, with heads “police” and “Augusto Pinochet”, in the sentence “British police arrested former Chilean dictator Augusto Pinochet”. The noun phrase “former Chilean dictator” is not a maximum NP, since there is a noun (“Augusto Pinochet”) on the path in the dependency tree between the noun “dictator” and the root of the tree. By definition a maximum NP includes all nominal and adjectival premodifiers of the head, as well as postmodifiers such as prepositional phrases, appositions, and relative clauses. This means that maximum NPs can be rather complex, covering a wide range of production rules in a context-free grammar.

The dependency tree definition of maximum noun phrase makes it easy to see why these are a good unit for subsentential rewrite: the subtree that has the head of the NP as a root contains only modifiers of the head, and by rewriting the noun phrase, the amount of information expressed about the head entity can be varied.

In our implementation, a probabilistic context-free grammar parser (Charniak, 2000) was used to parse the input. The maximum noun phrases were identified by finding sequences of <np>...</np> tags in the parse such that the number of opening and closing tags is equal. Each NP identified by such tag spans was considered as a candidate for rewrite.

Coreference classes. A coreference class $CR_m$ is the class of all maximum noun phrases in the input that refer to the same entity $E_m$. The general problem of coreference resolution is hard, and is even more complicated for the multi-document summarization case, in which cross-document resolution needs to be performed. Here we make a simplifying assumption, stating that all noun phrases that have the same noun as a head belong to the same coreference class. While we expected that this assumption would lead to some wrong decisions, we also suspected that in most common summarization scenarios, even if there is more than one entity expressed with the same noun, only one of them would be the main focus of the news story and would appear more often across input sentences. References to such main entities will be likely to be picked in a sentence for inclusion in the summary by chance more often than other competing entities. We thus used head noun equivalence to form the classes. A post-evaluation inspection of the summaries confirmed that our assumption was correct: there were only a small number of errors in the rewritten summaries that were due to coreference errors, and these were greatly outnumbered by parsing errors, for example. In a future evaluation, we will evaluate the rewrite module assuming perfect coreference and parsing, in order to see the impact of the core NP-rewrite approach itself.

3 NP rewrite evaluation

The NP rewrite summarization algorithm was applied to the 50 test sets for generic multi-document

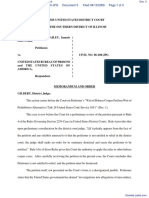

summarization from the 2004 Document Understanding Conference. Two examples of its operation with $F_{RW} \equiv Avr$ are shown below.

Original.1: While the British government defended the arrest, it took no stand on extradition of Pinochet to Spain.

NP-Rewrite.1: While the British government defended the arrest in London of former Chilean dictator Augusto Pinochet, it took no stand on extradition of Pinochet to Spain.

Original.2: Duisenberg has said growth in the euro area countries next year will be about 2.5 percent, lower than the 3 percent predicted earlier.

NP-Rewrite.2: Wim Duisenberg, the head of the new European Central Bank, has said growth in the euro area will be about 2.5 percent, lower than just 1 percent in the euro-zone unemployment predicted earlier.

We can see that in both cases, the NP rewrite pasted into the sentence important additional information. But in the second example we also see an error that was caused by the simplifying assumption for the creation of the coreference classes, according to which the percentage of unemployment and growth have been put in the same class.

In order to estimate how much the summary is changed because of the use of the NP rewrite, we computed the unigram overlap between the original extractive summary and the NP-rewrite summary. As expected, $F_{RW} \equiv Sum$ leads to bigger changes, and on average the rewritten summaries contained only 54% of the unigrams from the extractive summaries; for $F_{RW} \equiv Avr$, there was a smaller change between the extractive and the rewritten summary, with 79% of the unigrams being the same between the two summaries.

3.1 Linguistic quality evaluation

Noun phrase rewrite has the potential to improve the referential clarity of summaries, by inserting in the sentences more information about entities when such is available. It is of interest to see how the rewrite version of the summarizer would compare to the extractive version, as well as how its linguistic quality compares to that of other summarizers that participated in DUC. Four summarizers were evaluated: peer 117, which was a system that used generation techniques to produce the summary and was the only real non-extractive summarizer participant at DUC 2004 (Vanderwende et al., 2004); the extractive frequency summarizer; and the two versions of the rewrite algorithm (Sum and Avr). The evaluated rewritten summaries had potential errors coming from different sources, such as coreference resolution, parsing errors, sentence splitting errors, as well as errors coming directly from rewrite, in which an unsuitable NP is chosen to be included in the summary. Improvements in parsing, for example, could lead to better overall rewrite results, but we evaluated the output as is, in order to see what performance can be expected in a realistic setting for fully automatic rewrite.

The evaluation was done by five native English speakers, using the five DUC linguistic quality questions on grammaticality (Q1), repetition (Q2), referential clarity (Q3), focus (Q4) and coherence (Q5). Five evaluators were used so that possible idiosyncratic preference of a single evaluator could be avoided. Each evaluator evaluated all five summaries for each test set, presented in a random order. The results are shown in Table 1. Each summary was evaluated for each of the properties on a scale from 1 to 5, with 5 being very good with respect to the quality and 1, very bad.

| SYSTEM   | Q1   | Q2   | Q3   | Q4   | Q5   |
|----------|------|------|------|------|------|
| SUMId    | 4.06 | 4.12 | 3.80 | 3.80 | 3.20 |
| SUMAvr   | 3.40 | 3.90 | 3.36 | 3.52 | 2.80 |
| SUMSum   | 2.96 | 3.34 | 3.30 | 3.48 | 2.80 |
| peer 117 | 2.06 | 3.08 | 2.42 | 3.12 | 2.10 |

Table 1: Linguistic quality evaluation. Peer 117 was the only non-extractive system entry in DUC 2004; SUMId is the frequency summarizer with no NP rewrite; SUMSum and SUMAvr are the two versions of rewrite, with sum and average as combination functions.

Comparing NP rewrite to extraction. Here we are interested in comparing the extractive frequency summarizer (SUMId) and the two versions of systems that rewrite noun phrases: SUMAvr (which changes about 20% of the text) and SUMSum (which changes about 50% of the text). The general trend that we see for all five dimensions of linguistic quality is that the more the text is automatically altered, the worse the linguistic quality of the summary

gets. In particular, the grammaticality of the summaries drops significantly for the rewrite systems. The increase of repetition is also significant between SUMId and SUMSum. Error analysis showed that sometimes increased repetition occurred in the process of rewrite for the following reason: the context weight update for words is done only after every noun phrase in the sentence has been rewritten. Occasionally, this led to a situation in which a noun phrase was augmented with information that was expressed later in the original sentence. The referential clarity of rewritten summaries also drops significantly, which is a rather disappointing result, since one of the motivations for doing noun phrase rewrite was the desire to improve referential clarity by adding information where such is necessary. One of the problems here is that it is almost impossible for human evaluators to ignore grammatical errors when judging referential clarity. Grammatical errors decrease the overall readability, and a summary that is given a lower grammaticality score tends to also receive a lower referential clarity score. This fact of quality perception is a real challenge for summarization systems that move towards abstraction and alter the original wording of sentences, since automatic approaches are certainly likely to introduce ungrammaticalities.

Comparing SUMSum and peer 117. We now turn to the comparison between SUMSum and the generation-based system 117. This system is unique among the DUC 2004 systems, and the only one that year that experimented with generation techniques for summarization. System 117 is verb-driven: it analyzes the input in terms of predicate-argument triples and identifies the most important triples. These are then verbalized by a generation system originally developed as a realization component in a machine translation engine. As a result, peer 117 possibly made even more changes to the original text than the NP-rewrite system. The results of the comparison are consistent with the observation that the more changes are made to the original sentences, the more the readability of summaries decreases. SUMSum is significantly better than peer 117 on all five readability aspects, with notable difference in grammaticality and referential clarity, for which SUMSum outperforms peer 117 by nearly a full point. This indicates that NPs are a good candidate granularity for sentence changes and that NP rewrite can lead to substantial altering of the text while preserving significantly better overall readability.

3.2 Content selection evaluation

We now examine the question of how the content in the summaries changed due to the NP-rewrite, since improving content selection was the other motivation for exploring rewrite. In particular, we are interested in the change in content selection between SUMSum and SUMId (the extractive version of the summarizer). We use SUMSum for the comparison because it led to bigger changes in the summary text compared to the purely extractive version. We used the pyramid evaluation method: four human summaries for each input were manually analyzed to identify shared content units. The weight of each content unit is equal to the number of model summaries that express it. The pyramid score of an automatic summary is equal to the weight of the content units expressed in the summary divided by the weight of an ideally informative summary of the same length (the content unit identification is again done manually by an annotator).

Of the 50 test sets, there were 22 sets in which the NP-rewritten version had lower pyramid scores than the extractive version of the summary, 23 sets in which the rewritten summaries had better scores, and 5 sets in which the rewritten and extractive summaries had exactly the same scores. So we see that in nearly half of the cases the NP-rewrite actually improved the content of the summary. The summarizer version that uses NP-rewrite has overall better content selection performance than the purely extractive system. The original pyramid score increased from 0.4039 to 0.4169 for the version with rewrite. This improvement is not significant, but shows a trend in the expected direction of improvement.

The lack of significance in the improvement is due to large variation in performance: when NP rewrite worked as expected, content selection improved. But on occasions when errors occurred, both readability and content selection were noticeably compromised. Here is an example of summaries for the same input in which the NP-rewritten version had better content. After each summary, we list the content units from the pyramid content analysis that were expressed in the summary. The weight of each

content unit is given in brackets before the label of the unit, and content units that differ between the extractive and rewritten version are displayed in italic. The rewritten version conveys high weight content units that do not appear in the extractive version, with weights 4 (maximum weight here) and 3 respectively.

Extractive summary: Italy’s Communist Refounding Party rejected Prime Minister Prodi’s proposed 1999 budget. By one vote, Premier Romano Prodi’s center-left coalition lost a confidence vote in the Chamber of Deputies Friday, and he went to the presidential palace to resign. Three days after the collapse of Premier Romano Prodi’s center-left government, Italy’s president began calling in political leaders Monday to try to reach a consensus on a new government. Prodi has said he would call a confidence vote if he lost the Communists’ support. “I have always acted with coherence,” Prodi said before a morning meeting with President Oscar Luigi.

(4) Prodi lost a confidence vote
(4) The Refounding Party is Italy’s Communist Party
(4) The Refounding Party rejected the government’s budget
(3) The dispute is over the 1999 budget
(2) Prodi’s coalition was center-left coalition
(2) The confidence vote was lost by only 1 vote
(1) Prodi is the Italian Prime Minister
(1) Prodi wants a confidence vote from Parliament

NP-rewrite version: Communist Refounding, a fringe group of hard-line leftists who broke with the mainstream Communists after they overhauled the party following the collapse of Communism in Eastern Europe rejected Prime Minister Prodi’s proposed 1999 budget. By only one vote, the center-left prime minister of Italy, Romano Prodi, lost The vote in the lower chamber of Parliament 313 against the confidence motion brought by the government to 312 in favor in Parliament Friday and was toppled from power. President Oscar Luigi Scalfaro, who asked him to stay on as caretaker premier while the head of state decides whether to call elections.

(4) Prodi lost a confidence vote
(4) Prodi will stay as caretaker until a new government is formed
(4) The Refounding Party is Italy’s Communist Party
(4) The Refounding Party rejected the government’s budget
(3) Scalfaro must decide whether to hold new elections
(3) The dispute is over the 1999 budget
(2) Prodi’s coalition was center-left coalition
(2) The confidence vote was lost by only 1 vote
(1) Prodi is the Italian Prime Minister

Below is another example, showing the deterioration of the rewritten summary compared to the extractive one, both in terms of grammaticality and content. Here, the problem with repetition during rewrite arises: the same person is mentioned twice in the sentence and at both places the same overly long description is selected during rewrite, rendering the sentence practically unreadable.

Extractive summary: Police said Henderson and McKinney lured Shepard from the bar by saying they too were gay and one of their girlfriends said Shepard had embarrassed one of the men by making a pass at him. 1,000 people mourned Matthew Shepherd, the gay University of Wyoming student who was severely beaten and left to die tied to a fence. With passersby spontaneously joining the protest group, two women held another sign that read, “No Hate Crimes in Wyoming.” Two candlelight vigils were held Sunday night. Russell Anderson, 21, and Aaron McKinney, 21, were charged with attempted murder.

(4) The victim was a student at the University of Wyoming
(4) The victim was brutally beaten
(4) The victim was openly gay
(3) The crime was widely denounced
(3) The nearly lifeless body was tied to a fence
(3) The victim died
(3) The victim was left to die
(2) The men were arrested on charges of kidnapping and attempted first degree murder

(2) There were candlelight vigils in support for the victim
(1) Russell Henderson and Aaron McKinney are the names of the people responsible for the death

NP-rewrite version: Police said Henderson and McKinney lured the The slight, soft-spoken 21-year-old Shepard, a freshman at the University of Wyoming, who became an overnight symbol of anti-gay violence after he was found dangling from the fence by a passerby from a bar by saying they too were gay and one of their girlfriends said the The slight, soft-spoken 21-year-old Shepard, a freshman at the University of Wyoming, who became an overnight symbol of anti-gay violence after he was found dangling from the fence by a passerby had embarrassed one of the new ads in that supposedly hate-free crusade.

(4) The victim was a student at the University of Wyoming
(3) The nearly lifeless body was tied to a fence
(1) A passerby found the victim
(1) Russell Henderson and Aaron McKinney are the names of the people responsible for the death
(1) The victim was 22-year old

Even from this unsuccessful attempt at rewrite we can see how changes of the original text can be desirable, since some of the newly introduced information is in fact suitable for the summary.

4 Conclusions

We have demonstrated that an entity-driven approach to rewrite in multi-document summarization can lead to a considerably different summary, in terms of content, compared to the extractive version of the same system. Indeed, the difference leads to some improvement measurable in terms of pyramid method evaluation. The approach also significantly outperforms in linguistic quality a non-extractive event-centric system.

Results also show that in terms of linguistic quality, extractive systems are currently superior to systems that alter the original wording from the input. Sadly, extractive and abstractive systems are evaluated together and compared against each other, putting pressure on system developers and preventing them from fully exploring the strengths of generation techniques. It seems that if researchers in the field are to explore non-extractive methods, they would need to compare their systems separately from extractive systems, at least in the beginning exploration stages. The development of non-extractive approaches is absolutely necessary if automatic summarization is to achieve levels of performance close to human, given the highly abstractive form of summaries written by people.

Results also indicate that both extractive and non-extractive systems perform rather poorly in terms of the focus and coherence of the summaries that they produce, identifying macro content planning as an important area for summarization.

References

Regina Barzilay and Kathleen McKeown. 2005. Sentence fusion for multidocument news summarization. Computational Linguistics, 31(3).

Eugene Charniak. 2000. A maximum-entropy-inspired parser. In NAACL-2000.

John Conroy, Judith Schlesinger, and Dianne O’Leary. 2006. Topic-focused multi-document summarization using an approximate oracle score. In Proceedings of ACL, companion volume.

Hal Daumé III and Daniel Marcu. 2006. Bayesian query-focused summarization. In Proceedings of the Conference of the Association for Computational Linguistics (ACL), Sydney, Australia.

Gunes Erkan and Dragomir Radev. 2004. LexRank: Graph-based centrality as salience in text summarization. Journal of Artificial Intelligence Research (JAIR).

Hongyan Jing and Kathleen McKeown. 2000. Cut and paste based text summarization. In Proceedings of the 1st Conference of the North American Chapter of the Association for Computational Linguistics (NAACL’00).

Kevin Knight and Daniel Marcu. 2000. Statistics-based summarization - step one: Sentence compression. In Proceedings of the American Association for Artificial Intelligence Conference (AAAI-2000), pages 703–710.

Chin-Yew Lin and Eduard Hovy. 2000. The automated acquisition of topic signatures for text summarization. In Proceedings of the 18th Conference on Computational Linguistics, pages 495–501.

Chin-Yew Lin and Eduard Hovy. 2002. Automated multi-document summarization in NeATS. In Proceedings of the Human Language Technology Conference (HLT2002).

H. P. Luhn. 1958. The automatic creation of literature abstracts. IBM Journal of Research and Development, 2(2):159–165.

K. McKeown, R. Passonneau, D. Elson, A. Nenkova, and J. Hirschberg. 2005. Do summaries help? A task-based evaluation of multi-document summarization. In SIGIR.

R. Mihalcea and P. Tarau. 2004. TextRank: Bringing order into texts. In Proceedings of EMNLP 2004, pages 404–411.

Ani Nenkova, Lucy Vanderwende, and Kathleen McKeown. 2006. A compositional context sensitive multi-document summarizer: exploring the factors that influence summarization. In Proceedings of SIGIR.

Barry Schiffman, Ani Nenkova, and Kathleen McKeown. 2002. Experiments in multidocument summarization. In Proceedings of the Human Language Technology Conference.

Lucy Vanderwende, Michele Banko, and Arul Menezes. 2004. Event-centric summary generation. In Proceedings of the Document Understanding Conference (DUC’04).
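As an illustration of the algorithm in Section 2, the selection-and-rewrite loop (Steps 1 to 6) might be sketched in Python roughly as follows. This is a minimal sketch under several assumptions that are not part of the paper: the input is taken to be already parsed, with each sentence given as a list of `(head, tokens)` chunks in which `head` is the head noun of a maximum NP or `None` for other text; the stopword list is a toy one; each input sentence is selected at most once; and the summary length is counted in words. The names `summarize`, `f_sum`, `f_avr`, `STOPWORDS` and `EXAMPLE` are hypothetical. Coreference classes are formed with the head-noun assumption described in Section 2.

```python
from collections import Counter, defaultdict
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical input format: a sentence is a list of (head, tokens) chunks.
# head is the head noun of a maximum NP, or None for non-NP material.
Chunk = Tuple[Optional[str], Tuple[str, ...]]
Sentence = List[Chunk]
Scorer = Callable[[List[float]], float]

STOPWORDS = {"the", "a", "an", "in", "of", "was", "to", "by"}  # toy list (assumption)


def content_words(tokens) -> List[str]:
    return [t for t in tokens if t not in STOPWORDS]


def flatten(sentence: Sentence) -> List[str]:
    return [tok for _, tokens in sentence for tok in tokens]


def f_sum(weights: List[float]) -> float:
    """F_RW = Sum: total weight of the content words in the NP."""
    return sum(weights)


def f_avr(weights: List[float]) -> float:
    """F_RW = Avr: average weight of the content words in the NP."""
    return sum(weights) / len(weights) if weights else 0.0


def summarize(sentences: List[Sentence], f_rw: Scorer, max_words: int) -> List[str]:
    # Step 1: p(w) = n_w / N over the content-word tokens of the input.
    counts = Counter(w for s in sentences for w in content_words(flatten(s)))
    total = sum(counts.values())
    p: Dict[str, float] = {w: n / total for w, n in counts.items()}

    # Coreference classes: NPs with the same head noun share a class.
    classes: Dict[str, List[Tuple[str, ...]]] = defaultdict(list)
    for s in sentences:
        for head, tokens in s:
            if head is not None and tokens not in classes[head]:
                classes[head].append(tokens)

    def np_weight(tokens) -> float:
        return f_rw([p[w] for w in content_words(tokens)])

    def sentence_weight(s: Sentence) -> float:
        return f_avr([p[w] for w in content_words(flatten(s))])

    remaining = list(sentences)
    summary: List[str] = []
    length = 0
    while remaining and length < max_words:  # Step 6: stop at the length limit
        # Steps 2-3: select the sentence with the highest average word weight.
        best = max(remaining, key=sentence_weight)
        remaining.remove(best)  # assumption: a sentence is used at most once
        # Step 4: swap each maximum NP for the best-scoring coreferring NP;
        # ties are broken in favor of the shorter phrase.
        rewritten: List[str] = []
        for head, tokens in best:
            if head is not None:
                tokens = max(classes[head], key=lambda c: (np_weight(c), -len(c)))
            rewritten.extend(tokens)
        # Step 5: after all NPs are rewritten, zero the weight of each
        # content word that now appears in the summary.
        for w in content_words(rewritten):
            p[w] = 0.0
        summary.append(" ".join(rewritten))
        length += len(rewritten)
    return summary


# The Pinochet example from the Introduction, hand-chunked for illustration.
EXAMPLE: List[Sentence] = [
    [("arrest", ("the", "arrest")), (None, ("caused",)),
     ("controversy", ("an", "international", "controversy"))],
    [("arrest", ("the", "arrest", "in", "london", "of", "former", "chilean",
                 "dictator", "augusto", "pinochet")),
     (None, ("was", "widely", "discussed", "in")),
     ("press", ("the", "british", "press"))],
]
```

On this toy input, `summarize(EXAMPLE, f_sum, 5)` selects the first sentence and expands "the arrest" to the longer description, while `summarize(EXAMPLE, f_avr, 5)` keeps the short noun phrase, which mirrors the contrast between the Sum and Avr composition functions described in Section 2.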


- Multinomial Naive Bayes For Text Categorization Revisited: (Amk14, Eibe, Bernhard, Geoff) @cs - Waikato.ac - NZDocument12 pagesMultinomial Naive Bayes For Text Categorization Revisited: (Amk14, Eibe, Bernhard, Geoff) @cs - Waikato.ac - NZBern Jonathan SembiringNo ratings yet

- 2020 Acl-Main 453 PDFDocument12 pages2020 Acl-Main 453 PDFFR FIRENo ratings yet

- Zhu, Bernhard, Gurevych - A Monolingual Tree-Based Translation Model For Sentence SimplificationDocument9 pagesZhu, Bernhard, Gurevych - A Monolingual Tree-Based Translation Model For Sentence SimplificationSamet Erişkin (samterk)No ratings yet

- Ai Unit - 5Document12 pagesAi Unit - 5KaranNo ratings yet

- 13zan PDFDocument9 pages13zan PDFElizabeth Sánchez LeónNo ratings yet

- Corpus TranslationDocument9 pagesCorpus TranslationElizabeth Sánchez LeónNo ratings yet

- A Survey On Methods of Text SummarizationDocument7 pagesA Survey On Methods of Text SummarizationInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- ExplanationDocument5 pagesExplanationAtefeHabibiNo ratings yet

- Project Note TemplateDocument8 pagesProject Note TemplateisaritaNo ratings yet

- Project Note TemplateDocument8 pagesProject Note TemplateisaritaNo ratings yet

- A Hybrid Bidirectional Recurrent Convolutional NeuDocument13 pagesA Hybrid Bidirectional Recurrent Convolutional NeusalmanppworkNo ratings yet

- A Study of Word Presentation in Vietnamese Sentiment AnalysisDocument6 pagesA Study of Word Presentation in Vietnamese Sentiment AnalysisDan NguyenNo ratings yet

- 2022 Coling-1 624Document9 pages2022 Coling-1 624lemNo ratings yet

- Conceptual Clustering of Text ClustersDocument9 pagesConceptual Clustering of Text ClustersheboboNo ratings yet

- Unsupervised Text Summarization Using Sentence Embeddings: Aishwarya Padmakumar Akanksha SaranDocument9 pagesUnsupervised Text Summarization Using Sentence Embeddings: Aishwarya Padmakumar Akanksha Saranpradeep_dhote9No ratings yet

- The Challenges of Using Neural Machine Translation For LiteratureDocument10 pagesThe Challenges of Using Neural Machine Translation For LiteratureTới TrầnNo ratings yet

- A Short Text Classification Method Based On Convolutional Neural Network and Semantic ExtensionDocument9 pagesA Short Text Classification Method Based On Convolutional Neural Network and Semantic ExtensionNguyễn Văn Hùng DũngNo ratings yet

- Synonym or Similar Word Detection in Assignment Papers: Gayatri BeheraDocument2 pagesSynonym or Similar Word Detection in Assignment Papers: Gayatri Beheraanil kasotNo ratings yet

- Document PDFDocument6 pagesDocument PDFTaha TmaNo ratings yet

- Light-Weight Multi-Document Summarization Based On Two-Pass Re-RankingDocument7 pagesLight-Weight Multi-Document Summarization Based On Two-Pass Re-RankingHelloprojectNo ratings yet

- Ambiguity Resolution in Incremental Parsing of Natural LanguageDocument13 pagesAmbiguity Resolution in Incremental Parsing of Natural LanguageMahalingam RamaswamyNo ratings yet

- A Semantic Theory of AdverbsDocument27 pagesA Semantic Theory of AdverbsGuillermo GarridoNo ratings yet

- Functionalpearl It's Easy As 1,2,3: Under Consideration For Publication in The Journal of Functional ProgrammingDocument16 pagesFunctionalpearl It's Easy As 1,2,3: Under Consideration For Publication in The Journal of Functional ProgrammingYaroslav GribovNo ratings yet

- Identifying Citing Sentences in Research Papers Using Supervised LearningDocument8 pagesIdentifying Citing Sentences in Research Papers Using Supervised LearningBrent FabialaNo ratings yet

- Paper Intro and Conclusion CorrectedDocument5 pagesPaper Intro and Conclusion CorrectedZafar HasanNo ratings yet

- Seminar ReportDocument31 pagesSeminar ReportManish Jha 2086No ratings yet

- What is Technical Text?Document33 pagesWhat is Technical Text?王睿麒No ratings yet

- PBL II (FinalPaper) - Group 73Document9 pagesPBL II (FinalPaper) - Group 73Adnan ShaikhNo ratings yet

- Totalrecall: A Bilingual Concordance For Computer Assisted Translation and Language LearningDocument4 pagesTotalrecall: A Bilingual Concordance For Computer Assisted Translation and Language LearningMichael Joseph Wdowiak BeijerNo ratings yet

- Creating Generic Text SummariesDocument5 pagesCreating Generic Text SummariesabhijitdasnitsNo ratings yet

- Features Selection and Weight Learning For Punjabi Text SummarizationDocument4 pagesFeatures Selection and Weight Learning For Punjabi Text Summarizationsurendiran123No ratings yet

- T5-Based Model For Abstractive Summarization A Semi-Supervised Learning Approach With Consistency Loss FunctionsDocument16 pagesT5-Based Model For Abstractive Summarization A Semi-Supervised Learning Approach With Consistency Loss Functionsonkarrborude02No ratings yet

- Text Summarization Using Sports Ontology With Graphical RepresentationDocument3 pagesText Summarization Using Sports Ontology With Graphical RepresentationInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Using Thematic Information in Statistical Headline GenerationDocument10 pagesUsing Thematic Information in Statistical Headline GenerationpostscriptNo ratings yet

- ConHAN: Contextualized Hierarchical Attention Networks For Authorship IdentificationDocument11 pagesConHAN: Contextualized Hierarchical Attention Networks For Authorship IdentificationJouaultNo ratings yet

- SocherBauerManningNg ACL2013 PDFDocument11 pagesSocherBauerManningNg ACL2013 PDFSr. RZNo ratings yet

- Combining Knowledge With Deep Convolutional Neural Networks For Short Text ClassificationDocument7 pagesCombining Knowledge With Deep Convolutional Neural Networks For Short Text ClassificationMatthew FengNo ratings yet

- A Proper GoodbyeDocument2 pagesA Proper GoodbyerichellehyattNo ratings yet

- KF Fs Eyid KL LK KD Eppwj Jpajhf: Ekj Nraw Ghlfs Mikal LK ! Xuq Fpize J Nraw GL KD Tukhw Gpujku NTZ Lnfhs !!Document34 pagesKF Fs Eyid KL LK KD Eppwj Jpajhf: Ekj Nraw Ghlfs Mikal LK ! Xuq Fpize J Nraw GL KD Tukhw Gpujku NTZ Lnfhs !!Jeya Balan AlagaratnamNo ratings yet

- Turning The Page - Why Are All The Black Kids Sitting Together Part 1 1.29.21Document16 pagesTurning The Page - Why Are All The Black Kids Sitting Together Part 1 1.29.21kshavers2tougalooNo ratings yet

- Faction War PDFDocument2 pagesFaction War PDFSophiaNo ratings yet

- The Ancient Mode of Production The City PDFDocument2 pagesThe Ancient Mode of Production The City PDFGilbert KirouacNo ratings yet

- GF3 Individual Inventory Record FormDocument2 pagesGF3 Individual Inventory Record FormAira Mae PustaNo ratings yet

- Elizabeth Darling Faith Based Sex Scandal Order Regarding George BushDocument3 pagesElizabeth Darling Faith Based Sex Scandal Order Regarding George BushBeverly TranNo ratings yet

- Samuel Colt's Peacemaker: The Advertising That Scared The WestDocument49 pagesSamuel Colt's Peacemaker: The Advertising That Scared The Westrad7998100% (1)

- Dancing Around DisabilitiesDocument9 pagesDancing Around DisabilitiesLuisa Fernanda Mondragon TenorioNo ratings yet

- Mx. Gad 2023Document3 pagesMx. Gad 2023Wany BerryNo ratings yet

- Indian National ArmyDocument2 pagesIndian National ArmybhagyalakshmipranjanNo ratings yet

- Research Paper Topics On CubaDocument5 pagesResearch Paper Topics On Cubaggteukwhf100% (1)

- Omandailyobserver 20231011 1Document20 pagesOmandailyobserver 20231011 1bskpremNo ratings yet

- (2018) 2 SLR 0655 PDFDocument128 pages(2018) 2 SLR 0655 PDFSulaiman CheliosNo ratings yet

- The Background of Human Rights: The Cyrus Cylinder (539 B.C.)Document7 pagesThe Background of Human Rights: The Cyrus Cylinder (539 B.C.)Mitch RoldanNo ratings yet

- Italy in The International System From Détente To The End of The Cold War: The Underrated Ally 1st Edition Antonio VarsoriDocument54 pagesItaly in The International System From Détente To The End of The Cold War: The Underrated Ally 1st Edition Antonio Varsoriwilbur.camacho849100% (15)

- Chapter-Ii Multiculturalism in UntouchableDocument55 pagesChapter-Ii Multiculturalism in Untouchablesangole.kNo ratings yet

- Multicultural Diversity:: A Challenge To Global TeachersDocument14 pagesMulticultural Diversity:: A Challenge To Global Teachersjessalyn ilada100% (1)

- Ah MahDocument24 pagesAh MahNathalie Alba0% (1)

- Andrew Michael Holness: Jamaica PresidentDocument2 pagesAndrew Michael Holness: Jamaica PresidentHoneylette MalabuteNo ratings yet

- Neill-Wycik Owner's Manual From 1989-1990 PDFDocument26 pagesNeill-Wycik Owner's Manual From 1989-1990 PDFNeill-Wycik100% (1)

- Tahun 4 2019Document24 pagesTahun 4 2019FAHIMAH BINTI ZAINON MoeNo ratings yet

- OBC FormatDocument2 pagesOBC FormatRahul KumarNo ratings yet

- Preservation of Native American LanguagesDocument7 pagesPreservation of Native American Languagesapi-573343523No ratings yet

- Lesson 2 Week 2 The Philippines in The 19th Century As Rizals Context by CSDDocument11 pagesLesson 2 Week 2 The Philippines in The 19th Century As Rizals Context by CSDMariel C. SegadorNo ratings yet

- Module 1 Cracks in The Revolution RPHDocument11 pagesModule 1 Cracks in The Revolution RPHChris William HernandezNo ratings yet

- Bailey v. United States Bureau of Prisons Et Al - Document No. 3Document3 pagesBailey v. United States Bureau of Prisons Et Al - Document No. 3Justia.comNo ratings yet

- Hard Core Power, Pleasure, and The Frenzy of The VisibleDocument340 pagesHard Core Power, Pleasure, and The Frenzy of The VisibleRyan Gliever100% (5)

- 1Document26 pages1chaitanya4friendsNo ratings yet