Professional Documents

Culture Documents

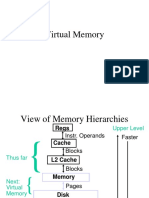

The Complete Memory Hierarchy

Uploaded by Alexander Taylor

Lecture 26

The Virtual Memory System

[Figure: the page table, indexed by virtual page number, maps each virtual page either to a page in physical memory (valid bit = 1) or to a disk address in disk storage (valid bit = 0).]

Page Size, Page Table and Disk Space

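Large pages help amortize disk access time, since each slow disk access brings in more data. Large pages may waste memory, because a partly used page still occupies a whole page frame. Small pages make the page table bigger, since more pages (and so more entries) are needed to cover the same virtual address space. A large virtual address space means more disk space may be used for virtual memory.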
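The page-size trade-off can be put into numbers with a small sketch. It assumes a single-level page table with one entry per virtual page and a fixed entry size. The 4-byte entry used in the usage note is an assumption, not something the slides specify.

```c
#include <stdint.h>

/* Entries in a single-level page table: one per virtual page. */
uint64_t page_table_entries(unsigned va_bits, unsigned offset_bits) {
    return (uint64_t)1 << (va_bits - offset_bits);
}

/* Page table size in bytes for an assumed entry size (e.g. 4 bytes). */
uint64_t page_table_bytes(unsigned va_bits, unsigned offset_bits, unsigned pte_bytes) {
    return page_table_entries(va_bits, offset_bits) * pte_bytes;
}

/* Bytes left unused in the last page when a region of `size` bytes is
   rounded up to whole pages of 2^offset_bits bytes (internal fragmentation). */
uint64_t wasted_bytes(uint64_t size, unsigned offset_bits) {
    uint64_t page = (uint64_t)1 << offset_bits;
    uint64_t pages = (size + page - 1) / page;   /* round up to whole pages */
    return pages * page - size;
}
```

Take a 32-bit virtual address space with 4-byte entries. With 4 KB pages the table needs 4 MB, while with 64 KB pages it shrinks to 256 KB. However, a 5000-byte region then wastes 60536 bytes instead of 3192.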

Handling Page Faults

Note: a cache miss is handled by the Control Unit (hardware), while a page fault is handled by an exception handler (software). Algorithm:

1. An exception is raised, and the address of the faulting instruction is loaded into the EPC.
2. Locate the page on disk.
3. Is there space for loading a new page into physical memory? If not, choose a page to replace.
4. Write the replaced page to disk if necessary (that is, if it has been modified).
5. Read the new page into physical memory.
6. Restart the instruction.
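Steps 2 to 6 of the handler can be simulated in software with a minimal sketch. The table sizes, the `vm_t` layout and the FIFO victim choice are all hypothetical, picked for brevity. Disk transfers are only counted, and the hardware part of step 1 (raising the exception and setting the EPC) is not modeled.

```c
#include <stdbool.h>

#define NUM_PAGES  16   /* virtual pages (hypothetical, tiny for illustration) */
#define NUM_FRAMES 4    /* physical page frames (hypothetical) */

typedef struct { bool valid, dirty; int frame; } pte_t;

typedef struct {
    pte_t pt[NUM_PAGES];
    int frame_owner[NUM_FRAMES];  /* virtual page held by each frame, -1 if free */
    int next_victim;              /* FIFO pointer, a stand-in replacement policy */
    int disk_reads, disk_writes;  /* counts instead of real disk I/O */
} vm_t;

void vm_init(vm_t *vm) {
    for (int p = 0; p < NUM_PAGES; p++) vm->pt[p] = (pte_t){false, false, -1};
    for (int f = 0; f < NUM_FRAMES; f++) vm->frame_owner[f] = -1;
    vm->next_victim = 0;
    vm->disk_reads = vm->disk_writes = 0;
}

/* Software page-fault handler: find space, evict if needed, read the page. */
static void handle_page_fault(vm_t *vm, int vpage) {
    int frame = -1;
    for (int f = 0; f < NUM_FRAMES; f++)          /* is there a free frame? */
        if (vm->frame_owner[f] < 0) { frame = f; break; }
    if (frame < 0) {                               /* no: choose a page to replace */
        frame = vm->next_victim;
        vm->next_victim = (vm->next_victim + 1) % NUM_FRAMES;
        int old = vm->frame_owner[frame];
        if (vm->pt[old].dirty) vm->disk_writes++;  /* write back only if modified */
        vm->pt[old].valid = false;
        vm->pt[old].dirty = false;
    }
    vm->disk_reads++;                              /* read the new page */
    vm->frame_owner[frame] = vpage;
    vm->pt[vpage] = (pte_t){true, false, frame};
}

/* One access: on a fault, run the handler, then "restart" the access. */
int vm_access(vm_t *vm, int vpage, bool is_write) {
    if (!vm->pt[vpage].valid)
        handle_page_fault(vm, vpage);
    if (is_write) vm->pt[vpage].dirty = true;
    return vm->pt[vpage].frame;
}
```

The dirty bit is what makes step 4 conditional. A clean victim can simply be dropped, because an identical copy is already on disk.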

Implementing Page Replacement

Schemes:

- FIFO
- Least Recently Used (LRU)
- Most Recently Used
- Random
- ...

LRU is hard to implement exactly, but can be approximated in a number of ways. Second-chance is a commonly cited approximation.
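The second-chance approximation can be sketched in clock form. Each frame has a reference bit that is set on every access. On a miss, a "hand" sweeps the frames, clearing set bits (the second chance) until it finds a frame whose bit is already clear, and that frame is replaced. The frame count and the `clock_state` type are hypothetical.

```c
#include <stdbool.h>

#define NFRAMES 4   /* hypothetical number of page frames */

typedef struct {
    int  page[NFRAMES];  /* virtual page in each frame, -1 if empty */
    bool ref[NFRAMES];   /* reference bit, set whenever the page is accessed */
    int  hand;           /* next frame the clock hand will inspect */
} clock_state;

void clock_init(clock_state *c) {
    for (int f = 0; f < NFRAMES; f++) { c->page[f] = -1; c->ref[f] = false; }
    c->hand = 0;
}

/* Access page p. Returns true on a hit, false if a frame had to be replaced. */
bool clock_access(clock_state *c, int p) {
    for (int f = 0; f < NFRAMES; f++)
        if (c->page[f] == p) { c->ref[f] = true; return true; }

    /* Miss: skip (and clear) recently used frames; empty frames have ref == false. */
    while (c->ref[c->hand]) {
        c->ref[c->hand] = false;
        c->hand = (c->hand + 1) % NFRAMES;
    }
    c->page[c->hand] = p;
    c->ref[c->hand] = true;
    c->hand = (c->hand + 1) % NFRAMES;
    return false;
}
```

A page that has been referenced since the hand last passed survives one more sweep. That is how recent use is approximated without keeping exact LRU ordering.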

Address Translation for Virtual Memory

A 32-bit virtual address is split into a virtual page number (bits 31-12) and a page offset (bits 11-0). Translation replaces the virtual page number with a physical page number (bits 29-12 of the physical address). The page offset is copied unchanged into bits 11-0.

Example:
Page size = 4 KB = 2^12 bytes
Physical memory size = 2^18 pages = 2^30 bytes = 1 GB
Virtual memory size = 2^32 bytes = 4 GB
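The bit manipulation for the example above (4 KB pages, 32-bit virtual and 30-bit physical addresses) can be sketched directly. The function names are illustrative only.

```c
#include <stdint.h>

#define PAGE_OFFSET_BITS 12u                          /* 4 KB = 2^12 bytes */
#define PAGE_OFFSET_MASK ((1u << PAGE_OFFSET_BITS) - 1u)

/* Virtual page number: bits 31..12 of the virtual address. */
uint32_t vpn_of(uint32_t va)    { return va >> PAGE_OFFSET_BITS; }

/* Page offset: bits 11..0, identical in the virtual and physical address. */
uint32_t offset_of(uint32_t va) { return va & PAGE_OFFSET_MASK; }

/* Physical address: 18-bit physical page number in bits 29..12, offset below. */
uint32_t make_pa(uint32_t ppn, uint32_t offset) {
    return (ppn << PAGE_OFFSET_BITS) | offset;
}
```

For example, virtual address 0x12345678 has virtual page number 0x12345 and offset 0x678. If that page were mapped to physical page 0x3ABCD, the physical address would be 0x3ABCD678.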

Mapping Mechanism for Virtual Memory

[Figure: the page table register points to the page table of the running program (each program has its own). The 20-bit virtual page number (bits 31-12) indexes the page table. Each entry holds a valid bit and an 18-bit physical page number; if the valid bit is 0, the page is not present in memory. Otherwise the physical page number (bits 29-12) is joined with the 12-bit page offset (bits 11-0) to form the physical address.]
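The lookup in this figure can be sketched in a few lines of C. The page table register is modeled as a pointer to an array of entries, and the `pte_t` layout is a simplification assumed for illustration. A clear valid bit is reported as failure, which is the point where the hardware would raise a page fault.

```c
#include <stdbool.h>
#include <stdint.h>

/* One page-table entry as in the figure: valid bit + 18-bit physical page number. */
typedef struct { bool valid; uint32_t ppn; } pte_t;

/* Translate va using the table the page table register points to.
   Returns false when the valid bit is 0 (page not present: page fault). */
bool translate(const pte_t *page_table_register, uint32_t va, uint32_t *pa) {
    uint32_t vpn = va >> 12;            /* 20-bit virtual page number indexes the table */
    uint32_t offset = va & 0xFFFu;      /* 12-bit page offset is not translated */
    const pte_t *e = &page_table_register[vpn];
    if (!e->valid) return false;
    *pa = (e->ppn << 12) | offset;
    return true;
}
```

Because the table is indexed directly by the virtual page number, it needs 2^20 entries per program, whether or not the pages are used.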

Translation Lookaside Buffer

Some important points to notice:

Page tables reside in physical memory. Physical memory accesses are slow, which is why we have a cache. Yet translating every virtual address into a physical address requires an access to the page table.

To improve performance, create another cache:

The TLB is a cache containing frequently accessed entries of the page table. To translate a virtual address, we first look for the page table entry in the TLB. If it's not there, a TLB miss occurs, much like a cache miss, and the entry must be fetched from the page table in memory.

If the TLB is a cache, how does one determine its degree of associativity?
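The sketch below models a TLB lookup, assuming a fully associative TLB. This is only one possible answer to the associativity question: a set-associative TLB would instead use some low-order bits of the virtual page number to select a set. The 8-entry size and the round-robin refill are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

#define TLB_ENTRIES 8   /* hypothetical TLB size */

typedef struct { bool valid; uint32_t tag; uint32_t ppn; } tlb_entry;

/* Fully associative lookup: the whole virtual page number is the tag and is
   compared against every entry (hardware does these comparisons in parallel). */
bool tlb_lookup(const tlb_entry tlb[TLB_ENTRIES], uint32_t vpn, uint32_t *ppn) {
    for (int i = 0; i < TLB_ENTRIES; i++)
        if (tlb[i].valid && tlb[i].tag == vpn) { *ppn = tlb[i].ppn; return true; }
    return false;   /* TLB miss: consult the page table */
}

/* After a miss is serviced from the page table, install the translation.
   Round-robin victim choice is an assumption made to keep the sketch short. */
void tlb_insert(tlb_entry tlb[TLB_ENTRIES], int *next, uint32_t vpn, uint32_t ppn) {
    tlb[*next] = (tlb_entry){true, vpn, ppn};
    *next = (*next + 1) % TLB_ENTRIES;
}
```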

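[Figure: the TLB holds a subset of the page table. Each TLB entry has a valid bit, a tag taken from the virtual page number, and a physical page address. The full page table maps each virtual page number to a physical page in physical memory or to a disk address in disk storage.]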

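[Figure: integrating the TLB and the cache. The 32-bit virtual address is split into a 20-bit virtual page number (bits 31-12) and a 12-bit page offset. The virtual page number is looked up in the TLB, whose entries hold valid, dirty, tag and a 20-bit physical page number. On a TLB hit, the physical page number is joined with the page offset to form the physical address. That address is split into a 16-bit physical address tag, a 14-bit cache index and a 2-bit byte offset. The cache entry (valid, tag, data) selected by the index returns 32-bit data on a cache hit.]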
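The cache side of this figure can be sketched as a direct-mapped cache with one 32-bit word per entry. The field widths (16-bit tag, 14-bit index, 2-bit byte offset) come from the figure; the `cache_line` type and the function names are illustrative.

```c
#include <stdbool.h>
#include <stdint.h>

#define BYTE_OFFSET_BITS 2u    /* 4-byte (32-bit) words */
#define INDEX_BITS       14u   /* 2^14 cache entries */

typedef struct { bool valid; uint16_t tag; uint32_t data; } cache_line;

/* Cache index: the 14 bits just above the byte offset. */
uint32_t cache_index(uint32_t pa) {
    return (pa >> BYTE_OFFSET_BITS) & ((1u << INDEX_BITS) - 1u);
}

/* Physical address tag: the remaining upper 16 bits. */
uint16_t cache_tag(uint32_t pa) {
    return (uint16_t)(pa >> (BYTE_OFFSET_BITS + INDEX_BITS));
}

/* Direct-mapped read: hit if the indexed entry is valid and its tag matches. */
bool cache_read(const cache_line cache[], uint32_t pa, uint32_t *word) {
    const cache_line *line = &cache[cache_index(pa)];
    if (line->valid && line->tag == cache_tag(pa)) { *word = line->data; return true; }
    return false;   /* cache miss */
}
```

Because the cache is indexed and tagged with physical address bits, the lookup can only start once the TLB has produced the physical page number.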

Processing a Memory Reference

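1. Access the TLB with the virtual address. On a TLB miss, raise a TLB miss exception. On a TLB hit, the TLB supplies the physical address.
2. If the reference is a read, try to read the data from the cache. On a cache hit, deliver the data to the CPU. On a cache miss, stall while the block is fetched, then try again.
3. If the reference is a write, check the write access bit. If it is off, raise a write protection exception. If it is on, write the data into the cache, update the tag, and put the data and the address into the write buffer.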
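The decision logic of the flowchart can be captured as a pure function. Modeling the TLB result, the access type, the write access bit and the cache result as booleans is a simplification made for this sketch. The enum names are hypothetical.

```c
#include <stdbool.h>

/* Possible outcomes of one memory reference in the flowchart. */
typedef enum {
    TLB_MISS_EXCEPTION,
    WRITE_PROTECTION_EXCEPTION,
    CACHE_MISS_STALL,     /* read miss: stall, then retry the read */
    DATA_DELIVERED,       /* read hit: deliver data to the CPU */
    WRITE_BUFFERED        /* write data into cache, update tag, data+address to write buffer */
} ref_outcome;

ref_outcome process_reference(bool tlb_hit, bool is_write,
                              bool write_access_bit, bool cache_hit) {
    if (!tlb_hit)
        return TLB_MISS_EXCEPTION;
    if (is_write)
        return write_access_bit ? WRITE_BUFFERED : WRITE_PROTECTION_EXCEPTION;
    return cache_hit ? DATA_DELIVERED : CACHE_MISS_STALL;
}
```

The write path never checks for a cache hit. As in the flowchart, the write goes straight into the cache, replacing the tag, and the write buffer takes care of updating memory.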

Cache, TLB and Virtual Memory: Possible Combinations

- Cache miss, TLB miss, page fault: possible. The TLB misses, then a page fault occurs; once the page is in memory, the data is still not in the cache.
- Cache miss, TLB miss, page in memory: possible. The TLB misses, but the page is in memory, though not in the cache.
- Cache miss, TLB hit, page fault: IMPOSSIBLE. There can't be a TLB hit if the page is not in memory.
- Cache miss, TLB hit, page in memory: possible. The data is not in the cache, but the page is in memory and the TLB hits.
- Cache hit, TLB miss, page fault: IMPOSSIBLE. The data can't be in the cache if it's not in memory.
- Cache hit, TLB miss, page in memory: possible. The TLB misses, but the entry is in the page table; when the access is retried, the data is found in the cache.
- Cache hit, TLB hit, page fault: IMPOSSIBLE. There can't be a TLB hit if the page is not in memory.
- Cache hit, TLB hit, page in memory: possible. The TLB hits, so the page is in memory, and the data is in the cache.
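The rule behind the three IMPOSSIBLE cases fits in one function: neither the TLB nor the cache may hit for a page that is not in memory. The sketch below encodes that rule and enumerates all eight combinations.

```c
#include <stdbool.h>

/* A combination is impossible if the TLB or the cache hits for a page
   that is not in physical memory. */
bool combination_possible(bool cache_hit, bool tlb_hit, bool page_in_memory) {
    if (tlb_hit && !page_in_memory)   return false;  /* TLB holds translations of resident pages only */
    if (cache_hit && !page_in_memory) return false;  /* cached data must also be in memory */
    return true;
}

/* Enumerate all 2^3 combinations and count the possible ones. */
int count_possible(void) {
    int n = 0;
    for (int m = 0; m < 8; m++)
        n += combination_possible(m & 4, m & 2, m & 1);
    return n;
}
```

`count_possible()` returns 5, matching the five possible cases listed above.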

Cache, VM, Processes and Context Switch

Facts: There is only one TLB, shared by all running processes. There is only one cache memory, shared by all running processes. All running processes share the CPU (and FPU) registers.

When the CPU switches from one process to another, we must:

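Save register contents, update the page table register, and flush the TLB (unless TLB entries are tagged with a process id). The cache needs flushing only if it is virtually addressed. A cache that stores physical addresses can keep its contents, because a physical address means the same thing in every process.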
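The difference between flushing the TLB and tagging its entries with a process id can be sketched as follows. The TLB size and the 8-bit `pid` field are hypothetical. With tags, a lookup only matches entries belonging to the running process, so entries of other processes can stay in the TLB across a context switch.

```c
#include <stdbool.h>
#include <stdint.h>

#define TLB_SIZE 8   /* hypothetical TLB size */

typedef struct { bool valid; uint8_t pid; uint32_t vpn, ppn; } tagged_tlb_entry;

/* Untagged TLB: on a context switch, every entry must be invalidated. */
void tlb_flush(tagged_tlb_entry tlb[TLB_SIZE]) {
    for (int i = 0; i < TLB_SIZE; i++) tlb[i].valid = false;
}

/* Tagged TLB: an entry matches only if it belongs to the running process. */
bool tagged_lookup(const tagged_tlb_entry tlb[TLB_SIZE], uint8_t cur_pid,
                   uint32_t vpn, uint32_t *ppn) {
    for (int i = 0; i < TLB_SIZE; i++)
        if (tlb[i].valid && tlb[i].pid == cur_pid && tlb[i].vpn == vpn) {
            *ppn = tlb[i].ppn;
            return true;
        }
    return false;
}
```

Two processes can then map the same virtual page number to different physical pages without either one seeing the other's translation.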


The Memory Hierarchy

[Figure: the processor with a split cache (separate instruction and data caches, a Harvard architecture), a level 2 cache, the bus, physical memory and disk.]

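Fine Tuning a Program for Memory Performance

If you know how data is organized in memory, you can write your code in a way that minimizes cache misses and page faults. C stores a two-dimensional array in row-major order. From low to high addresses, the elements are A[0][0], A[0][1], ..., A[1][0], A[1][1], ..., A[2][0], A[2][1], ...

Compare these two loop nests, which compute the same result:

    for (i=0; i<rows; i++)
        for (j=0; j<columns; j++)
            A[i][j] = A[i][j] + i;

    for (j=0; j<columns; j++)
        for (i=0; i<rows; i++)
            A[i][j] = A[i][j] + i;

The first nest walks through memory sequentially, so every word of a fetched cache block (and of a page) is used before moving on. The second jumps a whole row ahead on every access. For large arrays, it can therefore miss in the cache, and even fault on pages, far more often.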
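The effect of loop order can be made measurable with a toy simulation that counts misses in a simulated cache while running both loop nests from the slide. The 64-byte block size and the 4 KB direct-mapped cache are assumptions chosen for illustration. The model covers data-cache misses only, not page faults.

```c
#include <stdint.h>
#include <string.h>

#define ROWS 256
#define COLS 256
#define LINE_BYTES 64   /* assumed cache block size */
#define NUM_LINES  64   /* assumed direct-mapped cache: 64 x 64 B = 4 KB */

static int A[ROWS][COLS];

/* Simulated direct-mapped cache: which memory block each line holds. */
static uintptr_t line_block[NUM_LINES];
static int line_valid[NUM_LINES];
static long misses;

static void touch(const void *addr) {
    uintptr_t block = (uintptr_t)addr / LINE_BYTES;
    unsigned idx = (unsigned)(block % NUM_LINES);
    if (!line_valid[idx] || line_block[idx] != block) {
        misses++;                  /* miss: load the block into this line */
        line_valid[idx] = 1;
        line_block[idx] = block;
    }
}

static void reset_cache(void) { memset(line_valid, 0, sizeof line_valid); misses = 0; }

/* Row by row: consecutive accesses are adjacent in memory. */
long row_order_misses(void) {
    reset_cache();
    for (int i = 0; i < ROWS; i++)
        for (int j = 0; j < COLS; j++) { touch(&A[i][j]); A[i][j] = A[i][j] + i; }
    return misses;
}

/* Column by column: consecutive accesses are a whole row (COLS ints) apart. */
long column_order_misses(void) {
    reset_cache();
    for (int j = 0; j < COLS; j++)
        for (int i = 0; i < ROWS; i++) { touch(&A[i][j]); A[i][j] = A[i][j] + i; }
    return misses;
}
```

With 4-byte ints, the row-order nest misses about once per 16 elements, once per block. In the column-order nest, every row maps to only a few cache lines, so nearly every access misses.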
