Uploaded by rishantghosh


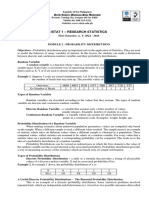

Discriminant Analysis

Suppose that we have two or more different populations from which an observation could have come. Discriminant analysis seeks to determine which of the possible populations an observation comes from while making as few mistakes as possible.

One basic example of discriminant analysis arises every time that you go to the doctor for an illness. The doctor attempts to determine which disease you have given your symptoms. In this case, the different illnesses that you could have are the different populations, and the symptoms that you display are the measurements taken from your disease population.

Notation:

Similar to MANOVA, let $y_{i1}, y_{i2}, \ldots, y_{in_i} \overset{\text{iid}}{\sim} f_i(y)$, for $i = 1, \ldots, h$. Here, $f_i(y)$ is the density function for population $i$. Note that each vector $y$ will contain measurements on all $p$ traits.

Then, we wish to form a discriminant rule which will divide $\mathbb{R}^p$ into $h$ disjoint regions, $R_1, \ldots, R_h$, such that $\bigcup_{i=1}^{h} R_i = \mathbb{R}^p$. We will then allocate an observation $y$ to population $j$ when $y \in R_j$.

For example, suppose that an individual goes to the doctor and the doctor measures three variables: presence/absence of fever, presence/absence of congestion in the lungs, and presence/absence of spots on the tonsils.


Discriminant Analysis, Cont.

Then, a discriminant rule might say that the patient has bronchitis if there is congestion in the lungs. If there is no congestion in the lungs, but both fever and spots on the tonsils are present, the diagnosis might be strep throat, etc.

The easiest case in discriminant analysis would assume that the population distributions are completely known, but this is an unlikely assumption. Still, we will begin with this case, and build up complexity from there.

Populations Known

The maximum likelihood discriminant rule for assigning an observation $y$ to one of the $h$ populations is allocating $y$ to the population which gives the largest likelihood to $y$.

To see why this is, let's consider the likelihood for a single observation $y$. This has the form $f_j(y)$ where $j$ is the true population. Note that the point here is that $j$ is unknown. Then, to make the likelihood as large as possible, we should choose the value $j$ which causes $f_j(y)$ to be as large as possible.

Let's consider a simple univariate example to solidify your intuition for the process: suppose that we have data from one of two binomial populations. The first population has $n = 10$ trials with success probability $p = .5$, and the second population has $n = 10$ trials with success probability $p = .7$. Which population would we assign each of the following observations to? 1) $y = 7$, 2) $y = 4$, 3) $y = 6$.
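One way to check the three cases is to evaluate both binomial likelihoods directly. The sketch below is only an illustration; the helper names `binom_pmf` and `ml_allocate` are hypothetical and not part of the original notes.

```python
from math import comb


def binom_pmf(y, n, p):
    """Binomial likelihood of y successes in n trials with success probability p."""
    return comb(n, y) * p**y * (1 - p) ** (n - y)


def ml_allocate(y, n=10, probs=(0.5, 0.7)):
    """Return the 1-based index of the population with the largest likelihood."""
    likelihoods = [binom_pmf(y, n, p) for p in probs]
    return likelihoods.index(max(likelihoods)) + 1


for y in (7, 4, 6):
    print(y, ml_allocate(y))
```

Under these assumptions, $y = 7$ goes to the second population, while $y = 4$ and $y = 6$ go to the first; the case $y = 6$ is close, with likelihoods of roughly 0.205 and 0.200.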


Examples, Cont.

Again, let us consider a univariate example. Suppose that there are two populations, where the first population is $N(\mu_1, \sigma_1^2)$ and the second population is $N(\mu_2, \sigma_2^2)$. Then, the likelihood for a single observation is

$$f_i(y) = (2\pi\sigma_i^2)^{-1/2} \exp\left\{ -\frac{1}{2} \left( \frac{y - \mu_i}{\sigma_i} \right)^2 \right\}.$$

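Consequently, the likelihood for the first population is larger than that for the second population when

$$\frac{\sigma_2}{\sigma_1} \exp\left\{ -\frac{1}{2} \left[ \left( \frac{y - \mu_1}{\sigma_1} \right)^2 - \left( \frac{y - \mu_2}{\sigma_2} \right)^2 \right] \right\} > 1.$$

Some significant simplification occurs in the special case where $\sigma_1 = \sigma_2$. In this case, we just need the exponent to be positive, meaning that the likelihood for the first population is larger when

$$(y - \mu_2)^2 > (y - \mu_1)^2 \quad \text{or} \quad |y - \mu_2| > |y - \mu_1|.$$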
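The comparison of the two normal likelihoods can be sketched numerically as follows. The function names and the parameter values in the usage lines are assumptions chosen purely for illustration.

```python
from math import exp, pi


def normal_pdf(y, mu, sigma):
    """Univariate normal density with mean mu and standard deviation sigma."""
    return (2 * pi * sigma**2) ** -0.5 * exp(-0.5 * ((y - mu) / sigma) ** 2)


def ml_allocate_normal(y, mu1, sigma1, mu2, sigma2):
    """Allocate y to population 1 when its likelihood is larger, else to population 2."""
    return 1 if normal_pdf(y, mu1, sigma1) > normal_pdf(y, mu2, sigma2) else 2


# Equal variances: the rule reduces to picking the nearer mean.
print(ml_allocate_normal(1.0, 0.0, 1.0, 3.0, 1.0))
# Unequal variances: the nearer mean does not necessarily win.
print(ml_allocate_normal(5.0, 0.0, 3.0, 4.0, 0.2))
```

In the second call the observation is closer to $\mu_2$ in raw distance, but the much smaller $\sigma_2$ makes it many standard deviations away, so the first population has the larger likelihood.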

There is an important multivariate analogue of this result, which will be the basis for much of our discussion in this area.


Multivariate Normal Populations

Suppose that there are $h$ possible populations, where population $i$ is distributed as $N_p(\mu_i, \Sigma)$. Then, the maximum likelihood discriminant rule allocates $y$ to population $j$ where $j$ minimizes the square of the Mahalanobis distance

$$(y - \mu_j)^\top \Sigma^{-1} (y - \mu_j).$$
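A minimal sketch of this minimum-distance rule, assuming NumPy is available, is given below. The means and covariance matrix are made-up values for illustration, and the function names are hypothetical.

```python
import numpy as np


def mahalanobis_sq(y, mu, sigma):
    """Squared Mahalanobis distance (y - mu)' Sigma^{-1} (y - mu)."""
    diff = np.asarray(y, dtype=float) - np.asarray(mu, dtype=float)
    # Solve Sigma x = diff rather than forming the inverse explicitly.
    return float(diff @ np.linalg.solve(sigma, diff))


def ml_allocate_mvn(y, means, sigma):
    """Return the 1-based index of the population whose mean is closest to y."""
    distances = [mahalanobis_sq(y, mu, sigma) for mu in means]
    return int(np.argmin(distances)) + 1


# Illustrative (assumed) parameters: three bivariate populations, common Sigma.
means = [np.array([0.0, 0.0]), np.array([3.0, 3.0]), np.array([0.0, 4.0])]
sigma = np.array([[1.0, 0.5], [0.5, 2.0]])
print(ml_allocate_mvn([2.5, 2.0], means, sigma))
```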

Bayes Discriminant Rules

Return to the example of the doctor diagnosing patients. Suppose that he knows that the probability of a patient having the common cold is much larger than the chance that they have strep throat. He might wish to include this information in his diagnosis.

This is equivalent to saying that various populations have prior probabilities. If we know that population $j$ has prior probability $\pi_j$ (assume that $\pi_j > 0$) we can form the Bayes discriminant rule. This rule says allocate an observation $y$ to the population for which $\pi_j f_j(y)$ is maximized.

Note that the maximum likelihood discriminant rule is a special case of the Bayes discriminant rule. It sets all of the $\pi_j = 1/h$.

Let's briefly review the example from earlier, with two binomial populations, each with $n = 10$ trials and success probabilities $p = .5$ and $p = .7$. Suppose that we know that 90% of all observations are from the second population. Does this change which population we assign observations with 1) $y = 7$, 2) $y = 4$, 3) $y = 6$ successes to?
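The effect of the priors can be checked by weighting each binomial likelihood by its prior probability. This is a sketch under the stated setup (priors 0.1 and 0.9); the helper names are hypothetical.

```python
from math import comb


def binom_pmf(y, n, p):
    """Binomial likelihood of y successes in n trials with success probability p."""
    return comb(n, y) * p**y * (1 - p) ** (n - y)


def bayes_allocate(y, priors, n=10, probs=(0.5, 0.7)):
    """Return the 1-based index of the population maximising prior * likelihood."""
    scores = [pi_j * binom_pmf(y, n, p) for pi_j, p in zip(priors, probs)]
    return scores.index(max(scores)) + 1


for y in (7, 4, 6):
    print(y, bayes_allocate(y, priors=(0.1, 0.9)))
```

With these priors all three observations are allocated to the second population; passing equal priors `(0.5, 0.5)` reproduces the maximum likelihood allocations.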


Optimal Properties

Bayes Discriminant Rules (and the special case of Maximum Likelihood Rules) have a nice optimal property. Let $p_{ii}$ be the probability of correctly allocating an observation from population $i$.

We will say that one rule (with probabilities $p_{ii}$) is as good as another rule (with probabilities $p'_{ii}$) if $p_{ii} \geq p'_{ii}$ for all $i = 1, \ldots, h$.

We will say that the first rule is better than the alternative if, in addition, $p_{ii} > p'_{ii}$ for at least one $i$.

A rule for which there is no better alternative is called admissible.

Bayes Discriminant Rules are admissible.

If we are interested in utilizing some prior probabilities, then we can form the posterior probability of a correct allocation, $\sum_{i=1}^{h} \pi_i p_{ii}$.

Bayes Discriminant Rules have the largest possible posterior probability of correct allocation with respect to the prior.

These properties tell us that we cannot do better using a rule other than a Bayes Discriminant Rule.
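To make these quantities concrete, the sketch below computes $p_{11}$, $p_{22}$ and $\sum_i \pi_i p_{ii}$ for the two-binomial example ($n = 10$, $p = .5$ and $p = .7$), comparing the maximum likelihood rule with the Bayes rule under assumed priors of 0.1 and 0.9. All function names are hypothetical.

```python
from math import comb

N, PROBS = 10, (0.5, 0.7)


def binom_pmf(y, n, p):
    """Binomial likelihood of y successes in n trials with success probability p."""
    return comb(n, y) * p**y * (1 - p) ** (n - y)


def correct_allocation_probs(rule_priors):
    """Return (p_11, p_22) for the rule that maximises rule_priors[j] * f_j(y)."""
    p_correct = [0.0, 0.0]
    for y in range(N + 1):
        like = [binom_pmf(y, N, p) for p in PROBS]
        scores = [w * f for w, f in zip(rule_priors, like)]
        j = scores.index(max(scores))  # population that y is allocated to
        p_correct[j] += like[j]        # correct only if y came from population j
    return tuple(p_correct)


def overall_correct(priors, p_correct):
    """Prior-weighted probability of correct allocation, sum_i pi_i * p_ii."""
    return sum(w * p for w, p in zip(priors, p_correct))


priors = (0.1, 0.9)
ml = correct_allocation_probs((0.5, 0.5))  # equal weights give the ML rule
bayes = correct_allocation_probs(priors)
print(ml, overall_correct(priors, ml))
print(bayes, overall_correct(priors, bayes))
```

In this example neither rule dominates the other on the individual $p_{ii}$ (the Bayes rule gives up accuracy on the first population to gain it on the second), but the Bayes rule has the larger prior-weighted probability of correct allocation.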


Unequal Cost

Return briefly to the medical example. Suppose that the doctor has two possible diagnoses for a patient who came into the office presenting symptoms. One diagnosis is the common cold, the other is pneumonia. The common cold will generally run its course in a week or so, but pneumonia can kill the elderly and children. Thus, mistaking pneumonia for the common cold is a much greater concern than making the other mistake. This brings to mind the idea of misallocation cost.

Define

$$K(i, j) = \begin{cases} 0, & i = j, \\ c_{ij}, & i \neq j. \end{cases}$$

Here, $c_{ij}$ is the cost associated with allocating a member of population $j$ to population $i$. For the definition to make sense, assume that $c_{ij} > 0$ for all $i \neq j$.

We could determine the expected amount of loss for an observation allocated to population $i$ as $\sum_j c_{ij} p_{ij}$, where the $p_{ij}$'s are the probabilities of allocating an observation from population $j$ into population $i$.

We would wish to minimize the amount of loss expected for our rule. To do this for a Bayes discriminant rule, allocate $y$ to the population $j$ which minimizes

$$\sum_{k \neq j} c_{jk} \pi_k f_k(y).$$


Unequal Cost, Cont.

We could assign equal probabilities to each group and get a Maximum Likelihood type rule. Here, we would allocate $y$ to population $j$ which minimizes

$$\sum_{k \neq j} c_{jk} f_k(y).$$

Bayes Discriminant Rules of this type retain the optimal properties from the previous discussion.

Let us return one final time to the example with two binomial populations, each with $n = 10$ trials and with success probabilities $p_1 = .5$ and $p_2 = .7$. Suppose that the probability of being in the second population is still .90. However, now, suppose that the cost of inappropriately allocating into the first population is 1 and the cost of incorrectly allocating into the second population is 5. Which population should we allocate 1) $y = 7$, 2) $y = 4$, 3) $y = 6$ successes to?
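The cost-weighted rule can be sketched by computing, for each candidate population $j$, the quantity $\sum_{k \neq j} c_{jk} \pi_k f_k(y)$ and picking the smallest. The code reads the stated costs as $c_{12} = 1$ and $c_{21} = 5$, which is an interpretation of the wording; the function names are hypothetical.

```python
from math import comb


def binom_pmf(y, n, p):
    """Binomial likelihood of y successes in n trials with success probability p."""
    return comb(n, y) * p**y * (1 - p) ** (n - y)


def min_cost_allocate(y, priors, costs, n=10, probs=(0.5, 0.7)):
    """costs[j][k] is the cost of allocating a member of population k to j (0-based).

    Returns the 1-based index j minimising sum over k != j of c_jk * pi_k * f_k(y).
    """
    weighted = [pi_k * binom_pmf(y, n, p) for pi_k, p in zip(priors, probs)]
    h = len(probs)
    expected = [
        sum(costs[j][k] * weighted[k] for k in range(h) if k != j)
        for j in range(h)
    ]
    return expected.index(min(expected)) + 1


costs = [[0, 1], [5, 0]]  # assumed reading: c_12 = 1, c_21 = 5
for y in (7, 4, 6):
    print(y, min_cost_allocate(y, priors=(0.1, 0.9), costs=costs))
```

Under this reading, $y = 7$ and $y = 6$ are allocated to the second population and $y = 4$ to the first, so the higher cost of wrongly allocating into the second population partly offsets its large prior.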


Discrimination Under Estimation

Now, suppose that we know the form of the distributions of the populations of interest, but we must estimate the parameters. For example, we might know that the distributions are multivariate normal, but we need to estimate the means and variances.

We discussed in Chapter A that maximum likelihood estimates are invariant. We can use this property to form a maximum likelihood discriminant rule when the parameters must be estimated.

The maximum likelihood discriminant rule allocates an observation $y$ to population $j$ when $j$ maximizes the function

$$f_j(y \mid \hat{\theta}),$$

where $\hat{\theta}$ are the maximum likelihood estimates of the unknown parameters.

For instance, suppose that we have two multivariate normal populations with distinct means, but common variance-covariance matrix. We know that the MLEs for $\mu_1$ and $\mu_2$ are $\bar{y}_1$ and $\bar{y}_2$ and the MLE for the common $\Sigma$ is $S$. Using this, find the maximum likelihood discriminant rule.
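One way to approach this exercise numerically is to plug the estimates into the minimum Mahalanobis distance rule, which for two populations is equivalent to allocating $y$ to population 1 when $a^\top \{y - \tfrac{1}{2}(\bar{y}_1 + \bar{y}_2)\} > 0$ with $a = S^{-1}(\bar{y}_1 - \bar{y}_2)$. The sketch below assumes NumPy and takes $S$ to be the pooled within-group sums of squares and products divided by $n_1 + n_2$; the function names and data are hypothetical.

```python
import numpy as np


def fit_two_normal(sample1, sample2):
    """Estimate both mean vectors and the common covariance from two samples."""
    x1 = np.asarray(sample1, dtype=float)
    x2 = np.asarray(sample2, dtype=float)
    m1, m2 = x1.mean(axis=0), x2.mean(axis=0)
    # Pooled within-group SSCP matrix divided by n1 + n2 (assumed form of S).
    w = (x1 - m1).T @ (x1 - m1) + (x2 - m2).T @ (x2 - m2)
    return m1, m2, w / (len(x1) + len(x2))


def plug_in_allocate(y, m1, m2, s):
    """Allocate y to population 1 when a'(y - midpoint) > 0, else to population 2."""
    a = np.linalg.solve(s, m1 - m2)
    return 1 if a @ (np.asarray(y, dtype=float) - 0.5 * (m1 + m2)) > 0 else 2


# Made-up training samples, for illustration only.
sample1 = [[0, 0], [1, 0], [0, 1], [1, 1]]
sample2 = [[4, 4], [5, 4], [4, 5], [5, 5]]
m1, m2, s = fit_two_normal(sample1, sample2)
print(plug_in_allocate([1, 1], m1, m2, s), plug_in_allocate([4, 3], m1, m2, s))
```

The scaling of $S$ does not affect the allocation, since only the sign of the linear function matters.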


Likelihood Ratio Discriminant Rules

An alternative to the maximum likelihood discriminant rule is the likelihood ratio discriminant rule.

Form the set of hypotheses

$H_i$: $y$ and $y_{ik}$, $k = 1, \ldots, n_i$, are from population $i$, and $y_{jk}$, $k = 1, \ldots, n_j$, are from population $j \neq i$.

Allocate $y$ to population $i$ for which the hypothesis, $H_i$, has the largest likelihood.

When we consider multivariate normality with common variance-covariance matrix and two populations with equal sample sizes ($n_1 = n_2$), this rule is identical to the maximum likelihood discriminant rule.

The procedures are asymptotically equivalent if $n_1$ and $n_2$ are both large.

The likelihood ratio discriminant rule has a slight tendency to allocate $y$ to the population with the larger sample size.

This differs from the maximum likelihood discriminant rule only in what values are used to calculate the parameter estimates. When the sample sizes are large there is very little influence of including another value. Thus, the asymptotic results are intuitively reasonable.


Example

Suppose that we again have two binomial populations with $n = 10$ trials, but now unknown success probabilities $p_1$ and $p_2$. First, let the data from the first population be 5, 2, 7, 8, 4 and the data from the second population be 6, 5, 7, 7, 8. We wish to determine the appropriate population for an observation with $y = 6$.

First, use the maximum likelihood discriminant rule:

Now, consider using the likelihood ratio discriminant rule:
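Both rules for this example can be sketched in a few lines. The maximum likelihood rule estimates each $p_j$ from the training data only, while the likelihood ratio rule re-estimates the parameters under each hypothesis $H_i$ with $y$ added to sample $i$ and compares the full-data likelihoods. The function names below are hypothetical.

```python
from math import comb, log

N = 10
DATA = {1: [5, 2, 7, 8, 4], 2: [6, 5, 7, 7, 8]}


def mle(sample):
    """MLE of the success probability: total successes over total trials."""
    return sum(sample) / (N * len(sample))


def log_lik(sample, p):
    """Binomial log-likelihood of a sample of counts, each out of N trials."""
    return sum(log(comb(N, x)) + x * log(p) + (N - x) * log(1 - p) for x in sample)


def ml_rule(y):
    """Plug the training-sample MLEs into each likelihood for y alone."""
    scores = {j: log_lik([y], mle(s)) for j, s in DATA.items()}
    return max(scores, key=scores.get)


def lr_rule(y):
    """Under H_i, y joins sample i; maximise the likelihood of all the data."""
    scores = {}
    for i in DATA:
        samples = {j: (s + [y] if j == i else s) for j, s in DATA.items()}
        scores[i] = sum(log_lik(s, mle(s)) for s in samples.values())
    return max(scores, key=scores.get)


print(ml_rule(6), lr_rule(6))
```

Here the estimates are $\hat{p}_1 = 26/50$ and $\hat{p}_2 = 33/50$, and both rules allocate $y = 6$ to the second population.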

Is Discrimination Worthwhile?

Discrimination is pointless if, in fact, the populations are all the same.

Even if this is the case, the sample parameter estimates will be different.

We could test to determine if the populations are different using the methods of MANOVA prior to attempting discrimination.


Fisher's Linear Discriminant Function

If we do not wish to assume a distribution function for our data, we can instead consider Fisher's linear discriminant function. In this case, we will look for sensible rules.

Look for the linear function $a^\top y$ which maximizes the ratio of the model sums of squares to the error sums of squares.

The $a^\top y$ which maximizes

$$\frac{a^\top H a}{a^\top E a}$$

is called Fisher's linear discriminant function.

$a$ is the eigenvector of $E^{-1}H$ which corresponds to the largest eigenvalue.

Once $a$ has been identified, we could allocate $y$ to population $j$ if

$$|a^\top y - a^\top \bar{y}_j| < |a^\top y - a^\top \bar{y}_i| \quad \text{for all } i \neq j.$$

It can be shown that when there are only two possible populations from the multivariate normal distribution with different means and identical variance, this rule and the maximum likelihood discriminant rule are identical.
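A minimal NumPy sketch of this procedure follows. It assumes $H$ and $E$ are the between-group (model) and within-group (error) sums of squares and products matrices, as in MANOVA, and that $E$ is nonsingular; the function names and data are hypothetical.

```python
import numpy as np


def fisher_direction(groups):
    """Eigenvector of E^{-1} H belonging to the largest eigenvalue."""
    groups = [np.asarray(g, dtype=float) for g in groups]
    grand_mean = np.vstack(groups).mean(axis=0)
    p = grand_mean.size
    H = np.zeros((p, p))  # between-group SSCP (assumed definition)
    E = np.zeros((p, p))  # within-group SSCP (assumed definition)
    for g in groups:
        d = (g.mean(axis=0) - grand_mean)[:, None]
        H += len(g) * (d @ d.T)
        centered = g - g.mean(axis=0)
        E += centered.T @ centered
    eigvals, eigvecs = np.linalg.eig(np.linalg.solve(E, H))
    return eigvecs[:, np.argmax(eigvals.real)].real


def fisher_allocate(y, groups, a):
    """Allocate y to the group whose projected mean a'ybar_j is closest to a'y."""
    score = a @ np.asarray(y, dtype=float)
    gaps = [abs(score - a @ np.asarray(g, dtype=float).mean(axis=0)) for g in groups]
    return int(np.argmin(gaps)) + 1


# Made-up training samples, for illustration only.
groups = [[[0, 0], [1, 0], [0, 1], [1, 1]], [[4, 4], [5, 4], [4, 5], [5, 5]]]
a = fisher_direction(groups)
print(fisher_allocate([1, 1], groups, a), fisher_allocate([4, 3], groups, a))
```

The sign and scale of $a$ are arbitrary, which is harmless here because the allocation only uses absolute differences of projected values.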


Probabilities of Misclassification

When the distributions are exactly known, we can determine the misclassification probabilities exactly. However, when we need to estimate the population parameters, we will also have to estimate the probability of misclassification.

Naive method:

We said that the probabilities can be calculated exactly when the distribution is known.

When we don't know the parameters, we estimate them. Plugging these estimates into the form for the misclassification probabilities results in estimates of these probabilities.

This method tends to be optimistic when the number of samples in one or more populations is small.

Resubstitution Method:

We could use the proportion of the samples from population $i$ which would be allocated to another population as an estimate of the misclassification probability.

This method is also optimistic when the number of samples is small.


Probabilities of Misclassification, Cont.

Jack-knife estimates:

The problem with the previous method is that the observations are used both to determine the parameter estimates and also to determine the misclassification probabilities based upon the discriminant rule.

Instead, determine the discriminant rule based upon all of the data except the $k$th observation from the $j$th population.

Then, determine if the $k$th observation would be misclassified under this rule.

Perform this for all $n_j$ observations in population $j$. An estimate of the misclassification probability would be the fraction of the $n_j$ observations which were misclassified.

Repeat this procedure for the other $i \neq j$ populations.

This procedure is more reliable than the others, but it is also much more computationally intensive.

Note that estimates based upon all methods are more accurate when the samples from each population are large.
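The resubstitution and jack-knife estimates can be compared with a short sketch. For simplicity it assumes a univariate nearest-sample-mean rule (the equal-variance normal rule with estimated means), which is a stand-in and not necessarily the rule used in the original course; the data are the two samples from the earlier example and the function names are hypothetical.

```python
from statistics import mean


def nearest_mean(y, samples):
    """Return the 0-based index of the sample whose mean is closest to y."""
    means = [mean(s) for s in samples]
    return min(range(len(means)), key=lambda j: abs(y - means[j]))


def resubstitution_error(samples, j):
    """Fraction of sample j misallocated by the rule fitted to all of the data."""
    wrong = sum(nearest_mean(y, samples) != j for y in samples[j])
    return wrong / len(samples[j])


def jackknife_error(samples, j):
    """Fraction of sample j misallocated when each point is left out in turn."""
    wrong = 0
    for k, y in enumerate(samples[j]):
        # Refit the rule without the kth observation of population j.
        held_out = [
            (s[:k] + s[k + 1:] if i == j else s) for i, s in enumerate(samples)
        ]
        wrong += nearest_mean(y, held_out) != j
    return wrong / len(samples[j])


samples = [[5, 2, 7, 8, 4], [6, 5, 7, 7, 8]]
for j in range(2):
    print(j + 1, resubstitution_error(samples, j), jackknife_error(samples, j))
```

The jack-knife version refits the rule once per observation, which is where the extra computational cost comes from.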
