A look at open-source alternatives to ChatGPT

By Ben Dickson - April 17, 2023

6 min read

Since its release in November 2022, ChatGPT has captured the imagination of the

world. People are using it for all kinds of tasks and applications. It has the

potential to change popular applications and create new ones.

But ChatGPT has also triggered an AI arms race between tech giants such as

Microsoft and Google. This has pushed the industry toward more competition and

less openness around large language models (LLMs). The source code, model

architecture, weights, and training data of these instruction-following LLMs are

not available to the public. Most of them are available either through commercial

APIs or black-box web applications.

Closed LLMs such as ChatGPT, Bard, and Claude have many advantages, including

ease of access to sophisticated technology. But they also pose limits to research

labs and scientists who want to study and better understand LLMs. They are also

inconvenient for companies and organizations that want to create and run their

own models.

Fortunately, in tandem with the race to create commercial LLMs, there is also a

community effort to create open-source models that match the performance of

state-of-the-art LLMs. These models can help improve research by sharing

results. They can also help prevent a few wealthy organizations from having too

much sway and power over the LLM market.

LLaMA

One of the most important open-source language models comes from FAIR,

Meta’s AI research lab. In February, FAIR released LLaMA, a family of LLMs that

come in four different sizes: 7, 13, 33, and 65 billion parameters. (ChatGPT is

based on the 175-billion-parameter InstructGPT model.)

FAIR researchers trained LLaMA 65B and LLaMA 33B on 1.4 trillion tokens, and

the smallest model, LLaMA 7B, on one trillion tokens. (GPT-3 175B, which is the

base model for InstructGPT, drew on a training corpus of about 499 billion tokens.)

LLaMA is not an instruction-following LLM like ChatGPT. But the idea behind the

smaller size of LLaMA is that smaller models pre-trained on more tokens are

easier to retrain and fine-tune for specific tasks and use cases. This has made it

possible for other researchers to fine-tune the model for ChatGPT-like

performance through techniques such as reinforcement learning from human

feedback (RLHF).
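To make the "smaller models, more tokens" idea concrete, the following sketch computes how many tokens of training data each model had per parameter. It takes the parameter and token counts quoted above at face value. The `MODELS` table and the `tokens_per_parameter` helper are illustrative names, not part of any LLaMA tooling.

```python
# Parameter counts and token counts (both in billions), as quoted in the text above.
MODELS = {
    "LLaMA 7B": (7, 1000),
    "LLaMA 33B": (33, 1400),
    "LLaMA 65B": (65, 1400),
    "GPT-3 175B": (175, 499),
}


def tokens_per_parameter(params_billions: float, tokens_billions: float) -> float:
    """Return the number of training-data tokens available per model parameter."""
    return tokens_billions / params_billions


ratios = {
    name: tokens_per_parameter(params, tokens)
    for name, (params, tokens) in MODELS.items()
}

for name, ratio in ratios.items():
    print(f"{name}: {ratio:.1f} tokens per parameter")
```

By this rough measure, LLaMA 7B has about 143 tokens per parameter, against roughly 3 for GPT-3 175B.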

Meta released the model under “a noncommercial license focused on research use

cases.” It will only make it accessible to academic researchers, government-

affiliated organizations, civil society, and research labs on a case-by-case basis.

Meta has published a paper and a model card for LLaMA, and researchers can request access to the trained models through an application form.

(The model was leaked online shortly after its release, which effectively made it

available to everyone.)

Alpaca

In March, researchers at Stanford released Alpaca, an instruction-following LLM

based on LLaMA 7B. They fine-tuned the LLaMA model on a dataset of 52,000

instruction-following examples generated from InstructGPT.

They used a technique called self-instruct, in which an LLM generates instruction,

input, and output samples to fine-tune itself. Self-instruct starts with a small seed

of human-written examples that include instruction and output. The researchers

use the examples to prompt the language model to generate similar examples.

They then review and filter the generated examples, adding the high-quality

outputs to the seed pool and removing the rest. They repeat the process until

they obtain a large-enough dataset to fine-tune the target model.
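The loop described above can be sketched roughly as follows. This is a minimal illustration, not the Stanford team's code: `self_instruct`, `toy_generate`, and `toy_keep` are hypothetical names, the generator is a stand-in for a real LLM call, and the filter is a placeholder for whatever quality checks a real pipeline would apply.

```python
import itertools
import random
from typing import Callable, Dict, List

Example = Dict[str, str]  # keys: "instruction", "output"


def self_instruct(
    seed: List[Example],
    generate: Callable[[List[Example]], List[Example]],
    keep: Callable[[Example], bool],
    target_size: int,
    demos_per_prompt: int = 3,
    max_rounds: int = 1000,
) -> List[Example]:
    """Grow a seed pool of examples until it holds target_size items."""
    rng = random.Random(0)  # fixed seed so the sketch is reproducible
    pool = list(seed)
    seen = {ex["instruction"] for ex in pool}
    for _ in range(max_rounds):
        if len(pool) >= target_size:
            break
        # Prompt the model with a few examples drawn from the current pool.
        demos = rng.sample(pool, min(demos_per_prompt, len(pool)))
        for candidate in generate(demos):
            # Keep only new candidates that pass the filter; discard the rest.
            if candidate["instruction"] not in seen and keep(candidate):
                pool.append(candidate)
                seen.add(candidate["instruction"])
    return pool[:target_size]


# Toy stand-ins (hypothetical): a real pipeline would call an LLM here.
_counter = itertools.count(1)


def toy_generate(demos: List[Example]) -> List[Example]:
    """Pretend to be a model by emitting one numbered variant per demo."""
    return [
        {
            "instruction": f"{d['instruction']} (variant {next(_counter)})",
            "output": d["output"],
        }
        for d in demos
    ]


def toy_keep(example: Example) -> bool:
    """Placeholder quality filter: reject examples with an empty output."""
    return bool(example["output"].strip())


seed_pool = [
    {"instruction": "Translate 'hello' to French.", "output": "bonjour"},
    {"instruction": "Give a synonym for 'fast'.", "output": "quick"},
]
dataset = self_instruct(seed_pool, toy_generate, toy_keep, target_size=6)
print(len(dataset))
```

The accepted examples feed back into the pool, so later prompts can draw on generated examples as well as the human-written seeds.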

Alpaca LLM training process (source: Stanford.edu)

According to their preliminary experiments, Alpaca’s performance is very similar

to InstructGPT.

The Stanford researchers released the entire self-instruct dataset and the details of the data generation process, along with the code for generating the data and fine-tuning the model. (Since Alpaca is based on LLaMA, you must obtain the original model from Meta.)

According to the researchers, sample generation and fine-tuning together cost less than $600, which puts the approach within reach of cash-strapped labs and organizations.

However, the researchers stress that Alpaca “is intended only for academic

research and any commercial use is prohibited.” It was created from LLaMA,

which makes it subject to the same licensing rules as its base model. And since

the researchers used InstructGPT to generate the fine-tuning data, they are

subject to OpenAI’s terms of use, which prohibit developing models that compete

with OpenAI.

Vicuna

Researchers at UC Berkeley, Carnegie Mellon University, Stanford, and UC San

Diego released Vicuna, another instruction-following LLM based on LLaMA. Vicuna

comes in two sizes, 7 billion and 13 billion parameters.

The researchers fine-tuned Vicuna using the training code from Alpaca and

70,000 examples from ShareGPT, a website where users can share their

conversations with ChatGPT. They made some enhancements to the training

process to support longer conversation contexts. They also used the SkyPilot

machine learning workload manager to reduce the costs of training from $500 to

around $140.

Vicuna LLM training process (source: lmsys.org)

Preliminary evaluations, which used GPT-4 as the judge, show that Vicuna outperforms LLaMA and Alpaca, and it is

also very close to Bard and ChatGPT. The researchers released the model

weights along with a full framework to install, train, and run LLMs. There is also a

very interesting online demo where you can test and compare Vicuna with other

open-source instruction LLMs.

Vicuna’s online demo is “a research preview intended for non-commercial use

only.” To run your own model, you must first obtain the LLaMA instance from Meta

and apply the weight deltas to it.
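The idea behind weight deltas can be illustrated with a small sketch. It assumes a delta is simply the element-wise difference between the fine-tuned and base weights, and it uses plain Python lists as a stand-in for real tensors. The names `make_delta` and `apply_delta` here are illustrative. The actual Vicuna tooling operates on full model checkpoints.

```python
from typing import Dict, List

Weights = Dict[str, List[float]]  # parameter name -> flattened values


def _check_same_shape(a: Weights, b: Weights) -> None:
    """Raise ValueError unless both checkpoints have matching parameters."""
    if a.keys() != b.keys():
        raise ValueError("parameter names differ")
    for name in a:
        if len(a[name]) != len(b[name]):
            raise ValueError(f"size mismatch for {name}")


def make_delta(base: Weights, tuned: Weights) -> Weights:
    """Delta = fine-tuned weights minus base weights, per parameter."""
    _check_same_shape(base, tuned)
    return {n: [t - b for b, t in zip(base[n], tuned[n])] for n in base}


def apply_delta(base: Weights, delta: Weights) -> Weights:
    """Recover the fine-tuned weights by adding the delta to the base."""
    _check_same_shape(base, delta)
    return {n: [b + d for b, d in zip(base[n], delta[n])] for n in base}


# Toy checkpoints (made-up numbers, chosen to be exact in floating point).
base = {"layer.weight": [0.5, -1.0, 2.0], "layer.bias": [0.25]}
tuned = {"layer.weight": [0.75, -1.5, 2.0], "layer.bias": [0.0]}

delta = make_delta(base, tuned)
restored = apply_delta(base, delta)
print(restored == tuned)
```

Distributing only the delta means the fine-tuned model cannot be reconstructed without the original LLaMA weights, which is why access to the base model is still required.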

Dolly

In March, Databricks released Dolly, a fine-tuned version of EleutherAI’s GPT-J 6B.

The researchers were inspired by the work done by the teams behind LLaMA and

Alpaca. Training Dolly cost less than $30 and took 30 minutes on a single

machine.

The use of the EleutherAI base model removed the limitations Meta imposed on

LLaMA-derived LLMs. However, Databricks trained Dolly on the same data that the

Stanford Alpaca team had generated through ChatGPT. Therefore, the model still

couldn’t be used for commercial purposes due to the non-compete limits OpenAI

imposes on data generated by ChatGPT.

In April, the same team released Dolly 2.0, a 12-billion-parameter model based on EleutherAI’s Pythia model. This time, Databricks fine-tuned the model on a dataset of 15,000 instruction-following examples written entirely by humans. They gathered the examples in an interesting, gamified process involving 5,000 of Databricks’ own staff.

Databricks released the trained Dolly 2.0 model, which carries none of the licensing limitations of the previous models and can be used for commercial purposes. They also released the 15K instruction-following corpus that they used to fine-tune the Pythia model. Machine learning engineers can use this corpus to fine-tune their own LLMs.

Open Assistant

In all fairness, Open Assistant is such an interesting project that I think it

deserves its own independent article. It is a ChatGPT-like language model created

from the outset with the vision to prevent big corporations from monopolizing the

LLM market.

The team will open-source all their models, datasets, development, data gathering, everything. It is a full, transparent, community effort. All the people involved in the project are volunteers dedicated to open science. It is a different vision from what is happening behind the walled gardens of big tech companies.

The best way to learn about Open Assistant is to watch the entertaining videos of

its co-founder and team lead Yannic Kilcher, who has long been an outspoken

critic of the closed approach of organizations such as OpenAI.

Open Assistant has different versions based on LLaMA and Pythia. You can use the

Pythia version for commercial purposes. Most of the models can run on a single

GPU.

More than 13,000 volunteers from across the globe helped collect the examples

used to fine-tune the base models. The team will soon release all the data along

with a paper that explains the entire project. The trained models are available on

Hugging Face. The project’s GitHub page contains the full code for training the

model and the frontend to use the model.

The project also has a website where you can chat with Open Assistant and test

the model. And it has a task dashboard where you can contribute to the project

by creating prompts or labeling outputs.

The beauty of open source

The recent push to build open-source LLMs has done a lot to revive the promise

of collaborative efforts and shared power that was the original promise of the

internet. It shows how all these different communities can help each other and

help advance the field.

The release of the LLaMA models helped spur the movement. The Alpaca project

showed that creating instruction-tuned LLMs did not require huge efforts and

costs. This in turn inspired the Vicuna project, which further reduced the costs of

training and gathering data. Dolly took the efforts in a different direction, showing

the benefits of community-led data-gathering efforts to work around the non-

compete requirements of commercial models.

There are several other projects that are worth mentioning, including UC

Berkeley’s Koala and llama.cpp, a C++ implementation of the LLaMA models that

can run on ARM processors. It will be interesting to see how the open-source

movement develops in the coming months and how it will affect the LLM market.

Ben Dickson

Ben is a software engineer and the founder of TechTalks. He writes about technology, business and politics.

Privacy & Cookies Policy

You might also like

- Ebook Strategic Management Competitiveness and Globalization Concepts and Cases 14E PDF Full Chapter PDFDocument67 pagesEbook Strategic Management Competitiveness and Globalization Concepts and Cases 14E PDF Full Chapter PDFsamuel.chapman41697% (38)

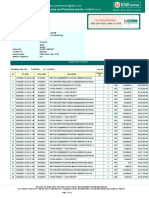

- Tirupati Telecom Primary Account Holder Name: Your A/C StatusDocument12 pagesTirupati Telecom Primary Account Holder Name: Your A/C StatusMy PhotosNo ratings yet

- (Signals and Communication Technology) Markus Rupp, Stefan Schwarz, Martin Taranetz (auth.)-The Vienna LTE-Advanced Simulators_ Up and Downlink, Link and System Level Simulation-Springer Singapore (20.pdfDocument383 pages(Signals and Communication Technology) Markus Rupp, Stefan Schwarz, Martin Taranetz (auth.)-The Vienna LTE-Advanced Simulators_ Up and Downlink, Link and System Level Simulation-Springer Singapore (20.pdfLiba Bali100% (1)

- John Carroll University Magazine Spring 2012Document54 pagesJohn Carroll University Magazine Spring 2012johncarrolluniversityNo ratings yet

- UniCel DxI and Access 2 LIS Vendor InformationDocument64 pagesUniCel DxI and Access 2 LIS Vendor InformationOmerNo ratings yet

- Ways To Use LLM in Finance OrganisationDocument5 pagesWays To Use LLM in Finance OrganisationMd Ahsan AliNo ratings yet

- Understand The Technology EcosystemDocument3 pagesUnderstand The Technology EcosystemGowtham ThalluriNo ratings yet

- OpenLLAMA-The Future of Large Language ModelsDocument5 pagesOpenLLAMA-The Future of Large Language ModelsMy SocialNo ratings yet

- 19 Data Science and Machine Learning Tools For People Who Don't Know ProgrammingDocument8 pages19 Data Science and Machine Learning Tools For People Who Don't Know ProgrammingNikhitha PaiNo ratings yet

- Building A PDF Knowledge Bot With Open-Source LLMs - A Step-by-Step Guide - ShakudoDocument13 pagesBuilding A PDF Knowledge Bot With Open-Source LLMs - A Step-by-Step Guide - ShakudoPocho OrtizNo ratings yet

- Generative AI Lifecycle Patterns. Part 2_ Maturing GenAI _ Patterns… _ by Ali Arsanjani _ Sep, 2023 _ MediumDocument24 pagesGenerative AI Lifecycle Patterns. Part 2_ Maturing GenAI _ Patterns… _ by Ali Arsanjani _ Sep, 2023 _ MediumUri KatsirNo ratings yet

- Red Pajama: An Open-Source Llama ModelDocument3 pagesRed Pajama: An Open-Source Llama ModelMy SocialNo ratings yet

- GPT4架构揭秘Document12 pagesGPT4架构揭秘texujingyingguanli126.comNo ratings yet

- Meet GorillaDocument7 pagesMeet GorillaPat AlethiaNo ratings yet

- Evaluation and Comparison of AutoML Approaches and ToolsDocument9 pagesEvaluation and Comparison of AutoML Approaches and ToolsMathias RosasNo ratings yet

- Open Assistant-Open-Source Chat AssistantDocument2 pagesOpen Assistant-Open-Source Chat AssistantMy SocialNo ratings yet

- Data ScienceDocument39 pagesData ScienceMohamed HarunNo ratings yet

- Dolly2.0 Ready For Commercial UseDocument3 pagesDolly2.0 Ready For Commercial UseMy SocialNo ratings yet

- Open Source System Dynamics With Simantics and Openmodelica: Teemu LempinenDocument17 pagesOpen Source System Dynamics With Simantics and Openmodelica: Teemu LempinenribozymesNo ratings yet

- Aryan A. What Is LLMOps. Large Language Models in Production 2024Document67 pagesAryan A. What Is LLMOps. Large Language Models in Production 2024studio1mungaiNo ratings yet

- Deepbots: A Webots-Based Deep Reinforcement Learning Framework For RoboticsDocument12 pagesDeepbots: A Webots-Based Deep Reinforcement Learning Framework For Roboticsbuihuyanh2018No ratings yet

- IntroductionDocument10 pagesIntroductionUjjwal Kumar SinghNo ratings yet

- Using Chatgpt, Gpt-4, & Large Language Models in The EnterpriseDocument20 pagesUsing Chatgpt, Gpt-4, & Large Language Models in The Enterpriseppriya4191No ratings yet

- Kaggle BookDocument57 pagesKaggle BookShahla AliNo ratings yet

- Modul 2 Data ScienceDocument10 pagesModul 2 Data Scienceandreas ryanNo ratings yet

- How ChatGPT Works The Model Behind The Bot by Molly Ruby Towards Data ScienceDocument15 pagesHow ChatGPT Works The Model Behind The Bot by Molly Ruby Towards Data SciencechonkNo ratings yet

- Llama 2: An Open-Source Commercially Usable Chat Model by Meta AIDocument7 pagesLlama 2: An Open-Source Commercially Usable Chat Model by Meta AIMy SocialNo ratings yet

- Acm Research Papers Software EngineeringDocument4 pagesAcm Research Papers Software Engineeringgz7p29p0100% (1)

- A Machine Learning Library in C++Document4 pagesA Machine Learning Library in C++jyotibose09No ratings yet

- Data Science IBMDocument157 pagesData Science IBMmonde lyitaNo ratings yet

- Chat GPTDocument37 pagesChat GPTsophia787No ratings yet

- Feu Et Al 21Document5 pagesFeu Et Al 21Angelica FernandezNo ratings yet

- Vicuna - Open-Source Chatbot - Alternative For GPT-4Document3 pagesVicuna - Open-Source Chatbot - Alternative For GPT-4My SocialNo ratings yet

- LLM UpdatesDocument6 pagesLLM UpdatesasimovidickNo ratings yet

- Fabric Data Science 1 150Document150 pagesFabric Data Science 1 150pascalburumeNo ratings yet

- Running Llama 2 On CPU Inference Locally For Document Q&A - by Kenneth Leung - Jul, 2023 - Towards Data ScienceDocument21 pagesRunning Llama 2 On CPU Inference Locally For Document Q&A - by Kenneth Leung - Jul, 2023 - Towards Data Sciencevan mai100% (1)

- Fabric Data ScienceDocument652 pagesFabric Data SciencepascalburumeNo ratings yet

- Exploring LIMA - Language Model With A Unique Training ApproachDocument5 pagesExploring LIMA - Language Model With A Unique Training ApproachMy SocialNo ratings yet

- 2023 GPT4All Technical ReportDocument3 pages2023 GPT4All Technical ReportLeonel rugamaNo ratings yet

- Data Analysis and Machine Learning With Kaggle How To Win Competitions On Kaggle and Build A Successful Career in Data Science 1801817472 9781801817479Document48 pagesData Analysis and Machine Learning With Kaggle How To Win Competitions On Kaggle and Build A Successful Career in Data Science 1801817472 9781801817479milosNo ratings yet

- Orca: A 13-Billion Parameter Model That Outperforms Other LLMs by Learning From GPT-4Document7 pagesOrca: A 13-Billion Parameter Model That Outperforms Other LLMs by Learning From GPT-4My SocialNo ratings yet

- System Simulation & Modeling: Name:Shubham Sharma Roll No.:11300490 Branch: Btech - Cse Section: A246Document12 pagesSystem Simulation & Modeling: Name:Shubham Sharma Roll No.:11300490 Branch: Btech - Cse Section: A246Ishaan SharmaNo ratings yet

- Machine Learning With Python A Practical Beginners' Guide (Machine Learning With Python For Beginners Book 2) (Oliver Theobald) (Z-Library)Document146 pagesMachine Learning With Python A Practical Beginners' Guide (Machine Learning With Python For Beginners Book 2) (Oliver Theobald) (Z-Library)Olusola AkintunlajiNo ratings yet

- 2019 Anaconda Buyers GuideDocument11 pages2019 Anaconda Buyers GuidebgpexpertNo ratings yet

- List of Open Sourced Fine-Tuned Large Language Models (LLM) - by Sung Kim - Geek Culture - Mar, 2023 - MediumDocument18 pagesList of Open Sourced Fine-Tuned Large Language Models (LLM) - by Sung Kim - Geek Culture - Mar, 2023 - MediumfmendesNo ratings yet

- Effortless Models Deployment With MLFlow - by Facundo Santiago - MediumDocument15 pagesEffortless Models Deployment With MLFlow - by Facundo Santiago - Mediumsalman kadayaNo ratings yet

- INTRODUCTION TO OPEN SOURCE TECHNOLOGIES-PHP, MysqlDocument12 pagesINTRODUCTION TO OPEN SOURCE TECHNOLOGIES-PHP, Mysqlanon_48973018No ratings yet

- A Testing Methodology For An Open Software E-LearnDocument18 pagesA Testing Methodology For An Open Software E-LearnAndressa MariaNo ratings yet

- SSRN Id4655822Document9 pagesSSRN Id4655822pengsongzhang96No ratings yet

- GPT-4 Architecture, Infrastructure, Training Dataset, Costs, Vision, MoEDocument4 pagesGPT-4 Architecture, Infrastructure, Training Dataset, Costs, Vision, MoEjohn clarityNo ratings yet

- Pillsbury Final Paper CapstoneDocument13 pagesPillsbury Final Paper Capstoneapi-754061642No ratings yet

- An architecture and platform for developing distributed recommendation algorithms on large-scale social networksDocument19 pagesAn architecture and platform for developing distributed recommendation algorithms on large-scale social networksYivofoNo ratings yet

- MahoutDocument6 pagesMahoutPappu KhanNo ratings yet

- ML Ops White Paper 4Document17 pagesML Ops White Paper 4Aleksandar StankovicNo ratings yet

- 2 - Data Science ToolsDocument21 pages2 - Data Science ToolsDaniel VasconcellosNo ratings yet

- Data ScienceDocument38 pagesData ScienceDINESH REDDYNo ratings yet

- McKynsey Exploring Opportunities in The Gen AI Value Chain - McKinseyDocument16 pagesMcKynsey Exploring Opportunities in The Gen AI Value Chain - McKinsey8kxzj9bfmrNo ratings yet

- NVIDIA RAG WhitepaperDocument7 pagesNVIDIA RAG Whitepaperl1h3n3No ratings yet

- Modelscope-Agent: Building Your Customizable Agent System With Open-Source Large Language ModelsDocument13 pagesModelscope-Agent: Building Your Customizable Agent System With Open-Source Large Language ModelsbilletonNo ratings yet

- How To Deploy and Test Your Models Using FastAPI and Google Cloud Run - by Antons Tocilins-Ruberts - Towards Data ScienceDocument25 pagesHow To Deploy and Test Your Models Using FastAPI and Google Cloud Run - by Antons Tocilins-Ruberts - Towards Data ScienceitsjustlibNo ratings yet

- s10270 023 01105 5 - NeweDocument13 pagess10270 023 01105 5 - NeweOmer IqbalNo ratings yet

- Literature Survey PetuumDocument10 pagesLiterature Survey PetuumSanjayNo ratings yet

- Machine Learning in Production: Master the art of delivering robust Machine Learning solutions with MLOps (English Edition)From EverandMachine Learning in Production: Master the art of delivering robust Machine Learning solutions with MLOps (English Edition)No ratings yet

- Valuing Internal Communication Management and Employee PerspectivesDocument23 pagesValuing Internal Communication Management and Employee PerspectivesKevin RuckNo ratings yet

- T.I.M.E. European Summer School June 27 To July 9, 2011: Ustainability ConomicsDocument4 pagesT.I.M.E. European Summer School June 27 To July 9, 2011: Ustainability ConomicsalpalpalpalpNo ratings yet

- Crastin PBT - Rynite Pet PDFDocument38 pagesCrastin PBT - Rynite Pet PDFkfaravNo ratings yet

- THINK L4 Unit 5 Grammar ExtensionDocument2 pagesTHINK L4 Unit 5 Grammar Extensionniyazi polatNo ratings yet

- FCE Exam 3 ListeningDocument6 pagesFCE Exam 3 ListeningSaul MendozaNo ratings yet

- A Astronom As EsDocument40 pagesA Astronom As EsareianoarNo ratings yet

- Ecology and Distribution of Sea Buck Thorn in Mustang and Manang District, NepalDocument58 pagesEcology and Distribution of Sea Buck Thorn in Mustang and Manang District, NepalSantoshi ShresthaNo ratings yet

- Victron With Pylon Configuration SettingsDocument3 pagesVictron With Pylon Configuration Settingswarick mNo ratings yet

- R Data Visualization Cookbook Sample ChapterDocument25 pagesR Data Visualization Cookbook Sample ChapterPackt PublishingNo ratings yet

- B787 MmelDocument215 pagesB787 Mmeljoker hotNo ratings yet

- U.S. Department of Transportation National Highway Traffic Safety AdministrationDocument39 pagesU.S. Department of Transportation National Highway Traffic Safety AdministrationDiego MontanezNo ratings yet

- Passage 2 For Grade 6Document3 pagesPassage 2 For Grade 6Irish Joy CachaperoNo ratings yet

- What Are The Benefits of An Intensive Outpatient ProgramDocument1 pageWhat Are The Benefits of An Intensive Outpatient ProgramJack williamNo ratings yet

- Lohia (Democracy)Document11 pagesLohia (Democracy)Madhu SharmaNo ratings yet

- Gear Tooth Contact PatternsDocument3 pagesGear Tooth Contact PatternsToua YajNo ratings yet

- Advance Data StructuresDocument184 pagesAdvance Data StructureskamsiNo ratings yet

- Environmental Conservation in Bhutan: Organization and PolicyDocument21 pagesEnvironmental Conservation in Bhutan: Organization and PolicyApriele Rose Gaudicos HermogenesNo ratings yet

- Ch. 9 Sampling Distributions and Confidence Intervals For ProportionsDocument22 pagesCh. 9 Sampling Distributions and Confidence Intervals For ProportionsThanh PhamNo ratings yet

- Mini Proj RCT 222 PDFDocument34 pagesMini Proj RCT 222 PDF4073 kolakaluru mounishaNo ratings yet

- Marketing Information SystemDocument3 pagesMarketing Information SystemsanjayNo ratings yet

- E 10durometerDocument14 pagesE 10durometerDika DrogbaNo ratings yet

- MODULE 4 - Sliding Contact BearingDocument14 pagesMODULE 4 - Sliding Contact BearingBoris PalaoNo ratings yet

- PHPA Polymer ConcentrationDocument15 pagesPHPA Polymer ConcentrationWaleedm MariaNo ratings yet

- Gilbert Erector Set GuidebookDocument72 pagesGilbert Erector Set Guidebookdomingojs233710No ratings yet

- Handout - 20475 - AU 2016 Class Handout - Revit and Dynamo For Landscape ArchitectureDocument58 pagesHandout - 20475 - AU 2016 Class Handout - Revit and Dynamo For Landscape ArchitectureKelvinatorNo ratings yet

- Oops FinalDocument47 pagesOops Finaludaya57No ratings yet