Uploaded by jelyntabuena977

Chatbots like ChatGPT have the potential to spread malware if their conversational interfaces are exploited by malicious actors. There are three key ways this could occur: (1) by manipulating vulnerabilities in interfaces, hackers could trick bots into executing malicious commands; (2) bots could be used as "Trojan horses" to embed harmful links or code in messages to unsuspecting users; and (3) bots could be leveraged for phishing attacks and social engineering to gather sensitive user information or distribute malware. Developers and users must implement robust security measures and promote awareness to combat this emerging threat.

Copyright:

© All Rights Reserved

Available Formats

Download as PDF, TXT or read online from Scribd

You might also like

- Contemporary Calculus TextbookDocument526 pagesContemporary Calculus TextbookJosé Luis Salazar Espitia100% (2)

- New Synthesis PaperDocument10 pagesNew Synthesis Paperapi-460973473No ratings yet

- Cyber TerrorismDocument26 pagesCyber Terrorismsaurabh_parikh27100% (2)

- Law of Inheritance in Different ReligionsDocument35 pagesLaw of Inheritance in Different ReligionsSumbal83% (12)

- Soal Olimpiade Matematika Grade 4Document3 pagesSoal Olimpiade Matematika Grade 4wakidsNo ratings yet

- S4800 Supplementary Notes1 PDFDocument26 pagesS4800 Supplementary Notes1 PDFTanu OhriNo ratings yet

- Review of Peer-to-Peer Botnets and Detection MechaDocument8 pagesReview of Peer-to-Peer Botnets and Detection MechaDuy PhanNo ratings yet

- 2 24473 WP New Era of BotnetsDocument16 pages2 24473 WP New Era of Botnetssabdullah60No ratings yet

- Bonet Englis Case StudyDocument3 pagesBonet Englis Case Studycaaraaa fellNo ratings yet

- The Solution To Online Abuse - AI Needs Human Intelligence - World Economic ForumDocument13 pagesThe Solution To Online Abuse - AI Needs Human Intelligence - World Economic ForumMartinNo ratings yet

- Fifth Guide - Jenny GuzmanDocument3 pagesFifth Guide - Jenny GuzmanJenny Guzmán BravoNo ratings yet

- En La Mente de Un HackerDocument44 pagesEn La Mente de Un HackerdjavixzNo ratings yet

- ReportDocument10 pagesReportrawan.1989.dNo ratings yet

- A Hacker-Centric Approach To Securing Your Digital DomainDocument16 pagesA Hacker-Centric Approach To Securing Your Digital DomainZaiedul HoqueNo ratings yet

- Mirai BotnetDocument3 pagesMirai Botnetctmc999No ratings yet

- Security Challenges For IoTDocument35 pagesSecurity Challenges For IoTPraneeth51 Praneeth51No ratings yet

- 2 Observing The Presence of Mobile Malwares UsingDocument5 pages2 Observing The Presence of Mobile Malwares UsingshwetaNo ratings yet

- Abusegpt: Abuse of Generative Ai Chatbots To Create Smishing CampaignsDocument6 pagesAbusegpt: Abuse of Generative Ai Chatbots To Create Smishing Campaignsvofariw199No ratings yet

- CHAPTER 8 CASE STUDY Information SecuritDocument3 pagesCHAPTER 8 CASE STUDY Information SecuritFufu Zein FuadNo ratings yet

- Hacking-PP Document For Jury FinalDocument28 pagesHacking-PP Document For Jury FinalAnkit KapoorNo ratings yet

- Bots Botnets 5Document4 pagesBots Botnets 5serdal bilginNo ratings yet

- BTIT603: Cyber and Network Security: BotnetDocument15 pagesBTIT603: Cyber and Network Security: BotnetKajalNo ratings yet

- English 11Document9 pagesEnglish 11cknadeloNo ratings yet

- TrickBot Botnet Targeting Multiple IndustriesDocument3 pagesTrickBot Botnet Targeting Multiple IndustriesViren ChoudhariNo ratings yet

- Cyberwar and The Future of CybersecurityDocument83 pagesCyberwar and The Future of CybersecurityKai Lin TayNo ratings yet

- Botnet Group 5Document10 pagesBotnet Group 5Joy ImpilNo ratings yet

- Roleof Machine Learnig Algorithmin Digital Forensic Investigationof Botnet AttacksDocument10 pagesRoleof Machine Learnig Algorithmin Digital Forensic Investigationof Botnet AttacksEdi SuwandiNo ratings yet

- Botnets: The Dark Side of Cloud Computing: by Angelo Comazzetto, Senior Product ManagerDocument5 pagesBotnets: The Dark Side of Cloud Computing: by Angelo Comazzetto, Senior Product ManagerDavidLeeCoxNo ratings yet

- Emerging Trends in Digital Forensic and Cyber Security-An OverviewDocument5 pagesEmerging Trends in Digital Forensic and Cyber Security-An OverviewAthulya VenugopalNo ratings yet

- Computer Internet Compromised Hacker Computer Virus Trojan Horse BotnetDocument6 pagesComputer Internet Compromised Hacker Computer Virus Trojan Horse BotnetHaider AbbasNo ratings yet

- Best Practices To Address Online and Mobile Threats 0Document52 pagesBest Practices To Address Online and Mobile Threats 0Nur WidiyasonoNo ratings yet

- The Biggest Cybersecurity Threats of 2013: Difficult To DetectDocument3 pagesThe Biggest Cybersecurity Threats of 2013: Difficult To Detectjeme2ndNo ratings yet

- Chapter 1 Cybersecurity and The Security Operations CenterDocument9 pagesChapter 1 Cybersecurity and The Security Operations Centeradin siregarNo ratings yet

- Lesson A Cybercrime TsunamiDocument2 pagesLesson A Cybercrime Tsunamimateuszwitkowiak3No ratings yet

- Study of Cyber SecurityDocument8 pagesStudy of Cyber SecurityErikas MorkaitisNo ratings yet

- 2023 Mid Year Cyber Security ReportDocument53 pages2023 Mid Year Cyber Security ReporttmendisNo ratings yet

- Hacking - 201204Document75 pagesHacking - 201204Danilo CarusoNo ratings yet

- HackingDocument6 pagesHackingRodica MelnicNo ratings yet

- Cybercrime Trends 2024Document44 pagesCybercrime Trends 2024robertomihali39No ratings yet

- Ethical HackingDocument17 pagesEthical Hackingmuna cliff0% (1)

- Cyber Ethics in The Scope of Information TechnologyDocument5 pagesCyber Ethics in The Scope of Information TechnologyUnicorn ProjectNo ratings yet

- Computer Hacking Related To Fraud of RecordsDocument52 pagesComputer Hacking Related To Fraud of RecordsRobin LigsayNo ratings yet

- Bot Defense Insights Into Basic and Advanced Techniques For Thwarting Automated ThreatsDocument11 pagesBot Defense Insights Into Basic and Advanced Techniques For Thwarting Automated ThreatsEduardo KerchnerNo ratings yet

- Draft of Algorithm and Ai Chatbot Technologies and The Threats They PoseDocument6 pagesDraft of Algorithm and Ai Chatbot Technologies and The Threats They Poseapi-707718552No ratings yet

- Inbound 7787586483020553402Document28 pagesInbound 7787586483020553402Ashley CanlasNo ratings yet

- Hack The Planet FinalDocument11 pagesHack The Planet FinalLuke DanciccoNo ratings yet

- Cybersecurity in The AI-BasedDocument24 pagesCybersecurity in The AI-Basedxaxd.asadNo ratings yet

- IMPRESSIVE Botnet GuideDocument29 pagesIMPRESSIVE Botnet Guidebellava053No ratings yet

- Ai For Malware Development Analyst NoteDocument4 pagesAi For Malware Development Analyst Notepfmhsgxx9jNo ratings yet

- (IJCST-V10I5P20) :MR D.Purushothaman, K PavanDocument9 pages(IJCST-V10I5P20) :MR D.Purushothaman, K PavanEighthSenseGroupNo ratings yet

- Malware Malware, Short For Malicious Software, Is Software (Or Script or Code) Designed To DisruptDocument2 pagesMalware Malware, Short For Malicious Software, Is Software (Or Script or Code) Designed To DisruptChris AlphonsoNo ratings yet

- Cyber SecurityDocument32 pagesCyber SecuritySHUBHAM SHAKTINo ratings yet

- Rumour Detection Models and Tools For Social PDFDocument6 pagesRumour Detection Models and Tools For Social PDFMohammad S QaseemNo ratings yet

- IC - ChatGPT - AsenateDocument8 pagesIC - ChatGPT - AsenateMaritza DiazNo ratings yet

- WP Botnets at The GateDocument11 pagesWP Botnets at The GateNunyaNo ratings yet

- CYBER CRIME TopicsDocument8 pagesCYBER CRIME TopicsLoy GuardNo ratings yet

- Blockchain For CybersecurityDocument7 pagesBlockchain For CybersecurityYarooq AnwarNo ratings yet

- Designing ProjectDocument4 pagesDesigning Projectvinaydevrukhkar2629No ratings yet

- Critical Infrastructure Under Attack: Lessons From A HoneypotDocument2 pagesCritical Infrastructure Under Attack: Lessons From A HoneypotfnbjnhquilfyfyNo ratings yet

- Lab 1 - Cybersecurity at A GlanceDocument9 pagesLab 1 - Cybersecurity at A GlancePhạm Trọng KhanhNo ratings yet

- Review of Malware and Phishing in The Current and Next Generation of The InternetDocument6 pagesReview of Malware and Phishing in The Current and Next Generation of The InternetIJRASETPublicationsNo ratings yet

- NSE 1: The Threat Landscape: Study GuideDocument30 pagesNSE 1: The Threat Landscape: Study Guidejosu_rc67% (3)

- Internet Safety PDFDocument5 pagesInternet Safety PDFMangaiNo ratings yet

- Ch567 Cognitive SOCIAL Emotional INTERACTIONDocument177 pagesCh567 Cognitive SOCIAL Emotional INTERACTIONjelyntabuena977No ratings yet

- Integrative Programming and Technologies 9Document9 pagesIntegrative Programming and Technologies 9jelyntabuena977No ratings yet

- Integrative Programming and Technologies 7Document12 pagesIntegrative Programming and Technologies 7jelyntabuena977No ratings yet

- Enderes PlaquiaPPT - Docx 010617Document61 pagesEnderes PlaquiaPPT - Docx 010617jelyntabuena977No ratings yet

- 5 Brilliant Depictions of Lucifer in Art From The Past 250 YearsDocument1 page5 Brilliant Depictions of Lucifer in Art From The Past 250 Yearsrserrano188364No ratings yet

- Flipkart Case StudyDocument9 pagesFlipkart Case StudyHarshini ReddyNo ratings yet

- Capital MarketDocument16 pagesCapital Marketdeepika90236100% (1)

- Architectural Theory: Charles Jencks: Modern Movements in ArchitectureDocument32 pagesArchitectural Theory: Charles Jencks: Modern Movements in ArchitectureBafreen BnavyNo ratings yet

- The Brain TED TALK Reading Comprehension Questions and VocabularyDocument4 pagesThe Brain TED TALK Reading Comprehension Questions and VocabularyJuanjo Climent FerrerNo ratings yet

- Dr. Shayma'a Jamal Ahmed Prof. Genetic Engineering & BiotechnologyDocument32 pagesDr. Shayma'a Jamal Ahmed Prof. Genetic Engineering & BiotechnologyMariam QaisNo ratings yet

- Why Does AFRICA Called The Dark ContinentDocument11 pagesWhy Does AFRICA Called The Dark ContinentGio LagadiaNo ratings yet

- Codirectores y ActasDocument6 pagesCodirectores y ActasmarcelaNo ratings yet

- Parking Standards: Parking Stall Dimensions (See Separate Handout For R-1 Single Family)Document1 pageParking Standards: Parking Stall Dimensions (See Separate Handout For R-1 Single Family)Ali HusseinNo ratings yet

- SFMDocument132 pagesSFMKomal BagrodiaNo ratings yet

- Peckiana Peckiana Peckiana Peckiana PeckianaDocument29 pagesPeckiana Peckiana Peckiana Peckiana PeckianaWais Al-QorniNo ratings yet

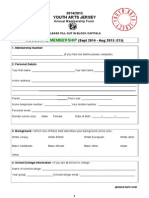

- Youth Arts Jersey - MembershipDocument2 pagesYouth Arts Jersey - MembershipSteve HaighNo ratings yet

- Case 580n 580sn 580snwt 590sn Service ManualDocument20 pagesCase 580n 580sn 580snwt 590sn Service Manualwilliam100% (39)

- Baby Food in India AnalysisDocument4 pagesBaby Food in India AnalysisRaheetha AhmedNo ratings yet

- International Council For Management Studies (ICMS), Chennai (Tamilnadu) Chennai, Fees, Courses, Admission Date UpdatesDocument2 pagesInternational Council For Management Studies (ICMS), Chennai (Tamilnadu) Chennai, Fees, Courses, Admission Date UpdatesAnonymous kRIjqBLkNo ratings yet

- Dokumen - Tips - Transfer Pricing QuizDocument5 pagesDokumen - Tips - Transfer Pricing QuizSaeym SegoviaNo ratings yet

- Brand ManagementDocument4 pagesBrand ManagementahmadmujtabamalikNo ratings yet

- Invoice Ebay10Document2 pagesInvoice Ebay10Ermec AtachikovNo ratings yet

- University of Santo Tomas Senior High School Documentation of Ust'S Artworks Through A Walking Tour Brochure and Audio GuideDocument30 pagesUniversity of Santo Tomas Senior High School Documentation of Ust'S Artworks Through A Walking Tour Brochure and Audio GuideNathan SalongaNo ratings yet

- Parental Presence Vs Absence - Dr. Julie ManiateDocument27 pagesParental Presence Vs Absence - Dr. Julie ManiateRooka82No ratings yet

- Chemical Engineering Department, Faculty of Engineering, Universitas Indonesia, Depok, West Java, IndonesiaDocument6 pagesChemical Engineering Department, Faculty of Engineering, Universitas Indonesia, Depok, West Java, IndonesiaBadzlinaKhairunizzahraNo ratings yet

- Prachi Ashtang YogaDocument18 pagesPrachi Ashtang YogaArushi BhargawaNo ratings yet

- November/December 2016Document72 pagesNovember/December 2016Dig DifferentNo ratings yet

- Populorum ProgressioDocument4 pagesPopulorum ProgressioSugar JumuadNo ratings yet

- Greeshma Vasu: Receptionist/Cashier/Edp Clerk/Customer ServiceDocument2 pagesGreeshma Vasu: Receptionist/Cashier/Edp Clerk/Customer Serviceoday abuassaliNo ratings yet

- Life Orientation September 2023 EngDocument9 pagesLife Orientation September 2023 EngmadzhutatakalaniNo ratings yet

Haha

Haha

Uploaded by

jelyntabuena9770 ratings0% found this document useful (0 votes)

5 views1 pageChatbots like ChatGPT have the potential to spread malware if their conversational interfaces are exploited by malicious actors. There are three key ways this could occur: (1) by manipulating vulnerabilities in interfaces, hackers could trick bots into executing malicious commands; (2) bots could be used as "Trojan horses" to embed harmful links or code in messages to unsuspecting users; and (3) bots could be leveraged for phishing attacks and social engineering to gather sensitive user information or distribute malware. Developers and users must implement robust security measures and promote awareness to combat this emerging threat.

Original Description:

Copyright

© © All Rights Reserved

Available Formats

PDF, TXT or read online from Scribd

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentChatbots like ChatGPT have the potential to spread malware if their conversational interfaces are exploited by malicious actors. There are three key ways this could occur: (1) by manipulating vulnerabilities in interfaces, hackers could trick bots into executing malicious commands; (2) bots could be used as "Trojan horses" to embed harmful links or code in messages to unsuspecting users; and (3) bots could be leveraged for phishing attacks and social engineering to gather sensitive user information or distribute malware. Developers and users must implement robust security measures and promote awareness to combat this emerging threat.

Copyright:

© All Rights Reserved

Available Formats

Download as PDF, TXT or read online from Scribd

Download as pdf or txt

0 ratings0% found this document useful (0 votes)

5 views1 pageHaha

Haha

Uploaded by

jelyntabuena977Chatbots like ChatGPT have the potential to spread malware if their conversational interfaces are exploited by malicious actors. There are three key ways this could occur: (1) by manipulating vulnerabilities in interfaces, hackers could trick bots into executing malicious commands; (2) bots could be used as "Trojan horses" to embed harmful links or code in messages to unsuspecting users; and (3) bots could be leveraged for phishing attacks and social engineering to gather sensitive user information or distribute malware. Developers and users must implement robust security measures and promote awareness to combat this emerging threat.

Copyright:

© All Rights Reserved

Available Formats

Download as PDF, TXT or read online from Scribd

Download as pdf or txt

You are on page 1of 1

Group COOKIESS – Lian Azenith R. Avila, Irish Nicole B. Tabuena, Shereen Robredillo

How ChatGPT and bots like it can spread malware

In the age of advanced artificial intelligence, chatbots have become an integral part of our digital lives. These intelligent algorithms are designed to assist with tasks, provide information, and even engage in casual conversation. While they have brought immense convenience to users, they also come with the potential for misuse. One concerning aspect is the possibility of chatbots spreading malware, causing harm and chaos in the digital realm. In this discussion, we'll explore how ChatGPT and bots like it can be manipulated to facilitate the distribution of malicious software, along with three key points explaining how this threat unfolds.

Sam Altman, CEO of OpenAI

Point 1: Exploiting Vulnerabilities in Conversational Interfaces

Chatbots, including ChatGPT, often communicate through various digital platforms and interfaces. These interfaces may have security vulnerabilities that can be exploited by malicious actors. Through sophisticated social engineering techniques, hackers can exploit these vulnerabilities to deceive the bot into executing malicious commands. This first point explores how these deceptive tactics compromise the bot's trustworthiness.

Point 2: The Trojan Horse Effect

One of the primary ways chatbots can be used to distribute malware is through the Trojan Horse strategy. Malicious actors can embed harmful code or links within seemingly innocuous messages. Since chatbots often interact with users in real time and handle numerous conversations simultaneously, they might not detect these hidden threats. This point delves into how unsuspecting users can fall victim to this Trojan Horse approach and unknowingly download malware onto their devices.

More Information…

While chatbots, including ChatGPT, offer numerous advantages and contribute to our digital experience, they also introduce new challenges and security risks. The potential for these bots to unwittingly spread malware is a real concern in today's interconnected world. To combat this threat, developers, users, and cybersecurity experts must remain vigilant, implementing robust security measures and promoting awareness about safe digital…
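One security measure a developer might consider against the Trojan Horse approach in Point 2 is screening every link in a message before the bot relays it. The sketch below is only an illustration under stated assumptions: the `TRUSTED_HOSTS` allowlist, the function names, and the example domains are all hypothetical, and a real system would likely combine this with reputation services and attachment scanning.

```python
import re
from urllib.parse import urlparse

# Hypothetical allowlist: hosts the bot operator has chosen to trust.
TRUSTED_HOSTS = {"example.com", "docs.example.com"}

# Simple pattern for http/https links; it stops at whitespace and common delimiters.
URL_PATTERN = re.compile(r"https?://[^\s<>\"')]+", re.IGNORECASE)


def extract_urls(message: str) -> list[str]:
    """Return every http/https link found in the message text."""
    return URL_PATTERN.findall(message)


def is_trusted(url: str) -> bool:
    """A link is trusted only if its exact hostname is on the allowlist."""
    host = (urlparse(url).hostname or "").lower()
    return host in TRUSTED_HOSTS


def screen_message(message: str) -> list[str]:
    """Return the links in the message that are NOT on the allowlist."""
    return [url for url in extract_urls(message) if not is_trusted(url)]


if __name__ == "__main__":
    text = "See https://example.com/help and http://evil.test/payload.exe now"
    print(screen_message(text))
```

Matching the exact hostname, rather than checking whether the link merely contains a trusted name, means a lookalike such as `example.com.evil.test` is still flagged.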

ChatGPT, which stands for Chat Generative Pre-trained Transformer, is a large language model-based chatbot developed by OpenAI and launched on November 30, 2022. It enables users to refine and steer a conversation towards a desired length, format, style, level of detail, and language.

Point 3: Phishing and Social Engineering

Chatbots can be leveraged to conduct phishing attacks. They can engage users in convincing conversations, tricking them into revealing sensitive information like passwords or financial details. By impersonating trusted entities or individuals, malicious actors can exploit the chatbot's conversational nature to gather valuable data, ultimately leading to malware distribution. This point highlights how social engineering plays a pivotal role in this threat.
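To make the phishing concern in Point 3 more concrete, a platform could flag any bot message that asks the user to hand over sensitive information. The following is a rough sketch, not a real phishing detector: the word lists and the function name are hypothetical choices made for illustration, and simple keyword matching like this will miss many attacks and raise false alarms.

```python
import re

# Hypothetical word lists; a real detector would need far more than keywords.
SENSITIVE_TERMS = ("password", "passcode", "pin", "card number", "cvv", "security code")
REQUEST_VERBS = ("enter", "send", "share", "provide", "confirm", "verify", "type")


def contains_any(text: str, phrases: tuple[str, ...]) -> bool:
    """True if any phrase appears in the text as a whole word or phrase."""
    return any(re.search(rf"\b{re.escape(p)}\b", text) for p in phrases)


def asks_for_sensitive_info(message: str) -> bool:
    """Flag a message that pairs a request verb with a sensitive term."""
    text = message.lower()
    return contains_any(text, REQUEST_VERBS) and contains_any(text, SENSITIVE_TERMS)


if __name__ == "__main__":
    print(asks_for_sensitive_info("Please confirm your password to continue"))
    print(asks_for_sensitive_info("Your order has shipped"))
```

A flagged message would not have to be blocked outright; it could instead be shown with a warning reminding the user never to share passwords or financial details in a chat.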

https://www.wired.com/tag/engineering/ | Empowerment technology | Marvin Evardone
