Audio Segmentation in AAC Domain for Content Analysis

Rong Zhu, Haojun Ai, Ruimin Hu

National Engineering Research Center for Multimedia Software

Wuhan University

Wuhan, China

zhurong@whu.edu.cn ai.haojun@gmail.com hrm1964@public.wh.hb.cn

Abstract—We focus the attention on the audio scene segmentation audio classification. The audio content analysis experiments

in AAC domain for audio-based multimedia indexing and and performance results are presented in Section 3.

retrieval applications. In particular, a MFCC extraction method Conclusions are followed in Section 4.

is proposed, which is adaptive to the window switch in AAC

encoding process, and independent of the audio sampling

II. FEATURE EXTRACTION AND MODEL

frequency. We discuss the fusion method of MFCC features,

which came from different window type in order to keep the

balance of the frequency and temporal resolution. A series of

A. Structure of AAC Stream

experiments via the probability distribution of MFCC were A fundamental component in the AAC audio coding

implemented to test the effective in audio scene segmentation. process is the conversion the signals from time domain to a

The experimental results show that such approach based on time frequency representation [6]. This conversion is done by a

compression domain can approach the performance of the system forward modified discrete cosine transform (MDCT). A direct

based on PCM audio, and the CPU overload decreased MDCT transformation is performed over the samples without

dramatically. It is meaningful to the real time analysis of audio dividing the audio signal into 32 subbands as in MP3 encoding.

content. Two windowing modes are applied in order to achieve a better

time/frequency resolution:

Keywords-Audio Content Analysis; AAC; Compression

Domaint; MFCC

N −1

⎛ 2π ⎛ 1 ⎞⎞

I. INTRODUCTION X k = 2i∑ zi ,n cos ⎜ (n + n0 ) ⎜ k + ⎟ ⎟ ,

n =0 ⎝ N ⎝ 2 ⎠⎠ (1)

Audio information often plays an essential role in

understanding the semantic content of multimedia. Audio for 0 ≤ k ≤ N / 2

content analysis (ACA), i.e. the automatic extraction of

semantic information from sounds, arose naturally from the Where:

need to efficiently manage the growing collections of data and

enhance man-machine communication. ACA can typically zin = windowed input sequence

transfer the audio signals into a set of numerical measure,

which is usually called low-level features to denote that they n = sample index

represent a low level of abstraction [1]. The starting point of

audio content analysis for a general time-dependent audio k = spectral coefficient index

signal is the temporal segmentation, which segments this signal

to different types of audio. The Mel frequency cepstral i = block index

coefficients (MFCC) are often used due to their good

discriminative capabilities for a broad range of audio N = window length of the transform window

classification tasks [2].

n0 = ( N / 2 + 1) / 2

In our research, we explores the possibility of working

directly in the compression domain so that no decoding is In the encoder the filterbank takes the appropriate block of

needed, thus lowering the processing requirement. There are a time samples, we modulates them by an appropriate window

number of approaches proposed for audio segmentation and function, and performs the MDCT. Each block of input

summarization in compressed domain [3][4][5]. Because samples is overlapped by 50% with the immediately preceding

MPEG Advanced Audio Coding (AAC) is used widely in block and the following block. The transform input block

broadcast, movie, DTV and portable device, we focus our length N can be set either 2048 or 256 samples.

research on how to identify speaker in AAC domain directly.

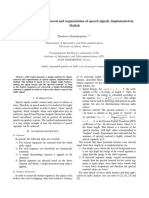

Fig.1 shows the sequence of blocks for the transition (D-E-

The structure of this paper is as follows. In section 2 we F) to and from a frame employing the sine function window.

describe MFCC extraction in AAC domain and model it for

This work was supported in part by the NSFC under Grant 60832002, and

the Sci. and Tech. Plan of Hubei Provenience under Grant 2007AA101C50.

978-1-4244-3693-4/09/$25.00 ©2009 IEEE
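The following minimal NumPy sketch makes Eq. (1) concrete. It evaluates the MDCT of one windowed block directly from the definition. This is an O(N²) illustration, not the fast FFT-based algorithm a real encoder or decoder would use. The function names and the use of the sine window as the window function are illustrative assumptions.

```python
import numpy as np


def sine_window(n_len: int) -> np.ndarray:
    """Sine window w[n] = sin(pi * (n + 1/2) / N) over a full block of N samples."""
    n = np.arange(n_len)
    return np.sin(np.pi * (n + 0.5) / n_len)


def mdct(block: np.ndarray) -> np.ndarray:
    """Direct evaluation of Eq. (1) for one windowed block z_{i,n}.

    Returns the N/2 coefficients X_{i,k} for 0 <= k < N/2.
    """
    n_len = block.shape[0]
    n0 = (n_len / 2 + 1) / 2                  # phase offset n_0 from Eq. (1)
    n = np.arange(n_len)[None, :]             # sample index (row vector)
    k = np.arange(n_len // 2)[:, None]        # spectral index (column vector)
    basis = np.cos(2 * np.pi / n_len * (n + n0) * (k + 0.5))
    return 2.0 * basis @ block
```

For a long block (N = 2048) this yields 1024 coefficients, and for a short block (N = 256) it yields 128, matching the two block lengths above. A windowed cosine placed exactly on basis function k0 produces its largest coefficient at index k0.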

Fig.2 shows an example of window switching in an audio sample.

[Figure 1: window Gain (0 to 1) versus Time (samples, 0 to 4096); windows A, B, C during steady-state conditions and short windows 1 to 10 during transient conditions]

Figure 1. Example of Block Switching During Transient Signal Conditions

[Figure 2: panels "Audio PCM" and "Window Type" (Short Window / Long Window) versus Time (samples, 0 to 10240)]

Figure 2. Example for the Window and block switching

AAC is a perceptual audio codec that combines the MDCT with entropy coding. AAC supports multichannel sources, in which joint stereo coding is used to exploit inter-channel redundancy. Even so, at least one main channel must be encoded independently. In the AAC stream structure, single_channel_element describes the main channel information. It includes three groups of indispensable parameters: global_gain, scale_factor_data, and spectral_data [6]. The MDCT spectrum can be reconstructed from these parameters.

B. MFCC extraction in AAC domain

As described in Section II-A, AAC converts the signal into a time-frequency representation with a forward MDCT [6]. In our research, a filter bank of equal-height filters is applied in the MDCT spectral domain. The MFCC parameters are computed as:

$$C_j = \sum_{i=1}^{M} X_i \cos\left(j\left(i-\frac{1}{2}\right)\frac{\pi}{M}\right), \quad j = 1, 2, \ldots, J \tag{2}$$

where M is the number of filters in the filter bank and J is the number of cepstral coefficients computed (usually J < M). $X_i$ is the energy output of the i-th filter [7].

We extend the MFCC algorithm from the Fourier domain to the MDCT domain. MFCC in the MDCT domain are obtained by combining Eq. (2) with a triangular filter bank whose edges are mapped to MDCT bins by Eq. (3):

$$k_{\mathrm{MDCT}} = \left\lfloor N \cdot \frac{f_{\mathrm{linear}}}{f_s} \right\rfloor \tag{3}$$

In Eq. (3), N is the frame length, $f_s$ is the sampling frequency and k is the index of the MDCT spectrum. Because N is either 2048 or 256, two sets of filter banks are needed for an AAC audio stream. MFCC only consider the signal below 8 kHz. We therefore process the MDCT spectrum below 8 kHz with 40 filters for all sampling frequencies.

C. Silence Frame Detection

Our first objective is to separate silent frames from non-silent frames. We then obtain an initial categorization of the non-silent frames, which allows an initial segmentation in the next step. Silence detection is performed per frame by applying a threshold to the root mean square (RMS) of the whole audio frame. The RMS energy is given in (4):

$$\mathrm{RMS}_j = \sqrt{\frac{1}{I}\sum_{i=0}^{I-1} S_i(j)^2} \tag{4}$$

where I is the number of MDCT coefficients (for AAC, I = 1024) and $S_i(j)$ is the i-th MDCT coefficient of frame j. If $\mathrm{RMS}_j < T_{\mathrm{RMS}}$, where $T_{\mathrm{RMS}}$ is the silence threshold, the frame is considered silent and no further processing is needed.

D. Audio Scene Segmentation via MFCC

We implement an unsupervised, BIC-based system for audio scene segmentation. This step detects changes in speaker identity, environmental conditions and channel conditions. In other words, it finds the points in the audio stream where the audio source is likely to change. BIC-based segmentation algorithms do not require any prior knowledge of the number of speakers, their identities, or the signal characteristics. BIC detection is performed using 20-dimensional MFCC vectors computed directly from the MDCT coefficients.
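The MDCT-domain MFCC extraction of Section II-B (Eqs. 2 and 3) can be sketched in NumPy as follows. This is a sketch under stated assumptions, not the authors' code, and the function names are hypothetical. The text does not fix the following details, so they are assumed here:

- the mel formula 2595·log10(1 + f/700);
- evenly spaced mel edges;
- squared MDCT coefficients as the energy measure;
- a logarithm applied to the filter outputs before the cosine transform (the usual MFCC convention).

Eq. (3) maps every filter edge from hertz to an MDCT bin. One bank is cached per block length, mirroring the two filter banks needed for long and short windows.

```python
from functools import lru_cache

import numpy as np


def hz_to_mel(f):
    # Assumed mel formula; the paper does not specify one.
    return 2595.0 * np.log10(1.0 + np.asarray(f) / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (np.asarray(m) / 2595.0) - 1.0)


@lru_cache(maxsize=None)
def mdct_filterbank(n_frame: int, fs: float, n_filters: int = 40,
                    f_max: float = 8000.0) -> np.ndarray:
    """Equal-height (peak 1) triangular mel filters over the N/2 MDCT bins.

    Cached per (N, fs): a stream needs one bank for N = 2048 and one for N = 256.
    """
    n_bins = n_frame // 2
    top = min(f_max, fs / 2.0)                # only the band below 8 kHz is used
    edges_hz = mel_to_hz(np.linspace(0.0, hz_to_mel(top), n_filters + 2))
    # Eq. (3): k = floor(N * f / fs) maps a frequency to an MDCT bin index.
    edges = np.floor(n_frame * edges_hz / fs).astype(int)
    bank = np.zeros((n_filters, n_bins))
    for m in range(n_filters):
        lo, mid, hi = edges[m], edges[m + 1], edges[m + 2]
        for k in range(lo, min(hi, n_bins - 1) + 1):
            if k < mid:
                bank[m, k] = (k - lo) / (mid - lo)   # rising edge
            elif k == mid:
                bank[m, k] = 1.0                     # peak; keeps narrow filters non-empty
            else:
                bank[m, k] = (hi - k) / (hi - mid)   # falling edge
    return bank


def mfcc_from_mdct(coeffs: np.ndarray, fs: float, n_filters: int = 40,
                   n_ceps: int = 13) -> np.ndarray:
    """MFCC of one long (1024-coefficient) or short (128-coefficient) MDCT block."""
    bank = mdct_filterbank(2 * coeffs.shape[0], float(fs), n_filters)
    energies = bank @ (coeffs ** 2)          # X_i: energy output of filter i
    log_e = np.log(energies + 1e-10)         # log compression (assumed convention)
    i = np.arange(1, n_filters + 1)
    j = np.arange(1, n_ceps + 1)[:, None]
    # Eq. (2): C_j = sum_i X_i cos(j (i - 1/2) pi / M), j = 1..J
    return np.cos(j * (i - 0.5) * np.pi / n_filters) @ log_e
```

The filter edges are defined in hertz and converted with Eq. (3). As a result, the same call works for any sampling frequency; only the cached bank differs. The defaults of 13 coefficients and 40 filters follow the settings reported in Section III-A.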

E. Fusion for the two frame types

In general audio signals, the long window is selected more frequently than the short window. In speech signals, the short window is selected during transients, especially at the transition from silence to activity. Short-time stationarity does not hold in this situation, so the LPC model gain decreases. In the proposed method, the RMS over all 8 short windows of a frame is calculated first. If it is less than $T_{\mathrm{RMS}}$, the whole frame is discarded.

We computed statistics on the Chain speech database, which is sampled at 16 kHz. Short frames account for 12.25% of the total duration. The distribution of short frames depends on the signal characteristics and on the window decision of the AAC encoder.

In audio frame feature extraction, the resolution should be kept consistent across the two windowing modes. There are two ways to handle this:

• Because short windows are sparse, the short-frame information can be discarded directly. This is especially reasonable when the measure is based on probability characteristics.

• Alternatively, the long-frame and short-frame parameters can be fused in their natural temporal order. A short-window frame yields 8 MFCC vectors, whereas a long-window frame yields one.

III. EXPERIMENTAL RESULTS

A. Data description and parameterization

In this section, we present the evaluation results of the proposed speaker recognition algorithm. In our experiments, 16 kHz audio is first encoded into an AAC stream at 128 kbps with NeroAAC [8]. We built an MDCT spectrum extraction tool based on the open-source FAAD decoder [9].

In the PCM domain, MFCC extraction can adjust the parameter granularity by changing the window length and shift. In the AAC domain these values follow from the standard. For MFCC analysis, the frame length is 2048 samples, corresponding to a duration of 128 ms, and the frame shift is 1024 samples, corresponding to 64 ms.

According to (1), MFCC can be extracted frame by frame. In this paper, the MFCC order is 13 and the filter bank has 40 filters.

In the following experiments, two classical applications are used to test the effectiveness of MFCC in the MDCT domain.

B. BIC for Audio Scene Segmentation

For audio scene segmentation, we use the Chain speech database and test the SOLO group samples. The SOLO group is a collection of conversational speech from 36 speakers. For each speaker there are 36 conversations of approximately 20 seconds. We concatenate the speakers' utterances in an interleaved manner, and BIC based on MFCC is used to detect the change points.

To speed up detection, we apply a two-layer detection algorithm. We first search for a change point within a fixed-size window of 40 AAC encoding frames. If no change point is detected, the window is shifted by 4 seconds. Otherwise, the window is shifted to the location of the change point.

We combined 2 utterances from 2 speakers. Fig.3 shows the window mode information near the speaker change point. Two groups of experiments were designed to compare the effectiveness of the different methods. Note that over the same duration, the short window mode produces 8 MFCC vectors.

[Figure 3: Window Type (Short Window / Long Window) per frame for Speaker 1 followed by Speaker 2; x-axis: Frames, 0 to 200]

Figure 3. The Window Type of the audio from two speakers

In Fig.4, MFCC vectors from both the short and the long window modes are combined in the BIC model. In Fig.5, only the MFCC vectors of the long window mode are used. In Fig.4, the speaker change point is the maximum of the BIC value. In Fig.5, two BIC peaks were produced.

[Figure 4: BIC versus Frames (0 to 250), with the changing point marked]

Figure 4. The BIC curve via two type window fusion
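The sketch below combines BIC change detection (Section II-D) with the two-layer window search described above. It uses the standard ΔBIC criterion with full-covariance Gaussians, and the change point is taken as the maximum of the BIC curve. This is one reading of the search loop, not the authors' implementation. The following are assumptions, as are all function names:

- the penalty weight `lam`;
- the edge `margin`, which must exceed the feature dimension so that every sub-window covariance is non-singular;
- measuring `win` and `shift` in feature vectors rather than in AAC frames or seconds.

```python
import numpy as np


def delta_bic(x: np.ndarray, t: int, lam: float = 1.0) -> float:
    """Delta-BIC for splitting feature rows into x[:t] and x[t:].

    Positive values favour two Gaussian models (a change at t) over one.
    """
    n, d = x.shape

    def logdet(y: np.ndarray) -> float:
        # Log-determinant of the maximum-likelihood (biased) covariance.
        cov = np.atleast_2d(np.cov(y, rowvar=False, bias=True))
        return float(np.linalg.slogdet(cov)[1])

    # Model-complexity penalty: d means + d(d+1)/2 covariance entries.
    penalty = 0.5 * (d + 0.5 * d * (d + 1)) * np.log(n)
    return (0.5 * n * logdet(x)
            - 0.5 * t * logdet(x[:t])
            - 0.5 * (n - t) * logdet(x[t:])
            - lam * penalty)


def best_split(x: np.ndarray, margin: int, lam: float = 1.0):
    """Return (t, score) for the candidate t with the maximum Delta-BIC."""
    ts = range(margin, len(x) - margin)     # keep `margin` vectors on each side
    scores = [delta_bic(x, t, lam) for t in ts]
    best = int(np.argmax(scores))
    return ts[best], scores[best]


def detect_changes(x: np.ndarray, win: int, shift: int, margin: int,
                   lam: float = 1.0) -> list:
    """Two-layer search: fixed-size window; move by `shift` when no change is
    found, otherwise restart the window at the detected change point."""
    changes, start = [], 0
    while start + win <= len(x):
        t, score = best_split(x[start:start + win], margin, lam)
        if score > 0:
            changes.append(start + t)
            start += t            # t >= margin > 0, so the loop always advances
        else:
            start += shift
    return changes
```

In the fused setting, `x` would hold the long- and short-window MFCC vectors in temporal order, and in the long-only setting just the long-window vectors. The same functions therefore serve both groups of experiments. The value of `lam` typically needs tuning on held-out data.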

[Figure 5: BIC versus Frames (0 to 150), with the change point marked]

Figure 5. The BIC curve via only long window frames

C. Video analysis based on Audio scene

A video from YouTube is used to test the scene segmentation ability. YouTube provides a high-quality and a low-quality version of each video, and the high-quality stream was used here. The video is encoded with H.264 at 422 kbps, and the audio is encoded with AAC at 128 kbps. The clip length is 637 seconds.

In the test, all ten audio scene change points are detected correctly. Fig.6 shows the keyframes corresponding to the change points.

Figure 6. The video retrieval via audio content analysis

IV. CONCLUSIONS

The primary goal of this study is to implement audio scene segmentation in the AAC domain. Towards this goal, we have investigated how to extend MFCC extraction from the Fourier spectrum to the MDCT domain. The MFCC extraction algorithm we developed adapts to window switching and block length, even though the long and short windows have different frequency and temporal resolutions in the AAC encoder. Because the MFCC extraction is based on the audio signal below 8 kHz, the algorithm adapts to different sampling frequencies.

Many audio segmentation approaches are based on probability models of MFCC. The two window types occur alternately in natural audio. Short windows are generally far less frequent than long windows, although their frequency depends on the audio signal and the codec implementation. To study the fusion of MFCC from different window lengths, the BIC model was used in the segmentation task.

The experimental results indicate that MFCC fusion is effective for both tasks. In the audio scene segmentation task, the BIC curve rises more quickly with the fused MFCC than with long-window parameters only.

In addition, extracting MFCC directly from the MDCT spectrum reduces complexity by avoiding the IMDCT and FFT. Visual analysis generally involves much more computation than audio analysis. Compressed-domain audio information alone can often provide a good initial solution for further examination based on visual information.

REFERENCES

[1] S. Kiranyaz, A. F. Qureshi, and M. Gabbouj, "A Generic Audio Classification and Segmentation Approach for Multimedia Indexing and Retrieval," IEEE Transactions on Audio, Speech, and Language Processing, vol. 14, no. 3, pp. 1062-1081, May 2006.

[2] H.-G. Kim and T. Sikora, "Comparison of MPEG-7 audio spectrum projection features and MFCC applied to speaker recognition, sound classification and audio segmentation," in Proc. IEEE ICASSP 2004, vol. 5, pp. V-925-8.

[3] X. Shao, C. Xu, Y. Wang, and M. S. Kankanhalli, "Automatic Music Summarization in Compressed Domain," in Proc. IEEE ICASSP 2004, Montreal, Canada.

[4] Y. Nakajima, Y. Lu, M. Sugano, A. Yoneyama, H. Yamagihara, and A. Kurematsu, "A Fast Audio Classification from MPEG Code Data," in Proc. IEEE ICASSP, 1999, pp. 3005-3008.

[5] Y. Jiao, M. Li, B. Yang, and X. Niu, "Compressed domain robust hashing for AAC audio," in Proc. IEEE International Conference on Multimedia and Expo, June 2008, pp. 1545-1548.

[6] ISO/IEC JTC1/SC29/WG11 N7126, Coding of Moving Pictures and Audio, IS 13818-7 (MPEG-2 Advanced Audio Coding, AAC), 2005.

[7] B. Logan, "Mel-Frequency Cepstral Coefficients for Music Modeling," in Proc. Int. Conf. on Music Information Retrieval (ISMIR), Plymouth, Massachusetts, 2000.

[8] Nero AAC Codec 1.3.3.0, http://www.nero.com/

[9] FAAD, http://www.audiocoding.com/faad2.html


- L-3/T-,2/EEE Date: 09/06/2014: To Obtain A PAM Signal, Where, Is The Width of Pulse. What Is TheDocument34 pagesL-3/T-,2/EEE Date: 09/06/2014: To Obtain A PAM Signal, Where, Is The Width of Pulse. What Is TheMahmudNo ratings yet

- Channel Coding: Version 2 ECE IIT, KharagpurDocument8 pagesChannel Coding: Version 2 ECE IIT, KharagpurHarshaNo ratings yet

- Digital Communications: B o B oDocument12 pagesDigital Communications: B o B oLion LionNo ratings yet

- Algebraic Survivor Memory Management Design For Viterbi DetectorsDocument6 pagesAlgebraic Survivor Memory Management Design For Viterbi Detectorsapi-19790923No ratings yet

- SP Manual 2023-24Document98 pagesSP Manual 2023-24Manjunath ReddyNo ratings yet

- Pattern Division Multiple Access (PDMA) For Cellular Future Radio AccessDocument6 pagesPattern Division Multiple Access (PDMA) For Cellular Future Radio Accessletthereberock448No ratings yet

- McltechoDocument3 pagesMcltechojagruteegNo ratings yet

- Teach Spin 1Document4 pagesTeach Spin 1ttreedNo ratings yet

- A Texas Instruments DSP-based Acoustic Source Direction FinderDocument5 pagesA Texas Instruments DSP-based Acoustic Source Direction Finderimrankhan1995No ratings yet

- 1997 TCASII I. Galton Spectral Shaping of Circuit Errors in Digital To Analog ConvertersDocument10 pages1997 TCASII I. Galton Spectral Shaping of Circuit Errors in Digital To Analog Converterskijiji userNo ratings yet

- ECE650 Midterm Exam S - 10Document2 pagesECE650 Midterm Exam S - 10MohammedFikryNo ratings yet

- M.tech Advanced Digital Signal ProcessingDocument2 pagesM.tech Advanced Digital Signal Processingsrinivas50% (2)

- DSP - Student (Without Bonafide)Document50 pagesDSP - Student (Without Bonafide)Santhoshkumar R PNo ratings yet

- EC8501 UNIT 2 Linear Predictive CodingDocument12 pagesEC8501 UNIT 2 Linear Predictive CodingmadhuNo ratings yet

- Construction and Optimization For Adaptive Polar Coded CooperationDocument4 pagesConstruction and Optimization For Adaptive Polar Coded CooperationMehmet KibarNo ratings yet

- Home Automation Please Read This ShitDocument5 pagesHome Automation Please Read This ShitEnh ManlaiNo ratings yet

- SForum2023 FinalDocument4 pagesSForum2023 FinalThiago SantosNo ratings yet

- Reduced-Latency SC Polar Decoder Architectures: Chuan Zhang, Bo Yuan, and Keshab K. ParhiDocument5 pagesReduced-Latency SC Polar Decoder Architectures: Chuan Zhang, Bo Yuan, and Keshab K. ParhiFebru ekoNo ratings yet

- 5.c 41 - BLACK-BOX APPLICATION IN MODELING OFDocument4 pages5.c 41 - BLACK-BOX APPLICATION IN MODELING OFVančo LitovskiNo ratings yet

- Some Case Studies on Signal, Audio and Image Processing Using MatlabFrom EverandSome Case Studies on Signal, Audio and Image Processing Using MatlabNo ratings yet

- Software Radio: Sampling Rate Selection, Design and SynchronizationFrom EverandSoftware Radio: Sampling Rate Selection, Design and SynchronizationNo ratings yet

- Quiz 6: Multiple ChoiceDocument6 pagesQuiz 6: Multiple ChoiceAnupamNo ratings yet

- Architecture of Neural NetworkDocument32 pagesArchitecture of Neural NetworkArmanda Cruz NetoNo ratings yet

- Dwnload Full Digital Signal Processing Using Matlab A Problem Solving Companion 4th Edition Ingle Solutions Manual PDFDocument36 pagesDwnload Full Digital Signal Processing Using Matlab A Problem Solving Companion 4th Edition Ingle Solutions Manual PDFhaodienb6qj100% (19)

- Lect 7Document10 pagesLect 7Salem SobhyNo ratings yet

- BMI 704 - Machine Learning LabDocument17 pagesBMI 704 - Machine Learning Labjakekei5258No ratings yet

- Polynomials - Worksheet1Document2 pagesPolynomials - Worksheet1ckankaria749No ratings yet

- Top Down ParsingDocument45 pagesTop Down Parsinggargsajal9No ratings yet

- I and Q Components in Communications Signals: Sharlene Katz James FlynnDocument24 pagesI and Q Components in Communications Signals: Sharlene Katz James Flynnatalasa-1No ratings yet

- Schwartz-Zippel Lemma and Polynomial Identity TestingDocument7 pagesSchwartz-Zippel Lemma and Polynomial Identity TestingShriram RamachandranNo ratings yet

- Dual Simplex: January 2011Document14 pagesDual Simplex: January 2011Yunia RozaNo ratings yet

- A Short Course On Error-Correcting Codes: Mario Blaum C All Rights ReservedDocument104 pagesA Short Course On Error-Correcting Codes: Mario Blaum C All Rights ReservedGaston GBNo ratings yet

- Question 1-Canny Edge Detector (10 Points) : Fundamentals of Computer Vision - Midterm Exam Dr. B. NasihatkonDocument6 pagesQuestion 1-Canny Edge Detector (10 Points) : Fundamentals of Computer Vision - Midterm Exam Dr. B. NasihatkonHiroNo ratings yet

- HW03 CSCI570 - Spring2023Document5 pagesHW03 CSCI570 - Spring2023HB LNo ratings yet

- X X X E: Karatina University Sta 223: Introduction To Time Series Analysis Cat Ii 2020 Question OneDocument2 pagesX X X E: Karatina University Sta 223: Introduction To Time Series Analysis Cat Ii 2020 Question OneKimondo KingNo ratings yet

- Ipmv Mod 5&6 (Theory Questions)Document11 pagesIpmv Mod 5&6 (Theory Questions)Ashwin ANo ratings yet

- Computer Vision NewDocument28 pagesComputer Vision NewCE29Smit BhanushaliNo ratings yet

- Lecture 3 Complexity Analysis ReccuranceDocument39 pagesLecture 3 Complexity Analysis ReccuranceMahimul Islam AkashNo ratings yet

- Dip 08 09Document122 pagesDip 08 09saeed samieeNo ratings yet

- DAA NotesDocument115 pagesDAA Notesa44397541No ratings yet

- Design & Implementation of Image Compression Using Huffman Coding Through VHDL, Kumar KeshamoniDocument7 pagesDesign & Implementation of Image Compression Using Huffman Coding Through VHDL, Kumar KeshamoniKumar Goud.KNo ratings yet

- Desk Checking AlgorithmsDocument4 pagesDesk Checking AlgorithmsKelly Bauer83% (6)

- SyllabusDocument2 pagesSyllabusSrikanthNo ratings yet

- Digital Signal Processing-1703074Document12 pagesDigital Signal Processing-1703074Sourabh KapoørNo ratings yet

- Rational TheoremDocument12 pagesRational TheoremJose_Colella_1708No ratings yet

- Answer The Following Questions: Q1: Choose The Correct Answer (20 Points)Document13 pagesAnswer The Following Questions: Q1: Choose The Correct Answer (20 Points)Viraj JeewanthaNo ratings yet

- Assignment 1 PLE-2 MathsDocument3 pagesAssignment 1 PLE-2 MathsRajNo ratings yet

- Sri Vidya College of Engineering and Technology Course Material (Lecture Notes)Document35 pagesSri Vidya College of Engineering and Technology Course Material (Lecture Notes)BenilaNo ratings yet

- Cad SlidesviDocument69 pagesCad SlidesvimaxsilverNo ratings yet

- Channel Coding: Convolutional CodesDocument59 pagesChannel Coding: Convolutional CodesSabuj AhmedNo ratings yet