Week 6 Assignment

Uploaded by kalidas

The document outlines 5 assignments involving loading and manipulating data using PySpark. Assignment 1 asks to create a dataframe from sample data with 2 columns. Assignment 2 asks to load library data and enforce a schema. Assignment 3 asks to drop columns, remove duplicates and count unique values from train data. Assignment 4 asks to read sales data using different modes and count records. Assignment 5 asks to load hospital data, modify columns, add new columns with calculations, and select specific columns.


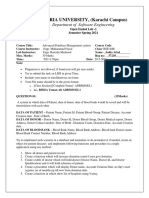

Assignment

1. Write PySpark code to create a new DataFrame from the data given below, with 2 columns: ‘season’ and ‘windspeed’. The data types of the columns can be inferred.

# Data
[("Spring", 12.3),
 ("Summer", 10.5),
 ("Autumn", 8.2),
 ("Winter", 15.1)]
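
A minimal sketch of how this could be approached, assuming a SparkSession is passed in as `spark` (as it would exist in a pyspark shell or notebook). The names `data`, `columns` and `build_season_df` are illustrative and not part of the assignment.

```python
# Sample data from the assignment: (season, windspeed) pairs.
data = [
    ("Spring", 12.3),
    ("Summer", 10.5),
    ("Autumn", 8.2),
    ("Winter", 15.1),
]

# Only column names are supplied, so Spark infers the types
# from the Python values (str -> string, float -> double).
columns = ["season", "windspeed"]


def build_season_df(spark):
    """Create the two-column DataFrame from the in-memory sample data."""
    return spark.createDataFrame(data, schema=columns)
```

With a live session, `build_season_df(spark).printSchema()` would show the inferred column types.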

2. Consider the library management dataset located at /public/trendytech/datasets/library_data.json. Using PySpark, load the data into a DataFrame and enforce a schema using StructType.
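
The assignment does not list the fields of library_data.json, so the sketch below uses hypothetical column names (`book_id`, `title`, `author`, `copies_available`) purely to show the shape of a StructType schema; they would need to be replaced with the real fields. `library_schema` and `load_library` are illustrative names, and `spark` is assumed to be an existing SparkSession.

```python
LIBRARY_PATH = "/public/trendytech/datasets/library_data.json"


def library_schema():
    """Build an explicit schema. The field names here are hypothetical."""
    # Imported inside the function so that only building the schema
    # requires the pyspark package.
    from pyspark.sql.types import (
        IntegerType,
        StringType,
        StructField,
        StructType,
    )

    return StructType([
        StructField("book_id", IntegerType(), nullable=True),           # hypothetical
        StructField("title", StringType(), nullable=True),              # hypothetical
        StructField("author", StringType(), nullable=True),             # hypothetical
        StructField("copies_available", IntegerType(), nullable=True),  # hypothetical
    ])


def load_library(spark, schema, path=LIBRARY_PATH):
    """Read the JSON file with the given schema instead of inferring one."""
    return spark.read.schema(schema).json(path)
```

A call would look like `load_library(spark, library_schema())`. Supplying the schema up front also lets Spark skip the inference pass over the file.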

3. Given the dataset /public/trendytech/datasets/train.csv, create a DataFrame using PySpark and perform the following operations:

   a) Drop the columns passenger_name and age from the dataset.
   b) Count the number of rows after removing duplicates on the columns train_number and ticket_number.
   c) Count the number of unique train names.
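
One possible sketch of these three steps. It assumes the CSV has a header row and that the train name is stored in a column called `train_name` (the assignment does not name that column). `load_train` and `train_summary` are illustrative helper names, and `spark` is an existing SparkSession.

```python
TRAIN_PATH = "/public/trendytech/datasets/train.csv"


def load_train(spark, path=TRAIN_PATH):
    """Read the CSV, assuming a header row; inferSchema lets Spark guess types."""
    return spark.read.csv(path, header=True, inferSchema=True)


def train_summary(df):
    """Return (trimmed DataFrame, deduplicated row count, unique train names)."""
    # a) Drop the two columns that are not needed.
    trimmed = df.drop("passenger_name", "age")

    # b) Keep one row per (train_number, ticket_number) pair, then count.
    deduped_rows = trimmed.dropDuplicates(["train_number", "ticket_number"]).count()

    # c) Count distinct values of the assumed `train_name` column.
    unique_trains = trimmed.select("train_name").distinct().count()

    return trimmed, deduped_rows, unique_trains
```

With a live session this could be called as `train_summary(load_train(spark))`.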

4. You are working as a Data Engineer in a large retail company. The company has a dataset named "sales_data.json" that contains sales records from various stores. The dataset is stored in JSON format and may contain some corrupt or malformed records due to occasional data quality issues. Your task is to read the "sales_data.json" dataset (/public/trendytech/datasets/sales_data.json) using PySpark, utilizing different read modes to handle corrupt records. Create a DataFrame using PySpark and perform the following operations:

   1. Read the dataset using the "permissive" mode and count the number of records read.
   2. Read the dataset using the "dropmalformed" mode and display the number of malformed records.
   3. Read the dataset using the "failfast" mode.
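
A hedged sketch of the three reads, with `spark` assumed to be an existing SparkSession and all helper names illustrative. It takes the number of malformed records to be the permissive count minus the dropmalformed count, which is one interpretation of step 2 rather than the only one; depending on the Spark version, an explicit schema may be needed for the two counts to differ as expected.

```python
SALES_PATH = "/public/trendytech/datasets/sales_data.json"


def read_sales(spark, mode, path=SALES_PATH):
    """Read the JSON file with the given parse mode."""
    return spark.read.option("mode", mode).json(path)


def count_by_mode(spark, path=SALES_PATH):
    """Count rows under PERMISSIVE and DROPMALFORMED and derive the difference."""
    # PERMISSIVE keeps every record, including ones it could not parse.
    permissive = read_sales(spark, "PERMISSIVE", path).count()

    # DROPMALFORMED discards records that fail to parse.
    kept = read_sales(spark, "DROPMALFORMED", path).count()

    return {
        "permissive": permissive,
        "dropmalformed": kept,
        "malformed": permissive - kept,  # assumption: difference of the two counts
    }


def read_failfast(spark, path=SALES_PATH):
    """FAILFAST raises once Spark parses a malformed record, which can happen
    during schema inference or when an action such as count() runs."""
    return read_sales(spark, "FAILFAST", path).count()
```

If the file does contain malformed records, `read_failfast(spark)` is expected to raise instead of returning a count, so a caller would typically wrap it in `try`/`except`.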

5. You have a hospital dataset with the following fields:

● patient_id (integer): Unique identifier for each patient.
● admission_date (date): The date the patient was admitted to the hospital, in MM-dd-yyyy format.
● discharge_date (date): The date the patient was discharged from the hospital, in yyyy-MM-dd format.
● diagnosis (string): The diagnosed medical condition of the patient.
● doctor_id (integer): The identifier of the doctor responsible for the patient's care.
● total_cost (float): The total cost of the hospital stay for the patient.

Using PySpark, load the hospital dataset (/public/trendytech/datasets/hospital.csv) into a DataFrame and perform the following operations:

   1. Drop the "doctor_id" column from the dataset.
   2. Rename the "total_cost" column to "hospital_bill".
   3. Add a new column called "duration_of_stay" that represents the number of days a patient stayed in the hospital. (Hint: calculate the duration as the difference between the "discharge_date" and "admission_date" columns.)
   4. Create a new column called "adjusted_total_cost" that calculates the adjusted total cost based on the diagnosis as follows:
      If the diagnosis is "Heart Attack", multiply the hospital_bill by 1.5.
      If the diagnosis is "Appendicitis", multiply the hospital_bill by 1.2.
      For any other diagnosis, keep the hospital_bill as it is.
   5. Select the "patient_id", "diagnosis", "hospital_bill", and "adjusted_total_cost" columns.

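The hospital steps above can be sketched as follows. This assumes the CSV has a header row and that its columns appear in the order listed in the field description; the two dates are read as strings and parsed with their stated formats. The helper names and constants are illustrative, `spark` is an existing SparkSession, and Spark SQL expressions are used through `selectExpr` as one of several equivalent ways to write the column logic.

```python
HOSPITAL_PATH = "/public/trendytech/datasets/hospital.csv"

# Dates are read as strings first because the two columns use different formats.
HOSPITAL_DDL = (
    "patient_id INT, admission_date STRING, discharge_date STRING, "
    "diagnosis STRING, doctor_id INT, total_cost FLOAT"
)

STAY_EXPR = "datediff(discharge_date, admission_date) AS duration_of_stay"

ADJUSTED_COST_EXPR = (
    "CASE diagnosis "
    "WHEN 'Heart Attack' THEN hospital_bill * 1.5 "
    "WHEN 'Appendicitis' THEN hospital_bill * 1.2 "
    "ELSE hospital_bill END AS adjusted_total_cost"
)


def load_hospital(spark, path=HOSPITAL_PATH):
    """Read the CSV (header row assumed) and parse both date columns."""
    raw = spark.read.csv(path, schema=HOSPITAL_DDL, header=True)
    return raw.selectExpr(
        "patient_id",
        "to_date(admission_date, 'MM-dd-yyyy') AS admission_date",
        "to_date(discharge_date, 'yyyy-MM-dd') AS discharge_date",
        "diagnosis",
        "doctor_id",
        "total_cost",
    )


def transform_hospital(df):
    """Apply steps 1 to 5 of the assignment in order."""
    return (
        df.drop("doctor_id")                                # step 1
        .withColumnRenamed("total_cost", "hospital_bill")   # step 2
        .selectExpr("*", STAY_EXPR)                         # step 3
        .selectExpr("*", ADJUSTED_COST_EXPR)                # step 4
        .select("patient_id", "diagnosis",
                "hospital_bill", "adjusted_total_cost")     # step 5
    )
```

With a live session, `transform_hospital(load_hospital(spark)).show()` would display the four selected columns.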