Principles of Compiler Design – CENG 2042

Chapter I – Introduction to Compiler Design

Software Engineering Department, School of Computing

Ethiopian Institute of Technology – Mekelle (EiT-M), Mekelle University

Everything Goes Through a Compiler.

Almost every piece of software used on any computer has been produced by a compiler.

1. Overview

A computer is a combination of hardware and software. The hardware is a physical device whose functions are controlled by compatible software. Hardware understands instructions in the form of electronic charge, which is the counterpart of binary language in software programming. Binary language has only two symbols, 0 and 1. To instruct the hardware, code must be written in binary format, which is simply a series of 1s and 0s. Writing such code by hand would be difficult and cumbersome for programmers, which is why we have compilers to generate it.

1.1. Why Compilers? A Brief History

With the advent of the stored-program computer pioneered by John von Neumann in the late 1940s, it became necessary to write sequences of codes, or programs, that would cause these computers to perform the desired computations. Initially, these programs were written in machine language: numeric codes that represented the actual machine operations to be performed. For example,

C7 06 0000 0002

represents the instruction to move the number 2 to the location 0000 (in hexadecimal) on the Intel 8x86 processors used in IBM PCs. Of course, writing such codes is extremely time consuming and tedious, and this form of coding was soon replaced by assembly language, in which instructions and memory locations are given symbolic forms. For example, the assembly language instruction

MOV X, 2

is equivalent to the previous machine instruction (assuming the symbolic memory location X is 0000). An

assembler translates the symbolic codes and memory locations of assembly language into the

corresponding numeric codes of machine language.

Assembly language greatly improved the speed and accuracy with which programs could be written, and it is still in use today, especially when extreme speed or conciseness of code is needed. However, assembly language has a number of defects: it is still not easy to write, and it is difficult to read and understand. Moreover, assembly language is extremely dependent on the particular machine for which it was written, so code written for one computer must be completely rewritten for another machine. Clearly, the next major step in programming technology was to write the operations of a program in a concise form more nearly resembling mathematical notation or natural language, in a way that was independent of any one particular machine and yet capable of itself being translated by a program into executable code. For example, the previous assembly language code can be written in a concise, machine-independent form as

X=2

Ins: Fkrezgy Yohannes Compiler Design 1|Page

At first, it was feared that this might not be possible, or that if it was, the object code would be so inefficient as to be useless.

The development of the FORTRAN language and its compiler by a team at IBM led by John Backus between 1954 and 1957 showed that both these fears were unfounded. Nevertheless, the success of this project came about only with a great deal of effort, since most of the processes involved in translating programming languages were not well understood at the time.

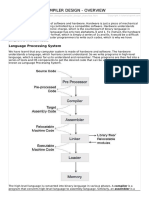

1.2. Language Processing System

Any computer system is made of hardware and software. The hardware understands a language that humans cannot easily read. So we write programs in a high-level language, which is easier for us to understand and remember. These programs are then fed into a series of tools and OS components to get the desired code that can be used by the machine. This is known as a language processing system.

The high-level language is converted into binary language in several phases. A compiler is a program that converts high-level language to assembly language. Similarly, an assembler is a program that converts assembly language to machine-level language.

Let us first understand how a program, using a C compiler, is executed on a host machine.

· User writes a program in C language (high-level language).

· The C compiler compiles the program and translates it to assembly program (low-level

language).

· An assembler then translates the assembly program into machine code (object).

· A linker tool is used to link all the parts of the program together for execution (executable

machine code).

· A loader loads all of them into memory and then the program is executed.

Before diving straight into the concepts of compilers, we should understand a few other tools that work

closely with compilers.

1.2.1. Preprocessor

A preprocessor, generally considered a part of the compiler, is a tool that produces input for the compiler. Its purpose is to process directives. Directives are specific instructions that start with the # symbol and end with a newline (NOT a semicolon). The preprocessor is not smart: it does not understand C++ syntax; rather, it manipulates text before the compiler runs.

A preprocessor may perform the following functions.

a. Macro processing: A preprocessor may allow a user to define macros that are shorthand for longer constructs. The #define directive can be used to create a macro. A macro is a rule that defines how an input sequence (e.g. an identifier) is converted into a replacement output sequence (e.g. some text).

Example: #define MU "Mekelle University"

b. File inclusion: A preprocessor may include header files into the program text, using the #include directive. When you #include a file, the preprocessor copies the contents of the included file into the including file at the point of the #include directive. This is useful when you have information that needs to be included in multiple places (as forward declarations often are).

The #include directive has two forms:

#include <filename> tells the preprocessor to look for the file in the system include directories, an implementation-defined location where header files for the C++ runtime library are held.

#include "filename" tells the preprocessor to look for the file in the directory containing the source file doing the #include. If it doesn’t find the header file there, it will check any other include paths that you’ve specified as part of your compiler/IDE settings. That failing, it will act identically to the angled brackets case.

c. Rational preprocessor: These preprocessors augment older languages with more modern flow-of-control and data-structuring facilities.

d. Language extensions: These preprocessors attempt to add capabilities to the language by what amounts to built-in macros.
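The text-manipulation nature of macro processing and file inclusion can be illustrated with a toy model. The following is a minimal Python sketch, not how a real C/C++ preprocessor is implemented: the function name `preprocess`, the `headers` dictionary standing in for the file system, and the file name `mu.h` are all assumptions made for illustration. It handles only object-like `#define` macros and `#include`, and (unlike a real preprocessor) it would also substitute inside string literals.

```python
import re

IDENTIFIER = re.compile(r"[A-Za-z_]\w*")

def preprocess(source, headers, macros=None):
    """Toy preprocessor: expands #include and object-like #define as plain text."""
    macros = {} if macros is None else macros
    output = []
    for line in source.splitlines():
        stripped = line.strip()
        if stripped.startswith("#include"):
            # Copy the included file's (preprocessed) text in place of the directive.
            name = stripped[len("#include"):].strip().strip('"<>')
            included = preprocess(headers[name], headers, macros)
            if included:
                output.append(included)
        elif stripped.startswith("#define"):
            # Record the rule: identifier -> replacement text.
            parts = stripped.split(maxsplit=2)
            macros[parts[1]] = parts[2] if len(parts) > 2 else ""
        else:
            # Replace whole identifiers that name a macro; leave everything else alone.
            output.append(IDENTIFIER.sub(lambda m: macros.get(m.group(0), m.group(0)), line))
    return "\n".join(output)

# Hypothetical header and program, held in memory instead of on disk.
headers = {"mu.h": '#define MU "Mekelle University"'}
program = '#include "mu.h"\nconst char *name = MU;'
result = preprocess(program, headers)
print(result)
```

The compiler proper would only ever see the expanded text, which is why the preprocessor needs no knowledge of the language's syntax.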

1.2.2. Compiler

A compiler is a translator program that takes a program written in a high-level language (HLL), the source program, and translates it into an equivalent program in a machine-level language (MLL), the target program. An important part of a compiler's job is reporting errors in the source program to the programmer.

Executing a program written in an HLL basically involves two steps. The source program must first be compiled, that is, translated into an object program. Then the resulting object program is loaded into memory and executed.

The Fundamental Principles of Compilation

Compilers are large, complex, carefully engineered objects. While many issues in compiler design are

amenable to multiple solutions and interpretations, there are two fundamental principles that a compiler

writer must keep in mind at all times. The first principle is inviolable:

“The compiler must preserve the meaning of the program being compiled.”

Correctness is a fundamental issue in programming. The compiler must preserve correctness by faithfully

implementing the “meaning” of its input program. This principle lies at the heart of the social contract

between the compiler writer and compiler user. If the compiler can take liberties with meaning, then why

not simply generate a nop or a return? If an incorrect translation is acceptable, why expend the effort to get

it right? The second principle that a compiler must observe is practical:

“The compiler must improve the input program in some discernible way.”

LIST OF COMPILERS

1. Ada compilers
2. ALGOL compilers
3. BASIC compilers
4. C# compilers
5. C compilers
6. C++ compilers
7. COBOL compilers
8. D compilers
9. Common Lisp compilers
10. ECMAScript interpreters
11. Eiffel compilers
12. Felix compilers
13. Fortran compilers
14. Haskell compilers
15. Java compilers
16. Pascal compilers
17. PL/I compilers
18. Python compilers
19. Scheme compilers
20. Smalltalk compilers
21. CIL compilers

1.2.3. Assembler

An assembler translates assembly language programs into machine code. The output of an assembler is

called an object file, which contains a combination of machine instructions as well as the data required to

place these instructions in memory.

1.2.4. Linker

A linker is a computer program that links and merges various object files together in order to make an executable file. These files might have been produced by separate assemblers. The major task of a linker is to search for and locate the referenced modules and routines in a program and to determine the memory locations where this code will be loaded, so that the program's instructions have absolute references.

1.2.5. Loader

The loader is a part of the operating system and is responsible for loading executable files into memory and executing them. It calculates the size of a program (instructions and data) and creates memory space for it. It initializes various registers to initiate execution.

INTERPRETER:

An interpreter, like a compiler, translates high-level language into low-level machine language. The difference lies in the way they read the source code or input. A compiler reads the whole source code at once, creates tokens, checks semantics, generates intermediate code, translates the whole program, and may involve many passes. In contrast, an interpreter reads a statement from the input, converts it to an intermediate code, executes it, then takes the next statement in sequence. If an error occurs, an interpreter stops execution and reports it, whereas a compiler reads the whole program even if it encounters several errors.

In principle, any programming language can be either interpreted or compiled, but an interpreter may be preferred to a compiler depending on the language in use and the situation under which translation occurs. On the other hand, a compiler is preferred if speed of execution is a primary consideration, since compiled object code is generally faster than interpreted source code, sometimes by a factor of 10 or more. Interpreters, however, share many of their operations with compilers, and there can even be translators that are hybrids, lying somewhere between interpreters and compilers.

Languages such as BASIC, SNOBOL, and LISP can be translated using interpreters. Java also uses an interpreter: its compiler produces bytecode, which the Java Virtual Machine then interprets.

The process of interpretation can be carried out in following phases.

1. Lexical analysis

2. Syntax analysis

3. Semantic analysis

4. Direct Execution
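The four interpretation phases listed above can be sketched as a toy line-by-line interpreter. This is only an illustration under stated assumptions: the mini-language (statements of the form `name = expression` with integers, `+` and `*`), the class `InterpreterError`, and the function names are all invented for this example and do not describe any real interpreter.

```python
import re

class InterpreterError(Exception):
    pass

def tokenize(line):
    """Lexical analysis: integers, identifiers, and single-character symbols."""
    return re.findall(r"\d+|[A-Za-z_]\w*|\S", line)

def evaluate(tokens, env):
    """Syntax analysis plus direct execution of: term {'+' term}, term = factor {'*' factor}."""
    pos = 0

    def factor():
        nonlocal pos
        if pos >= len(tokens):
            raise InterpreterError("unexpected end of line")
        token = tokens[pos]
        pos += 1
        if token.isdigit():
            return int(token)
        if token.isidentifier():
            if token not in env:  # semantic check: use before definition
                raise InterpreterError(f"undeclared identifier {token}")
            return env[token]
        raise InterpreterError(f"unexpected token {token}")

    def term():
        nonlocal pos
        value = factor()
        while pos < len(tokens) and tokens[pos] == "*":
            pos += 1
            value *= factor()
        return value

    def expression():
        nonlocal pos
        value = term()
        while pos < len(tokens) and tokens[pos] == "+":
            pos += 1
            value += term()
        return value

    result = expression()
    if pos < len(tokens):
        raise InterpreterError(f"unexpected token {tokens[pos]}")
    return result

def interpret(program):
    """Execute one statement at a time; stop at the first error."""
    env = {}
    for number, line in enumerate(program.splitlines(), start=1):
        tokens = tokenize(line)
        if not tokens:
            continue
        try:
            if len(tokens) < 3 or not tokens[0].isidentifier() or tokens[1] != "=":
                raise InterpreterError("expected: name = expression")
            env[tokens[0]] = evaluate(tokens[2:], env)
        except InterpreterError as error:
            return env, f"line {number}: {error}"
    return env, None

env, error = interpret("rate = 2\nposition = 10 + rate * 60\nbad = missing + 1\nnever = 1")
print(env, error)
```

Note that the fourth statement is never reached: the interpreter stops at the first error, after the earlier statements have already been executed.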

Compiler Vs Interpreter

Both read a source program written in some HLL and translate it to machine code (object code).

| | Compiler | Interpreter |
|---|---|---|
| Operation | The object code can be executed directly on the machine where it was compiled. The compiler translates the entire program in one go; the program is then executed. | Reads the source code one instruction or line at a time, converts this line into machine code and executes it. The machine code is then discarded and the next line is read, executing each line as it is “translated”. Interpreters stop translating after the first error. |
| Advantage | The translation is done once only and as a separate process. Compiled programs can run on any compatible computer without the compiler being present. | Interpreters are easier to use, particularly for beginners. You can interrupt the program while it is running, change it, and either continue or start again. |
| Disadvantage | You cannot change the program without going back to the original source code, editing that and recompiling. | Every line has to be translated every time it is executed (because of this, interpreters are slow). |

1.3. Structure of compiler

A compiler is a large, complex software system. The community has been building compilers since 1955,

and over the years, we have learned many lessons about how to structure a compiler. Earlier, we depicted a

compiler as a simple box that translates a source program into a target program. Reality, of course, is more

complex than that simple picture.

As the single-box model suggests, a compiler must both understand the source program that it takes as

input and map its functionality to the target machine. The distinct nature of these two tasks suggests a

division of labor and leads to a design that decomposes compilation into two major pieces: a front end and

a back end.

The front end focuses on understanding the source-language program. The back end focuses on mapping

programs to the target machine. This separation of concerns has several important implications for the

design and implementation of compilers.

The front end must encode its knowledge of the source program in some IR structure for later use by the

back end. This intermediate representation (IR) becomes the compiler’s definitive representation for the

code it is translating. At each point in compilation, the compiler will have a definitive representation. It

may, in fact, use several different IRs as compilation progresses, but at each point, one representation will

be the definitive IR. We think of the definitive IR as the version of the program passed between

independent phases of the compiler, like the IR passed from the front end to the back end in the preceding

drawing.

In a two-phase compiler, the front end must ensure that the source program is well formed, and it must map

that code into the IR. The back end must map the IR program into the instruction set and the finite

resources of the target machine. Because the back end only processes IR created by the front end, it can

assume that the IR contains no syntactic or semantic errors.

The compiler can make multiple passes over the IR form of the code before emitting the target program.

This should lead to better code, as the compiler can, in effect, study the code in one phase and record

relevant details. Then, in later phases, it can use these recorded facts to improve the quality of translation.

This strategy requires that knowledge derived in the first pass be recorded in the IR, where later passes can

find and use it.

Introducing an IR makes it possible to add more phases to compilation. The compiler writer can insert a third phase between the front end and the back end. This middle section, or optimizer, takes an IR program as its input and produces a semantically equivalent IR program as its output. By using the IR as an interface, the compiler writer can insert this third phase with minimal disruption to the front end and back end. This leads to the following compiler structure, termed a three-phase compiler.

The optimizer is an IR-to-IR transformer that tries to improve the IR program in some way. The optimizer can make one or more passes over the IR, analyze the IR, and rewrite the IR. The optimizer may rewrite the IR in a way that is likely to produce a faster target program from the back end or a smaller target program from the back end. It may have other objectives, such as a program that produces fewer page faults or uses less energy.

Conceptually, the three-phase structure represents the classic optimizing compiler. In practice, each phase

is divided internally into a series of passes. The front end consists of two or three passes that handle the

details of recognizing valid source-language programs and producing the initial IR form of the program.

The middle section contains passes that perform different optimizations. The number and purpose of these

passes vary from compiler to compiler. The back end consists of a series of passes, each of which takes the

IR program one step closer to the target machine’s instruction set. The three phases and their individual

passes share a common infrastructure.

In practice, the conceptual division of a compiler into three phases, a front end, a middle section or

optimizer, and a back end, is useful. The problems addressed by these phases are different. The front end is

concerned with understanding the source program and recording the results of its analysis into IR form.

The optimizer section focuses on improving the IR form.

A compiler can have many phases and passes.

· Pass: A pass refers to the traversal of a compiler through the entire program.

· Phase: A phase of a compiler is a distinguishable stage, which takes input from the previous stage,

processes and yields output that can be used as input for the next stage. A pass can have more than

one phase.

1.4. Phases of a compiler

The compilation process is a sequence of various phases. Each phase takes input from its previous stage,

has its own representation of source program, and feeds its output to the next phase of the compiler. Let us

understand the phases of a compiler.

1.4.1. Lexical Analysis

The first phase of the compiler, also called the scanner, works as a text scanner. This phase scans the source code as a stream of characters and groups it into meaningful lexemes, which it classifies as tokens.

The scanner begins the analysis of the source program by reading the input text—character by character—

and grouping individual characters into tokens (identifiers, integers, reserved words, delimiters, and so on).

This is the first of several steps that produce successively higher-level representations of the input. The

tokens are encoded (often as integers) and are fed to the parser for syntactic analysis. When necessary, the

actual character string comprising the token is also passed along for use by the semantic phases. The

scanner does the following.

· It puts the program into a compact and uniform format (a stream of tokens).

· It eliminates unneeded information (such as comments).

· It processes compiler control directives (for example, turn the listing on or off and include source

text from a file).

· It sometimes enters preliminary information into symbol tables (for example, to register the

presence of a particular label or identifier).

· It optionally formats and lists the source program.

Examples of Tokens:

a. Keywords: while, if, void, int, float, for, …

b. Identifiers: declared by the programmer

c. Operators: +, -, *, /, =, ==, <, >, <=, >=, …

d. Numeric Constants: numbers such as 124, 12.35, 0.09E-23, etc

e. Character constants: single character or strings of characters enclosed in quotes.

f. Special characters: characters used as delimiters such as ( ) , ; :

Examples of Non-Tokens: comment and white space

Tokens can be represented as follows:

<Token type or class, Token value>

Example: Show the token classes or types put out by the lexical analysis phase for this C++ source input:

a) position = initial + rate * 60 ;

b) sum = sum + unit * /* accumulate sum */ 1.2e-12 ;

Solution:

a) Identifier (position), Assignment (=), Identifier (initial), Operator (+), Identifier (rate), Operator (*), Numeric constant (60), Special character (;)

b) Identifier (sum), Assignment (=), Identifier (sum), Operator (+), Identifier (unit), Operator (*), Numeric constant (1.2e-12), Special character (;). The comment /* accumulate sum */ is a non-token and is discarded.

The main action of building tokens is often driven by token descriptions. Regular expression notation is an

effective approach to describing tokens. Regular expressions are a formal notation sufficiently powerful to

describe the variety of tokens required by modern programming languages. In addition, they can be used as

a specification for the automatic generation of finite automata that recognize regular sets, that is, the sets

that regular expressions define. Recognition of regular sets is the basis of the scanner generator. A scanner

generator is a program that actually produces a working scanner when given only a specification of the

tokens it is to recognize. Scanner generators are a valuable compiler-building tool.
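To make the idea of token descriptions driving the scanner concrete, here is a minimal Python sketch of a regular-expression-based scanner. It is an illustration, not the output of a real scanner generator: the class names (`NUMBER`, `IDENTIFIER`, and so on) and the function `scan` are assumptions chosen for this example, and keywords are not separated from identifiers (a real scanner would typically look them up in a keyword table).

```python
import re

# One regular expression per token class; order matters (e.g. "==" before "=").
TOKEN_SPEC = [
    ("COMMENT",    r"/\*.*?\*/"),
    ("NUMBER",     r"\d+(?:\.\d+)?(?:[eE][+-]?\d+)?"),
    ("IDENTIFIER", r"[A-Za-z_]\w*"),
    ("OPERATOR",   r"==|<=|>=|[+\-*/<>]"),
    ("ASSIGNMENT", r"="),
    ("SPECIAL",    r"[();,:]"),
    ("SKIP",       r"\s+"),
]
MASTER = re.compile(
    "|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC),
    re.DOTALL,
)

def scan(source):
    """Return a list of (token class, lexeme) pairs, dropping non-tokens."""
    tokens = []
    pos = 0
    while pos < len(source):
        match = MASTER.match(source, pos)
        if match is None:
            raise ValueError(f"illegal character {source[pos]!r} at offset {pos}")
        if match.lastgroup not in ("COMMENT", "SKIP"):  # comments and white space
            tokens.append((match.lastgroup, match.group()))
        pos = match.end()
    return tokens

for token in scan("sum = sum + unit * /* accumulate sum */ 1.2e-12 ;"):
    print(token)
```

Adding a new token class only requires adding a line to `TOKEN_SPEC`, which is the same separation of specification from mechanism that scanner generators provide.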

1.4.2. Syntax Analysis (The parser)

The next phase is called syntax analysis or parsing. It takes the tokens produced by lexical analysis as input and generates a parse tree (or syntax tree). In this phase, token arrangements are checked against the source language grammar, i.e., the parser checks whether the expression formed by the tokens is syntactically correct.

The parser verifies correct syntax. If a syntax error is found, it issues a suitable error message. Also, it may

be able to repair the error (to form a syntactically valid program) or to recover from the error (to allow

parsing to be resumed). In many cases, syntactic error recovery or repair can be done automatically by

consulting error-repair tables created by a parser/repair generator.

As syntactic structure is recognized, the parser usually builds an abstract syntax tree (AST). An abstract

syntax tree is a concise representation of program structure that is used to guide semantic processing.

In syntax trees, each interior node represents an operator or control structure and each leaf node represents

an operand. A statement such as if (Expr) Stmt1 else Stmt2 could be implemented as a node having three

children – one for the condition expression, one for the true part (Stmt1), and one for the else statement

(Stmt2). The while control structure would have two children: one for the loop condition, and one for the

statement to be repeated. The compound statement could have an unlimited number of children, one for

each statement in the compound statement. The other way would be to treat the semicolon like a statement

concatenation operator, yielding a binary tree.

Example: Show a syntax tree for the C/C++ statement

a. position = initial + rate * 60

b. if (A + 3 < 400) A = 0; else B = A * A

Solution: [Figure: syntax trees for (a) and (b)]
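As a rough illustration of how a parser turns statement (a) into a syntax tree, the following Python sketch uses recursive descent. The tree is represented as nested tuples `(operator, left, right)` with identifiers and numbers as leaves; this representation, the grammar subset (assignment, `+ - * /`, parentheses, integer constants only) and the function name `parse_assignment` are assumptions made for the example.

```python
import re

def parse_assignment(text):
    """Parse 'name = expression' into nested tuples (operator, left, right)."""
    tokens = re.findall(r"\d+|[A-Za-z_]\w*|\S", text)
    pos = 0

    def peek():
        return tokens[pos] if pos < len(tokens) else None

    def advance():
        nonlocal pos
        if pos >= len(tokens):
            raise SyntaxError("unexpected end of input")
        pos += 1
        return tokens[pos - 1]

    def factor():
        token = advance()
        if token == "(":
            node = expression()
            if advance() != ")":
                raise SyntaxError("expected ')'")
            return node
        if token.isdigit() or token.isidentifier():
            return token  # leaf node: an operand
        raise SyntaxError(f"unexpected token {token!r}")

    def term():
        # '*' and '/' bind tighter than '+' and '-', so they are parsed one level deeper.
        node = factor()
        while peek() in ("*", "/"):
            node = (advance(), node, factor())
        return node

    def expression():
        node = term()
        while peek() in ("+", "-"):
            node = (advance(), node, term())
        return node

    target = advance()
    if not target.isidentifier() or advance() != "=":
        raise SyntaxError("expected: identifier = expression")
    tree = ("=", target, expression())
    if peek() is not None:
        raise SyntaxError(f"unexpected token {peek()!r}")
    return tree

tree = parse_assignment("position = initial + rate * 60")
print(tree)
```

The interior nodes of the result are the operators `=`, `+` and `*`, and the leaves are the operands, matching the description of syntax trees given above.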

1.4.3. Semantic Analysis

Semantic analysis checks whether the parse tree constructed follows the rules of the language. For example, it checks that values are assigned between compatible data types and reports errors such as adding a string to an integer. The semantic analyzer also keeps track of identifiers, their types, and expressions, and checks whether identifiers are declared before use. The semantic analyzer produces an annotated syntax tree as its output.

The type checker checks the static semantics of each AST node. That is, it verifies that the construct the node represents is legal and meaningful (that all identifiers involved are declared, that types are correct, and so on). If the construct is semantically correct, the type checker “decorates” the AST node by adding type information to it. If a semantic error is discovered, a suitable error message is issued.

Example: Draw an Attributed AST for position = initial + rate * 60

Solution: [Figure: attributed AST for position = initial + rate * 60]

Semantic errors:

• Undeclared identifier

• Multiply declared identifier

• Index out of bounds

• Wrong number or types of args to call

• Incompatible types for operation

• Break statement outside switch/loop

• Goto with no label

• etc…
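The "decorating" step performed by the type checker can be sketched in a few lines of Python. This is a simplified illustration, not a complete type system: the tuple representation of the AST, the two types `int` and `real`, the rule that assigning a real to an int is an error, and the names `decorate`, `widen` and `SemanticError` are all assumptions made for this example.

```python
class SemanticError(Exception):
    pass

def widen(node):
    """Wrap an int-typed node in an explicit int-to-real conversion."""
    return ("real", "inttoreal", node) if node[0] == "int" else node

def decorate(node, symbols):
    """Return a copy of the AST in which every node starts with its type."""
    if isinstance(node, str):  # leaf: constant or identifier
        if node.isdigit():
            return ("int", node)
        if node not in symbols:
            raise SemanticError(f"undeclared identifier: {node}")
        return (symbols[node], node)
    op, left, right = node
    left, right = decorate(left, symbols), decorate(right, symbols)
    if op == "=":
        if left[0] == "int" and right[0] == "real":
            raise SemanticError("incompatible types: cannot assign real to int")
        if left[0] == "real":
            right = widen(right)
        return (left[0], op, left, right)
    if "real" in (left[0], right[0]):  # mixed arithmetic is done in real
        left, right = widen(left), widen(right)
    return (left[0], op, left, right)

# Symbol table contents assumed for the running example.
symbols = {"position": "real", "initial": "real", "rate": "real"}
ast = ("=", "position", ("+", "initial", ("*", "rate", "60")))
decorated = decorate(ast, symbols)
print(decorated)
```

In the decorated tree the integer constant 60 is wrapped in an `inttoreal` node, which is where the conversion seen later in the intermediate code comes from.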

1.4.4. Intermediate Code Generation

After semantic analysis, the compiler generates an intermediate code of the source code for the target

machine. It represents a program for some abstract machine. It is in between the high-level language and

the machine language. This intermediate code should be generated in such a way that it makes it easier to

be translated into the target machine code.

One popular type of intermediate-language representation is “Three Address Code (TAC)”. A three-address code statement has the form:

A := B op C, where A, B and C are operands and op is a binary operator.

Example: The parse tree for position = initial + rate * 60 might be converted into the following three-address sequence, where id1, id2 and id3 stand for position, initial and rate:

Solution: Three Address Code

temp1 := inttoreal(60)

temp2 := id3 * temp1

temp3 := id2 + temp2

id1 := temp3
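The translation from a syntax tree to three-address code can be sketched as a post-order tree walk that allocates a fresh temporary for each interior node. The following Python sketch assumes a tuple-based AST in which the int-to-real conversion is already explicit; the function name `generate_tac` and the AST encoding are illustrative assumptions.

```python
from itertools import count

def generate_tac(tree):
    """Walk the AST bottom-up and emit one three-address statement per operator."""
    code = []
    temps = count(1)

    def emit(node):
        if isinstance(node, str):  # leaf: already a usable operand
            return node
        if node[0] == "inttoreal":  # unary conversion node
            operand = emit(node[1])
            temp = f"temp{next(temps)}"
            code.append(f"{temp} := inttoreal({operand})")
            return temp
        op, left, right = node
        if op == "=":
            code.append(f"{left} := {emit(right)}")
            return left
        left_place, right_place = emit(left), emit(right)
        temp = f"temp{next(temps)}"
        code.append(f"{temp} := {left_place} {op} {right_place}")
        return temp

    emit(tree)
    return code

ast = ("=", "id1", ("+", "id2", ("*", "id3", ("inttoreal", "60"))))
tac = generate_tac(ast)
print("\n".join(tac))
```

Because operands are translated before the operator that uses them, the innermost computation (the conversion of 60) receives the first temporary.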

Control Statements are translated into three-address code by using jump instructions. The basic idea of

converting any flow of control statement to a three address code is to simulate the “branching” of the flow

of control.
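As an illustration of simulating branching with jumps, the following Python sketch lays out the three-address code for an if-else statement. The `ifFalse ... goto` form and the label names are one common textbook notation and are assumed here, as is the helper name `translate_if_else`; the condition and the two branches are supplied already translated.

```python
from itertools import count

labels = count(1)

def new_label():
    return f"L{next(labels)}"

def translate_if_else(condition, then_code, else_code):
    """Lay out an if-else as: test, then-part, jump over else-part, else-part."""
    else_label, end_label = new_label(), new_label()
    return [
        f"ifFalse {condition} goto {else_label}",
        *then_code,
        f"goto {end_label}",
        f"{else_label}:",
        *else_code,
        f"{end_label}:",
    ]

# if (A + 3 < 400) A = 0; else B = A * A
code = ["temp1 := A + 3"] + translate_if_else(
    "temp1 < 400",
    ["A := 0"],
    ["temp2 := A * A", "B := temp2"],
)
print("\n".join(code))
```

The unconditional `goto` after the then-part is what prevents control from falling through into the else-part.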

1.4.5. Code Optimization

This is an optional phase designed to improve the intermediate code so that the output runs faster and takes less space. Optimization can be thought of as removing unnecessary code and rearranging the sequence of statements in order to speed up program execution without wasting resources (CPU, memory).

The IR code generated by the translator is analyzed and transformed into functionally equivalent but

improved IR code by the optimizer. This phase can be complex, often involving numerous sub phases,

some of which may need to be applied more than once. Most compilers allow optimizations to be turned

off so as to speed translation. Nonetheless, a carefully designed optimizer can significantly speed program

execution by simplifying, moving, or eliminating unneeded computations.

Optimization can also be done after code generation. An example is peephole optimization. Peephole

optimization examines generated code a few instructions at a time (in effect, through a “peephole”).

Common peephole optimizations include eliminating multiplications by one or additions of zero,

eliminating a load of a value into a register when the value is already in another register, and replacing a

sequence of instructions by a single instruction with the same effect.

The optimizer can produce an optimized three address code for position = initial + rate * 60 as follows:

Before optimization:

temp1 := inttoreal(60)

temp2 := id3 * temp1

temp3 := id2 + temp2

id1 := temp3

After optimization:

temp1 := rate * 60.0

position := initial + temp1
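The two improvements in this example (converting the constant at compile time, and removing the final copy) can be sketched as two small passes over the three-address code. This Python sketch is illustrative only: the tuple encoding `(destination, operator, argument1, argument2)`, the operator name `copy`, and the assumption that each temporary is used exactly once in straight-line code are all choices made for this example, not a general optimizer.

```python
def optimize(code):
    """Two simple passes over straight-line three-address code."""
    # Pass 1: perform int-to-real conversion of integer literals at compile time.
    constants = {}
    folded = []
    for dest, op, arg1, arg2 in code:
        arg1 = constants.get(arg1, arg1)
        arg2 = constants.get(arg2, arg2)
        if op == "inttoreal" and arg1.isdigit():
            constants[dest] = arg1 + ".0"  # remember the value; emit nothing
            continue
        folded.append((dest, op, arg1, arg2))

    # Pass 2: when a temporary is computed and immediately copied,
    # compute directly into the copy's destination instead.
    result = []
    for dest, op, arg1, arg2 in folded:
        if op == "copy" and result and result[-1][0] == arg1 and arg1.startswith("temp"):
            _, prev_op, prev1, prev2 = result.pop()
            result.append((dest, prev_op, prev1, prev2))
        else:
            result.append((dest, op, arg1, arg2))
    return result

unoptimized = [
    ("temp1", "inttoreal", "60", None),
    ("temp2", "*", "id3", "temp1"),
    ("temp3", "+", "id2", "temp2"),
    ("id1", "copy", "temp3", None),
]
optimized = optimize(unoptimized)
for instruction in optimized:
    print(instruction)
```

The result has the same two-statement shape as the optimized code above; the sketch does not renumber temporaries, so the surviving one is still called `temp2`.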

1.4.6. Code Generation

The last phase of translation is code generation. In this phase, the code generator takes the optimized representation of the intermediate code and maps it to the target machine language. The code generator translates the intermediate code into a sequence of (generally) relocatable machine code. This sequence of machine instructions performs the same task as the intermediate code.

The code generator may produce either:

• Machine code for a specific machine, or

• Assembly code for a specific machine and assembler.

If it produces assembly code, then an assembler is used to produce the machine code.

Example: The intermediate code for position = initial + rate * 60; may be translated into assembly code as follows:

Intermediate code:

temp1 := inttoreal(60)

temp2 := id3 * temp1

temp3 := id2 + temp2

id1 := temp3

Assembly code:

MOVF rate, R2

MULF #60.0, R2

MOVF initial, R1

ADDF R2, R1

MOVF R1, position
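A very naive code generator for this kind of assembly can be sketched as follows. It is only an illustration: the tuple encoding of the input, the function name `generate`, and the register policy (a fresh register per statement, never reused) are assumptions, so the registers come out numbered in the opposite order from the listing above, although the instruction sequence is otherwise the same.

```python
OPCODES = {"+": "ADDF", "*": "MULF"}

def generate(code):
    """Translate (dest, op, arg1, arg2) statements into two-address assembly."""
    assembly = []
    location = {}  # temporary name -> register currently holding it
    next_register = 1

    def operand(name):
        if name in location:      # value of a temporary lives in a register
            return location[name]
        if name[0].isdigit():     # literal constant becomes an immediate operand
            return "#" + name
        return name               # program variable: a memory location

    for dest, op, arg1, arg2 in code:
        register = f"R{next_register}"
        next_register += 1
        assembly.append(f"MOVF {operand(arg1)}, {register}")
        assembly.append(f"{OPCODES[op]} {operand(arg2)}, {register}")
        if dest.startswith("temp"):
            location[dest] = register               # keep the result in the register
        else:
            assembly.append(f"MOVF {register}, {dest}")  # store to the variable
    return assembly

optimized = [
    ("temp1", "*", "rate", "60.0"),
    ("position", "+", "initial", "temp1"),
]
assembly = generate(optimized)
print("\n".join(assembly))
```

A real code generator would also have to reuse registers and choose among alternative instructions, which is where most of the difficulty of this phase lies.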

1.4.7. Symbol Table

The symbol table is a data structure maintained throughout all the phases of a compiler. All the identifiers’ names along with their types are stored here. The symbol table makes it easier for the compiler to quickly search for an identifier's record and retrieve it. The symbol table is also used for scope management.

A symbol table is a mechanism that allows information to be associated with identifiers and shared among

compiler phases. Each time an identifier is declared or used, a symbol table provides access to the

information collected about it. Symbol tables are used extensively during type checking, but they can also

be used by other compiler phases to enter, share, and later retrieve information about variables, procedures,

labels, and so on. Compilers may choose to use other structures to share information between compiler

phases. For example, a program representation such as an AST may be expanded and refined to provide

detailed information needed by optimizers, code generators, linkers, loaders, and debuggers.
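One common way to combine identifier lookup with scope management is a stack of dictionaries, one per open scope. The Python sketch below is a minimal illustration of that idea; the class and method names (`SymbolTable`, `declare`, `lookup`, `enter_scope`, `exit_scope`) are assumptions for this example, and a production compiler would store far more per identifier than its type.

```python
class SymbolTable:
    """A stack of scopes; the innermost scope is searched first."""

    def __init__(self):
        self.scopes = [{}]  # start with the global scope

    def enter_scope(self):
        self.scopes.append({})

    def exit_scope(self):
        self.scopes.pop()

    def declare(self, name, type_name):
        scope = self.scopes[-1]
        if name in scope:
            raise KeyError(f"multiply declared identifier: {name}")
        scope[name] = {"type": type_name}

    def lookup(self, name):
        for scope in reversed(self.scopes):
            if name in scope:
                return scope[name]
        return None  # undeclared identifier

table = SymbolTable()
table.declare("rate", "real")
table.enter_scope()
table.declare("rate", "int")            # shadows the outer declaration
inner = table.lookup("rate")["type"]
table.exit_scope()
outer = table.lookup("rate")["type"]
print(inner, outer, table.lookup("missing"))
```

The same table supports two of the semantic checks listed earlier: `declare` detects multiply declared identifiers, and a `None` result from `lookup` signals an undeclared identifier.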

1.4.8. Error Recovery and Handling

A compiler must be robust enough to allow analysis to proceed in the face of most unexpected situations. As such, error recovery is an important consideration. To improve programmer productivity, users expect as many errors as possible to be reported in a single compiler run. Recovery in compiler operation implies that errors be localized as much as possible.

Error handling and recovery span all compiler phases as outlined earlier. A consistent approach by the

compiler in reporting errors will require that it be well integrated with the source handler. Further, an

effective strategy is necessary so that the error recovery effort is not overwhelming, clumsy or a distraction

to the compiler tasks proper.

One of the most important functions of a compiler is the detection and reporting of errors in the source program. The error message should allow the programmer to determine exactly where the errors have occurred. Errors may occur in any or all of the phases of a compiler. Whenever a phase of the compiler discovers an error, it must report the error to the error handler, which issues an appropriate diagnostic message. Both the table-management and error-handling routines interact with all phases of the compiler.

1.5. T-Diagram

A compiler is a program, written in an implementation language (IL) or host language, accepting text in a

source language (SL) and producing text in a target language (TL). Language description languages are

used to define all of these languages and themselves as well. The source language is an algorithmic

language to be used by programmers. The target language is suitable for execution by some particular

computer.

A useful notation for describing a computer program, particularly a translator, uses so-called T-diagrams.

Since there are so many languages involved, and thus so many translations, we need a notation to keep the

interactions straight. A given translator has three main languages (SL, TL, IL above) which are objects of

the prepositions from, to and in respectively. A T-diagram of the form

Example: A compiler written in language H (for the host language) that translates language S (for the

source language) into language T (for target language) is drawn as the following T-diagram.

Note: This is equivalent to saying that the compiler runs on “machine” H (if H is not machine code, then

we consider it to be the executable code for a hypothetical machine). Typically, we expect H to be the same

as T (that is, the compiler produces code for the same machine as the one on which it runs), but this needn’t

be the case.

1.6. Compiler Construction Techniques (Writing a compiler)

By this point it should be clear that a compiler is not a trivial program. A new compiler, with all

optimizations, could take over a person-year to implement. For this reason, we are always looking for

techniques or shortcuts which will speed up the development process. This often involves making use of

compilers, or portions of compilers, which have been developed previously. It may also involve compiler

generating tools, such as lex and yacc, which are part of the UNIX environment.

The languages used for compiler construction can be any of the following.

· For the compiler to execute immediately, this implementation (or host) language would have to be

machine language. This was indeed how the first compilers were written, since essentially no

compilers existed yet.

· A more reasonable approach today is to write the compiler in another language for which a

compiler already exists. If the existing compiler already runs on the target machine, then we need

only compile the new compiler using the existing compiler to get a running program. This and other

more complex situations are best described by drawing a compiler as a T-diagram.

· If the existing compiler for that language (call it B) runs on a machine different from the target machine, then

the situation is a bit more complicated. Compilation then produces a cross compiler, that is, a

compiler that generates target code for a different machine from the one on which it runs.

1.6.1. Using a high-level host language

Native Compiler: A native or hosted compiler is one whose output is intended to run directly on the same

type of computer and operating system that the compiler itself runs on.

If, as is increasingly common, one’s dream machine M is supplied with the machine coded version of a

compiler for a well-established language like C, then the production of a compiler for one’s dream

language X is achievable by writing the new compiler, say XtoM, in C and compiling the source (XtoM.C)

with the C compiler (CtoM.M) running directly on M (see Figure 1.6). This produces the object version

(XtoM.M) which can then be executed on M. (That is, if we want the X compiler written in C to produce code

for machine M, then to run the compiler on machine M we would first need to translate it into M-code

by means of a C compiler written in M-code and producing M-code.)

Figure 1.6: Use of C as an implementation language

Since a compiler does not change the purpose of the source program, the SL→ TL of the output (the third

T) is the same as the SL→TL on the input (the first T). The IL of the executing compiler (the middle T)

must be the machine language of the computer on which it is running. The IL of the input must be the same

as the SL of the executing compiler. The IL of the output must be the same as the TL of the executing

compiler.
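The three rules above can be checked mechanically. The following is a minimal sketch, assuming each T-diagram is modelled as a (source, target, implementation) triple; the names Translator and compile_translator are illustrative and do not come from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Translator:
    """One T-diagram: translates `source` (SL) into `target` (TL), written in `impl` (IL)."""
    source: str
    target: str
    impl: str


def compile_translator(program: Translator, executing: Translator, machine: str) -> Translator:
    """Feed `program` (the first T) to `executing` (the middle T) on `machine`.

    Returns the third T, or raises ValueError if the diagrams do not fit together.
    """
    if executing.impl != machine:
        raise ValueError("the executing compiler must be in the machine language of the computer")
    if program.impl != executing.source:
        raise ValueError("the IL of the input must equal the SL of the executing compiler")
    # SL -> TL of the input is preserved; only the implementation language changes.
    return Translator(program.source, program.target, executing.target)


# The example from the text: XtoM.C compiled by CtoM.M on machine M gives XtoM.M.
x_to_m_in_c = Translator("X", "M", "C")
c_to_m_in_m = Translator("C", "M", "M")
x_to_m_in_m = compile_translator(x_to_m_in_c, c_to_m_in_m, machine="M")
print(x_to_m_in_m)
```

In this model a mismatch, such as feeding a compiler written in Pascal to the C compiler, is rejected before any "output" T-diagram is produced.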

1.6.2. Cross compiler

New computers with enhanced (and sometimes reduced) instruction sets are constantly being produced in

the computer industry. The developers face the problem of producing a new compiler for each existing

programming language each time a new computer is designed. This problem is simplified by a process called

cross compiling.

A cross compiler is a compiler capable of creating executable code for a platform other than the one on

which the compiler is running. For example, a compiler that runs on a Windows 7 PC but generates code that

runs on an Android smartphone is a cross compiler.

Look at the T-diagrams chained together to produce executable code for machine B from a compiler that

runs on machine A.

Figure: Cross compiling language L from machine A to machine B.

1.6.3. Bootstrapping

All this may seem to be skirting around a really nasty issue - how might the first high-level language have

been implemented? In ASSEMBLER? But then how was the assembler for ASSEMBLER produced?

A full assembler is itself a major piece of software, albeit rather simple when compared with a compiler for

a really high level language, as we shall see. It is, however, quite common to define one language as a

subset of another, so that subset 1 is contained in subset 2 which in turn is contained in subset 3 and so on,

that is:

Subset 1 of Assembler ⊆ Subset 2 of Assembler ⊆ Subset 3 of Assembler

One might first write an assembler for subset 1 of ASSEMBLER in machine code, perhaps on a load-and-

go basis (more likely one writes in ASSEMBLER, and then hand translates it into machine code). This

subset assembler program might, perhaps, do very little other than convert mnemonic opcodes into binary

form. One might then write an assembler for subset 2 of ASSEMBLER in subset 1 of ASSEMBLER, and

so on.

This process, by which a simple language is used to translate a more complicated program, which in turn

may handle an even more complicated program and so on, is known as bootstrapping, by analogy with the

idea that it might be possible to lift oneself off the ground by tugging at one’s boot-straps.
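To make the first rung of that ladder concrete, here is a minimal sketch of a "subset 1" assembler that does little more than convert mnemonic opcodes into binary form. The opcode table and the one-byte hexadecimal operands are invented for an imaginary machine; they are assumptions for illustration and do not describe any real instruction set.

```python
# Hypothetical opcode table for an imaginary 8-bit machine (an assumption, not a real ISA).
OPCODES = {"LOAD": 0x01, "ADD": 0x02, "STORE": 0x03, "HALT": 0xFF}


def assemble(source: str) -> bytes:
    """Translate lines of 'MNEMONIC [hex operand ...]' into machine code bytes."""
    out = bytearray()
    for line in source.splitlines():
        line = line.split(";", 1)[0].strip()  # drop comments and surrounding blanks
        if not line:
            continue
        mnemonic, *operands = line.split()
        out.append(OPCODES[mnemonic.upper()])  # mnemonic -> opcode byte
        for operand in operands:
            out.append(int(operand, 16))       # operands are one-byte hex values
    return bytes(out)


program = """
LOAD 0A    ; fetch the value at address 0A
ADD 0B     ; add the value at address 0B
STORE 0C   ; store the result at address 0C
HALT
"""
print(assemble(program).hex(" "))  # prints: 01 0a 02 0b 03 0c ff
```

A "subset 2" assembler, adding for example symbolic labels, could then be written using only the mnemonics this first assembler understands.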

1.6.4. Self-compiling compilers (Self-hosting compiler)

Once one has a working system, one can start using it to improve itself. Many compilers for popular

languages were first written in another implementation language, as implied in section 1.6.1, and then

rewritten in their own source language. The rewrite gives source for a compiler that can then be compiled

with the compiler written in the original implementation language. A self-compiling compiler is a compiler

that is written in the language it compiles.

Consider the following example. We wish to implement a Pascal compiler written in Pascal. Rather than

writing the whole thing in machine (or assembly) language, we instead choose to write two easier

programs. The first is a compiler for a subset of Pascal, written in FORTRAN. The second is a

compiler for the full Pascal language written in the Pascal subset language. The first compiler is loaded into

the computer’s memory and the second is used as input. The output is the compiler we want, i.e., a

compiler for the full Pascal language, which runs on Machine M and produces object code that is M-code.
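The two-step Pascal example can be traced as a chain of T-diagrams. This is a sketch under stated assumptions: each diagram is a (source, target, impl) triple, and a FORTRAN compiler that runs on M is assumed to be available so that the first compiler can be loaded and executed. The names T and run are illustrative only.

```python
from typing import NamedTuple


class T(NamedTuple):
    """A T-diagram: compiles `source` into `target`, and is itself written in `impl`."""
    source: str
    target: str
    impl: str


def run(executing: T, program: T) -> T:
    """Compile `program` with `executing`; the result is `program` re-expressed in executing.target."""
    if program.impl != executing.source:
        raise ValueError("input must be written in the executing compiler's source language")
    return T(program.source, program.target, executing.target)


fortran_on_m = T("FORTRAN", "M", "M")                  # assumed to exist already on machine M
subset_in_fortran = T("PascalSubset", "M", "FORTRAN")  # the first, easier program
pascal_in_subset = T("Pascal", "M", "PascalSubset")    # the second, easier program

subset_on_m = run(fortran_on_m, subset_in_fortran)     # subset compiler, now executable on M
pascal_on_m = run(subset_on_m, pascal_in_subset)       # full Pascal compiler, executable on M
print(pascal_on_m)
```

Once pascal_on_m exists, a later version of the compiler written in full Pascal, T("Pascal", "M", "Pascal"), can be compiled by it, which is the self-compiling situation this section describes.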

1.7. Compiler Writing Tools

Finally, note that in discussing compiler design and construction, we often talk of compiler writing tools.

These are often packaged as compiler generators or compiler-compilers. Such packages usually include

scanner and parser generators. Some also include symbol table routines, attribute grammar evaluators, and

code-generation tools. More advanced packages may aid in error repair generation.

These sorts of generators greatly aid in building pieces of compilers, but much of the effort in building a

compiler lies in writing and debugging the semantic phases. These routines are numerous (a type checker

and translator are needed for each distinct AST node) and are usually hand-coded.

• Tools available to assist in the writing of lexical analyzers:

    • lex - Produces C source code (UNIX).

    • flex - Produces C source code (GNU).

    • JLex - Produces Java source code.

• Tools available to assist in the writing of parsers:

    • yacc - Produces C source code (UNIX).

    • bison - Produces C source code (GNU).

    • CUP - Produces Java source code.

Summary

Phase                                 Output                            Sample

Programmer (source code producer)     Source string                     A=B+C;

Scanner (performs lexical analysis)   Token string and symbol table     ‘A’, ‘=’, ‘B’, ‘+’, ‘C’, ‘;’
                                      with names

Parser (performs syntax analysis      Parse tree or abstract syntax       ;
based on the grammar of the           tree                                |
programming language)                                                     =
                                                                         / \
                                                                        A   +
                                                                           / \
                                                                          B   C

Semantic analyzer (type checking,     Annotated parse tree or
etc.)                                 abstract syntax tree

Intermediate code generator           Three-address code, quads,        A=B+C
                                      or RTL

Optimizer                             Three-address code, quads,        A=B+C
                                      or RTL

Code generator                        Assembly code                     MOVF B,r1
                                                                        MOVF C,r2
                                                                        ADDF r1,r2
                                                                        MOVF r2,A
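The scanner and code generator rows of the summary can be reproduced with a few lines of code. The following is a minimal sketch, assuming a language that consists only of statements of the form name = name + name ; and the register names r1 and r2 used in the sample column. The functions scan and generate are illustrative names, and the parsing, semantic analysis and optimization phases are deliberately skipped.

```python
import re

# An identifier, or one of the three single-character symbols used in the sample.
TOKEN_RE = re.compile(r"\s*([A-Za-z_]\w*|[=+;])")


def scan(text: str) -> list[str]:
    """Lexical analysis: split the source string into a token string."""
    text = text.rstrip()
    tokens, pos = [], 0
    while pos < len(text):
        match = TOKEN_RE.match(text, pos)
        if match is None:
            raise SyntaxError(f"unexpected character at position {pos}")
        tokens.append(match.group(1))
        pos = match.end()
    return tokens


def generate(tokens: list[str]) -> list[str]:
    """Code generation for the single statement shape  dest = left + right ;"""
    if len(tokens) != 6 or tokens[1] != "=" or tokens[3] != "+" or tokens[5] != ";":
        raise SyntaxError("expected: name = name + name ;")
    dest, left, right = tokens[0], tokens[2], tokens[4]
    if not all(name.isidentifier() for name in (dest, left, right)):
        raise SyntaxError("operands must be names")
    return [f"MOVF {left},r1", f"MOVF {right},r2", "ADDF r1,r2", f"MOVF r2,{dest}"]


tokens = scan("A=B+C;")
print(tokens)                       # ['A', '=', 'B', '+', 'C', ';']
print("\n".join(generate(tokens)))  # the four instructions from the sample column
```

A real compiler would build the parse tree shown in the parser row between these two steps; here the fixed statement shape stands in for it.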
