Professional Documents

Culture Documents

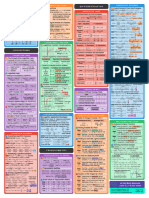

Math 156 Final Cheat Sheet

Uploaded by shreya

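Integrals

IBP: $\int u \frac{dv}{dx}\,dx = uv - \int v \frac{du}{dx}\,dx$

Reverse Chain Rule: $\int \frac{f'(x)}{f(x)}\,dx = \ln|f(x)| + C$

L'Hopital's rule

If $\lim_{x \to a} \frac{f(x)}{g(x)}$ equals $\frac{0}{0}$ or $\frac{\infty}{\infty}$, then $\lim_{x \to a} \frac{f(x)}{g(x)} = \lim_{x \to a} \frac{f'(x)}{g'(x)}$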
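As a quick sanity check on integration by parts, the sketch below compares both sides of the formula numerically. The choice $u = x$, $v = e^x$ on $[0, 1]$ and the helper `integrate` (a plain midpoint rule) are assumptions made for illustration; they are not part of the original sheet.

```python
import math

def integrate(f, a, b, n=10_000):
    """Approximate the integral of f over [a, b] with the midpoint rule."""
    h = (b - a) / n
    return h * sum(f(a + (i + 0.5) * h) for i in range(n))

# Illustrative choice: u = x and dv/dx = e^x, so v = e^x and du/dx = 1.
a, b = 0.0, 1.0
lhs = integrate(lambda x: x * math.exp(x), a, b)   # integral of u * dv/dx
uv = b * math.exp(b) - a * math.exp(a)             # u*v evaluated from a to b
rhs = uv - integrate(lambda x: math.exp(x), a, b)  # uv - integral of v * du/dx

print(lhs, rhs)  # both values are close to 1
```

For a definite integral the $uv$ term is evaluated at the two limits, which is why `uv` is computed as a difference.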

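Double Integrals

1. $\int_c^d \int_a^b f(x, y)\,dx\,dy = \int_a^b \int_c^d f(x, y)\,dy\,dx$

2. $\int_c^d \int_a^b f(y)\,dx\,dy = (b - a) \int_c^d f(y)\,dy$

3. $\int_c^d \int_a^b f(x)\,dx\,dy = (d - c) \int_a^b f(x)\,dx$

4. $\int_c^d \int_a^b \left[g(x) + h(y)\right] dx\,dy = (d - c) \int_a^b g(x)\,dx + (b - a) \int_c^d h(y)\,dy$

5. $\int_c^d \int_a^b g(x)h(y)\,dx\,dy = \int_a^b g(x)\,dx \int_c^d h(y)\,dy$

Chain Rule

1. Two intermediate and one independent variable

• $w = f(x, y)$

• $x = x(t)$, $y = y(t)$

→ $\frac{dw}{dt} = \frac{\partial f}{\partial x}\frac{dx}{dt} + \frac{\partial f}{\partial y}\frac{dy}{dt}$

2. Two intermediate and two independent variables

• $w = f(x, y)$

• $x = x(r, s)$, $y = y(r, s)$

→ $\frac{\partial w}{\partial r} = \frac{\partial f}{\partial x}\frac{\partial x}{\partial r} + \frac{\partial f}{\partial y}\frac{\partial y}{\partial r}$

→ $\frac{\partial w}{\partial s} = \frac{\partial f}{\partial x}\frac{\partial x}{\partial s} + \frac{\partial f}{\partial y}\frac{\partial y}{\partial s}$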
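The product rule for double integrals (rule 5) can be checked numerically. This is a minimal sketch: the functions $g(x) = x$ on $[0, 2]$ and $h(y) = y^2$ on $[0, 3]$, and the midpoint-rule helpers, are assumptions chosen only for illustration.

```python
def integrate(f, a, b, n=400):
    """Midpoint-rule approximation of the integral of f over [a, b]."""
    h = (b - a) / n
    return h * sum(f(a + (i + 0.5) * h) for i in range(n))

def integrate2d(f, a, b, c, d, n=400):
    """Midpoint-rule approximation of the double integral of f(x, y)
    for x in [a, b] and y in [c, d]."""
    hx, hy = (b - a) / n, (d - c) / n
    total = 0.0
    for j in range(n):
        y = c + (j + 0.5) * hy
        for i in range(n):
            x = a + (i + 0.5) * hx
            total += f(x, y)
    return total * hx * hy

g = lambda x: x        # illustrative g(x)
h = lambda y: y * y    # illustrative h(y)

double = integrate2d(lambda x, y: g(x) * h(y), 0.0, 2.0, 0.0, 3.0)
product = integrate(g, 0.0, 2.0) * integrate(h, 0.0, 3.0)

print(double, product)  # both approximate the exact value 2 * 9 = 18
```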

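Taylor Polynomials

Definition: $f(x) = \sum_{n=0}^{\infty} \frac{f^{(n)}(a)}{n!}(x - a)^n$

Partial Derivatives

If $\frac{\partial^2 f}{\partial x \partial y}$, $\frac{\partial^2 f}{\partial y \partial x}$ are continuous, then $\frac{\partial^2 f}{\partial x \partial y} = \frac{\partial^2 f}{\partial y \partial x}$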
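To see the Taylor definition in action, the sketch below truncates the series for $e^x$ after a chosen order. Picking $f(x) = e^x$ is an assumption for illustration (it is convenient because every derivative equals $e^x$); the function name `taylor_exp` is hypothetical.

```python
import math

def taylor_exp(x, a=0.0, order=10):
    """Taylor polynomial of e^x about a, keeping terms n = 0..order.

    Every derivative of e^x is e^x, so f^(n)(a) = e^a for all n.
    """
    return sum(
        math.exp(a) * (x - a) ** n / math.factorial(n)
        for n in range(order + 1)
    )

print(taylor_exp(1.0, order=2))   # 1 + 1 + 1/2
print(taylor_exp(1.0, order=10))  # close to math.e
```

Raising `order` adds more terms of the sum, so the approximation near $a$ improves.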

Productivity

Marginal Productivity of labour: $\frac{\partial f}{\partial x}$

Marginal Productivity of capital: $\frac{\partial f}{\partial y}$

Total Differential

Formula: $dz = \frac{\partial f}{\partial x}(x, y)\,dx + \frac{\partial f}{\partial y}(x, y)\,dy$

• dx = change in x

• dy = change in y

• dz = approximate change in function
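The total differential can be compared with the actual change in a function. The sketch below assumes a hypothetical production function $f(x, y) = \sqrt{xy}$ (a Cobb-Douglas form, with $x$ as labour and $y$ as capital); neither the function nor the numbers come from the original sheet.

```python
import math

def f(x, y):
    # Hypothetical production function: x = labour, y = capital.
    return math.sqrt(x * y)

def f_x(x, y):
    # Partial derivative with respect to x (marginal productivity of labour).
    return 0.5 * math.sqrt(y / x)

def f_y(x, y):
    # Partial derivative with respect to y (marginal productivity of capital).
    return 0.5 * math.sqrt(x / y)

def total_differential(x, y, dx, dy):
    """dz = f_x(x, y) dx + f_y(x, y) dy."""
    return f_x(x, y) * dx + f_y(x, y) * dy

x, y, dx, dy = 4.0, 9.0, 0.1, -0.2
dz = total_differential(x, y, dx, dy)
actual = f(x + dx, y + dy) - f(x, y)

print(dz, actual)  # dz approximates the actual change for small dx, dy
```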

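Lagrange Multipliers

• Find all $(x, y, \lambda)$ that satisfy:

→ $\nabla f(x, y) = \lambda \nabla g(x, y)$

→ $g(x, y) = 0$

• Compare $\frac{\partial f}{\partial x}(x, y) = \lambda \frac{\partial g}{\partial x}(x, y)$ and $\frac{\partial f}{\partial y}(x, y) = \lambda \frac{\partial g}{\partial y}(x, y)$ with $g(x, y) = 0$

Gradient

For a function in two variables, the gradient is $\nabla f = \left(\frac{\partial f}{\partial x}, \frac{\partial f}{\partial y}\right)$

Present Value

| Present Value | Finite Term on $[0, T]$ | Forever on $[0, \infty)$ |
|---|---|---|
| General Income Stream $R(t)$ | $\int_0^T R(t)e^{-rt}\,dt$ | $\int_0^\infty R(t)e^{-rt}\,dt$ |
| $m$ equal payments per year, amount $P$ each | $\frac{mP}{r}\left(1 - e^{-rT}\right)$ | $\frac{mP}{r}$ |

• r is the rate of interest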
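The closed form for equal payments is the general income-stream integral with the constant stream $R(t) = mP$. A minimal sketch of that relationship follows; the function names and the numbers ($m = 12$, $P = 100$, $r = 0.05$, $T = 10$) are assumptions for illustration.

```python
import math

def pv_stream(R, r, T, n=100_000):
    """Midpoint-rule approximation of the integral of R(t) * e^(-r t) over [0, T]."""
    h = T / n
    return h * sum(
        R((i + 0.5) * h) * math.exp(-r * (i + 0.5) * h) for i in range(n)
    )

def pv_equal_payments(m, P, r, T=None):
    """Closed form for m payments of P per year at interest rate r.

    Finite term T: (mP / r) * (1 - e^(-r T)).  T=None means forever: mP / r.
    """
    if T is None:
        return m * P / r
    return m * P / r * (1.0 - math.exp(-r * T))

m, P, r, T = 12, 100.0, 0.05, 10.0
numeric = pv_stream(lambda t: m * P, r, T)
closed = pv_equal_payments(m, P, r, T)

print(numeric, closed)               # the two values agree closely
print(pv_equal_payments(m, P, r))    # perpetuity value mP / r
```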

Determining Relative Extrema

1. Find critical points (a, b) by solving both:

• $\frac{\partial f}{\partial x}(a, b) = 0$

• $\frac{\partial f}{\partial y}(a, b) = 0$

2. Second Derivative Test: $D(x, y) = f_{xx} f_{yy} - (f_{xy})^2$

• $D(a, b) > 0$ and $f_{xx}(a, b) < 0$: Relative Max at (a, b)

• $D(a, b) > 0$ and $f_{xx}(a, b) > 0$: Relative Min at (a, b)

• $D(a, b) < 0$: Saddle Point

• $D(a, b) = 0$: Inconclusive.

Expected Value

The probability-weighted average of a random variable.

1. $E(x) = \int_{-\infty}^{\infty} x f(x)\,dx$

Variance

1. $Var(x) = E[x^2] - (E[x])^2$ (easier)

2. $Var(x) = E[(x - E[x])^2]$

3. $Var(x) = \sigma^2$
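The expected value integral and the two variance formulas can be checked against each other numerically. The density $f(x) = 2x$ on $[0, 1]$ and the midpoint-rule helper are assumptions chosen for illustration; for this density $E[x] = 2/3$ and $Var(x) = 1/18$.

```python
def integrate(f, a, b, n=10_000):
    """Midpoint-rule approximation of the integral of f over [a, b]."""
    h = (b - a) / n
    return h * sum(f(a + (i + 0.5) * h) for i in range(n))

def pdf(x):
    # Illustrative density: f(x) = 2x on [0, 1], zero elsewhere.
    return 2.0 * x if 0.0 <= x <= 1.0 else 0.0

# The density vanishes outside [0, 1], so integrating there is enough.
mean = integrate(lambda x: x * pdf(x), 0.0, 1.0)               # E[x]
second_moment = integrate(lambda x: x * x * pdf(x), 0.0, 1.0)  # E[x^2]

var_shortcut = second_moment - mean ** 2                       # E[x^2] - (E[x])^2
var_definition = integrate(lambda x: (x - mean) ** 2 * pdf(x), 0.0, 1.0)

print(mean, var_shortcut, var_definition)
```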

EV Properties

1. $E(c) = c$

2. $E(ax) = aE(x)$

3. $E(x + a) = E(x) + a$

4. $E(x + y) = E(x) + E(y)$

5. If x and y independent:

• $E(xy) = E(x)E(y)$

6. $E(E(x)) = E(x)$

Var Properties

1. $Var(x \pm a) = Var(x)$

2. $Var(ax) = a^2 Var(x)$

3. $E(x^2) = Var(x) + E(x)^2$

4. If x and y independent:

• $Var(x + y) = Var(x) + Var(y)$
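Several of these properties can be verified on a small discrete example. In the sketch below, `xs` is a hypothetical list of equally likely outcomes and `a` a hypothetical constant; `fmean` and `pvariance` from Python's `statistics` module then play the roles of $E$ and $Var$.

```python
import math
from statistics import fmean, pvariance

xs = [1.0, 2.0, 4.0, 7.0]  # hypothetical equally likely outcomes of x
a = 3.0                    # hypothetical constant

E = fmean        # expected value of equally likely outcomes
Var = pvariance  # population variance of equally likely outcomes

# E(ax) = a E(x) and E(x + a) = E(x) + a
assert math.isclose(E([a * x for x in xs]), a * E(xs))
assert math.isclose(E([x + a for x in xs]), E(xs) + a)

# Var(x + a) = Var(x) and Var(ax) = a^2 Var(x)
assert math.isclose(Var([x + a for x in xs]), Var(xs))
assert math.isclose(Var([a * x for x in xs]), a ** 2 * Var(xs))

# E(x^2) = Var(x) + E(x)^2
assert math.isclose(E([x * x for x in xs]), Var(xs) + E(xs) ** 2)

print(E(xs), Var(xs))  # 3.5 5.25
```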

PDF

1. $f(x) \geq 0$ for all $x$

2. $\int_{-\infty}^{\infty} f(x)\,dx = 1$

Joint PDF

1. $f(x, y) \geq 0$ for all $x, y$

2. $\int_{-\infty}^{\infty} \int_{-\infty}^{\infty} f(x, y)\,dx\,dy = 1$

Cumulative Distribution Function (CDF)

Represents the probability that x is less than or equal to t, where $f(x)$ is a PDF.

$F(t) = P(x \leq t) = \int_{-\infty}^{t} f(x)\,dx$

CDF Properties:

1. $0 \leq F(x) \leq 1$

2. $F'(x) = f(x) \geq 0$

3. $F(x)$ non-decreasing

4. $\lim_{x \to -\infty} F(x) = 0$ and $\lim_{x \to \infty} F(x) = 1$

5. $P(a < x \leq b) = F(b) - F(a)$
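The link between a PDF and its CDF can be sketched by integrating a density numerically. The exponential density with rate 2 is an assumption chosen because its CDF, $1 - e^{-2t}$, is known in closed form; `RATE`, `pdf`, and `cdf` are hypothetical names.

```python
import math

RATE = 2.0  # hypothetical rate parameter of an exponential distribution

def pdf(x):
    # Exponential density: RATE * e^(-RATE * x) for x >= 0, zero otherwise.
    return RATE * math.exp(-RATE * x) if x >= 0 else 0.0

def cdf(t, n=10_000):
    """F(t) = integral of the pdf up to t (midpoint rule).

    The density is zero for x < 0, so the integral starts at 0.
    """
    if t <= 0:
        return 0.0
    h = t / n
    return h * sum(pdf((i + 0.5) * h) for i in range(n))

a, b = 0.5, 1.0
prob = cdf(b) - cdf(a)  # P(a < x <= b) = F(b) - F(a)

print(cdf(b), 1.0 - math.exp(-RATE * b))  # numeric vs closed-form F(1)
print(prob)
```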

Covariance

1. $Cov(x, y) = E[(x - E(x))(y - E(y))]$

2. Symmetry:

• $Cov(x, y) = Cov(y, x)$

• $\rho(x, y) = \rho(y, x)$

3. Linearity:

• $Cov(ax_1 + bx_2 + c, y) = aCov(x_1, y) + bCov(x_2, y)$

4. $Cov(x, x) = Var(x)$

5. $Var(x + y) = Var(x) + Var(y) + 2Cov(x, y)$

Correlation

The correlation is a measure of the degree to which large values of X tend to be associated with large values of Y.

$\rho(x, y) = \frac{Cov(x, y)}{\sqrt{Var(x)Var(y)}}$, with $-1 \leq \rho(x, y) \leq 1$

Variance and Standard Deviation (σ)

1a. $Var(cx) = c^2 Var(x)$

1b. $\sigma(cx) = |c|\sigma(x)$

2a. $Var(c) = 0$

2b. $\sigma(c) = 0$

3a. $Var(x + c) = Var(x)$

3b. $\sigma(x + c) = \sigma(x)$
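The covariance and correlation formulas can be tried on paired data. The sketch below treats `xs` and `ys` as hypothetical equally likely paired outcomes and implements the definitions directly; the helper names are illustrative.

```python
import math
from statistics import fmean

def cov(xs, ys):
    """Cov(x, y) = E[(x - E(x)) (y - E(y))] for equally likely paired outcomes."""
    mx, my = fmean(xs), fmean(ys)
    return fmean([(x - mx) * (y - my) for x, y in zip(xs, ys)])

def var(xs):
    """Var(x) = Cov(x, x)."""
    return cov(xs, xs)

def corr(xs, ys):
    """Correlation: Cov(x, y) / sqrt(Var(x) Var(y))."""
    return cov(xs, ys) / math.sqrt(var(xs) * var(ys))

xs = [1.0, 2.0, 3.0, 4.0]  # hypothetical outcomes of x
ys = [2.0, 1.0, 4.0, 5.0]  # hypothetical paired outcomes of y

sums = [x + y for x, y in zip(xs, ys)]
lhs = var(sums)                                # Var(x + y)
rhs = var(xs) + var(ys) + 2.0 * cov(xs, ys)    # Var(x) + Var(y) + 2 Cov(x, y)

print(cov(xs, ys), corr(xs, ys))
print(lhs, rhs)
```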

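Normal Distribution

The most important distribution.

PDF of a normal distribution:

$f(x) = \frac{1}{\sigma\sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{x - \mu}{\sigma}\right)^2}$, $-\infty < x < \infty$

Transformation

A normally distributed random variable $x$ can be transformed into a standard normal by:

$z = \frac{x - \mu}{\sigma}$

$P(a \leq x \leq b) = P\left(\frac{a - \mu}{\sigma} \leq z \leq \frac{b - \mu}{\sigma}\right)$

Markov's Inequality

If $x \geq 0$ and $c > 0$, then

$P(x \geq c) \leq \frac{E(x)}{c}$

Chebyshev's Inequality:

If $x$ is a r.v. with a finite mean $\mu$ and variance $\sigma^2$, then for any number $\varepsilon > 0$:

$P(|x - \mu| \geq \varepsilon) \leq \frac{\sigma^2}{\varepsilon^2}$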
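The standardization $z = (x - \mu)/\sigma$ and Chebyshev's bound can be compared on a normal example. In this sketch the standard normal CDF is written with `math.erf`, and the values $\mu = 100$, $\sigma = 15$, $\varepsilon = 30$ are assumptions for illustration.

```python
import math

def std_normal_cdf(z):
    """CDF of the standard normal distribution, written with the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def normal_prob(a, b, mu, sigma):
    """P(a <= x <= b) for a normal x, using z = (x - mu) / sigma."""
    return std_normal_cdf((b - mu) / sigma) - std_normal_cdf((a - mu) / sigma)

def chebyshev_bound(sigma, eps):
    """Upper bound sigma^2 / eps^2 on P(|x - mu| >= eps)."""
    return sigma ** 2 / eps ** 2

mu, sigma, eps = 100.0, 15.0, 30.0  # hypothetical parameters

within_one_sigma = normal_prob(mu - sigma, mu + sigma, mu, sigma)
tail = 1.0 - normal_prob(mu - eps, mu + eps, mu, sigma)  # P(|x - mu| >= eps)
bound = chebyshev_bound(sigma, eps)

print(within_one_sigma)  # roughly 0.68
print(tail, bound)       # the exact tail is well below the Chebyshev bound
```

Chebyshev's inequality holds for any distribution with finite mean and variance, so for a specific distribution such as the normal the bound is usually far from tight.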
