Statistical Analysis in Method Comparison Studies Part One

May 2016

by Ana-Maria Simundic

University Department of Chemistry, University Hospital SESTRE MILOSRDNICE
School of Medicine, Faculty of Pharmacy and Biochemistry, Zagreb University
Vinogradska 29, 10 000 Zagreb, CROATIA

Summary

This first of two articles on method comparison studies gives some key concepts related to the design of the method comparison study, data analysis and graphical presentation, stressing the importance of a well-designed and carefully planned experiment using adequate statistical procedures for data analysis when carrying out a method comparison.

Method comparison is commonly performed by laboratory specialists to assess the

comparability of two methods.

The quality of a method comparison study determines the quality of the results and the validity of the conclusions. The key to a successful method comparison is therefore a well-designed and carefully planned experiment.

The question to be answered by the method comparison is whether two methods could

be used interchangeably without affecting patient results and patient outcome.

In other words, by comparing two methods we are looking for a potential bias between methods.

If the bias is larger than acceptable, the methods are different and cannot be used interchangeably. It is important to understand why bias cannot be adequately assessed by correlation analysis or by performing a t-test.

It is also important to be aware of the importance of graphical presentation of the data (scatter plots and difference plots) as a first step in data analysis.

Graphical presentation of the data will ensure that outliers and extreme values are

detected.

This article will provide some key concepts related to the design of the method

comparison study, data analysis and graphical presentation.

Passing-Bablok and Deming regression are going to be covered in the subsequent article

(part two).

Introduction

One of the important aspects of method verification is the assessment of method trueness.

Method trueness can be assessed either by following the CLSI EP15-A2 standard, which defines the procedure for the verification of performance for precision and trueness, or the CLSI EP09-A3 standard, which provides guidance on how to estimate the bias by comparison of measurement procedures using patient samples [1,2].

The CLSI EP09-A3 standard also defines several statistical procedures which can be used to describe and analyze the data.

The choice of correct statistical procedures for data analysis and knowledge about how to interpret the results of statistical analysis are of key importance for proper assessment of the method trueness.

This article provides insight into the proper design of the method comparison study and some basic considerations about the initial steps in data analysis and graphical presentation (scatter and difference plots).

The following article will address statistical methods used in method comparison studies

(Passing-Bablok and Deming regression).

Study design

A method comparison study assesses the degree of agreement between the method currently used in the laboratory and the new method.

A method comparison study is done whenever a new method is introduced to replace the existing method in the laboratory.

The aim of the method comparison experiment is to evaluate the possible difference

between these methods (the old one and the new one) and to ensure that the change of

methods is not going to affect patient results and medical decisions based on these.

At least 40 and preferably 100 patient samples should be used to compare two

methods.

Larger sample size is preferable to identify unexpected errors due to interferences or

sample matrix effects.

Samples should be selected with great care, taking into account the following:

- cover the entire clinically meaningful measurement range
- whenever possible, perform duplicate measurements for both the current and the new method to minimize random variation effect
- randomize the sample sequence to avoid carry-over effect
- analyze samples within the period of their stability (preferably within the time span of 2 hours)
- analyze samples on the day of the blood sampling
- measure samples over several days (at least 5) and multiple runs to mimic the real-world situation
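As an illustration of the randomization step in the list above, a run order could be drawn as in the following minimal sketch. The sample identifiers, the function name `randomized_run_order` and the seed are hypothetical; a fixed seed is used only so that the order can be reproduced and documented.

```python
import random


def randomized_run_order(sample_ids, seed=None):
    """Return the sample identifiers in a random measurement order."""
    rng = random.Random(seed)   # separate generator, so the order is reproducible
    order = list(sample_ids)    # copy, so the original list is left untouched
    rng.shuffle(order)
    return order


# Hypothetical identifiers for 40 patient samples (the minimum suggested above).
sample_ids = [f"S{i:03d}" for i in range(1, 41)]
run_order = randomized_run_order(sample_ids, seed=2016)
print(run_order[:5])
```

The same order would typically be used for both methods within a run, but that is a design decision for the laboratory rather than something this sketch enforces.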

Acceptable bias should be defined before the experiment and selection of the

performance specifications should be based on one of the three models in accordance

with the Milano hierarchy [3]:

1. Based on the effect of analytical performance on clinical outcomes (direct or indirect

outcome studies)

2. Based on components of biological variation of the measurand

3. Based on state-of-the-art

Which statistical tests should not be used in method comparison study?

Correlation analysis and the t-test are quite commonly used in the literature as the statistical methods of first choice when assessing the comparability of two methods.

However, it should be emphasized that neither correlation analysis nor the t-test is

adequate and appropriate for that purpose.

Correlation analysis provides evidence for the linear relationship (i.e. association) of two independent parameters, but it cannot be used to detect either proportional or constant bias between two series of measurements.

The degree of association is assessed by the respective correlation coefficient (r) and coefficient of determination (r²). The coefficient of determination defines the degree to which the data fit the linear regression model (how well the data can be explained by the linear relationship).

The greater the r², the higher the association.

The value of correlation coefficient (r) ranges from –1 to +1. The association can be

positive (r>0) and negative (r<0). Negative correlation between two parameters

indicates that the increase of one parameter is associated with the decrease of the

other.

Positive association is present when the increase of one parameter is concomitant with

the increase of the other parameter.

However, the existence of a positive correlation does not mean that the values of these two parameters are comparable, as shown in the example below (Table I):

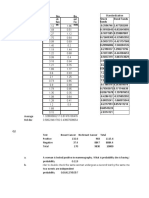

TABLE I: Glucose measurements by two different methods in a series of samples (N=10)

Sample number                           1   2   3   4   5   6   7   8   9  10
Glucose measured by Method 1 (mmol/L)   1   2   3   4   5   6   7   8   9  10
Glucose measured by Method 2 (mmol/L)   5  10  15  20  25  30  35  40  45  50

Let us assume that glucose is measured in 10 patients with one method on one instrument (Method 1) and with another method on another instrument (Method 2).

With the increase of the glucose concentration measured by Method 1 there is an

unquestionable increase of glucose concentration measured by Method 2.

However, there is a large bias between these two methods and it is obvious that they are not comparable, although the coefficient of correlation (r) for these two methods is 1.00 (P<0.001).

Coefficient of correlation shows that these two sets of measurements are in a linear

relationship, which is obvious if we look at Fig. 1. What the correlation analysis did not

detect is the proportional bias between glucose measured by Method 1 and Method 2.

FIG. 1: Scatter diagram showing the linear relationship between Method 1 (met1) and

Method 2 (met2) for glucose measurement (dataset from Table I). Red line shows line of

equality.
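The Table I example can be checked with a few lines of code. The sketch below computes the Pearson correlation coefficient by hand for the ten pairs and then looks at the ratio and the mean difference between the methods; the helper name `pearson_r` is only illustrative.

```python
from math import sqrt

# Data from Table I (glucose, mmol/L).
method1 = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
method2 = [5, 10, 15, 20, 25, 30, 35, 40, 45, 50]


def pearson_r(x, y):
    """Pearson correlation coefficient of two equally long series."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


r = pearson_r(method1, method2)

# Correlation is perfect, yet Method 2 reads five times higher in every sample.
ratios = [b / a for a, b in zip(method1, method2)]
mean_difference = sum(b - a for a, b in zip(method1, method2)) / len(method1)

print(f"r = {r:.2f}")
print(f"Method 2 / Method 1 ratios: {sorted(set(ratios))}")
print(f"mean difference (Method 2 - Method 1) = {mean_difference} mmol/L")
```

A correlation coefficient of 1.00 therefore coexists with a constant ratio of 5 between the methods, which is exactly the proportional bias that correlation analysis cannot reveal.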

Another common mistake is to use t-test to evaluate method comparability. Neither

paired t-test nor t-test for independent samples can reliably assess the comparability of

two series of measurements.

Let us now again assume that glucose is measured in five patients with one method on one instrument (Method 1) and with another method on another instrument (Method 2), and that the results are as presented in the table below (Table II):

TABLE II: Glucose measurements by two different methods in a series of five samples

Sample number                           1   2   3   4   5
Glucose measured by Method 1 (mmol/L)   1   2   3   4   5
Glucose measured by Method 2 (mmol/L)   5   4   3   2   1

If we test these two sets of data with an independent t-test, it will show that there is no difference between these two sets of measurements (P=1.000). This is obviously not true.

Glucose measured by Method 1 and Method 2 are surely not comparable. So, why is

independent t-test not able to detect this? Independent t-test actually only detects

whether two independent sets of measurements have the same or similar average

values.

The averages of five measurements with Method 1 and Method 2 are indeed identical (3

mmol/L) and this is why t-test did not detect the difference between these two sets of

measurements.
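The reasoning above can be reproduced on the Table II data. The following sketch computes the pooled-variance Student's t statistic for two independent samples; the function name `independent_t` is illustrative, and only the test statistic (not the P value) is calculated.

```python
from math import sqrt
from statistics import mean, variance

# Data from Table II (glucose, mmol/L).
method1 = [1, 2, 3, 4, 5]
method2 = [5, 4, 3, 2, 1]


def independent_t(x, y):
    """Student's t statistic for two independent samples (pooled variance)."""
    nx, ny = len(x), len(y)
    pooled = ((nx - 1) * variance(x) + (ny - 1) * variance(y)) / (nx + ny - 2)
    return (mean(x) - mean(y)) / sqrt(pooled * (1 / nx + 1 / ny))


t = independent_t(method1, method2)

# The test only compares the two averages, which are identical here ...
print(f"means: {mean(method1)} vs {mean(method2)}, t = {t}")

# ... while the individual paired results disagree by up to 4 mmol/L.
paired_differences = [a - b for a, b in zip(method1, method2)]
print(f"paired differences: {paired_differences}")
```

A t statistic of zero means the test sees no difference at all, even though only one of the five samples gives the same result with both methods.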

Paired t-test is used to assess whether there is a difference between paired

measurements.

As measurements of one parameter by two different methods (Method 1 and Method 2)

are paired measurements, paired t-test is obviously a better choice to detect the

difference between them.

However, t-test will detect a difference which does not necessarily need to be a clinically

meaningful difference, if the size of the sample is large enough.

On the other hand, if the size of the sample is too small, paired t-test will not detect a

difference between two sets of measurements even if this difference is large and

clinically meaningful, as is shown in the below example (Table III).

According to paired t-test the two series of five glucose measurements measured by two

different methods, are not statistically different (P=0.208), although a mean difference

between the two sets of measurements is greater than clinically acceptable (-10.8%).

TABLE III: Glucose measurements by two different methods in a series of five samples

Sample number       1   2   3   4   5
Method 1 (mmol/L)   2   4   6   8  10
Method 2 (mmol/L)   3   5   7   9   9
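The paired t-test for Table III can be retraced step by step. The sketch below computes the paired t statistic and compares it with 2.776, the two-sided 5 % critical value of Student's t distribution for 4 degrees of freedom, instead of computing the P value itself; the variable names are illustrative.

```python
from math import sqrt
from statistics import mean, stdev

# Data from Table III (glucose, mmol/L).
method1 = [2, 4, 6, 8, 10]
method2 = [3, 5, 7, 9, 9]

# Paired differences, here taken as Method 1 minus Method 2.
differences = [a - b for a, b in zip(method1, method2)]
n = len(differences)

mean_diff = mean(differences)                     # average paired difference
standard_error = stdev(differences) / sqrt(n)     # standard error of that average
t = mean_diff / standard_error                    # paired t statistic, n - 1 = 4 df

T_CRIT = 2.776  # two-sided 5 % critical value of Student's t for 4 degrees of freedom
significant = abs(t) > T_CRIT

print(f"mean difference = {mean_diff:.1f} mmol/L, t = {t:.2f}, significant: {significant}")
```

With only five pairs, |t| = 1.5 stays well below the critical value, so the test reports no significant difference despite an average difference of 0.6 mmol/L.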

Scatter plots

Scatter plots (or scatter diagrams) help in describing the variability in the paired

measurements throughout the range of measured values.

Each pair of measurements is presented with one point, which is defined by the value

on the x axis (usually the reference method) against the measurement with the second

method (usually the comparison method) on the y axis (Fig. 2).

It is advisable, as already mentioned, to perform multiple (duplicate or even triplicate)

measurements to minimize random variation effects.

If a measurement of a certain analyte has been done in duplicate, a mean of two

measurements should be used in plotting the data.

In case three or more measurements have been done for one analyte, a median should

be used instead of the average value.
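The rule for replicate measurements can be written down compactly. This is a minimal sketch, assuming the replicates of one sample by one method are available as a list; the function name `summarize_replicates` and the example values are hypothetical.

```python
from statistics import mean, median


def summarize_replicates(values):
    """Collapse replicate results of one sample into the value to be plotted.

    Mean for single or duplicate results, median for three or more replicates.
    """
    if len(values) >= 3:
        return median(values)
    return mean(values)


# Hypothetical replicate results (mmol/L) for one sample.
duplicate = [4.0, 4.4]
triplicate = [5.1, 5.0, 9.0]   # the median is not pulled up by the aberrant 9.0

print(summarize_replicates(duplicate))
print(summarize_replicates(triplicate))
```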

FIG. 2: The scatter diagram shows the set of paired values for βHCG measured on two different instruments. a) Scatter diagram showing a set of measurements obtained over the broad measurement range of 0-1000 IU/L. b) Scatter diagram showing the results of an invalid method comparison experiment with a gap between βHCG values 200-600 IU/L.

Problems detected with the scatter plot should be dealt with before any other analysis is

done.

In case the data do not cover the entire measurement range, as shown in Fig. 2b, one should go back and perform additional measurements in order to fill this gap.
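A gap of the kind shown in Fig. 2b is usually obvious on the plot, but it can also be located numerically. The sketch below finds the widest interval without any measurement; the function name `largest_gap` and the βHCG values are hypothetical and only mimic the 200-600 IU/L gap described in the figure.

```python
def largest_gap(values):
    """Return (width, lower, upper) of the widest empty interval between results."""
    ordered = sorted(values)
    gaps = [(hi - lo, lo, hi) for lo, hi in zip(ordered, ordered[1:])]
    return max(gaps)


# Hypothetical reference-method results (IU/L) with no samples between 200 and 600.
reference_results = [5, 40, 120, 180, 200, 600, 750, 900, 1000]

width, lower, upper = largest_gap(reference_results)
print(f"widest gap: {lower}-{upper} IU/L ({width} IU/L without any sample)")
```

Whether a gap of a given width is acceptable depends on the measurand and on the clinically meaningful range, so no threshold is built into the sketch.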

Difference plots

Difference plots are commonly used graphical methods aimed at describing the

agreement between two measurement methods in method comparison studies [4].

Difference plots may be constructed so that a) the differences, ratios or percentages

between the methods are plotted on the y axis against the average of the methods on

the x axis (Bland-Altman plot) or so that b) the differences between the methods are

plotted on the y axis against one of two methods on the x axis (Krouwer plot).

Bland-Altman plots are used when neither of the two measurement methods is a reference method or so-called “gold standard” method, whereas Krouwer plots are used when the method plotted on the x axis is a reference method.

Difference plots are used to assess the existence of a significant bias between the two

measurements. If there is a significant bias, difference plots may help to assess how

bias relates to the average value of the two measurements.

If one of the measurements is the reference method or the gold standard, the difference plot may help to assess how the bias relates to the true value of the analyte under investigation. Examples of Bland-Altman and Krouwer plots are shown in Figs. 3 a-d.
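The two kinds of difference plot differ only in what is placed on the x axis. The following sketch builds the plotting coordinates for both, using the Table III data for illustration; the function names are hypothetical, and taking the difference as second method minus first method is an assumed convention.

```python
def bland_altman_points(method_a, method_b):
    """(average of the two methods, difference b - a) for each sample."""
    return [((a + b) / 2, b - a) for a, b in zip(method_a, method_b)]


def bland_altman_percent_points(method_a, method_b):
    """(average, difference as a percentage of the average) for each sample."""
    return [((a + b) / 2, 100 * (b - a) / ((a + b) / 2))
            for a, b in zip(method_a, method_b)]


def krouwer_points(reference, comparison):
    """(reference method result, difference comparison - reference) for each sample."""
    return [(r, c - r) for r, c in zip(reference, comparison)]


# Table III data (glucose, mmol/L), with Method 1 treated as the reference
# in the Krouwer variant purely for the sake of the example.
method1 = [2, 4, 6, 8, 10]
method2 = [3, 5, 7, 9, 9]

print(bland_altman_points(method1, method2))
print(krouwer_points(method1, method2))
```

The resulting pairs can be passed to any plotting tool, with the first element on the x axis and the second on the y axis.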

FIG. 3: Various types of difference plot. a-b) Bland-Altman plots showing the difference (a) and percentage of the difference (b) between the methods plotted on the y axis against the average of the methods on the x axis. c-d) Krouwer plots showing the differences between the methods (c) and percentage of the difference (d) between the methods plotted on the y axis against one method (the reference method).

Legend: Solid blue horizontal line shows the mean difference, dotted green line shows

the 95 % confidence interval of the mean difference, red dotted lines show limits of

agreement (±1.96 standard deviation of the differences) and thin red dotted line shows

the line of equality (zero difference).
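The horizontal lines described in the legend are simple functions of the paired differences. A minimal sketch of the mean difference and the limits of agreement follows; the function name `bias_and_limits` and the differences are hypothetical illustration data.

```python
from statistics import mean, stdev


def bias_and_limits(differences):
    """Mean difference and limits of agreement (mean +/- 1.96 SD of the differences)."""
    bias = mean(differences)
    sd = stdev(differences)   # sample standard deviation of the differences
    return bias, (bias - 1.96 * sd, bias + 1.96 * sd)


# Hypothetical paired differences between two methods (mmol/L).
differences = [0.2, -0.1, 0.3, 0.0, 0.1, -0.2, 0.4, 0.1]

bias, (lower_loa, upper_loa) = bias_and_limits(differences)
print(f"mean difference = {bias:.2f}")
print(f"limits of agreement = {lower_loa:.2f} to {upper_loa:.2f}")
```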

As already stated above, difference plots are helpful in determining whether there is some bias between the methods and, if the bias exists, in evaluating how it relates to the average value of the two measurements.

Bias between two measurements can be random, proportional and constant. Bland-Altman plots showing random, proportional and constant bias are presented in Figs. 4 a-c.

Confidence limits of the bias depend on the number of measurements and the variability

of the measurements. The greater the number of measurements is, the narrower is the

95 % confidence interval of the mean difference.

Also, the greater the variability of the measurements, the broader will be the 95 %

confidence interval of the mean difference.
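Both dependencies can be read directly from the usual expression for the confidence interval of a mean. The notation below is introduced here for illustration and is not part of the original article: $d_i$ are the paired differences, $n$ is their number, $\bar{d}$ is the mean difference and $s_d$ is the standard deviation of the differences.

```latex
\bar{d} \pm t_{0.975,\,n-1}\,\frac{s_d}{\sqrt{n}},
\qquad
\bar{d} = \frac{1}{n}\sum_{i=1}^{n} d_i,
\qquad
s_d = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n}\left(d_i - \bar{d}\right)^2}
```

The half-width of the interval grows in proportion to $s_d$ and shrinks roughly with $1/\sqrt{n}$, so four times as many samples are needed to halve it.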

When interpreting the bias, one also needs to keep in mind that bias is statistically

significant only if the line of equality (zero difference) is not within the 95 % confidence

limits of the bias.

Of course, statistical significance of the bias does not provide evidence for its clinical

significance. As already pointed out in the beginning of this article, clinical significance

can only be assessed by evaluating the difference with the acceptance criteria.
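The distinction between statistical and clinical significance can be made explicit as two separate checks. In this sketch the function name `interpret_bias`, the acceptance limit of 0.3 mmol/L and the comparison of the point estimate of the bias with that limit are all assumptions made for illustration; the first example uses the Table III mean difference with its approximate 95 % confidence interval, the second is hypothetical.

```python
def interpret_bias(bias, ci_low, ci_high, acceptable_bias):
    """Return (statistically_significant, clinically_acceptable).

    Statistically significant: zero lies outside the 95 % confidence interval.
    Clinically acceptable: the absolute bias does not exceed the predefined limit.
    """
    statistically_significant = not (ci_low <= 0.0 <= ci_high)
    clinically_acceptable = abs(bias) <= acceptable_bias
    return statistically_significant, clinically_acceptable


# Table III: bias -0.6 mmol/L, 95 % CI roughly -1.7 to 0.5 (n = 5).
# Not statistically significant, yet larger than the assumed limit.
small_study = interpret_bias(-0.6, -1.7, 0.5, acceptable_bias=0.3)

# Hypothetical large study: tiny bias, narrow CI that excludes zero.
# Statistically significant, yet well within the assumed limit.
large_study = interpret_bias(0.05, 0.02, 0.08, acceptable_bias=0.3)

print(small_study, large_study)
```

The two outcomes are independent of each other, which is why the acceptance criteria have to be defined before the experiment and applied separately from any significance test.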

Conclusions

Method comparison should be based on a carefully planned study. A properly designed experiment and adequate statistical procedures for data analysis are the key to valid method comparison and reliable assessment of method trueness.

When performing a method comparison, the following requirements must be kept in mind:

- Correlation analysis and t-test are not appropriate methods for analyzing the comparability of measurements.
- Before data analysis, paired measurements should be graphically presented using the scatter plot. The scatter plot makes it possible to detect outliers as well as any interval of values which is not covered by the analysis. Before any further analysis, additional experiments should be done to ensure that the entire clinically meaningful measurement range is covered.
- To detect the existence of bias, difference plots are used (Bland-Altman and Krouwer plots). Difference plots may detect the existence of significant bias between the two measurements and how it relates to the average value of the two measurements.
- When interpreting the bias, one must always take into account the clinically meaningful limits which must be set before the experiment and should be based on the Milano hierarchy (clinical outcomes, biological variation or state-of-the-art) [3].

The following article (Statistical analysis in method comparison studies – Part two) will

address the proper use of statistical methods used in method comparison studies

(Passing-Bablok and Deming regression) by providing practical examples and guidance

on how to perform the analysis and how to interpret results obtained by the statistical

analysis.

References

1. Clinical and Laboratory Standards Institute. User Verification of Performance for Precision and Trueness; Approved Guideline—Second Edition. CLSI document EP15-A2. Clinical and Laboratory Standards Institute, Wayne, Pennsylvania, USA, 2005.

2. Clinical and Laboratory Standards Institute. Measurement procedure comparison and bias estimation using patient samples; approved guideline—Third Edition. CLSI document EP09-A3. Clinical and Laboratory Standards Institute, Wayne, PA, USA, 2013.

3. Sandberg S, Fraser CG, Horvath AR, Jansen R, Jones G, Oosterhuis W, Petersen PH, Schimmel H, Sikaris K, Panteghini M. Defining analytical performance specifications: Consensus Statement from the 1st Strategic Conference of the European Federation of Clinical Chemistry and Laboratory Medicine. Clin Chem Lab Med 2015; 53, 6: 833-35.

4. Giavarina D. Understanding Bland Altman analysis. Biochem Med 2015; 25, 2: 141-51.


© 2022 Radiometer Medical ApS | Åkandevej 21 | DK-2700 | Brønshøj | Denmark | Phone +45 3827 3827 | CVR no. 27509185
