
Solution 5


Uploaded by chandraadityaoffl

The document discusses various concepts related to linear algebra and machine learning, including:

1) Eigenvalues are the solutions of the characteristic equation det(A − λI) = 0.

2) Eigenvalues of a Hermitian matrix are real but not necessarily positive.

3) The eigenvalues of a sample matrix are found by solving its characteristic equation.

4) Pseudo-inverses and matrix operations are described.


Copyright: © All Rights Reserved


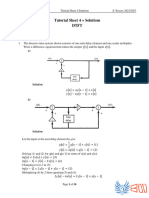

1. The eigenvalues $\lambda$ are the solutions of the characteristic equation

$$\det(\mathbf{A} - \lambda\mathbf{I}) = 0$$

Ans b

2. The eigenvalues of a Hermitian symmetric matrix are real, but not necessarily positive.

Ans c
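As a quick numerical check of this property, the sketch below (Python, standard library only) evaluates the closed-form eigenvalues of a 2×2 Hermitian matrix. The helper name `hermitian_2x2_eigenvalues` and the example matrix are illustrative assumptions, not part of the original question.

```python
import math


def hermitian_2x2_eigenvalues(a, b, d):
    """Eigenvalues of the Hermitian matrix [[a, b], [conj(b), d]], with a and d real."""
    # Closed form: lambda = (a + d)/2 +/- sqrt(((a - d)/2)^2 + |b|^2).
    # The radicand is a sum of squares, so it is never negative: both roots are real.
    mean = (a + d) / 2
    radius = math.sqrt(((a - d) / 2) ** 2 + abs(b) ** 2)
    return mean + radius, mean - radius


# H = [[1, 2j], [-2j, 1]] is Hermitian; its eigenvalues are real, but one is negative.
print(hermitian_2x2_eigenvalues(1.0, 2j, 1.0))  # (3.0, -1.0)
```

The example matrix has eigenvalues 3 and −1, which is exactly why the answer is "real but not necessarily positive".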

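3. The eigenvalues of the matrix $\mathbf{A} = \begin{bmatrix} 2 & 3 \\ 2 & 1 \end{bmatrix}$ are found from its characteristic equation:

$$\mathbf{A} - \lambda\mathbf{I} = \begin{bmatrix} 2-\lambda & 3 \\ 2 & 1-\lambda \end{bmatrix} \;\Rightarrow\; |\mathbf{A} - \lambda\mathbf{I}| = (2-\lambda)(1-\lambda) - 6 = 0$$

$$\Rightarrow\; \lambda^2 - 3\lambda - 4 = 0 \;\Rightarrow\; (\lambda - 4)(\lambda + 1) = 0 \;\Rightarrow\; \lambda = 4,\ -1$$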

Ans a
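For any 2×2 matrix the characteristic equation reduces to $\lambda^2 - \operatorname{tr}(\mathbf{A})\,\lambda + \det(\mathbf{A}) = 0$. A minimal Python sketch of that shortcut, assuming real eigenvalues (the helper name `eigenvalues_2x2` is hypothetical):

```python
import math


def eigenvalues_2x2(a, b, c, d):
    """Real eigenvalues of [[a, b], [c, d]] from det(A - lambda*I) = 0."""
    # det(A - lambda I) = (a - lambda)(d - lambda) - b*c
    #                   = lambda^2 - (a + d) lambda + (a d - b c)
    trace = a + d
    det = a * d - b * c
    disc = trace ** 2 - 4 * det
    if disc < 0:
        raise ValueError("complex eigenvalues; this sketch handles only the real case")
    root = math.sqrt(disc)
    return (trace + root) / 2, (trace - root) / 2


lams = eigenvalues_2x2(2, 3, 2, 1)
print(lams)  # (4.0, -1.0)

# Each root makes A - lambda*I singular: (2 - lambda)(1 - lambda) - 6 = 0.
for lam in lams:
    assert (2 - lam) * (1 - lam) - 3 * 2 == 0
```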

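4. The pseudo-inverse of the matrix $\mathbf{A}$ can be evaluated as follows:

$$(\mathbf{A}^T\mathbf{A})^{-1} = \left( \begin{bmatrix} 1 & 1 & 1 & 1 \\ 1 & -1 & -1 & 1 \end{bmatrix} \begin{bmatrix} 1 & 1 \\ 1 & -1 \\ 1 & -1 \\ 1 & 1 \end{bmatrix} \right)^{-1} = \begin{bmatrix} 4 & 0 \\ 0 & 4 \end{bmatrix}^{-1} = \frac{1}{4}\begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix}$$

$$(\mathbf{A}^T\mathbf{A})^{-1}\mathbf{A}^T = \frac{1}{4}\begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix} \begin{bmatrix} 1 & 1 & 1 & 1 \\ 1 & -1 & -1 & 1 \end{bmatrix} = \frac{1}{4}\begin{bmatrix} 1 & 1 & 1 & 1 \\ 1 & -1 & -1 & 1 \end{bmatrix}$$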

Ans b
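A minimal Python sketch (standard library only) of the left pseudo-inverse $(\mathbf{A}^T\mathbf{A})^{-1}\mathbf{A}^T$ for the 4×2 matrix of question 4. The helper names are hypothetical, and `inv_2x2` assumes $\mathbf{A}$ has exactly two linearly independent columns; `Fraction` keeps the factor 1/4 exact.

```python
from fractions import Fraction


def transpose(M):
    return [list(row) for row in zip(*M)]


def matmul(X, Y):
    # Row-by-column product; assumes len(X[0]) == len(Y).
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]


def inv_2x2(M):
    # Inverse via the adjugate formula; Fraction keeps the entries exact.
    (a, b), (c, d) = M
    det = Fraction(a * d - b * c)
    return [[d / det, -b / det], [-c / det, a / det]]


def left_pseudo_inverse(A):
    """(A^T A)^{-1} A^T for a tall matrix with two linearly independent columns."""
    At = transpose(A)
    return matmul(inv_2x2(matmul(At, A)), At)


A = [[1, 1], [1, -1], [1, -1], [1, 1]]
P = left_pseudo_inverse(A)
q = Fraction(1, 4)
assert P == [[q, q, q, q], [q, -q, -q, q]]  # (1/4) A^T, as in the solution
assert matmul(P, A) == [[1, 0], [0, 1]]     # P A = I (left inverse)
```

Because $\mathbf{A}^T\mathbf{A} = 4\mathbf{I}$ here, the pseudo-inverse is simply $\tfrac{1}{4}\mathbf{A}^T$; the general formula only matters when the columns are not orthogonal.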

5. The eigenvalues $\lambda_i$ of a unitary matrix $\mathbf{U}$ satisfy the property $|\lambda_i| = 1$.

Ans c
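A small numerical illustration, assuming a 2×2 rotation matrix as the example unitary matrix (real orthogonal matrices are unitary). The angle and the helper name `eigenvalues_2x2` are illustrative assumptions; `cmath.sqrt` is used because the eigenvalues here are complex, $\cos\theta \pm j\sin\theta$.

```python
import cmath
import math


def eigenvalues_2x2(a, b, c, d):
    """Roots of lambda^2 - (a + d)*lambda + (a*d - b*c) = 0, allowing complex values."""
    trace = a + d
    det = a * d - b * c
    root = cmath.sqrt(trace * trace - 4 * det)  # complex square root
    return (trace + root) / 2, (trace - root) / 2


theta = 0.7  # illustrative rotation angle (assumption)
cos_t, sin_t = math.cos(theta), math.sin(theta)
U = [[cos_t, -sin_t], [sin_t, cos_t]]  # rotation matrix: real orthogonal, hence unitary

lams = eigenvalues_2x2(U[0][0], U[0][1], U[1][0], U[1][1])
for lam in lams:
    # lam = cos(theta) +/- j sin(theta), so |lam| = 1 up to floating-point rounding
    print(lam, abs(lam))
```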

6. Given $h_1 = 1 - j$ and $h_2 = -1 - j$, the effective channel matrix for this system is

$$\begin{bmatrix} h_1 & h_2 \\ h_2^* & -h_1^* \end{bmatrix} = \begin{bmatrix} 1 - j & -1 - j \\ -1 + j & -1 - j \end{bmatrix}$$

Ans d
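The matrix in question 6 has the structure of the effective channel of the Alamouti space-time block code; assuming that is the system in question, a minimal sketch can build it from $h_1, h_2$ and confirm that its columns are orthogonal, i.e. $\mathbf{H}^H\mathbf{H} = (|h_1|^2 + |h_2|^2)\mathbf{I} = 4\mathbf{I}$ for these values. The function names are hypothetical.

```python
def effective_channel(h1, h2):
    """Effective channel [[h1, h2], [conj(h2), -conj(h1)]]."""
    return [[h1, h2], [h2.conjugate(), -h1.conjugate()]]


def hermitian_product(H):
    # Computes H^H H for a 2x2 complex matrix (conjugate transpose times H).
    return [[sum(H[k][i].conjugate() * H[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


h1, h2 = 1 - 1j, -1 - 1j
H = effective_channel(h1, h2)
print(H)  # [[(1-1j), (-1-1j)], [(-1+1j), (-1-1j)]]

G = hermitian_product(H)
# Off-diagonal entries vanish (orthogonal columns); diagonal = |h1|^2 + |h2|^2 = 4.
assert G == [[4, 0], [0, 4]]
```

The orthogonality is what lets a receiver separate the two transmitted symbols with a simple matched filter $\mathbf{H}^H$.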

7. The picture shown corresponds to a regressor.

Ans a

8. Principal Component Analysis (PCA) is used in machine learning for dimensionality reduction.

Ans a
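To illustrate what "dimensionality reduction" means for PCA, here is a small sketch that projects 2-D points onto their first principal component (2 → 1 dimensions) using the closed-form eigen-decomposition of a 2×2 covariance matrix. The data set, the 1/n normalisation and the function name are assumptions chosen for illustration; in practice a linear-algebra library would normally be used.

```python
import math


def pca_2d_to_1d(points):
    """Project 2-D points onto their first principal component."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    centered = [(x - mx, y - my) for x, y in points]
    # Covariance entries (1/n normalisation, for simplicity).
    a = sum(x * x for x, _ in centered) / n
    b = sum(x * y for x, y in centered) / n
    d = sum(y * y for _, y in centered) / n
    # Largest eigenvalue of the symmetric covariance matrix [[a, b], [b, d]].
    lam1 = (a + d) / 2 + math.sqrt(((a - d) / 2) ** 2 + b * b)
    # Matching eigenvector: (lam1 - d, b) if b != 0, else the axis with larger variance.
    if b != 0:
        vx, vy = lam1 - d, b
    else:
        vx, vy = (1.0, 0.0) if a >= d else (0.0, 1.0)
    norm = math.hypot(vx, vy)
    vx, vy = vx / norm, vy / norm
    scores = [x * vx + y * vy for x, y in centered]  # 1-D coordinates
    explained = lam1 / (a + d) if a + d > 0 else 0.0  # fraction of total variance kept
    return scores, (vx, vy), explained


scores, direction, ratio = pca_2d_to_1d([(0, 0), (1, 1), (2, 2), (3, 3)])
print(direction, ratio)  # direction along (1, 1)/sqrt(2); ratio 1.0 (points lie on a line)
```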

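9. The pseudo-inverse of the matrix $\mathbf{A}$ is

$$\mathbf{A}^T(\mathbf{A}\mathbf{A}^T)^{-1} = \begin{bmatrix} 1 & 1 \\ 1 & -1 \\ -1 & 1 \\ -1 & -1 \end{bmatrix} \left( \begin{bmatrix} 1 & 1 & -1 & -1 \\ 1 & -1 & 1 & -1 \end{bmatrix} \begin{bmatrix} 1 & 1 \\ 1 & -1 \\ -1 & 1 \\ -1 & -1 \end{bmatrix} \right)^{-1}$$

$$= \begin{bmatrix} 1 & 1 \\ 1 & -1 \\ -1 & 1 \\ -1 & -1 \end{bmatrix} \begin{bmatrix} 4 & 0 \\ 0 & 4 \end{bmatrix}^{-1} = \frac{1}{4}\begin{bmatrix} 1 & 1 \\ 1 & -1 \\ -1 & 1 \\ -1 & -1 \end{bmatrix}$$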

Ans d
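The right pseudo-inverse $\mathbf{A}^T(\mathbf{A}\mathbf{A}^T)^{-1}$ can be checked the same way for the 2×4 matrix of question 9. This is a sketch with hypothetical helper names, assuming $\mathbf{A}$ has exactly two linearly independent rows so that $\mathbf{A}\mathbf{A}^T$ is an invertible 2×2 matrix.

```python
from fractions import Fraction


def transpose(M):
    return [list(row) for row in zip(*M)]


def matmul(X, Y):
    # Row-by-column product; assumes len(X[0]) == len(Y).
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]


def inv_2x2(M):
    # Inverse via the adjugate formula; Fraction keeps the entries exact.
    (a, b), (c, d) = M
    det = Fraction(a * d - b * c)
    return [[d / det, -b / det], [-c / det, a / det]]


def right_pseudo_inverse(A):
    """A^T (A A^T)^{-1} for a wide matrix with two linearly independent rows."""
    At = transpose(A)
    return matmul(At, inv_2x2(matmul(A, At)))


A = [[1, 1, -1, -1], [1, -1, 1, -1]]
P = right_pseudo_inverse(A)
q = Fraction(1, 4)
assert P == [[q, q], [q, -q], [-q, q], [-q, -q]]  # (1/4) A^T, as in the solution
assert matmul(A, P) == [[1, 0], [0, 1]]           # A P = I (right inverse)
```

Compared with question 4, the only change is which side the inverse sits on: a wide matrix with full row rank has a right inverse, a tall matrix with full column rank has a left inverse.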

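10. Given a 2-dimensional random column vector $\bar{\mathbf{x}}$ that has a multivariate Gaussian distribution with mean $\bar{\boldsymbol{\mu}} = \begin{bmatrix} -3 \\ 2 \end{bmatrix}$ and covariance matrix $\boldsymbol{\Sigma} = \begin{bmatrix} 2 & 0 \\ 0 & 4 \end{bmatrix}$, consider the vector

$$\bar{\mathbf{y}} = \mathbf{A}\bar{\mathbf{x}} + \bar{\mathbf{b}} = \begin{bmatrix} -2 & 1 \\ -3 & 2 \end{bmatrix} \bar{\mathbf{x}} + \begin{bmatrix} 1 \\ -2 \end{bmatrix}$$

Since $\bar{\mathbf{y}}$ is an affine transformation of a Gaussian vector, it is also Gaussian, with mean and covariance as follows:

$$\bar{\boldsymbol{\mu}}_y = \mathbf{A}\bar{\boldsymbol{\mu}} + \bar{\mathbf{b}} = \begin{bmatrix} -2 & 1 \\ -3 & 2 \end{bmatrix} \begin{bmatrix} -3 \\ 2 \end{bmatrix} + \begin{bmatrix} 1 \\ -2 \end{bmatrix} = \begin{bmatrix} 8 \\ 13 \end{bmatrix} + \begin{bmatrix} 1 \\ -2 \end{bmatrix} = \begin{bmatrix} 9 \\ 11 \end{bmatrix}$$

$$\boldsymbol{\Sigma}_y = \mathbf{A}\boldsymbol{\Sigma}\mathbf{A}^T = \begin{bmatrix} -2 & 1 \\ -3 & 2 \end{bmatrix} \begin{bmatrix} 2 & 0 \\ 0 & 4 \end{bmatrix} \begin{bmatrix} -2 & -3 \\ 1 & 2 \end{bmatrix} = \begin{bmatrix} -2 & 1 \\ -3 & 2 \end{bmatrix} \begin{bmatrix} -4 & -6 \\ 4 & 8 \end{bmatrix} = \begin{bmatrix} 12 & 20 \\ 20 & 34 \end{bmatrix}$$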

Ans c
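The mean and covariance of question 10 can be checked with a short Python sketch (standard library only). The helper names are hypothetical; the code groups the product as $(\mathbf{A}\boldsymbol{\Sigma})\mathbf{A}^T$ rather than $\mathbf{A}(\boldsymbol{\Sigma}\mathbf{A}^T)$, which gives the same result by associativity.

```python
def matmul(X, Y):
    # Row-by-column product; assumes len(X[0]) == len(Y).
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]


def transpose(M):
    return [list(row) for row in zip(*M)]


def affine_gaussian(A, b, mu, Sigma):
    """Mean and covariance of y = A x + b when x ~ N(mu, Sigma)."""
    mu_col = [[m] for m in mu]  # treat mu as a column vector
    mu_y = [r[0] + bi for r, bi in zip(matmul(A, mu_col), b)]
    Sigma_y = matmul(matmul(A, Sigma), transpose(A))  # A Sigma A^T
    return mu_y, Sigma_y


A = [[-2, 1], [-3, 2]]
b = [1, -2]
mu = [-3, 2]
Sigma = [[2, 0], [0, 4]]
mu_y, Sigma_y = affine_gaussian(A, b, mu, Sigma)
print(mu_y)     # [9, 11]
print(Sigma_y)  # [[12, 20], [20, 34]]
```

Note that $\mathbf{b}$ shifts only the mean; it does not appear in the covariance.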
