EBPTA

Uploaded by Roudra Chakraborty

The document describes using backpropagation to update the weights of a multilayer perceptron neural network with two inputs, two hidden units, and one output unit. The network is presented with an input pattern and target output. Initial weights are provided. Forward propagation is used to calculate activations and output. Backpropagation is then used to calculate error terms and update the weights using a learning rate of 0.25. The new weights after training on this example are provided.


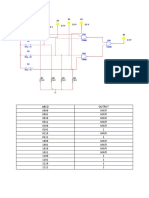

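Figure: network with input units X1 and X2, hidden units Z1 and Z2, and one output unit Y with output y. Weights v11 (from X1) and v21 (from X2) feed Z1, with bias weight v01; weights v12 (from X1) and v22 (from X2) feed Z2, with bias weight v02; weights w11 (from Z1) and w21 (from Z2) feed Y, with bias weight w0. Each bias unit has a constant input of 1.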

Error back propagation training algorithm / MLP

Input units: X = x1 … xi

Hidden units: Z = z1 … zj

Output units: Y = y1 … yk

Using the back-propagation network, find the new weights for the network shown in the figure above. The network is presented with the input pattern [x1, x2] = [0, 1] and the target output is 1. Use a learning rate α = 0.25 and the binary sigmoidal activation function. The initial weights are:

[v11, v21, v01] = [0.6, -0.1, 0.3]

[v12, v22, v02] = [-0.3, 0.4, 0.5]

[w11, w21, w0] = [0.4, 0.1, -0.2]

Feedforward phase:

zin1 = v01 + x1 v11 + x2 v21

zin2 = v02 + x1 v12 + x2 v22

z1 = f(zin1) = 1 / (1 + e^(-zin1))

z2 = f(zin2) = 1 / (1 + e^(-zin2))

yin = w0 + z1 w11 + z2 w21

y = f(yin) = 1 / (1 + e^(-yin))
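The feedforward phase can be sketched in a few lines of Python. This is only an illustration: the variable names are ours, and the initial weights are assumed to be [v12, v22, v02] = [-0.3, 0.4, 0.5] and [w11, w21, w0] = [0.4, 0.1, -0.2], which are the values consistent with the new weights reported at the end of this document.

```python
import math


def sigmoid(x):
    """Binary sigmoid f(x) = 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


# Input pattern from the problem statement.
x1, x2 = 0.0, 1.0

# Initial weights (assumed values, see the paragraph above).
v11, v21, v01 = 0.6, -0.1, 0.3   # into hidden unit Z1
v12, v22, v02 = -0.3, 0.4, 0.5   # into hidden unit Z2
w11, w21, w0 = 0.4, 0.1, -0.2    # into output unit Y

# Net input and activation of each hidden unit.
z_in1 = v01 + x1 * v11 + x2 * v21
z_in2 = v02 + x1 * v12 + x2 * v22
z1, z2 = sigmoid(z_in1), sigmoid(z_in2)

# Net input and activation of the output unit.
y_in = w0 + z1 * w11 + z2 * w21
y = sigmoid(y_in)

print(f"z_in1={z_in1:.4f} z_in2={z_in2:.4f}")
print(f"z1={z1:.4f} z2={z2:.4f} y_in={y_in:.4f} y={y:.4f}")
```

Under these assumed weights the hidden net inputs are 0.2 and 0.9, and the output y comes out close to 0.52, well short of the target of 1.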

Backpropagation Phase:

Error term for the output unit (hidden – output layer), k = 1:

δk = (tk - yk) * f'(yin)

f'(yin) = f(yin) * (1 - f(yin))
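The derivative identity for the binary sigmoid, f'(x) = f(x) * (1 - f(x)), can be checked numerically. The sketch below compares it with a central finite difference; the helper names and the step size h are illustrative choices, not part of the original exercise.

```python
import math


def sigmoid(x):
    """Binary sigmoid f(x) = 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_prime(x):
    """Closed-form derivative f'(x) = f(x) * (1 - f(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)


def central_difference(f, x, h=1e-6):
    """Numerical derivative estimate (f(x + h) - f(x - h)) / (2h)."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


# Largest disagreement between the two derivatives over a few sample points.
points = (-2.0, 0.0, 0.2, 0.9, 3.0)
max_gap = max(abs(sigmoid_prime(x) - central_difference(sigmoid, x)) for x in points)
print(f"largest gap: {max_gap:.2e}")
```

Because the derivative only needs the activation itself, the backward pass can reuse the values of y and z already computed in the feedforward phase.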

Error terms for the hidden units (input – hidden layer), j = 1, 2:

δinj = Σ δk wjk, summed over the output units k = 1 to m

δj = δinj * f'(zinj)

f'(zinj) = f(zinj) * (1 - f(zinj))

With a single output unit (m = 1):

δin1 = δk w11

δin2 = δk w21

δ1 = δin1 * f'(zin1)

δ2 = δin2 * f'(zin2)
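A minimal sketch of the error-term calculation follows. It assumes hidden-to-output weights [w11, w21, w0] = [0.4, 0.1, -0.2] and hidden net inputs of 0.2 and 0.9, the values consistent with the results reported in this document. `delta_k` is the output-unit error term, and `delta_1`, `delta_2` are the hidden-unit error terms; the names are ours.

```python
import math


def sigmoid(x):
    """Binary sigmoid f(x) = 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


t = 1.0                          # target output from the problem statement
w11, w21, w0 = 0.4, 0.1, -0.2    # assumed hidden-to-output weights (see lead-in)

# Hidden net inputs for [x1, x2] = [0, 1] under the assumed weights.
z_in1, z_in2 = 0.2, 0.9
z1, z2 = sigmoid(z_in1), sigmoid(z_in2)
y = sigmoid(w0 + z1 * w11 + z2 * w21)

# Output unit: delta_k = (t - y) * f'(y_in), where f'(y_in) = y * (1 - y).
delta_k = (t - y) * y * (1.0 - y)

# Hidden units: delta_j = delta_inj * f'(z_inj), where delta_inj = delta_k * w_j1.
delta_in1 = delta_k * w11
delta_in2 = delta_k * w21
delta_1 = delta_in1 * z1 * (1.0 - z1)
delta_2 = delta_in2 * z2 * (1.0 - z2)

print(f"delta_k={delta_k:.5f} delta_1={delta_1:.5f} delta_2={delta_2:.6f}")
```

The hidden error terms are much smaller than the output error term because each is scaled by both a weight below 1 and a sigmoid derivative of at most 0.25.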

Calculation of Change in weights:

Δw11 = α δk z1

Δw21 = α δk z2

Δw0 = α δk

Δv11 = α δ1 x1 = 0

Δv21 = α δ1 x2 = 0.0029475

Δv01 = α δ1 = 0.0029475

Δv12 = α δ2 x1 = 0

Δv22 = α δ2 x2 = 0.00061195

Δv02 = α δ2 = 0.00061195
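Every weight change has the same form: learning rate times the error term of the receiving unit times the signal carried by the weight (1 for a bias). The sketch below applies that rule using rounded intermediate values (z1 ≈ 0.5498, z2 ≈ 0.7109, δk ≈ 0.1191, δ1 ≈ 0.01179, δ2 ≈ 0.002447). These intermediates are not stated in the original; they come from evaluating the formulas above with the assumed weights [v12, v22, v02] = [-0.3, 0.4, 0.5] and [w11, w21, w0] = [0.4, 0.1, -0.2]. The helper name `weight_change` is hypothetical.

```python
alpha = 0.25          # learning rate from the problem statement
x1, x2 = 0.0, 1.0     # input pattern from the problem statement

# Rounded intermediate values (assumptions, see the paragraph above).
z1, z2 = 0.5498, 0.7109
delta_k, delta_1, delta_2 = 0.1191, 0.01179, 0.002447


def weight_change(alpha, delta, signal):
    """Change for one weight: alpha * (error term of receiving unit) * (incoming signal)."""
    return alpha * delta * signal


# Hidden-to-output weights; the bias weight sees a constant signal of 1.
dw11 = weight_change(alpha, delta_k, z1)
dw21 = weight_change(alpha, delta_k, z2)
dw0 = weight_change(alpha, delta_k, 1.0)

# Input-to-hidden weights.
dv11 = weight_change(alpha, delta_1, x1)
dv21 = weight_change(alpha, delta_1, x2)
dv01 = weight_change(alpha, delta_1, 1.0)
dv12 = weight_change(alpha, delta_2, x1)
dv22 = weight_change(alpha, delta_2, x2)
dv02 = weight_change(alpha, delta_2, 1.0)

print(f"dw11={dw11:.6f} dw21={dw21:.6f} dw0={dw0:.6f}")
print(f"dv11={dv11} dv21={dv21:.7f} dv01={dv01:.7f}")
print(f"dv12={dv12} dv22={dv22:.8f} dv02={dv02:.8f}")
```

Since x1 = 0, the weights leaving X1 (v11 and v12) receive no update for this pattern, which is why their changes are exactly zero.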

New weights Calculation:

General rule: w(new) = w(old) + Δw

v11(new) = v11(old) + Δv11 = 0.6

v21(new) = v21(old) + Δv21 = -0.0970525

v01(new) = v01(old) + Δv01 = 0.3029475

v12(new) = v12(old) + Δv12 = -0.3

v22(new) = v22(old) + Δv22 = 0.40061195

v02(new) = v02(old) + Δv02 = 0.50061195

w11(new) = w11(old) + Δw11 = 0.41637

w21(new) = w21(old) + Δw21 = 0.121167

w0(new) = w0(old) + Δw0 = -0.170225
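The whole training step can be collected into one function. The sketch below is an illustration for a network with any number of inputs and hidden units and a single output; the function name, argument layout, and list-based representation are our own choices. It assumes the initial weights [v12, v22, v02] = [-0.3, 0.4, 0.5] and [w11, w21, w0] = [0.4, 0.1, -0.2], the values consistent with the new weights listed above.

```python
import math


def sigmoid(x):
    """Binary sigmoid f(x) = 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


def backprop_step(x, t, V, v0, w, w0, alpha):
    """One back-propagation update for a single-output network.

    V[i][j] is the weight from input i to hidden unit j, v0[j] the hidden
    bias weights, w[j] the hidden-to-output weights, w0 the output bias weight.
    """
    n_in, n_hid = len(x), len(w)

    # Feedforward phase.
    z = [sigmoid(v0[j] + sum(x[i] * V[i][j] for i in range(n_in)))
         for j in range(n_hid)]
    y = sigmoid(w0 + sum(z[j] * w[j] for j in range(n_hid)))

    # Backpropagation phase: output error term, then hidden error terms.
    delta_k = (t - y) * y * (1.0 - y)
    delta = [delta_k * w[j] * z[j] * (1.0 - z[j]) for j in range(n_hid)]

    # New weights: old weight plus alpha * error term * incoming signal.
    new_V = [[V[i][j] + alpha * delta[j] * x[i] for j in range(n_hid)]
             for i in range(n_in)]
    new_v0 = [v0[j] + alpha * delta[j] for j in range(n_hid)]
    new_w = [w[j] + alpha * delta_k * z[j] for j in range(n_hid)]
    new_w0 = w0 + alpha * delta_k
    return new_V, new_v0, new_w, new_w0


# Rows are inputs X1, X2; columns are hidden units Z1, Z2 (assumed weights).
V = [[0.6, -0.3],
     [-0.1, 0.4]]
v0 = [0.3, 0.5]
w = [0.4, 0.1]
w0 = -0.2

new_V, new_v0, new_w, new_w0 = backprop_step([0.0, 1.0], 1.0, V, v0, w, w0, 0.25)
print("v11, v12:", new_V[0])
print("v21, v22:", new_V[1])
print("v01, v02:", new_v0)
print("w11, w21:", new_w, "w0:", new_w0)
```

Run at full floating-point precision, this agrees with the new weights listed above to within about 1e-5; the small differences arise because the hand calculation rounds intermediate values.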

You might also like

- Red Neuronal BackpropagationDocument24 pagesRed Neuronal BackpropagationHUGO ALBERTO BERNAL PERDOMONo ratings yet

- 457 Nodal Analysis 2015Document17 pages457 Nodal Analysis 2015chawara779No ratings yet

- Planos Red LineDocument6 pagesPlanos Red Linevicvarg3235No ratings yet

- Usc Ee479 HW1 2018Document3 pagesUsc Ee479 HW1 2018Nafi IfanNo ratings yet

- Week 6Document67 pagesWeek 6Aaditya KumarNo ratings yet

- Two Port NetworkDocument34 pagesTwo Port NetworkWaqarNo ratings yet

- 004 Introduction To S7 1200Document23 pages004 Introduction To S7 1200ugui81No ratings yet

- Microwave NetworksDocument14 pagesMicrowave NetworksDenis CarlosNo ratings yet

- Ch19 - Two-Port NetworksDocument26 pagesCh19 - Two-Port NetworksdadsdNo ratings yet

- Lecture 53Document12 pagesLecture 53vivek singhNo ratings yet

- Hahahahaha AhDocument8 pagesHahahahaha AhBống PhanNo ratings yet

- Chapter 6 - Two-Port NetworkDocument35 pagesChapter 6 - Two-Port NetworkAnonymous 20Uv9c2No ratings yet

- Iso BelajarDocument785 pagesIso BelajarSuhari AriNo ratings yet

- Two-Port Network AnalysisDocument21 pagesTwo-Port Network Analysissaleh gaziNo ratings yet

- 6.2 & 6.3 MESH & NODE Analysis KKKDocument4 pages6.2 & 6.3 MESH & NODE Analysis KKKcutiesfunnypetNo ratings yet

- Network Analysis and Synthesis: Two Port NetworksDocument44 pagesNetwork Analysis and Synthesis: Two Port NetworksESTIFANOS NegaNo ratings yet

- ACtive Notch Filter DesignDocument44 pagesACtive Notch Filter DesignDrMohammad Rafee ShaikNo ratings yet

- Teknik Digital K MapDocument1 pageTeknik Digital K Mapshafa mantasyaNo ratings yet

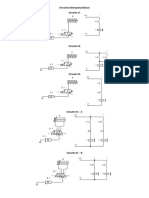

- Circuitos Eletropneumáticos para ImpressãoDocument7 pagesCircuitos Eletropneumáticos para ImpressãoDamião SilvaNo ratings yet

- Perhitungan Manual FixDocument8 pagesPerhitungan Manual Fixmuke gileNo ratings yet

- NA Unit-IVDocument33 pagesNA Unit-IVvijayalakshmiv VEMURINo ratings yet

- Digital Electronics Assignment Sample SolutionsDocument6 pagesDigital Electronics Assignment Sample Solutionsamitav_biswas840% (1)

- d1b(2)Document36 pagesd1b(2)bens082023No ratings yet

- Acesso Ao Novo Meu SENAIDocument1 pageAcesso Ao Novo Meu SENAIzarake uchihaNo ratings yet

- Four PortDocument29 pagesFour PortDharamNo ratings yet

- Lecture One: Introduction To Power System Yoseph MekonnenDocument23 pagesLecture One: Introduction To Power System Yoseph MekonnenKalab TenadegNo ratings yet

- Ashley Lite Subboard SchematicsDocument2 pagesAshley Lite Subboard SchematicsIsabel GarciaNo ratings yet

- Ashley Lite Subboard SchematicsDocument2 pagesAshley Lite Subboard SchematicsNelsonNo ratings yet

- Mic0101 Mic0101: MK2 MK2 MK2Document2 pagesMic0101 Mic0101: MK2 MK2 MK2Mario BasileNo ratings yet

- XT1922 Ashley SubBoard Component LocationDocument2 pagesXT1922 Ashley SubBoard Component LocationAlfonso BasileNo ratings yet

- Grafcet 22Document1 pageGrafcet 22Ivor JerkicNo ratings yet

- 05 Combinational Logic Building BlocksDocument17 pages05 Combinational Logic Building BlocksAyhan AbdulAzizNo ratings yet

- Chapter_4 (3rd Edition)Document27 pagesChapter_4 (3rd Edition)Abdullah SalmanNo ratings yet

- DCIM ppt-1Document5 pagesDCIM ppt-1310Janhavi PatangeNo ratings yet

- E141 TD-6 Quadripolesp Probleme-3Document3 pagesE141 TD-6 Quadripolesp Probleme-3Hamza GhoudraniNo ratings yet

- Sheet2 - Assignment2 Logic DesignDocument2 pagesSheet2 - Assignment2 Logic Designossamaforwork671No ratings yet

- Publication 3 20665 132Document5 pagesPublication 3 20665 132TibelchNo ratings yet

- SVPWM 3 LevelDocument27 pagesSVPWM 3 Levelthirumalai22No ratings yet

- No Y (CM) Y (CM) Y (CM) Q E E: Garis Head TotalDocument2 pagesNo Y (CM) Y (CM) Y (CM) Q E E: Garis Head TotalAnonymous HaB8RdNo ratings yet

- Mic0101 Mic0101: MK2 MK2 MK2Document2 pagesMic0101 Mic0101: MK2 MK2 MK2Humberto YumaNo ratings yet

- EE 740 Professor Ali Keyhani Lecture #3: Ideal TransformersDocument11 pagesEE 740 Professor Ali Keyhani Lecture #3: Ideal TransformersMohamed A. HusseinNo ratings yet

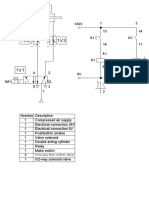

- Automatismos CableadosDocument1 pageAutomatismos Cableadosjuanramon.profesormmseeNo ratings yet

- Load Flow Gauss-Seidel Method EE 452: Computer Methods in Power SystemsDocument9 pagesLoad Flow Gauss-Seidel Method EE 452: Computer Methods in Power SystemsJerryNo ratings yet

- Effect of Internal Transistor Capacitances:: At, The Due To, and The Amplifier Response. But The WillDocument46 pagesEffect of Internal Transistor Capacitances:: At, The Due To, and The Amplifier Response. But The WillMuthukrishnan Vijayan VijayanNo ratings yet

- Connection Diagram Acc. Nen-En-Iec60034-8: D.O.L StartingDocument2 pagesConnection Diagram Acc. Nen-En-Iec60034-8: D.O.L Startingroby72No ratings yet

- Red Neuronal BackpropagationDocument17 pagesRed Neuronal BackpropagationHUGO ALBERTO BERNAL PERDOMONo ratings yet

- 제05주차 Chapter12 정전액츄에이터Document13 pages제05주차 Chapter12 정전액츄에이터K SiriusNo ratings yet

- Singh Studentsolutions Misc4Document36 pagesSingh Studentsolutions Misc4Debdutta ChatterjeeNo ratings yet

- Two Port NetworksDocument34 pagesTwo Port Networksvijayalakshmiv VEMURINo ratings yet

- Chapter 5Document30 pagesChapter 5Mohammad AliffuddinNo ratings yet

- Analisis - Estructural - JEFF - LAIBLE 489Document1 pageAnalisis - Estructural - JEFF - LAIBLE 489Andres Felipe Prieto AlarconNo ratings yet

- Modelling of Load Flow Analysis in ETAP SoftwareDocument22 pagesModelling of Load Flow Analysis in ETAP SoftwarevipinrajNo ratings yet

- Lecture1 MicrowaveNetworkAnalysisDocument74 pagesLecture1 MicrowaveNetworkAnalysisAbdelrahman RagabNo ratings yet

- Chem 211Document19 pagesChem 211Vlademir De GuzmanNo ratings yet

- Lecture 21: Z Parameter: Lecturer: Dr. Vinita Vasudevan Scribe: Shashank ShekharDocument4 pagesLecture 21: Z Parameter: Lecturer: Dr. Vinita Vasudevan Scribe: Shashank ShekharAniruddha RoyNo ratings yet

- Two Port Networks: Millia Institute of Technology, Rambagh PurneaDocument32 pagesTwo Port Networks: Millia Institute of Technology, Rambagh PurneaAJaz AlamNo ratings yet

- Two Port Networks PPT 1 B TECH 3RDDocument32 pagesTwo Port Networks PPT 1 B TECH 3RDEuthecas KipkiruiNo ratings yet

- PerceptronDocument26 pagesPerceptronSANJIDA AKTERNo ratings yet

- Unit 2Document36 pagesUnit 2Anu GraphicsNo ratings yet

- Bias-Variance Trade-OffDocument28 pagesBias-Variance Trade-OffRoudra ChakrabortyNo ratings yet

- Resampling Methods - MLDocument115 pagesResampling Methods - MLRoudra ChakrabortyNo ratings yet

- Module 4 SVM PCA KmeansDocument101 pagesModule 4 SVM PCA KmeansRoudra ChakrabortyNo ratings yet

- Module 2 - DS IDocument94 pagesModule 2 - DS IRoudra ChakrabortyNo ratings yet

- Module-2 - Assessing Accuracy of ModelDocument24 pagesModule-2 - Assessing Accuracy of ModelRoudra ChakrabortyNo ratings yet

- Orientation - Basic Mathematics and Statistics - NDDocument33 pagesOrientation - Basic Mathematics and Statistics - NDRoudra ChakrabortyNo ratings yet

- Orientation - Basic Mathematics and Statistics - ProbabilityDocument48 pagesOrientation - Basic Mathematics and Statistics - ProbabilityRoudra ChakrabortyNo ratings yet

- Orientation - Basic Mathematics and Statistics - CGDocument15 pagesOrientation - Basic Mathematics and Statistics - CGRoudra ChakrabortyNo ratings yet

- Orientation - Basic Mathematics and Statistics - CTDDocument35 pagesOrientation - Basic Mathematics and Statistics - CTDRoudra ChakrabortyNo ratings yet