Think Big, Start Small, Scale Fast: The Data Engineering Workbook

10 steps to designing an industrial data architecture for scale

Introduction

Many manufacturers are drowning in data and

struggling to make it useful. A modern industrial

facility can easily produce more than a terabyte of

data each day. With a wave of new technologies

for artificial intelligence and machine learning

coupled with real-time dashboards, we should see

huge gains in productivity. Unplanned asset and

production line maintenance should be a thing of

the past, but even today, that is not the case.

Access to data does not make it useful. Industrial

data is raw and must be made fit for purpose to

extract its true value. Furthermore, the tools used to

make the data fit for purpose must operate at the

scale of an industrial enterprise.

With these realities in mind, here is a practical,

10-step guide with a few short questionnaires to

help manufacturing and industrial leaders better

understand the process of applying proven data

engineering processes and technologies to make

their data fit for purpose.

The Data Engineering Workbook 2

Table of Contents

Step 1
Think Big: Align Your Goals With The Organization

Step 2
Get Strategic: Consider Your Architectural Approach

Step 3
Start Small: Begin With A Use Case

Step 4
Identify The Target Systems

Step 5
Identify The Data Sources

Step 6
Select The Integration Architecture

Step 7
Establish Secure Connections

Step 8
Model The Data

Step 9
Flow The Data

Step 10
Chart Your Progress And Expand To New Areas

Step 1

Think Big: Align Your Goals With The Organization

As with any major initiative, ensuring that your project is aligned

with corporate goals has to be the first step. Because industrial

data will be used by IT, operations, and line-of-business users,

your objectives must support overarching business goals while

being easily understood by data users across the organization.

Cross-departmental collaboration will be necessary, as data

architecture tends to reach across a great deal of the enterprise.

IT, OT, and business users experience numerous benefits when they can
more easily access and understand data. Their experience with industrial
data has likely been characterized by lack of access and understanding,
so guaranteeing access to contextualized, ready-to-use data will simplify
and accelerate the work of IT and business users. Make sure the right
cross-functional stakeholders are in the room from the project beginning,
and that all stakeholders agree to prioritize the project and can reach
consensus on the project goals.

Questions:

■ How will end users benefit?

■ How will the organization benefit?

■ What is the best way to present those benefits?

■ What is your timeline and budget?

Document your answers here:

Step 2

Get Strategic: Consider Your Architectural Approach

Lack of architectural strategy is one of the most common sources of
failure for Industry 4.0 initiatives. A lot of well-meaning manufacturers
start building integrations that perform their given tasks on day one,
for the first project. But what happens when the custom code of the
integration needs to be altered to receive new datasets? Or when it needs
to be duplicated or expanded beyond the pilot program? What if a new data
source or target is introduced? Any given integration can survive these
challenges, but the time, effort, and cost of manually maintaining and
scaling individual integrations eventually prohibits meaningful expansion.

Taking the time to plan your architecture before you start your

project pays dividends as you expand to other areas and sites.

A well-planned data architecture will deliver the visibility and

accessibility necessary to give your people and systems the data

they need when they need it.

Questions:

■ What are your data sources and target systems?

■ How are your systems currently integrated?

■ How will you scale your integrations?

■ How will you know if data is not flowing or a connection is down?

■ What does success look like, and how will you measure it?

Document your answers here:

Step 3

Start Small: Begin With A Use Case

Information Technology (IT) and Operations Technology (OT)

projects should begin with clear use cases and business goals.

For many manufacturing companies, projects may focus on

machine maintenance, process improvements, or product

analysis to improve quality or traceability. As part of the use case,

company stakeholders should identify the project scope and

applicable data that will be required.

In a factory, the use cases are typically driven by one of three key

data structures: assets, processes, or products.

■ Assets are the core data structure for use cases like predictive

asset maintenance and tracking power consumption and

emissions.

■ Processes are the core data structure when looking at

process control and monitoring line or cell production

metrics.

■ Products are the core data structure when looking at

traceability, defect root cause analysis, and batch reporting.

Each use case has a target persona who will consume this information and
act on it. This persona is critical to identify because their knowledge,
experience, and background will all impact how the data will be delivered
to them, and ultimately the success of the project.

Questions:

■ What will your first (or next) use case be?

■ Who are the stakeholders and how will they benefit?

■ What outcomes do you hope to produce with your use case?

Document your answers here:

Step 4

Identify The Target Systems

With the business goals and use cases identified, your next step is
identifying the target applications that will be used to accomplish these
goals. This approach is contrary to traditional data acquisition
approaches that would have you begin with source systems. Focusing on your
target systems first will allow you to identify exactly what data you need
to send, how it must be sent, and at what frequency it should be sent, so
you can determine which sources and structure are best suited to deliver
that data. Focusing on the target system and persona will also help
identify the context the data may require and the frequency of the data
updates. Some target systems may require data from multiple sources
blended into a single payload. Most require the data to be formatted in a
specific way for consumption. By starting with the target system and
persona, you can ensure that you are architecting your solution in a way
that delivers not just the right data but the right data with the right
context at the right time.
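As a rough illustration of blending several sources into one payload formatted for a target, here is a minimal Python sketch. The reader functions, field names, and values (`read_plc_tags`, `read_mes_batch`, `press_07`) are hypothetical stand-ins chosen for this example; they do not come from the workbook or from any specific product.

```python
import json
from datetime import datetime, timezone


def read_plc_tags():
    """Hypothetical stand-in for a real-time read from a PLC."""
    return {"temperature_c": 71.4, "motor_current_a": 12.8}


def read_mes_batch():
    """Hypothetical stand-in for a batch lookup in an MES database."""
    return {"batch_id": "B-1042", "product": "Widget A"}


def build_payload(asset_id, timestamp=None):
    """Blend telemetry and batch context into one JSON payload for a target."""
    timestamp = timestamp or datetime.now(timezone.utc)
    payload = {
        "assetId": asset_id,
        "timestamp": timestamp.isoformat(),
        "telemetry": read_plc_tags(),
        "batch": read_mes_batch(),
    }
    return json.dumps(payload, sort_keys=True)


print(build_payload("press_07"))
```

The shape of the payload is driven by what the target expects, which is why the target system is characterized before the sources are chosen.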

You can characterize the target application by asking these

questions:

■ Where is the target application located: at the Edge,

on-premises, in a data center, in the Cloud, etc.?

■ How can this application receive data: MQTT, OPC UA, REST,

database load, etc.?

■ What information is needed for this use case in this application?

■ How frequently should the data be updated and what causes

the update?

Document your answers here:

Step 5

Identify The Data Sources

Identifying the right data sources for your use case is a crucial

step. However, there are often significant barriers to accessing

the right data sources, including:

VOLUME

The typical modern industrial factory has hundreds to thousands of pieces
of machinery and equipment constantly creating data. This data is
generally aggregated within Programmable Logic Controllers (PLCs), machine
controllers, or Distributed Control Systems (DCSs) within the automation
layer, though newer approaches may also include smart sensors and smart
actuators that feed data directly into the software layer.

CORRELATION

Automation data was primarily put in place to manage,

optimize, and control the process. The data is correlated for

process control, not for asset maintenance, product quality, or

traceability purposes.

CONTEXT

Data structures on PLCs and machine controllers have minimal

descriptive information—if any. In many cases, data points

are referenced with cryptic data-point naming schemes or

references to memory locations.

Furthermore, the context for telemetry data is often stored

across transaction systems like MES, CMMS, QMS, and ERP.

For example, the context for asset maintenance is typically in

the CMMS, including the asset vendor, model, service date,

and specification, while the context for a batch is in the MES

including set points, alarms, product description, planned

volume, and customer. This information is critical when taking

the data outside the OT domain and delivering it to

line-of-business users.
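The context problem can be sketched in a few lines of Python: raw values keyed by cryptic addresses are renamed, scaled, and joined with descriptive asset information. Everything here is an assumption made for illustration, including the address style (`N7:0`), the scale factors, and the CMMS fields; a real site would pull these from its own controllers and systems.

```python
# Hypothetical raw readings keyed by controller memory address.
raw_readings = {"N7:0": 714, "N7:1": 128}

# Hypothetical mapping from cryptic addresses to descriptive names,
# units, and scale factors (assumed to be maintained by controls engineers).
tag_map = {
    "N7:0": {"name": "temperature", "unit": "degC", "scale": 0.1},
    "N7:1": {"name": "motor_current", "unit": "A", "scale": 0.1},
}

# Hypothetical asset context of the kind a CMMS might hold.
cmms_context = {
    "asset_vendor": "Acme",
    "model": "P-200",
    "last_service": "2023-01-15",
}


def contextualize(readings, mapping, context):
    """Rename and scale raw values, then attach descriptive asset context."""
    values = {}
    for address, raw in readings.items():
        meta = mapping[address]
        values[meta["name"]] = {
            "value": round(raw * meta["scale"], 3),
            "unit": meta["unit"],
        }
    return {"values": values, "context": context}


record = contextualize(raw_readings, tag_map, cmms_context)
print(record)
```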

STANDARDIZATION

Automation in a factory evolves over time, with machinery

and equipment sourced from a wide variety of hardware

vendors. Hardware is typically programmed and defined by the

respective vendor, resulting in unique data models created for

each piece of machinery and a lack of standards across the site

and enterprise.

You can better understand the specific challenges you will need

to overcome for your project by documenting your data sources.

Characterize the data available to meet the target system’s

needs by asking these questions:

■ What data is available?

■ Where is the data located: PLCs, machine controllers,

databases, etc.?

■ Is it real-time data or informational data (metadata)?

■ Is the data currently available in the right format or will it

need to be derived?

Document your answers here:

Step 6

Select The Integration Architecture

Integration architectures fall in two camps: direct Application

Programming Interface (API) connections (application-to-

application) or integration hubs (DataOps solutions).

Direct API connections work well if you only have two

applications that need to be integrated, the data does not need

to be curated or prepared for the receiving application, and the

source and target systems are static. Direct API connections are

typically successful in environments in which the manufacturing

company has a single SCADA or MES solution that houses all the

information, and there is no need for additional applications to

get access to the data.

Direct API connections do not work well when industrial data is needed in
multiple applications like SCADA, MES, ERP, IIoT platforms, data
warehouses and lakes, analytics, QMS, AMS, cyber threat monitoring
systems, various custom databases, dashboards, or spreadsheet
applications. Direct API connections also do not work well when there are
many data transformations that must occur to prepare the data for the
consuming system. These transformations can easily be performed in Python,
C#, or any other programming language, but they are then “invisible” and
hard to maintain.

Finally, direct API connections do not work well when data

structures are frequently changing. When the factory equipment,

programs running on the equipment, or target system

requirements are frequently changing, direct API connections

tend to fail and either stop working or leave holes in the data.

For example, a manufacturer may need to create short-run

batches that require loading new programs on the PLCs. The

products produced will require changes to the automation,

the automation will likely need to be changed to improve

efficiency, and in some cases, the equipment may need to be

replaced due to age and performance.

Using the API approach buries the integrations, and therefore

the ability to make changes to the automation, in code.

Stakeholders may not even be aware of integrated systems

until long after the equipment has been replaced or changes

have been made, resulting in undetected bad or missing data

for weeks or even months.

An alternative to direct API connections is a DataOps

integration hub. The DataOps approach to data integration

and security aims to improve data quality and reduce time

spent preparing and maintaining data for use throughout the

enterprise. An integration hub acts as an abstraction layer that

uses APIs to connect to other applications while providing

a management, documentation, and governance tool that

connects data sources to all required applications.
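One way to see why the hub approach tends to scale better is to count connections. The sketch below assumes a worst case in which every target needs data from every source; that assumption, and the example counts, are illustrative and not taken from the workbook.

```python
def point_to_point_links(sources, targets):
    """Worst case: every source is integrated directly with every target."""
    return sources * targets


def hub_links(sources, targets):
    """With a hub, each system connects once, to the hub."""
    return sources + targets


for n_sources, n_targets in [(2, 1), (10, 5), (50, 10)]:
    print(
        f"{n_sources} sources, {n_targets} targets: "
        f"{point_to_point_links(n_sources, n_targets)} direct links vs "
        f"{hub_links(n_sources, n_targets)} hub links"
    )
```

Under this assumption, direct integrations grow multiplicatively while hub connections grow additively, so each new source or target adds one connection instead of many.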

An integration hub is purpose-built to move high volumes of

data at high speeds with transformations being performed

in real time while the data is in motion. Since a DataOps

integration hub is an application itself, it provides a platform

to identify impact when devices or applications are changed,

perform data transformations, and provide visibility to these

transformations.

As your use case scales, the integration hub methodology easily

scales with it. With an integration hub, you can templatize

connections, models, and flows, allowing you to more easily

reuse integrations and onboard similar assets. Integration

hubs can also share configurations, projects, and more, so

when you are ready to expand your use case to another site,

you can simply launch another integration hub and carry your

work over.

Selecting Your Integration Architecture

Figure 1: Integration hubs are an alternative to relying solely on

Application Programming Interfaces (APIs) when managing the many

types of data associated with today's automation projects.

Questions:

■ Where does your current integration architecture excel?

■ Are there areas in your organization held back by your current

integration architecture?

■ On a scale of 1-10, how would you rank your ability to quickly

create or adapt integrations? Why?

Document your answers here:

Step 7

Establish Secure Connections

Once your project plan is in place, you can begin system

integration in earnest by establishing secure connections to the

source and target systems. It is vital that you wholly understand

the protocols you will be working with and the security risks and

benefits that come with them.

Many systems support open and secure protocols to define the connection
and communication. Typical open protocols include OPC UA, MQTT, REST,
ODBC, and AMQP, among others. There also are many closed protocols and
vendor-defined APIs for which the application vendor publishes the API
protocol documentation. Some protocols and systems support certificates
exchanged by the applications. Other protocols support usernames and
passwords or tokens manually entered into the connecting system or
through third-party validation. In addition to user authentication, some
protocols support encrypted data packets, so if there is a “man in the
middle” attack, the attacker cannot read the data being passed. Finally,
some protocols support data authentication. Then, even if the data is
viewed by a third party, it cannot be changed without detection.
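To make data authentication concrete, here is a minimal sketch using a keyed hash (HMAC-SHA256) from the Python standard library. This is one common technique and is shown only as an illustration; the protocols named above each define their own mechanisms, and the shared key and message contents here are made up for the example.

```python
import hashlib
import hmac

# Assumption: both sides share this secret out of band. In practice it would
# come from a secrets store, never from source code.
SHARED_KEY = b"example-shared-secret"


def sign(message: bytes, key: bytes = SHARED_KEY) -> str:
    """Return a hex HMAC-SHA256 tag for the message."""
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify(message: bytes, tag: str, key: bytes = SHARED_KEY) -> bool:
    """Check the tag using a constant-time comparison."""
    return hmac.compare_digest(sign(message, key), tag)


original = b'{"temperature_c": 71.4}'
tag = sign(original)
tampered = b'{"temperature_c": 17.4}'

print(verify(original, tag))  # message matches its tag
print(verify(tampered, tag))  # message was altered after signing
```

Note that a tag like this detects changes but does not hide the data; confidentiality still depends on encryption.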

Security is not just about usernames, passwords, encryption, and

authentication. It is also about integration architecture. In many

companies the controls network is separated from the business

network by at least one firewall (if not two) and a DMZ (the space

between the two networks). Some companies will have even

more networks to separate traffic and secure systems. Protocols

like MQTT require only outbound openings in firewalls, which

security teams prefer because no inbound ports have to be exposed

for attackers to probe on the internal network.

Questions:

■ Does your communication protocol support secure connections,

and how are these connections created?

■ Where does your data move to and from, and how exposed is it

during movement?

Document your answers here:

Step 8

Model The Data

The corporate-wide deployment and adoption of advanced

analytics or other Industry 4.0 use cases is often delayed by

the variability of data coming off the factory floor. As we noted

in Step 5, each industrial device may have its own data model.

Historically, vendors, systems integrators, and in-house controls

engineers have not focused on creating data standards; they

have refined the systems and changed the data models

piecemeal over time to suit their needs. This approach worked

for one-off projects, but today’s IIoT projects require more

scalability.

The first step in modeling data is to define the standard data set
required in the target system to meet the project’s business goals. The
real-time data coming off the machinery and automation equipment is at the
core of the model. Most of the real-time data points will map to
single-source data points, but when a specific data point does not exist,
data points can be derived by executing expressions or logic using other
data points. Data also can be parsed or extracted from other data fields,
or additional sensors can be added to provide required data.

These models also should include attributes for any descriptive

data, which are not typically stored in the industrial devices but

are especially useful when matching and evaluating data in the

target systems. Descriptive data could be the machine’s location

and asset number, unit of measure, operating ranges, asset

vendor, last service date, or other contextual information.
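A standard model of this kind can be sketched as a small Python class with three groups of attributes: values mapped from single source data points, a value derived by an expression, and descriptive attributes. The `MotorModel` name, its fields, and the sample values are hypothetical; the power formula is the standard relation between torque and rotational speed.

```python
import math
from dataclasses import dataclass


@dataclass
class MotorModel:
    """Hypothetical standard model for a motor-driven asset."""

    # Real-time attributes mapped to single source data points.
    speed_rpm: float
    torque_nm: float
    # Descriptive attributes not normally stored on the device.
    asset_number: str
    location: str
    vendor: str

    @property
    def power_kw(self) -> float:
        """Derived attribute: mechanical power = torque x angular speed."""
        angular_speed_rad_s = self.speed_rpm * 2 * math.pi / 60
        return self.torque_nm * angular_speed_rad_s / 1000


motor = MotorModel(
    speed_rpm=1500.0,
    torque_nm=50.0,
    asset_number="A-0042",
    location="Line 1",
    vendor="Acme",
)
print(round(motor.power_kw, 3))
```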

Once the standard models are created, they should be

instantiated for each asset, process, and/or product. This

is typically a manual task, but it can be accelerated if the

mapping already exists in Excel or other formats, if there is

consistency from device to device that can be copied, or when

parametric definitions can be applied.
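Where device naming is consistent, instantiation by parametric definition might look like the following sketch. The tag-path pattern (`Line{line}/Motor{n}/Speed`) and the asset list are assumptions for illustration; in practice the list could come from an existing spreadsheet export.

```python
# Hypothetical tag-path template with parameters for line and motor number.
TEMPLATE = {
    "speed_rpm": "Line{line}/Motor{n}/Speed",
    "torque_nm": "Line{line}/Motor{n}/Torque",
}


def instantiate(template, **params):
    """Fill the template's placeholders to produce one instance's mapping."""
    return {attr: path.format(**params) for attr, path in template.items()}


# Assumed asset list, e.g. exported from a spreadsheet.
assets = [{"line": 1, "n": 1}, {"line": 1, "n": 2}, {"line": 2, "n": 1}]

instances = {
    f"L{a['line']}_M{a['n']}": instantiate(TEMPLATE, **a) for a in assets
}

for name, mapping in instances.items():
    print(name, mapping)
```

One template plus a list of parameters replaces a hand-built mapping per asset, which is where most of the time savings would come from.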

Some source data must be pre-processed or conditioned

prior to being mapped into an instance. The data may not be

readable in its current state and may require a transformation

or third-party library to convert it. Or, perhaps the data must

be aggregated across a time period or based on an event

occurrence, and then summarized with calculations such as

average, max, or count.
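Aggregation across a time period can be sketched as grouping samples into fixed windows and summarizing each one. The sample data and the 60-second window below are assumptions chosen to keep the example small.

```python
from statistics import mean

# Hypothetical (timestamp_seconds, value) samples from one sensor.
samples = [(0, 70.0), (15, 72.0), (30, 71.0), (65, 75.0), (90, 73.0)]


def aggregate(samples, window_s):
    """Group samples into fixed windows and compute average, max, and count."""
    windows = {}
    for ts, value in samples:
        start = (ts // window_s) * window_s  # start time of the window
        windows.setdefault(start, []).append(value)
    return {
        start: {"avg": mean(vals), "max": max(vals), "count": len(vals)}
        for start, vals in sorted(windows.items())
    }


summary = aggregate(samples, window_s=60)
print(summary)
```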

Questions:

■ What descriptive data do your target systems need?

■ How will you implement your models once they’re created?

■ What additional processing of the raw data is required to make it

usable in a model?

Document your answers here:

Step 9

Flow The Data

When the instances are complete, data flows control the timing

of when the values for an instance are sourced, standardized,

contextualized, calculated, and sent to the target system. This is

typically performed by identifying the instance to be moved, the

target system, and the frequency or trigger for the movement.

Three publishing examples are provided below.

CYCLIC PUBLISHING

Some systems require complete data sets

published to them at a consistent frequency, which

might range from milliseconds to days. Cyclic publishing is

used when publishing to systems, such as databases, data

warehouses or data lakes, that use time-based trends and

analysis to visualize the data.

EVENT PUBLISHING

In event publishing, a complete set of information is

assembled and published when a given event occurs,

notifying necessary systems and/or logging the event. Events

might range from a notification that a work cell has completed a

part to a temperature reading that exceeds set limits.

TIME SERIES PUBLISHING

Time series publishing only publishes when changes

occur. It is often the lightest load on the network

and storage, but lack of context can make reconstructing

information for analytics or visualization challenging. Many

Operational Technology (OT) systems and some IT systems can

consume and reconstruct time series data but require domain

knowledge to do this.
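The three publishing styles differ mainly in the condition that triggers a publish. The sketch below expresses each trigger as a small predicate and then walks through the change-based case. The function names, the optional deadband parameter, and the sample readings are assumptions added for illustration.

```python
def cyclic_due(now_s, last_publish_s, interval_s):
    """Cyclic: publish whenever the configured interval has elapsed."""
    return now_s - last_publish_s >= interval_s


def event_fired(temperature_c, limit_c):
    """Event: publish when a condition occurs, e.g. a limit is exceeded."""
    return temperature_c > limit_c


def changed(value, last_value, deadband=0.0):
    """Time series: publish only when the value changes beyond a deadband."""
    return last_value is None or abs(value - last_value) > deadband


# Change-based publishing over a short run of hypothetical readings.
readings = [70.0, 70.0, 70.5, 70.5, 82.0]
last = None
published = []
for value in readings:
    if changed(value, last):
        published.append(value)
        last = value

print(published)
```

Only three of the five readings are published here, which shows both the lighter load and why a consumer needs to know that gaps mean "unchanged" rather than "missing".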

In addition to collecting, modeling, and flowing data, some system
integrations require more complex processing of the data. This can include
buffering data, compressing it, and sending it in a file to a data lake.
It also may include complex sequencing of multi-step processes. These
activities are best performed through a graphical staged pipeline builder
that allows for multiple reads and writes in a single pipeline, has many
predefined steps as well as core data transformation steps, and can store
pipeline state across runs.
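For readers who want to see what the buffer, compress, and send-as-file steps involve, here is a minimal hand-coded sketch. It is not a substitute for a pipeline builder; the `BufferedFileWriter` class is hypothetical, and a local temporary directory stands in for the data lake upload, which is out of scope here.

```python
import gzip
import json
import os
import tempfile


class BufferedFileWriter:
    """Buffer records in memory and flush them as gzip-compressed JSON lines."""

    def __init__(self, directory, batch_size):
        self.directory = directory
        self.batch_size = batch_size
        self.buffer = []
        self.files_written = []

    def add(self, record):
        """Buffer one record and flush when the batch is full."""
        self.buffer.append(record)
        if len(self.buffer) >= self.batch_size:
            self.flush()

    def flush(self):
        """Write the buffered records to a new compressed file."""
        if not self.buffer:
            return
        name = f"batch_{len(self.files_written):04d}.jsonl.gz"
        path = os.path.join(self.directory, name)
        with gzip.open(path, "wt", encoding="utf-8") as fh:
            for record in self.buffer:
                fh.write(json.dumps(record) + "\n")
        self.files_written.append(path)
        self.buffer = []


out_dir = tempfile.mkdtemp()  # stand-in for a data lake landing zone
writer = BufferedFileWriter(out_dir, batch_size=3)
for i in range(7):
    writer.add({"seq": i, "temperature_c": 70 + i})
writer.flush()  # write the final partial batch
print(writer.files_written)
```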

A well-built data flow will retain the semantics of a model while

transforming the presentation and delivery to the unique needs

of the systems consuming it. Over time, data flows also will

require monitoring and management. Managing your flows

in an integration hub allows you to easily track changes across

iterations and scale changes via templating.

Questions:

■ Which flow triggers will you use and why?

■ How will your flows alter your model’s presentation for

consuming systems?

■ What additional processing is required to optimize or

sequence interactions to enable the required integrations?

■ How will the data be organized in the target system and

how can this be identified in the payload?

Document your answers here:

Step 10

Chart Your Progress And Expand To New Areas

Now that your data is flowing and your use case is officially off the
ground, it is vital that you pay close attention to your results and track
your progress. It might seem like an obvious step, but gathering
performance data may not always be as simple as it seems. New Industry 4.0
use cases often explore entirely new functionality, so processes to track
your progress may not presently exist in your organization.

Questions:

■ How will you chart your progress?

■ Can your flows be easily scaled across the site or enterprise?

Why or why not?

■ How will you present ROI to your peers?

Document your answers here:

Wrap Up

Factories and other industrial environments change

over time. Equipment is replaced, programs are

changed, products are redesigned, systems are

upgraded, and new users need new information

to perform their jobs. Amid this change, OT and IT

professionals will collaborate on new projects aimed

at improving factory floor productivity, efficiency,

and safety. You will need to make industrial data fit

for purpose before it is usable, and you will

need tools to accomplish this task at scale, such as a

DataOps integration hub. By using an integration

hub, administrators can evaluate equipment and

system changes and identify integrations that must

be modified or replaced. They can make changes to

data models and enable new flows in real time.

Making industrial data fit for purpose will be

critical to manufacturers interested in scaling their

Industry 4.0 projects, handling data governance

and ultimately driving digital transformation. I hope

this workbook serves as a practical guide to getting

started on your next project and designing an agile

architecture. Remember: Think big, start small, and

scale fast!

About HighByte

HighByte is an industrial software company

founded in 2018 with headquarters in Portland,

Maine USA. The company builds solutions that

address the data architecture and integration

challenges created by Industry 4.0. HighByte

Intelligence Hub, the company’s award-winning

Industrial DataOps software, provides modeled,

ready-to-use data to the Cloud using a codeless

interface to speed integration time and accelerate

analytics. The Intelligence Hub has been deployed in

more than a dozen countries by some of the world’s

most innovative companies spanning a wide range

of vertical markets, including food and beverage,

health sciences, pulp and paper, industrial products,

consumer goods, and energy.

Learn more at https://highbyte.com.

© 2023 HighByte, Inc. All rights reserved.

HighByte is a registered trademark of HighByte, Inc.

You might also like

- SuperFunctional TrainingDocument208 pagesSuperFunctional TrainingPat100% (4)

- Power Focus 8 LeafletDocument12 pagesPower Focus 8 Leafletfahed.fattelNo ratings yet

- Big Book of Data Warehousing and Bi v9 122723 Final 0Document88 pagesBig Book of Data Warehousing and Bi v9 122723 Final 0AblekkNo ratings yet

- JTMFIT - The Functional Method 1.0Document73 pagesJTMFIT - The Functional Method 1.0Pat100% (1)

- Progression Murph TrainingDocument18 pagesProgression Murph TrainingPatNo ratings yet

- Sample Outline Azure Machine Learning EngineeringDocument17 pagesSample Outline Azure Machine Learning EngineeringAli AbidiNo ratings yet

- Adaptations of Pharma 4.0 From Industry 4.0: Review ArticleDocument11 pagesAdaptations of Pharma 4.0 From Industry 4.0: Review ArticleADZZ P.No ratings yet

- Kboges' Weighted Pull Up MasteryDocument2 pagesKboges' Weighted Pull Up MasteryPatNo ratings yet

- (IJIT-V6I5P7) :ravishankar BelkundeDocument9 pages(IJIT-V6I5P7) :ravishankar BelkundeIJITJournalsNo ratings yet

- Stability System II v.6.5.ppsDocument17 pagesStability System II v.6.5.ppsEstuardo MolinaNo ratings yet

- SQL Server 2019 Editions Datasheet PDFDocument3 pagesSQL Server 2019 Editions Datasheet PDFKhantKoNo ratings yet

- Patterns of The Hypnotic Techniques of M PDFDocument237 pagesPatterns of The Hypnotic Techniques of M PDFjeanotte100% (2)

- Industry 4.0, Digitization, and Opportunities For SustainabilityDocument21 pagesIndustry 4.0, Digitization, and Opportunities For SustainabilityRui GavinaNo ratings yet

- Deepa ResumeDocument3 pagesDeepa ResumeIID SalemNo ratings yet

- Data EngineeringDocument63 pagesData Engineering20egjcs071No ratings yet

- ResumeDocument5 pagesResumeajeet rathoreNo ratings yet

- AWS Batch User GuideDocument215 pagesAWS Batch User GuideHimanshu KhareNo ratings yet

- Dimensional ModelingDocument22 pagesDimensional ModelingajazkhankNo ratings yet

- Akhil Suresh: Synthite Industries LimitedDocument2 pagesAkhil Suresh: Synthite Industries LimitedAkhil Suresh (Synthite Biotech)No ratings yet

- Mittr-X-Databricks Survey-Report Final 090823Document25 pagesMittr-X-Databricks Survey-Report Final 090823Grisha KarunasNo ratings yet

- ResumeDocument3 pagesResumeSankalp KothiyaNo ratings yet

- Data Engineering Study PlanDocument4 pagesData Engineering Study PlanEgodawatta PrasadNo ratings yet

- C2 Databricks - Sparks - EEDocument9 pagesC2 Databricks - Sparks - EEyedlaraghunathNo ratings yet

- Databricks: Building and Operating A Big Data Service Based On Apache SparkDocument32 pagesDatabricks: Building and Operating A Big Data Service Based On Apache SparkSaravanan1234567No ratings yet

- Big QueryDocument5 pagesBig QueryMilena Zurita CamposNo ratings yet

- Dataeng-Zoomcamp - 4 - Analytics - MD at Main Ziritrion - Dataeng-Zoomcamp GitHubDocument26 pagesDataeng-Zoomcamp - 4 - Analytics - MD at Main Ziritrion - Dataeng-Zoomcamp GitHubAshiq KNo ratings yet

- Top 5 Data Engineering Projects You Can't Afford To Miss by Yusuf Ganiyu Feb, 2024 MediumDocument23 pagesTop 5 Data Engineering Projects You Can't Afford To Miss by Yusuf Ganiyu Feb, 2024 Mediumvlvpkiw1vNo ratings yet

- Oracle Database 11g: SQL Fundamentals I: D49996GC11 Edition 1.1 April 2009 D59980Document16 pagesOracle Database 11g: SQL Fundamentals I: D49996GC11 Edition 1.1 April 2009 D59980Ashlesh KumarNo ratings yet

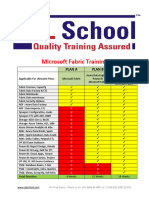
