Professional Documents

Culture Documents

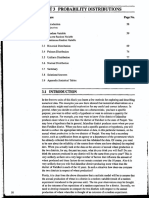

Module 3 - DISCRETE PROBABILITY DISTRIBUTIONS

Uploaded by

Therese Sibayan

MATH 115 ENGINEERING DATA ANALYSIS

Chapter 3

DISCRETE PROBABILITY DISTRIBUTIONS

You can use probability and discrete random variables to calculate the likelihood of

lightning striking the ground five times during a half-hour thunderstorm. (Credit: Leszek

Leszczynski)

Objectives:

After careful study of this chapter, you should be able to do the following:

1. Determine probabilities from probability mass functions and the reverse

2. Determine probabilities from cumulative distribution functions and cumulative

distribution functions from probability mass functions, and the reverse

3. Calculate means and variances for discrete random variables

4. Understand the assumptions for some common discrete probability distributions

5. Select an appropriate discrete probability distribution to calculate probabilities in

specific applications

6. Calculate probabilities, determine means and variances for some common

discrete probability distributions

3.1 Concept of a Random Variable

Statistics is concerned with making inferences about populations and population

characteristics. Experiments are conducted with results that are subject to chance. The

testing of a number of electronic components is an example of a statistical experiment,

a term that is used to describe any process by which several chance observations are

generated. It is often important to allocate a numerical description to the outcome.

ENGR. JOSHUA C. JUNIO 1

For example, the sample space giving a detailed description of each possible

outcome when three electronic components are tested may be written as

𝑺 = {𝑵𝑵𝑵, 𝑵𝑵𝑫, 𝑵𝑫𝑵, 𝑫𝑵𝑵, 𝑵𝑫𝑫, 𝑫𝑵𝑫, 𝑫𝑫𝑵, 𝑫𝑫𝑫},

where N denotes non-defective, and D denotes defective. One is naturally concerned

with the number of defectives that occur. Thus, each point in the sample space will be

assigned a numerical value of 0, 1, 2, or 3. These values are, of course, random quantities

determined by the outcome of the experiment. They may be viewed as values assumed

by the random variable X, the number of defective items when three electronic

components are tested.

Definition 3.1:

A random variable is a function that associates a real number with each element in the

sample space.

We shall use a capital letter, say X, to denote a random variable and its

corresponding small letter, x in this case, for one of its values. In the electronic component

testing example above, we notice that the random variable X assumes the value 2 for all

elements in the subset

𝑬 = {𝑫𝑫𝑵, 𝑫𝑵𝑫, 𝑵𝑫𝑫}

of the sample space S. That is, each possible value of X represents an event that is a

subset of the sample space for the given experiment.
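The mapping from sample points to values of X can be made concrete with a short enumeration. This is a minimal sketch of the three-component example; the names `sample_space`, `number_of_defectives`, and `events` are illustrative and do not come from the module.

```python
from itertools import product

# Sample space for testing three components: N = non-defective, D = defective.
sample_space = ["".join(outcome) for outcome in product("ND", repeat=3)]

def number_of_defectives(outcome: str) -> int:
    """Random variable X: map an outcome such as 'NDD' to its count of D's."""
    return outcome.count("D")

# Group the sample points by the value X assigns to them; each group is an event.
events = {}
for outcome in sample_space:
    events.setdefault(number_of_defectives(outcome), []).append(outcome)

for x, outcomes in sorted(events.items()):
    print(x, outcomes)
```

The group printed for the value 2 contains the same three sample points as the subset E above.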

Example 3.1

Two balls are drawn in succession without replacement from an urn containing 4 red balls

and 3 black balls. List all the possible outcomes and the values y of the random variable

Y, where Y is the number of red balls.

Example 3.2

A stockroom clerk returns three safety helmets at random to three steel mill employees

who had previously checked them. If Smith, Jones, and Brown, in that order, receive one

of the three hats, list the sample points for the possible orders of returning the helmets,

and find the value m of the random variable M that represents the number of correct

matches.
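One possible way to list the sample points of Example 3.2 is to enumerate every order in which the helmets can be handed back. The sketch below assumes each helmet is labeled with its owner's name; the variable names are illustrative only.

```python
from itertools import permutations

owners = ("Smith", "Jones", "Brown")  # order in which the employees receive a helmet

# Each sample point is one order in which the three helmets can be returned.
# M counts the positions where a helmet goes back to its own owner.
matches = {
    returned: sum(helmet == owner for helmet, owner in zip(returned, owners))
    for returned in permutations(owners)
}

for returned, m in matches.items():
    print(returned, m)
```

Under this labeling, M takes only the values 0, 1, and 3, since two correct matches force the third.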

The two preceding examples are composed of a sample space that contains a

finite number of elements. On the other hand, when a die is thrown until a 5 occurs, we

obtain a sample space with an unending sequence of elements,

𝑆 = {𝐹, 𝑁𝐹, 𝑁𝑁𝐹, 𝑁𝑁𝑁𝐹, … },

where F and N represent, respectively, the occurrence and nonoccurrence of a 5. But

even in this experiment, the number of elements can be equated to the number of whole

numbers so that there is a first element, a second element, a third element, and so on,

and in this sense can be counted.

There are cases where the random variable is categorical in nature. Variables,

often called dummy variables, are used.

Example 3.3

Suppose a sampling plan involves sampling items from a process until a defective is

observed. The evaluation of the process will depend on how many consecutive items are

observed. In that regard, let X be a random variable defined by the number of items

observed before a defective is found. List the possible sample points until a defective is

observed and find the value x of the random variable X that represents the number of

items observed before a defective is found.

Example 3.4

Let X be the random variable defined by the waiting time, in hours, between successive

speeders spotted by a radar unit. List all values of x of the random variable X.

Definition 3.2

If a sample space contains a finite number of possibilities or an unending sequence with

as many elements as there are whole numbers, it is called a discrete sample space.

Definition 3.3

If a sample space contains an infinite number of possibilities equal to the number of points

on a line segment, it is called a continuous sample space.

A random variable is called a discrete random variable if its set of possible

outcomes is countable. The random variables of Examples 3.1 to 3.3 are discrete random

variables.

But a random variable whose set of possible values is an entire interval of numbers

is not discrete. When a random variable can take on values on a continuous scale, it is

called a continuous random variable.

In most practical problems, continuous random variables represent measured

data, such as all possible heights, weights, temperatures, distance, or life periods,

whereas discrete random variables represent count data, such as the number of

defectives in a sample of k items or the number of highway fatalities per year in a given

state.

3.2 Discrete Probability Distributions

A discrete random variable assumes each of its values with a certain probability.

Frequently, it is convenient to represent all the probabilities of a random variable X by a

formula. Such a formula would necessarily be a function of the numerical values x that we

shall denote by 𝑓(𝑥), 𝑔(𝑥), 𝑟(𝑥), and so forth.

Therefore, we write 𝑓(𝑥) = 𝑃(𝑋 = 𝑥); that is, 𝑓(3) = 𝑃(𝑋 = 3). The set of ordered pairs

(𝑥, 𝑓(𝑥)) is called the probability function, probability mass function, or probability

distribution of the discrete random variable X.

Definition 3.4

The set of ordered pairs (𝑥, 𝑓(𝑥)) is a probability function, probability mass function, or

probability distribution of the discrete random variable X if, for each possible outcome x,

1. 𝑓(𝑥) ≥ 0,

2. ∑𝑥 𝑓(𝑥) = 1,

3. 𝑃(𝑋 = 𝑥) = 𝑓(𝑥)

Example 3.5

A shipment of 20 similar laptop computers to a retail outlet contains 3 that are defective.

If a school makes a random purchase of 2 of these computers, find the probability

distribution for the number of defectives.
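A possible way to work Example 3.5 is to count the equally likely ways of choosing the purchased computers. The sketch below assumes the purchase is a simple random sample without replacement; the constant names and the helper `f` are illustrative. It also checks the first two conditions of Definition 3.4.

```python
from fractions import Fraction
from math import comb

TOTAL, DEFECTIVE, PURCHASED = 20, 3, 2

def f(x: int) -> Fraction:
    """P(X = x): x defectives among the PURCHASED computers."""
    good = TOTAL - DEFECTIVE
    ways = comb(DEFECTIVE, x) * comb(good, PURCHASED - x)
    return Fraction(ways, comb(TOTAL, PURCHASED))

pmf = {x: f(x) for x in range(PURCHASED + 1)}

# Conditions 1 and 2 of Definition 3.4: nonnegative values that sum to 1.
assert all(p >= 0 for p in pmf.values())
assert sum(pmf.values()) == 1

for x, p in pmf.items():
    print(x, p)
```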

Example 3.6

If a car agency sells 50% of its inventory of a certain foreign car equipped with side

airbags, find a formula for the probability distribution of the number of cars with side

airbags among the next 4 cars sold by the agency.

There are many problems where we may wish to compute the probability that the

observed value of a random variable X will be less than or equal to some real number x.

Writing 𝐹(𝑥) = 𝑃(𝑋 ≤ 𝑥) for every real number x, we define 𝐹(𝑥) to be the cumulative

distribution function of the random variable X.

Definition 3.5

The cumulative distribution function 𝐹(𝑥) of a discrete random variable X with probability

distribution 𝑓(𝑥) is

$$F(x) = P(X \le x) = \sum_{t \le x} f(t), \quad \text{for } -\infty < x < \infty.$$

Example 3.7

Find the cumulative distribution function of the random variable X in Example 3.6. Using

𝐹(𝑥), verify that 𝑓(2) = 3/8.
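The step from a probability distribution to its cumulative distribution function can be sketched in a few lines. The code assumes that the distribution in Example 3.6 is f(x) = C(4, x)/16 for x = 0, 1, ..., 4, which follows if each of the 4 cars independently has side airbags with probability 1/2; that assumption and the names `pmf` and `F` are not part of the module.

```python
from fractions import Fraction
from math import comb

# Assumed PMF for Example 3.6: f(x) = C(4, x) / 16, x = 0, 1, ..., 4.
pmf = {x: Fraction(comb(4, x), 16) for x in range(5)}

def F(x: float) -> Fraction:
    """Cumulative distribution function: sum of f(t) over all t <= x."""
    return sum((p for t, p in pmf.items() if t <= x), Fraction(0))

# Recover f(2) from the CDF as the jump between consecutive values.
f2 = F(2) - F(1)
print(F(1), F(2), f2)
```

Because F is defined for every real x, calls such as `F(2.5)` or `F(-1)` are also valid and return the value of the step function at that point.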

3.3 Mean and Variance of a Discrete Random Variable

Mean of a Random Variable

The average value of a set of data can be calculated by weighting each distinct value by its relative frequency. We shall refer to this average value as the mean of the random variable X or the mean of the probability distribution of X and write it as $\mu_X$, or simply as $\mu$ when it is clear to which random variable we refer. It is also common among statisticians to refer to this mean as the mathematical expectation, or the expected value, of the random variable X and to denote it as E(X).

Definition 3.6

Let X be a random variable with probability distribution 𝑓(𝑥). The mean, or expected

value, of X is

$$\mu = E(X) = \sum_{x} x f(x)$$

if X is discrete, and

$$\mu = E(X) = \int_{-\infty}^{\infty} x f(x)\,dx$$

if X is continuous.

Example 3.8

A lot containing 7 components is sampled by a quality inspector; the lot contains 4 good

components and 3 defective components. A sample of 3 is taken by the inspector. Find

the expected value of the number of good components in this sample.
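Definition 3.6 can be applied to Example 3.8 by first building the probability distribution and then summing x f(x). This is a sketch that assumes the sample of 3 is drawn without replacement; the names are illustrative.

```python
from fractions import Fraction
from math import comb

GOOD, DEFECTIVE, SAMPLE = 4, 3, 3

# f(x): probability that the sample contains exactly x good components.
pmf = {
    x: Fraction(comb(GOOD, x) * comb(DEFECTIVE, SAMPLE - x),
                comb(GOOD + DEFECTIVE, SAMPLE))
    for x in range(SAMPLE + 1)
}

# Definition 3.6 (discrete case): mu = sum of x * f(x).
mean = sum(x * p for x, p in pmf.items())
print(mean, float(mean))
```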

Example 3.9

A salesperson for a medical device company has two appointments on a given day. At

the first appointment, he believes that he has a 70% chance to make the deal, from

which he can earn $1000 commission if successful. On the other hand, he thinks he only

has a 40% chance to make the deal at the second appointment, from which, if

successful, he can make $1500. What is his expected commission based on his own

probability belief? Assume that the appointment results are independent of each other.
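One way to approach Example 3.9 is to enumerate the four joint outcomes of the two appointments and weight each total commission by its probability. The sketch below relies on the stated independence assumption; the list `appointments` and the loop variables are illustrative names.

```python
from fractions import Fraction
from itertools import product

# (probability of closing the deal, commission in dollars) per appointment
appointments = [(Fraction(7, 10), 1000), (Fraction(4, 10), 1500)]

expected = Fraction(0)
total_prob = Fraction(0)
for results in product([True, False], repeat=len(appointments)):
    prob = Fraction(1)
    commission = 0
    for success, (p, payout) in zip(results, appointments):
        prob *= p if success else 1 - p      # independence: multiply
        commission += payout if success else 0
    expected += prob * commission            # Definition 3.6
    total_prob += prob

print(expected)
```

The same value results from adding the two individual expected commissions, 0.7(1000) + 0.4(1500).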

Theorem 3.1

Let X be a random variable with probability distribution 𝑓(𝑥). The expected value of the

random variable 𝑔(𝑋) is

$$\mu_{g(X)} = E[g(X)] = \sum_{x} g(x) f(x)$$

if X is discrete, and

$$\mu_{g(X)} = E[g(X)] = \int_{-\infty}^{\infty} g(x) f(x)\,dx$$

if X is continuous.

Variance of Random Variables

The mean, or expected value, of a random variable X is of special importance in

statistics because it describes where the probability distribution is centered. By itself,

however, the mean does not give an adequate description of the shape of the

distribution.

The most important measure of variability of a random variable X is obtained by applying Theorem 3.1 with $g(X) = (X - \mu)^2$. The quantity is referred to as the variance of the random variable X or the variance of the probability distribution of X and is denoted by Var(X) or the symbol $\sigma_X^2$, or simply by $\sigma^2$ when it is clear to which random variable we refer.

Definition 3.7

Let X be a random variable with probability distribution 𝑓(𝑥) and mean 𝜇. The variance

of X is

$$\sigma^2 = E[(X - \mu)^2] = \sum_{x} (x - \mu)^2 f(x)$$

if X is discrete, and

$$\sigma^2 = E[(X - \mu)^2] = \int_{-\infty}^{\infty} (x - \mu)^2 f(x)\,dx$$

if X is continuous.

The positive square root of the variance, 𝜎, is called the standard deviation of X.

The quantity 𝑥 − 𝜇 in Definition 3.7 is called the deviation of an observation from its

mean. Since the deviations are squared and then averaged, $\sigma^2$ will be much smaller for

a set of x values that are close to 𝜇 than it will be for a set of values that vary considerably

from 𝜇.

Example 3.10

Let the random variable X represent the number of automobiles that are used for official

business purposes on any given workday.

The probability distribution for company A is shown in figure (a), and that for company B is shown in figure (b).

Show that the variance of the probability distribution for company B is greater than that

for company A.

Theorem 3.2

The variance of a random variable X is

$$\sigma^2 = E(X^2) - \mu^2$$
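For the discrete case, the result follows from expanding the square in Definition 3.7 and using $\sum_x x f(x) = \mu$ and $\sum_x f(x) = 1$. A short sketch of the steps:

$$
\begin{aligned}
\sigma^2 &= \sum_{x} (x - \mu)^2 f(x) \\
         &= \sum_{x} x^2 f(x) - 2\mu \sum_{x} x f(x) + \mu^2 \sum_{x} f(x) \\
         &= E(X^2) - 2\mu^2 + \mu^2 \\
         &= E(X^2) - \mu^2 .
\end{aligned}
$$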

Example 3.11

Let the random variable X represent the number of defective parts for a machine when

3 parts are sampled from a production line and tested. The following is the probability

distribution of X.

Using Theorem 3.2, calculate $\sigma^2$.

3.4 Discrete Uniform Distribution

The simplest discrete random variable is one that assumes only a finite number of possible values, each with equal probability. A random variable X that assumes each of the values $x_1, x_2, \ldots, x_n$ with equal probability $1/n$ is frequently of interest.

Definition 3.8

A random variable X has a discrete uniform distribution if each of the n values in its range, say $x_1, x_2, \ldots, x_n$, has equal probability. Then,

$$f(x_i) = \frac{1}{n}$$

Example 3.12

The first digit of a part’s serial number is equally likely to be any one of the digits 0 through

9. If one part is selected from a large batch and X is the first digit of the serial number, X

has a discrete uniform distribution with probability 0.1 for each value in 𝑅 = {0, 1, 2, … , 9}.

Calculate 𝑓(𝑥) and draw the probability mass function of X.

Suppose the range of the discrete random variable X is the consecutive integers $a, a+1, a+2, \ldots, b$, for $a \le b$. The range of X contains $b - a + 1$ values, each with probability $1/(b - a + 1)$. Now,

$$\mu = \sum_{k=a}^{b} k \left( \frac{1}{b - a + 1} \right)$$

Simplifying,

$$\mu = \frac{b + a}{2}$$

Definition 3.9

Suppose X is a discrete uniform random variable on the consecutive integers $a, a+1, a+2, \ldots, b$, for $a \le b$. The mean of X is

$$\mu = E(X) = \frac{b + a}{2}$$

The variance of X is

$$\sigma^2 = \frac{(b - a + 1)^2 - 1}{12}$$

Example 3.13

Let the random variable X denote the number of the 48 voice lines that are in use at a

particular time. Assume that X is a discrete uniform random variable with a range of 0 to

48. Then, solve for the mean and variance of X.
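The closed forms in Definition 3.9 can be checked against a direct computation from Definitions 3.6 and 3.7. The sketch below does this for the range of Example 3.13; the variable names are illustrative.

```python
from fractions import Fraction

a, b = 0, 48                      # range of X in Example 3.13
values = range(a, b + 1)
p = Fraction(1, len(values))      # equal probability 1 / (b - a + 1)

# Direct computation from the definitions of mean and variance.
mean = sum(k * p for k in values)
variance = sum((k - mean) ** 2 * p for k in values)

# Closed forms from Definition 3.9.
assert mean == Fraction(a + b, 2)
assert variance == Fraction((b - a + 1) ** 2 - 1, 12)

print(mean, variance)
```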

3.5 Binomial Distribution

Consider the following random experiments and random variables:

1. Flip a coin 10 times. Let X = number of heads obtained.

2. A worn machine tool produces 1% defective parts. Let X = number of defective

parts in the next 25 parts produced.

3. Each sample of air has a 10% chance of containing a particular rare molecule. Let

X = the number of air samples that contain the rare molecule in the next 18

samples analyzed.

4. Of all bits transmitted through a digital transmission channel, 10% are received in

error. Let X = the number of bits in error in the next five bits transmitted.

5. A multiple-choice test contains 10 questions, each with four choices, and you

guess at each question. Let X = the number of questions answered correctly.

6. In the next 20 births at a hospital, let X = the number of female births.

7. Of all patients suffering a particular illness, 35% experience improvement from a

particular medication. In the next 100 patients administered the medication, let X

= the number of patients who experience improvement.

These examples illustrate that a general probability model that includes these

experiments as particular cases would be very useful. Each of these random experiments

can be thought of as consisting of a series of repeated, random trials. The random

variable in each case is a count of the number of trials that meet a specified criterion.

The outcome from each trial either meets the criterion that X counts, or it does not;

consequently, each trial can be summarized as resulting in either a success or a failure.

The terms success and failure are just labels. We can just as well use A and B or 0 and 1.

Unfortunately, the usual labels can sometimes be misleading. In experiment 2, because

X counts defective parts, the production of a defective part is called a success.

A trial with only two possible outcomes is used so frequently as a building block of a

random experiment that it is called a Bernoulli trial. It is usually assumed that the trials that

constitute the random experiment are independent. This implies that the outcome from

one trial has no effect on the outcome to be obtained from any other trial. Furthermore,

it is often reasonable to assume that the probability of a success in each trial is constant.

The Bernoulli Process

The Bernoulli process must possess the following properties:

1. The experiment consists of repeated trials.

2. Each trial results in an outcome that may be classified as a success or a failure.

3. The probability of success, denoted by p, remains constant from trial to trial.

4. The repeated trials are independent.

Binomial Distribution

The number X of successes in n Bernoulli trials is called a binomial random variable.

The probability distribution of this discrete random variable is called the Binomial

Distribution, and its values will be denoted by 𝑏(𝑥; 𝑛, 𝑝) since they depend on the number

of trials and the probability of a success on a given trial.

We wish to find a formula that gives the probability of x successes in n trials for a

binomial experiment. First, consider the probability of x successes and 𝑛 − 𝑥 failures in a

specified order. Since the trials are independent, we can multiply all the probabilities

corresponding to the different outcomes. Each success occurs with probability p and

each failure with probability 𝑞 = 1 − 𝑝. Therefore, the probability for the specified order is

$p^x q^{n-x}$. We must now determine the total number of sample points in the experiment that have x successes and $n - x$ failures. This number is equal to the number of partitions of n outcomes into two groups with x in one group and $n - x$ in the other and is written $\binom{n}{x}$. Because these partitions are mutually exclusive, we add the probabilities of all the different partitions to obtain the general formula, or simply multiply $p^x q^{n-x}$ by $\binom{n}{x}$.

Definition 3.10

A Bernoulli trial can result in a success with probability p and a failure with probability 𝑞 =

1 − 𝑝. Then, the probability distribution of the binomial random variable X, the number of

successes in n independent trials, is

$$b(x; n, p) = \binom{n}{x} p^x q^{n-x}, \quad x = 0, 1, 2, \ldots, n.$$

Example 3.14

The probability that a certain kind of component will survive a shock test is ¾. Find the

probability that exactly 2 of the next 4 components tested survive.
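Definition 3.10 translates directly into code. The following minimal sketch evaluates it for Example 3.14 with exact fractions; the function name `binomial_pmf` is an illustrative choice, not a name used in the module.

```python
from fractions import Fraction
from math import comb

def binomial_pmf(x: int, n: int, p: Fraction) -> Fraction:
    """b(x; n, p) = C(n, x) * p^x * q^(n - x), with q = 1 - p."""
    q = 1 - p
    return comb(n, x) * p**x * q**(n - x)

# Example 3.14: exactly 2 of the next 4 components survive, with p = 3/4.
prob = binomial_pmf(2, 4, Fraction(3, 4))
print(prob, float(prob))
```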

Where Does the Name Binomial Come From?

The binomial distribution derives its name from the fact that the 𝑛 + 1 terms in the

binomial expansion of (𝑞 + 𝑝)𝑛 correspond to the various values of 𝑏(𝑥; 𝑛, 𝑝) for 𝑥 =

0,1,2, … , 𝑛. That is,

$$
\begin{aligned}
(q + p)^n &= \binom{n}{0} q^n + \binom{n}{1} p q^{n-1} + \binom{n}{2} p^2 q^{n-2} + \cdots + \binom{n}{n} p^n \\
          &= b(0; n, p) + b(1; n, p) + b(2; n, p) + \cdots + b(n; n, p).
\end{aligned}
$$

Since $p + q = 1$, we see that

$$\sum_{x=0}^{n} b(x; n, p) = 1,$$

a condition that must hold for any probability distribution.

Frequently, we are interested in problems where it is necessary to find $P(X < r)$ or $P(a \le X \le b)$. Binomial sums

$$B(r; n, p) = \sum_{x=0}^{r} b(x; n, p)$$

are given in Table A.1 for $n = 1, 2, \ldots, 20$ and for selected values of p from 0.1 to 0.9.

Example 3.15

The probability that a patient recovers from a rare blood disease is 0.4. If 15 people are

known to have contracted this disease, what is the probability that

a. At least 10 survive?

b. From 3 to 8 survive?

c. Exactly 5 survive?
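When a table of binomial sums is not at hand, the sums B(r; n, p) can be computed directly. The sketch below applies this to the three parts of Example 3.15; the helper names `binomial_pmf` and `binomial_cdf` are illustrative, and the results are floating-point approximations.

```python
from math import comb

def binomial_pmf(x: int, n: int, p: float) -> float:
    """b(x; n, p) from Definition 3.10."""
    return comb(n, x) * p**x * (1 - p) ** (n - x)

def binomial_cdf(r: int, n: int, p: float) -> float:
    """Binomial sum B(r; n, p) = sum of b(x; n, p) for x = 0, ..., r."""
    return sum(binomial_pmf(x, n, p) for x in range(r + 1))

n, p = 15, 0.4

at_least_10 = 1 - binomial_cdf(9, n, p)                     # part (a)
from_3_to_8 = binomial_cdf(8, n, p) - binomial_cdf(2, n, p)  # part (b)
exactly_5 = binomial_pmf(5, n, p)                            # part (c)

print(round(at_least_10, 4), round(from_3_to_8, 4), round(exactly_5, 4))
```

Each part is expressed through cumulative sums: "at least 10" is the complement of "at most 9", and "from 3 to 8" is the difference of two sums.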

Example 3.16

A large chain retailer purchases a certain kind of electronic device from a manufacturer.

The manufacturer indicates that the defective rate of the device is 3%.

a. The inspector randomly picks 20 items from a shipment. What is the probability

that there will be at least one defective item among these 20?

b. Suppose that the retailer receives 10 shipments in a month and the inspector

randomly tests 20 devices per shipment. What is the probability that there will be

exactly 3 shipments each containing at least one defective device among the

20 that are selected and tested from the shipment?

Mean and Variance of the Binomial Distribution

Since the probability distribution of any binomial random variable depends only

on the values assumed by the parameters n, p, and q, it would seem reasonable to

assume that the mean and variance of a binomial random variable also depend on the

values assumed by these parameters.

Theorem 3.3

The mean and variance of the binomial distribution 𝑏(𝑥; 𝑛, 𝑝) are

$$\mu = np \quad \text{and} \quad \sigma^2 = npq.$$

Example 3.17

It is conjectured that an impurity exists in 30% of all drinking wells in a certain rural

community. In order to gain some insight into the true extent of the problem, it is

determined that some testing is necessary. It is too expensive to test all of the wells in the

area, so 10 are randomly selected for testing.

a. Using the binomial distribution, what is the probability that exactly 3 wells have the

impurity, assuming that the conjecture is correct?

b. What is the probability that more than 3 wells are impure?

Example 3.18

Find the mean and variance of the binomial random variable in Example 3.15.
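Theorem 3.3 can be checked numerically by computing the mean and variance from Definitions 3.6 and 3.7 and comparing them with np and npq. This is a sketch using the parameters of Example 3.15 (n = 15, p = 0.4) with exact fractions; the variable names are illustrative.

```python
from fractions import Fraction
from math import comb

n, p = 15, Fraction(2, 5)   # parameters of Example 3.15
q = 1 - p

# Full binomial distribution b(x; n, p) for x = 0, ..., n.
pmf = [comb(n, x) * p**x * q ** (n - x) for x in range(n + 1)]

# Mean and variance straight from the definitions.
mean = sum(x * f for x, f in enumerate(pmf))
variance = sum((x - mean) ** 2 * f for x, f in enumerate(pmf))

# Theorem 3.3: mu = np and sigma^2 = npq.
assert mean == n * p
assert variance == n * p * q

print(mean, variance)
```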

Example 3.19

Consider the situation of Example 3.17. The notion that 30% of the wells are impure is

merely a conjecture put forth by the area water board. Suppose 10 wells are randomly

selected and 6 are found to contain the impurity. What does this imply about the

conjecture? Use a probability statement.

3.6 Poisson Distribution

Experiments yielding numerical values of a random variable X, the number of

outcomes occurring during a given time interval or in a specified region, are called

Poisson experiments. The given time interval may be of any length, such as a minute, a

day, a week, a month, or even a year. For example, a Poisson experiment can generate

observations for the random variable X representing the number of telephone calls

received per hour by an office, the number of days school is closed due to snow during

the winter, or the number of games postponed due to rain during a baseball season. The

specified region could be a line segment, an area, a volume, or perhaps a piece of

material. In such instances, X might represent the number of field mice per acre, the

number of bacteria in a given culture, or the number of typing errors per page. A Poisson

experiment is derived from the Poisson process and possesses the following properties.

Properties of the Poisson Process

1. The number of outcomes occurring in one time interval or specified region of

space is independent of the number that occur in any other disjoint time interval

or region. In this sense, we say that the Poisson process has no memory.

2. The probability that a single outcome will occur during a very short time interval or

in a small region is proportional to the length of the time interval or the size of the

region and does not depend on the number of outcomes occurring outside this

time interval or region.

3. The probability that more than one outcome will occur in such a short time interval

or fall in such a small region is negligible.

The number X of outcomes occurring during a Poisson experiment is called a

Poisson random variable, and its probability distribution is called the Poisson distribution.

The mean number of outcomes is computed from 𝜇 = 𝜆𝑡, where t is the specific “time”,

“distance”, “area”, or “volume” of interest. Since the probabilities depend on 𝜆, the rate

of occurrence of outcomes, we shall denote them by 𝑝(𝑥; 𝜆𝑡).

Definition 3.11

The probability distribution of the Poisson random variable X, representing the number of

outcomes occurring in a given time interval or specified region denoted by t, is

$$p(x; \lambda t) = \frac{e^{-\lambda t} (\lambda t)^x}{x!}, \quad x = 0, 1, 2, \ldots,$$

where $\lambda$ is the average number of outcomes per unit time, distance, area, or volume and $e = 2.71828\ldots$

Table A.2 contains Poisson probability sums

$$P(r; \lambda t) = \sum_{x=0}^{r} p(x; \lambda t)$$

for selected values of $\lambda t$ ranging from 0.1 to 18.0.

Example 3.20

During a laboratory experiment, the average number of radioactive particles passing

through a counter in 1 millisecond is 4. What is the probability that 6 particles enter the

counter in a given millisecond?

Example 3.21

Ten is the average number of oil tankers arriving each day at a certain port. The facilities

at the port can handle at most 15 tankers per day. What is the probability that on a given

day tankers have to be turned away?
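Examples 3.20 and 3.21 can both be evaluated from Definition 3.11 without a table. The sketch below is one way to do so; the helper names `poisson_pmf` and `poisson_cdf` are illustrative, and `mu` stands for the product λt.

```python
from math import exp, factorial

def poisson_pmf(x: int, mu: float) -> float:
    """p(x; mu) = e^(-mu) * mu^x / x!, where mu = lambda * t."""
    return exp(-mu) * mu**x / factorial(x)

def poisson_cdf(r: int, mu: float) -> float:
    """Poisson sum P(r; mu) = sum of p(x; mu) for x = 0, ..., r."""
    return sum(poisson_pmf(x, mu) for x in range(r + 1))

# Example 3.20: exactly 6 particles in a millisecond when the mean is 4.
six_particles = poisson_pmf(6, 4)

# Example 3.21: tankers are turned away when more than 15 arrive (mean 10).
turned_away = 1 - poisson_cdf(15, 10)

print(round(six_particles, 4), round(turned_away, 4))
```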

Theorem 3.4

Both the mean and the variance of the Poisson distribution 𝑝(𝑥; 𝜆𝑡) are 𝜆𝑡.

Nature of the Poisson Probability Function

Like so many discrete and continuous distributions, the form of the Poisson distribution

becomes more and more symmetric, even bell-shaped, as the mean grows large. The

figure below illustrates this, showing plots of the probability function for 𝜇 = 0.1, 𝜇 = 2, and

𝜇 = 5. Note the nearness to symmetry when 𝜇 becomes as large as 5.

Approximation of Binomial Distribution by a Poisson Distribution

It should be evident from the three principles of the Poisson process that the Poisson

distribution is related to the binomial distribution. Although the Poisson usually finds

applications in space and time problems, it can be viewed as a limiting form of the

binomial distribution. In the case of the binomial, if n is quite large and p is small, the

conditions begin to simulate the continuous space or time implications of the Poisson

process. The independence among Bernoulli trials in the binomial case is consistent with

principle 2 of the Poisson process. Allowing the parameter p to be close to 0 relates to

principle 3 of the Poisson process. Indeed, if n is large and p is close to 0, the Poisson

distribution can be used, with 𝜇 = 𝑛𝑝, to approximate binomial probabilities. If p is close

to 1, we can still use the Poisson distribution to approximate binomial probabilities by

interchanging what we have defined to be a success and a failure, thereby changing p

to a value close to 0.

Theorem 3.5

Let X be a binomial random variable with probability distribution $b(x; n, p)$. When $n \to \infty$, $p \to 0$, and $np \to \mu$ remains constant,

$$b(x; n, p) \to p(x; \mu) \quad \text{as } n \to \infty.$$

Example 3.22

In a certain construction site, accidents occur infrequently. It is known that the probability

of an accident on any given day is 0.005 and accidents are independent of each other.

a. What is the probability that in any given period of 400 days there will be an

accident on one day?

b. What is the probability that there are at most three days with an accident?
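The quality of the Poisson approximation in Theorem 3.5 can be seen by computing both the exact binomial probabilities and their Poisson counterparts for Example 3.22. This is a sketch with n = 400, p = 0.005, and μ = np; the helper and variable names are illustrative.

```python
from math import comb, exp, factorial

n, p = 400, 0.005
mu = n * p  # Poisson mean used for the approximation

def binomial_pmf(x: int) -> float:
    return comb(n, x) * p**x * (1 - p) ** (n - x)

def poisson_pmf(x: int) -> float:
    return exp(-mu) * mu**x / factorial(x)

# Part (a): an accident on exactly one day.
exact_one, approx_one = binomial_pmf(1), poisson_pmf(1)

# Part (b): at most three days with an accident.
exact_at_most_3 = sum(binomial_pmf(x) for x in range(4))
approx_at_most_3 = sum(poisson_pmf(x) for x in range(4))

print(round(exact_one, 4), round(approx_one, 4))
print(round(exact_at_most_3, 4), round(approx_at_most_3, 4))
```

With n this large and p this small, the two sets of values should agree closely, which is the behavior the theorem describes.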

Example 3.23

In a manufacturing process where glass products are made, defects or bubbles occur,

occasionally rendering the piece undesirable for marketing. It is known that, on average,

1 in every 1000 of these items produced has one or more bubbles. What is the probability

that a random sample of 8000 will yield fewer than 7 items possessing bubbles?


- Statistics and Probability Lecture 8Document10 pagesStatistics and Probability Lecture 8john christian de leonNo ratings yet

- Uzair Talpur 1811162 Bba 4B Statistical Inference AssignmentDocument15 pagesUzair Talpur 1811162 Bba 4B Statistical Inference Assignmentuzair talpurNo ratings yet

- Stochastics NotesDocument19 pagesStochastics Notesvishalmundhe494No ratings yet

- Discrete Probability Models: Irina Romo AguasDocument35 pagesDiscrete Probability Models: Irina Romo AguasShneyder GuerreroNo ratings yet

- Chapter 3Document17 pagesChapter 3gontsenyekoshibambo04No ratings yet

- Math13 Topic 2Document10 pagesMath13 Topic 2Linette Joyce BinosNo ratings yet

- Module 1. Introduction To Probability Theory Student Notes 1.1: Basic Notions in ProbabilityDocument6 pagesModule 1. Introduction To Probability Theory Student Notes 1.1: Basic Notions in ProbabilityRamesh AkulaNo ratings yet

- MATH220 Probability and Statistics: Asst. Prof. Merve BULUT YILGÖRDocument26 pagesMATH220 Probability and Statistics: Asst. Prof. Merve BULUT YILGÖRCan AtarNo ratings yet

- Probability MathDocument3 pagesProbability MathAngela BrownNo ratings yet

- Mathematical Foundations of Information TheoryFrom EverandMathematical Foundations of Information TheoryRating: 3.5 out of 5 stars3.5/5 (9)

- Probability: An Introduction To Modeling Uncertainty: Business AnalyticsDocument58 pagesProbability: An Introduction To Modeling Uncertainty: Business Analyticsthe city of lightNo ratings yet

- Statistics and Probability: Quarter 3 - Module 16: Identifying Sampling Distribution of StatisticsDocument27 pagesStatistics and Probability: Quarter 3 - Module 16: Identifying Sampling Distribution of StatisticsMoreal QazNo ratings yet

- Stat FlexbookDocument308 pagesStat FlexbookleighhelgaNo ratings yet

- ATAL FDP Probability 261222Document26 pagesATAL FDP Probability 261222NIrmalya SenguptaNo ratings yet

- Isesco: Science and Technology VisionDocument5 pagesIsesco: Science and Technology VisionMehmet ArslanNo ratings yet

- Practical Data Analysis With JMPDocument8 pagesPractical Data Analysis With JMPrajv88No ratings yet

- (Download PDF) Elementary Statistics A Step by Step Approach 11E Ise 11Th Ise Edition Allan Bluman Full Chapter PDFDocument69 pages(Download PDF) Elementary Statistics A Step by Step Approach 11E Ise 11Th Ise Edition Allan Bluman Full Chapter PDFryogogeylan100% (6)

- Math 7 Q4 Week 2Document14 pagesMath 7 Q4 Week 2Trisha ArizalaNo ratings yet

- Basic Stats Cheat SheetDocument26 pagesBasic Stats Cheat SheetMbusoThabetheNo ratings yet

- Sampling Methods Applied To Fisheries ScienceDocument100 pagesSampling Methods Applied To Fisheries ScienceAna Paula ReisNo ratings yet

- B. Tech. in Metallurgical & Materials Engineering VNITDocument29 pagesB. Tech. in Metallurgical & Materials Engineering VNITShameekaNo ratings yet

- MA1014 Probability and Queueing Theory PDFDocument4 pagesMA1014 Probability and Queueing Theory PDFDivanshuSriNo ratings yet

- Introductory Probability Theory (Nicholas N.N. Nsowah-Nuamah)Document299 pagesIntroductory Probability Theory (Nicholas N.N. Nsowah-Nuamah)ntn.nhutNo ratings yet

- Probability DistributionsDocument85 pagesProbability DistributionsEduardo JfoxmixNo ratings yet

- For MulesDocument191 pagesFor MulessoroNo ratings yet

- 4.2! Probability Distribution Function (PDF) For A Discrete Random VariableDocument5 pages4.2! Probability Distribution Function (PDF) For A Discrete Random VariableDahanyakage WickramathungaNo ratings yet

- Quiz 3 STT041.1Document3 pagesQuiz 3 STT041.1Denise Jane RoqueNo ratings yet

- Probability PlotDocument17 pagesProbability PlotCharmianNo ratings yet

- Local Media100839098740108681Document4 pagesLocal Media100839098740108681Majoy AcebesNo ratings yet

- Binomial Distribution - Definition, Criteria, and ExampleDocument6 pagesBinomial Distribution - Definition, Criteria, and ExampleKidu YabeNo ratings yet

- TSA PPT Lesson 03Document15 pagesTSA PPT Lesson 03souravNo ratings yet

- Vs30 From Shallow ModelsDocument7 pagesVs30 From Shallow ModelsNarayan RoyNo ratings yet

- Stat RatDocument3 pagesStat RatMalvin Roix OrenseNo ratings yet

- PDF Statistics For Business Economics With Xlstat Education Edition Printed Access Card David R Anderson Ebook Full ChapterDocument53 pagesPDF Statistics For Business Economics With Xlstat Education Edition Printed Access Card David R Anderson Ebook Full Chaptercecil.sholders619100% (1)

- Data Analysis For Physics Laboratory: Standard ErrorsDocument5 pagesData Analysis For Physics Laboratory: Standard Errorsccouy123No ratings yet

- MBA First Year (1 Semester) Paper - 105: Quantitative Techniques ObjectiveDocument2 pagesMBA First Year (1 Semester) Paper - 105: Quantitative Techniques ObjectiveRevanth NarigiriNo ratings yet

- 3rd SemDocument25 pages3rd Semsaish mirajkarNo ratings yet

- Probability Statistics and Numerical MethodsDocument4 pagesProbability Statistics and Numerical MethodsAnnwin Moolamkuzhi shibuNo ratings yet